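CentOS 7.9 + Kubernetes 1.29.2 + Docker 25.0.3: Binary Deployment of a High-Availability Cluster

- A Kubernetes high-availability cluster (Kubernetes 1.29.2 + Docker 25.0.3) deployed from binaries.

- The flannel v0.24.2 network is also deployed from binaries. It uses etcd v3, unlike earlier setups that used etcd v2; see the official documentation for details.

- As of the early hours of February 15, 2024 (Beijing time), Kubernetes had reached version 1.29.2. Since v1.24, Docker can no longer serve directly as the Kubernetes container runtime. Because of Docker's large ecosystem and broad user base, we additionally install cri-dockerd so that Docker satisfies the Container Runtime Interface (CRI).

- Version relationships

## kubeadm is included in the archive extracted from kubernetes-server-linux-amd64.tar.gz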

]# /opt/bin/kubernetes/kubeadm config images list

registry.k8s.io/kube-apiserver:v1.29.2

registry.k8s.io/kube-controller-manager:v1.29.2

registry.k8s.io/kube-scheduler:v1.29.2

registry.k8s.io/kube-proxy:v1.29.2

registry.k8s.io/coredns/coredns:v1.11.1

registry.k8s.io/pause:3.9

registry.k8s.io/etcd:3.5.10-0

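I. Overall Planning

1.1 Obtaining the software

- All software is open source; the files are obtained from official links or official mirror links.

- All software uses the latest stable version (excluding the host operating system).

- For the host operating system kernel version, follow the relevant upgrade guidance.

1.1.1 CentOS 7.9

https://mirrors.tuna.tsinghua.edu.cn/centos/7.9.2009/isos/x86_64/

Either the 2009 or the 2207 image will do.

Download: https://mirrors.tuna.tsinghua.edu.cn/centos/7.9.2009/isos/x86_64/CentOS-7-x86_64-Minimal-2009.iso

Download: https://mirrors.tuna.tsinghua.edu.cn/centos/7.9.2009/isos/x86_64/CentOS-7-x86_64-Minimal-2207-02.iso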

1.1.2 Kubernetes v1.29.2

https://github.com/kubernetes/kubernetes/releases

Download: https://dl.k8s.io/v1.29.2/kubernetes-server-linux-amd64.tar.gz

1.1.3 Docker 25.0.3

https://download.docker.com/linux/static/stable/x86_64/

Download: https://download.docker.com/linux/static/stable/x86_64/docker-25.0.3.tgz

1.1.4 cri-dockerd 0.3.9

https://github.com/Mirantis/cri-dockerd

Download: https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.9/cri-dockerd-0.3.9.amd64.tgz

1.1.5 etcd v3.5.12

https://github.com/etcd-io/etcd/

Download: https://github.com/etcd-io/etcd/releases/download/v3.5.12/etcd-v3.5.12-linux-amd64.tar.gz

1.1.6 cfssl v1.6.4

https://github.com/cloudflare/cfssl/releases

Download: https://github.com/cloudflare/cfssl/releases/download/v1.6.4/cfssljson_1.6.4_linux_amd64

Download: https://github.com/cloudflare/cfssl/releases/download/v1.6.4/cfssl_1.6.4_linux_amd64

Download: https://github.com/cloudflare/cfssl/releases/download/v1.6.4/cfssl-certinfo_1.6.4_linux_amd64

1.1.7 flannel v0.24.2

https://github.com/flannel-io/flannel

Download: https://github.com/flannel-io/flannel/releases/download/v0.24.2/flannel-v0.24.2-linux-amd64.tar.gz

1.1.8 cni-plugins v1.4.0, cni-plugin v1.4.0

Download: https://github.com/containernetworking/plugins/releases/download/v1.4.0/cni-plugins-linux-amd64-v1.4.0.tgz

Download: https://github.com/flannel-io/cni-plugin/releases/download/v1.4.0-flannel1/cni-plugin-flannel-linux-amd64-v1.4.0-flannel1.tgz

1.1.9 nginx 1.24.0

http://nginx.org/en/download.html

Download: http://nginx.org/download/nginx-1.24.0.tar.gz

1.2 Cluster host planning

1.2.1 Virtual machine installation and host settings

- Hostnames and local resolution via /etc/hosts

| Hostname | Host IP | Docker IP | Role |
|---|---|---|---|
| k8s1.29.1-1 | 192.168.26.31 | 10.26.31.1/24 | master & worker, etcd, docker, flannel |
| k8s1.29.1-2 | 192.168.26.32 | 10.26.32.1/24 | master & worker, etcd, docker, flannel |
| k8s1.29.1-3 | 192.168.26.33 | 10.26.33.1/24 | master & worker, etcd, docker, flannel |

[root@vm51 ~]# hostname ## to change it: # hostnamectl set-hostname k8s-31

k8s-31

src]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.26.31 k8s1.29.1-1 vm31 etcd_node1 k8s-31

192.168.26.32 k8s1.29.1-2 vm32 etcd_node2 k8s-32

192.168.26.33 k8s1.29.1-3 vm33 etcd_node3 k8s-33
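The same host entries are needed on every node, so it can help to generate them instead of typing them three times. The following is a minimal sketch, not part of the original procedure: `render_hosts` is a hypothetical helper, and it only emits the short `k8s-<octet>` names, assuming the naming scheme used in this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one /etc/hosts line per node.
# $1 is the first three octets of the node network; the remaining
# arguments are the last octets, which also form the host names (k8s-31, ...).
render_hosts() {
  local prefix="$1"; shift
  local octet
  for octet in "$@"; do
    printf '%s.%s k8s-%s\n' "$prefix" "$octet" "$octet"
  done
}

# Print the entries for the three nodes of this guide.
render_hosts 192.168.26 31 32 33
```

After reviewing the output, it could be appended on each node with `render_hosts 192.168.26 31 32 33 >> /etc/hosts`.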

- Disable the firewall

~]# systemctl disable --now firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

~]# firewall-cmd --state

not running

- Disable SELinux

~]# sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

~]# reboot

~]# sestatus

SELinux status: disabled

Changing the SELinux configuration requires a reboot of the operating system.

- Disable swap

~]# sed -ri 's/.*swap.*/#&/' /etc/fstab

~]# swapoff -a && sysctl -w vm.swappiness=0

~]# cat /etc/fstab

#UUID=2ee3ecc4-e9e9-47c5-9afe-7b7b46fd6bab swap swap defaults 0 0

~]# free -m

total used free shared buff/cache available

Mem: 3770 171 3460 19 138 3402

Swap: 0 0 0

- Configure ulimit

~]# ulimit -SHn 65535

~]# cat << EOF >> /etc/security/limits.conf

* soft nofile 655360

* hard nofile 655360

* soft nproc 655350

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited

EOF

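1.2.2 Installing the ipvs management tools and loading modules

To obtain the RPM packages for offline installation, see: Appendix: Installing the ipvs tools

~]# yum install ipvsadm ipset sysstat conntrack libseccomp -y

...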

~]# cat >> /etc/modules-load.d/ipvs.conf <<EOF

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

EOF

~]# systemctl restart systemd-modules-load.service

~]# lsmod | grep -e ip_vs -e nf_conntrack

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 4

ip_vs 145458 10 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack_ipv6 18935 3

nf_defrag_ipv6 35104 1 nf_conntrack_ipv6

nf_conntrack_netlink 40492 0

nf_conntrack_ipv4 19149 5

nf_defrag_ipv4 12729 1 nf_conntrack_ipv4

nf_conntrack 143360 10 ip_vs,nf_nat,nf_nat_ipv4,nf_nat_ipv6,xt_conntrack,nf_nat_masquerade_ipv4,nf_nat_masquerade_ipv6,nf_conntrack_netlink,nf_conntrack_ipv4,nf_conntrack_ipv6

libcrc32c 12644 4 xfs,ip_vs,nf_nat,nf_conntrack

1.2.3 Loading the kernel modules required by containerd

Load the modules temporarily:

~]# modprobe overlay

~]# modprobe br_netfilter

Load the modules permanently:

~]# cat > /etc/modules-load.d/containerd.conf << EOF

overlay

br_netfilter

EOF

Load them at boot:

~]# systemctl restart systemd-modules-load.service ## or: systemctl enable --now systemd-modules-load.service

1.2.4 Enabling kernel IP forwarding and bridge filtering

~]# cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 131072

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

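~]# sysctl --system

After configuring the kernel on all nodes, reboot the servers and confirm that the modules are still loaded afterwards:

~]# reboot -h now

After the reboot, check that the ipvs modules are loaded:

~]# lsmod | grep --color=auto -e ip_vs -e nf_conntrack

After the reboot, check that the containerd-related modules are loaded:

~]# lsmod | grep -E 'br_netfilter|overlay'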
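A sysctl file assembled from several sources can easily end up assigning the same key twice, in which case the last assignment silently wins. The sketch below is an optional check, not part of the original procedure; `find_duplicate_sysctl_keys` is a hypothetical helper name, and the demo runs against a throwaway file rather than /etc/sysctl.d/k8s.conf.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every key that is assigned more than once
# in a sysctl-style "key = value" file. Comment and blank lines are skipped.
find_duplicate_sysctl_keys() {
  awk -F= '
    /^[ \t]*[#;]/ { next }
    /^[ \t]*$/    { next }
    {
      key = $1
      gsub(/[ \t]/, "", key)   # strip spaces around the key
      count[key]++
    }
    END { for (k in count) if (count[k] > 1) print k }
  ' "$1" | sort
}

# Demo on a temporary file with one repeated key.
demo_conf="$(mktemp)"
cat > "$demo_conf" <<'EOF'
net.ipv4.ip_forward = 1
net.ipv4.tcp_max_syn_backlog = 16384
net.core.somaxconn = 16384
net.ipv4.tcp_max_syn_backlog = 16384
EOF
find_duplicate_sysctl_keys "$demo_conf"
rm -f "$demo_conf"
```

On a real node the function would be called as `find_duplicate_sysctl_keys /etc/sysctl.d/k8s.conf`; empty output means no key is repeated.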

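1.2.5 Host time synchronization

Omitted.

1.2.6 Creating the software deployment directories

- /opt/app holds the deployment resource files

- /opt/cfg holds the configuration files

- /opt/cert holds the certificates

- /opt/bin holds links to the source files under /opt/app with the version number removed from the file name, so that an upgrade only requires changing the link.

]# mkdir -p /opt/{app,cfg,cert,bin}

- Upload the downloaded software and resources to /opt/app on host k8s-31 (192.168.26.31)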

1.3 Cluster network planning

| Network | CIDR | Notes |
|---|---|---|
| Node network | 192.168.26.0/24 | Node IP: the IP address of a node, i.e. the NIC address of the physical machine (host). |
| Service network | 10.168.0.0/16 | Cluster IP, also called Service IP: the IP address of a Service. The service-cluster-ip-range parameter defines the Service IP range. |
| Pod network | 10.26.0.0/16 | Pod IP: the IP address of a Pod, assigned from the container bridge (docker0). The cluster-cidr parameter defines the Pod network CIDR. |

Configuration:

apiserver:

--service-cluster-ip-range 10.168.0.0/16 ## Service network 10.168.0.0/16

controller:

--cluster-cidr 10.26.0.0/16 ## Pod network 10.26.0.0/16

--service-cluster-ip-range 10.168.0.0/16 ## Service network 10.168.0.0/16

kubelet:

--cluster-dns 10.168.0.2 ## resolves Services, 10.168.0.2

proxy:

--cluster-cidr 10.26.0.0/16 ## Pod network 10.26.0.0/16
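Two addresses inside the Service network matter later: the first usable address (10.168.0.1), which the API server takes for the built-in kubernetes Service and which therefore appears in the apiserver certificate, and 10.168.0.2, which this guide picks for cluster DNS. The sketch below shows how such addresses follow from the CIDR; `nth_ip_in_cidr` is a hypothetical helper, and it assumes the part before the slash is already the network address.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the n-th address counted from the network
# address of an IPv4 CIDR. The prefix length is not validated, and the
# address before the "/" is assumed to be the network address itself.
nth_ip_in_cidr() {
  local cidr="$1" n="$2"
  local base="${cidr%/*}"
  local a b c d num
  IFS=. read -r a b c d <<< "$base"
  num=$(( (a << 24) + (b << 16) + (c << 8) + d + n ))
  printf '%d.%d.%d.%d\n' \
    $(( (num >> 24) & 255 )) $(( (num >> 16) & 255 )) \
    $(( (num >> 8) & 255 ))  $(( num & 255 ))
}

nth_ip_in_cidr 10.168.0.0/16 1   # address of the built-in kubernetes Service
nth_ip_in_cidr 10.168.0.0/16 2   # address chosen for --cluster-dns in this guide
```

If the Service CIDR is ever changed, both derived addresses have to change with it, including the entry in the apiserver certificate's hostname list.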

1.4 资源清单与镜像

| 类型 | 名称 | 下载链接 | 说明 |

|---|---|---|---|

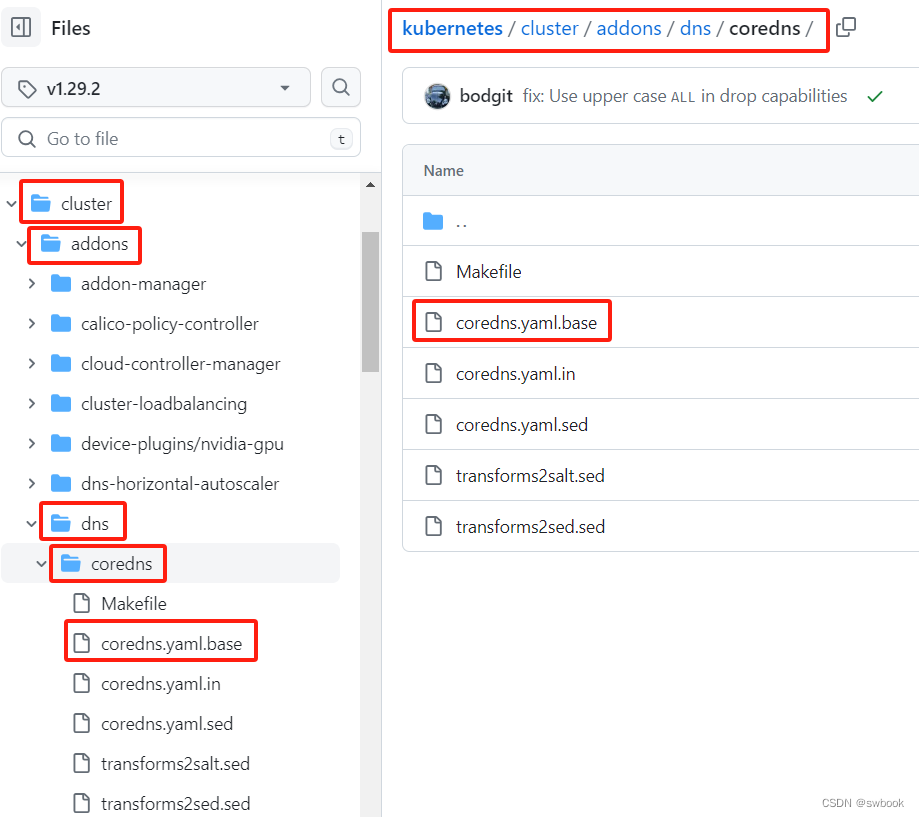

| yaml资源 | coredns.yaml.base | https://github.com/kubernetes/kubernetes/blob/v1.29.2/cluster/addons/dns/coredns/coredns.yaml.base | kubectl部署 |

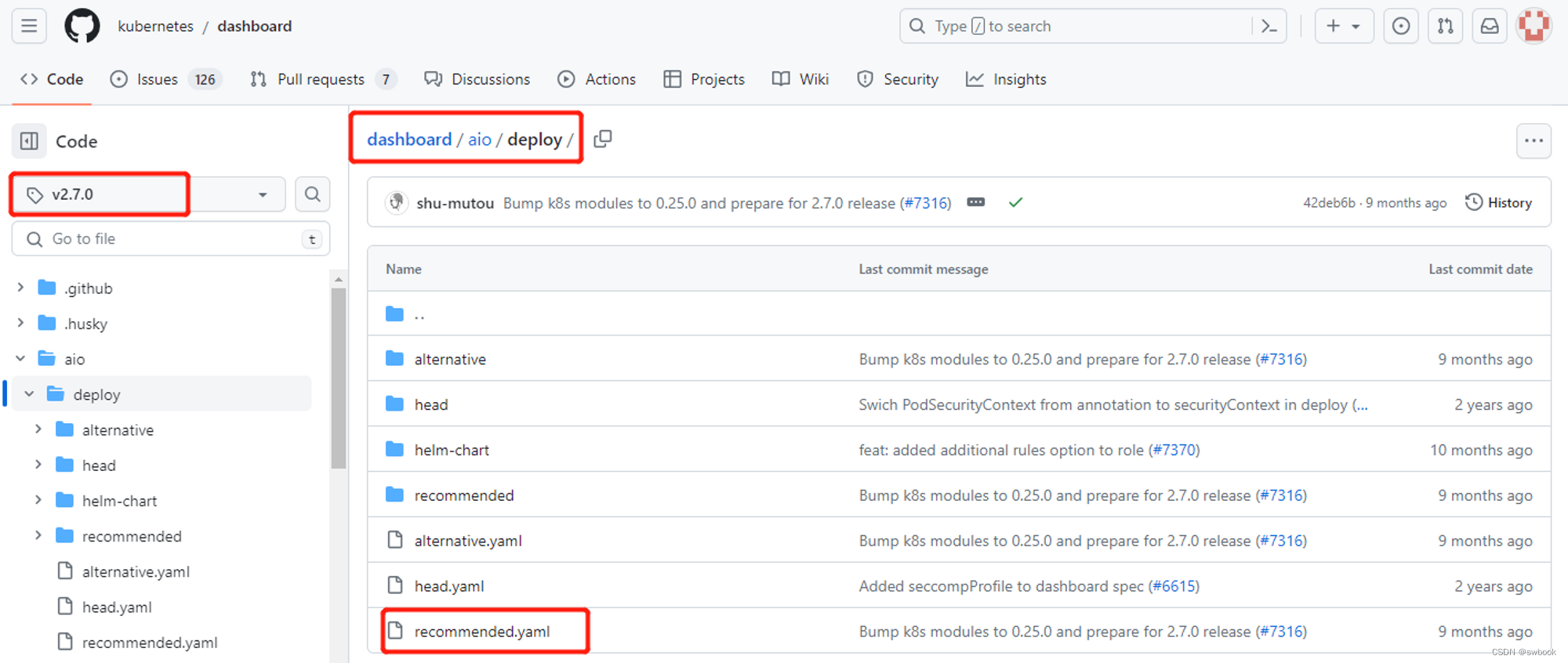

| yaml资源 | recommended.yaml | https://github.com/kubernetes/dashboard/blob/v2.7.0/aio/deploy/recommended.yaml | kubectl部署 |

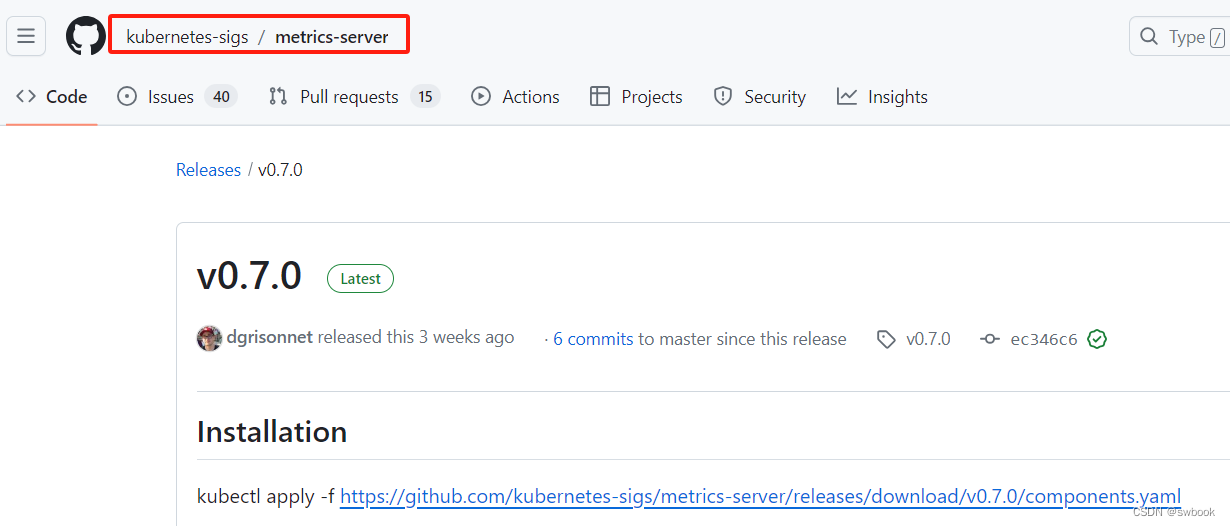

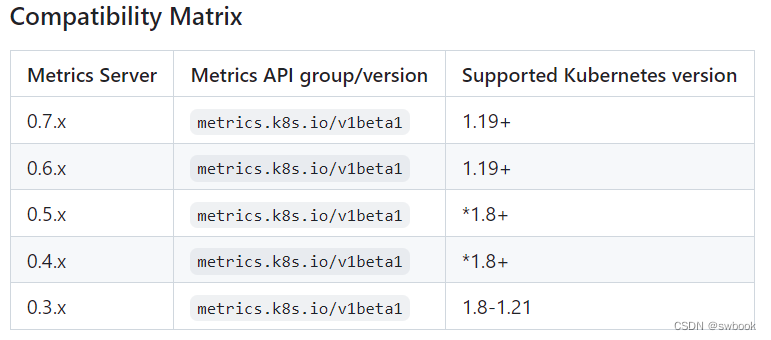

| yaml资源 | components.yaml(metrics server) | https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.7.0/components.yaml | kubectl部署 |

| 镜像 | metrics-server:v0.7.0 | registry.aliyuncs.com/google_containers/metrics-server:v0.7.0 | 部署pod… |

| 镜像 | coredns:v1.11.1 | registry.aliyuncs.com/google_containers/coredns: v1.11.1 | 部署pod… |

| 镜像 | dashboard:v2.7.0 | registry.aliyuncs.com/google_containers/dashboard:v2.7.0 | 部署pod… |

| 镜像 | metrics-scraper:v1.0.8 | registry.aliyuncs.com/google_containers/metrics-scraper:v1.0.8 | 部署pod… |

| 镜像 | pause:3.9 | registry.aliyuncs.com/google_containers/pause:3.9 | 部署pod… |

1.5 Deploying the certificate generation tools

app]# mv cfssl_1.6.4_linux_amd64 /usr/local/bin/cfssl

app]# mv cfssl-certinfo_1.6.4_linux_amd64 /usr/local/bin/cfssl-certinfo

app]# mv cfssljson_1.6.4_linux_amd64 /usr/local/bin/cfssljson

app]# chmod +x /usr/local/bin/cfssl*

app]# cfssl version

Version: 1.6.4

Runtime: go1.18

II. Installing Docker

2.1 Extract, create symlinks, distribute

## k8s-31:

~]# cd /opt/app/

app]# tar zxvf docker-25.0.3.tgz

app]# ls docker

containerd containerd-shim-runc-v2 ctr docker dockerd docker-init docker-proxy runc

app]# mv docker /opt/bin/docker-25.0.3

app]# ls /opt/bin/docker-25.0.3

containerd containerd-shim-runc-v2 ctr docker dockerd docker-init docker-proxy runc

app]# ln -s /opt/bin/docker-25.0.3/containerd /usr/bin/containerd

app]# ln -s /opt/bin/docker-25.0.3/containerd-shim-runc-v2 /usr/bin/containerd-shim-runc-v2

app]# ln -s /opt/bin/docker-25.0.3/ctr /usr/bin/ctr

app]# ln -s /opt/bin/docker-25.0.3/docker /usr/bin/docker

app]# ln -s /opt/bin/docker-25.0.3/dockerd /usr/bin/dockerd

app]# ln -s /opt/bin/docker-25.0.3/docker-init /usr/bin/docker-init

app]# ln -s /opt/bin/docker-25.0.3/docker-proxy /usr/bin/docker-proxy

app]# ln -s /opt/bin/docker-25.0.3/runc /usr/bin/runc

app]# docker -v

Docker version 25.0.3, build 4debf41

## Copy to k8s-32 and k8s-33

app]# scp -r /opt/bin/docker-25.0.3/ root@k8s-32:/opt/bin/.

app]# scp -r /opt/bin/docker-25.0.3/ root@k8s-33:/opt/bin/.

## On k8s-32 and k8s-33, create the same symlinks as above
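Creating eight symlinks by hand on each remaining node is repetitive, so a loop is a natural alternative. This is a sketch rather than the author's method: `link_binaries` is a hypothetical helper, and `ln -sfn` replaces any existing entry of the same name in the target directory, so the target should be checked first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: symlink every executable regular file found in
# $1 into directory $2, keeping the file name.
# Note: -f overwrites an existing file or link of the same name in $2.
link_binaries() {
  local src_dir="$1" dest_dir="$2" f
  for f in "$src_dir"/*; do
    [ -f "$f" ] && [ -x "$f" ] || continue
    ln -sfn "$f" "$dest_dir/$(basename "$f")"
  done
}

# Example (as root on k8s-32 and k8s-33, after the scp step):
#   link_binaries /opt/bin/docker-25.0.3 /usr/bin
```

Because the links point at the versioned directory, a later upgrade only needs the new directory copied over and the same function run again with the new path.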

2.2 Creating directories and configuration files

- On all three hosts:

## k8s-31:

]# mkdir -p /data/docker /etc/docker

]# cat /etc/docker/daemon.json

{"data-root": "/data/docker","storage-driver": "overlay2","insecure-registries": ["harbor.oss.com:32402"],"registry-mirrors": ["https://5gce61mx.mirror.aliyuncs.com"],"bip": "10.26.31.1/24","exec-opts": ["native.cgroupdriver=systemd"],"live-restore": true}

## k8s-32:

]# cat /etc/docker/daemon.json

{"data-root": "/data/docker","storage-driver": "overlay2","insecure-registries": ["harbor.oss.com:32402"],"registry-mirrors": ["https://5gce61mx.mirror.aliyuncs.com"],"bip": "10.26.32.1/24","exec-opts": ["native.cgroupdriver=systemd"],"live-restore": true}

## k8s-33:

]# cat /etc/docker/daemon.json

{"data-root": "/data/docker","storage-driver": "overlay2","insecure-registries": ["harbor.oss.com:32402"],"registry-mirrors": ["https://5gce61mx.mirror.aliyuncs.com"],"bip": "10.26.33.1/24","exec-opts": ["native.cgroupdriver=systemd"],"live-restore": true}
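The three daemon.json files differ only in the `bip` value, so they can be produced from one template. The following is a minimal sketch under that assumption; `render_daemon_json` is a hypothetical helper, and the demo writes into a temporary directory instead of /etc/docker.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a daemon.json whose only per-node value is
# the docker0 bridge address (bip). All other values are copied from
# the files shown in this guide.
render_daemon_json() {
  local bip="$1"
  cat <<EOF
{
  "data-root": "/data/docker",
  "storage-driver": "overlay2",
  "insecure-registries": ["harbor.oss.com:32402"],
  "registry-mirrors": ["https://5gce61mx.mirror.aliyuncs.com"],
  "bip": "${bip}",
  "exec-opts": ["native.cgroupdriver=systemd"],
  "live-restore": true
}
EOF
}

# Demo: render one file per node into a scratch directory.
out_dir="$(mktemp -d)"
for n in 31 32 33; do
  render_daemon_json "10.26.${n}.1/24" > "${out_dir}/daemon-k8s-${n}.json"
done
ls "$out_dir"
```

Each rendered file would then be copied to /etc/docker/daemon.json on the matching host.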

2.3 Creating the systemd unit file

]# cat /usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

2.4 Start and verify

]# systemctl daemon-reload

]# systemctl start docker; systemctl enable docker

app]# docker info

Client:

 Version: 25.0.3

 Context: default

 Debug Mode: false

Server:

 Containers: 0

  Running: 0

  Paused: 0

  Stopped: 0

 Images: 0

 Server Version: 25.0.3

 Storage Driver: overlay2

  Backing Filesystem: xfs

  Supports d_type: true

  Using metacopy: false

  Native Overlay Diff: true

  userxattr: false

 Logging Driver: json-file

 Cgroup Driver: systemd

 Cgroup Version: 1

 Plugins:

  Volume: local

  Network: bridge host ipvlan macvlan null overlay

  Log: awslogs fluentd gcplogs gelf journald json-file local splunk syslog

 Swarm: inactive

 Runtimes: io.containerd.runc.v2 runc

 Default Runtime: runc

 Init Binary: docker-init

 containerd version: 7c3aca7a610df76212171d200ca3811ff6096eb8

 runc version: v1.1.12-0-g51d5e94

 init version: de40ad0

 Security Options:

  seccomp

   Profile: builtin

 Kernel Version: 3.10.0-1160.90.1.el7.x86_64

 Operating System: CentOS Linux 7 (Core)

 OSType: linux

 Architecture: x86_64

 CPUs: 2

 Total Memory: 3.682GiB

 Name: k8s-31

 ID: 82c85b74-eaa7-4bc5-8c10-7ae63867d428

 Docker Root Dir: /data/docker

 Debug Mode: false

 Experimental: false

 Insecure Registries:

  harbor.oss.com:32402

  127.0.0.0/8

 Registry Mirrors:

  https://5gce61mx.mirror.aliyuncs.com/

 Live Restore Enabled: true

 Product License: Community Engine

app]# docker version

Client:

 Version: 25.0.3

 API version: 1.44

 Go version: go1.21.6

 Git commit: 4debf41

 Built: Tue Feb 6 21:13:00 2024

 OS/Arch: linux/amd64

 Context: default

Server: Docker Engine - Community

 Engine:

  Version: 25.0.3

  API version: 1.44 (minimum version 1.24)

  Go version: go1.21.6

  Git commit: f417435

  Built: Tue Feb 6 21:13:08 2024

  OS/Arch: linux/amd64

  Experimental: false

 containerd:

  Version: v1.7.13

  GitCommit: 7c3aca7a610df76212171d200ca3811ff6096eb8

 runc:

  Version: 1.1.12

  GitCommit: v1.1.12-0-g51d5e94

 docker-init:

  Version: 0.19.0

  GitCommit: de40ad0

app]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

 link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

 inet 127.0.0.1/8 scope host lo

 valid_lft forever preferred_lft forever

 inet6 ::1/128 scope host

 valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

 link/ether 00:0c:29:2b:81:d1 brd ff:ff:ff:ff:ff:ff

 inet 192.168.26.31/24 brd 192.168.26.255 scope global noprefixroute ens32

 valid_lft forever preferred_lft forever

 inet6 fe80::20c:29ff:fe2b:81d1/64 scope link

 valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

 link/ether 02:42:7b:83:16:e7 brd ff:ff:ff:ff:ff:ff

 inet 10.26.31.1/24 brd 10.26.31.255 scope global docker0

 valid_lft forever preferred_lft forever

2.5 Pulling an image

On k8s-33:

k8s-33 ~]# docker pull centos

...

k8s-33 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

centos latest 5d0da3dc9764 2 years ago 231MB

k8s-33 ~]# docker run -i -t --name test centos /bin/bash

[root@11854f9856c7 /]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

 link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

 inet 127.0.0.1/8 scope host lo

 valid_lft forever preferred_lft forever

4: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

 link/ether 02:42:0a:1a:21:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

 inet 10.26.33.2/24 brd 10.26.33.255 scope global eth0

 valid_lft forever preferred_lft forever

k8s-33 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

11854f9856c7 centos "/bin/bash" About a minute ago Exited (127) 2 seconds ago test

k8s-33 ~]# docker rm test

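III. Installing cri-dockerd

Why install the cri-dockerd plugin?

- When Kubernetes was first open-sourced it had no container engine of its own, and Docker was by far the most popular container technology. Code for talking to Docker, the dockershim, was therefore built into kubelet, so versions before 1.24 use Docker by default and do not need cri-dockerd.

- Kubernetes 1.24 removed the dockershim code, and Docker Engine does not support the CRI standard by default, so the two can no longer be integrated directly. To bridge the gap, Mirantis and Docker jointly created cri-dockerd, which gives Docker Engine a CRI-compliant interface and lets Docker keep serving as the Kubernetes container engine.

- The dockershim was maintained by the Kubernetes team rather than by Docker. Its removal means that developers must use a CRI-compliant container runtime such as containerd or CRI-O. This does not mean that newer Kubernetes versions abandon Docker altogether (given Docker's large ecosystem and broad user base, that would not be easy): on a host that already has Docker installed, adding cri-dockerd satisfies the CRI requirement. In a sense, cri-dockerd is a reincarnation of dockershim.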

3.1 Extract, create symlinks, distribute

k8s-31 app]# tar -zxvf cri-dockerd-0.3.9.amd64.tgz

k8s-31 app]# mv cri-dockerd /opt/bin/cri-dockerd-0.3.9

k8s-31 app]# ll /opt/bin/cri-dockerd-0.3.9

total 46800

-rwxr-xr-x 1 1001 127 47923200 Jan 2 23:31 cri-dockerd

k8s-31 app]# ln -s /opt/bin/cri-dockerd-0.3.9/cri-dockerd /usr/local/bin/cri-dockerd

k8s-31 app]# cri-dockerd --version

cri-dockerd 0.3.9 (c50b98d)

Copy to the other nodes:

k8s-31 app]# scp -r /opt/bin/cri-dockerd-0.3.9 root@k8s-32:/opt/bin/.

k8s-31 app]# scp -r /opt/bin/cri-dockerd-0.3.9 root@k8s-33:/opt/bin/.

Create the symlinks on the other nodes as above.

3.2 Editing the unit file and starting the service

]# cat /usr/lib/systemd/system/cri-dockerd.service

[Unit]

Description=CRI Interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

# Requires=cri-docker.socket ## comment this line out if startup fails

[Service]

Type=notify

ExecStart=/usr/local/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

# Note that StartLimit* options were moved from "Service" to "Unit" in systemd 229.

# Both the old, and new location are accepted by systemd 229 and up, so using the old location

# to make them work for either version of systemd.

StartLimitBurst=3

# Note that StartLimitInterval was renamed to StartLimitIntervalSec in systemd 230.

# Both the old, and new name are accepted by systemd 230 and up, so using the old name to make

# this option work for either version of systemd.

StartLimitInterval=60s

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Comment TasksMax if your systemd version does not support it.

# Only systemd 226 and above support this option.

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

]# systemctl daemon-reload

]# systemctl enable cri-dockerd && systemctl start cri-dockerd

]# systemctl status cri-dockerd

● cri-dockerd.service - CRI Interface for Docker Application Container Engine

 Loaded: loaded (/usr/lib/systemd/system/cri-dockerd.service; disabled; vendor preset: disabled)

 Active: active (running) since Wed 2024-02-14 23:04:32 CST; 15s ago

 Docs: https://docs.mirantis.com

 Main PID: 7950 (cri-dockerd)

 Tasks: 8

 Memory: 8.5M

 CGroup: /system.slice/cri-dockerd.service

 └─7950 /usr/local/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9

...

IV. Creating Certificates

Create the certificates in /opt/cert on k8s-31, then distribute them.

4.1 Creating the CA root certificate

- ca-csr.json

cert]# cat > ca-csr.json << EOF

{"CN": "kubernetes","key": {"algo": "rsa","size": 2048},"names": [{"C": "CN","ST": "Beijing","L": "Beijing","O": "Kubernetes","OU": "Kubernetes-manual"}],"ca": {"expiry": "876000h"}

}

EOF

cert]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca

cert]# ls ca*pem

ca-key.pem ca.pem

- ca-config.json

cat > ca-config.json << EOF

{"signing": {"default": {"expiry": "876000h"},"profiles": {"kubernetes": {"usages": ["signing","key encipherment","server auth","client auth"],"expiry": "876000h"}}}

}

EOF

4.2 Creating the etcd certificates

- etcd-ca-csr.json

cat > etcd-ca-csr.json << EOF

{"CN": "etcd","key": {"algo": "rsa","size": 2048},"names": [{"C": "CN","ST": "Beijing","L": "Beijing","O": "etcd","OU": "Etcd Security"}],"ca": {"expiry": "876000h"}

}

EOF

- etcd-csr.json

cat > etcd-csr.json << EOF

{"CN": "etcd","key": {"algo": "rsa","size": 2048},"names": [{"C": "CN","ST": "Beijing","L": "Beijing","O": "etcd","OU": "Etcd Security"}]

}

EOF

cert]# cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare etcd-ca

cert]# ls etcd-ca*pem

etcd-ca-key.pem etcd-ca.pem

cert]# cfssl gencert \

-ca=./etcd-ca.pem \

-ca-key=./etcd-ca-key.pem \

-config=./ca-config.json \

-hostname=127.0.0.1,k8s-31,k8s-32,k8s-33,192.168.26.31,192.168.26.32,192.168.26.33 \

-profile=kubernetes \

etcd-csr.json | cfssljson -bare ./etcd

cert]# ls etcd*pem

etcd-ca-key.pem etcd-ca.pem etcd-key.pem etcd.pem

4.3 Creating the kube-apiserver certificates

- apiserver-csr.json

cat > apiserver-csr.json << EOF

{"CN": "kube-apiserver","key": {"algo": "rsa","size": 2048},"names": [{"C": "CN","ST": "Beijing","L": "Beijing","O": "Kubernetes","OU": "Kubernetes-manual"}]

}

EOF

cert]# cfssl gencert \

-ca=./ca.pem \

-ca-key=./ca-key.pem \

-config=./ca-config.json \

-hostname=127.0.0.1,k8s-31,k8s-32,k8s-33,192.168.26.31,192.168.26.32,192.168.26.33,10.168.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local \

-profile=kubernetes apiserver-csr.json | cfssljson -bare ./apiserver

cert]# ls apiserver*pem

apiserver-key.pem apiserver.pem

- front-proxy-ca-csr.json

cat > front-proxy-ca-csr.json << EOF

{"CN": "kubernetes","key": {"algo": "rsa","size": 2048},"ca": {"expiry": "876000h"}

}

EOF

- front-proxy-client-csr.json

cat > front-proxy-client-csr.json << EOF

{"CN": "front-proxy-client","key": {"algo": "rsa","size": 2048}

}

EOF

## Generate the kube-apiserver aggregation (front-proxy) certificates

cert]# cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare ./front-proxy-ca

cert]# ls front-proxy-ca*pem

front-proxy-ca-key.pem front-proxy-ca.pem

cert]# cfssl gencert \

-ca=./front-proxy-ca.pem \

-ca-key=./front-proxy-ca-key.pem \

-config=./ca-config.json \

-profile=kubernetes front-proxy-client-csr.json | cfssljson -bare ./front-proxy-client

cert]# ls front-proxy-client*pem

front-proxy-client-key.pem front-proxy-client.pem

4.4 Creating the kube-controller-manager certificate

- manager-csr.json, used to generate the controller-manager.kubeconfig configuration file

cat > manager-csr.json << EOF

{"CN": "system:kube-controller-manager","key": {"algo": "rsa","size": 2048},"names": [{"C": "CN","ST": "Beijing","L": "Beijing","O": "system:kube-controller-manager","OU": "Kubernetes-manual"}]

}

EOF

cert]# cfssl gencert \

-ca=./ca.pem \

-ca-key=./ca-key.pem \

-config=./ca-config.json \

-profile=kubernetes \

manager-csr.json | cfssljson -bare ./controller-manager

cert]# ls controller-manager*pem

controller-manager-key.pem controller-manager.pem

- admin-csr.json, used to generate the admin.kubeconfig configuration file

cat > admin-csr.json << EOF

{"CN": "admin","key": {"algo": "rsa","size": 2048},"names": [{"C": "CN","ST": "Beijing","L": "Beijing","O": "system:masters","OU": "Kubernetes-manual"}]

}

EOF

cert]# cfssl gencert \

-ca=./ca.pem \

-ca-key=./ca-key.pem \

-config=./ca-config.json \

-profile=kubernetes \

admin-csr.json | cfssljson -bare ./admin

cert]# ls admin*pem

admin-key.pem admin.pem

4.5 Creating the kube-scheduler certificate

- scheduler-csr.json, used to generate the scheduler.kubeconfig configuration file

cat > scheduler-csr.json << EOF

{"CN": "system:kube-scheduler","key": {"algo": "rsa","size": 2048},"names": [{"C": "CN","ST": "Beijing","L": "Beijing","O": "system:kube-scheduler","OU": "Kubernetes-manual"}]

}

EOF

cert]# cfssl gencert \

-ca=./ca.pem \

-ca-key=./ca-key.pem \

-config=./ca-config.json \

-profile=kubernetes \

scheduler-csr.json | cfssljson -bare ./scheduler

cert]# ls scheduler*pem

scheduler-key.pem scheduler.pem

4.6 Creating the kube-proxy certificate

- kube-proxy-csr.json, used to generate the kube-proxy.kubeconfig configuration file

cat > kube-proxy-csr.json << EOF

{"CN": "system:kube-proxy","key": {"algo": "rsa","size": 2048},"names": [{"C": "CN","ST": "Beijing","L": "Beijing","O": "system:kube-proxy","OU": "Kubernetes-manual"}]

}

EOF

cert]# cfssl gencert \

-ca=./ca.pem \

-ca-key=./ca-key.pem \

-config=./ca-config.json \

-profile=kubernetes \

kube-proxy-csr.json | cfssljson -bare ./kube-proxy

cert]# ls kube-proxy*pem

kube-proxy-key.pem kube-proxy.pem
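The CSR files for the controller manager, admin user, scheduler, and kube-proxy share one shape and differ only in the CN and O fields, which Kubernetes maps to the user name and group. A template function makes that pattern explicit. This is an illustrative sketch only: `render_component_csr` is a hypothetical helper, and the remaining field values are copied from the CSR files above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a cfssl CSR document for one component.
# $1 = CN (becomes the Kubernetes user name), $2 = O (becomes the group).
render_component_csr() {
  local cn="$1" org="$2"
  cat <<EOF
{
  "CN": "${cn}",
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "ST": "Beijing", "L": "Beijing", "O": "${org}", "OU": "Kubernetes-manual" }
  ]
}
EOF
}

# Print the CSR that corresponds to scheduler-csr.json.
render_component_csr system:kube-scheduler system:kube-scheduler

# To sign it with the commands used in this guide (requires cfssl and the CA files):
#   render_component_csr system:kube-scheduler system:kube-scheduler > scheduler-csr.json
#   cfssl gencert -ca=./ca.pem -ca-key=./ca-key.pem -config=./ca-config.json \
#     -profile=kubernetes scheduler-csr.json | cfssljson -bare ./scheduler
```

The admin certificate is the one case where CN and O differ: CN is admin while O is system:masters, the group that carries cluster-admin rights.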

4.7 Creating the ServiceAccount key pair

cert]# openssl genrsa -out /opt/cert/sa.key 2048

cert]# openssl rsa -in /opt/cert/sa.key -pubout -out /opt/cert/sa.pub

cert]# ls /opt/cert/sa*

/opt/cert/sa.key /opt/cert/sa.pub

4.8 Distributing the certificates to the nodes

cert]# scp /opt/cert/* root@k8s-32:/opt/cert/.

cert]# scp /opt/cert/* root@k8s-33:/opt/cert/.

V. Deploying etcd

5.1 Preparing the etcd binaries

## Extract the package; the binaries are placed under /opt/bin

k8s-31 app]# tar -xf etcd-v3.5.12-linux-amd64.tar.gz -C /opt/bin

k8s-31 app]# ls /opt/bin/etcd-v3.5.12-linux-amd64/ -l

total 54908

drwxr-xr-x 3 528287 89939 40 Jan 31 18:36 Documentation

-rwxr-xr-x 1 528287 89939 23543808 Jan 31 18:36 etcd

-rwxr-xr-x 1 528287 89939 17743872 Jan 31 18:36 etcdctl

-rwxr-xr-x 1 528287 89939 14864384 Jan 31 18:36 etcdutl

-rw-r--r-- 1 528287 89939 42066 Jan 31 18:36 README-etcdctl.md

-rw-r--r-- 1 528287 89939 7359 Jan 31 18:36 README-etcdutl.md

-rw-r--r-- 1 528287 89939 9394 Jan 31 18:36 README.md

-rw-r--r-- 1 528287 89939 7896 Jan 31 18:36 READMEv2-etcdctl.md

k8s-31 app]# ln -s /opt/bin/etcd-v3.5.12-linux-amd64/etcdctl /usr/local/bin/etcdctl

k8s-31 app]# ln -s /opt/bin/etcd-v3.5.12-linux-amd64/etcd /opt/bin/etcd

k8s-31 app]# etcdctl version

etcdctl version: 3.5.12

API version: 3.5

## Copy the software to the other nodes

]# scp -r /opt/bin/etcd-v3.5.12-linux-amd64/ root@k8s-32:/opt/bin/

]# scp -r /opt/bin/etcd-v3.5.12-linux-amd64/ root@k8s-33:/opt/bin/

## Create the symlinks on the other nodes

]# ln -s /opt/bin/etcd-v3.5.12-linux-amd64/etcdctl /usr/local/bin/etcdctl

]# ln -s /opt/bin/etcd-v3.5.12-linux-amd64/etcd /opt/bin/etcd

5.2 Startup configuration files

- k8s-31:/opt/cfg/etcd.config.yml

cat > /opt/cfg/etcd.config.yml << EOF

name: 'k8s-31'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.26.31:2380'

listen-client-urls: 'https://192.168.26.31:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.26.31:2380'

advertise-client-urls: 'https://192.168.26.31:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-31=https://192.168.26.31:2380,k8s-32=https://192.168.26.32:2380,k8s-33=https://192.168.26.33:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/opt/cert/etcd.pem'

  key-file: '/opt/cert/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/opt/cert/etcd-ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/opt/cert/etcd.pem'

  key-file: '/opt/cert/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/opt/cert/etcd-ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

EOF

When the etcd startup configuration file does not contain enable-v2: true, etcd defaults to the v3 API, so ETCDCTL_API=3 can be omitted on the command line.

- k8s-32:/opt/cfg/etcd.config.yml

cat > /opt/cfg/etcd.config.yml << EOF

name: 'k8s-32'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.26.32:2380'

listen-client-urls: 'https://192.168.26.32:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.26.32:2380'

advertise-client-urls: 'https://192.168.26.32:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-31=https://192.168.26.31:2380,k8s-32=https://192.168.26.32:2380,k8s-33=https://192.168.26.33:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/opt/cert/etcd.pem'

  key-file: '/opt/cert/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/opt/cert/etcd-ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/opt/cert/etcd.pem'

  key-file: '/opt/cert/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/opt/cert/etcd-ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

EOF

- k8s-33:/opt/cfg/etcd.config.yml

cat > /opt/cfg/etcd.config.yml << EOF

name: 'k8s-33'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.26.33:2380'

listen-client-urls: 'https://192.168.26.33:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.26.33:2380'

advertise-client-urls: 'https://192.168.26.33:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-31=https://192.168.26.31:2380,k8s-32=https://192.168.26.32:2380,k8s-33=https://192.168.26.33:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/opt/cert/etcd.pem'

  key-file: '/opt/cert/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/opt/cert/etcd-ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/opt/cert/etcd.pem'

  key-file: '/opt/cert/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/opt/cert/etcd-ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

EOF
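Comparing the three configuration files shows that only five keys change from node to node: the member name and the four URL settings that carry the node's own IP. The sketch below prints just those keys for a given node, which is a quick way to review them side by side; `render_etcd_node_fields` is a hypothetical helper, and every other key is assumed to be identical across nodes as in the files above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the etcd.config.yml keys that differ per node.
# $1 = member name (must match an entry in initial-cluster), $2 = node IP.
render_etcd_node_fields() {
  local name="$1" ip="$2"
  cat <<EOF
name: '${name}'
listen-peer-urls: 'https://${ip}:2380'
listen-client-urls: 'https://${ip}:2379,http://127.0.0.1:2379'
initial-advertise-peer-urls: 'https://${ip}:2380'
advertise-client-urls: 'https://${ip}:2379'
EOF
}

# Show the node-specific part for each member of this cluster.
for n in 31 32 33; do
  render_etcd_node_fields "k8s-${n}" "192.168.26.${n}"
  echo
done
```

The initial-cluster line is deliberately not templated here: it lists all three members and must be the same on every node.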

5.3 Creating the service, starting, and verifying

On the etcd nodes k8s-31, k8s-32, and k8s-33:

cat > /usr/lib/systemd/system/etcd.service << EOF

[Unit]

Description=Etcd Service

Documentation=https://coreos.com/etcd/docs/latest/

After=network.target

[Service]

Type=notify

ExecStart=/opt/bin/etcd --config-file=/opt/cfg/etcd.config.yml

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Alias=etcd3.service

EOF

Start and verify:

]# systemctl daemon-reload

]# systemctl enable --now etcd

]# systemctl status etcd

● etcd.service - Etcd Service

 Loaded: loaded (/usr/lib/systemd/system/etcd.service; enabled; vendor preset: disabled)

 Active: active (running) since Wed 2024-02-14 23:30:58 CST; 27s ago

 Docs: https://coreos.com/etcd/docs/latest/

 Main PID: 8234 (etcd)

 Tasks: 8

 Memory: 25.1M

 CGroup: /system.slice/etcd.service

 └─8234 /opt/bin/etcd --config-file=/opt/cfg/etcd.config.yml

...

## Default: ETCDCTL_API=3

]# etcdctl --endpoints="192.168.26.31:2379,192.168.26.32:2379,192.168.26.33:2379" \

--cacert=/opt/cert/etcd-ca.pem --cert=/opt/cert/etcd.pem --key=/opt/cert/etcd-key.pem endpoint health --write-out=table

+--------------------+--------+-------------+-------+

| ENDPOINT | HEALTH | TOOK | ERROR |

+--------------------+--------+-------------+-------+

| 192.168.26.31:2379 | true | 16.326281ms | |

| 192.168.26.33:2379 | true | 16.370608ms | |

| 192.168.26.32:2379 | true | 16.186462ms | |

+--------------------+--------+-------------+-------+

]# etcdctl --endpoints="192.168.26.31:2379,192.168.26.32:2379,192.168.26.33:2379" \

--cacert=/opt/cert/etcd-ca.pem --cert=/opt/cert/etcd.pem --key=/opt/cert/etcd-key.pem endpoint status --write-out=table

+--------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+--------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| 192.168.26.31:2379 | a691f6e64894f556 | 3.5.12 | 20 kB | false | false | 2 | 11 | 11 | |

| 192.168.26.32:2379 | 1881c098ef00b752 | 3.5.12 | 25 kB | false | false | 2 | 11 | 11 | |

| 192.168.26.33:2379 | a75235b88a6dbf08 | 3.5.12 | 20 kB | true | false | 2 | 11 | 11 | |

+--------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

]# etcdctl --endpoints="192.168.26.31:2379,192.168.26.32:2379,192.168.26.33:2379" \

--cacert=/opt/cert/etcd-ca.pem --cert=/opt/cert/etcd.pem --key=/opt/cert/etcd-key.pem member list --write-out=table

+------------------+---------+--------+----------------------------+----------------------------+------------+

| ID | STATUS | NAME | PEER ADDRS | CLIENT ADDRS | IS LEARNER |

+------------------+---------+--------+----------------------------+----------------------------+------------+

| 1881c098ef00b752 | started | k8s-32 | https://192.168.26.32:2380 | https://192.168.26.32:2379 | false |

| a691f6e64894f556 | started | k8s-31 | https://192.168.26.31:2380 | https://192.168.26.31:2379 | false |

| a75235b88a6dbf08 | started | k8s-33 | https://192.168.26.33:2380 | https://192.168.26.33:2379 | false |

+------------------+---------+--------+----------------------------+----------------------------+------------+

6. Deploy flannel

Official references:

- https://github.com/flannel-io/flannel/blob/v0.22.3/Documentation/configuration.md

- https://github.com/flannel-io/flannel/blob/v0.22.3/Documentation/running.md

6.1 Confirm etcd is healthy

See the etcd section.

6.2 Check the current routes

k8s-31:

]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.26.2 0.0.0.0 UG 100 0 0 ens32

10.26.31.0 0.0.0.0 255.255.255.0 U 0 0 0 docker0

192.168.26.0 0.0.0.0 255.255.255.0 U 100 0 0 ens32

k8s-32:

]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.26.2 0.0.0.0 UG 100 0 0 ens32

10.26.32.0 0.0.0.0 255.255.255.0 U 0 0 0 docker0

192.168.26.0 0.0.0.0 255.255.255.0 U 100 0 0 ens32

k8s-33:

]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.26.2 0.0.0.0 UG 100 0 0 ens32

10.26.33.0 0.0.0.0 255.255.255.0 U 0 0 0 docker0

192.168.26.0 0.0.0.0 255.255.255.0 U 100 0 0 ens32

Remove any other leftover routes, for example: route del -net 10.26.57.0 netmask 255.255.255.0 gw 192.168.26.53

6.3 Add the flannel network configuration to etcd

- Run this on any one etcd node

~]# etcdctl --endpoints="192.168.26.31:2379,192.168.26.32:2379,192.168.26.33:2379" \

--cacert=/opt/cert/etcd-ca.pem --cert=/opt/cert/etcd.pem --key=/opt/cert/etcd-key.pem \

put /coreos.com/network/config '{"Network": "10.26.0.0/16", "Backend": {"Type": "host-gw"}}'

- Verify the stored value

~]# etcdctl --endpoints="192.168.26.31:2379,192.168.26.32:2379,192.168.26.33:2379" \

--cacert=/opt/cert/etcd-ca.pem --cert=/opt/cert/etcd.pem --key=/opt/cert/etcd-key.pem get /coreos.com/network/config

/coreos.com/network/config

{"Network": "10.26.0.0/16", "Backend": {"Type": "host-gw"}}
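The value stored under /coreos.com/network/config is plain JSON, so a typo in it tends to surface only when flanneld fails to start. A minimal sketch, assuming a hypothetical helper named `flannel_net_config` (not part of flannel or etcd), that builds the value from the network CIDR and backend type so each is stated once:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of flannel): print the JSON value that
# section 6.3 stores under /coreos.com/network/config.
flannel_net_config() {
  local network="$1" backend="$2"
  printf '{"Network": "%s", "Backend": {"Type": "%s"}}\n' "$network" "$backend"
}

# Usage (needs a reachable etcd cluster, so it is left commented out):
# etcdctl --endpoints="192.168.26.31:2379,192.168.26.32:2379,192.168.26.33:2379" \
#   --cacert=/opt/cert/etcd-ca.pem --cert=/opt/cert/etcd.pem --key=/opt/cert/etcd-key.pem \
#   put /coreos.com/network/config "$(flannel_net_config 10.26.0.0/16 host-gw)"
```

Called with `10.26.0.0/16` and `host-gw`, the helper reproduces the value shown above.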

6.4 Unpack flannel and create a symlink

k8s-31:

]# cd /opt/app

k8s-31 app]# mkdir /opt/bin/flannel-v0.24.2-linux-amd64

k8s-31 app]# tar zxvf flannel-v0.24.2-linux-amd64.tar.gz -C /opt/bin/flannel-v0.24.2-linux-amd64

flanneld

mk-docker-opts.sh

README.md

k8s-31 app]# scp -r /opt/bin/flannel-v0.24.2-linux-amd64 root@k8s-32:/opt/bin/.

k8s-31 app]# scp -r /opt/bin/flannel-v0.24.2-linux-amd64 root@k8s-33:/opt/bin/.

## Create the symlink

]# ln -s /opt/bin/flannel-v0.24.2-linux-amd64/flanneld /opt/bin/flanneld

6.5 Add the configuration file

Set --public-ip to each node's own address: k8s-31: --public-ip=192.168.26.31; k8s-32: --public-ip=192.168.26.32; k8s-33: --public-ip=192.168.26.33

cat > /opt/cfg/kube-flanneld.conf << EOF

KUBE_FLANNELD_OPTS="--public-ip=192.168.26.31 \\

--etcd-endpoints=https://192.168.26.31:2379,https://192.168.26.32:2379,https://192.168.26.33:2379 \\

--etcd-keyfile=/opt/cert/etcd-key.pem \\

--etcd-certfile=/opt/cert/etcd.pem \\

--etcd-cafile=/opt/cert/etcd-ca.pem \\

--kube-subnet-mgr=false \\

--iface=ens32 \\

--iptables-resync=5 \\

--subnet-file=/run/flannel/subnet.env \\

--healthz-port=2401"

EOF

]# mkdir /run/flannel

]# vi /run/flannel/subnet.env

k8s-31:

FLANNEL_NETWORK=10.26.0.0/16

FLANNEL_SUBNET=10.26.31.1/24

FLANNEL_MTU=1450

FLANNEL_IPMASQ=false

k8s-32:

FLANNEL_NETWORK=10.26.0.0/16

FLANNEL_SUBNET=10.26.32.1/24

FLANNEL_MTU=1450

FLANNEL_IPMASQ=false

k8s-33:

FLANNEL_NETWORK=10.26.0.0/16

FLANNEL_SUBNET=10.26.33.1/24

FLANNEL_MTU=1450

FLANNEL_IPMASQ=false
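The three subnet.env files differ only in the third octet of FLANNEL_SUBNET, which in this guide matches the last octet of the node's host IP. A small sketch, assuming that convention and a hypothetical helper named `render_subnet_env`, that generates the file content instead of editing it by hand in vi:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print subnet.env for the node whose host IP is
# 192.168.26.<octet>. Assumes this guide's convention that node N owns
# the pod subnet 10.26.N.0/24.
render_subnet_env() {
  local octet="$1"
  cat <<EOF
FLANNEL_NETWORK=10.26.0.0/16
FLANNEL_SUBNET=10.26.${octet}.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=false
EOF
}

# Usage on k8s-32 (writes the file, so it is left commented out):
# mkdir -p /run/flannel && render_subnet_env 32 > /run/flannel/subnet.env
```

Generating the file this way keeps the network range and MTU identical on every node, which is the part most easily mistyped.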

6.6 Add the systemd unit file

cat > /usr/lib/systemd/system/kube-flanneld.service << EOF

[Unit]

Description=Kubernetes flanneld

Documentation=https://github.com/coreos/flannel

After=network.target

Before=docker.service

[Service]

EnvironmentFile=/opt/cfg/kube-flanneld.conf

## ExecStartPost=/usr/bin/mk-docker-opts.sh (this line is commented out)

ExecStart=/opt/bin/flanneld \$KUBE_FLANNELD_OPTS

Restart=on-failure

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

6.7 Start and check

]# systemctl daemon-reload && systemctl start kube-flanneld && systemctl enable kube-flanneld

]# systemctl status kube-flanneld

● kube-flanneld.service - Kubernetes flanneld
   Loaded: loaded (/usr/lib/systemd/system/kube-flanneld.service; enabled; vendor preset: disabled)
   Active: active (running) since Wed 2024-02-14 23:59:27 CST; 17s ago
     Docs: https://github.com/coreos/flannel
 Main PID: 8365 (flanneld)
   CGroup: /system.slice/kube-flanneld.service
           └─8365 /opt/bin/flanneld --public-ip=192.168.26.31 --etcd-endpoints=https://192.168.26.31:2379,https://192.168.26.32:2379,https://192.168.26.33:......

k8s-31 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:2b:81:d1 brd ff:ff:ff:ff:ff:ff
    inet 192.168.26.31/24 brd 192.168.26.255 scope global noprefixroute ens32
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fe2b:81d1/64 scope link
       valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:7b:83:16:e7 brd ff:ff:ff:ff:ff:ff
    inet 10.26.31.1/24 brd 10.26.31.255 scope global docker0
       valid_lft forever preferred_lft forever

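With the host-gw backend no flannel interface is added. With the vxlan backend a flannel interface would be added.

- k8s-31: routes to the Docker container subnets on 192.168.26.32 and 192.168.26.33 have been added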

k8s-31 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.26.2 0.0.0.0 UG 100 0 0 ens32

10.26.31.0 0.0.0.0 255.255.255.0 U 0 0 0 docker0

10.26.32.0 192.168.26.32 255.255.255.0 UG 0 0 0 ens32

10.26.33.0 192.168.26.33 255.255.255.0 UG 0 0 0 ens32

192.168.26.0 0.0.0.0 255.255.255.0 U 100 0 0 ens32

- k8s-32: routes to the Docker container subnets on 192.168.26.31 and 192.168.26.33 have been added

k8s-32 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.26.2 0.0.0.0 UG 100 0 0 ens32

10.26.31.0 192.168.26.31 255.255.255.0 UG 0 0 0 ens32

10.26.32.0 0.0.0.0 255.255.255.0 U 0 0 0 docker0

10.26.33.0 192.168.26.33 255.255.255.0 UG 0 0 0 ens32

192.168.26.0 0.0.0.0 255.255.255.0 U 100 0 0 ens32

- k8s-33

k8s-33 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.26.2 0.0.0.0 UG 100 0 0 ens32

10.26.31.0 192.168.26.31 255.255.255.0 UG 0 0 0 ens32

10.26.32.0 192.168.26.32 255.255.255.0 UG 0 0 0 ens32

10.26.33.0 0.0.0.0 255.255.255.0 U 0 0 0 docker0

192.168.26.0 0.0.0.0 255.255.255.0 U 100 0 0 ens32
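With host-gw, each node should hold one route per peer: the peer's pod subnet 10.26.N.0/24 via the peer's host IP 192.168.26.N. The check can be scripted rather than read by eye. A sketch, assuming the three nodes 31, 32 and 33 of this guide and a hypothetical helper named `missing_hostgw_routes`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `route -n` output on stdin and print every expected
# host-gw route that is missing. Assumes the three nodes 31, 32, 33 used in this
# guide, where 10.26.N.0/24 is reached through gateway 192.168.26.N.
missing_hostgw_routes() {
  local self="$1" table n
  table="$(cat)"
  for n in 31 32 33; do
    [ "$n" = "$self" ] && continue
    # awk exits 0 only if a row with this destination and gateway exists.
    if ! printf '%s\n' "$table" | awk -v d="10.26.$n.0" -v g="192.168.26.$n" \
        '$1 == d && $2 == g { found = 1 } END { exit !found }'; then
      echo "missing: 10.26.$n.0/24 via 192.168.26.$n"
    fi
  done
}

# Usage on k8s-31: route -n | missing_hostgw_routes 31
```

No output means both peer routes are present; the local subnet is skipped because it is reached directly through docker0.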

6.8 Test container-to-container and container-to-host connectivity

Start a container on each of k8s-31, k8s-32 and k8s-33:

31 ~]# docker run -i -t --name test31 centos /bin/bash

[root@330e9de087a0 /]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever

4: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:0a:1a:1f:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 10.26.31.2/24 brd 10.26.31.255 scope global eth0
       valid_lft forever preferred_lft forever

32 ~]# docker run -i -t --name test32 centos /bin/bash

[root@971ef9eb40a4 /]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever

4: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:0a:1a:20:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 10.26.32.2/24 brd 10.26.32.255 scope global eth0
       valid_lft forever preferred_lft forever

33 ~]# docker run -i -t --name test33 centos /bin/bash

[root@605466b3efa9 /]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever

6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:0a:1a:21:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 10.26.33.2/24 brd 10.26.33.255 scope global eth0
       valid_lft forever preferred_lft forever

6.8.1 Container to container across hosts: from the container on k8s-31 to the containers on the other hosts

## ping the container 10.26.32.2 on k8s-32

[root@330e9de087a0 /]# ping 10.26.32.2 -c 3

PING 10.26.32.2 (10.26.32.2) 56(84) bytes of data.

64 bytes from 10.26.32.2: icmp_seq=1 ttl=62 time=0.390 ms

64 bytes from 10.26.32.2: icmp_seq=2 ttl=62 time=0.514 ms

64 bytes from 10.26.32.2: icmp_seq=3 ttl=62 time=0.249 ms

--- 10.26.32.2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.249/0.384/0.514/0.109 ms

## ping the container 10.26.33.2 on k8s-33

[root@330e9de087a0 /]# ping 10.26.33.2 -c 3

PING 10.26.33.2 (10.26.33.2) 56(84) bytes of data.

64 bytes from 10.26.33.2: icmp_seq=1 ttl=62 time=0.291 ms

64 bytes from 10.26.33.2: icmp_seq=2 ttl=62 time=0.541 ms

64 bytes from 10.26.33.2: icmp_seq=3 ttl=62 time=0.592 ms

--- 10.26.33.2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.291/0.474/0.592/0.133 ms

6.8.2 Container to host: from the container on k8s-31 to every host

[root@330e9de087a0 /]# ping 192.168.26.31 -c 3

PING 192.168.26.31 (192.168.26.31) 56(84) bytes of data.

64 bytes from 192.168.26.31: icmp_seq=1 ttl=64 time=0.036 ms

64 bytes from 192.168.26.31: icmp_seq=2 ttl=64 time=0.034 ms

64 bytes from 192.168.26.31: icmp_seq=3 ttl=64 time=0.035 ms

--- 192.168.26.31 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 1999ms

rtt min/avg/max/mdev = 0.034/0.035/0.036/0.000 ms

[root@330e9de087a0 /]# ping 192.168.26.32 -c 3

PING 192.168.26.32 (192.168.26.32) 56(84) bytes of data.

64 bytes from 192.168.26.32: icmp_seq=1 ttl=63 time=0.345 ms

64 bytes from 192.168.26.32: icmp_seq=2 ttl=63 time=0.630 ms

64 bytes from 192.168.26.32: icmp_seq=3 ttl=63 time=0.524 ms

--- 192.168.26.32 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.345/0.499/0.630/0.120 ms

[root@330e9de087a0 /]# ping 192.168.26.33 -c 3

PING 192.168.26.33 (192.168.26.33) 56(84) bytes of data.

64 bytes from 192.168.26.33: icmp_seq=1 ttl=63 time=0.337 ms

64 bytes from 192.168.26.33: icmp_seq=2 ttl=63 time=0.649 ms

64 bytes from 192.168.26.33: icmp_seq=3 ttl=63 time=0.505 ms

--- 192.168.26.33 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.337/0.497/0.649/0.127 ms

6.8.3 Host to container: from host k8s-31 to the containers on every host

k8s-31 ~]# ping 10.26.31.2 -c 3

PING 10.26.31.2 (10.26.31.2) 56(84) bytes of data.

64 bytes from 10.26.31.2: icmp_seq=1 ttl=64 time=0.034 ms

64 bytes from 10.26.31.2: icmp_seq=2 ttl=64 time=0.031 ms

64 bytes from 10.26.31.2: icmp_seq=3 ttl=64 time=0.027 ms

--- 10.26.31.2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 1999ms

rtt min/avg/max/mdev = 0.027/0.030/0.034/0.007 ms

k8s-31 ~]# ping 10.26.32.2 -c 3

PING 10.26.32.2 (10.26.32.2) 56(84) bytes of data.

64 bytes from 10.26.32.2: icmp_seq=1 ttl=63 time=0.285 ms

64 bytes from 10.26.32.2: icmp_seq=2 ttl=63 time=0.643 ms

64 bytes from 10.26.32.2: icmp_seq=3 ttl=63 time=0.528 ms

--- 10.26.32.2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 1999ms

rtt min/avg/max/mdev = 0.285/0.485/0.643/0.150 ms

k8s-31 ~]# ping 10.26.33.2 -c 3

PING 10.26.33.2 (10.26.33.2) 56(84) bytes of data.

64 bytes from 10.26.33.2: icmp_seq=1 ttl=63 time=0.457 ms

64 bytes from 10.26.33.2: icmp_seq=2 ttl=63 time=0.522 ms

64 bytes from 10.26.33.2: icmp_seq=3 ttl=63 time=0.513 ms

--- 10.26.33.2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.457/0.497/0.522/0.034 ms

6.8.4 Container to Baidu (test only when Internet access is available)

[root@330e9de087a0 /]# ping www.baidu.com -c 3

PING www.a.shifen.com (183.2.172.185) 56(84) bytes of data.

64 bytes from www.baidu.com (183.2.172.185): icmp_seq=1 ttl=127 time=9.85 ms

64 bytes from www.baidu.com (183.2.172.185): icmp_seq=2 ttl=127 time=8.76 ms

64 bytes from www.baidu.com (183.2.172.185): icmp_seq=3 ttl=127 time=9.81 ms

--- www.a.shifen.com ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2003ms

rtt min/avg/max/mdev = 8.758/9.472/9.849/0.517 ms

6.8.5 Tests on k8s-32 and k8s-33

Repeat the steps above.

7. Kubernetes core components

7.1 Preparation

## Method 1: extract only the required binaries from the archive into /opt/bin

k8s-31 app]# tar -xf kubernetes-server-linux-amd64.tar.gz --strip-components=3 -C /opt/bin kubernetes/server/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy}

k8s-31 app]# ls /opt/bin/kube*

kube-apiserver kube-controller-manager kubectl kubelet kube-proxy kube-scheduler

## Alternatively, extract everything

k8s-31 app]# tar -zxvf kubernetes-server-linux-amd64.tar.gz

k8s-31 app]# mv kubernetes /opt/bin/kubernetes-v1.29.2

k8s-31 app]# rm -rf /opt/bin/kubernetes-v1.29.2/{addons,kubernetes-src.tar.gz,LICENSES}

k8s-31 app]# ll /opt/bin/kubernetes-v1.29.2

total 0

drwxr-xr-x 3 root root 17 Jan 18 00:09 server

k8s-31 app]# rm -rf /opt/bin/kubernetes-v1.29.2/server/bin/*.tar

k8s-31 app]# rm -rf /opt/bin/kubernetes-v1.29.2/server/bin/*_tag

k8s-31 app]# ll /opt/bin/kubernetes-v1.29.2/server/bin/

total 717160
-rwxr-xr-x 1 root root 61333504 Feb 14 18:57 apiextensions-apiserver
-rwxr-xr-x 1 root root 48246784 Feb 14 18:57 kubeadm
-rwxr-xr-x 1 root root 58761216 Feb 14 18:57 kube-aggregator
-rwxr-xr-x 1 root root 123719680 Feb 14 18:57 kube-apiserver
-rwxr-xr-x 1 root root 118349824 Feb 14 18:57 kube-controller-manager
-rwxr-xr-x 1 root root 49704960 Feb 14 18:57 kubectl
-rwxr-xr-x 1 root root 48087040 Feb 14 18:57 kubectl-convert
-rwxr-xr-x 1 root root 111812608 Feb 14 18:57 kubelet
-rwxr-xr-x 1 root root 1613824 Feb 14 18:57 kube-log-runner
-rwxr-xr-x 1 root root 55263232 Feb 14 18:57 kube-proxy
-rwxr-xr-x 1 root root 55943168 Feb 14 18:57 kube-scheduler
-rwxr-xr-x 1 root root 1536000 Feb 14 18:57 mounter

k8s-31 app]# ln -s /opt/bin/kubernetes-v1.29.2/server/bin /opt/bin/kubernetes

k8s-31 app]# ln -s /opt/bin/kubernetes-v1.29.2/server/bin/kubectl /usr/local/bin/kubectl

k8s-31 app]# /opt/bin/kubernetes/kubelet --version

Kubernetes v1.29.2

## Copy the binaries to the other nodes and create the symlinks there

]# scp -r /opt/bin/kubernetes-v1.29.2/ root@k8s-32:/opt/bin/

]# scp -r /opt/bin/kubernetes-v1.29.2/ root@k8s-33:/opt/bin/

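## Create the symlinks as above

7.2 Nginx high-availability setup

With the nginx approach, every component reaches kube-apiserver through a local proxy, so the kubeconfig files use --server=https://127.0.0.1:8443

- Install the development toolchain (see the appendix), then compile and install nginx

## Compile and install on a host that has a build environment, then copy the result to the cluster nodes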

app]# tar xvf nginx-1.24.0.tar.gz

app]# cd nginx-1.24.0

nginx-1.24.0]# ./configure --with-stream --without-http --without-http_uwsgi_module --without-http_scgi_module --without-http_fastcgi_module

......

nginx-1.24.0]# make && make install

......

nginx-1.24.0]# ls -l /usr/local/nginx

drwxr-xr-x 2 root root 333 Feb 15 09:08 conf

drwxr-xr-x 2 root root 40 Feb 15 09:08 html

drwxr-xr-x 2 root root 6 Feb 15 09:08 logs

drwxr-xr-x 2 root root 19 Feb 15 09:08 sbin

- nginx configuration file /usr/local/nginx/conf/kube-nginx.conf

# Write the nginx configuration file (the heredoc delimiter is quoted so the shell does not expand $remote_addr)

cat > /usr/local/nginx/conf/kube-nginx.conf << 'EOF'
worker_processes 1;
events {
    worker_connections 1024;
}
stream {
    upstream backend {
        least_conn;
        hash $remote_addr consistent;
        server 192.168.26.31:6443 max_fails=3 fail_timeout=30s;
        server 192.168.26.32:6443 max_fails=3 fail_timeout=30s;
        server 192.168.26.33:6443 max_fails=3 fail_timeout=30s;
    }
    server {
        listen 127.0.0.1:8443;
        proxy_connect_timeout 1s;
        proxy_pass backend;
    }
}

EOF
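Because the nginx configuration contains the nginx variable `$remote_addr`, the way the heredoc delimiter is written decides what ends up in the file. The sketch below only illustrates standard shell heredoc behaviour; it does not touch nginx:

```shell
#!/usr/bin/env bash
# Make sure no shell variable of that name exists, as on a fresh login shell.
unset remote_addr

# Unquoted delimiter: the shell expands $remote_addr (to an empty string here)
# before the text is written.
unquoted="$(cat <<EOF
hash $remote_addr consistent;
EOF
)"

# Quoted delimiter: the text is kept literally, which is what nginx needs.
quoted="$(cat <<'EOF'
hash $remote_addr consistent;
EOF
)"

printf 'unquoted: %s\n' "$unquoted"
printf 'quoted:   %s\n' "$quoted"
```

After writing the file, `grep remote_addr /usr/local/nginx/conf/kube-nginx.conf` is a quick way to confirm the variable survived.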

- Copy to the cluster nodes

nginx-1.24.0]# scp -r /usr/local/nginx root@k8s-32:/usr/local/nginx/

nginx-1.24.0]# scp -r /usr/local/nginx root@k8s-33:/usr/local/nginx/

- systemd unit file /etc/systemd/system/kube-nginx.service

# Write the unit file

cat > /etc/systemd/system/kube-nginx.service <<EOF

[Unit]

Description=kube-apiserver nginx proxy

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=forking

ExecStartPre=/usr/local/nginx/sbin/nginx -c /usr/local/nginx/conf/kube-nginx.conf -p /usr/local/nginx -t

ExecStart=/usr/local/nginx/sbin/nginx -c /usr/local/nginx/conf/kube-nginx.conf -p /usr/local/nginx

ExecReload=/usr/local/nginx/sbin/nginx -c /usr/local/nginx/conf/kube-nginx.conf -p /usr/local/nginx -s reload

PrivateTmp=true

Restart=always

RestartSec=5

StartLimitInterval=0

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

- Enable the service at boot and check that it started

# Enable at boot

]# systemctl enable --now kube-nginx

]# systemctl restart kube-nginx

]# systemctl status kube-nginx

● kube-nginx.service - kube-apiserver nginx proxy
   Loaded: loaded (/etc/systemd/system/kube-nginx.service; enabled; vendor preset: disabled)
   Active: active (running) since Thu 2024-02-15 09:12:08 CST; 22s ago
  Process: 80627 ExecStart=/usr/local/nginx/sbin/nginx -c /usr/local/nginx/conf/kube-nginx.conf -p /usr/local/nginx (code=exited, status=0/SUCCESS)
  Process: 80625 ExecStartPre=/usr/local/nginx/sbin/nginx -c /usr/local/nginx/conf/kube-nginx.conf -p /usr/local/nginx -t (code=exited, status=0/SUCCESS)
 Main PID: 80629 (nginx)
    Tasks: 2
   Memory: 804.0K
   CGroup: /system.slice/kube-nginx.service
           ├─80629 nginx: master process /usr/local/nginx/sbin/nginx -c /usr/local/nginx/conf/kube-nginx.conf -p /usr/local/nginx
           └─80630 nginx: worker process

......

7.3 Deploy kube-apiserver

- k8s-31: /usr/lib/systemd/system/kube-apiserver.service

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/opt/bin/kubernetes/kube-apiserver \\
  --v=2 \\
  --allow-privileged=true \\
  --bind-address=0.0.0.0 \\
  --secure-port=6443 \\
  --advertise-address=192.168.26.31 \\
  --service-cluster-ip-range=10.168.0.0/16 \\
  --service-node-port-range=30000-39999 \\
  --etcd-servers=https://192.168.26.31:2379,https://192.168.26.32:2379,https://192.168.26.33:2379 \\
  --etcd-cafile=/opt/cert/etcd-ca.pem \\
  --etcd-certfile=/opt/cert/etcd.pem \\
  --etcd-keyfile=/opt/cert/etcd-key.pem \\
  --client-ca-file=/opt/cert/ca.pem \\
  --tls-cert-file=/opt/cert/apiserver.pem \\
  --tls-private-key-file=/opt/cert/apiserver-key.pem \\
  --kubelet-client-certificate=/opt/cert/apiserver.pem \\
  --kubelet-client-key=/opt/cert/apiserver-key.pem \\
  --service-account-key-file=/opt/cert/sa.pub \\
  --service-account-signing-key-file=/opt/cert/sa.key \\
  --service-account-issuer=https://kubernetes.default.svc.cluster.local \\
  --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \\
  --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \\
  --authorization-mode=Node,RBAC \\
  --enable-bootstrap-token-auth=true \\
  --requestheader-client-ca-file=/opt/cert/front-proxy-ca.pem \\
  --proxy-client-cert-file=/opt/cert/front-proxy-client.pem \\
  --proxy-client-key-file=/opt/cert/front-proxy-client-key.pem \\
  --requestheader-allowed-names=aggregator \\
  --requestheader-group-headers=X-Remote-Group \\
  --requestheader-extra-headers-prefix=X-Remote-Extra- \\
  --requestheader-username-headers=X-Remote-User \\
  --enable-aggregator-routing=true
# --feature-gates=IPv6DualStack=true
# --token-auth-file=/opt/cert/token.csv

Restart=on-failure
RestartSec=10s
LimitNOFILE=65535

[Install]
WantedBy=multi-user.target
EOF

- k8s-32: /usr/lib/systemd/system/kube-apiserver.service

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/opt/bin/kubernetes/kube-apiserver \\
  --v=2 \\
  --allow-privileged=true \\
  --bind-address=0.0.0.0 \\
  --secure-port=6443 \\
  --advertise-address=192.168.26.32 \\
  --service-cluster-ip-range=10.168.0.0/16 \\
  --service-node-port-range=30000-39999 \\
  --etcd-servers=https://192.168.26.31:2379,https://192.168.26.32:2379,https://192.168.26.33:2379 \\
  --etcd-cafile=/opt/cert/etcd-ca.pem \\
  --etcd-certfile=/opt/cert/etcd.pem \\
  --etcd-keyfile=/opt/cert/etcd-key.pem \\
  --client-ca-file=/opt/cert/ca.pem \\
  --tls-cert-file=/opt/cert/apiserver.pem \\
  --tls-private-key-file=/opt/cert/apiserver-key.pem \\
  --kubelet-client-certificate=/opt/cert/apiserver.pem \\
  --kubelet-client-key=/opt/cert/apiserver-key.pem \\
  --service-account-key-file=/opt/cert/sa.pub \\
  --service-account-signing-key-file=/opt/cert/sa.key \\
  --service-account-issuer=https://kubernetes.default.svc.cluster.local \\
  --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \\
  --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \\
  --authorization-mode=Node,RBAC \\
  --enable-bootstrap-token-auth=true \\
  --requestheader-client-ca-file=/opt/cert/front-proxy-ca.pem \\
  --proxy-client-cert-file=/opt/cert/front-proxy-client.pem \\
  --proxy-client-key-file=/opt/cert/front-proxy-client-key.pem \\
  --requestheader-allowed-names=aggregator \\
  --requestheader-group-headers=X-Remote-Group \\
  --requestheader-extra-headers-prefix=X-Remote-Extra- \\
  --requestheader-username-headers=X-Remote-User \\
  --enable-aggregator-routing=true
# --feature-gates=IPv6DualStack=true
# --token-auth-file=/opt/cert/token.csv

Restart=on-failure
RestartSec=10s
LimitNOFILE=65535

[Install]
WantedBy=multi-user.target
EOF

- k8s-33: /usr/lib/systemd/system/kube-apiserver.service

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/opt/bin/kubernetes/kube-apiserver \\
  --v=2 \\
  --allow-privileged=true \\
  --bind-address=0.0.0.0 \\
  --secure-port=6443 \\
  --advertise-address=192.168.26.33 \\
  --service-cluster-ip-range=10.168.0.0/16 \\
  --service-node-port-range=30000-39999 \\
  --etcd-servers=https://192.168.26.31:2379,https://192.168.26.32:2379,https://192.168.26.33:2379 \\
  --etcd-cafile=/opt/cert/etcd-ca.pem \\
  --etcd-certfile=/opt/cert/etcd.pem \\
  --etcd-keyfile=/opt/cert/etcd-key.pem \\
  --client-ca-file=/opt/cert/ca.pem \\
  --tls-cert-file=/opt/cert/apiserver.pem \\
  --tls-private-key-file=/opt/cert/apiserver-key.pem \\
  --kubelet-client-certificate=/opt/cert/apiserver.pem \\
  --kubelet-client-key=/opt/cert/apiserver-key.pem \\
  --service-account-key-file=/opt/cert/sa.pub \\
  --service-account-signing-key-file=/opt/cert/sa.key \\
  --service-account-issuer=https://kubernetes.default.svc.cluster.local \\
  --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \\
  --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \\
  --authorization-mode=Node,RBAC \\
  --enable-bootstrap-token-auth=true \\
  --requestheader-client-ca-file=/opt/cert/front-proxy-ca.pem \\
  --proxy-client-cert-file=/opt/cert/front-proxy-client.pem \\
  --proxy-client-key-file=/opt/cert/front-proxy-client-key.pem \\
  --requestheader-allowed-names=aggregator \\
  --requestheader-group-headers=X-Remote-Group \\
  --requestheader-extra-headers-prefix=X-Remote-Extra- \\
  --requestheader-username-headers=X-Remote-User \\
  --enable-aggregator-routing=true
# --feature-gates=IPv6DualStack=true
# --token-auth-file=/opt/cert/token.csv

Restart=on-failure
RestartSec=10s
LimitNOFILE=65535

[Install]
WantedBy=multi-user.target
EOF
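The three kube-apiserver units differ only in --advertise-address. One way to avoid maintaining three copies is to keep a single unit and rewrite that flag per node. A sketch, assuming a hypothetical helper named `with_advertise_address` and root SSH access between the nodes as used elsewhere in this guide:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a kube-apiserver unit on stdin and print it with
# --advertise-address set to the given IP. Every other line passes through.
with_advertise_address() {
  local ip="$1"
  sed -E "s#(--advertise-address=)[0-9.]+#\1${ip}#"
}

# Usage from k8s-31 (copies files to other nodes, so it is left commented out):
# for n in 32 33; do
#   with_advertise_address "192.168.26.$n" < /usr/lib/systemd/system/kube-apiserver.service \
#     | ssh "root@k8s-$n" 'cat > /usr/lib/systemd/system/kube-apiserver.service'
# done
```

The substitution only matches a dotted numeric address after the flag name, so the etcd endpoints and all other flags stay as written.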

- Start kube-apiserver (on all master nodes)

]# systemctl daemon-reload && systemctl enable --now kube-apiserver

## Check that the service started correctly

]# systemctl status kube-apiserver

● kube-apiserver.service - Kubernetes API Server
   Loaded: loaded (/usr/lib/systemd/system/kube-apiserver.service; enabled; vendor preset: disabled)
   Active: active (running) since Thu 2024-02-15 09:20:18 CST; 18s ago
     Docs: https://github.com/kubernetes/kubernetes
 Main PID: 81286 (kube-apiserver)
    Tasks: 8
   Memory: 292.5M
   CGroup: /system.slice/kube-apiserver.service
           └─81286 /opt/bin/kubernetes/kube-apiserver --v=2 --allow-privileged=true --bind-address=0.0.0.0 --secure-port=6443 --advertise-address=192.168.2...

......

]# curl -k --cacert /opt/cert/ca.pem \

--cert /opt/cert/apiserver.pem \

--key /opt/cert/apiserver-key.pem \

https://192.168.26.31:6443/healthz

ok

]# ip_head='192.168.26';for i in 31 32 33;do \

curl -k --cacert /opt/cert/ca.pem \

--cert /opt/cert/apiserver.pem \

--key /opt/cert/apiserver-key.pem \

https://${ip_head}.${i}:6443/healthz; \

done

okokok
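The /healthz endpoint returns `ok` without a trailing newline, which is why the loop prints `okokok`. A sketch that reports one line per API server and returns a non-zero status if any of them fails. The helper names `fetch_healthz` and `check_apiservers` are hypothetical, and the curl call drops `-k` on the assumption that apiserver.pem lists the node IPs as SANs; add `-k` back if verification fails in your setup.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the curl call from this section.
# Assumption: the server certificate is valid for the node IPs, so -k is omitted.
fetch_healthz() {
  curl -s --cacert /opt/cert/ca.pem \
    --cert /opt/cert/apiserver.pem \
    --key /opt/cert/apiserver-key.pem "$1"
}

# Hypothetical helper: query every API server with the given fetch command
# (default: fetch_healthz) and print one result line per server.
check_apiservers() {
  local fetch="${1:-fetch_healthz}" i url body rc=0
  for i in 31 32 33; do
    url="https://192.168.26.${i}:6443/healthz"
    body="$("$fetch" "$url" 2>/dev/null)"
    if [ "$body" = "ok" ]; then
      echo "$url ok"
    else
      echo "$url FAILED"
      rc=1
    fi
  done
  return "$rc"
}

# Usage on a master node: check_apiservers
```

Passing the fetch command as a parameter keeps the loop testable without a running cluster.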

7.4 Configure kubectl

- Create admin.kubeconfig. With the nginx approach, use --server=https://127.0.0.1:8443. Run this once, on one node only.

kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/cert/ca.pem \
  --embed-certs=true \
  --server=https://127.0.0.1:8443 \
  --kubeconfig=/opt/cert/admin.kubeconfig

kubectl config set-credentials kubernetes-admin \
  --client-certificate=/opt/cert/admin.pem \
  --client-key=/opt/cert/admin-key.pem \
  --embed-certs=true \
  --kubeconfig=/opt/cert/admin.kubeconfig

kubectl config set-context kubernetes-admin@kubernetes \
  --cluster=kubernetes \
  --user=kubernetes-admin \
  --kubeconfig=/opt/cert/admin.kubeconfig

kubectl config use-context kubernetes-admin@kubernetes --kubeconfig=/opt/cert/admin.kubeconfig

]# mkdir ~/.kube

]# cp /opt/cert/admin.kubeconfig ~/.kube/config

]# scp -r ~/.kube root@k8s-32:~/.

]# scp -r ~/.kube root@k8s-33:~/.
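Building a certificate-based kubeconfig always follows the same four `kubectl config` steps (set-cluster, set-credentials, set-context, use-context), and the controller-manager and scheduler kubeconfigs in this guide repeat them. A sketch of a wrapper, assuming a hypothetical function name `make_kubeconfig`; setting `KUBECTL=echo` prints the commands instead of running them:

```shell
#!/usr/bin/env bash
# Hypothetical helper wrapping the four `kubectl config` steps.
# KUBECTL defaults to kubectl; KUBECTL=echo gives a dry run.
make_kubeconfig() {
  local user="$1" cert="$2" key="$3" out="$4"
  local kubectl="${KUBECTL:-kubectl}"
  "$kubectl" config set-cluster kubernetes \
    --certificate-authority=/opt/cert/ca.pem --embed-certs=true \
    --server=https://127.0.0.1:8443 --kubeconfig="$out" &&
  "$kubectl" config set-credentials "$user" \
    --client-certificate="$cert" --client-key="$key" \
    --embed-certs=true --kubeconfig="$out" &&
  "$kubectl" config set-context "$user@kubernetes" \
    --cluster=kubernetes --user="$user" --kubeconfig="$out" &&
  "$kubectl" config use-context "$user@kubernetes" --kubeconfig="$out"
}

# Usage:
# make_kubeconfig kubernetes-admin /opt/cert/admin.pem /opt/cert/admin-key.pem /opt/cert/admin.kubeconfig
```

The token-based bootstrap kubeconfig does not fit this wrapper, because its credentials step takes `--token` instead of a certificate and key.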

- Configure kubectl subcommand completion

~]# echo 'source <(kubectl completion bash)' >> ~/.bashrc

~]# yum -y install bash-completion

~]# source /usr/share/bash-completion/bash_completion

~]# source <(kubectl completion bash)

~]# kubectl get componentstatuses

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Unhealthy Get "https://127.0.0.1:10259/healthz": dial tcp 127.0.0.1:10259: connect: connection refused

controller-manager Unhealthy Get "https://127.0.0.1:10257/healthz": dial tcp 127.0.0.1:10257: connect: connection refused

etcd-0 Healthy ok

7.5 Deploy kube-controller-manager

Configure every master node; the configuration is identical on all of them. 10.26.0.0/16 is the pod network; set your own range as needed.

- Create the unit file: /usr/lib/systemd/system/kube-controller-manager.service

cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/opt/bin/kubernetes/kube-controller-manager \\
  --v=2 \\
  --bind-address=0.0.0.0 \\
  --root-ca-file=/opt/cert/ca.pem \\
  --cluster-signing-cert-file=/opt/cert/ca.pem \\
  --cluster-signing-key-file=/opt/cert/ca-key.pem \\
  --service-account-private-key-file=/opt/cert/sa.key \\
  --kubeconfig=/opt/cert/controller-manager.kubeconfig \\
  --leader-elect=true \\
  --use-service-account-credentials=true \\
  --node-monitor-grace-period=40s \\
  --node-monitor-period=5s \\
  --controllers=*,bootstrapsigner,tokencleaner \\
  --allocate-node-cidrs=true \\
  --service-cluster-ip-range=10.168.0.0/16 \\
  --cluster-cidr=10.26.0.0/16 \\
  --node-cidr-mask-size-ipv4=24 \\
  --requestheader-client-ca-file=/opt/cert/front-proxy-ca.pem

Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target
EOF

- Create the kube-controller-manager kubeconfig (/opt/cert/controller-manager.kubeconfig). With the nginx approach, use --server=https://127.0.0.1:8443. Run this once, on one node only.

kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/cert/ca.pem \
  --embed-certs=true \
  --server=https://127.0.0.1:8443 \
  --kubeconfig=/opt/cert/controller-manager.kubeconfig

## Set a context entry

kubectl config set-context system:kube-controller-manager@kubernetes \
  --cluster=kubernetes \
  --user=system:kube-controller-manager \
  --kubeconfig=/opt/cert/controller-manager.kubeconfig

## Set a user entry

kubectl config set-credentials system:kube-controller-manager \
  --client-certificate=/opt/cert/controller-manager.pem \
  --client-key=/opt/cert/controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=/opt/cert/controller-manager.kubeconfig

## Set the default context

kubectl config use-context system:kube-controller-manager@kubernetes \
  --kubeconfig=/opt/cert/controller-manager.kubeconfig

## Copy /opt/cert/controller-manager.kubeconfig to the other nodes

]# scp /opt/cert/controller-manager.kubeconfig root@k8s-32:/opt/cert/controller-manager.kubeconfig

]# scp /opt/cert/controller-manager.kubeconfig root@k8s-33:/opt/cert/controller-manager.kubeconfig

- Start kube-controller-manager and check its status

]# systemctl daemon-reload

]# systemctl enable --now kube-controller-manager

]# systemctl status kube-controller-manager

● kube-controller-manager.service - Kubernetes Controller Manager
   Loaded: loaded (/usr/lib/systemd/system/kube-controller-manager.service; enabled; vendor preset: disabled)
   Active: active (running) since Thu 2024-02-15 09:30:49 CST; 21s ago
     Docs: https://github.com/kubernetes/kubernetes
 Main PID: 82205 (kube-controller)
    Tasks: 5
   Memory: 138.1M
   CGroup: /system.slice/kube-controller-manager.service
           └─82205 /opt/bin/kubernetes/kube-controller-manager --v=2 --bind-address=0.0.0.0 --root-ca-file=/opt/cert/ca.pem --cluster-signing-cert-file=/op...

.......

]# kubectl get componentstatuses

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Unhealthy Get "https://127.0.0.1:10259/healthz": dial tcp 127.0.0.1:10259: connect: connection refused

controller-manager Healthy ok

etcd-0 Healthy ok

7.6 Deploy kube-scheduler

- Create the unit file: /usr/lib/systemd/system/kube-scheduler.service

cat > /usr/lib/systemd/system/kube-scheduler.service << EOF
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/opt/bin/kubernetes/kube-scheduler \\
  --v=2 \\
  --bind-address=0.0.0.0 \\
  --leader-elect=true \\
  --kubeconfig=/opt/cert/scheduler.kubeconfig
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target
EOF

- Create scheduler.kubeconfig. With the nginx approach, use --server=https://127.0.0.1:8443. Run this once, on one node only.

kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/cert/ca.pem \
  --embed-certs=true \
  --server=https://127.0.0.1:8443 \
  --kubeconfig=/opt/cert/scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler \
  --client-certificate=/opt/cert/scheduler.pem \
  --client-key=/opt/cert/scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=/opt/cert/scheduler.kubeconfig

kubectl config set-context system:kube-scheduler@kubernetes \
  --cluster=kubernetes \
  --user=system:kube-scheduler \
  --kubeconfig=/opt/cert/scheduler.kubeconfig

kubectl config use-context system:kube-scheduler@kubernetes \
  --kubeconfig=/opt/cert/scheduler.kubeconfig

## Copy /opt/cert/scheduler.kubeconfig to the other nodes

]# scp /opt/cert/scheduler.kubeconfig root@k8s-32:/opt/cert/scheduler.kubeconfig

]# scp /opt/cert/scheduler.kubeconfig root@k8s-33:/opt/cert/scheduler.kubeconfig

- Start the service and check its status

]# systemctl daemon-reload

]# systemctl enable --now kube-scheduler

]# systemctl status kube-scheduler

● kube-scheduler.service - Kubernetes Scheduler
   Loaded: loaded (/usr/lib/systemd/system/kube-scheduler.service; enabled; vendor preset: disabled)
   Active: active (running) since Thu 2024-02-15 09:34:40 CST; 21s ago
     Docs: https://github.com/kubernetes/kubernetes
 Main PID: 82568 (kube-scheduler)
    Tasks: 7
   Memory: 66.1M
   CGroup: /system.slice/kube-scheduler.service
           └─82568 /opt/bin/kubernetes/kube-scheduler --v=2 --bind-address=0.0.0.0 --leader-elect=true --kubeconfig=/opt/cert/scheduler.kubeconfig

......

]# kubectl get componentstatuses

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy ok
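The componentstatuses table is checked by eye after each step. A small filter can turn it into a pass/fail signal, for example in a deployment script. A sketch, assuming the column layout shown above and a hypothetical helper named `unhealthy_components`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get componentstatuses` output on stdin and
# print the name of every component whose STATUS column is not Healthy.
# Lines before the NAME header (such as the deprecation warning) are ignored.
unhealthy_components() {
  awk '$1 == "NAME" { seen = 1; next } seen && $2 != "Healthy" { print $1 }'
}

# Usage: kubectl get componentstatuses 2>/dev/null | unhealthy_components
```

An empty result means every listed component reported Healthy. Since the ComponentStatus API is deprecated, as the warning says, this is a convenience check rather than a long-term monitoring approach.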

7.7 Configure TLS bootstrapping

- Create bootstrap-kubelet.kubeconfig. With the nginx approach, use --server=https://127.0.0.1:8443. Run this once, on one node only.

kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/cert/ca.pem \
  --embed-certs=true --server=https://127.0.0.1:8443 \
  --kubeconfig=/opt/cert/bootstrap-kubelet.kubeconfig

kubectl config set-credentials tls-bootstrap-token-user \
  --token=bc5692.ebcfbe81d917383c \
  --kubeconfig=/opt/cert/bootstrap-kubelet.kubeconfig

kubectl config set-context tls-bootstrap-token-user@kubernetes \
  --cluster=kubernetes \
  --user=tls-bootstrap-token-user \
  --kubeconfig=/opt/cert/bootstrap-kubelet.kubeconfig

kubectl config use-context tls-bootstrap-token-user@kubernetes \
  --kubeconfig=/opt/cert/bootstrap-kubelet.kubeconfig

## Copy /opt/cert/bootstrap-kubelet.kubeconfig to the other nodes

]# scp /opt/cert/bootstrap-kubelet.kubeconfig root@k8s-32:/opt/cert/bootstrap-kubelet.kubeconfig

]# scp /opt/cert/bootstrap-kubelet.kubeconfig root@k8s-33:/opt/cert/bootstrap-kubelet.kubeconfig
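The two scp commands above follow one pattern, so they can be folded into a loop. This is a minimal sketch, assuming the host names k8s-32 and k8s-33 used in this guide and working root SSH access; the function name distribute and the DRY_RUN switch are made up for illustration and are not part of any tool.

```shell
#!/usr/bin/env bash
# Copy one file to the same path on each listed host.
# Assumption: DRY_RUN is an illustrative switch; when it is non-empty,
# the scp commands are printed instead of executed.
distribute() {
  local file="$1"
  shift
  local host
  for host in "$@"; do
    ${DRY_RUN:+echo} scp "$file" "root@${host}:${file}"
  done
}

# Dry run: show what would be copied without touching the network.
DRY_RUN=1 distribute /opt/cert/bootstrap-kubelet.kubeconfig k8s-32 k8s-33
```

Dropping the DRY_RUN=1 prefix would run the copies for real. The same helper would apply to the other kubeconfig files that this guide distributes.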

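The token is defined in bootstrap.secret.yaml (attached YAML file: bootstrap.secret.yaml). To use a different token, change it in that file and keep the --token value above in sync.

## Generate a token (you can also define your own)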

~]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

bc5692ebcfbe81d917383c89e60d4388
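The 32-character string above is longer than a bootstrap token needs. The first 6 characters become the token-id, characters 7-22 become the token-secret, and the two joined as id.secret form the value passed to --token. Below is a minimal sketch of that split, assuming lowercase hex input like the output of the command above; the function name split_bootstrap_token is made up for illustration.

```shell
#!/usr/bin/env bash
# Turn a random hex string into a bootstrap token of the form <id>.<secret>.
# Assumption: the input is lowercase hex, as produced by the od command above.
split_bootstrap_token() {
  local raw="$1"
  case "$raw" in
    ''|*[!0-9a-f]*)
      echo "token source must be lowercase hex" >&2
      return 1
      ;;
  esac
  if [ "${#raw}" -lt 22 ]; then
    echo "need at least 22 characters" >&2
    return 1
  fi
  local token_id token_secret
  token_id=$(printf '%s' "$raw" | cut -c1-6)       # characters 1-6
  token_secret=$(printf '%s' "$raw" | cut -c7-22)  # characters 7-22
  printf '%s.%s\n' "$token_id" "$token_secret"
}

split_bootstrap_token bc5692ebcfbe81d917383c89e60d4388
# prints bc5692.ebcfbe81d917383c
```

The part before the dot also has to match the Secret name suffix (bootstrap-token-bc5692 in this guide).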

- bootstrap.secret.yaml

## Modify:

apiVersion: v1
kind: Secret
metadata:
  name: bootstrap-token-bc5692  ## change: first 6 characters of the token
  namespace: kube-system
type: bootstrap.kubernetes.io/token
stringData:
  description: "The default bootstrap token generated by 'kubelet '."
  token-id: bc5692  ## change: first 6 characters of the token
  token-secret: ebcfbe81d917383c  ## change: characters 7-22 of the token (16 characters)
...

yaml]# kubectl create -f bootstrap.secret.yaml

secret/bootstrap-token-bc5692 created

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

clusterrolebinding.rbac.authorization.k8s.io/node-autoapprove-bootstrap created

clusterrolebinding.rbac.authorization.k8s.io/node-autoapprove-certificate-rotation created

clusterrole.rbac.authorization.k8s.io/system:kube-apiserver-to-kubelet created

clusterrolebinding.rbac.authorization.k8s.io/system:kube-apiserver created

7.8 Deploy kubelet

- Create the directory

~]# mkdir /data/kubernetes/kubelet -p

- Unit file /usr/lib/systemd/system/kubelet.service. Docker is used as the container runtime by default (through cri-dockerd).

cat > /usr/lib/systemd/system/kubelet.service << EOF
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/kubernetes/kubernetes
After=cri-dockerd.service
Requires=cri-dockerd.service

[Service]
WorkingDirectory=/data/kubernetes/kubelet
ExecStart=/opt/bin/kubernetes/kubelet \\
  --bootstrap-kubeconfig=/opt/cert/bootstrap-kubelet.kubeconfig \\
  --cert-dir=/opt/cert \\
  --kubeconfig=/opt/cert/kubelet.kubeconfig \\
  --config=/opt/cfg/kubelet.json \\
  --container-runtime-endpoint=unix:///var/run/cri-dockerd.sock \\
  --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9 \\
  --root-dir=/data/kubernetes/kubelet \\
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

/opt/cert/kubelet.kubeconfig is generated automatically; if it already exists, delete it.

- On all k8s nodes, create the kubelet configuration file /opt/cfg/kubelet.json

cat > /opt/cfg/kubelet.json << EOF
{
  "kind": "KubeletConfiguration",
  "apiVersion": "kubelet.config.k8s.io/v1beta1",
  "authentication": {
    "x509": {
      "clientCAFile": "/opt/cert/ca.pem"
    },
    "webhook": {
      "enabled": true,
      "cacheTTL": "2m0s"
    },
    "anonymous": {
      "enabled": false
    }
  },
  "authorization": {
    "mode": "Webhook",
    "webhook": {
      "cacheAuthorizedTTL": "5m0s",
      "cacheUnauthorizedTTL": "30s"
    }
  },
  "address": "192.168.26.31",
  "port": 10250,
  "readOnlyPort": 10255,
  "cgroupDriver": "systemd",
  "hairpinMode": "promiscuous-bridge",
  "serializeImagePulls": false,
  "clusterDomain": "cluster.local.",
  "clusterDNS": ["10.168.0.2"]
}
EOF

Note: set "address" to each node's own IP: "address": "192.168.26.31" on k8s-31, "address": "192.168.26.32" on k8s-32, and "address": "192.168.26.33" on k8s-33.
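Since only the "address" value differs between nodes, the per-node edit can be scripted instead of done by hand. This is a rough sketch, assuming the file contains exactly one "address" key as in the configuration above; the function name set_kubelet_address is made up for illustration.

```shell
#!/usr/bin/env bash
# Print a copy of a kubelet.json file with "address" set to the given IP.
# Assumption: the file has a single "address" key, as in this guide.
set_kubelet_address() {
  local template="$1"
  local node_ip="$2"
  sed "s/\"address\": *\"[^\"]*\"/\"address\": \"${node_ip}\"/" "$template"
}

# Demo on a throwaway file so nothing under /opt/cfg is touched.
tmp=$(mktemp)
printf '%s\n' '{"address": "192.168.26.31","port": 10250}' > "$tmp"
set_kubelet_address "$tmp" 192.168.26.32
rm -f "$tmp"
```

The function writes to standard output, so on a node the result would be redirected to a separate file first and then moved over /opt/cfg/kubelet.json; redirecting straight onto the input file would truncate it.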

- Start

~]# systemctl daemon-reload

~]# systemctl enable --now kubelet

~]# systemctl status kubelet

● kubelet.service - Kubernetes Kubelet
   Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)
   Active: active (running) since Thu 2024-02-15 09:46:38 CST; 19s ago
     Docs: https://github.com/kubernetes/kubernetes
 Main PID: 83546 (kubelet)
    Tasks: 11
   Memory: 124.0M
   CGroup: /system.slice/kubelet.service
           └─83546 /opt/bin/kubernetes/kubelet --bootstrap-kubeconfig=/opt/cert/bootstrap-kubelet.kubeconfig --cert-dir=/opt/cert --kubeconfig=/opt/cert/ku...

......
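If the status shows the service failing or restarting rather than active (running), one quick thing to rule out is a missing path among those that kubelet.service references. This is a small troubleshooting sketch, not an official check; the function name kubelet_preflight is made up for illustration, and the paths are the ones used in this guide.

```shell
#!/usr/bin/env bash
# Report which of the given paths are missing.
# The first argument must be a socket (the CRI endpoint); the rest are regular files.
kubelet_preflight() {
  local sock="$1"
  shift
  local missing=0
  local f
  [ -S "$sock" ] || { echo "missing socket: $sock"; missing=1; }
  for f in "$@"; do
    [ -f "$f" ] || { echo "missing file: $f"; missing=1; }
  done
  return "$missing"
}

# Paths taken from the kubelet.service unit in this guide.
kubelet_preflight /var/run/cri-dockerd.sock \
  /opt/cert/bootstrap-kubelet.kubeconfig \
  /opt/cfg/kubelet.json \
  /opt/bin/kubernetes/kubelet || echo "fix the items above, then restart kubelet"
```

A missing socket usually points at cri-dockerd not running, which the unit file requires before kubelet starts.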

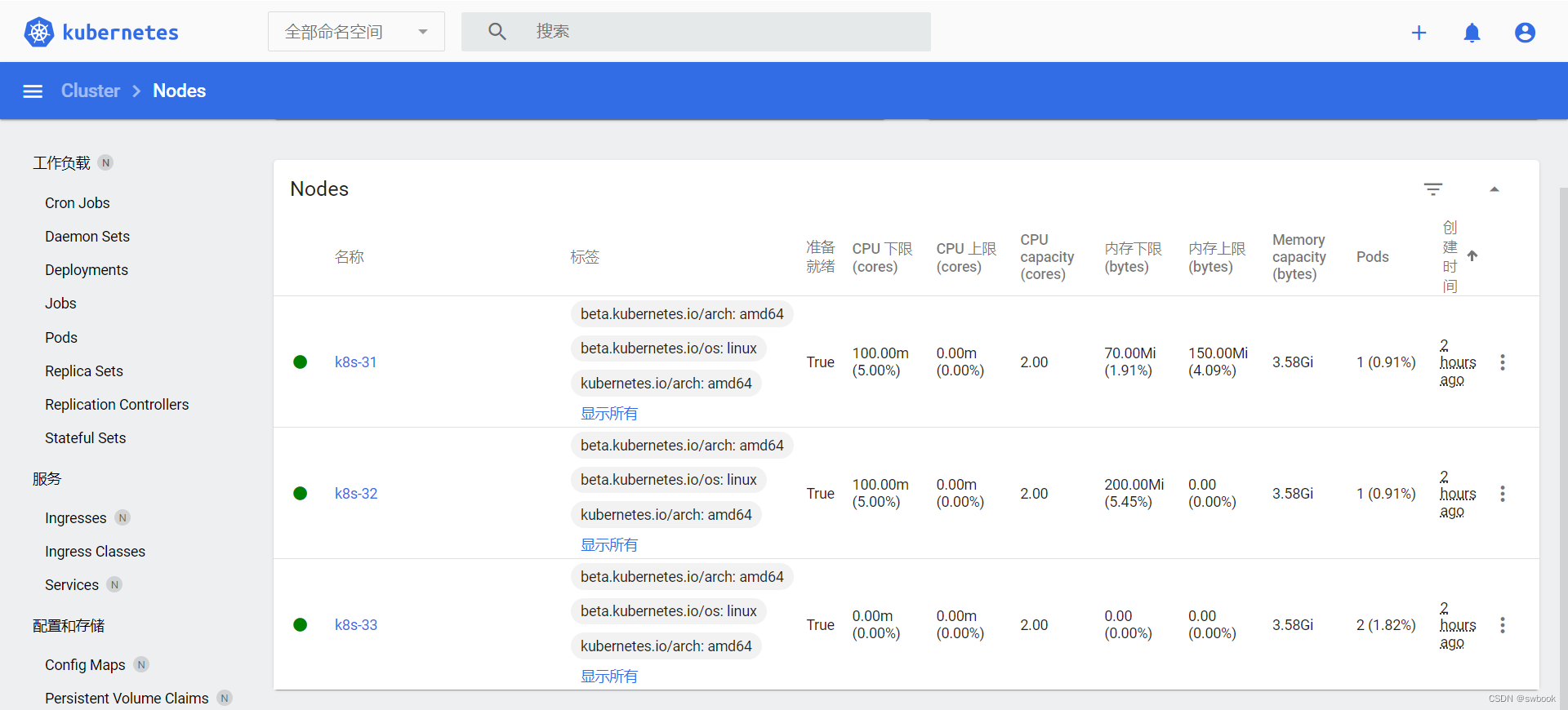

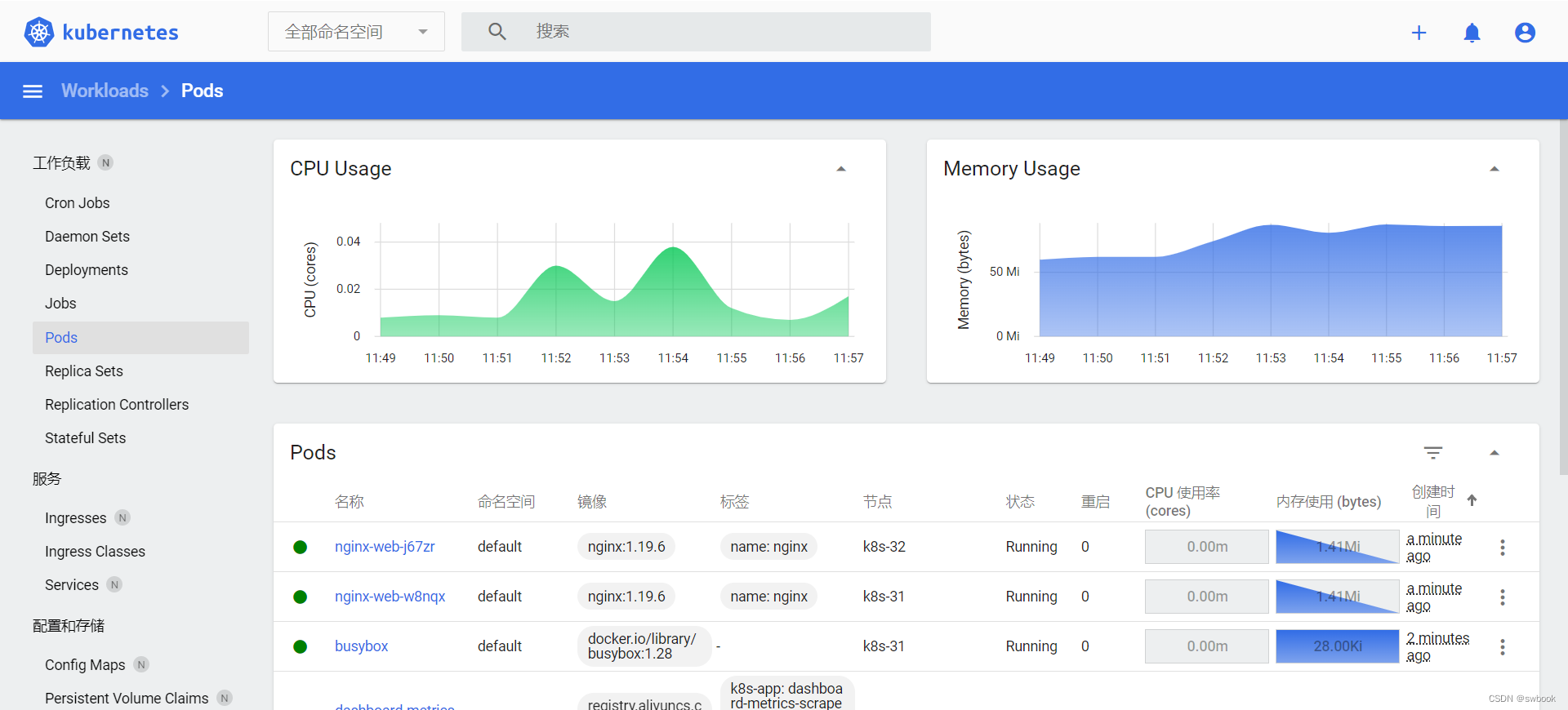

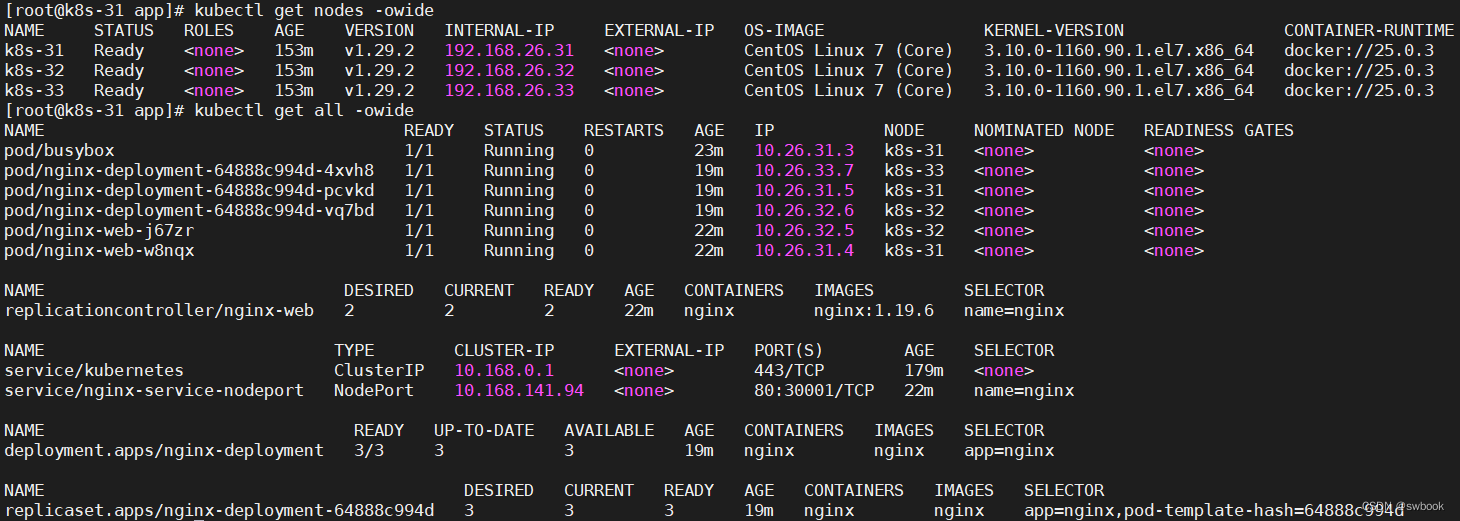

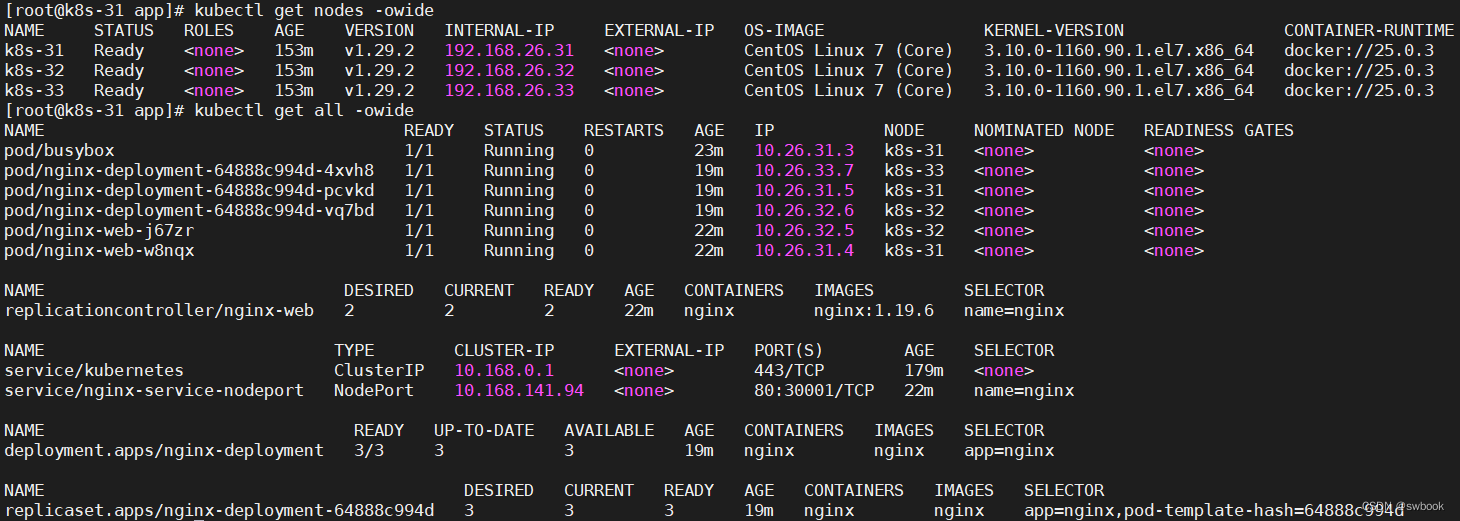

~]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-31 NotReady <none> 52s v1.29.2 192.168.26.31 <none> CentOS Linux 7 (Core) 3.10.0-1160.90.1.el7.x86_64 docker://25.0.3

k8s-32 NotReady <none> 52s v1.29.2 192.168.26.32 <none> CentOS Linux 7 (Core) 3.10.0-1160.90.1.el7.x86_64 docker://25.0.3

k8s-33 NotReady <none> 52s v1.29.2 192.168.26.33 <none> CentOS Linux 7 (Core) 3.10.0-1160.90.1.el7.x86_64 docker://25.0.3

## View the kubelet certificate signing requests

~]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

node-csr--Th1ZdIPpxMBn72aH0crbKqOnc35Aj-0Is2LnfncahQ 90s kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:bc5692 <none> Approved,Issued

node-csr-N4scOMBFuHOnA4qCPwOVdkeGTr4JI0EY1EbcSXPVdMw 90s kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:bc5692 <none> Approved,Issued

node-csr-wIRG2OUYOLGsYC4kY65y0bJ9cjyxF9LgMQba0mil_-A 90s kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:bc5692 <none> Approved,Issued

## If a request is in the Pending state, approve it

~]# kubectl certificate approve node-csr-......
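With several nodes joining at once, picking out the Pending requests by eye gets tedious. The sketch below filters the text output of kubectl get csr by its last column; it assumes the column layout shown above, and the function name pending_csr_names and the second sample row are made up for illustration.

```shell
#!/usr/bin/env bash
# Read `kubectl get csr --no-headers` output on stdin and print the names of
# requests whose last column (CONDITION) is exactly "Pending".
pending_csr_names() {
  awk '$NF == "Pending" { print $1 }'
}

# Demo with sample rows; "node-csr-example" is a hypothetical pending request.
pending_csr_names <<'ROWS'
node-csr-N4scOMBFuHOnA4qCPwOVdkeGTr4JI0EY1EbcSXPVdMw 90s kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:bc5692 <none> Approved,Issued
node-csr-example 5s kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:bc5692 <none> Pending
ROWS
```

On a live cluster the same filter could feed the approve command, for example kubectl get csr --no-headers | pending_csr_names | xargs -r kubectl certificate approve, where -r is the GNU xargs option that skips the run when there is no input.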

If the nodes are still NotReady, cni-plugin-flannel needs to be installed (see the core plugin deployment section below).

~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-31 NotReady <none> 3m21s v1.29.2

k8s-32 NotReady <none> 3m21s v1.29.2

k8s-33 NotReady <none> 3m21s v1.29.2

7.9 Deploy kube-proxy

- Create kube-proxy.kubeconfig. With the nginx approach, use --server=https://127.0.0.1:8443. Run this once, on one node only.

]# kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/cert/ca.pem \
  --embed-certs=true \
  --server=https://127.0.0.1:8443 \
  --kubeconfig=/opt/cert/kube-proxy.kubeconfig

]# kubectl config set-credentials kube-proxy \
  --client-certificate=/opt/cert/kube-proxy.pem \
  --client-key=/opt/cert/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=/opt/cert/kube-proxy.kubeconfig

]# kubectl config set-context kube-proxy@kubernetes \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=/opt/cert/kube-proxy.kubeconfig

]# kubectl config use-context kube-proxy@kubernetes --kubeconfig=/opt/cert/kube-proxy.kubeconfig

## Copy /opt/cert/kube-proxy.kubeconfig to each node

]# scp /opt/cert/kube-proxy.kubeconfig root@k8s-32:/opt/cert/kube-proxy.kubeconfig

]# scp /opt/cert/kube-proxy.kubeconfig root@k8s-33:/opt/cert/kube-proxy.kubeconfig
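The kube-scheduler and kube-proxy kubeconfig files are built with the same four kubectl config steps; only the user name, certificate pair, and output file change. A hedged sketch of a wrapper follows. The function name make_cert_kubeconfig and the KUBECTL override are made up for illustration, while the CA path and server address are the ones used in this guide.

```shell
#!/usr/bin/env bash
# Build a client-certificate kubeconfig with the four kubectl config steps.
# Assumption: KUBECTL is an illustrative override; setting it to
# "echo kubectl" prints the commands instead of running them.
make_cert_kubeconfig() {
  local user="$1" cert="$2" key="$3" out="$4"
  local server="${5:-https://127.0.0.1:8443}"
  ${KUBECTL:-kubectl} config set-cluster kubernetes \
    --certificate-authority=/opt/cert/ca.pem --embed-certs=true \
    --server="$server" --kubeconfig="$out" &&
  ${KUBECTL:-kubectl} config set-credentials "$user" \
    --client-certificate="$cert" --client-key="$key" \
    --embed-certs=true --kubeconfig="$out" &&
  ${KUBECTL:-kubectl} config set-context "${user}@kubernetes" \
    --cluster=kubernetes --user="$user" --kubeconfig="$out" &&
  ${KUBECTL:-kubectl} config use-context "${user}@kubernetes" --kubeconfig="$out"
}

# Preview only: print the commands for kube-proxy instead of running them.
KUBECTL="echo kubectl" make_cert_kubeconfig kube-proxy \
  /opt/cert/kube-proxy.pem /opt/cert/kube-proxy-key.pem \
  /opt/cert/kube-proxy.kubeconfig
```

The bootstrap kubeconfig does not fit this helper as written, because it authenticates with --token rather than a client certificate.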

- On all k8s nodes, add the kube-proxy service file

cat > /usr/lib/systemd/system/kube-proxy.service << EOF
[Unit]
Description=Kubernetes Kube Proxy
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/opt/bin/kubernetes/kube-proxy \\
  --config=/opt/cfg/kube-proxy.yaml \\
  --v=2
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target
EOF

- On all k8s nodes, add the kube-proxy configuration

cat > /opt/cfg/kube-proxy.yaml << EOF
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
clientConnection:
  acceptContentTypes: ""
  burst: 10
  contentType: application/vnd.kubernetes.protobuf
  kubeconfig: /opt/cert/kube-proxy.kubeconfig
  qps: 5
clusterCIDR: 10.26.0.0/16
configSyncPeriod: 15m0s
conntrack:
  max: null
  maxPerCore: 32768
  min: 131072
  tcpCloseWaitTimeout: 1h0m0s
  tcpEstablishedTimeout: 24h0m0s
enableProfiling: false
healthzBindAddress: 0.0.0.0:10256
hostnameOverride: ""
iptables:
  masqueradeAll: false
  masqueradeBit: 14
  minSyncPeriod: 0s
  syncPeriod: 30s
ipvs:
  masqueradeAll: true
  minSyncPeriod: 5s
  scheduler: "rr"
  syncPeriod: 30s
kind: KubeProxyConfiguration
metricsBindAddress: 127.0.0.1:10249
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: -999
portRange: ""
udpIdleTimeout: 250ms
EOF

- Start

]# systemctl daemon-reload

]# systemctl enable --now kube-proxy

]# systemctl status kube-proxy

● kube-proxy.service - Kubernetes Kube Proxy
   Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service; enabled; vendor preset: disabled)
   Active: active (running) since Thu 2024-02-15 09:53:08 CST; 16s ago
     Docs: https://github.com/kubernetes/kubernetes
 Main PID: 84254 (kube-proxy)
    Tasks: 5
   Memory: 63.6M
   CGroup: /system.slice/kube-proxy.service
           └─84254 /opt/bin/kubernetes/kube-proxy --config=/opt/cfg/kube-proxy.yaml --v=2

......

8. k8s core plugins

8.1 Deploy the CNI network plugins

- Official links

https://github.com/containernetworking/plugins

Download cni-plugins-linux-amd64-v1.4.0.tgz: https://github.com/containernetworking/plugins/releases/download/v1.4.0/cni-plugins-linux-amd64-v1.4.0.tgz

https://github.com/flannel-io/cni-plugin

Download cni-plugin-flannel-linux-amd64-v1.4.0-flannel1.tgz: https://github.com/flannel-io/cni-plugin/releases/download/v1.4.0-flannel1/cni-plugin-flannel-linux-amd64-v1.4.0-flannel1.tgz

- Download, extract, and distribute

## Create the directory on all nodes

]# mkdir -p /opt/cni/bin

]# cd /opt/cni/bin

## Download

k8s-31 app]# curl -O -L https://github.com/containernetworking/plugins/releases/download/v1.4.0/cni-plugins-linux-amd64-v1.4.0.tgz

k8s-31 app]# curl -O -L https://github.com/flannel-io/cni-plugin/releases/download/v1.4.0-flannel1/cni-plugin-flannel-linux-amd64-v1.4.0-flannel1.tgz

## Extract

k8s-31 app]# tar -C /opt/cni/bin -xzf cni-plugins-linux-amd64-v1.4.0.tgz

k8s-31 app]# tar -C /opt/cni/bin -xzf cni-plugin-flannel-linux-amd64-v1.4.0-flannel1.tgz

k8s-31 app]# mv /opt/cni/bin/flannel-amd64 /opt/cni/bin/flannel

k8s-31 app]# ls -l /opt/cni/bin

total 81320
-rwxr-xr-x 1 root root  4109351 Dec  5 00:38 bandwidth
-rwxr-xr-x 1 root root  4652757 Dec  5 00:39 bridge
-rwxr-xr-x 1 root root 11050013 Dec  5 00:39 dhcp
-rwxr-xr-x 1 root root  4297556 Dec  5 00:39 dummy
-rwxr-xr-x 1 root root  4736299 Dec  5 00:39 firewall
-rwxr-xr-x 1 root root  2414517 Jan 19 01:12 flannel
-rwxr-xr-x 1 root root  4191837 Dec  5 00:39 host-device
-rwxr-xr-x 1 root root  3549866 Dec  5 00:39 host-local
-rwxr-xr-x 1 root root  4315686 Dec  5 00:39 ipvlan
-rwxr-xr-x 1 root root  3636792 Dec  5 00:39 loopback
-rwxr-xr-x 1 root root  4349395 Dec  5 00:39 macvlan
-rwxr-xr-x 1 root root  4085020 Dec  5 00:39 portmap
-rwxr-xr-x 1 root root  4470977 Dec  5 00:39 ptp
-rwxr-xr-x 1 root root  3851218 Dec  5 00:39 sbr
-rwxr-xr-x 1 root root  3110828 Dec  5 00:39 static
-rwxr-xr-x 1 root root  4371897 Dec  5 00:39 tap
-rwxr-xr-x 1 root root  3726382 Dec  5 00:39 tuning
-rwxr-xr-x 1 root root  4310173 Dec  5 00:39 vlan
-rwxr-xr-x 1 root root  4001842 Dec  5 00:39 vrf

## Distribute

]# scp -r /opt/cni/bin root@k8s-32:/opt/cni/.

]# scp -r /opt/cni/bin root@k8s-33:/opt/cni/.

- Create /etc/cni/net.d/10-flannel.conflist

~]# mkdir /etc/cni/net.d -p

~]# vi /etc/cni/net.d/10-flannel.conflist

{
  "name": "cbr0",
  "cniVersion": "0.3.1",
  "plugins": [
    {
      "type": "flannel",
      "delegate": {
        "hairpinMode": true,
        "isDefaultGateway": true
      }
    },
    {
      "type": "portmap",
      "capabilities": {
        "portMappings": true
      }
    }
  ]
}
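Each "type" in the conflist is looked up as a binary of the same name in the CNI bin directory, which is why flannel-amd64 was renamed to flannel earlier. A minimal sketch of a cross-check between the two steps follows. It uses a plain grep rather than a JSON parser, so it assumes the "type" keys are written as in the file above; the function name missing_cni_plugins is made up for illustration.

```shell
#!/usr/bin/env bash
# Print every plugin type named in a conflist that has no executable
# of the same name in the given bin directory.
# Assumption: "type" keys are matched textually, not with a JSON parser.
missing_cni_plugins() {
  local conflist="$1"
  local bin_dir="$2"
  local plugin
  grep -o '"type": *"[^"]*"' "$conflist" |
    sed 's/.*"\([^"]*\)"$/\1/' |
    while read -r plugin; do
      [ -x "$bin_dir/$plugin" ] || echo "$plugin"
    done
}

# Demo in a scratch directory: only "flannel" has a binary there,
# so "portmap" is reported as missing.
scratch=$(mktemp -d)
mkdir "$scratch/bin"
touch "$scratch/bin/flannel" && chmod +x "$scratch/bin/flannel"
printf '%s\n' '{"plugins": [{"type": "flannel"},{"type": "portmap"}]}' > "$scratch/10-flannel.conflist"
missing_cni_plugins "$scratch/10-flannel.conflist" "$scratch/bin"
rm -rf "$scratch"
```

On a node, missing_cni_plugins /etc/cni/net.d/10-flannel.conflist /opt/cni/bin would be expected to print nothing once both steps above are done.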

- Check node status

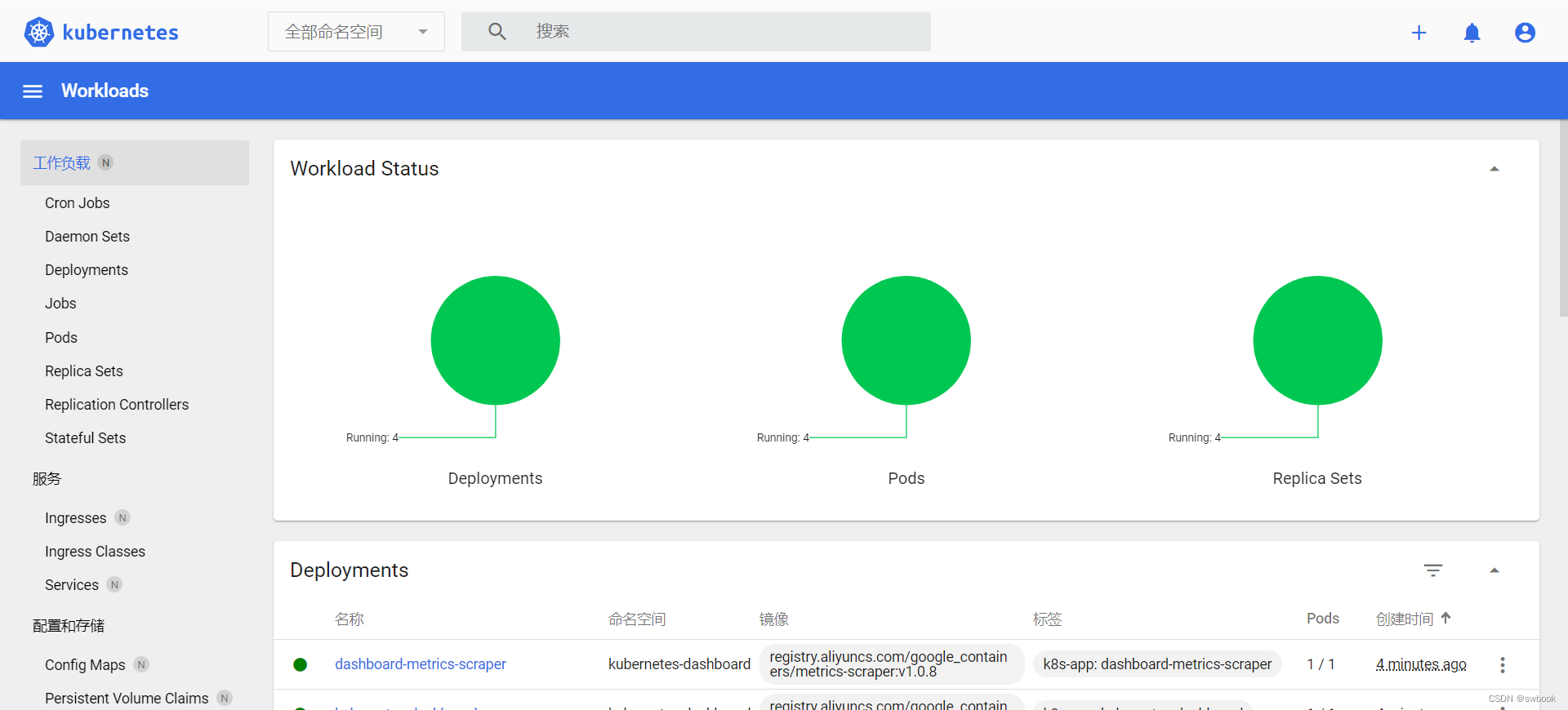

~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-31 Ready <none> 28m v1.29.2

k8s-32 Ready <none> 28m v1.29.2