Learn English with TED Talks: What moral decisions should driverless cars make by Iyad Rahwan

What moral decisions should driverless cars make?

Link: https://www.ted.com/talks/iyad_rahwan_what_moral_decisions_should_driverless_cars_make

Speaker: Iyad Rahwan

Date: September 2016

Contents

- What moral decisions should driverless cars make?

- Introduction

- Vocabulary

- Transcript

- Summary

- Postscript

Introduction

Should your driverless car kill you if it means saving five pedestrians? In this primer on the social dilemmas of driverless cars, Iyad Rahwan explores how the technology will challenge our morality and explains his work collecting data from real people on the ethical trade-offs we’re willing (and not willing) to make.

Vocabulary

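swerve: US [swɜːrv] to change direction suddenly

bystander: an onlooker; a person who is present but not involved

but the car may swerve, hitting one bystander

crash into: to hit something with force; to collide with something

a bunch of: a group of

it will crash into a bunch of pedestrians crossing the street

the trolley problem: a well-known thought experiment in ethics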

The trolley problem is a classic ethical dilemma in philosophy and ethics, often used to explore moral decision-making and the concept of utilitarianism. It presents a scenario where a person is faced with a moral choice that involves sacrificing one life to save others. The traditional setup involves a runaway trolley hurtling down a track towards a group of people who will be killed if it continues on its path. The person facing the dilemma has the option to divert the trolley onto a different track, where it will kill one person instead of the group.

The ethical question at the heart of the trolley problem revolves around whether it is morally justifiable to actively intervene to sacrifice one life to save many others. Philosophers use variations of this scenario to explore different factors that may influence moral decision-making, such as the number of lives at stake, the role of intention, and the consequences of one’s actions.

The trolley problem has practical applications beyond philosophical thought experiments, particularly in fields like autonomous vehicle technology. Engineers and ethicists grapple with similar dilemmas when programming self-driving cars, where decisions must be made about how the vehicle should respond in potentially fatal situations.
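The two answers to the dilemma that the talk contrasts (Bentham's "minimize total harm" and Kant's "do not take an action that explicitly harms a human being") can be written as two small decision rules. The following is only a minimal sketch under stated assumptions: the `Action` class, its fields, and the numbers are hypothetical and purely illustrative, not how any real vehicle is programmed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    # Hypothetical description of one option open to the car.
    name: str
    expected_deaths: float   # illustrative number, not real data
    is_intervention: bool    # True if the car actively changes course


def utilitarian_choice(actions):
    """Bentham-style rule: pick the action with the lowest expected harm."""
    return min(actions, key=lambda a: a.expected_deaths)


def duty_bound_choice(actions):
    """Kant-style rule as described in the talk: do not actively harm,
    so let the car take its course whenever a passive option exists."""
    passive = [a for a in actions if not a.is_intervention]
    # Assumption: if every option is an intervention, fall back to least harm.
    return passive[0] if passive else utilitarian_choice(actions)


actions = [
    Action("stay on course", expected_deaths=5.0, is_intervention=False),
    Action("swerve into bystander", expected_deaths=1.0, is_intervention=True),
]

print("Utilitarian:", utilitarian_choice(actions).name)
print("Duty-bound: ", duty_bound_choice(actions).name)
```

With these made-up numbers the two rules disagree, which is exactly the disagreement the survey in the talk asks people to resolve.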

trolley: US [ˈtrɑːli] a tram or streetcar

utilitarian: US [ˌjuːtɪlɪˈteriən] useful; practical; relating to utilitarianism

Bentham says the car should follow utilitarian ethics

take its course: to develop naturally without interference; to run through its natural stages

and you should let the car take its course even if that’s going to harm more people

pamphlet: US [ˈpæmflət] a small booklet

English economist William Forster Lloyd published a pamphlet

graze: US [ɡreɪz] to feed on grass in a field

English farmers who are sharing a common land for their sheep to graze.

rejuvenate: US [rɪˈdʒuːvəneɪt] to make someone feel younger; to restore youth or vitality

the land will be rejuvenated

detriment: US [ˈdetrɪmənt] harm; damage

to the detriment of all the farmers (that is, in a way that harms all the farmers)

the tragedy of the commons: the ruin of a shared resource through individual overuse

The tragedy of the commons is a concept in economics and environmental science that refers to a situation where multiple individuals, acting independently and rationally in their own self-interest, deplete a shared limited resource, leading to its degradation or depletion. The term was popularized by the ecologist Garrett Hardin in a famous 1968 essay.

The tragedy of the commons arises when individuals prioritize their own short-term gains over the long-term sustainability of the shared resource. Since no single individual bears the full cost of their actions, there is little incentive to conserve or manage the resource responsibly. Instead, each person maximizes their own benefit, leading to overexploitation or degradation of the resource, which ultimately harms everyone.

Classic examples of the tragedy of the commons include overfishing in open-access fisheries, deforestation of public lands, and pollution of the air and water. In each case, individuals or groups exploit the resource without considering the negative consequences for others or the sustainability of the resource itself.

Addressing the tragedy of the commons often requires collective action and the establishment of regulations, property rights, or other mechanisms to manage and protect the shared resource. By aligning individual incentives with the common good, it becomes possible to mitigate overuse and ensure the sustainable management of resources for the benefit of all.
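The sheep example from the talk can be turned into a small numeric illustration. This is a rough sketch, assuming a made-up linear model in which each sheep is worth less as the pasture fills up; the function name, the `capacity` value, and the number of farmers are all hypothetical. In this toy model the other farmers lose a little when one farmer adds a sheep, which is a slight departure from the talk's simplification that "no one else will be harmed".

```python
def payoff_per_farmer(my_sheep, total_sheep, capacity=60):
    """Hypothetical model: the value of each sheep falls linearly
    as the total number of sheep approaches the pasture's capacity."""
    value_per_sheep = max(0.0, 1.0 - total_sheep / capacity)
    return my_sheep * value_per_sheep


FARMERS = 10   # assumed number of farmers sharing the land
BASE = 3       # "let's say three sheep" each, as in the talk

# Everyone keeps three sheep.
cooperate = payoff_per_farmer(BASE, FARMERS * BASE)

# One farmer brings one extra sheep while the others keep three.
lone_defector = payoff_per_farmer(BASE + 1, FARMERS * BASE + 1)

# Every farmer makes the same individually rational decision.
everyone_defects = payoff_per_farmer(BASE + 1, FARMERS * (BASE + 1))

print(f"all cooperate:    {cooperate:.2f} per farmer")
print(f"lone defector:    {lone_defector:.2f} for the defector")
print(f"everyone defects: {everyone_defects:.2f} per farmer")
```

The lone defector does better than under cooperation, but when every farmer adds a sheep, each one ends up worse off than if all had kept three.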

insidious: US [ɪnˈsɪdiəs] lurking; posing a hidden danger

the problem may be a little bit more insidious because there is not necessarily an individual human being making those decisions.

jaywalking: crossing the street without obeying traffic rules

punish jaywalking: to penalize people who cross the street illegally

zeroth: numbered zero; coming before the first

Asimov introduced the zeroth law which takes precedence above all, and it’s that a robot may not harm humanity as a whole.

Transcript

Today I’m going to talk

about technology and society.

The Department of Transport

estimated that last year

35,000 people died

from traffic crashes in the US alone.

Worldwide, 1.2 million people

die every year in traffic accidents.

If there was a way we could eliminate

90 percent of those accidents,

would you support it?

Of course you would.

This is what driverless car technology

promises to achieve

by eliminating the main

source of accidents –

human error.

Now picture yourself

in a driverless car in the year 2030,

sitting back and watching

this vintage TEDxCambridge video.

(Laughter)

All of a sudden,

the car experiences mechanical failure

and is unable to stop.

If the car continues,

it will crash into a bunch

of pedestrians crossing the street,

but the car may swerve,

hitting one bystander,

killing them to save the pedestrians.

What should the car do,

and who should decide?

What if instead the car

could swerve into a wall,

crashing and killing you, the passenger,

in order to save those pedestrians?

This scenario is inspired

by the trolley problem,

which was invented

by philosophers a few decades ago

to think about ethics.

Now, the way we think

about this problem matters.

We may for example

not think about it at all.

We may say this scenario is unrealistic,

incredibly unlikely, or just silly.

But I think this criticism

misses the point

because it takes

the scenario too literally.

Of course no accident

is going to look like this;

no accident has two or three options

where everybody dies somehow.

Instead, the car is going

to calculate something

like the probability of hitting

a certain group of people,

if you swerve one direction

versus another direction,

you might slightly increase the risk

to passengers or other drivers

versus pedestrians.

It’s going to be

a more complex calculation,

but it’s still going

to involve trade-offs,

and trade-offs often require ethics.

We might say then,

"Well, let’s not worry about this.

Let’s wait until technology

is fully ready and 100 percent safe."

Suppose that we can indeed

eliminate 90 percent of those accidents,

or even 99 percent in the next 10 years.

What if eliminating

the last one percent of accidents

requires 50 more years of research?

Should we not adopt the technology?

That’s 60 million people

dead in car accidents

if we maintain the current rate.

So the point is,

waiting for full safety is also a choice,

and it also involves trade-offs.

People online on social media

have been coming up with all sorts of ways

to not think about this problem.

One person suggested

the car should just swerve somehow

in between the passengers –

(Laughter)

and the bystander.

Of course if that’s what the car can do,

that’s what the car should do.

We’re interested in scenarios

in which this is not possible.

And my personal favorite

was a suggestion by a blogger

to have an eject button in the car

that you press –

(Laughter)

just before the car self-destructs.

(Laughter)

So if we acknowledge that cars

will have to make trade-offs on the road,

how do we think about those trade-offs,

and how do we decide?

Well, maybe we should run a survey

to find out what society wants,

because ultimately,

regulations and the law

are a reflection of societal values.

So this is what we did.

With my collaborators,

Jean-François Bonnefon and Azim Shariff,

we ran a survey

in which we presented people

with these types of scenarios.

We gave them two options

inspired by two philosophers:

Jeremy Bentham and Immanuel Kant.

Bentham says the car

should follow utilitarian ethics:

it should take the action

that will minimize total harm –

even if that action will kill a bystander

and even if that action

will kill the passenger.

Immanuel Kant says the car

should follow duty-bound principles,

like “Thou shalt not kill.”

So you should not take an action

that explicitly harms a human being,

and you should let the car take its course

even if that’s going to harm more people.

What do you think?

Bentham or Kant?

Here’s what we found.

Most people sided with Bentham.

So it seems that people

want cars to be utilitarian,

minimize total harm,

and that’s what we should all do.

Problem solved.

But there is a little catch.

When we asked people

whether they would purchase such cars,

they said, “Absolutely not.”

(Laughter)

They would like to buy cars

that protect them at all costs,

but they want everybody else

to buy cars that minimize harm.

(Laughter)

We’ve seen this problem before.

It’s called a social dilemma.

And to understand the social dilemma,

we have to go a little bit

back in history.

In the 1800s,

English economist William Forster Lloyd

published a pamphlet

which describes the following scenario.

You have a group of farmers –

English farmers –

who are sharing a common land

for their sheep to graze.

Now, if each farmer

brings a certain number of sheep –

let’s say three sheep –

the land will be rejuvenated,

the farmers are happy,

the sheep are happy,

everything is good.

Now, if one farmer brings one extra sheep,

that farmer will do slightly better,

and no one else will be harmed.

But if every farmer made

that individually rational decision,

the land will be overrun,

and it will be depleted

to the detriment of all the farmers,

and of course,

to the detriment of the sheep.

We see this problem in many places:

in the difficulty of managing overfishing,

or in reducing carbon emissions

to mitigate climate change.

When it comes to the regulation

of driverless cars,

the common land now

is basically public safety –

that’s the common good –

and the farmers are the passengers

or the car owners who are choosing

to ride in those cars.

And by making the individually

rational choice

of prioritizing their own safety,

they may collectively be

diminishing the common good,

which is minimizing total harm.

It’s called the tragedy of the commons,

traditionally,

but I think in the case

of driverless cars,

the problem may be

a little bit more insidious

because there is not necessarily

an individual human being

making those decisions.

So car manufacturers

may simply program cars

that will maximize safety

for their clients,

and those cars may learn

automatically on their own

that doing so requires slightly

increasing risk for pedestrians.

So to use the sheep metaphor,

it’s like we now have electric sheep

that have a mind of their own.

(Laughter)

And they may go and graze

even if the farmer doesn’t know it.

So this is what we may call

the tragedy of the algorithmic commons,

and it offers new types of challenges.

Typically, traditionally,

we solve these types

of social dilemmas using regulation,

so either governments

or communities get together,

and they decide collectively

what kind of outcome they want

and what sort of constraints

on individual behavior

they need to implement.

And then using monitoring and enforcement,

they can make sure

that the public good is preserved.

So why don’t we just,

as regulators,

require that all cars minimize harm?

After all, this is

what people say they want.

And more importantly,

I can be sure that as an individual,

if I buy a car that may

sacrifice me in a very rare case,

I’m not the only sucker doing that

while everybody else

enjoys unconditional protection.

In our survey, we did ask people

whether they would support regulation

and here’s what we found.

First of all, people

said no to regulation;

and second, they said,

"Well if you regulate cars to do this

and to minimize total harm,

I will not buy those cars."

So ironically,

by regulating cars to minimize harm,

we may actually end up with more harm

because people may not

opt into the safer technology

even if it’s much safer

than human drivers.

I don’t have the final

answer to this riddle,

but I think as a starting point,

we need society to come together

to decide what trade-offs

we are comfortable with

and to come up with ways

in which we can enforce those trade-offs.

As a starting point,

my brilliant students,

Edmond Awad and Sohan Dsouza,

built the Moral Machine website,

which generates random scenarios at you –

basically a bunch

of random dilemmas in a sequence

where you have to choose what

the car should do in a given scenario.

And we vary the ages and even

the species of the different victims.

So far we’ve collected

over five million decisions

by over one million people worldwide

from the website.

And this is helping us

form an early picture

of what trade-offs

people are comfortable with

and what matters to them –

even across cultures.

But more importantly,

doing this exercise

is helping people recognize

the difficulty of making those choices

and that the regulators

are tasked with impossible choices.

And maybe this will help us as a society

understand the kinds of trade-offs

that will be implemented

ultimately in regulation.

And indeed, I was very happy to hear

that the first set of regulations

that came from

the Department of Transport –

announced last week –

included a 15-point checklist

for all carmakers to provide,

and number 14 was ethical consideration –

how are you going to deal with that.

We also have people

reflect on their own decisions

by giving them summaries

of what they chose.

I’ll give you one example –

I’m just going to warn you

that this is not your typical example,

your typical user.

This is the most sacrificed and the most

saved character for this person.

(Laughter)

Some of you may agree with him,

or her, we don’t know.

But this person also seems to slightly

prefer passengers over pedestrians

in their choices

and is very happy to punish jaywalking.

(Laughter)

So let’s wrap up.

We started with the question –

let’s call it the ethical dilemma –

of what the car should do

in a specific scenario:

swerve or stay?

But then we realized

that the problem was a different one.

It was the problem of how to get

society to agree on and enforce

the trade-offs they’re comfortable with.

It’s a social dilemma.

In the 1940s, Isaac Asimov

wrote his famous laws of robotics –

the three laws of robotics.

A robot may not harm a human being,

a robot may not disobey a human being,

and a robot may not allow

itself to come to harm –

in this order of importance.

But after 40 years or so

and after so many stories

pushing these laws to the limit,

Asimov introduced the zeroth law

which takes precedence above all,

and it’s that a robot

may not harm humanity as a whole.

I don’t know what this means

in the context of driverless cars

or any specific situation,

and I don’t know how we can implement it,

but I think that by recognizing

that the regulation of driverless cars

is not only a technological problem

but also a societal cooperation problem,

I hope that we can at least begin

to ask the right questions.

Thank you.

(Applause)

Summary

In Iyad Rahwan’s TED Talk, he delves into the ethical dilemmas surrounding the advent of driverless car technology. He begins by highlighting the potential of this technology to significantly reduce traffic accidents caused by human error, thereby saving countless lives. However, he poses a thought-provoking scenario: if faced with a situation where a driverless car must choose between different courses of action, such as swerving to avoid pedestrians at the risk of harming the passenger, who should decide and how? Rahwan draws parallels to the classic philosophical trolley problem to illustrate the complex ethical considerations at play in programming autonomous vehicles.

Rahwan emphasizes the societal implications of adopting driverless car technology and the challenges it poses. He discusses the tension between individual preferences for safety and societal values, pointing out the paradox where individuals may support utilitarian ethics for autonomous vehicles while prioritizing their own safety when it comes to purchasing decisions. This dilemma reflects the classic tragedy of the commons, where individual rational choices may lead to suboptimal outcomes for society as a whole. Rahwan argues that addressing these challenges requires collective decision-making and regulation informed by societal values.

To explore societal values and preferences regarding the ethical dilemmas of driverless cars, Rahwan and his collaborators conducted surveys and developed the Moral Machine website. Through this platform, they collected data on people’s choices in hypothetical scenarios, revealing diverse perspectives and priorities across cultures. Rahwan underscores the importance of understanding and reconciling these differences in shaping regulations for autonomous vehicles. He concludes by advocating for ongoing dialogue and cooperation to navigate the ethical complexities of driverless car technology, ultimately aiming to ensure that societal values are reflected in its implementation.

Postscript

Written in Shanghai on May 6, 2024, at 18:43.

相关文章:

跟TED演讲学英文:What moral decisions should driverless cars make by Iyad Rahwan

What moral decisions should driverless cars make? Link: https://www.ted.com/talks/iyad_rahwan_what_moral_decisions_should_driverless_cars_make Speaker: Iyad Rahwan Date: September 2016 文章目录 What moral decisions should driverless cars make?Introduct…...

-规格化交互信息Metric)

【ITK配准】第七期 尺度(Metric)-规格化交互信息Metric

很高兴在雪易的CSDN遇见你 VTK技术爱好者 QQ:870202403 公众号:VTK忠粉 前言 本文分享ITK中的互信息Metric,即itk::ITK中的互信息Metric,即itk::MutualInformationImageToImageMetric ,希望对各位小伙伴有所帮助! 感谢各位小伙伴的点赞+关注,小易会继续努力分享…...

Python练习 20240508一次小测验

Python基础 10道基础练习题 1. 个人所得税计算器描述输入输出示例…...

桥梁施工污水需要哪些工艺设备

桥梁施工过程中产生的污水通常包含泥浆、油污、化学品残留等污染物。为了有效处理这些污水,确保施工现场的环境保护和合规性,通常需要以下工艺设备: 沉砂池:用于去除污水中的砂粒和其他重质无机物,减少对后续处理设备的…...

ADOP带你了解:长距离 PoE 交换机

您是否知道当今的企业需要的网络连接超出了传统交换机所能容纳的长度?这就是我们在长距离 PoE 交换机方面的专业化变得重要的地方。我们了解扩展网络覆盖范围的挑战,无论是在广阔的园区还是在多栋建筑之间。使用这些可靠的交换机,我们不仅可以…...

想要品质飞跃?找六西格玛培训公司就对了!

在当今复杂多变的市场环境中,企业的竞争早已不再是单一的价格或产品竞争,而是转向了对品质、效率和创新的全面追求。六西格玛,作为一种全球公认的质量管理方法论,正成为越来越多企业追求品质革命的重要工具。在这其中,…...

【工具】Office/WPS 插件|AI 赋能自动化生成 PPT 插件测评 —— 必优科技 ChatPPT

本文参加百度的有奖征文活动,更主要的也是借此机会去体验一下 AI 生成 PPT 的产品的现状,因此本文是设身处地从用户的角度去体验、使用这个产品,并反馈最真实的建议和意见,除了明确该产品的优点之外,也发现了不少缺陷和…...

4000定制网站,因为没有案例,客户走了

接到一个要做企业站点的客户,属于定制开发,预算4000看起来是不是还行的一个订单? 接单第一步:筛客户 从客户询盘的那一刻开始就要围绕核心要素:预算和工期,凡是不符合预期的一律放掉就好了,没必…...

内容安全(AV)

防病毒网关(AV)简介 基于网络侧 识别 病毒文件,工作范围2~7层。这里的网关指的是内网和外网之间的一个关口,在此进行病毒的查杀。在深信服中就有一个EDR设备,该设备就是有两种部署,一个部署在网关…...

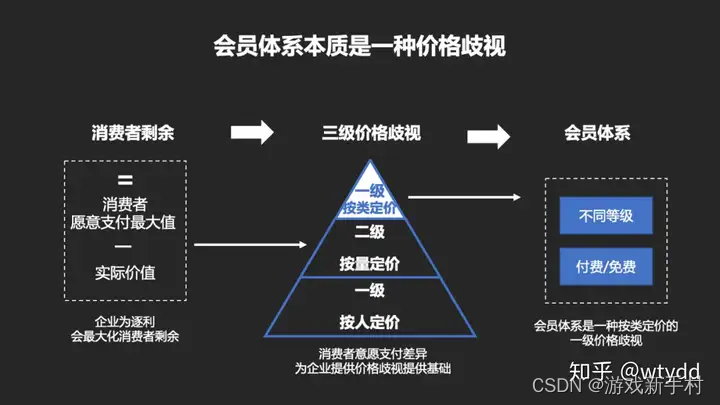

互联网产品为什么要搭建会员体系?

李诞曾经说过一句话:每个人都可以讲5分钟脱口秀。这句话换到会员体系里面同样适用,每个人都能聊点会员体系相关的东西。 比如会员体系属于用户运营的范畴,比如怎样用户分层,比如用户标签及CDP、会员积分、会员等级、会员权益和付…...

富格林:学习安全策略远离欺诈亏损

富格林悉知,黄金交易市场的每一分都可能发生变化。市场的波动会让很多人欢喜或沮丧,有人因此赚得盆满钵满,但也有人落入陷阱亏损连连,在现货黄金投资中,需要学习正规的做单技能,制定正规合理做单策略&#…...

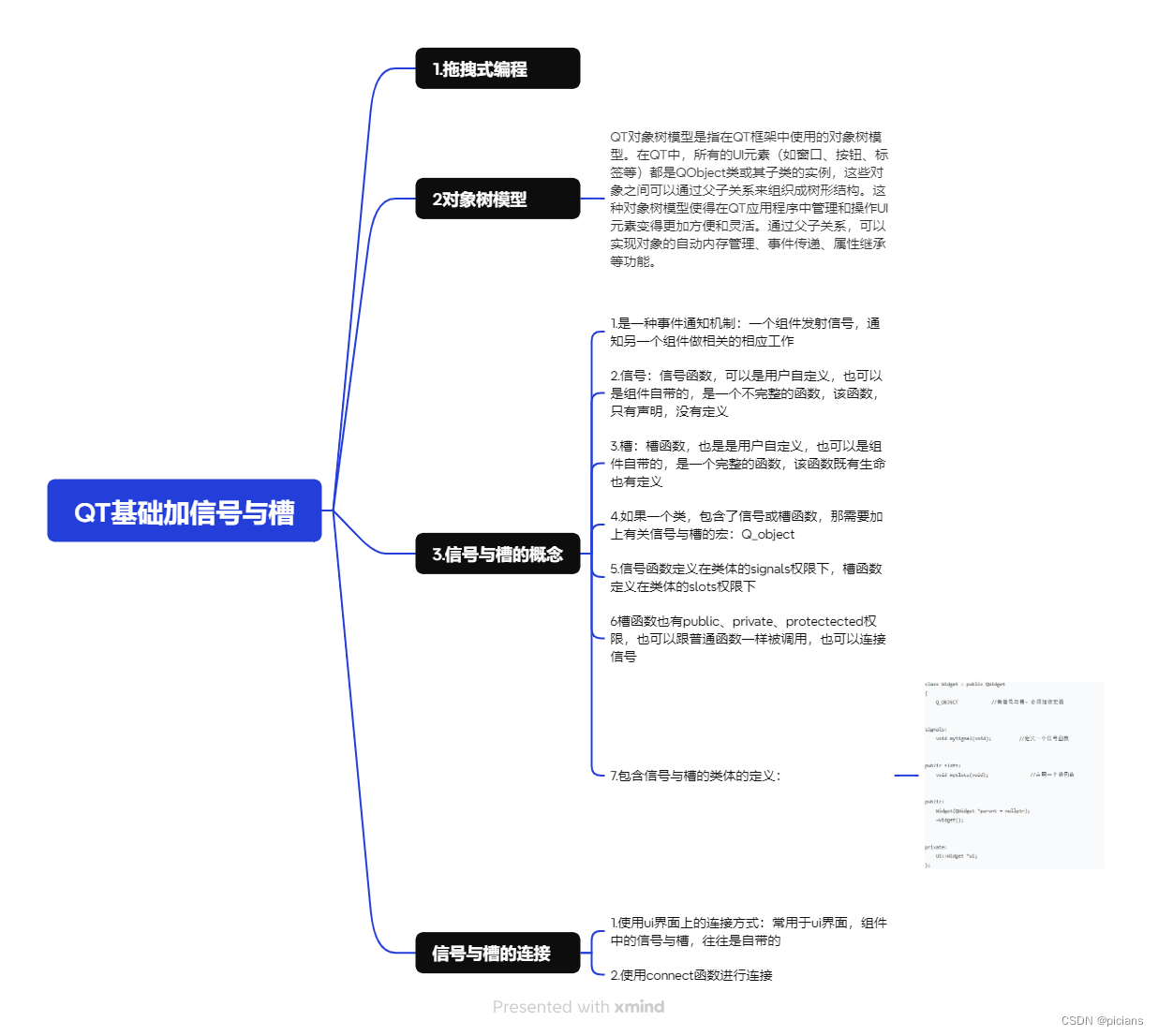

学QT的第二天~

小黑子鉴别界面 #include "mywidget.h" void MyWidget::bth1() { if(edit3 ->text()"520cxk"&&edit4 ->text()"1314520") { qDebug()<< "你好,真爱粉"; this->close(); } else { speecher->sa…...

QSplitter分裂器的使用方法

1.QSplitter介绍 QSplitter是Qt框架提供的一个基础窗口控件类,主要用于分割窗口,使用户能够通过拖动分隔条来调节子窗口的大小。 2.QSplitter的添加方法 (1)通过Qt Creator的界面设计工具添加; (2…...

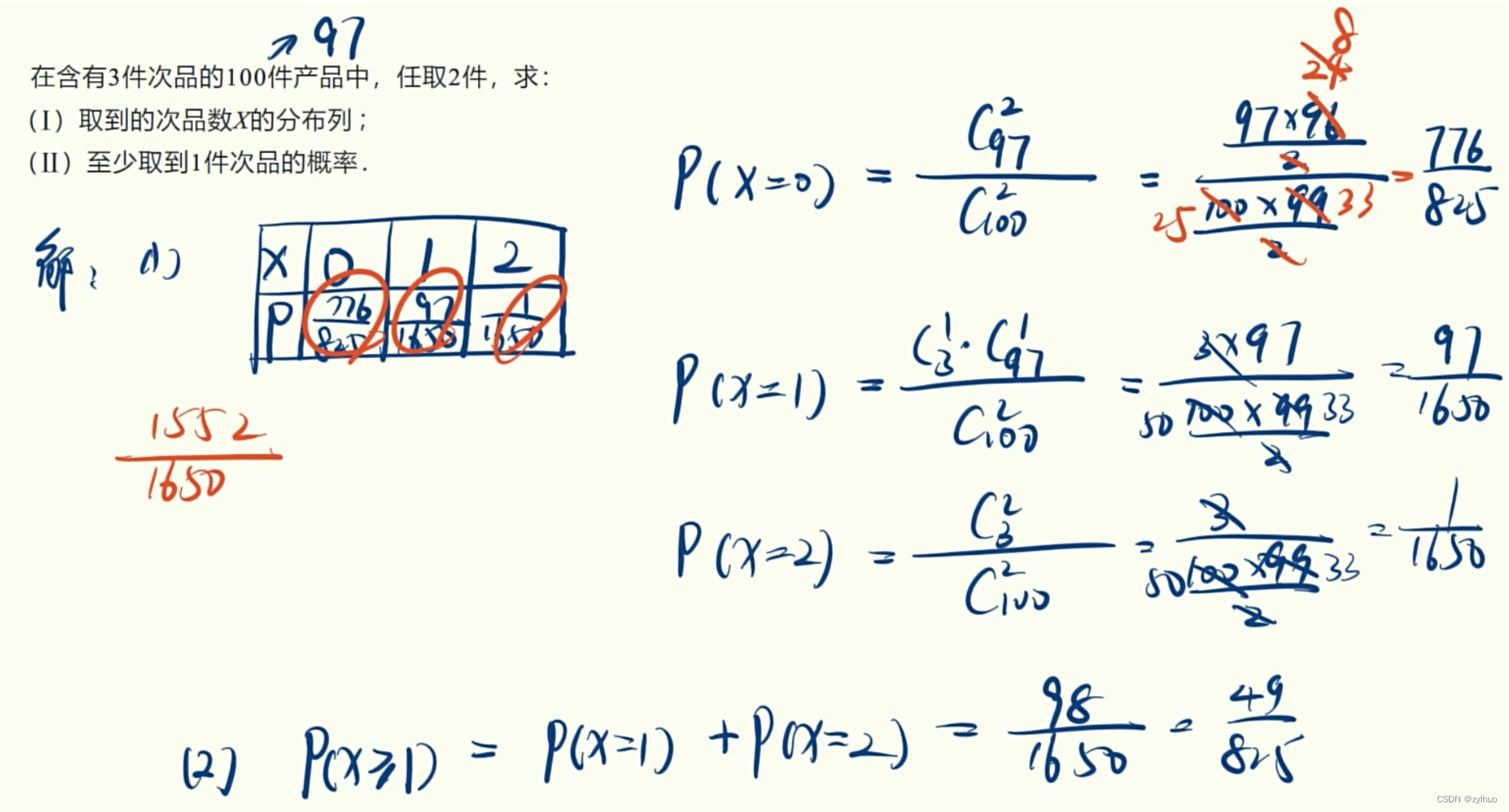

AI-数学-高中52-离散型随机变量概念及其分布列、两点分布

原作者视频:【随机变量】【一数辞典】2离散型随机变量及其分布列_哔哩哔哩_bilibili 离散型随机变量分布列:X表示离散型随机变量可能在取值,P:对应分布在概率,P括号里X1表示事件的名称。 示例:...

Amazon IoT 服务的组件

我们要讨论的第一个组件是设备 SDK。 您的连接设备需要进行编码,以便它们可以与平台连接并执行操作。 Amazon Device SDK 是一个软件开发套件,其中包括一组用于连接、身份验证和交换消息的客户端库。 SDK 提供多种流行语言版本,例如 C、Node.…...

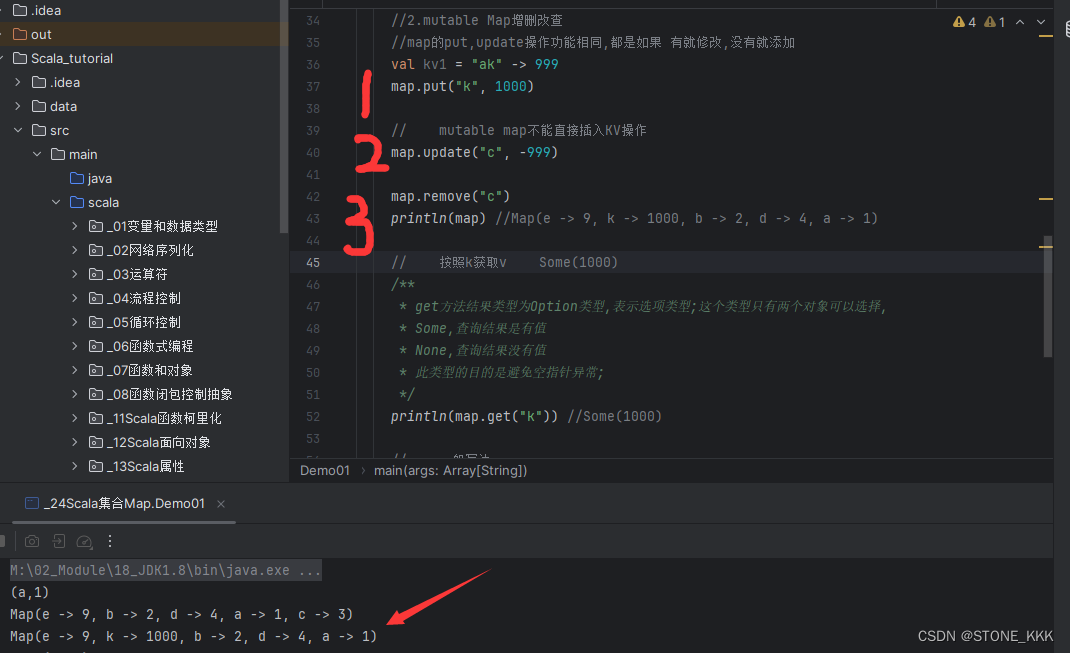

24_Scala集合Map

文章目录 Scala集合Map1.构建Map2.增删改查3.Map的get操作细节 Scala集合Map –默认immutable –概念和Java一致 1.构建Map –创建kv键值对 && kv键值对的表达 –创建immutable map –创建mutable map //1.1 构建一个kv键值对 val kv "a" -> 1 print…...

Agent AI智能体:我们的生活即将如何改变?

你有没有想过,那个帮你设置闹钟、提醒你朋友的生日,甚至帮你订外卖的智能助手,其实就是Agent AI智能体?它们已经在我们生活中扮演了越来越重要的角色。现在,让我们一起想象一下,随着这些AI智能体变得越来越…...

浪子易支付 最新版本源码 增加杉德、付呗支付插件 PayPal、汇付、虎皮椒插件

内容目录 一、详细介绍二、效果展示1.部分代码2.效果图展示 三、学习资料下载 一、详细介绍 2024/05/01: 1.更换全新的手机版支付页面风格 2.聚合收款码支持填写备注 3.后台支付统计新增利润、代付统计 4.删除结算记录支持直接退回商户金额 2024/03/31:…...

Java|用爬虫解决问题

使用Java进行网络爬虫开发是一种常见的选择,因为Java语言的稳定性和丰富的库支持使得处理网络请求、解析HTML/XML、数据抓取等任务变得更加便捷。下面是一个简单的Java爬虫示例,使用了Jsoup库来抓取网页内容。这个示例将展示如何抓取一个网页的标题。 准…...

美国站群服务器的CN2线路在国际互联网通信中的优势?

美国站群服务器的CN2线路在国际互联网通信中的优势? CN2线路,或称中国电信国际二类线路,是中国电信在全球范围内建设的高速骨干网络。这条线路通过海底光缆系统将中国与全球连接起来,为用户提供高速、低延迟的网络服务。CN2线路在国际互联网…...

设计模式和设计原则回顾

设计模式和设计原则回顾 23种设计模式是设计原则的完美体现,设计原则设计原则是设计模式的理论基石, 设计模式 在经典的设计模式分类中(如《设计模式:可复用面向对象软件的基础》一书中),总共有23种设计模式,分为三大类: 一、创建型模式(5种) 1. 单例模式(Sing…...

C++_核心编程_多态案例二-制作饮品

#include <iostream> #include <string> using namespace std;/*制作饮品的大致流程为:煮水 - 冲泡 - 倒入杯中 - 加入辅料 利用多态技术实现本案例,提供抽象制作饮品基类,提供子类制作咖啡和茶叶*//*基类*/ class AbstractDr…...

使用van-uploader 的UI组件,结合vue2如何实现图片上传组件的封装

以下是基于 vant-ui(适配 Vue2 版本 )实现截图中照片上传预览、删除功能,并封装成可复用组件的完整代码,包含样式和逻辑实现,可直接在 Vue2 项目中使用: 1. 封装的图片上传组件 ImageUploader.vue <te…...

实现弹窗随键盘上移居中

实现弹窗随键盘上移的核心思路 在Android中,可以通过监听键盘的显示和隐藏事件,动态调整弹窗的位置。关键点在于获取键盘高度,并计算剩余屏幕空间以重新定位弹窗。 // 在Activity或Fragment中设置键盘监听 val rootView findViewById<V…...

QT: `long long` 类型转换为 `QString` 2025.6.5

在 Qt 中,将 long long 类型转换为 QString 可以通过以下两种常用方法实现: 方法 1:使用 QString::number() 直接调用 QString 的静态方法 number(),将数值转换为字符串: long long value 1234567890123456789LL; …...

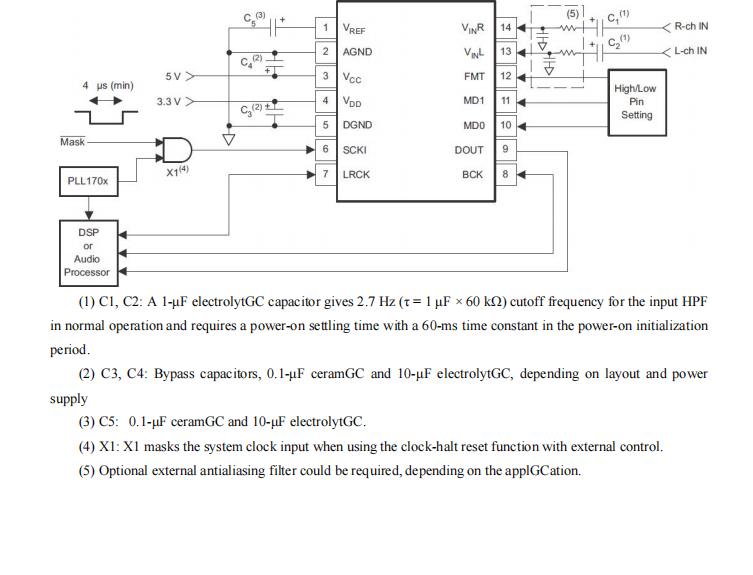

GC1808高性能24位立体声音频ADC芯片解析

1. 芯片概述 GC1808是一款24位立体声音频模数转换器(ADC),支持8kHz~96kHz采样率,集成Δ-Σ调制器、数字抗混叠滤波器和高通滤波器,适用于高保真音频采集场景。 2. 核心特性 高精度:24位分辨率,…...

鸿蒙DevEco Studio HarmonyOS 5跑酷小游戏实现指南

1. 项目概述 本跑酷小游戏基于鸿蒙HarmonyOS 5开发,使用DevEco Studio作为开发工具,采用Java语言实现,包含角色控制、障碍物生成和分数计算系统。 2. 项目结构 /src/main/java/com/example/runner/├── MainAbilitySlice.java // 主界…...

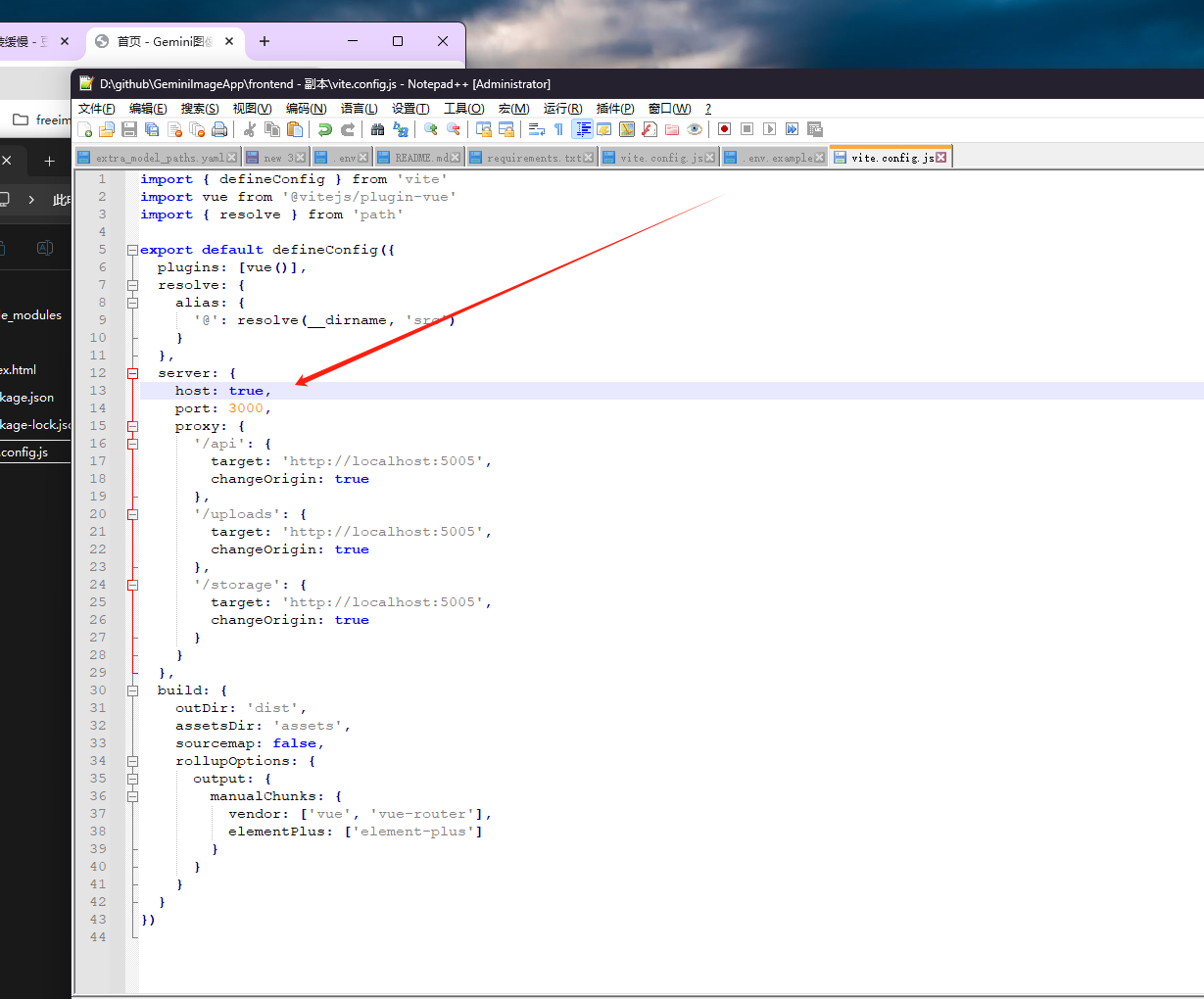

推荐 github 项目:GeminiImageApp(图片生成方向,可以做一定的素材)

推荐 github 项目:GeminiImageApp(图片生成方向,可以做一定的素材) 这个项目能干嘛? 使用 gemini 2.0 的 api 和 google 其他的 api 来做衍生处理 简化和优化了文生图和图生图的行为(我的最主要) 并且有一些目标检测和切割(我用不到) 视频和 imagefx 因为没 a…...

零知开源——STM32F103RBT6驱动 ICM20948 九轴传感器及 vofa + 上位机可视化教程

STM32F1 本教程使用零知标准板(STM32F103RBT6)通过I2C驱动ICM20948九轴传感器,实现姿态解算,并通过串口将数据实时发送至VOFA上位机进行3D可视化。代码基于开源库修改优化,适合嵌入式及物联网开发者。在基础驱动上新增…...

【LeetCode】算法详解#6 ---除自身以外数组的乘积

1.题目介绍 给定一个整数数组 nums,返回 数组 answer ,其中 answer[i] 等于 nums 中除 nums[i] 之外其余各元素的乘积 。 题目数据 保证 数组 nums之中任意元素的全部前缀元素和后缀的乘积都在 32 位 整数范围内。 请 不要使用除法,且在 O…...