动手学强化学习 第 18 章 离线强化学习 训练代码

基于 https://github.com/boyu-ai/Hands-on-RL/blob/main/%E7%AC%AC18%E7%AB%A0-%E7%A6%BB%E7%BA%BF%E5%BC%BA%E5%8C%96%E5%AD%A6%E4%B9%A0.ipynb

理论 离线强化学习

修改了警告和报错

运行环境

Debian GNU/Linux 12

Python 3.9.19

torch 2.0.1

gym 0.26.2运行代码

CQL.py

#!/usr/bin/env pythonimport numpy as np

import gym

from tqdm import tqdm

import random

import rl_utils

import torch

import torch.nn as nn

import torch.nn.functional as F

from torch.distributions import Normal

import matplotlib.pyplot as pltclass PolicyNetContinuous(torch.nn.Module):def __init__(self, state_dim, hidden_dim, action_dim, action_bound):super(PolicyNetContinuous, self).__init__()self.fc1 = torch.nn.Linear(state_dim, hidden_dim)self.fc_mu = torch.nn.Linear(hidden_dim, action_dim)self.fc_std = torch.nn.Linear(hidden_dim, action_dim)self.action_bound = action_bounddef forward(self, x):x = F.relu(self.fc1(x))mu = self.fc_mu(x)std = F.softplus(self.fc_std(x))dist = Normal(mu, std)normal_sample = dist.rsample() # rsample()是重参数化采样log_prob = dist.log_prob(normal_sample)action = torch.tanh(normal_sample)# 计算tanh_normal分布的对数概率密度log_prob = log_prob - torch.log(1 - torch.tanh(action).pow(2) + 1e-7)action = action * self.action_boundreturn action, log_probclass QValueNetContinuous(torch.nn.Module):def __init__(self, state_dim, hidden_dim, action_dim):super(QValueNetContinuous, self).__init__()self.fc1 = torch.nn.Linear(state_dim + action_dim, hidden_dim)self.fc2 = torch.nn.Linear(hidden_dim, hidden_dim)self.fc_out = torch.nn.Linear(hidden_dim, 1)def forward(self, x, a):cat = torch.cat([x, a], dim=1)x = F.relu(self.fc1(cat))x = F.relu(self.fc2(x))return self.fc_out(x)class SACContinuous:''' 处理连续动作的SAC算法 '''def __init__(self, state_dim, hidden_dim, action_dim, action_bound,actor_lr, critic_lr, alpha_lr, target_entropy, tau, gamma,device):self.actor = PolicyNetContinuous(state_dim, hidden_dim, action_dim,action_bound).to(device) # 策略网络self.critic_1 = QValueNetContinuous(state_dim, hidden_dim,action_dim).to(device) # 第一个Q网络self.critic_2 = QValueNetContinuous(state_dim, hidden_dim,action_dim).to(device) # 第二个Q网络self.target_critic_1 = QValueNetContinuous(state_dim,hidden_dim, action_dim).to(device) # 第一个目标Q网络self.target_critic_2 = QValueNetContinuous(state_dim,hidden_dim, action_dim).to(device) # 第二个目标Q网络# 令目标Q网络的初始参数和Q网络一样self.target_critic_1.load_state_dict(self.critic_1.state_dict())self.target_critic_2.load_state_dict(self.critic_2.state_dict())self.actor_optimizer = torch.optim.Adam(self.actor.parameters(),lr=actor_lr)self.critic_1_optimizer = torch.optim.Adam(self.critic_1.parameters(),lr=critic_lr)self.critic_2_optimizer = torch.optim.Adam(self.critic_2.parameters(),lr=critic_lr)# 使用alpha的log值,可以使训练结果比较稳定self.log_alpha = torch.tensor(np.log(0.01), dtype=torch.float)self.log_alpha.requires_grad = True # 对alpha求梯度self.log_alpha_optimizer = torch.optim.Adam([self.log_alpha],lr=alpha_lr)self.target_entropy = target_entropy # 目标熵的大小self.gamma = gammaself.tau = tauself.device = devicedef take_action(self, state):state = torch.tensor(np.array([state]), dtype=torch.float).to(self.device)action = self.actor(state)[0]return [action.item()]def calc_target(self, rewards, next_states, dones): # 计算目标Q值next_actions, log_prob = self.actor(next_states)entropy = -log_probq1_value = self.target_critic_1(next_states, next_actions)q2_value = self.target_critic_2(next_states, next_actions)next_value = torch.min(q1_value,q2_value) + self.log_alpha.exp() * entropytd_target = rewards + self.gamma * next_value * (1 - dones)return td_targetdef soft_update(self, net, target_net):for param_target, param in zip(target_net.parameters(),net.parameters()):param_target.data.copy_(param_target.data * (1.0 - self.tau) +param.data * self.tau)def update(self, transition_dict):states = torch.tensor(transition_dict['states'],dtype=torch.float).to(self.device)actions = torch.tensor(transition_dict['actions'],dtype=torch.float).view(-1, 1).to(self.device)rewards = torch.tensor(transition_dict['rewards'],dtype=torch.float).view(-1, 1).to(self.device)next_states = torch.tensor(transition_dict['next_states'],dtype=torch.float).to(self.device)dones = torch.tensor(transition_dict['dones'],dtype=torch.float).view(-1, 1).to(self.device)rewards = (rewards + 8.0) / 8.0 # 对倒立摆环境的奖励进行重塑# 更新两个Q网络td_target = self.calc_target(rewards, next_states, dones)critic_1_loss = torch.mean(F.mse_loss(self.critic_1(states, actions), td_target.detach()))critic_2_loss = torch.mean(F.mse_loss(self.critic_2(states, actions), td_target.detach()))self.critic_1_optimizer.zero_grad()critic_1_loss.backward()self.critic_1_optimizer.step()self.critic_2_optimizer.zero_grad()critic_2_loss.backward()self.critic_2_optimizer.step()# 更新策略网络new_actions, log_prob = self.actor(states)entropy = -log_probq1_value = self.critic_1(states, new_actions)q2_value = self.critic_2(states, new_actions)actor_loss = torch.mean(-self.log_alpha.exp() * entropy -torch.min(q1_value, q2_value))self.actor_optimizer.zero_grad()actor_loss.backward()self.actor_optimizer.step()# 更新alpha值alpha_loss = torch.mean((entropy - self.target_entropy).detach() * self.log_alpha.exp())self.log_alpha_optimizer.zero_grad()alpha_loss.backward()self.log_alpha_optimizer.step()self.soft_update(self.critic_1, self.target_critic_1)self.soft_update(self.critic_2, self.target_critic_2)env_name = 'Pendulum-v1'

env = gym.make(env_name)

state_dim = env.observation_space.shape[0]

action_dim = env.action_space.shape[0]

action_bound = env.action_space.high[0] # 动作最大值actor_lr = 3e-4

critic_lr = 3e-3

alpha_lr = 3e-4

num_episodes = 100

hidden_dim = 128

gamma = 0.99

tau = 0.005 # 软更新参数

buffer_size = 100000

minimal_size = 1000

batch_size = 64

target_entropy = -env.action_space.shape[0]

device = torch.device("cuda") if torch.cuda.is_available() else torch.device("cpu")replay_buffer = rl_utils.ReplayBuffer(buffer_size)agent = SACContinuous(state_dim, hidden_dim, action_dim, action_bound,actor_lr, critic_lr, alpha_lr, target_entropy, tau,gamma, device)return_list = rl_utils.train_off_policy_agent(env, agent, num_episodes,replay_buffer, minimal_size,batch_size)episodes_list = list(range(len(return_list)))

plt.plot(episodes_list, return_list)

plt.xlabel('Episodes')

plt.ylabel('Returns')

plt.title('SAC on {}'.format(env_name))

plt.show()class CQL:''' CQL算法 '''def __init__(self, state_dim, hidden_dim, action_dim, action_bound,actor_lr, critic_lr, alpha_lr, target_entropy, tau, gamma,device, beta, num_random):self.actor = PolicyNetContinuous(state_dim, hidden_dim, action_dim,action_bound).to(device)self.critic_1 = QValueNetContinuous(state_dim, hidden_dim,action_dim).to(device)self.critic_2 = QValueNetContinuous(state_dim, hidden_dim,action_dim).to(device)self.target_critic_1 = QValueNetContinuous(state_dim, hidden_dim,action_dim).to(device)self.target_critic_2 = QValueNetContinuous(state_dim, hidden_dim,action_dim).to(device)self.target_critic_1.load_state_dict(self.critic_1.state_dict())self.target_critic_2.load_state_dict(self.critic_2.state_dict())self.actor_optimizer = torch.optim.Adam(self.actor.parameters(),lr=actor_lr)self.critic_1_optimizer = torch.optim.Adam(self.critic_1.parameters(),lr=critic_lr)self.critic_2_optimizer = torch.optim.Adam(self.critic_2.parameters(),lr=critic_lr)self.log_alpha = torch.tensor(np.log(0.01), dtype=torch.float)self.log_alpha.requires_grad = True # 对alpha求梯度self.log_alpha_optimizer = torch.optim.Adam([self.log_alpha],lr=alpha_lr)self.target_entropy = target_entropy # 目标熵的大小self.gamma = gammaself.tau = tauself.beta = beta # CQL损失函数中的系数self.num_random = num_random # CQL中的动作采样数def take_action(self, state):state = torch.tensor(np.array([state]), dtype=torch.float).to(device)action = self.actor(state)[0]return [action.item()]def soft_update(self, net, target_net):for param_target, param in zip(target_net.parameters(),net.parameters()):param_target.data.copy_(param_target.data * (1.0 - self.tau) +param.data * self.tau)def update(self, transition_dict):states = torch.tensor(transition_dict['states'],dtype=torch.float).to(device)actions = torch.tensor(transition_dict['actions'],dtype=torch.float).view(-1, 1).to(device)rewards = torch.tensor(transition_dict['rewards'],dtype=torch.float).view(-1, 1).to(device)next_states = torch.tensor(transition_dict['next_states'],dtype=torch.float).to(device)dones = torch.tensor(transition_dict['dones'],dtype=torch.float).view(-1, 1).to(device)rewards = (rewards + 8.0) / 8.0 # 对倒立摆环境的奖励进行重塑next_actions, log_prob = self.actor(next_states)entropy = -log_probq1_value = self.target_critic_1(next_states, next_actions)q2_value = self.target_critic_2(next_states, next_actions)next_value = torch.min(q1_value,q2_value) + self.log_alpha.exp() * entropytd_target = rewards + self.gamma * next_value * (1 - dones)critic_1_loss = torch.mean(F.mse_loss(self.critic_1(states, actions), td_target.detach()))critic_2_loss = torch.mean(F.mse_loss(self.critic_2(states, actions), td_target.detach()))# 以上与SAC相同,以下Q网络更新是CQL的额外部分batch_size = states.shape[0]random_unif_actions = torch.rand([batch_size * self.num_random, actions.shape[-1]],dtype=torch.float).uniform_(-1, 1).to(device)random_unif_log_pi = np.log(0.5 ** next_actions.shape[-1])tmp_states = states.unsqueeze(1).repeat(1, self.num_random,1).view(-1, states.shape[-1])tmp_next_states = next_states.unsqueeze(1).repeat(1, self.num_random, 1).view(-1, next_states.shape[-1])random_curr_actions, random_curr_log_pi = self.actor(tmp_states)random_next_actions, random_next_log_pi = self.actor(tmp_next_states)q1_unif = self.critic_1(tmp_states, random_unif_actions).view(-1, self.num_random, 1)q2_unif = self.critic_2(tmp_states, random_unif_actions).view(-1, self.num_random, 1)q1_curr = self.critic_1(tmp_states, random_curr_actions).view(-1, self.num_random, 1)q2_curr = self.critic_2(tmp_states, random_curr_actions).view(-1, self.num_random, 1)q1_next = self.critic_1(tmp_states, random_next_actions).view(-1, self.num_random, 1)q2_next = self.critic_2(tmp_states, random_next_actions).view(-1, self.num_random, 1)q1_cat = torch.cat([q1_unif - random_unif_log_pi,q1_curr - random_curr_log_pi.detach().view(-1, self.num_random, 1),q1_next - random_next_log_pi.detach().view(-1, self.num_random, 1)],dim=1)q2_cat = torch.cat([q2_unif - random_unif_log_pi,q2_curr - random_curr_log_pi.detach().view(-1, self.num_random, 1),q2_next - random_next_log_pi.detach().view(-1, self.num_random, 1)],dim=1)qf1_loss_1 = torch.logsumexp(q1_cat, dim=1).mean()qf2_loss_1 = torch.logsumexp(q2_cat, dim=1).mean()qf1_loss_2 = self.critic_1(states, actions).mean()qf2_loss_2 = self.critic_2(states, actions).mean()qf1_loss = critic_1_loss + self.beta * (qf1_loss_1 - qf1_loss_2)qf2_loss = critic_2_loss + self.beta * (qf2_loss_1 - qf2_loss_2)self.critic_1_optimizer.zero_grad()qf1_loss.backward(retain_graph=True)self.critic_1_optimizer.step()self.critic_2_optimizer.zero_grad()qf2_loss.backward(retain_graph=True)self.critic_2_optimizer.step()# 更新策略网络new_actions, log_prob = self.actor(states)entropy = -log_probq1_value = self.critic_1(states, new_actions)q2_value = self.critic_2(states, new_actions)actor_loss = torch.mean(-self.log_alpha.exp() * entropy -torch.min(q1_value, q2_value))self.actor_optimizer.zero_grad()actor_loss.backward()self.actor_optimizer.step()# 更新alpha值alpha_loss = torch.mean((entropy - self.target_entropy).detach() * self.log_alpha.exp())self.log_alpha_optimizer.zero_grad()alpha_loss.backward()self.log_alpha_optimizer.step()self.soft_update(self.critic_1, self.target_critic_1)self.soft_update(self.critic_2, self.target_critic_2)random.seed(0)

np.random.seed(0)

env.reset(seed=0)

torch.manual_seed(0)beta = 5.0

num_random = 5

num_epochs = 100

num_trains_per_epoch = 500agent = CQL(state_dim, hidden_dim, action_dim, action_bound, actor_lr,critic_lr, alpha_lr, target_entropy, tau, gamma, device, beta,num_random)return_list = []

for i in range(10):with tqdm(total=int(num_epochs / 10), desc='Iteration %d' % i) as pbar:for i_epoch in range(int(num_epochs / 10)):# 此处与环境交互只是为了评估策略,最后作图用,不会用于训练epoch_return = 0state = env.reset()[0]done = Falsefor num in range(10000):action = agent.take_action(state)next_state, reward, done, _, __ = env.step(action)state = next_stateepoch_return += rewardif done:print(done)breakreturn_list.append(epoch_return)for _ in range(num_trains_per_epoch):b_s, b_a, b_r, b_ns, b_d = replay_buffer.sample(batch_size)transition_dict = {'states': b_s,'actions': b_a,'next_states': b_ns,'rewards': b_r,'dones': b_d}agent.update(transition_dict)if (i_epoch + 1) % 10 == 0:pbar.set_postfix({'epoch':'%d' % (num_epochs / 10 * i + i_epoch + 1),'return':'%.3f' % np.mean(return_list[-10:])})pbar.update(1)epochs_list = list(range(len(return_list)))

plt.plot(epochs_list, return_list)

plt.xlabel('Epochs')

plt.ylabel('Returns')

plt.title('CQL on {}'.format(env_name))

plt.show()mv_return = rl_utils.moving_average(return_list, 9)

plt.plot(episodes_list, mv_return)

plt.xlabel('Episodes')

plt.ylabel('Returns')

plt.title('CQL on {}'.format(env_name))

plt.show()

rl_utils.py

from tqdm import tqdm

import numpy as np

import torch

import collections

import randomclass ReplayBuffer:def __init__(self, capacity):self.buffer = collections.deque(maxlen=capacity)def add(self, state, action, reward, next_state, done):self.buffer.append((state, action, reward, next_state, done))def sample(self, batch_size):transitions = random.sample(self.buffer, batch_size)state, action, reward, next_state, done = zip(*transitions)return np.array(state), action, reward, np.array(next_state), donedef size(self):return len(self.buffer)def moving_average(a, window_size):cumulative_sum = np.cumsum(np.insert(a, 0, 0))middle = (cumulative_sum[window_size:] - cumulative_sum[:-window_size]) / window_sizer = np.arange(1, window_size - 1, 2)begin = np.cumsum(a[:window_size - 1])[::2] / rend = (np.cumsum(a[:-window_size:-1])[::2] / r)[::-1]return np.concatenate((begin, middle, end))def train_on_policy_agent(env, agent, num_episodes):return_list = []for i in range(10):with tqdm(total=int(num_episodes / 10), desc='Iteration %d' % i) as pbar:for i_episode in range(int(num_episodes / 10)):episode_return = 0transition_dict = {'states': [], 'actions': [], 'next_states': [], 'rewards': [], 'dones': []}state = env.reset()[0]done = Falsewhile not done and len(transition_dict['states']) < 2000:action = agent.take_action(state)next_state, reward, done, _, __ = env.step(action)transition_dict['states'].append(state)transition_dict['actions'].append(action)transition_dict['next_states'].append(next_state)transition_dict['rewards'].append(reward)transition_dict['dones'].append(done)state = next_stateepisode_return += rewardreturn_list.append(episode_return)agent.update(transition_dict)if (i_episode + 1) % 10 == 0:pbar.set_postfix({'episode': '%d' % (num_episodes / 10 * i + i_episode + 1),'return': '%.3f' % np.mean(return_list[-10:])})pbar.update(1)return return_listdef train_off_policy_agent(env, agent, num_episodes, replay_buffer, minimal_size, batch_size):return_list = []for i in range(10):with tqdm(total=int(num_episodes / 10), desc='Iteration %d' % i) as pbar:for i_episode in range(int(num_episodes / 10)):episode_return = 0state = env.reset()[0]done = Falsefor num in range(1000):action = agent.take_action(state)next_state, reward, done, _, __ = env.step(action)replay_buffer.add(state, action, reward, next_state, done)state = next_stateepisode_return += rewardif replay_buffer.size() > minimal_size:b_s, b_a, b_r, b_ns, b_d = replay_buffer.sample(batch_size)transition_dict = {'states': b_s, 'actions': b_a, 'next_states': b_ns, 'rewards': b_r,'dones': b_d}agent.update(transition_dict)return_list.append(episode_return)if (i_episode + 1) % 10 == 0:pbar.set_postfix({'episode': '%d' % (num_episodes / 10 * i + i_episode + 1),'return': '%.3f' % np.mean(return_list[-10:])})pbar.update(1)return return_listdef compute_advantage(gamma, lmbda, td_delta):td_delta = td_delta.detach().numpy()advantage_list = []advantage = 0.0for delta in td_delta[::-1]:advantage = gamma * lmbda * advantage + deltaadvantage_list.append(advantage)advantage_list.reverse()return torch.tensor(np.array(advantage_list), dtype=torch.float)

相关文章:

动手学强化学习 第 18 章 离线强化学习 训练代码

基于 https://github.com/boyu-ai/Hands-on-RL/blob/main/%E7%AC%AC18%E7%AB%A0-%E7%A6%BB%E7%BA%BF%E5%BC%BA%E5%8C%96%E5%AD%A6%E4%B9%A0.ipynb 理论 离线强化学习 修改了警告和报错 运行环境 Debian GNU/Linux 12 Python 3.9.19 torch 2.0.1 gym 0.26.2 运行代码 CQL.…...

Python笔试面试题AI答之面向对象常考知识点

Python面向对象面试题面试题覆盖了Python面向对象编程(OOP)的多个重要概念和技巧,包括元类(Metaclass)、自省(Introspection)、面向切面编程(AOP)和装饰器、重载…...

面试经典算法150题系列-数组/字符串操作之买卖股票最佳时机

买卖股票最佳时机 给定一个数组 prices ,它的第 i 个元素 prices[i] 表示一支给定股票第 i 天的价格。 你只能选择 某一天 买入这只股票,并选择在 未来的某一个不同的日子 卖出该股票。设计一个算法来计算你所能获取的最大利润。 返回你可以从这笔交易…...

安装jdk和tomcat

安装nodejs 1.安装nodejs,这是一个jdk一样的软件运行环境 yum -y list installed|grep epel yum -y install nodejs node -v 2.下载对应的nodejs软件npm yum -y install npm npm -v npm set config .....淘宝镜像 3.安装vue/cli command line interface 命令行接…...

mongodb 备份还原

### 加入 MongoDB 官方 repositoryecho [mongodb-org-4.4] nameMongoDB Repository baseurlhttps://repo.mongodb.org/yum/redhat/$releasever/mongodb-org/4.4/x86_64/ gpgcheck1 enabled1 gpgkeyhttps://www.mongodb.org/static/pgp/server-4.4.asc| tee /etc/yum.repos.d/mo…...

day27——homework

1、使用两个线程完成两个文件的拷贝,分支线程1拷贝前一半,分支线程2拷贝后一半,主线程回收两个分支线程的资源 #include <stdio.h> #include <stdlib.h> #include <pthread.h> #include <fcntl.h> #include <uni…...

shell脚本自动化部署

1、自动化部署DNS [rootweb ~]# vim dns.sh [roottomcat ~]# yum -y install bind-utils [roottomcat ~]# echo "nameserver 192.168.8.132" > /etc/resolv.conf [roottomcat ~]# nslookup www.a.com 2、自动化部署rsync [rootweb ~]# vim rsync.sh [rootweb ~]# …...

C语言| 文件操作详解(二)

目录 四、有关文件的随机读写函数 4.1 fseek 4.2 ftell 4.3 rewind 五、判定文件读取结束的标准与读写文件中途发生错误的解决办法 5.1 判定文件读取结束的标准 5.2 函数ferror与feof 5.2.1 函数ferror 5.2.2 函数feof 在上一章中,我们主要介绍了文件类型…...

保证项目如期上线,测试人能做些什么?

要保证项目按照正常进度发布,需要整个研发团队齐心协力。 有很多原因都可能会造成项目延期。 1、产品经理频繁修改需求 2、开发团队存在技术难题 3、测试团队测不完 今天我想跟大家聊一下,测试团队如何保证项目按期上线,以及在这个过程中可能…...

【杂谈】在大学如何学得计算机知识,浅谈大一经验总结

大学新生的入门经验简谈 我想在学习编程这条路上,很多同学感到些许困惑,摸爬滚打一年,转眼就要进入大二学习了,下面浅谈个人经验与反思总结。倘若说你是迷茫的,希望这点经验对你有帮助;但倘若你有更好的建…...

Superset二次开发之柱状图实现同时显示百分比、原始值、汇总值的功能

背景 柱状图贡献模式选择行,堆积样式选择Stack,默认展示百分比,可以展示每个堆积的百分比,但是无法实现同时展示百分比、原始值、汇总值的效果。借助Tooltip可以实现,但是不直观。 柱状图来自Echarts插件,可以先考虑Echarts的柱状图如何实现此需求,再研究Superset项目的…...

堆的创建和说明

文章目录 目录 文章目录 前言 小堆: 大堆: 二、使用步骤 1.创建二叉树 2.修改为堆 3.向上调整 结果实现 总结 前言 我们已经知道了二叉树的样子,但是一般的二叉树是没有什么意义的,所以我们会使用一些特殊的二叉树来进行实现&a…...

【玩转python】入门篇day14-函数

1、函数的定义 函数通过def定义,包括函数名、参数、返回值 # 定义函数 def test(a,b): # a,b表示形式参数print(a b)#函数体(具体的功能)return a*b #返回值# 函数调用 test(12,43) # 12和43表示实际参数,在调用函数时,会替换形式参数a,b下面这个展示了稍微复…...

uni-app 将base64图片转换成临时地址

function getTempFilePath(base64Data) {return new Promise((resolve, reject) > {const fs uni.getFileSystemManager()base64Data base64Data.split(,)[1]const fileName temp_image_ Date.now() .png // 自定义文件名,可根据需要修改const filePath un…...

C#用Socket实现TCP客户端

1、TCP客户端实现代码 using System; using System.Collections.Generic; using System.Linq; using System.Net; using System.Net.Sockets; using System.Text; using System.Threading; using System.Threading.Tasks;namespace PtLib.TcpClient {public delegate void Tcp…...

jmeter-beanshell学习15-输入日期,计算前后几天的日期

又遇到新问题了,想要根据获取的日期,计算出前面两天的日期。网上找了半天,全都是写获取当天日期,然后计算昨天的日期,照葫芦画瓢也没改出来想要的,最后求助了开发同学。 先放上网上获取当天,计…...

Zabbix 7.0 安装

在zabbix官网中有着比较完善的安装步骤,针对不同的系统都有。可以直接按照举例说明进行安装。本文只是针对其提供的安装步骤进行一些说明解释补充。 安装环境 操作系统版本:AlmaLinux 9.4(10.10.20.200)zabbix版本:7.…...

软考高级-系统架构设计师

2024广东深圳考试时间 报考人员可登录中国计算机技术职业资格网(http://www.ruankao.org.cn)进行网上报名,报名前须扫码绑定个人微信,不允许代报名。 上半年考试报名信息填报时间:2024年3月25日9:00-4月2日…...

Notepad++ 安装 compare 插件

文章目录 文章介绍对比效果安装过程参考链接 文章介绍 compare 插件用于对比文本差异 对比效果 安装过程 搜索compare插件 参考链接 添加链接描述...

大数据技术原理-spark的安装

摘要 本实验报告详细记录了在"大数据技术原理"课程中进行的Spark安装与应用实验。实验环境包括Spark、Hadoop和Java。实验内容涵盖了Spark的安装、配置、启动,以及使用Spark进行基本的数据操作,如读取本地文件、文件内容计数、模式匹配和行数…...

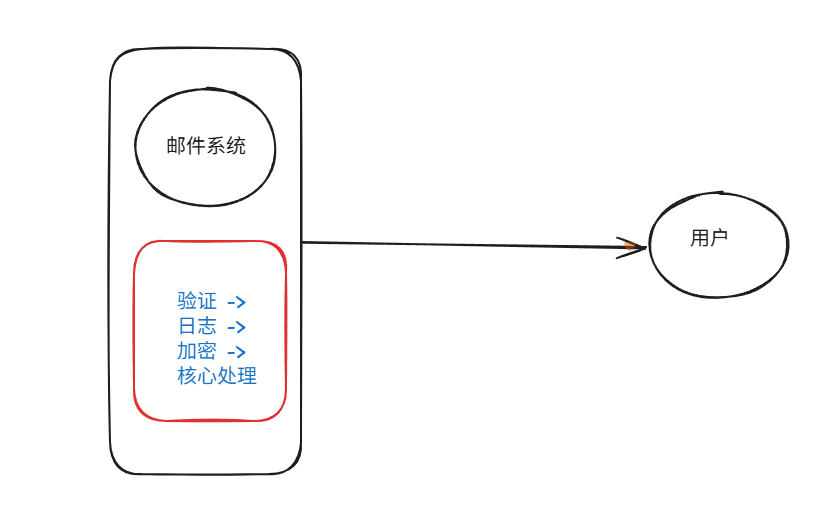

装饰模式(Decorator Pattern)重构java邮件发奖系统实战

前言 现在我们有个如下的需求,设计一个邮件发奖的小系统, 需求 1.数据验证 → 2. 敏感信息加密 → 3. 日志记录 → 4. 实际发送邮件 装饰器模式(Decorator Pattern)允许向一个现有的对象添加新的功能,同时又不改变其…...

AI Agent与Agentic AI:原理、应用、挑战与未来展望

文章目录 一、引言二、AI Agent与Agentic AI的兴起2.1 技术契机与生态成熟2.2 Agent的定义与特征2.3 Agent的发展历程 三、AI Agent的核心技术栈解密3.1 感知模块代码示例:使用Python和OpenCV进行图像识别 3.2 认知与决策模块代码示例:使用OpenAI GPT-3进…...

1688商品列表API与其他数据源的对接思路

将1688商品列表API与其他数据源对接时,需结合业务场景设计数据流转链路,重点关注数据格式兼容性、接口调用频率控制及数据一致性维护。以下是具体对接思路及关键技术点: 一、核心对接场景与目标 商品数据同步 场景:将1688商品信息…...

Linux云原生安全:零信任架构与机密计算

Linux云原生安全:零信任架构与机密计算 构建坚不可摧的云原生防御体系 引言:云原生安全的范式革命 随着云原生技术的普及,安全边界正在从传统的网络边界向工作负载内部转移。Gartner预测,到2025年,零信任架构将成为超…...

鸿蒙中用HarmonyOS SDK应用服务 HarmonyOS5开发一个生活电费的缴纳和查询小程序

一、项目初始化与配置 1. 创建项目 ohpm init harmony/utility-payment-app 2. 配置权限 // module.json5 {"requestPermissions": [{"name": "ohos.permission.INTERNET"},{"name": "ohos.permission.GET_NETWORK_INFO"…...

Linux --进程控制

本文从以下五个方面来初步认识进程控制: 目录 进程创建 进程终止 进程等待 进程替换 模拟实现一个微型shell 进程创建 在Linux系统中我们可以在一个进程使用系统调用fork()来创建子进程,创建出来的进程就是子进程,原来的进程为父进程。…...

2023赣州旅游投资集团

单选题 1.“不登高山,不知天之高也;不临深溪,不知地之厚也。”这句话说明_____。 A、人的意识具有创造性 B、人的认识是独立于实践之外的 C、实践在认识过程中具有决定作用 D、人的一切知识都是从直接经验中获得的 参考答案: C 本题解…...

【分享】推荐一些办公小工具

1、PDF 在线转换 https://smallpdf.com/cn/pdf-tools 推荐理由:大部分的转换软件需要收费,要么功能不齐全,而开会员又用不了几次浪费钱,借用别人的又不安全。 这个网站它不需要登录或下载安装。而且提供的免费功能就能满足日常…...

Ubuntu Cursor升级成v1.0

0. 当前版本低 使用当前 Cursor v0.50时 GitHub Copilot Chat 打不开,快捷键也不好用,当看到 Cursor 升级后,还是蛮高兴的 1. 下载 Cursor 下载地址:https://www.cursor.com/cn/downloads 点击下载 Linux (x64) ,…...

在 Spring Boot 中使用 JSP

jsp? 好多年没用了。重新整一下 还费了点时间,记录一下。 项目结构: pom: <?xml version"1.0" encoding"UTF-8"?> <project xmlns"http://maven.apache.org/POM/4.0.0" xmlns:xsi"http://ww…...