AI部署——主流模型推理部署框架

我们以最经典的Yolov5目标检测网络为例解释一下10种主流推理部署框架的大概内容,省略模型训练的过程,只讨论模型转换、环境配置、推理部署等步骤。

- Intel的OpenVINO — CPU

- Nvidia的TensorRT — GPU/CPU

- OpenCV DNN Module — GPU/CPU

- Microsoft ONNX Runtime — GPU/CPU

- 腾讯 NCNN— 移动端

- 阿里巴巴 MNN — 移动端

- Rockchip(瑞芯微)的RKNN — 移动端(NPU)

- 百度PaddlePaddle(飞桨) — 服务器端/移动端

- 华为 MindSpore — 移动、边缘和云端(深度学习框架)

- Google TensorFlow Lite — 移动端

Intel的OpenVINO — CPU

OpenVINO是英特尔基于自身现有的硬件平台开发的一种可以加快高性能计算机视觉和深度学习视觉应用开发速度工具套件,支持各种英特尔平台的硬件加速器上进行深度学习,并且允许直接异构执行。 支持在Windows与Linux系统,Python/C++语言。

首先,确保已安装OpenVINO工具包。可以从OpenVINO官网下载并安装。

tar -xvzf l_openvino_toolkit_p_2020.3.194.tgz

cd l_openvino_toolkit_p_2020.3.194

运行图形化安装命令:sudo ./install_GUI.sh至此,OpenVINO 核心组件安装完成,接下来安装依赖包。

cd /opt/intel/openvino/install_dependencies

sudo -E ./install_openvino_dependencies.sh

安装完成后,设置环境变量:source /opt/intel/openvino/bin/setupvars.sh

接下来配置模型优化器,依次运行以下命令:

cd /opt/intel/openvino/deployment_tools/model_optimizer/install_prerequisites

sudo ./install_prerequisites.sh

上面这条命令会安装所有的深度学习框架的支持,如果只希望安装某一个框架的支持,以安装Caffe 框架支持为例,可以这么做:sudo ./install_prerequisites_caffe.sh

接下来,我们使用YOLOv5的自带工具将模型导出为ONNX格式:

python export.py --weights yolov5s.pt --img 640 --batch 1 --device cpu --simplify

再使用OpenVINO提供的Model Optimizer工具,将ONNX模型转换为OpenVINO IR格式(.xml和.bin文件)。运行以下命令:

mo --input_model yolov5s.onnx --output_dir . --data_type FP16

# python mo_onnx.py --input_model yolov5s.onnx --output_dir .

使用netron观察模型output节点,接下来就是编写模型推理代码。

模型载入

import openvino.runtime as ov

import cv2

import numpy as np

import openvino.preprocess as op

def Init():global coreglobal modelglobal compiled_model

global infer_request

#核心创建

core = ov.Core()

#读取用YOLOv5模型转换而来的IR模型

model = core.read_model("best2.xml", "best2.bin")

#运用PPP(PrePostProcessor)对模型进行预处理

Premodel = op.PrePostProcessor(model)

Premodel.input().tensor().set_element_type(ov.Type.u8).set_layout(ov.Layout("NHWC")).set_color_format(op.ColorFormat.BGR)

Premodel.input().preprocess().convert_element_type(ov.Type.f32).convert_color(op.ColorFormat.RGB).scale([255., 255., 255.])Premodel.input().model().set_layout(ov.Layout("NCHW"))Premodel.output(0).tensor().set_element_type(ov.Type.f32)model = Premodel.build()compiled_model = core.compile_model(model, "CPU") #加载模型,可用CPU or GPUinfer_request = compiled_model.create_infer_request() #生成推理

图像尺寸调整

def resizeimg(image, new_shape):

old_size = image.shape[:2]

#记录新形状和原生图像矩形形状的比率ratio = float(new_shape[-1] / max(old_size)) new_size = tuple([int(x * ratio) for x in old_size])image = cv2.resize(image, (new_size[1], new_size[0]))delta_w = new_shape[1] - new_size[1]delta_h = new_shape[0] - new_size[0]

color = [100, 100, 100]

new_im = cv2.copyMakeBorder(image, 0, delta_h, 0, delta_w, cv2.BORDER_CONSTANT, value=color) #增广操作return new_im, delta_w, delta_h

推理过程以及结果展示

#************************************#

# 推理主程序 #

def main(img,infer_request):push =[]img_re,dw,dh = resizeimg(img,(640,640)) #尺寸处理input_tensor = np.expand_dims(img_re, 0) #获得输入张量infer_request.infer({0: input_tensor}) #输入到推理引擎output = infer_request.get_output_tensor(0) #获得推理结果detections = output.data[0] #获得检测数据boxes = []class_ids = []confidences = []for prediction in detections:confidence = prediction[4].item() #获取置信度if confidence >= 0.6: #初步过滤,过滤掉绝大多数的无效数据classes_scores = prediction[5:]_, _, _, max_indx = cv2.minMaxLoc(classes_scores)class_id = max_indx[1]if (classes_scores[class_id] > .25):confidences.append(confidence)class_ids.append(class_id)x, y, w, h = prediction[0].item(), prediction[1].item(), prediction[2].item(), prediction[3].item() #获取有效信息xmin = x - (w / 2) #由于NMSBoxes缘故,需要从中心点得到左上角点ymin = y - (h / 2)box = np.array([xmin, ymin, w, h]) #记录数据boxes.append(box)indexes = cv2.dnn.NMSBoxes(boxes, confidences, 0.5, 0.5) #NMS筛选detections = []for i in indexes:j = i.item()detections.append({"class_index": class_ids[j], "confidence": confidences[j], "box": boxes[j]}) #储存获取的目标名称和框选位for detection in detections:box = detection["box"]classId = detection["class_index"]confidence = detection["confidence"]

if(confidence<0.88): #再次过滤continueelse :push.append(classId)rx = img.shape[1] / (img_re.shape[1] - dw)ry = img.shape[0] / (img_re.shape[0] - dh)img_re = cv2.rectangle(img_re, (int(box[0]), int(box[1])), (int(box[0] + box[2]), int(box[1] + box[3])), (0, 255, 0), 3)box[0] = rx * box[0] #恢复原尺寸box,如果尺寸不变可以忽略box[1] = box[1] *rybox[2] = rx * box[2]box[3] = box[3] *ryxmax = box[0] + box[2]ymax = box[1] + box[3]img = cv2.rectangle(img, (int(box[0]), int(box[1])), (int(xmax), int(ymax)), (0, 255, 0), 3) #绘制物体框img = cv2.rectangle(img, (int(box[0]), int(box[1]) - 20), (int(xmax), int(box[1])), (0, 255, 0), cv2.FILLED) #绘制目标名称底色填充矩形img = cv2.putText(img, str(label[classId])+' '+str(int(confidence*100))+'%', (int(box[0]), int(box[1]) - 5), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 0)) #绘制目标名称cv2.imshow("d", img_re)cv2.imshow('w',img)cv2.waitKey(0)

主程序

#********************主程序***********************#

def MainToSolve(infer):img = cv2.imread("boundtest.jpg") #如果需要实时,只需要将输入img变成从摄像机抓取的帧画面

main(img,infer)#从这里开始,初始化以及推理

Init()

MainToSolve(infer_request)

Nvidia的TensorRT — GPU/CPU

TensorRT是可以在NVIDIA各种GPU硬件平台下运行的一个C++推理框架。我们利用Pytorch、TF或者其他框架训练好的模型,可以转化为TensorRT的格式,然后利用TensorRT推理引擎去运行我们这个模型,从而提升这个模型在英伟达GPU上运行的速度。速度提升的比例是比较可观的。

模型转换:

python export.py ---weights weights/v5lite-g.pt --batch-size 1 --imgsz 640 --include onnx --simplify

trtexec --explicitBatch --onnx=./v5lite-g.onnx --saveEngine=v5lite-g.trt --fp16

YOLOv5-Lite的TensorRT — C++版本示例

模型加载

void V5lite::LoadEngine() {// create and load enginestd::fstream existEngine;existEngine.open(engine_file, std::ios::in);readTrtFile(engine_file, engine);assert(engine != nullptr);

}

预处理输入图像

std::vector<float> V5lite::prepareImage(std::vector<cv::Mat> &vec_img) {std::vector<float> result(BATCH_SIZE * IMAGE_WIDTH * IMAGE_HEIGHT * INPUT_CHANNEL);float *data = result.data();int index = 0;for (const cv::Mat &src_img : vec_img){if (!src_img.data)continue;float ratio = float(IMAGE_WIDTH) / float(src_img.cols) < float(IMAGE_HEIGHT) / float(src_img.rows) ? float(IMAGE_WIDTH) / float(src_img.cols) : float(IMAGE_HEIGHT) / float(src_img.rows);cv::Mat flt_img = cv::Mat::zeros(cv::Size(IMAGE_WIDTH, IMAGE_HEIGHT), CV_8UC3);cv::Mat rsz_img;cv::resize(src_img, rsz_img, cv::Size(), ratio, ratio);rsz_img.copyTo(flt_img(cv::Rect(0, 0, rsz_img.cols, rsz_img.rows)));flt_img.convertTo(flt_img, CV_32FC3, 1.0 / 255);//HWC TO CHWint channelLength = IMAGE_WIDTH * IMAGE_HEIGHT;std::vector<cv::Mat> split_img = {cv::Mat(IMAGE_HEIGHT, IMAGE_WIDTH, CV_32FC1, data + channelLength * (index + 2)),cv::Mat(IMAGE_HEIGHT, IMAGE_WIDTH, CV_32FC1, data + channelLength * (index + 1)),cv::Mat(IMAGE_HEIGHT, IMAGE_WIDTH, CV_32FC1, data + channelLength * index)};index += 3;cv::split(flt_img, split_img);}return result;

}

模型推理

bool V5lite::InferenceFolder(const std::string &folder_name) {std::vector<std::string> sample_images = readFolder(folder_name);//get contextassert(engine != nullptr);context = engine->createExecutionContext();assert(context != nullptr);//get buffersassert(engine->getNbBindings() == 2);void *buffers[2];std::vector<int64_t> bufferSize;int nbBindings = engine->getNbBindings();bufferSize.resize(nbBindings);for (int i = 0; i < nbBindings; ++i) {nvinfer1::Dims dims = engine->getBindingDimensions(i);nvinfer1::DataType dtype = engine->getBindingDataType(i);int64_t totalSize = volume(dims) * 1 * getElementSize(dtype);bufferSize[i] = totalSize;std::cout << "binding" << i << ": " << totalSize << std::endl;cudaMalloc(&buffers[i], totalSize);}//get streamcudaStream_t stream;cudaStreamCreate(&stream);int outSize = bufferSize[1] / sizeof(float) / BATCH_SIZE;EngineInference(sample_images, outSize, buffers, bufferSize, stream);// release the stream and the bufferscudaStreamDestroy(stream);cudaFree(buffers[0]);cudaFree(buffers[1]);// destroy the enginecontext->destroy();engine->destroy();

}OpenCV DNN Module — GPU/CPU

OpenCV的DNN(Deep Neural Network)模块是一个强大的工具,允许开发者在计算机视觉应用中使用深度学习模型。该模块支持多种深度学习框架和模型格式,并提供了高效的推理能力。

安装依赖

sudo apt-get update

sudo apt-get install build-essential cmake git libgtk2.0-dev pkg-config libavcodec-dev libavformat-dev libswscale-dev

sudo apt-get install python3-dev python3-numpy libtbb2 libtbb-dev libjpeg-dev libpng-dev libtiff-dev

sudo apt-get install libdc1394-22-dev

编译opencv

cd opencv

mkdir build

cd build

cmake -D CMAKE_BUILD_TYPE=Release -D CMAKE_INSTALL_PREFIX=/usr/local -D OPENCV_EXTRA_MODULES_PATH=../../opencv_contrib/modules -D WITH_CUDA=ON -D WITH_CUDNN=ON -D OPENCV_DNN_CUDA=ON ..

make -j$(nproc)

sudo make install

sudo ldconfig

示例代码

#include <vector>

#include <string>

#include <utility>#include <opencv2/opencv.hpp>using namespace std;

using namespace cv;

using namespace dnn;vector< pair<dnn::Backend, dnn::Target> > backendTargetPairs = {std::make_pair<dnn::Backend, dnn::Target>(dnn::DNN_BACKEND_OPENCV, dnn::DNN_TARGET_CPU),std::make_pair<dnn::Backend, dnn::Target>(dnn::DNN_BACKEND_CUDA, dnn::DNN_TARGET_CUDA),std::make_pair<dnn::Backend, dnn::Target>(dnn::DNN_BACKEND_CUDA, dnn::DNN_TARGET_CUDA_FP16),std::make_pair<dnn::Backend, dnn::Target>(dnn::DNN_BACKEND_TIMVX, dnn::DNN_TARGET_NPU),std::make_pair<dnn::Backend, dnn::Target>(dnn::DNN_BACKEND_CANN, dnn::DNN_TARGET_NPU) };vector<string> labelYolox = {"person", "bicycle", "car", "motorcycle", "airplane", "bus","train", "truck", "boat", "traffic light", "fire hydrant","stop sign", "parking meter", "bench", "bird", "cat", "dog","horse", "sheep", "cow", "elephant", "bear", "zebra", "giraffe","backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee","skis", "snowboard", "sports ball", "kite", "baseball bat","baseball glove", "skateboard", "surfboard", "tennis racket","bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl","banana", "apple", "sandwich", "orange", "broccoli", "carrot","hot dog", "pizza", "donut", "cake", "chair", "couch","potted plant", "bed", "dining table", "toilet", "tv", "laptop","mouse", "remote", "keyboard", "cell phone", "microwave","oven", "toaster", "sink", "refrigerator", "book", "clock","vase", "scissors", "teddy bear", "hair drier", "toothbrush" };class YoloX {

private:Net net;string modelPath;Size inputSize;float confThreshold;float nmsThreshold;float objThreshold;dnn::Backend backendId;dnn::Target targetId;int num_classes;vector<int> strides;Mat expandedStrides;Mat grids;public:YoloX(string modPath, float confThresh = 0.35, float nmsThresh = 0.5, float objThresh = 0.5, dnn::Backend bId = DNN_BACKEND_DEFAULT, dnn::Target tId = DNN_TARGET_CPU) :modelPath(modPath), confThreshold(confThresh),nmsThreshold(nmsThresh), objThreshold(objThresh),backendId(bId), targetId(tId){this->num_classes = int(labelYolox.size());this->net = readNet(modelPath);this->inputSize = Size(640, 640);this->strides = vector<int>{ 8, 16, 32 };this->net.setPreferableBackend(this->backendId);this->net.setPreferableTarget(this->targetId);this->generateAnchors();}Mat preprocess(Mat img){Mat blob;Image2BlobParams paramYolox;paramYolox.datalayout = DNN_LAYOUT_NCHW;paramYolox.ddepth = CV_32F;paramYolox.mean = Scalar::all(0);paramYolox.scalefactor = Scalar::all(1);paramYolox.size = Size(img.cols, img.rows);paramYolox.swapRB = true;blob = blobFromImageWithParams(img, paramYolox);return blob;}Mat infer(Mat srcimg){Mat inputBlob = this->preprocess(srcimg);this->net.setInput(inputBlob);vector<Mat> outs;this->net.forward(outs, this->net.getUnconnectedOutLayersNames());Mat predictions = this->postprocess(outs[0]);return predictions;}Mat postprocess(Mat outputs){Mat dets = outputs.reshape(0,outputs.size[1]);Mat col01;add(dets.colRange(0, 2), this->grids, col01);Mat col23;exp(dets.colRange(2, 4), col23);vector<Mat> col = { col01, col23 };Mat boxes;hconcat(col, boxes);float* ptr = this->expandedStrides.ptr<float>(0);for (int r = 0; r < boxes.rows; r++, ptr++){boxes.rowRange(r, r + 1) = *ptr * boxes.rowRange(r, r + 1);}// get boxesMat boxes_xyxy(boxes.rows, boxes.cols, CV_32FC1, Scalar(1));Mat scores = dets.colRange(5, dets.cols).clone();vector<float> maxScores(dets.rows);vector<int> maxScoreIdx(dets.rows);vector<Rect2d> boxesXYXY(dets.rows);for (int r = 0; r < boxes_xyxy.rows; r++, ptr++){boxes_xyxy.at<float>(r, 0) = boxes.at<float>(r, 0) - boxes.at<float>(r, 2) / 2.f;boxes_xyxy.at<float>(r, 1) = boxes.at<float>(r, 1) - boxes.at<float>(r, 3) / 2.f;boxes_xyxy.at<float>(r, 2) = boxes.at<float>(r, 0) + boxes.at<float>(r, 2) / 2.f;boxes_xyxy.at<float>(r, 3) = boxes.at<float>(r, 1) + boxes.at<float>(r, 3) / 2.f;// get scores and class indicesscores.rowRange(r, r + 1) = scores.rowRange(r, r + 1) * dets.at<float>(r, 4);double minVal, maxVal;Point maxIdx;minMaxLoc(scores.rowRange(r, r+1), &minVal, &maxVal, nullptr, &maxIdx);maxScoreIdx[r] = maxIdx.x;maxScores[r] = float(maxVal);boxesXYXY[r].x = boxes_xyxy.at<float>(r, 0);boxesXYXY[r].y = boxes_xyxy.at<float>(r, 1);boxesXYXY[r].width = boxes_xyxy.at<float>(r, 2);boxesXYXY[r].height = boxes_xyxy.at<float>(r, 3);}vector<int> keep;NMSBoxesBatched(boxesXYXY, maxScores, maxScoreIdx, this->confThreshold, this->nmsThreshold, keep);Mat candidates(int(keep.size()), 6, CV_32FC1);int row = 0;for (auto idx : keep){boxes_xyxy.rowRange(idx, idx + 1).copyTo(candidates(Rect(0, row, 4, 1)));candidates.at<float>(row, 4) = maxScores[idx];candidates.at<float>(row, 5) = float(maxScoreIdx[idx]);row++;}if (keep.size() == 0)return Mat();return candidates;}void generateAnchors(){vector< tuple<int, int, int> > nb;int total = 0;for (auto v : this->strides){int w = this->inputSize.width / v;int h = this->inputSize.height / v;nb.push_back(tuple<int, int, int>(w * h, w, v));total += w * h;}this->grids = Mat(total, 2, CV_32FC1);this->expandedStrides = Mat(total, 1, CV_32FC1);float* ptrGrids = this->grids.ptr<float>(0);float* ptrStrides = this->expandedStrides.ptr<float>(0);int pos = 0;for (auto le : nb){int r = get<1>(le);for (int i = 0; i < get<0>(le); i++, pos++){*ptrGrids++ = float(i % r);*ptrGrids++ = float(i / r);*ptrStrides++ = float((get<2>(le)));}}}

};std::string keys =

"{ help h | | Print help message. }"

"{ model m | object_detection_yolox_2022nov.onnx | Usage: Path to the model, defaults to object_detection_yolox_2022nov.onnx }"

"{ input i | | Path to input image or video file. Skip this argument to capture frames from a camera.}"

"{ confidence | 0.5 | Class confidence }"

"{ obj | 0.5 | Enter object threshold }"

"{ nms | 0.5 | Enter nms IOU threshold }"

"{ save s | true | Specify to save results. This flag is invalid when using camera. }"

"{ vis v | 1 | Specify to open a window for result visualization. This flag is invalid when using camera. }"

"{ backend bt | 0 | Choose one of computation backends: "

"0: (default) OpenCV implementation + CPU, "

"1: CUDA + GPU (CUDA), "

"2: CUDA + GPU (CUDA FP16), "

"3: TIM-VX + NPU, "

"4: CANN + NPU}";pair<Mat, double> letterBox(Mat srcimg, Size targetSize = Size(640, 640))

{Mat paddedImg(targetSize.height, targetSize.width, CV_32FC3, Scalar::all(114.0));Mat resizeImg;double ratio = min(targetSize.height / double(srcimg.rows), targetSize.width / double(srcimg.cols));resize(srcimg, resizeImg, Size(int(srcimg.cols * ratio), int(srcimg.rows * ratio)), INTER_LINEAR);resizeImg.copyTo(paddedImg(Rect(0, 0, int(srcimg.cols * ratio), int(srcimg.rows * ratio))));return pair<Mat, double>(paddedImg, ratio);

}Mat unLetterBox(Mat bbox, double letterboxScale)

{return bbox / letterboxScale;

}Mat visualize(Mat dets, Mat srcimg, double letterbox_scale, double fps = -1)

{Mat resImg = srcimg.clone();if (fps > 0)putText(resImg, format("FPS: %.2f", fps), Size(10, 25), FONT_HERSHEY_SIMPLEX, 1, Scalar(0, 0, 255), 2);for (int row = 0; row < dets.rows; row++){Mat boxF = unLetterBox(dets(Rect(0, row, 4, 1)), letterbox_scale);Mat box;boxF.convertTo(box, CV_32S);float score = dets.at<float>(row, 4);int clsId = int(dets.at<float>(row, 5));int x0 = box.at<int>(0, 0);int y0 = box.at<int>(0, 1);int x1 = box.at<int>(0, 2);int y1 = box.at<int>(0, 3);string text = format("%s : %f", labelYolox[clsId].c_str(), score * 100);int font = FONT_HERSHEY_SIMPLEX;int baseLine = 0;Size txtSize = getTextSize(text, font, 0.4, 1, &baseLine);rectangle(resImg, Point(x0, y0), Point(x1, y1), Scalar(0, 255, 0), 2);rectangle(resImg, Point(x0, y0 + 1), Point(x0 + txtSize.width + 1, y0 + int(1.5 * txtSize.height)), Scalar(255, 255, 255), -1);putText(resImg, text, Point(x0, y0 + txtSize.height), font, 0.4, Scalar(0, 0, 0), 1);}return resImg;

}int main(int argc, char** argv)

{CommandLineParser parser(argc, argv, keys);parser.about("Use this script to run Yolox deep learning networks in opencv_zoo using OpenCV.");if (parser.has("help")){parser.printMessage();return 0;}string model = parser.get<String>("model");float confThreshold = parser.get<float>("confidence");float objThreshold = parser.get<float>("obj");float nmsThreshold = parser.get<float>("nms");bool vis = parser.get<bool>("vis");bool save = parser.get<bool>("save");int backendTargetid = parser.get<int>("backend");if (model.empty()){CV_Error(Error::StsError, "Model file " + model + " not found");}YoloX modelNet(model, confThreshold, nmsThreshold, objThreshold,backendTargetPairs[backendTargetid].first, backendTargetPairs[backendTargetid].second);//! [Open a video file or an image file or a camera stream]VideoCapture cap;if (parser.has("input"))cap.open(samples::findFile(parser.get<String>("input")));elsecap.open(0);if (!cap.isOpened())CV_Error(Error::StsError, "Cannot open video or file");Mat frame, inputBlob;double letterboxScale;static const std::string kWinName = model;int nbInference = 0;while (waitKey(1) < 0){cap >> frame;if (frame.empty()){cout << "Frame is empty" << endl;waitKey();break;}pair<Mat, double> w = letterBox(frame);inputBlob = get<0>(w);letterboxScale = get<1>(w);TickMeter tm;tm.start();Mat predictions = modelNet.infer(inputBlob);tm.stop();cout << "Inference time: " << tm.getTimeMilli() << " ms\n";Mat img = visualize(predictions, frame, letterboxScale, tm.getFPS());if (save && parser.has("input")){imwrite("result.jpg", img);}if (vis){imshow(kWinName, img);}}return 0;

}

Microsoft ONNX Runtime — GPU/CPU

Microsoft 和合作伙伴社区创建了 ONNX 作为表示机器学习模型的开放标准。 许多框架(包括 TensorFlow、PyTorch、scikit-learn、Keras、Chainer、MXNet 和 MATLAB)的模型都可以导出或转换为标准 ONNX 格式。 在模型采用 ONNX 格式后,可以在各种平台和设备上运行这些模型。

示例代码

import cv2

import numpy as np

import onnxruntime as ort

import timedef plot_one_box(x, img, color=None, label=None, line_thickness=None):"""description: Plots one bounding box on image img,this function comes from YoLov5 project.param: x: a box likes [x1,y1,x2,y2]img: a opencv image objectcolor: color to draw rectangle, such as (0,255,0)label: strline_thickness: intreturn:no return"""tl = (line_thickness or round(0.002 * (img.shape[0] + img.shape[1]) / 2) + 1) # line/font thicknesscolor = color or [random.randint(0, 255) for _ in range(3)]c1, c2 = (int(x[0]), int(x[1])), (int(x[2]), int(x[3]))cv2.rectangle(img, c1, c2, color, thickness=tl, lineType=cv2.LINE_AA)if label:tf = max(tl - 1, 1) # font thicknesst_size = cv2.getTextSize(label, 0, fontScale=tl / 3, thickness=tf)[0]c2 = c1[0] + t_size[0], c1[1] - t_size[1] - 3cv2.rectangle(img, c1, c2, color, -1, cv2.LINE_AA) # filledcv2.putText(img,label,(c1[0], c1[1] - 2),0,tl / 3,[225, 255, 255],thickness=tf,lineType=cv2.LINE_AA,)def _make_grid( nx, ny):xv, yv = np.meshgrid(np.arange(ny), np.arange(nx))return np.stack((xv, yv), 2).reshape((-1, 2)).astype(np.float32)def cal_outputs(outs,nl,na,model_w,model_h,anchor_grid,stride):row_ind = 0grid = [np.zeros(1)] * nlfor i in range(nl):h, w = int(model_w/ stride[i]), int(model_h / stride[i])length = int(na * h * w)if grid[i].shape[2:4] != (h, w):grid[i] = _make_grid(w, h)outs[row_ind:row_ind + length, 0:2] = (outs[row_ind:row_ind + length, 0:2] * 2. - 0.5 + np.tile(grid[i], (na, 1))) * int(stride[i])outs[row_ind:row_ind + length, 2:4] = (outs[row_ind:row_ind + length, 2:4] * 2) ** 2 * np.repeat(anchor_grid[i], h * w, axis=0)row_ind += lengthreturn outsdef post_process_opencv(outputs,model_h,model_w,img_h,img_w,thred_nms,thred_cond):conf = outputs[:,4].tolist()c_x = outputs[:,0]/model_w*img_wc_y = outputs[:,1]/model_h*img_hw = outputs[:,2]/model_w*img_wh = outputs[:,3]/model_h*img_hp_cls = outputs[:,5:]if len(p_cls.shape)==1:p_cls = np.expand_dims(p_cls,1)cls_id = np.argmax(p_cls,axis=1)p_x1 = np.expand_dims(c_x-w/2,-1)p_y1 = np.expand_dims(c_y-h/2,-1)p_x2 = np.expand_dims(c_x+w/2,-1)p_y2 = np.expand_dims(c_y+h/2,-1)areas = np.concatenate((p_x1,p_y1,p_x2,p_y2),axis=-1)areas = areas.tolist()ids = cv2.dnn.NMSBoxes(areas,conf,thred_cond,thred_nms)if len(ids)>0:return np.array(areas)[ids],np.array(conf)[ids],cls_id[ids]else:return [],[],[]

def infer_img(img0,net,model_h,model_w,nl,na,stride,anchor_grid,thred_nms=0.4,thred_cond=0.5):# 图像预处理img = cv2.resize(img0, [model_w,model_h], interpolation=cv2.INTER_AREA)img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)img = img.astype(np.float32) / 255.0blob = np.expand_dims(np.transpose(img, (2, 0, 1)), axis=0)# 模型推理outs = net.run(None, {net.get_inputs()[0].name: blob})[0].squeeze(axis=0)# 输出坐标矫正outs = cal_outputs(outs,nl,na,model_w,model_h,anchor_grid,stride)# 检测框计算img_h,img_w,_ = np.shape(img0)boxes,confs,ids = post_process_opencv(outs,model_h,model_w,img_h,img_w,thred_nms,thred_cond)return boxes,confs,idsif __name__ == "__main__":# 模型加载model_pb_path = "best.onnx"so = ort.SessionOptions()net = ort.InferenceSession(model_pb_path, so)# 标签字典dic_labels= {0:'boat',1:'noboat',}# 模型参数model_h = 320model_w = 320nl = 3na = 3stride=[8.,16.,32.]anchors = [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326]]anchor_grid = np.asarray(anchors, dtype=np.float32).reshape(nl, -1, 2)video = 0cap = cv2.VideoCapture(video)flag_det = Falsewhile True:success, img0 = cap.read()if success:if flag_det:t1 = time.time()det_boxes,scores,ids = infer_img(img0,net,model_h,model_w,nl,na,stride,anchor_grid,thred_nms=0.4,thred_cond=0.5)t2 = time.time()for box,score,id in zip(det_boxes,scores,ids):label = '%s:%.2f'%(dic_labels[id],score)plot_one_box(box.astype(np.int16), img0, color=(255,0,0), label=label, line_thickness=None)str_FPS = "FPS: %.2f"%(1./(t2-t1))cv2.putText(img0,str_FPS,(50,50),cv2.FONT_HERSHEY_COMPLEX,1,(0,255,0),3)cv2.imshow("video",img0)key=cv2.waitKey(1) & 0xFF if key == ord('q'):breakelif key & 0xFF == ord('s'):flag_det = not flag_detprint(flag_det)cap.release()

腾讯 NCNN— 移动端

ncnn 是腾讯优图实验室首个开源项目,是一个为手机端极致优化的高性能神经网络前向计算框架。ncnn 从设计之初深刻考虑手机端的部署和使用。 无第三方依赖,跨平台,手机端 cpu 的速度快于目前所有已知的开源框架。 基于 ncnn,开发者能够将深度学习算法轻松移植到手机端高效执行, 开发出人工智能 APP,将 AI 带到你的指尖。 ncnn 目前已在腾讯多款应用中使用,如:QQ,Qzone,微信,天天 P 图等。

Android&NCNN学习—nanodet实例

关于舰船检测项目调试记录

#include "layer.h"

#include "net.h"#if defined(USE_NCNN_SIMPLEOCV)

#include "simpleocv.h"

#else

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#endif

#include <float.h>

#include <stdio.h>

#include <vector>

#include <sys/time.h>// 0 : FP16

// 1 : INT8

#define USE_INT8 0// 0 : Image

// 1 : Camera

#define USE_CAMERA 0struct Object

{cv::Rect_<float> rect;int label;float prob;

};static inline float intersection_area(const Object& a, const Object& b)

{cv::Rect_<float> inter = a.rect & b.rect;return inter.area();

}static void qsort_descent_inplace(std::vector<Object>& faceobjects, int left, int right)

{int i = left;int j = right;float p = faceobjects[(left + right) / 2].prob;while (i <= j){while (faceobjects[i].prob > p)i++;while (faceobjects[j].prob < p)j--;if (i <= j){// swapstd::swap(faceobjects[i], faceobjects[j]);i++;j--;}}#pragma omp parallel sections{#pragma omp section{if (left < j) qsort_descent_inplace(faceobjects, left, j);}#pragma omp section{if (i < right) qsort_descent_inplace(faceobjects, i, right);}}

}static void qsort_descent_inplace(std::vector<Object>& faceobjects)

{if (faceobjects.empty())return;qsort_descent_inplace(faceobjects, 0, faceobjects.size() - 1);

}static void nms_sorted_bboxes(const std::vector<Object>& faceobjects, std::vector<int>& picked, float nms_threshold)

{picked.clear();const int n = faceobjects.size();std::vector<float> areas(n);for (int i = 0; i < n; i++){areas[i] = faceobjects[i].rect.area();}for (int i = 0; i < n; i++){const Object& a = faceobjects[i];int keep = 1;for (int j = 0; j < (int)picked.size(); j++){const Object& b = faceobjects[picked[j]];// intersection over unionfloat inter_area = intersection_area(a, b);float union_area = areas[i] + areas[picked[j]] - inter_area;// float IoU = inter_area / union_areaif (inter_area / union_area > nms_threshold)keep = 0;}if (keep)picked.push_back(i);}

}static inline float sigmoid(float x)

{return static_cast<float>(1.f / (1.f + exp(-x)));

}// unsigmoid

static inline float unsigmoid(float y) {return static_cast<float>(-1.0 * (log((1.0 / y) - 1.0)));

}static void generate_proposals(const ncnn::Mat &anchors, int stride, const ncnn::Mat &in_pad,const ncnn::Mat &feat_blob, float prob_threshold,std::vector <Object> &objects) {const int num_grid = feat_blob.h;if (prob_threshold > 0.6)float unsig_pro = unsigmoid(prob_threshold);int num_grid_x;int num_grid_y;if (in_pad.w > in_pad.h) {num_grid_x = in_pad.w / stride;num_grid_y = num_grid / num_grid_x;} else {num_grid_y = in_pad.h / stride;num_grid_x = num_grid / num_grid_y;}const int num_class = feat_blob.w - 5;const int num_anchors = anchors.w / 2;for (int q = 0; q < num_anchors; q++) {const float anchor_w = anchors[q * 2];const float anchor_h = anchors[q * 2 + 1];const ncnn::Mat feat = feat_blob.channel(q);for (int i = 0; i < num_grid_y; i++) {for (int j = 0; j < num_grid_x; j++) {const float *featptr = feat.row(i * num_grid_x + j);// find class index with max class scoreint class_index = 0;float class_score = -FLT_MAX;float box_score = featptr[4];if (prob_threshold > 0.6) {// while prob_threshold > 0.6, unsigmoid better than sigmoidif (box_score > unsig_pro) {for (int k = 0; k < num_class; k++) {float score = featptr[5 + k];if (score > class_score) {class_index = k;class_score = score;}}float confidence = sigmoid(box_score) * sigmoid(class_score);if (confidence >= prob_threshold) {float dx = sigmoid(featptr[0]);float dy = sigmoid(featptr[1]);float dw = sigmoid(featptr[2]);float dh = sigmoid(featptr[3]);float pb_cx = (dx * 2.f - 0.5f + j) * stride;float pb_cy = (dy * 2.f - 0.5f + i) * stride;float pb_w = pow(dw * 2.f, 2) * anchor_w;float pb_h = pow(dh * 2.f, 2) * anchor_h;float x0 = pb_cx - pb_w * 0.5f;float y0 = pb_cy - pb_h * 0.5f;float x1 = pb_cx + pb_w * 0.5f;float y1 = pb_cy + pb_h * 0.5f;Object obj;obj.rect.x = x0;obj.rect.y = y0;obj.rect.width = x1 - x0;obj.rect.height = y1 - y0;obj.label = class_index;obj.prob = confidence;objects.push_back(obj);}} else {for (int k = 0; k < num_class; k++) {float score = featptr[5 + k];if (score > class_score) {class_index = k;class_score = score;}}float confidence = sigmoid(box_score) * sigmoid(class_score);if (confidence >= prob_threshold) {float dx = sigmoid(featptr[0]);float dy = sigmoid(featptr[1]);float dw = sigmoid(featptr[2]);float dh = sigmoid(featptr[3]);float pb_cx = (dx * 2.f - 0.5f + j) * stride;float pb_cy = (dy * 2.f - 0.5f + i) * stride;float pb_w = pow(dw * 2.f, 2) * anchor_w;float pb_h = pow(dh * 2.f, 2) * anchor_h;float x0 = pb_cx - pb_w * 0.5f;float y0 = pb_cy - pb_h * 0.5f;float x1 = pb_cx + pb_w * 0.5f;float y1 = pb_cy + pb_h * 0.5f;Object obj;obj.rect.x = x0;obj.rect.y = y0;obj.rect.width = x1 - x0;obj.rect.height = y1 - y0;obj.label = class_index;obj.prob = confidence;objects.push_back(obj);}}}}}}

}static int detect_yolov5(const cv::Mat& bgr, std::vector<Object>& objects)

{ncnn::Net yolov5;#if USE_INT8yolov5.opt.use_int8_inference=true;

#elseyolov5.opt.use_vulkan_compute = true;yolov5.opt.use_bf16_storage = true;

#endif// original pretrained model from https://github.com/ultralytics/yolov5// the ncnn model https://github.com/nihui/ncnn-assets/tree/master/models#if USE_INT8yolov5.load_param("v5lite-i8e.param");yolov5.load_model("yolov5-i8e.bin");

#elseyolov5.load_param("v5lite-e.param");yolov5.load_model("v5lite-e.bin");

#endifconst int target_size = 320;const float prob_threshold = 0.60f;const float nms_threshold = 0.60f;int img_w = bgr.cols;int img_h = bgr.rows;// letterbox pad to multiple of 32int w = img_w;int h = img_h;float scale = 1.f;if (w > h){scale = (float)target_size / w;w = target_size;h = h * scale;}else{scale = (float)target_size / h;h = target_size;w = w * scale;}ncnn::Mat in = ncnn::Mat::from_pixels_resize(bgr.data, ncnn::Mat::PIXEL_BGR2RGB, img_w, img_h, w, h);// pad to target_size rectangle// yolov5/utils/datasets.py letterboxint wpad = (w + 31) / 32 * 32 - w;int hpad = (h + 31) / 32 * 32 - h;ncnn::Mat in_pad;ncnn::copy_make_border(in, in_pad, hpad / 2, hpad - hpad / 2, wpad / 2, wpad - wpad / 2, ncnn::BORDER_CONSTANT, 114.f);const float norm_vals[3] = {1 / 255.f, 1 / 255.f, 1 / 255.f};in_pad.substract_mean_normalize(0, norm_vals);ncnn::Extractor ex = yolov5.create_extractor();ex.input("images", in_pad);std::vector<Object> proposals;// stride 8{ncnn::Mat out;ex.extract("output", out);ncnn::Mat anchors(6);anchors[0] = 10.f;anchors[1] = 13.f;anchors[2] = 16.f;anchors[3] = 30.f;anchors[4] = 33.f;anchors[5] = 23.f;std::vector<Object> objects8;generate_proposals(anchors, 8, in_pad, out, prob_threshold, objects8);proposals.insert(proposals.end(), objects8.begin(), objects8.end());}// stride 16{ncnn::Mat out;ex.extract("1111", out);ncnn::Mat anchors(6);anchors[0] = 30.f;anchors[1] = 61.f;anchors[2] = 62.f;anchors[3] = 45.f;anchors[4] = 59.f;anchors[5] = 119.f;std::vector<Object> objects16;generate_proposals(anchors, 16, in_pad, out, prob_threshold, objects16);proposals.insert(proposals.end(), objects16.begin(), objects16.end());}// stride 32{ncnn::Mat out;ex.extract("2222", out);ncnn::Mat anchors(6);anchors[0] = 116.f;anchors[1] = 90.f;anchors[2] = 156.f;anchors[3] = 198.f;anchors[4] = 373.f;anchors[5] = 326.f;std::vector<Object> objects32;generate_proposals(anchors, 32, in_pad, out, prob_threshold, objects32);proposals.insert(proposals.end(), objects32.begin(), objects32.end());}// sort all proposals by score from highest to lowestqsort_descent_inplace(proposals);// apply nms with nms_thresholdstd::vector<int> picked;nms_sorted_bboxes(proposals, picked, nms_threshold);int count = picked.size();objects.resize(count);for (int i = 0; i < count; i++){objects[i] = proposals[picked[i]];// adjust offset to original unpaddedfloat x0 = (objects[i].rect.x - (wpad / 2)) / scale;float y0 = (objects[i].rect.y - (hpad / 2)) / scale;float x1 = (objects[i].rect.x + objects[i].rect.width - (wpad / 2)) / scale;float y1 = (objects[i].rect.y + objects[i].rect.height - (hpad / 2)) / scale;// clipx0 = std::max(std::min(x0, (float)(img_w - 1)), 0.f);y0 = std::max(std::min(y0, (float)(img_h - 1)), 0.f);x1 = std::max(std::min(x1, (float)(img_w - 1)), 0.f);y1 = std::max(std::min(y1, (float)(img_h - 1)), 0.f);objects[i].rect.x = x0;objects[i].rect.y = y0;objects[i].rect.width = x1 - x0;objects[i].rect.height = y1 - y0;}return 0;

}static void draw_objects(const cv::Mat& bgr, const std::vector<Object>& objects)

{static const char* class_names[] = {"person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light","fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow","elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee","skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard","tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple","sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch","potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone","microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear","hair drier", "toothbrush"};cv::Mat image = bgr.clone();for (size_t i = 0; i < objects.size(); i++){const Object& obj = objects[i];fprintf(stderr, "%d = %.5f at %.2f %.2f %.2f x %.2f\n", obj.label, obj.prob,obj.rect.x, obj.rect.y, obj.rect.width, obj.rect.height);cv::rectangle(image, obj.rect, cv::Scalar(0, 255, 0));char text[256];sprintf(text, "%s %.1f%%", class_names[obj.label], obj.prob * 100);int baseLine = 0;cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);int x = obj.rect.x;int y = obj.rect.y - label_size.height - baseLine;if (y < 0)y = 0;if (x + label_size.width > image.cols)x = image.cols - label_size.width;cv::rectangle(image, cv::Rect(cv::Point(x, y), cv::Size(label_size.width, label_size.height + baseLine)),cv::Scalar(255, 255, 255), -1);cv::putText(image, text, cv::Point(x, y + label_size.height),cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0));}

#if USE_CAMERAimshow("外接摄像头", image);cv::waitKey(1);

#elsecv::imwrite("result.jpg", image);

#endif

}#if USE_CAMERA

int main(int argc, char** argv)

{cv::VideoCapture capture;capture.open(0); //修改这个参数可以选择打开想要用的摄像头cv::Mat frame;while (true){capture >> frame;cv::Mat m = frame;std::vector<Object> objects;detect_yolov5(frame, objects);draw_objects(m, objects);if (cv::waitKey(30) >= 0)break;}

}

#else

int main(int argc, char** argv)

{if (argc != 2){fprintf(stderr, "Usage: %s [imagepath]\n", argv[0]);return -1;}const char* imagepath = argv[1];struct timespec begin, end;long time;clock_gettime(CLOCK_MONOTONIC, &begin);cv::Mat m = cv::imread(imagepath, 1);if (m.empty()){fprintf(stderr, "cv::imread %s failed\n", imagepath);return -1;}std::vector<Object> objects;detect_yolov5(m, objects);clock_gettime(CLOCK_MONOTONIC, &end);time = (end.tv_sec - begin.tv_sec) + (end.tv_nsec - begin.tv_nsec);printf(">> Time : %lf ms\n", (double)time/1000000);draw_objects(m, objects);return 0;

}

#endif

阿里巴巴 MNN — 移动端

MNN 是一个轻量级的深度学习端侧推理引擎,核心解决深度神经网络模型在端侧推理运行问题,涵盖深度神经网络模型的优化、转换和推理。目前,MNN已经在手淘、手猫、优酷、聚划算、UC、飞猪、千牛等 20 多个 App 中使用。

示例代码

#include <iostream>

#include <string>

#include <ctime>

#include <stdio.h>

#include <omp.h>#include <MNN/MNNDefine.h>

#include <MNN/MNNForwardType.h>

#include <MNN/Interpreter.hpp>#include "utils.h"

#define use_camera 0

#define mnnd 1std::vector<BoxInfo> decode(cv::Mat &cv_mat, std::shared_ptr<MNN::Interpreter> &net, MNN::Session *session, int INPUT_SIZE)

{std::vector<int> dims{1, INPUT_SIZE, INPUT_SIZE, 3};auto nhwc_Tensor = MNN::Tensor::create<float>(dims, NULL, MNN::Tensor::TENSORFLOW);auto nhwc_data = nhwc_Tensor->host<float>();auto nhwc_size = nhwc_Tensor->size();std::memcpy(nhwc_data, cv_mat.data, nhwc_size);auto inputTensor = net->getSessionInput(session, nullptr);inputTensor->copyFromHostTensor(nhwc_Tensor);net->runSession(session);MNN::Tensor *tensor_scores = net->getSessionOutput(session, "outputs");MNN::Tensor tensor_scores_host(tensor_scores, tensor_scores->getDimensionType());tensor_scores->copyToHostTensor(&tensor_scores_host);auto pred_dims = tensor_scores_host.shape();#if mnndconst unsigned int num_proposals = pred_dims.at(1);const unsigned int num_classes = pred_dims.at(2) - 5;std::vector<BoxInfo> bbox_collection;for (unsigned int i = 0; i < num_proposals; ++i){const float *offset_obj_cls_ptr = tensor_scores_host.host<float>() + (i * (num_classes + 5)); // row ptrfloat obj_conf = offset_obj_cls_ptr[4];if (obj_conf < 0.5)continue;float cls_conf = offset_obj_cls_ptr[5];unsigned int label = 0;for (unsigned int j = 0; j < num_classes; ++j){float tmp_conf = offset_obj_cls_ptr[j + 5];if (tmp_conf > cls_conf){cls_conf = tmp_conf;label = j;}}float conf = obj_conf * cls_conf; if (conf < 0.50)continue;float cx = offset_obj_cls_ptr[0];float cy = offset_obj_cls_ptr[1];float w = offset_obj_cls_ptr[2];float h = offset_obj_cls_ptr[3];float x1 = (cx - w / 2.f);float y1 = (cy - h / 2.f);float x2 = (cx + w / 2.f);float y2 = (cy + h / 2.f);BoxInfo box;box.x1 = std::max(0.f, x1);box.y1 = std::max(0.f, y1);box.x2 = std::min(x2, (float)INPUT_SIZE - 1.f);box.y2 = std::min(y2, (float)INPUT_SIZE - 1.f);box.score = conf;box.label = label;bbox_collection.push_back(box);}

#elseconst unsigned int num_proposals = pred_dims.at(0);const unsigned int num_datainfo = pred_dims.at(1);std::vector<BoxInfo> bbox_collection;for (unsigned int i = 0; i < num_proposals; ++i){const float *offset_obj_cls_ptr = tensor_scores_host.host<float>() + (i * num_datainfo); // row ptrfloat obj_conf = offset_obj_cls_ptr[4];if (obj_conf < 0.5)continue;float x1 = offset_obj_cls_ptr[0];float y1 = offset_obj_cls_ptr[1];float x2 = offset_obj_cls_ptr[2];float y2 = offset_obj_cls_ptr[3];BoxInfo box;box.x1 = std::max(0.f, x1);box.y1 = std::max(0.f, y1);box.x2 = std::min(x2, (float)INPUT_SIZE - 1.f);box.y2 = std::min(y2, (float)INPUT_SIZE - 1.f);box.score = offset_obj_cls_ptr[4];box.label = offset_obj_cls_ptr[5];bbox_collection.push_back(box);}

#endifdelete nhwc_Tensor;return bbox_collection;

}int main(int argc, char const *argv[])

{std::string model_name = "../models/v5lite-e-mnnd_fp16.mnn";std::shared_ptr<MNN::Interpreter> net = std::shared_ptr<MNN::Interpreter>(MNN::Interpreter::createFromFile(model_name.c_str()));if (nullptr == net){return 0;}MNN::ScheduleConfig config;config.numThread = 4;config.type = static_cast<MNNForwardType>(MNN_FORWARD_CPU);MNN::BackendConfig backendConfig;// backendConfig.precision = (MNN::BackendConfig::PrecisionMode)1;backendConfig.precision = MNN::BackendConfig::Precision_Low_BF16;config.backendConfig = &backendConfig;MNN::Session *session = net->createSession(config);std::vector<BoxInfo> bbox_collection;cv::Mat image;MatInfo mmat_objection;mmat_objection.inpSize = 320;#if use_cameracv::VideoCapture capture;capture.open(0);cv::Mat frame;while (true){bbox_collection.clear();struct timespec begin, end;long time;clock_gettime(CLOCK_MONOTONIC, &begin);capture >> frame;cv::Mat raw_image = frame;cv::Mat pimg = preprocess(raw_image, mmat_objection);bbox_collection = decode(pimg, net, session, mmat_objection.inpSize);nms(bbox_collection, 0.50);draw_box(raw_image, bbox_collection, mmat_objection);clock_gettime(CLOCK_MONOTONIC, &end);time = (end.tv_sec - begin.tv_sec) + (end.tv_nsec - begin.tv_nsec);if(time > 0) printf(">> Time : %lf ms\n", (double)time / 1000000);}

#elsefor (size_t i = 0; i < 100; i++){bbox_collection.clear();struct timespec begin, end;long time;clock_gettime(CLOCK_MONOTONIC, &begin);std::string image_name = "../images/000000001000.jpg";cv::Mat raw_image = cv::imread(image_name.c_str());cv::Mat pimg = preprocess(raw_image, mmat_objection);bbox_collection = decode(pimg, net, session, mmat_objection.inpSize);nms(bbox_collection, 0.50);draw_box(raw_image, bbox_collection, mmat_objection);clock_gettime(CLOCK_MONOTONIC, &end);time = (end.tv_sec - begin.tv_sec) + (end.tv_nsec - begin.tv_nsec);if(time > 0) printf(">> Time : %lf ms\n", (double)time / 1000000);}

#endifreturn 0;

}

Rockchip(瑞芯微)的RKNN — 移动端(NPU)

RKNN-Toolkit 是Rockchip为Rockchip NPU平台提供的模型转换和推理工具,Rockchip 提供了完整了模型转换 Python 工具,方便用户将自主研发的算法模型转换成 RKNN 模型,同时 Rockchip 也提供了C/C++和Python API 接口,适用于AI算法在嵌入式设备上的部署。RKNN Toolkit2 提供了模型转换、量化功能、模型推理、性能和内存评估、量化精度分析、模型加密等功能。

rknn-toolkit2 github官方库

// Copyright (c) 2021 by Rockchip Electronics Co., Ltd. All Rights Reserved.

//

// Licensed under the Apache License, Version 2.0 (the "License");

// you may not use this file except in compliance with the License.

// You may obtain a copy of the License at

//

// http://www.apache.org/licenses/LICENSE-2.0

//

// Unless required by applicable law or agreed to in writing, software

// distributed under the License is distributed on an "AS IS" BASIS,

// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

// See the License for the specific language governing permissions and

// limitations under the License./*-------------------------------------------Includes

-------------------------------------------*/

#include <dlfcn.h>

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <sys/time.h>#define _BASETSD_H#include "RgaUtils.h"#include "postprocess.h"#include "rknn_api.h"

#include "preprocess.h"#define PERF_WITH_POST 1

/*-------------------------------------------Functions

-------------------------------------------*/static void dump_tensor_attr(rknn_tensor_attr *attr)

{std::string shape_str = attr->n_dims < 1 ? "" : std::to_string(attr->dims[0]);for (int i = 1; i < attr->n_dims; ++i){shape_str += ", " + std::to_string(attr->dims[i]);}printf(" index=%d, name=%s, n_dims=%d, dims=[%s], n_elems=%d, size=%d, w_stride = %d, size_with_stride=%d, fmt=%s, ""type=%s, qnt_type=%s, ""zp=%d, scale=%f\n",attr->index, attr->name, attr->n_dims, shape_str.c_str(), attr->n_elems, attr->size, attr->w_stride,attr->size_with_stride, get_format_string(attr->fmt), get_type_string(attr->type),get_qnt_type_string(attr->qnt_type), attr->zp, attr->scale);

}double __get_us(struct timeval t) { return (t.tv_sec * 1000000 + t.tv_usec); }static unsigned char *load_data(FILE *fp, size_t ofst, size_t sz)

{unsigned char *data;int ret;data = NULL;if (NULL == fp){return NULL;}ret = fseek(fp, ofst, SEEK_SET);if (ret != 0){printf("blob seek failure.\n");return NULL;}data = (unsigned char *)malloc(sz);if (data == NULL){printf("buffer malloc failure.\n");return NULL;}ret = fread(data, 1, sz, fp);return data;

}static unsigned char *load_model(const char *filename, int *model_size)

{FILE *fp;unsigned char *data;fp = fopen(filename, "rb");if (NULL == fp){printf("Open file %s failed.\n", filename);return NULL;}fseek(fp, 0, SEEK_END);int size = ftell(fp);data = load_data(fp, 0, size);fclose(fp);*model_size = size;return data;

}static int saveFloat(const char *file_name, float *output, int element_size)

{FILE *fp;fp = fopen(file_name, "w");for (int i = 0; i < element_size; i++){fprintf(fp, "%.6f\n", output[i]);}fclose(fp);return 0;

}/*-------------------------------------------Main Functions

-------------------------------------------*/

int main(int argc, char **argv)

{if (argc < 3){printf("Usage: %s <rknn model> <input_image_path> <resize/letterbox> <output_image_path>\n", argv[0]);return -1;}int ret;rknn_context ctx;size_t actual_size = 0;int img_width = 0;int img_height = 0;int img_channel = 0;const float nms_threshold = NMS_THRESH; // 默认的NMS阈值const float box_conf_threshold = BOX_THRESH; // 默认的置信度阈值struct timeval start_time, stop_time;char *model_name = (char *)argv[1];char *input_path = argv[2];std::string option = "letterbox";std::string out_path = "./out.jpg";if (argc >= 4){option = argv[3];}if (argc >= 5){out_path = argv[4];}// init rga contextrga_buffer_t src;rga_buffer_t dst;memset(&src, 0, sizeof(src));memset(&dst, 0, sizeof(dst));printf("post process config: box_conf_threshold = %.2f, nms_threshold = %.2f\n", box_conf_threshold, nms_threshold);/* Create the neural network */printf("Loading mode...\n");int model_data_size = 0;unsigned char *model_data = load_model(model_name, &model_data_size);ret = rknn_init(&ctx, model_data, model_data_size, 0, NULL);if (ret < 0){printf("rknn_init error ret=%d\n", ret);return -1;}rknn_sdk_version version;ret = rknn_query(ctx, RKNN_QUERY_SDK_VERSION, &version, sizeof(rknn_sdk_version));if (ret < 0){printf("rknn_init error ret=%d\n", ret);return -1;}printf("sdk version: %s driver version: %s\n", version.api_version, version.drv_version);rknn_input_output_num io_num;ret = rknn_query(ctx, RKNN_QUERY_IN_OUT_NUM, &io_num, sizeof(io_num));if (ret < 0){printf("rknn_init error ret=%d\n", ret);return -1;}printf("model input num: %d, output num: %d\n", io_num.n_input, io_num.n_output);rknn_tensor_attr input_attrs[io_num.n_input];memset(input_attrs, 0, sizeof(input_attrs));for (int i = 0; i < io_num.n_input; i++){input_attrs[i].index = i;ret = rknn_query(ctx, RKNN_QUERY_INPUT_ATTR, &(input_attrs[i]), sizeof(rknn_tensor_attr));if (ret < 0){printf("rknn_init error ret=%d\n", ret);return -1;}dump_tensor_attr(&(input_attrs[i]));}rknn_tensor_attr output_attrs[io_num.n_output];memset(output_attrs, 0, sizeof(output_attrs));for (int i = 0; i < io_num.n_output; i++){output_attrs[i].index = i;ret = rknn_query(ctx, RKNN_QUERY_OUTPUT_ATTR, &(output_attrs[i]), sizeof(rknn_tensor_attr));dump_tensor_attr(&(output_attrs[i]));}int channel = 3;int width = 0;int height = 0;if (input_attrs[0].fmt == RKNN_TENSOR_NCHW){printf("model is NCHW input fmt\n");channel = input_attrs[0].dims[1];height = input_attrs[0].dims[2];width = input_attrs[0].dims[3];}else{printf("model is NHWC input fmt\n");height = input_attrs[0].dims[1];width = input_attrs[0].dims[2];channel = input_attrs[0].dims[3];}printf("model input height=%d, width=%d, channel=%d\n", height, width, channel);rknn_input inputs[1];memset(inputs, 0, sizeof(inputs));inputs[0].index = 0;inputs[0].type = RKNN_TENSOR_UINT8;inputs[0].size = width * height * channel;inputs[0].fmt = RKNN_TENSOR_NHWC;inputs[0].pass_through = 0;// 读取图片printf("Read %s ...\n", input_path);cv::Mat orig_img = cv::imread(input_path, 1);if (!orig_img.data){printf("cv::imread %s fail!\n", input_path);return -1;}cv::Mat img;cv::cvtColor(orig_img, img, cv::COLOR_BGR2RGB);img_width = img.cols;img_height = img.rows;printf("img width = %d, img height = %d\n", img_width, img_height);// 指定目标大小和预处理方式,默认使用LetterBox的预处理BOX_RECT pads;memset(&pads, 0, sizeof(BOX_RECT));cv::Size target_size(width, height);cv::Mat resized_img(target_size.height, target_size.width, CV_8UC3);// 计算缩放比例float scale_w = (float)target_size.width / img.cols;float scale_h = (float)target_size.height / img.rows;if (img_width != width || img_height != height){// 直接缩放采用RGA加速if (option == "resize"){printf("resize image by rga\n");ret = resize_rga(src, dst, img, resized_img, target_size);if (ret != 0){fprintf(stderr, "resize with rga error\n");return -1;}// 保存预处理图片cv::imwrite("resize_input.jpg", resized_img);}else if (option == "letterbox"){printf("resize image with letterbox\n");float min_scale = std::min(scale_w, scale_h);scale_w = min_scale;scale_h = min_scale;letterbox(img, resized_img, pads, min_scale, target_size);// 保存预处理图片cv::imwrite("letterbox_input.jpg", resized_img);}else{fprintf(stderr, "Invalid resize option. Use 'resize' or 'letterbox'.\n");return -1;}inputs[0].buf = resized_img.data;}else{inputs[0].buf = img.data;}gettimeofday(&start_time, NULL);rknn_inputs_set(ctx, io_num.n_input, inputs);rknn_output outputs[io_num.n_output];memset(outputs, 0, sizeof(outputs));for (int i = 0; i < io_num.n_output; i++){outputs[i].index = i;outputs[i].want_float = 0;}// 执行推理ret = rknn_run(ctx, NULL);ret = rknn_outputs_get(ctx, io_num.n_output, outputs, NULL);gettimeofday(&stop_time, NULL);printf("once run use %f ms\n", (__get_us(stop_time) - __get_us(start_time)) / 1000);// 后处理detect_result_group_t detect_result_group;std::vector<float> out_scales;std::vector<int32_t> out_zps;for (int i = 0; i < io_num.n_output; ++i){out_scales.push_back(output_attrs[i].scale);out_zps.push_back(output_attrs[i].zp);}post_process((int8_t *)outputs[0].buf, (int8_t *)outputs[1].buf, (int8_t *)outputs[2].buf, height, width,box_conf_threshold, nms_threshold, pads, scale_w, scale_h, out_zps, out_scales, &detect_result_group);// 画框和概率char text[256];for (int i = 0; i < detect_result_group.count; i++){detect_result_t *det_result = &(detect_result_group.results[i]);sprintf(text, "%s %.1f%%", det_result->name, det_result->prop * 100);printf("%s @ (%d %d %d %d) %f\n", det_result->name, det_result->box.left, det_result->box.top,det_result->box.right, det_result->box.bottom, det_result->prop);int x1 = det_result->box.left;int y1 = det_result->box.top;int x2 = det_result->box.right;int y2 = det_result->box.bottom;rectangle(orig_img, cv::Point(x1, y1), cv::Point(x2, y2), cv::Scalar(256, 0, 0, 256), 3);putText(orig_img, text, cv::Point(x1, y1 + 12), cv::FONT_HERSHEY_SIMPLEX, 0.4, cv::Scalar(255, 255, 255));}printf("save detect result to %s\n", out_path.c_str());imwrite(out_path, orig_img);ret = rknn_outputs_release(ctx, io_num.n_output, outputs);// 耗时统计int test_count = 10;gettimeofday(&start_time, NULL);for (int i = 0; i < test_count; ++i){rknn_inputs_set(ctx, io_num.n_input, inputs);ret = rknn_run(ctx, NULL);ret = rknn_outputs_get(ctx, io_num.n_output, outputs, NULL);

#if PERF_WITH_POSTpost_process((int8_t *)outputs[0].buf, (int8_t *)outputs[1].buf, (int8_t *)outputs[2].buf, height, width,box_conf_threshold, nms_threshold, pads, scale_w, scale_h, out_zps, out_scales, &detect_result_group);

#endifret = rknn_outputs_release(ctx, io_num.n_output, outputs);}gettimeofday(&stop_time, NULL);printf("loop count = %d , average run %f ms\n", test_count,(__get_us(stop_time) - __get_us(start_time)) / 1000.0 / test_count);deinitPostProcess();// releaseret = rknn_destroy(ctx);if (model_data){free(model_data);}return 0;

}

百度PaddlePaddle(飞桨) — 服务器端/移动端

百度出品的深度学习平台飞桨(PaddlePaddle)是主流深度学习框架中一款完全国产化的产品,与Google TensorFlow、Facebook Pytorch齐名。2016 年飞桨正式开源,是国内首个全面开源开放、技术领先、功能完备的产业级深度学习平台。相比国内其他平台,飞桨是一个功能完整的深度学习平台,也是唯一成熟稳定、具备大规模推广条件的深度学习平台。

华为 MindSpore — 移动、边缘和云端(深度学习框架)

MindSpore是端边云全场景按需协同的华为自研AI计算框架,提供全场景统一API,为全场景AI的模型开发、模型运行、模型部署提供端到端能力。MindSpore采用端-边-云按需协作分布式架构、微分原生编程新范式以及AI Native新执行模式,实现更好的资源效率、安全可信,同时降低行业AI开发门槛、释放昇腾芯片算力,助力普惠AI。

Google TensorFlow Lite — 移动端

TensorFlowLite是Google在2017年5月推出的轻量级机器学习解决方案,主要针对移动端设备和嵌入式设备。针对移动端设备特点,TensorFlow Lite使用了诸多技术对内核进行了定制优化,预熔激活,量子化内核。TensorFlow Lite具有以下特征:跨平台,核心代码由C++编写,可以运行在不同平台上;轻量级,代码体积小,模型文件小,运行内存低;支持硬件加速。

参考地址 :

【OpenCV+OPENVINO使用】openvino安装教程

自训练YOLOv5 模型使用 OpenVINO™ 优化并部署在英特尔开发套件

yolov5使用onnxruntime进行c++部署

基于树莓派4B的YOLOv5-Lite目标检测的移植与部署(含训练教程)

一个针对 OpenCV DNN 进行调整的模型动物园,具有不同平台上的基准测试。

AI平台-MNN【推理引擎】

《MindSpore基础实践》——MindSpore基础

移动端机器学习框架TensorFlow Lite简介与实践

走进国产深度学习框架——百度飞桨(paddlepaddle)

相关文章:

AI部署——主流模型推理部署框架

我们以最经典的Yolov5目标检测网络为例解释一下10种主流推理部署框架的大概内容,省略模型训练的过程,只讨论模型转换、环境配置、推理部署等步骤。 Intel的OpenVINO — CPUNvidia的TensorRT — GPU/CPUOpenCV DNN Module — GPU/CPUMicrosoft ONNX Runti…...

PyTorch之loading fbgemm.dll异常的解决办法

前言 PyTorch是一个深度学习框架,当我们在本地调试大模型时,可能会选用并安装它,目前已更新至2.4版本。 一、安装必备 1. window 学习或开发阶段,我们通常在window环境下进行,因此需满足以下条件: Windo…...

Vscode——如何实现 Ctrl+鼠标左键 跳转函数内部的方法

一、对于Python代码 安装python插件即可实现 二、对于C/C代码 安装C/C插件即可实现...

力扣热题100_回溯_78_子集

文章目录 题目链接解题思路解题代码 题目链接 78. 子集 给你一个整数数组 nums ,数组中的元素 互不相同 。返回该数组所有可能的子集(幂集)。 解集 不能 包含重复的子集。你可以按 任意顺序 返回解集。 示例 1: 输入ÿ…...

进程架构)

浏览器如何工作(一)进程架构

分享cosine 大佬,版权©️大佬所有 浏览器的核心功能 浏览器,“浏览” 是这个产品的核心,浏览无非分为两步: 获取想浏览的资源 展示得到的资源 现代浏览器还增加了交互功能,这涉及到脚本运行。因此,…...

【LeetCode】两数之和

给定一个整数数组 nums 和一个整数目标值 target,请你在该数组中找出 和为目标值 target 的那 两个 整数,并返回它们的数组下标。 你可以假设每种输入只会对应一个答案,并且你不能使用两次相同的元素。 你可以按任意顺序返回答案。 示例 1…...

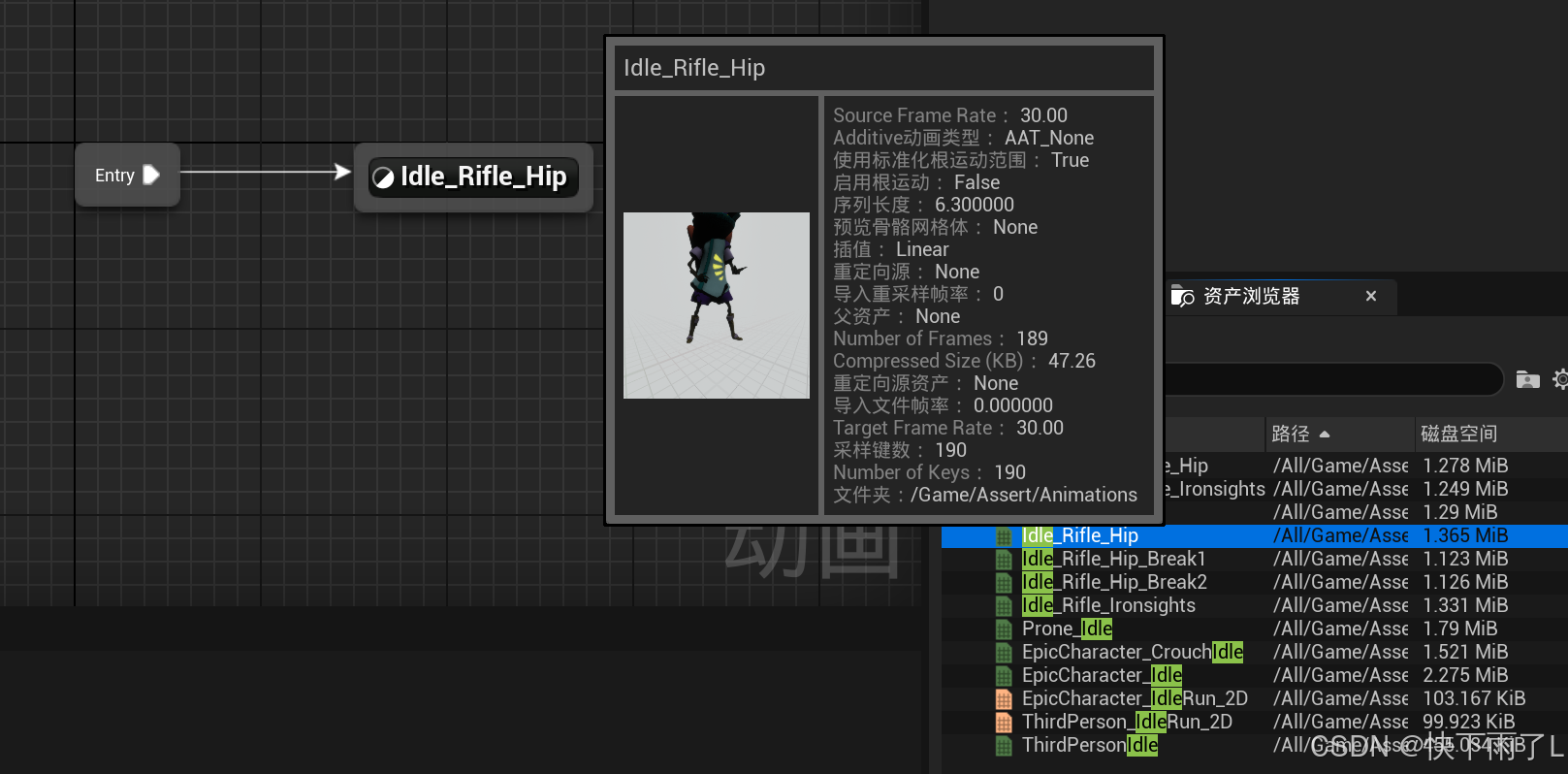

UE5学习笔记11-为拿取武器添加动画

一、一点说明 动画实例通过扩展为所有机器上的每个字符都存在动画蓝图,动画实例只能访问该计算机上的变量。 二、思路 我在武器组件中有一个武器类的指针,判断当前指针是否为空去判断当前角色是否装备武器 三、实现 1.在角色C类中添加是否装备武器的函…...

】)

68. 文本左右对齐【 力扣(LeetCode) 】

一、题目描述 给定一个单词数组 words 和一个长度 maxWidth ,重新排版单词,使其成为每行恰好有 maxWidth 个字符,且左右两端对齐的文本。 你应该使用 “贪心算法” 来放置给定的单词;也就是说,尽可能多地往每行中放置单…...

【中等】 猿人学web第一届 第6题 js混淆-回溯

文章目录 请求流程请求参数 加密参数定位r() 方法z() 方法 加密参数还原JJENCOde js代码加密环境检测_n("jsencrypt")12345 计算全部中奖的总金额请求代码注意 请求流程 请求参数 打开 调试工具,查看数据接口 https://match.yuanrenxue.cn/api/match/6 请…...

低、中、高频率段具体在不同应用中的范围是多少

1、低频率段(Low Frequency Range) ①建筑声学和噪声控制:通常将20 Hz 到 200 Hz 的频率范围视为低频段。在这一范围内,声音的波长较长,通常与低音(如重低音音乐)和建筑结构中的振动有关。 ②…...

Oxford Model600 Model400低温氦压缩机cryogenic helium compressor手侧

Oxford Model600 Model400低温氦压缩机cryogenic helium compressor手侧...

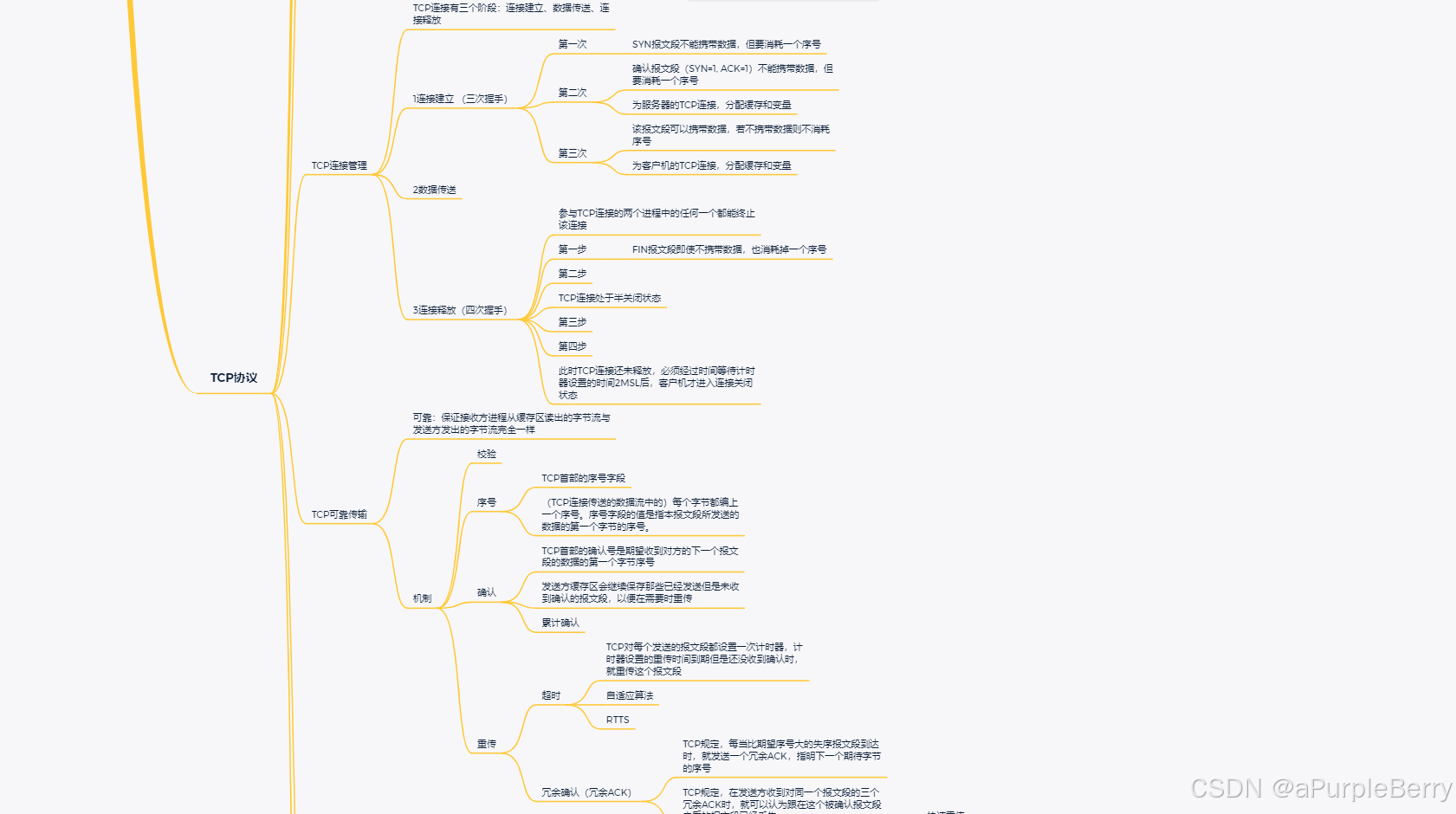

Golang面试题四(并发编程)

目录 1.Go常见的并发模型 2.哪些方法安全读写共享变量 3.如何排查数据竞争问题 4.Go有哪些同步原语 1. Mutex (互斥锁) 2. RWMutex (读写互斥锁) 3. Atomic 3.1.使用场景 3.2.整型操作 3.3.指针操作 3.4.使用示例 4. Channel 使用场景 使用示例 5. sync.WaitGr…...

计算机学生高效记录并整理编程学习笔记的方法

哪些知识点需要做笔记? 以下是我认为计算机学生大学四年可以积累的笔记。 ① 编程语言类(C语言CJava):保留课堂笔记中可运行的代码部分,课后debug跑一跑。学习语言初期应该多写代码(从仿写到自己写&#…...

【书生大模型实战】L2-LMDeploy 量化部署实践闯关任务

一、关卡任务 基础任务(完成此任务即完成闯关) 使用结合W4A16量化与kv cache量化的internlm2_5-7b-chat模型封装本地API并与大模型进行一次对话,作业截图需包括显存占用情况与大模型回复,参考4.1 API开发(优秀学员必做)使用Func…...

《编程学习笔记之道:构建知识宝库的秘诀》

在编程的浩瀚世界里,我们如同勇敢的探险家,不断追寻着知识的宝藏。而高效的笔记记录和整理方法,就像是我们手中的指南针,指引着我们在这片知识海洋中前行,不至于迷失方向。在这篇文章中,我们将深入探讨如何…...

DETR论文,基于transformer的目标检测网络 DETR:End-to-End Object Detection with Transformers

transformer的基本结构: encoder-decoder的基本流程为: 1)对于输入,首先进行embedding操作,即将输入映射为向量的形式,包含两部分操作,第一部分是input embedding:例如,在NLP领域&…...

untiy有渲染线程和逻辑线程嘛

之前我也这么认为,其实unity引擎是单线程的,当然后续的jobs不在考虑范围内 如果你在一个awake 或者 start方法中 延时,是会卡住主线程的 比如 其实游戏引擎有一个基础简单理解,那就是不断的进行一个循环,在这个周期循…...

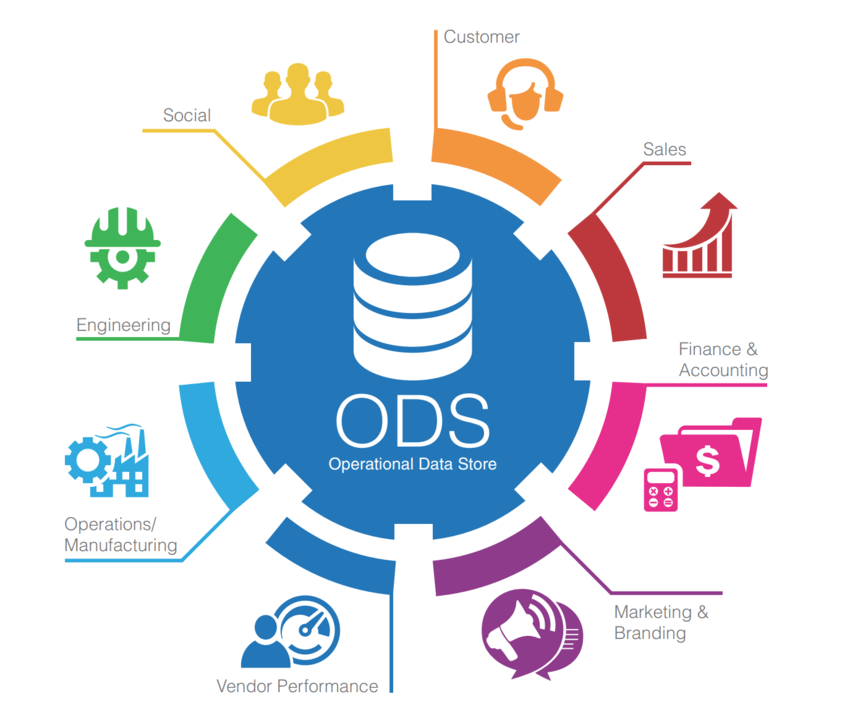

什么是数据仓库ODS层?为什么需要ODS层?

在大数据时代,数据仓库的重要性不言而喻。它不仅是企业数据存储与管理的核心,更是数据分析与决策支持的重要基础。而在数据仓库的各个层次中,ODS层(Operational Data Store,操作型数据存储)作为关键一环&am…...

permutation sequence(

60. Permutation Sequence class Solution:def getPermutation(self, n: int, k: int) -> str:def rec(k, l, ans, n):if(n0): return# 保留第一个位置,剩下数字的组合leftCom math.factorial(n - 1) #用于计算 (n-1) 的阶乘值ele k // leftCommod k % leftCo…...

PCL 三线性插值

文章目录 一、简介二、实现代码三、实现效果参考资料一、简介 三线性插值是一种在三维空间中使用已知数据点进行插值的方法。它是在立方体内的插值方法,通过利用立方体的八个顶点的已知值来估算立方体内任意一点的值。三线性插值扩展了一维的线性插值和二维的双线性插值。其基…...

手游刚开服就被攻击怎么办?如何防御DDoS?

开服初期是手游最脆弱的阶段,极易成为DDoS攻击的目标。一旦遭遇攻击,可能导致服务器瘫痪、玩家流失,甚至造成巨大经济损失。本文为开发者提供一套简洁有效的应急与防御方案,帮助快速应对并构建长期防护体系。 一、遭遇攻击的紧急应…...

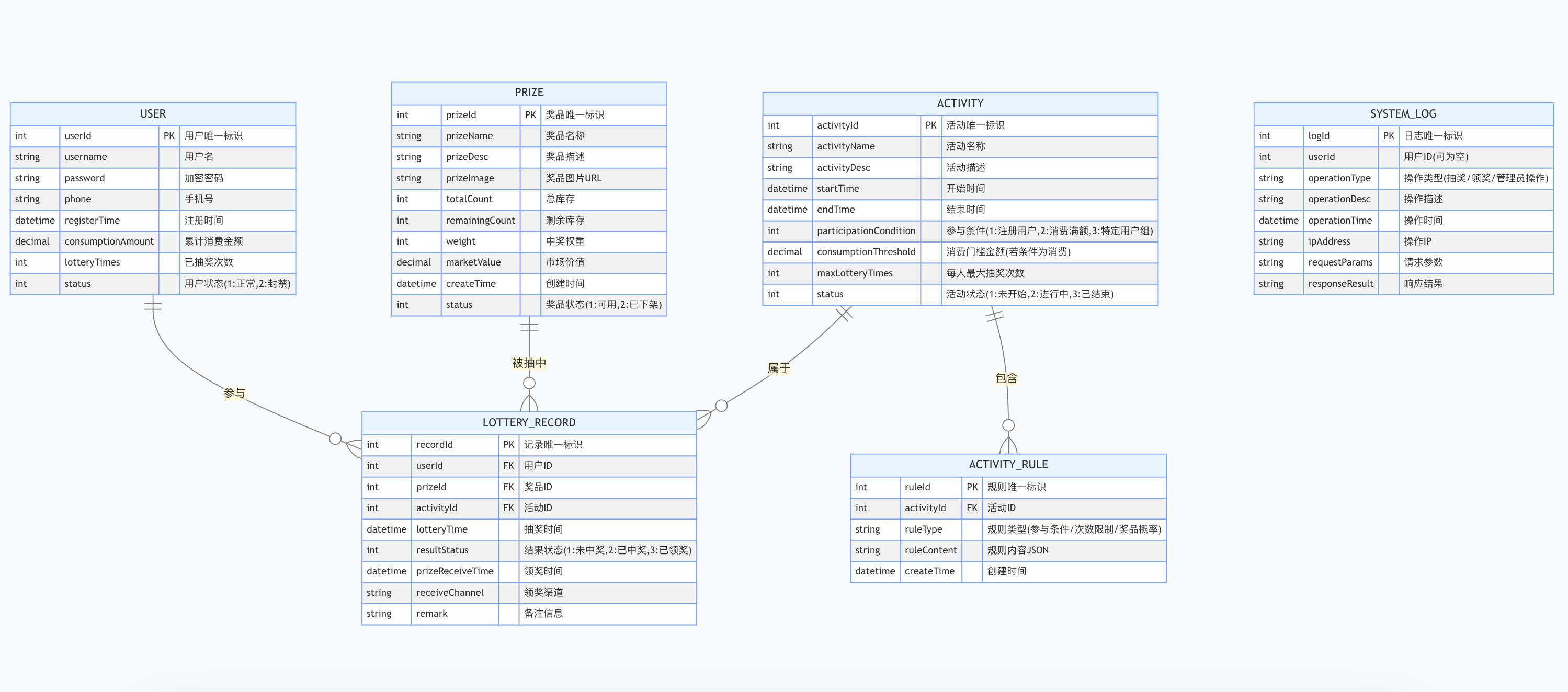

简易版抽奖活动的设计技术方案

1.前言 本技术方案旨在设计一套完整且可靠的抽奖活动逻辑,确保抽奖活动能够公平、公正、公开地进行,同时满足高并发访问、数据安全存储与高效处理等需求,为用户提供流畅的抽奖体验,助力业务顺利开展。本方案将涵盖抽奖活动的整体架构设计、核心流程逻辑、关键功能实现以及…...

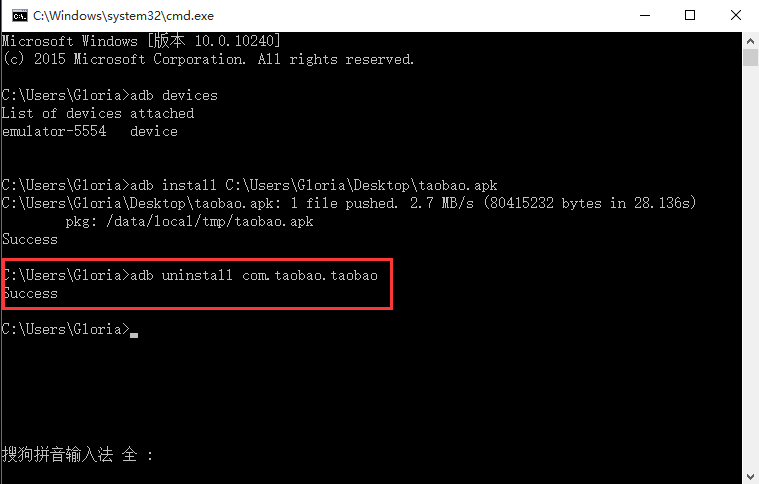

Appium+python自动化(十六)- ADB命令

简介 Android 调试桥(adb)是多种用途的工具,该工具可以帮助你你管理设备或模拟器 的状态。 adb ( Android Debug Bridge)是一个通用命令行工具,其允许您与模拟器实例或连接的 Android 设备进行通信。它可为各种设备操作提供便利,如安装和调试…...

Qt Widget类解析与代码注释

#include "widget.h" #include "ui_widget.h"Widget::Widget(QWidget *parent): QWidget(parent), ui(new Ui::Widget) {ui->setupUi(this); }Widget::~Widget() {delete ui; }//解释这串代码,写上注释 当然可以!这段代码是 Qt …...

Auto-Coder使用GPT-4o完成:在用TabPFN这个模型构建一个预测未来3天涨跌的分类任务

通过akshare库,获取股票数据,并生成TabPFN这个模型 可以识别、处理的格式,写一个完整的预处理示例,并构建一个预测未来 3 天股价涨跌的分类任务 用TabPFN这个模型构建一个预测未来 3 天股价涨跌的分类任务,进行预测并输…...

代理篇12|深入理解 Vite中的Proxy接口代理配置

在前端开发中,常常会遇到 跨域请求接口 的情况。为了解决这个问题,Vite 和 Webpack 都提供了 proxy 代理功能,用于将本地开发请求转发到后端服务器。 什么是代理(proxy)? 代理是在开发过程中,前端项目通过开发服务器,将指定的请求“转发”到真实的后端服务器,从而绕…...

MySQL 8.0 事务全面讲解

以下是一个结合两次回答的 MySQL 8.0 事务全面讲解,涵盖了事务的核心概念、操作示例、失败回滚、隔离级别、事务性 DDL 和 XA 事务等内容,并修正了查看隔离级别的命令。 MySQL 8.0 事务全面讲解 一、事务的核心概念(ACID) 事务是…...

水泥厂自动化升级利器:Devicenet转Modbus rtu协议转换网关

在水泥厂的生产流程中,工业自动化网关起着至关重要的作用,尤其是JH-DVN-RTU疆鸿智能Devicenet转Modbus rtu协议转换网关,为水泥厂实现高效生产与精准控制提供了有力支持。 水泥厂设备众多,其中不少设备采用Devicenet协议。Devicen…...

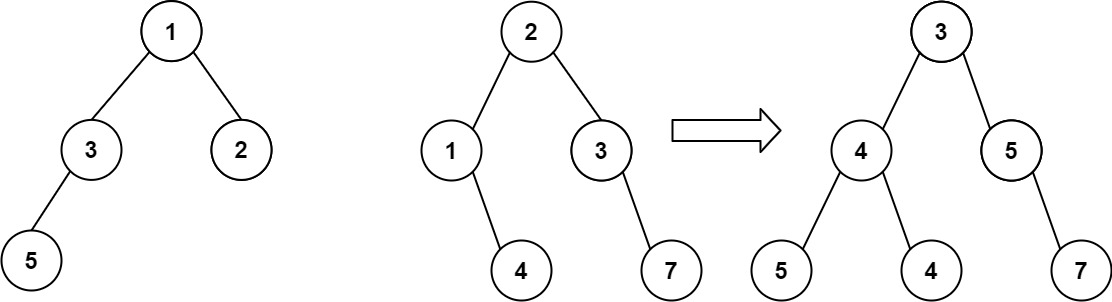

算法打卡第18天

从中序与后序遍历序列构造二叉树 (力扣106题) 给定两个整数数组 inorder 和 postorder ,其中 inorder 是二叉树的中序遍历, postorder 是同一棵树的后序遍历,请你构造并返回这颗 二叉树 。 示例 1: 输入:inorder [9,3,15,20,7…...

node.js的初步学习

那什么是node.js呢? 和JavaScript又是什么关系呢? node.js 提供了 JavaScript的运行环境。当JavaScript作为后端开发语言来说, 需要在node.js的环境上进行当JavaScript作为前端开发语言来说,需要在浏览器的环境上进行 Node.js 可…...