LAMM: Label Alignment for Multi-Modal Prompt Learning

Paper Reading Series Index

Contents

- Paper Reading Series Index

- Meaning of the Paper Title

- Abstract

- Introduction

- Related Work

- Vision Language Models

- Prompt Learning

- Methodology

- Preliminaries of CLIP

- Label Alignment

- Hierarchical Loss

- Parameter Space

- Feature Space

- Logits Space

- Total Loss

- Experiments

- Few-shot Settings

- Datasets

- Baselines

- Comparison to the State-of-the-art Methods

- Main Results on 11 Datasets

- Domain Generalization

- Combination with State-of-the-art Methods

- The Improvement of Incorporating LAMM

- Comparisons of Different LAMMs

- Class Incremental Learning

- Influence of Hierarchical Loss

- Ablation Experiments

- Ablations of Hierarchical Loss

- Initialization

- Vision Backbone

- Conclusion

- Acknowledgments

- 10. Zero-Shot, One-Shot, and Few-Shot Made Easy

Paper link

Meaning of the Paper Title

LAMM: label alignment for multi-modal prompt learning

Abstract

With the success of pre-trained visual-language (VL) models such as CLIP in visual representation tasks, transferring pre-trained models to downstream tasks has become a crucial paradigm. Recently, the prompt tuning paradigm, which draws inspiration from natural language processing (NLP), has made significant progress in the VL field. However, preceding methods mainly focus on constructing prompt templates for text and visual inputs, neglecting the gap in class label representations between the VL models and downstream tasks. To address this challenge, we introduce an innovative label alignment method named LAMM, which can dynamically adjust the category embeddings of downstream datasets through end-to-end training. Moreover, to achieve a more appropriate label distribution, we propose a hierarchical loss, encompassing the alignment of the parameter space, feature space, and logits space. We conduct experiments on 11 downstream vision datasets and demonstrate that our method significantly improves the performance of existing multi-modal prompt learning models in few-shot scenarios, exhibiting an average accuracy improvement of 2.31% compared to the state-of-the-art methods on 16 shots. Moreover, our methodology exhibits preeminence in continual learning compared to other prompt tuning methods. Importantly, our method is synergistic with existing prompt tuning methods and can boost the performance on top of them. Our code and dataset will be publicly available at https://github.com/gaojingsheng/LAMM.

Introduction

Building machines to comprehend multi-modal information in real-world environments is one of the primary goals of artificial intelligence, where vision and language are the two crucial modalities (Du et al. 2022). One effective implementation method is to pre-train a foundational vision-language (VL) model on a large-scale visual-text dataset and then transfer it to downstream application scenarios (Radford et al. 2021; Jia et al. 2021). Typically, VL models employ two separate encoders to encode image and text features, followed by the design of an appropriate loss function for training. However, fine-tuning extensively trained models is costly and intricate, which makes the question of how to effectively transfer pre-trained VL models to downstream tasks an inspiring and valuable issue.

Prompt learning offers an effective solution to this problem: it provides downstream tasks with corresponding textual descriptions based on human prior knowledge and can effectively enhance the zero-shot and few-shot recognition capability of VL models. Through trainable templates with a small number of task-specific parameters, the process of constructing templates is further automated via gradient descent instead of manual construction (Lester, Al-Rfou, and Constant 2021). Specifically, existing multi-modal prompt tuning methods (Zhou et al. 2022b,a; Khattak et al. 2022) use the frozen CLIP (Radford et al. 2021) model and design trainable prompts separately for the textual and visual encoders. These approaches ensure that VL models can be better transferred to downstream tasks without any changes to the VL model's parameters. However, they mainly focus on a prompt template that is applicable to all categories, overlooking the feature representation of each category.

The <CLASS> token in the text template is crucial in classifying an image into the proper category. For example, as depicted in Figure 1, llamas and alpacas are two animals that resemble each other closely. In CLIP, there exists a propensity to misclassify a llama as an alpaca owing to the overrepresentation of alpaca data in the pre-training dataset. By refining the text embedding position, CLIP can distinguish between these two species with the trained feature space. Hence, identifying an optimal representation for each category in downstream tasks within the VL model is crucial. In the field of NLP, there exists the soft verbalizer (Cui et al. 2022), which enables the model to predict the representation of <MASK> in the text template to represent the category of the original sentence on its own. Unlike in NLP, it is infeasible to task the text encoder of the VL model with predicting the image category directly. Nevertheless, we can optimize the category embeddings of the various categories within the downstream datasets to increase the similarity between each image and its corresponding category description.

Consequently, we introduce a label alignment technique named LAMM, which automatically searches for optimal embeddings through gradient optimization. To the best of our knowledge, the concept of a trainable category token is first proposed here for pre-trained VL models. Simultaneously, to prevent the semantic features of the entire prompt template from deviating too far, we introduce a hierarchical loss during our training phase. The hierarchical loss facilitates alignment of category representations among the parameter, feature, and logits spaces. With these operations, the generalization ability of the CLIP model is preserved in LAMM, which lets LAMM better distinguish different categories in downstream tasks while preserving the semantics of the original category descriptions. Furthermore, given that LAMM solely fine-tunes the label embeddings within the downstream dataset, it does not encounter the catastrophic forgetting that conventional methods typically suffer from during continual learning.

We conduct experiments on 11 datasets, covering a range of downstream recognition scenarios. In terms of models, we test the vanilla CLIP, CoOp (Zhou et al. 2022b), and MaPLe (Khattak et al. 2022), which currently perform best in multi-modal prompt learning. Extensive experiments demonstrate the effectiveness of the proposed method in few-shot learning and illuminate its merits in both domain generalization and continual learning. Furthermore, our approach, being compatible with prevailing multi-modal prompt techniques, amplifies their efficacy across downstream datasets, ensuring consistent enhancement.

Related Work

Vision Language Models

In recent years, the development of Vision-Language Pre-Trained Models (VL-PTMs) has made tremendous progress, as evidenced by models such as CLIP (Radford et al. 2021), ALIGN (Jia et al. 2021), LiT (Zhai et al. 2022) and FILIP (Yao et al. 2022). These VL-PTMs are pre-trained on large-scale image-text corpora and learn universal cross-modal representations, which are beneficial for achieving strong performance in downstream VL tasks. For instance, CLIP is pre-trained on massive collections of image-caption pairs sourced from the internet, utilizing a contrastive loss that brings the representations of matching image-text pairs closer while pushing those of non-matching pairs further apart. After the pre-training stage, CLIP has demonstrated exceptional performance in learning universal cross-modal representations for image recognition (Gao et al. 2021), object detection (Zang et al. 2022), image segmentation (Li et al. 2022) and visual question answering (Sung, Cho, and Bansal 2022).

Prompt Learning

Prompt learning adapts pre-trained language models (PLMs) by designing a prompt template that leverages the power of PLMs, especially in few-shot settings (Liu et al. 2021). With the emergence of large-scale VL models, numerous researchers have attempted to integrate prompt learning into VL scenarios, resulting in the development of prompt paradigms that are better adapted to these scenarios. CoOp (Zhou et al. 2022b) first introduces the prompt tuning approach to VL models through learnable prompt template words. CoCoOp (Zhou et al. 2022a) improves the performance on novel classes by incorporating instance-level image information. Unlike prompt engineering in text templates, VPT (Jia et al. 2022) inserts learnable parameters into the vision encoder. MaPLe (Khattak et al. 2022) appends a soft prompt to the hidden representations at each layer of the text and image encoders, resulting in new solid performance in few-shot image recognition.

Previous multi-modal prompts have primarily focused on the engineering of prompt templates for textual and visual inputs while neglecting the significance of label representations in such templates. However, label verbalizers have already been proven effective in few-shot text classification (Schick and Schütze 2021), where verbalizers aim to reduce the gap between model outputs and label words. To alleviate the expertise and workload required for constructing a manual verbalizer, Gao, Fisch, and Chen (2021) design search-based methods for better verbalizer choices during the training optimization process. Other studies (Hambardzumyan, Khachatrian, and May 2021; Cui et al. 2022) propose trainable vectors as soft verbalizers to replace label words, which eliminates the difficulty of searching the entire dictionary.

Hence, we introduce trainable vectors to substitute label words in multi-modal prompts. Our approach aims to align label representations in the downstream datasets with pre-trained VL models, reducing the discrepancy in category descriptions between downstream datasets and VL-PTMs.

Methodology

In this section, we introduce how our proposed LAMM can be incorporated into CLIP seamlessly, accompanied by our hierarchical loss. The whole architecture of LAMM and hierarchical alignment is shown in Figure 2.

Preliminaries of CLIP

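CLIP is a VL-PTM consisting of a vision encoder and a text encoder. The two encoders separately extract image and text information and map them into a common feature space R^d, in which the two feature spaces are well aligned. Given an input image x, the image encoder extracts the corresponding image representation I_x. For each downstream dataset with k classes, each class is filled into a manual prompt template such as "a photo of <CLASS>". The text encoder then generates a feature representation for each category. During training, CLIP maximizes the cosine similarity between an image representation and its corresponding category representation while minimizing the cosine similarity of unmatched pairs. During zero-shot inference, the prediction probability of the i-th category is computed as: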
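The formula referred to here is presumably the standard CLIP softmax over temperature-scaled cosine similarities. A hedged reconstruction, writing w_i for the text feature of the i-th class prompt (a symbol introduced here for illustration, not confirmed by the source), would be:

```latex
p(y = i \mid x) = \frac{\exp\left(\cos(w_i, I_x)/\tau\right)}{\sum_{j=1}^{k} \exp\left(\cos(w_j, I_x)/\tau\right)}
```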

where τ is the temperature parameter learned by CLIP and cos denotes cosine similarity.
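As a minimal sketch of this zero-shot prediction rule, the snippet below computes class probabilities from one image feature and k text features. The function name, the toy feature vectors, and the value `tau=0.01` are assumptions made for illustration; real features would come from CLIP's encoders.

```python
import numpy as np

def clip_zero_shot_probs(image_feat, text_feats, tau=0.01):
    """Softmax over temperature-scaled cosine similarities (tau is an assumed value)."""
    img = image_feat / np.linalg.norm(image_feat)
    txt = text_feats / np.linalg.norm(text_feats, axis=1, keepdims=True)
    logits = txt @ img / tau          # cos(text feature of class i, image feature) / tau
    logits -= logits.max()            # subtract the max for numerical stability
    probs = np.exp(logits)
    return probs / probs.sum()

# Toy stand-ins for encoder outputs: 3 class prompts in an 8-dimensional space.
text_feats = np.eye(3, 8)
image_feat = np.array([0.1, 1.0, 0.2, 0.0, 0.0, 0.0, 0.0, 0.0])  # closest to class 1
probs = clip_zero_shot_probs(image_feat, text_feats)
print(probs.argmax())
```

Because the features are normalized first, only the direction of each vector matters; the temperature controls how sharply the softmax concentrates on the most similar class.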

Label Alignment

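Although CLIP has strong zero-shot performance, providing downstream tasks with corresponding textual descriptions can effectively enhance CLIP's zero-shot and few-shot recognition ability. Previous work on prompt tuning of text templates mainly focuses on training "a photo of" while ignoring the optimization of "<CLASS>". To effectively align class labels in downstream tasks with the pre-trained model, we propose LAMM, which automatically optimizes label embeddings through end-to-end training. Taking CLIP as an example, LAMM on CLIP fine-tunes only the class embedding representations of the downstream task. The prompt template of LAMM thus becomes: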
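Judging from the surrounding description, the template presumably keeps "a photo of" and replaces <CLASS> with a learnable token. The symbol z_i follows the later mention of "a category template zi in Equation 2"; the exact typography is an assumption:

```latex
z_i = \text{``a photo of } \langle M_i \rangle \text{''}, \qquad i = 1, 2, \dots, k
```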

where <M_i> (i = 1, 2, ..., k) denotes the learnable token of the i-th category. Similar to Equation 1, the prediction probability of LAMM is computed as:
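Assuming the same softmax form as in zero-shot CLIP, and writing \tilde{w}_i for the text feature produced from the LAMM template of the i-th class (notation introduced here, not taken from the source), the probability would read:

```latex
p(y = i \mid x) = \frac{\exp\left(\cos(\tilde{w}_i, I_x)/\tau\right)}{\sum_{j=1}^{k} \exp\left(\cos(\tilde{w}_j, I_x)/\tau\right)}
```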

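During training, we update only the category vectors {<M_i>}, i = 1, ..., k, of each downstream dataset, which reduces the gap between an image representation and its corresponding category representation.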
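The idea of updating only the label embeddings while everything else stays frozen can be illustrated with a toy NumPy sketch. Everything here is a simplifying assumption: a fixed linear map `W` stands in for the frozen text encoder, `context` stands in for the fixed "a photo of" part, and plain dot-product logits replace temperature-scaled cosine similarity so that the gradient can be written by hand.

```python
import numpy as np

rng = np.random.default_rng(0)
d, k = 4, 3                               # toy feature size and number of classes
W = rng.normal(size=(d, d)) / 2.0         # hypothetical stand-in for the frozen text encoder
W_frozen = W.copy()                       # kept only to check that W is never modified
context = rng.normal(size=d)              # stand-in for the fixed template part
M = np.zeros((k, d))                      # trainable label embeddings, one row per class

images = np.eye(k, d)                     # one toy image feature per class
labels = np.arange(k)

def loss_and_grad(M):
    text = (context + M) @ W.T            # text feature of each class, shape (k, d)
    logits = images @ text.T              # dot-product similarity, shape (n, k)
    logits -= logits.max(axis=1, keepdims=True)
    p = np.exp(logits)
    p /= p.sum(axis=1, keepdims=True)
    n = len(labels)
    loss = -np.log(p[np.arange(n), labels]).mean()
    dlogits = p.copy()
    dlogits[np.arange(n), labels] -= 1.0
    dlogits /= n
    # logits[s, i] = images[s] . (W (context + M[i])), so the gradient reaches M only.
    grad_M = dlogits.T @ (images @ W)
    return loss, grad_M

initial_loss, _ = loss_and_grad(M)
for _ in range(50):
    _, grad = loss_and_grad(M)
    M -= 0.1 * grad                       # only the label embeddings are updated
final_loss, _ = loss_and_grad(M)
print(initial_loss, final_loss)
```

The encoder stand-in and the template part never receive an update, which mirrors the claim that LAMM leaves the pre-trained model's parameters untouched.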

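LAMM can therefore be applied to existing multi-modal prompt methods. Taking CoOp as an example, the difference between CoOp and vanilla CLIP is that CoOp replaces the prompt template with M learnable tokens. The prompt template of CoOp+LAMM thus becomes: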
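Following CoOp's usual notation for learnable context tokens, the combined template would plausibly look as follows. The [V]_m symbols are an assumption borrowed from CoOp, and M here counts the context tokens, which is distinct from the label token <M_i>:

```latex
z_i = [V]_1 \, [V]_2 \cdots [V]_M \, \langle M_i \rangle, \qquad i = 1, 2, \dots, k
```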

By replacing class representations with trainable vectors, our method can be integrated into any existing multi-modal prompt method.

Hierarchical Loss

A well-aligned feature space is the key to the strong zero-shot ability of CLIP, which also facilitates the learning of downstream tasks. Given that LAMM does not introduce any modifications or additions to the CLIP model's parameters, the image representation within the aligned feature space remains fixed, while the trainable embedding of each category changes the text representation within the feature space. Nevertheless, a single text representation corresponds to multiple images of a given category in the downstream datasets, even though the entire training process is conducted under few-shot settings. This situation may lead to overfitting of the trainable class embeddings to the limited number of images in the training set. Hence, we propose a hierarchical loss (HL) to safeguard the generalization ability in the parameter space, feature space, and logits space.

Parameter Space

To mitigate the risk of overfitting in models, numerous machine learning techniques employ parameter regularization to enhance the generalization ability on unseen samples. In this regard, the weight consolidation (WC) (Kirkpatrick et al. 2017) loss is employed as follows:
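The simplest reading of a weight consolidation penalty is a squared distance between the trainable parameters θ and a reference copy θ_0. The unweighted form below is an assumption; the original formulation may weight individual terms differently:

```latex
\mathcal{L}_{WC} = \sum_{j} \left(\theta_j - \theta_{0,j}\right)^2
```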

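where θ denotes the trainable parameters of the current model and θ_0 denotes the reference parameters. In LAMM, θ represents the trainable label embeddings and θ_0 represents the original label embeddings. Although parameter regularization can address overfitting, excessive regularization may hinder the model's ability to fully capture the features and patterns present in the training data. We set the coefficient of L_WC to be inversely proportional to the number of training shots, so that fewer shots are accompanied by stricter regularization in the parameter space.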

Feature Space

In addition to the parameter space, it is crucial for the text feature of our trained category to align with the characteristics of the training images. During the training process, the text feature of our trained category gradually converges towards the characteristics present in the training images. However, if the representations of the few-shot training images for a particular category do not align with those of the entire image dataset, the label semantics can overfit to specific samples. For example, consider the label embedding of the "llama" image illustrated in Figure 2. The label embedding may overfit to the background grass information, even though llamas are not always associated with grass. In order to mitigate the overfitting of text features for each category, we employ a text feature alignment loss to restrict the optimization region of the text feature. Drawing inspiration from previous work on similarity at the semantic level (Gao, Yao, and Chen 2021), we employ a cosine similarity loss for alignment. In this approach, for a category template z_i in Equation 2, the original prompt template y_i from Equation 1 serves as the center of its optimization region. The cosine loss is formulated as follows:
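A common way to write such an alignment term is one minus the average cosine similarity between the text feature of each trained template and that of its original template. Writing \tilde{w}_i for the text feature of z_i and w_i for that of y_i (both symbols introduced here), one possible form is given below; the averaging over classes is an assumption:

```latex
\mathcal{L}_{COS} = 1 - \frac{1}{k} \sum_{i=1}^{k} \cos\left(\tilde{w}_i, w_i\right)
```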

Logits Space

The strong generalization ability of CLIP plays a crucial role in the effectiveness of multi-modal prompting methods within few-shot scenarios. While the previous two losses enhance the generalization capability of LAMM through regularization in the parameter and feature spaces, it is also desirable to minimize the distribution shift of the logits between image representations and different text representations relative to zero-shot CLIP. Therefore, we introduce a knowledge distillation loss in the classification logits space, which allows for the transfer of generalization knowledge from CLIP to LAMM. The distillation loss can be formulated as follows:
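Knowledge distillation between two classifiers is typically written as a divergence between their predicted class distributions. Assuming a KL divergence with zero-shot CLIP as the teacher (both the direction and the averaging over the N training images are assumptions), a sketch is:

```latex
\mathcal{L}_{KD} = \frac{1}{N} \sum_{x} \sum_{i=1}^{k} p_{\text{CLIP}}(y = i \mid x) \, \log \frac{p_{\text{CLIP}}(y = i \mid x)}{p_{\text{LAMM}}(y = i \mid x)}
```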

Total Loss

To train LAMM for downstream tasks, a cross-entropy (CE) loss is applied to the similarity score, in the same way as when fine-tuning the CLIP model:
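For a training image x with ground-truth class c, the cross-entropy over temperature-scaled similarities would take the usual form, where \tilde{w}_i denotes the text feature of the i-th LAMM template (notation assumed here):

```latex
\mathcal{L}_{CE} = -\log \frac{\exp\left(\cos(\tilde{w}_c, I_x)/\tau\right)}{\sum_{j=1}^{k} \exp\left(\cos(\tilde{w}_j, I_x)/\tau\right)}
```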

where τ is a parameter learned during pre-training. In this way, the total loss is:
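Given three weighting hyperparameters and the statement that the weight of the WC term scales inversely with the number of shots, the combination is presumably a weighted sum. Assigning λ2 to the cosine term and λ3 to the distillation term follows the order in which the losses are presented and is an assumption:

```latex
\mathcal{L} = \mathcal{L}_{CE} + \lambda_1 \mathcal{L}_{WC} + \lambda_2 \mathcal{L}_{COS} + \lambda_3 \mathcal{L}_{KD}
```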

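where λ1, λ2, and λ3 are hyperparameters. To avoid redundant hyperparameter tuning, we empirically set λ1 = 1/n, λ2 = 1, and λ3 = 0.05, where n denotes the number of training shots.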
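To make the weighting concrete, here is a minimal NumPy sketch that combines the four terms with λ1 = 1/n, λ2 = 1, and λ3 = 0.05. The individual loss forms (squared distance, one minus mean cosine, KL divergence from CLIP to LAMM) and the function name `hierarchical_loss` are assumptions for illustration, not the authors' implementation.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def hierarchical_loss(logits, clip_logits, labels, theta, theta_ref,
                      text_feat, text_feat_ref, n_shots):
    """Cross-entropy plus the three regularizers; inputs are temperature-scaled logits."""
    p = softmax(logits)              # LAMM predictions
    p_clip = softmax(clip_logits)    # zero-shot CLIP predictions (teacher)
    ce = -np.mean(np.log(p[np.arange(len(labels)), labels]))
    wc = np.sum((theta - theta_ref) ** 2)                      # parameter space
    cos = np.sum(text_feat * text_feat_ref, axis=-1) / (
        np.linalg.norm(text_feat, axis=-1) * np.linalg.norm(text_feat_ref, axis=-1))
    cos_loss = 1.0 - np.mean(cos)                              # feature space
    kd = np.mean(np.sum(p_clip * (np.log(p_clip) - np.log(p)), axis=-1))  # logits space
    lam1, lam2, lam3 = 1.0 / n_shots, 1.0, 0.05
    total = ce + lam1 * wc + lam2 * cos_loss + lam3 * kd
    return {"ce": ce, "wc": wc, "cos": cos_loss, "kd": kd, "total": total}

# Toy inputs: 2 images, 3 classes, 4-dimensional label embeddings and text features.
logits = np.array([[2.0, 0.5, -1.0], [0.1, 1.5, 0.3]])
labels = np.array([0, 1])
theta_ref = np.ones((3, 4))
feat_ref = np.arange(12.0).reshape(3, 4) + 1.0
out = hierarchical_loss(logits, logits.copy(), labels, theta_ref + 1.0, theta_ref,
                        feat_ref, feat_ref.copy(), n_shots=16)
print(out["wc"], out["total"] - out["ce"])
```

With 16 shots the parameter-space penalty is scaled by 1/16, whereas with a single shot it would enter at full weight, which matches the stated intent of regularizing more strictly when fewer samples are available.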

Experiments

Few-shot Settings

Datasets

We follow the datasets used in previous works (Zhou et al. 2022b; Khattak et al. 2022) and evaluate our method on 11 image classification datasets, including Caltech101 (Fei-Fei, Fergus, and Perona 2007), ImageNet (Deng et al. 2009), OxfordPets (Parkhi et al. 2012), Cars (Krause et al. 2013), Flowers102 (Nilsback and Zisserman 2008), Food101 (Bossard, Guillaumin, and Gool 2014), FGVC (Maji et al. 2013), SUN397 (Xiao et al. 2010), UCF101 (Soomro, Zamir, and Shah 2012), DTD (Cimpoi et al. 2014) and EuroSAT (Helber et al. 2019). We also follow Radford et al. (2021) and Zhou et al. (2022b) in setting up the few-shot evaluation protocol for our few-shot learning experiments. Specifically, we use 1, 2, 4, 8, and 16 shots for training, respectively, and evaluate the models on the full test sets. All experimental results are averaged over runs with seeds 1, 2, and 3.
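The few-shot protocol above can be sketched as sampling a fixed number of training images per class under a given seed. The helper name `sample_few_shot` and the toy label list are hypothetical; the actual data loaders used in the experiments are not described in the text.

```python
import random
from collections import defaultdict

def sample_few_shot(labels, n_shots, seed):
    """Return indices of n_shots randomly chosen training samples for every class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    subset = []
    for label in sorted(by_class):
        subset.extend(rng.sample(by_class[label], n_shots))
    return subset

# Toy training set: 60 samples spread evenly over 3 classes.
train_labels = [i % 3 for i in range(60)]
for n_shots in (1, 2, 4, 8, 16):
    for seed in (1, 2, 3):
        subset = sample_few_shot(train_labels, n_shots, seed)
        # A model would be trained on `subset` here and evaluated on the full test set;
        # the reported number is the mean accuracy over the three seeds.
        assert len(subset) == 3 * n_shots
```

Seeding the sampler makes each few-shot split reproducible, so that methods compared under the same seed see the same training images.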

Baselines

We compare the results across LAMM, CoOp, and MaPLe. Furthermore, we incorporate LAMM into CoOp and MaPLe to demonstrate the compatibility of LAMM. To maintain fair controlled experiments, all prompt templates in our experiments are initialized from "a photo of <CLASS>". The pre-trained model adopted here is ViT-B/16 CLIP, since MaPLe can only be applied to transformer-based VL-PTMs. We keep the training parameters of each model (e.g., learning rate, epochs, and other prompt parameters) at their original settings, where CoOp is trained for 50 epochs and MaPLe for 5. For vanilla CLIP + LAMM, we follow the settings of CoOp. All of our experiments are conducted on a single NVIDIA A100. The corresponding hyper-parameters are fixed across all datasets in our work; adjusting them for different training shots and datasets could further boost performance.

Comparison to the State-of-the-art Methods

Main Results on 11 Datasets

We compare LAMM, CoOp, MaPLe and zero-shot CLIP on the 11 datasets mentioned above, as shown in Figure 3. We observe that LAMM yields the best performance at every shot count compared to the state-of-the-art multi-modal prompt methods. LAMM only replaces <CLASS> for each category with a trainable vector, while CoOp replaces "a photo of" with trainable vectors. However, compared to CLIP, LAMM demonstrates an enhancement of +1.02, +2.11, +2.65, +2.57, and +2.57(%) on 1, 2, 4, 8, and 16 shots, respectively. This result highlights the importance of fine-tuning label embeddings for downstream tasks, in contrast to the previous focus solely on prompt template learning in multi-modal prompt learning. It suggests that label embeddings are even more crucial than prompt templates for the transferability of pre-trained models to downstream tasks. Furthermore, as the number of shots increases, the improvement observed with LAMM becomes clearer. This can be attributed to the challenge LAMM faces in learning representative embeddings for categories in downstream tasks when provided with fewer shots.

Domain Generalization

We evaluate the cross-dataset generalization ability of LAMM by training it on ImageNet and evaluating on ImageNetV2 (Recht et al. 2019) and ImageNet-Sketch (Wang et al. 2019), following Khattak et al. (2022). The evaluation datasets share the same categories as the training set, but the three datasets differ in domain distribution. The experimental results are shown in Table 1. LAMM achieves the best overall performance: it surpasses MaPLe (Khattak et al. 2022) by 1.06% on ImageNetV2 but falls behind MaPLe by 1.04% on ImageNet-Sketch. On average, LAMM exceeds MaPLe by 0.91%. This indicates that LAMM is also capable of handling out-of-distribution tasks.

Combination with State-of-the-art Methods

The Improvement of Incorporating LAMM

We compare the existing methods CoOp and MaPLe with and without LAMM, which uses trainable vectors and a hierarchical loss function. From Figure 3, we observe that LAMM notably enhances performance in few-shot scenarios, except in 1-shot learning. For CoOp, the average accuracy changes across 11 datasets with 1, 2, 4, 8, and 16 shots are +0.77, +2.13, +3.03, +2.98, and +3.17(%), respectively. For MaPLe, the changes are -0.71, +1.79, +1.29, +1.72, and +2.15(%). The incorporation of LAMM has yielded exceedingly favorable outcomes across all three methodologies, reaffirming LAMM's status as an exceptional few-shot learner.

In addition, the gain from LAMM grows significantly as the number of shots increases, because our approach primarily operates on the label representation. When the number of training samples is small, as in 1-shot learning, the label representation learns numerous specific features associated with the single image, a significant portion of which is noise rather than a representation of the entire category. For instance, the significant reduction in accuracy of MaPLe with LAMM in 1-shot learning is primarily due to a 5.38% decrease in accuracy on EuroSAT (Helber et al. 2019). EuroSAT is a satellite-image dataset, which suggests that the gap between the feature spaces of its images and text is considerable. Consequently, LAMM is more susceptible to overfitting on the features of limited-sample images. Moreover, MaPLe has more trainable parameters than CoOp, which makes it more prone to overfitting than CoOp.

Comparisons of Different LAMMs

After incorporating LAMM into previous methods, we evaluate the performance of LAMM, CoOp+LAMM, and MaPLe+LAMM. From Table 3, we observe that CoOp+LAMM achieves the best results. More specifically, LAMM alone differs from CoOp+LAMM by +0.25, -0.02, -0.38, -0.41, and -0.60(%) on 1, 2, 4, 8, and 16 shots, respectively. We conclude that CoOp+LAMM achieves superior results because CoOp can search for a soft template that is more effective than "a photo of" without significantly altering the semantic premise of the prompt template. In contrast, MaPLe introduces more trainable parameters, which may cause the semantic meaning of the soft prompt template to deviate further from that of "a photo of".

Class Incremental Learning

Since LAMM exclusively modifies the training class embeddings without altering any parameters of the CLIP model, it is confined to using the unmodified CLIP and the untrained novel class embeddings during base-to-novel testing. In this way, LAMM's performance on novel classes is the same as that of zero-shot CLIP. However, except for MaPLe, which exceeds zero-shot CLIP by 0.92% on novel-class testing, the other prompt tuning methods fall behind zero-shot CLIP.

Apart from base-to-novel testing, incremental learning is also a significant issue when aiming to broaden a model's knowledge base. Following MaPLe, we partition the datasets into base and novel classes, designating the base classes as Set 1 and the novel ones as Set 2. Initially, each model is trained on Set 1, after which training continues on Set 2. Finally, we evaluate the performance of each model on both Set 1 and Set 2. The results are shown in Table 2. The term "Degradation" refers to the disparity in performance on Set 1 before and after incremental training on Set 2. Such a decline represents the forgetting of prior tasks during the incremental learning process.
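The degradation measure described above reduces to a difference of two accuracies on Set 1. The sketch below assumes the sign convention in which a negative value means forgetting; the helper names and the example numbers are purely illustrative and are not results from the paper.

```python
def degradation(acc_set1_before, acc_set1_after):
    """Change in Set 1 accuracy after continued training on Set 2 (negative = forgetting)."""
    return acc_set1_after - acc_set1_before

def mean_degradation(pairs):
    """Average degradation over (before, after) accuracy pairs, e.g. one pair per dataset."""
    return sum(degradation(before, after) for before, after in pairs) / len(pairs)

# Illustrative accuracies only, not taken from Table 2.
example_pairs = [(80.0, 74.5), (90.0, 88.5)]
print(mean_degradation(example_pairs))
```

A method whose Set 1 parameters are left untouched by training on Set 2 would score a degradation close to zero under this definition.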

It is evident that LAMM exhibits superior performance on both Set 1 and Set 2, particularly on Set 1. The observed decline in performance of CoOp and MaPLe on Set 1 can be attributed to forgetting during continual learning. For CoOp in particular, performance on Set 1 closely approximates that of zero-shot CLIP. This indicates that the adaptable text template is highly sensitive to variations in testing tasks. Consequently, CoOp requires an entirely new template upon encountering novel classes. Although MaPLe outperforms CoOp, it still undergoes an average degradation of -5.92%.

As LAMM solely manipulates the embeddings of new classes while preserving the existing class embeddings, the performance of LAMM on previous classes remains stable during continual training on new classes. Conversely, for CoOp and MaPLe, when the number of categories to be learned increases, the model's performance declines significantly unless it is retrained from scratch on all categories. This unique strength of LAMM in incremental learning makes it well suited to deployment in downstream applications.

Influence of Hierarchical Loss

To validate the effect of the proposed loss function, we conduct a comprehensive study on three models across all 11 datasets. For comparison, we consider LAMM, CoOp+LAMM, and MaPLe+LAMM with and without the hierarchical loss. Table 3 presents the results averaged over three runs. We observe that the results differ significantly once the loss is introduced, with the hierarchical loss proving essential in enhancing the performance of LAMM. Furthermore, the improvement brought by the hierarchical loss becomes more apparent when fewer shots are involved. This is because fine-tuning to align the label with few samples, especially with a single image, can cause the label to overfit to other noise information within the image. Since the hierarchical loss imposes strong constraints over the parameter space, text feature space, and logits space, it ensures that the semantic meaning of the label does not deviate too far from its original meaning after training, and it therefore improves LAMM's performance more significantly when fewer samples are available.

Ablation Experiments

Ablations of Hierarchical Loss

The hierarchical loss is composed of losses at three levels: parameter space, feature space, and logits space. We therefore conducted an ablation study on these levels in the 16-shot setting, as shown in Table 4. Each of the three losses clearly contributes positively to the final result. However, their effects are not simply additive, because the loss in one space can influence the characteristics of another space. The common objective of these losses is to retain the generalization capability of CLIP within LAMM and to prevent overfitting to specific samples.
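To make the three-level structure concrete, here is a hedged sketch of a regularizer with one switch per level, mirroring the ablation setup. The specific term forms (squared L2 distance for parameter space, one minus cosine similarity for feature space, KL divergence between softmax distributions for logits space), the weights in `lambdas`, and all function names are assumptions for illustration; they are not taken from the text above. In training, this regularizer would be added to the usual classification loss.

```python
import math


def l2_sq(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def softmax(z):
    """Numerically stable softmax."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [v / total for v in exps]


def kl_div(p, q):
    """KL(p || q) for two discrete distributions with positive entries in q."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def hierarchical_loss(emb, emb0, feat, feat0, logits, logits0,
                      lambdas=(1.0, 1.0, 1.0),
                      use_param=True, use_feat=True, use_logits=True):
    """Sum the enabled regularizers; *0 arguments are the frozen reference values."""
    lam_param, lam_feat, lam_logits = lambdas  # hypothetical weights
    total = 0.0
    if use_param:   # parameter space: trained vs. original label embedding
        total += lam_param * l2_sq(emb, emb0)
    if use_feat:    # feature space: trained vs. original text feature
        total += lam_feat * (1.0 - cosine(feat, feat0))
    if use_logits:  # logits space: reference vs. trained prediction
        total += lam_logits * kl_div(softmax(logits0), softmax(logits))
    return total
```

Setting one of the `use_*` flags to `False` reproduces a single ablation row in spirit: the corresponding constraint is simply dropped from the sum.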

Initialization

We compare initialization from category words with random initialization. The former uses the original word embedding of each category as the initial category embedding, while the latter initializes the category embeddings randomly. Table 5 shows the results for LAMM across the 11 datasets, which indicate that random initialization is inferior to category word initialization. This illustrates that learning a representation for each category from scratch is quite challenging in few-shot training, and the gap between random and word initialization widens as the number of shots decreases.
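The two initialization schemes compared above can be sketched as follows. This is an illustrative sketch only: the function name `init_category_embeddings`, the Gaussian distribution, and the standard deviation of 0.02 are assumptions, and `word_embeddings` stands in for whatever per-category word embeddings the text encoder provides.

```python
import random


def init_category_embeddings(word_embeddings, mode="word", std=0.02, seed=0):
    """Return initial trainable category embeddings, one vector per category.

    mode="word":   copy the original word embedding of each category.
    mode="random": draw each entry from a zero-mean Gaussian (assumed std).
    """
    if mode == "word":
        return [list(vec) for vec in word_embeddings]
    if mode == "random":
        rng = random.Random(seed)
        return [[rng.gauss(0.0, std) for _ in vec] for vec in word_embeddings]
    raise ValueError(f"unknown mode: {mode!r}")


# Toy word embeddings for two categories (made-up values).
word_embeddings = [[0.3, -0.1, 0.7], [0.0, 0.5, -0.2]]
from_words = init_category_embeddings(word_embeddings, mode="word")
from_noise = init_category_embeddings(word_embeddings, mode="random")
```

With word initialization the trainable embeddings start at a point that already carries the category's meaning, so few-shot training only has to adjust them. Random initialization must recover that meaning from a handful of images.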

Vision Backbone

Figure 4 shows the average results on the 11 datasets for various visual backbones, including CNN and ViT architectures. LAMM consistently improves the performance of CoOp across the different backbones, which further indicates that it enhances the VL model's transferability to downstream tasks in a stable manner.

Conclusion

Compared to traditional few-shot learning methods, prompt learning based on VL-PTMs has demonstrated strong transferability to downstream tasks. Our research shows that, in addition to prompt template learning, reducing the gap between VL-PTMs and downstream task label representations is also an important research problem. This paper provides a comprehensive study of how to align label representations in downstream tasks with VL-PTMs in a plug-and-play way. The proposed LAMM significantly improves the performance of previous multi-modal prompt methods in few-shot scenarios. In particular, simply incorporating LAMM into vanilla CLIP achieves better results than previous multi-modal prompt methods. LAMM is also robust in out-of-distribution scenarios and superior in incremental learning. These findings demonstrate the potential of optimizing the transfer of VL-PTMs to downstream tasks, not only image recognition but also visual semantic tasks such as image segmentation and object detection. We hope that the insights from our work on label representation learning will facilitate the development of more effective transfer methods for VL-PTMs.

Acknowledgments

This research was supported by the National Natural Science Foundation of China (Grant No. 61977045).

10. Zero-Shot, One-Shot, and Few-Shot Made Easy

https://zhuanlan.zhihu.com/p/696054303

相关文章:

LAMM: Label Alignment for Multi-Modal Prompt Learning

系列论文研读目录 文章目录 系列论文研读目录文章题目含义AbstractIntroductionRelated WorkVision Language ModelsPrompt Learning MethodologyPreliminaries of CLIPLabel AlignmentHierarchical Loss 分层损失Parameter Space 参数空间Feature Space 特征空间Logits Space …...

mac编译opencv 通用架构库的记录

1,通用架构 (x86_64;arm64)要设置的配置项: CPU_BASELINE CPU_DISPATCH 上面这两个我设置成SSE_3,其他选项未尝试,比如不设置。 CMAKE_OSX_ARCHITECTURES:x86_64;arm64 WITH_IPP:不勾选 2,contrib库的添加: 第一次…...

Python 向IP地址发送字符串

Python 向IP地址发送字符串 在网络编程中,使得不同设备间能够进行数据传输是一项基本任务。Python提供了强大的库,帮助开发者轻松地实现这种通信。本文将介绍如何使用Python通过UDP协议向特定的IP地址发送字符串信息。 UDP协议简介 UDP(用…...

上升响应式Web设计:纯HTML和CSS的实现技巧-1

响应式Web设计(Responsive Web Design, RWD)是一种旨在确保网站在不同设备和屏幕尺寸下都能良好运行的网页设计策略。通过纯HTML和CSS实现响应式设计,主要依赖于媒体查询(Media Queries)、灵活的布局、可伸缩的图片和字…...

利用java结合python实现gis在线绘图,主要技术java+python+matlab+idw+Kriging

主要技术javapythonmatlabidwKriging** GIS中的等值面和等高线绘图主要用于表达连续空间数据的分布情况,特别适用于需要展示三维空间中某个变量随位置变化的应用场景。 具体来说,以下是一些适合使用GIS等值面和等高线绘图的场景: 地形与地貌…...

Android全面解析之context机制(三): 从源码角度分析context创建流程(下)

前言 前面已经讲了什么是context以及从源码角度分析context创建流程(上)。限于篇幅把四大组件中的广播和内容提供器的context获取流程放在了这篇文章。广播和内容提供器并不是context家族里的一员,所以他们本身并不是context,因而…...

执行docker compose命令出现 Additional property include is not allowed

问题背景 在由docker-compose.yml的文件目录下执行命令 docker compose up -d 出现错误 Additional ininoperty include is not allowed 原因 我的docker-compose.yml 文件中出现了include标签旧版本的docker-compose 不支持此标签 解决办法 下载支持的docker-compose 解决…...

STM32通过I2C硬件读写MPU6050

目录 STM32通过I2C硬件读写MPU6050 1. STM32的I2C外设简介 2. STM32的I2C基本框图 3. STIM32硬件I2C主机发送流程 10位地址与7位地址的区别 7位主机发送的时序流程 7位主机接收的时序流程 4. STM32硬件与软件的波形对比 5. STM32配置硬件I2C外设流程 6. STM32的I2C.h…...

ubuntu2204-中文输入法-pycharm-python-django开发环境搭建

文章目录 1.系统常用设置1.1.安装中文输入法1.2.配置输入法1.3.卸载输入法1.4.配置镜像源2.java安装3.pycharm安装与启动4.卸载ubuntu2204默认版本5.安装Anaconda5.1.安装软件依赖包5.2.安装命令5.3.激活安装5.4.常用命令5.5.修改默认启动源6.安装mysql6.1.离线安装mysql6.2.在…...

【学习笔记】Matlab和python双语言的学习(一元线性回归)

文章目录 前言一、一元线性回归回归分析的一般步骤一元线性回归的基本形式回归方程参数的最小二乘法估计对回归方程的各种检验估计标准误差的计算回归直线的拟合优度判定系数显著性检验 二、示例三、代码实现----Matlab四、代码实现----python回归系数的置信区间公式残差的置信…...

LeetCode //C - 316. Remove Duplicate Letters

316. Remove Duplicate Letters Given a string s, remove duplicate letters so that every letter appears once and only once. You must make sure your result is the smallest in lexicographical order among all possible results. Example 1: Input: s “bcabc”…...

【ARM+Codesys 客户案例 】RK3568/A40i/STM32+CODESYS在工厂自动化中的应用:PCB板焊接机

现代化生产中,电子元件通常会使用自动化设备来进行生产,例如像PCB(印刷电路板)的组装。但是生产过程中也会面临一些问题,类似于如何解决在PCB板上牢固、精准地安装各种组件呢?IBL Lttechnik GmbH公司的CM80…...

【二分查找】--- 初阶题目赏析

Welcome to 9ilks Code World (๑•́ ₃ •̀๑) 个人主页: 9ilk (๑•́ ₃ •̀๑) 文章专栏: 算法Joureny 上篇我们讲解了关于二分的朴素模板和边界模板,本篇博客我们试着运用这些模板。 🏠 搜索插入位置 📌 题目…...

【PostgreSQL003】PostgreSQL数据表空间膨胀,磁盘爆满,应用宕机(经验总结,已更新)

1.一直以来想写下基于PostgreSQL的系列文章,作为较火的数据ETL工具,也是日常项目开发中常用的一款工具,最近刚好挤时间梳理、总结下这块儿的知识体系。 2.熟悉、梳理、总结下PostgreSQL数据库相关知识体系。空间膨胀(主键、外键、…...

C语言第20天笔记

文件操作 概述 什么是 文件 文件时保存在外存储器上(一般代指磁盘,也可以是U盘、移动硬盘等)的数据的集合。 文件操作体现在哪几个方面 1. 文件内容的读取 2. 文件内容的写入 数据的读取和写入可被视为针对文件进行输入和输出的操作&a…...

为什么穷大方

为什么有些人明明很穷,却非常的大方呢? 因为他们认知太低,根本不懂钱的重要性,总是想着及时享乐,所以一年到头也存不了什么钱。等到家人孩子需要用钱的时候,什么也拿不出来,还到处去求人。 而真…...

HiveSQL实战——大数据开发面试高频SQL题

查询每个区域的男女用户数 0 问题描述 每个区域内男生、女生分别有多少个 1 数据准备 use wxthive; create table t1_stu_table (id int,name string,class string,sex string ); insert overwrite table t1_stu_table values(4,张文华,二区,男),(3,李思雨,一区,女),(1…...

RabbitMQ集群 - 普通集群搭建、宕机情况

文章目录 RabbitMQ 普通集群概述集群搭建数据准备启动容器宕机情况 RabbitMQ 普通集群 概述 1)普通模式中所有节点没有主从之分,所有节点的元数据(交换机、队列、绑定等)都是一致的. 例如只要有任意一个节点上面 新增交换机&…...

xssDOM型练习

文章目录 例1要求 例2代码解析方法 例3例4例5例6例7例8 例1 本题通过get接收并传递参数,所有参数不经过过滤直接放入h2标签里面。 要求 1.需要页面弹出1337 2.不能与用户交互 官方认为innerHTML中script标签不安全,所以将其禁用,但只禁用了…...

python中的gradio使用麦克风时报错

python中的gradio使用麦克风时报错 当运行至 import gradio as gr with gr.Blocks() as demo:with gr.Tab("microphone transcriber"):gr.Audio(source"microphone", type"numpy", streamingTrue)demo.queue()##访问链接 https://ip:1235/demo…...

OpenLayers 可视化之热力图

注:当前使用的是 ol 5.3.0 版本,天地图使用的key请到天地图官网申请,并替换为自己的key 热力图(Heatmap)又叫热点图,是一种通过特殊高亮显示事物密度分布、变化趋势的数据可视化技术。采用颜色的深浅来显示…...

DAY 47

三、通道注意力 3.1 通道注意力的定义 # 新增:通道注意力模块(SE模块) class ChannelAttention(nn.Module):"""通道注意力模块(Squeeze-and-Excitation)"""def __init__(self, in_channels, reduction_rat…...

五年级数学知识边界总结思考-下册

目录 一、背景二、过程1.观察物体小学五年级下册“观察物体”知识点详解:由来、作用与意义**一、知识点核心内容****二、知识点的由来:从生活实践到数学抽象****三、知识的作用:解决实际问题的工具****四、学习的意义:培养核心素养…...

用机器学习破解新能源领域的“弃风”难题

音乐发烧友深有体会,玩音乐的本质就是玩电网。火电声音偏暖,水电偏冷,风电偏空旷。至于太阳能发的电,则略显朦胧和单薄。 不知你是否有感觉,近两年家里的音响声音越来越冷,听起来越来越单薄? —…...

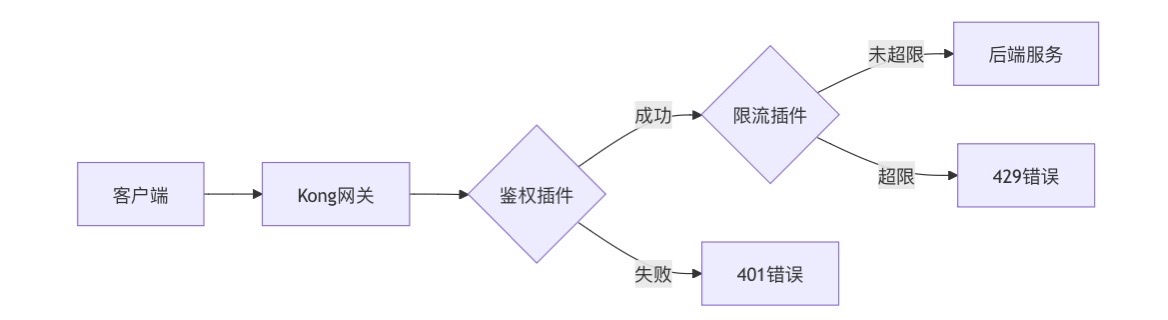

云原生安全实战:API网关Kong的鉴权与限流详解

🔥「炎码工坊」技术弹药已装填! 点击关注 → 解锁工业级干货【工具实测|项目避坑|源码燃烧指南】 一、基础概念 1. API网关(API Gateway) API网关是微服务架构中的核心组件,负责统一管理所有API的流量入口。它像一座…...

)

41道Django高频题整理(附答案背诵版)

解释一下 Django 和 Tornado 的关系? Django和Tornado都是Python的web框架,但它们的设计哲学和应用场景有所不同。 Django是一个高级的Python Web框架,鼓励快速开发和干净、实用的设计。它遵循MVC设计,并强调代码复用。Django有…...

从0开始一篇文章学习Nginx

Nginx服务 HTTP介绍 ## HTTP协议是Hyper Text Transfer Protocol(超文本传输协议)的缩写,是用于从万维网(WWW:World Wide Web )服务器传输超文本到本地浏览器的传送协议。 ## HTTP工作在 TCP/IP协议体系中的TCP协议上&#…...

分布式计算框架学习笔记

一、🌐 为什么需要分布式计算框架? 资源受限:单台机器 CPU/GPU 内存有限。 任务复杂:模型训练、数据处理、仿真并发等任务耗时严重。 并行优化:通过任务拆分和并行执行提升效率。 可扩展部署:适配从本地…...

Python[数据结构及算法 --- 栈]

一.栈的概念 在 Python 中,栈(Stack)是一种 “ 后进先出(LIFO)”的数据结构,仅允许在栈顶进行插入(push)和删除(pop)操作。 二.栈的抽象数据类型 1.抽象数…...

)

Three.js进阶之粒子系统(一)

一些特定模糊现象,经常使用粒子系统模拟,如火焰、爆炸等。Three.js提供了多种粒子系统,下面介绍粒子系统 一、Sprite粒子系统 使用场景:下雨、下雪、烟花 ce使用代码: var materialnew THRESS.SpriteMaterial();//…...