C++ Convolutional Neural Network

#include"TP_NNW.h"

#include<iostream>

#pragma warning(disable:4996)

using namespace std;

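using namespace mnist;

// Forward pass only: runs one 28x28 image through the network and returns the
// 10 softmax outputs in a new[]-allocated array (the caller must delete[] it).
float* SGD(Weight* W1, Weight& W5, Weight& Wo, float** X)
{
    Vector2 ve(28, 28);
    float* temp = new float[10];

    Vector2 Cout; // size of each feature map after convolution
    float*** y1 = Conv(X, ve, Cout, W1, 20);
    for (int i = 0; i < 20; i++)
        for (int n = 0; n < Cout.height; n++)
            for (int m = 0; m < Cout.width; m++)
                y1[i][n][m] = ReLU(y1[i][n][m]);

    Vector2 Cout2; // size of each feature map after pooling
    float*** y3 = Pool(y1, Cout, 20, Cout2);
    float* y4 = reshape(y3, Cout2, 20, true); // flattens and frees y3
    float* v5 = dot(W5, y4);
    float* y5 = ReLU(v5, W5);
    float* v = dot(Wo, y5);
    float* y = Softmax(v, Wo);
    for (int i = 0; i < Wo.len.height; i++)
        temp[i] = y[i];

    // Release the intermediate buffers
    Free3(y1, 20, Cout.height);
    free(y4);
    free(v5);
    free(y5);
    free(v);
    delete[] y; // Softmax allocates its result with new[]
    return temp;
}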
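The layer sizes used above (28x28 input, twenty 9x9 kernels, 2x2 pooling, a 100x2000 hidden weight matrix) can be checked with a little arithmetic. The following is a minimal sketch of that calculation; `Shape`, `validConvShape`, `pool2x2Shape` and `flattenedSize` are hypothetical helpers written for illustration and are not part of TP_NNW.h.

```cpp
#include <cassert>

// Hypothetical helper type for illustration; the post itself uses Vector2.
struct Shape { int height; int width; };

// "Valid" convolution with stride 1: output = input - kernel + 1.
Shape validConvShape(Shape in, Shape kernel) {
    assert(in.height >= kernel.height && in.width >= kernel.width);
    return { in.height - kernel.height + 1, in.width - kernel.width + 1 };
}

// Non-overlapping 2x2 pooling halves each dimension (integer division).
Shape pool2x2Shape(Shape in) {
    return { in.height / 2, in.width / 2 };
}

// Number of values after flattening `maps` feature maps of shape `s`.
int flattenedSize(Shape s, int maps) {
    return s.height * s.width * maps;
}
```

With a 28x28 image and a 9x9 kernel this gives 20x20 feature maps, 10x10 after pooling, and 10 * 10 * 20 = 2000 inputs to the hidden layer, which matches the width of `W5`.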

// One pass over the training data using mini-batch SGD with momentum.
void trainSGD(Weight* W1, Weight& W5, Weight& Wo, FILE* fp, FILE* tp)
{
    Vector2 ve(28, 28);
    unsigned char* reader = new unsigned char[ve.height * ve.width];
    float** X = apply2(ve.height, ve.width);
    unsigned char hao; // label of the current image
    hot_one<char> D(10);
    Weight* momentum1 = new Weight[20]; // momentum
    Weight momentum5;
    Weight momentumo;
    Weight* dW1 = new Weight[20]; // updates accumulated over one mini-batch
    Weight dW5;
    Weight dWo;
    for (int i = 0; i < 20; i++)
        W1[0] >> momentum1[i];
    W5 >> momentum5;
    Wo >> momentumo;
    int N = 8000;    // use the first 8000 training samples
    int bsize = 100; // update the weights once every 100 samples
    int b_len;
    int* blist = bList(bsize, N, &b_len);
    for (int batch = 0; batch < b_len; batch++)
    {
        for (int i = 0; i < 20; i++)
            W1[0] >> dW1[i];
        W5 >> dW5;
        Wo >> dWo;
        int begins = blist[batch];
        for (int k = begins; k < begins + bsize && k < N; k++)
        {
            ::fread(reader, sizeof(unsigned char), ve.height * ve.width, fp); // read one image
            Toshape2(X, reader, ve); // reshape into a 2-D array
            Vector2 Cout; // size after convolution (20)
            float*** y1 = Conv(X, ve, Cout, W1, 20); // convolution
            for (int i = 0; i < 20; i++)
                for (int n = 0; n < Cout.height; n++)
                    for (int m = 0; m < Cout.width; m++)
                        y1[i][n][m] = ReLU(y1[i][n][m]); // apply ReLU
            float*** y2 = y1; // alias used by the backward pass
            Vector2 Cout2; // size after pooling (10)
            float*** y3 = Pool(y1, Cout, 20, Cout2); // pooling layer
            float* y4 = reshape(y3, Cout2, 20, true); // flatten as neuron input (frees y3)
            float* v5 = dot(W5, y4); // matrix multiplication
            float* y5 = ReLU(v5, W5); // ReLU
            float* v = dot(Wo, y5); // matrix multiplication
            float* y = Softmax(v, Wo); // softmax classification
            ::fread(&hao, sizeof(unsigned char), 1, tp); // read the label
            D.re(hao);

            // Backward pass
            float* e = new float[10];
            for (int i = 0; i < 10; i++)
                e[i] = ((float)D.one[i]) - y[i];
            float* delta = e;
            float* e5 = FXCB_err(Wo, delta);
            float* delta5 = Delta2(y5, e5, W5);
            float* e4 = FXCB_err(W5, delta5);
            float*** e3 = Toshape3(e4, 20, Cout2);
            float*** e2 = apply3(20, Cout.height, Cout.width);
            Weight one(2, 2, ones);
            for (int i = 0; i < 20; i++)
                kron(e2[i], Cout, e3[i], Cout2, one.WG, one.len);
            float*** delta2 = apply3(20, Cout.height, Cout.width);
            for (int i = 0; i < 20; i++)
                for (int n = 0; n < Cout.height; n++)
                    for (int m = 0; m < Cout.width; m++)
                        delta2[i][n][m] = (y2[i][n][m] > 0) * e2[i][n][m];
            float*** delta_x = (float***)malloc(sizeof(float**) * 20);
            Vector2 t1;
            for (int i = 0; i < 20; i++)
                delta_x[i] = conv2(X, ve, delta2[i], Cout, &t1);
            for (int i = 0; i < 20; i++)
                for (int n = 0; n < t1.height; n++)
                    for (int m = 0; m < t1.width; m++)
                        dW1[i].WG[n][m] += delta_x[i][n][m];
            dW5.re(delta5, y4, 1);
            dWo.re(delta, y5, 1);

            // Release everything allocated for this sample
            Free3(delta_x, 20, t1.height);
            Free3(delta2, 20, Cout.height);
            one.release();
            Free3(e2, 20, Cout.height);
            Free3(e3, 20, Cout2.height);
            free(e4);
            free(delta5);
            free(e5);
            free(v5);
            delete[] e;
            free(y5);
            free(v);
            delete[] y; // Softmax allocates its result with new[]
            Free3(y1, 20, Cout.height);
            free(y4);
        }
        // Average the accumulated updates over the mini-batch
        for (int i = 0; i < 20; i++)
            dW1[i] /= (bsize);
        dW5 /= (bsize);
        dWo /= (bsize);
        // Momentum update
        for (int i = 0; i < 20; i++)
            for (int n = 0; n < W1[0].len.height; n++)
                for (int m = 0; m < W1[0].len.width; m++)
                {
                    momentum1[i].WG[n][m] = ALPHA * dW1[i].WG[n][m] + BETA * momentum1[i].WG[n][m];
                    W1[i].WG[n][m] += momentum1[i].WG[n][m];
                }
        for (int n = 0; n < W5.len.height; n++)
            for (int m = 0; m < W5.len.width; m++)
                momentum5.WG[n][m] = ALPHA * dW5.WG[n][m] + BETA * momentum5.WG[n][m];
        W5 += momentum5;
        for (int n = 0; n < Wo.len.height; n++)
            for (int m = 0; m < Wo.len.width; m++)
                momentumo.WG[n][m] = ALPHA * dWo.WG[n][m] + BETA * momentumo.WG[n][m];
        Wo += momentumo;
    }
    for (int i = 0; i < 20; i++)
    {
        momentum1[i].release();
        dW1[i].release();
    }
    delete[] momentum1;
    delete[] dW1;
    momentum5.release();
    momentumo.release();
    Free2(X, ve.height);
    free(blist);
    delete[] reader;
    D.release();
    dW5.release();
    dWo.release();
    return;
}
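The weight update at the end of each mini-batch in trainSGD is a momentum step: the momentum is a decayed running sum of the averaged updates, and the weights move by the momentum. Below is a minimal sketch of that rule on flat vectors. `momentumStep` and its parameter names are assumptions made for illustration; the post itself works on `Weight` objects with the `ALPHA` and `BETA` macros.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: one momentum step on flat weight vectors.
//   momentum = alpha * avgUpdate + beta * momentum
//   weights += momentum
void momentumStep(std::vector<float>& weights,
                  std::vector<float>& momentum,
                  const std::vector<float>& avgUpdate,
                  float alpha, float beta) {
    assert(weights.size() == momentum.size());
    assert(weights.size() == avgUpdate.size());
    for (std::size_t i = 0; i < weights.size(); ++i) {
        momentum[i] = alpha * avgUpdate[i] + beta * momentum[i];
        weights[i] += momentum[i];
    }
}
```

Because the previous momentum is carried over with factor `beta`, repeated updates in the same direction build up speed, while updates that keep changing sign partly cancel.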

// Same training loop as trainSGD, but for the small custom data set
// (108 samples, mini-batches of 12).
void trainSGD1(Weight* W1, Weight& W5, Weight& Wo, FILE* fp, FILE* tp)
{
    Vector2 ve(28, 28);
    unsigned char* reader = new unsigned char[ve.height * ve.width];
    float** X = apply2(ve.height, ve.width);
    unsigned char hao; // label of the current image
    hot_one<char> D(10);
    Weight* momentum1 = new Weight[20]; // momentum
    Weight momentum5;
    Weight momentumo;
    Weight* dW1 = new Weight[20]; // updates accumulated over one mini-batch
    Weight dW5;
    Weight dWo;
    for (int i = 0; i < 20; i++)
        W1[0] >> momentum1[i];
    W5 >> momentum5;
    Wo >> momentumo;
    int N = 108;    // use the first 108 training samples
    int bsize = 12; // update the weights once every 12 samples
    int b_len;
    int* blist = bList(bsize, N, &b_len);
    for (int batch = 0; batch < b_len; batch++)
    {
        for (int i = 0; i < 20; i++)
            W1[0] >> dW1[i];
        W5 >> dW5;
        Wo >> dWo;
        int begins = blist[batch];
        for (int k = begins; k < begins + bsize && k < N; k++)
        {
            ::fread(reader, sizeof(unsigned char), ve.height * ve.width, fp); // read one image
            Toshape2(X, reader, ve); // reshape into a 2-D array
            Vector2 Cout; // size after convolution (20)
            float*** y1 = Conv(X, ve, Cout, W1, 20); // convolution
            for (int i = 0; i < 20; i++)
                for (int n = 0; n < Cout.height; n++)
                    for (int m = 0; m < Cout.width; m++)
                        y1[i][n][m] = ReLU(y1[i][n][m]); // apply ReLU
            float*** y2 = y1; // alias used by the backward pass
            Vector2 Cout2; // size after pooling (10)
            float*** y3 = Pool(y1, Cout, 20, Cout2); // pooling layer
            float* y4 = reshape(y3, Cout2, 20, true); // flatten as neuron input (frees y3)
            float* v5 = dot(W5, y4); // matrix multiplication
            float* y5 = ReLU(v5, W5); // ReLU
            float* v = dot(Wo, y5); // matrix multiplication
            float* y = Softmax(v, Wo); // softmax classification
            ::fread(&hao, sizeof(unsigned char), 1, tp); // read the label
            D.re(hao);

            // Backward pass
            float* e = new float[10];
            for (int i = 0; i < 10; i++)
                e[i] = ((float)D.one[i]) - y[i];
            float* delta = e;
            float* e5 = FXCB_err(Wo, delta);
            float* delta5 = Delta2(y5, e5, W5);
            float* e4 = FXCB_err(W5, delta5);
            float*** e3 = Toshape3(e4, 20, Cout2);
            float*** e2 = apply3(20, Cout.height, Cout.width);
            Weight one(2, 2, ones);
            for (int i = 0; i < 20; i++)
                kron(e2[i], Cout, e3[i], Cout2, one.WG, one.len);
            float*** delta2 = apply3(20, Cout.height, Cout.width);
            for (int i = 0; i < 20; i++)
                for (int n = 0; n < Cout.height; n++)
                    for (int m = 0; m < Cout.width; m++)
                        delta2[i][n][m] = (y2[i][n][m] > 0) * e2[i][n][m];
            float*** delta_x = (float***)malloc(sizeof(float**) * 20);
            Vector2 t1;
            for (int i = 0; i < 20; i++)
                delta_x[i] = conv2(X, ve, delta2[i], Cout, &t1);
            for (int i = 0; i < 20; i++)
                for (int n = 0; n < t1.height; n++)
                    for (int m = 0; m < t1.width; m++)
                        dW1[i].WG[n][m] += delta_x[i][n][m];
            dW5.re(delta5, y4, 1);
            dWo.re(delta, y5, 1);

            // Release everything allocated for this sample
            Free3(delta_x, 20, t1.height);
            Free3(delta2, 20, Cout.height);
            one.release();
            Free3(e2, 20, Cout.height);
            Free3(e3, 20, Cout2.height);
            free(e4);
            free(delta5);
            free(e5);
            free(v5);
            delete[] e;
            free(y5);
            free(v);
            delete[] y; // Softmax allocates its result with new[]
            Free3(y1, 20, Cout.height);
            free(y4);
        }
        // Average the accumulated updates over the mini-batch
        for (int i = 0; i < 20; i++)
            dW1[i] /= (bsize);
        dW5 /= (bsize);
        dWo /= (bsize);
        // Momentum update
        for (int i = 0; i < 20; i++)
            for (int n = 0; n < W1[0].len.height; n++)
                for (int m = 0; m < W1[0].len.width; m++)
                {
                    momentum1[i].WG[n][m] = ALPHA * dW1[i].WG[n][m] + BETA * momentum1[i].WG[n][m];
                    W1[i].WG[n][m] += momentum1[i].WG[n][m];
                }
        for (int n = 0; n < W5.len.height; n++)
            for (int m = 0; m < W5.len.width; m++)
                momentum5.WG[n][m] = ALPHA * dW5.WG[n][m] + BETA * momentum5.WG[n][m];
        W5 += momentum5;
        for (int n = 0; n < Wo.len.height; n++)
            for (int m = 0; m < Wo.len.width; m++)
                momentumo.WG[n][m] = ALPHA * dWo.WG[n][m] + BETA * momentumo.WG[n][m];
        Wo += momentumo;
    }
    for (int i = 0; i < 20; i++)
    {
        momentum1[i].release();
        dW1[i].release();
    }
    delete[] momentum1;
    delete[] dW1;
    momentum5.release();
    momentumo.release();
    Free2(X, ve.height);
    free(blist);
    delete[] reader;
    D.release();
    dW5.release();
    dWo.release();
    return;
}

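// Initial value for the convolution kernels: a small number with a random sign.
float rand1()
{
    float temp = (rand() % 20) / (float)10;
    if (temp < 0.0001)
        temp = 0.07;
    temp *= (rand() % 2 == 0) ? -1 : 1;
    return temp * 0.01;
}

// Initial value for the hidden layer W5, scaled by sqrt(6) / sqrt(360 + 2000).
float rand2()
{
    float temp = (rand() % 10) / (float)10;
    float ret = (2 * temp - 1) * sqrt(6.0) / sqrt(360.0 + 2000.0);
    if (ret < 0.0001 && ret > -0.0001)
        ret = 0.07;
    return ret;
}

// Initial value for the output layer Wo, scaled by sqrt(6) / sqrt(10 + 100).
float rand3()
{
    float temp = (rand() % 10) / (float)10;
    float ret = (2 * temp - 1) * sqrt(6.0) / sqrt(10.0 + 100.0);
    if (ret < 0.0001 && ret > -0.0001)
        ret = 0.07;
    return ret;
}

void train()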

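{
    FILE* fp = fopen("t10k-images.idx3-ubyte", "rb");
    FILE* tp = fopen("t10k-labels.idx1-ubyte", "rb");
    if (fp == NULL || tp == NULL)
    {
        if (fp != NULL) fclose(fp);
        if (tp != NULL) fclose(tp);
        ::printf("Cannot open the data files\n");
        return;
    }
    // Image file header: magic number, count, height, width (big-endian)
    int rdint;
    ::fread(&rdint, sizeof(int), 1, fp);
    ::printf("Training set magic number: %d\n", ReverseInt(rdint));
    ::fread(&rdint, sizeof(int), 1, fp);
    ::printf("Training set size: %d\n", ReverseInt(rdint));
    ::fread(&rdint, sizeof(int), 1, fp);
    ::printf("Training set image height: %d\n", ReverseInt(rdint));
    ::fread(&rdint, sizeof(int), 1, fp);
    ::printf("Training set image width: %d\n", ReverseInt(rdint));
    int start1 = ftell(fp); // offset of the first image
    // Label file header: magic number, count
    ::fread(&rdint, sizeof(int), 1, tp);
    ::printf("Label magic number: %d\n", ReverseInt(rdint));
    ::fread(&rdint, sizeof(int), 1, tp);
    ::printf("Label count: %d\n", ReverseInt(rdint));
    int start2 = ftell(tp); // offset of the first label
    Weight* W1 = new Weight[20];
    WD(W1, 9, 9, 20, rand1);
    Weight W5(100, 2000, rand2);
    Weight Wo(10, W5.len.height, rand3);
    for (int k = 0; k < 3; k++)
    {
        trainSGD(W1, W5, Wo, fp, tp);
        // Rewind both files to the first sample for the next epoch
        fseek(fp, start1, SEEK_SET);
        fseek(tp, start2, SEEK_SET);
        ::printf("Training pass %d finished\n", k + 1);
    }
    fclose(fp);
    fclose(tp);
    // Save the trained weights
    fp = fopen("mnist_Weight.acp", "wb");
    for (int i = 0; i < 20; i++)
        W1[i].save(fp);
    W5.save(fp);
    Wo.save(fp);
    fclose(fp);
    delete[] W1;
    ::printf("Training complete");
    getchar();
}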
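The header fields read in train() are stored in big-endian byte order, which is why every value goes through `ReverseInt` on a little-endian machine. As a minimal sketch, the same value can also be assembled directly from the four bytes, independent of the host byte order. `readBigEndian32` is a hypothetical helper for illustration and is not part of the code in this post.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: builds a 32-bit value from 4 bytes stored
// most-significant byte first (big-endian), as in the MNIST IDX headers.
std::uint32_t readBigEndian32(const unsigned char* bytes) {
    assert(bytes != nullptr);
    return (static_cast<std::uint32_t>(bytes[0]) << 24) |
           (static_cast<std::uint32_t>(bytes[1]) << 16) |
           (static_cast<std::uint32_t>(bytes[2]) << 8) |
            static_cast<std::uint32_t>(bytes[3]);
}
```

For example, the bytes `00 00 08 03` decode to 2051, the magic number of an MNIST image file.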

// Same as train(), but for the custom data set out_img.acp / out_label.acp.
void train1()
{
    FILE* fp = fopen("out_img.acp", "rb");
    FILE* tp = fopen("out_label.acp", "rb");
    if (fp == NULL || tp == NULL)
    {
        if (fp != NULL) fclose(fp);
        if (tp != NULL) fclose(tp);
        ::printf("Cannot open the data files\n");
        return;
    }
    int rdint;
    ::fread(&rdint, sizeof(int), 1, fp);
    ::printf("Training set magic number: %d\n", ReverseInt(rdint));
    ::fread(&rdint, sizeof(int), 1, fp);
    ::printf("Training set size: %d\n", ReverseInt(rdint));
    ::fread(&rdint, sizeof(int), 1, fp);
    ::printf("Training set image height: %d\n", ReverseInt(rdint));
    ::fread(&rdint, sizeof(int), 1, fp);
    ::printf("Training set image width: %d\n", ReverseInt(rdint));
    int start1 = ftell(fp); // offset of the first image
    ::fread(&rdint, sizeof(int), 1, tp);
    ::printf("Label magic number: %d\n", ReverseInt(rdint));
    ::fread(&rdint, sizeof(int), 1, tp);
    ::printf("Label count: %d\n", ReverseInt(rdint));
    int start2 = ftell(tp); // offset of the first label
    Weight* W1 = new Weight[20];
    WD(W1, 9, 9, 20, rand1);
    Weight W5(100, 2000, rand2);
    Weight Wo(10, W5.len.height, rand3);
    for (int k = 0; k < 1000; k++)
    {
        trainSGD1(W1, W5, Wo, fp, tp);
        fseek(fp, start1, SEEK_SET);
        fseek(tp, start2, SEEK_SET);
        ::printf("Training pass %d finished\n", k + 1);
    }
    fclose(fp);
    fclose(tp);
    fp = fopen("mnist_Weight.acp", "wb");
    for (int i = 0; i < 20; i++)
        W1[i].save(fp);
    W5.save(fp);
    Wo.save(fp);
    fclose(fp);
    delete[] W1;
    ::printf("Training complete");
    getchar();
}

// Loads the saved weights and classifies the first 50 images of the data set.
void test()
{
    FILE* fp = fopen("mnist_Weight.acp", "rb");
    Weight* W1 = new Weight[20];
    WD(W1, 9, 9, 20, rand1);
    Weight W5(100, 2000, rand1);
    Weight Wo(10, W5.len.height, rand1);
    for (int i = 0; i < 20; i++)
        W1[i].load(fp);
    W5.load(fp);
    Wo.load(fp);
    fclose(fp);
    fp = fopen("t10k-images.idx3-ubyte", "rb");
    FILE* tp = fopen("t10k-labels.idx1-ubyte", "rb");
    int rdint;
    ::fread(&rdint, sizeof(int), 1, fp);
    ::printf("Training set magic number: %d\n", ReverseInt(rdint));
    ::fread(&rdint, sizeof(int), 1, fp);
    ::printf("Training set size: %d\n", ReverseInt(rdint));
    ::fread(&rdint, sizeof(int), 1, fp);
    ::printf("Training set image height: %d\n", ReverseInt(rdint));
    ::fread(&rdint, sizeof(int), 1, fp);
    ::printf("Training set image width: %d\n", ReverseInt(rdint));
    ::fread(&rdint, sizeof(int), 1, tp);
    ::printf("Label magic number: %d\n", ReverseInt(rdint));
    ::fread(&rdint, sizeof(int), 1, tp);
    ::printf("Label count: %d\n", ReverseInt(rdint));
    unsigned char* res = new unsigned char[28 * 28];
    float** X = apply2(28, 28);
    unsigned char biaoqian; // ground-truth label
    Vector2 t2828 = Vector2(28, 28);
    for (int i = 0; i < 50; i++)
    {
        ::fread(res, sizeof(unsigned char), 28 * 28, fp);
        Toshape2(X, res, 28, 28);
        print(X, t2828);
        float* h = SGD(W1, W5, Wo, X); // run the network
        // Take the first class whose output exceeds 0.85; -1 means "not recognized"
        int c = -1;
        for (int j = 0; j < 10; j++)
        {
            if (h[j] > 0.85)
            {
                c = j;
                break;
            }
        }
        ::fread(&biaoqian, sizeof(unsigned char), 1, tp);
        ::printf("Expected \"%d\", the network predicted \"%d\"\n", biaoqian, c);
        delete[] h; // SGD returns a new[]-allocated array
    }
    delete[] res;
    Free2(X, 28);
    fclose(fp);
    fclose(tp);
    delete[] W1;
}

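// Recognizes the four digit images acp0.png .. acp3.png and deletes them afterwards.
void sb()
{
    // Load the trained weights before running the forward pass
    Weight* W1 = new Weight[20];
    WD(W1, 9, 9, 20, rand1);
    Weight W5(100, 2000, rand2);
    Weight Wo(10, W5.len.height, rand3);
    FILE* fp = fopen("mnist_Weight.acp", "rb");
    if (fp == NULL)
    {
        puts("Cannot open mnist_Weight.acp");
        delete[] W1;
        return;
    }
    for (int i = 0; i < 20; i++)
        W1[i].load(fp);
    W5.load(fp);
    Wo.load(fp);
    fclose(fp);
    //::printf("Weights loaded\n");
    Vector2 out;
    char path[256];
    for (int r = 0; r < 4; r++)
    {
        sprintf(path, "acp%d.png", r);
        float** img = Get_data_by_Mat(path, out);
        //print(img, out);
        float* h = SGD(W1, W5, Wo, img); // run the network
        // Pick the most likely class among the outputs above 0.85; -1 means "not recognized"
        int c = -1;
        float x = 0;
        for (int i = 0; i < 10; i++)
        {
            if (h[i] > 0.85 && h[i] > x)
            {
                x = h[i];
                c = i;
            }
        }
        ::printf("%d ", c);
        Free2(img, out.height);
        delete[] h; // SGD returns a new[]-allocated array
        remove(path);
    }
    puts("");
    delete[] W1;
}

// Recognizes the single digit image stored at `path`.
void sb(char* path)
{
    Weight* W1 = new Weight[20];
    Weight W5(100, 2000, rand2);
    Weight Wo(10, W5.len.height, rand3);
    FILE* fp = fopen("mnist_Weight.acp", "rb");
    puts("Loading weights");
    WD(W1, 9, 9, 20, rand1);
    for (int i = 0; i < 20; i++)
        W1[i].load(fp);
    W5.load(fp);
    Wo.load(fp);
    fclose(fp);
    ::printf("Weights loaded\n");
    Vector2 out;
    float** img = Get_data_by_Mat(path, out);
    printf("Image loaded");
    //print(img, out);
    float* h = SGD(W1, W5, Wo, img); // run the network
    // Pick the class with the largest output
    int c = -1;
    float max = -1;
    for (int i = 0; i < 10; i++)
    {
        ::printf("%f\n", h[i]);
        if (max < h[i])
        {
            max = h[i];
            c = i;
        }
    }
    ::printf("The network thinks this is the digit --> %d, confidence: %f", c, max);
    Free2(img, out.height);
    delete[] h; // SGD returns a new[]-allocated array
    delete[] W1;
}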

bool thank(int x1,int x2, int y1, int y2, int z1, int z2 )

{int dis = 0;int xx = (x1 - x2);dis += xx * xx;xx = (y1 - y2);dis += xx * xx;xx = (z1 - z2);dis += xx * xx;dis = (int)sqrt(dis);if (dis < 100)return true;return false;

}

// Preprocesses an image containing four digits and passes it on to sb().
void qg(char* path)
{
    ::printf("%s", path);
    ::printf(" recognized as: ");
    CImage img;
    img.Load(path);
    CImage sav;
    sav.Create(120, 80, 24);
    ResizeCImage(img, img.GetWidth() * 10, img.GetHeight() * 10);
    int XS = img.GetBPP() / 8; // bytes per pixel
    int pitch = img.GetPitch();
    unsigned char* rgb = (unsigned char*)img.GetBits();
    for (int i = 0; i < img.GetHeight(); i++)
        for (int j = 0; j < img.GetWidth(); j++)
        {
            int x1 = *(rgb + (j * XS) + (i * pitch) + 0);
            int y1 = *(rgb + (j * XS) + (i * pitch) + 1);
            int z1 = *(rgb + (j * XS) + (i * pitch) + 2);
            // Pixels close to the colour (204, 198, 204) are set to white
            if (thank(x1, 204, y1, 198, z1, 204))
            {
                *(rgb + (j * XS) + (i * pitch) + 0) = 255;
                *(rgb + (j * XS) + (i * pitch) + 1) = 255;
                *(rgb + (j * XS) + (i * pitch) + 2) = 255;
            }
        }
    /* Disabled OpenCV code that cut the image into the four tiles read by sb():
    char p[256];
    for (int k = 0; k < 4; k++)
    {
        sprintf(p, "acp%d.png", k);
        for (int i = 35 + (k * 80); i < 115 + (k * 80); i++)
            for (int j = 30; j < 150; j++)
                sav.at<Vec3b>(j - 30, i - (35 + (k * 80))) = img.at<Vec3b>(j, i);
        imwrite(p, sav);
    }
    img.release();
    sav.release();
    */
    sb();
}

// Loads the saved weights and classifies the first 10 images of the custom data set.
void test1()
{
    FILE* fp = fopen("mnist_Weight.acp", "rb");
    Weight* W1 = new Weight[20];
    WD(W1, 9, 9, 20, rand1);
    Weight W5(100, 2000, rand1);
    Weight Wo(10, W5.len.height, rand1);
    for (int i = 0; i < 20; i++)
        W1[i].load(fp);
    W5.load(fp);
    Wo.load(fp);
    fclose(fp);
    fp = fopen("out_img.acp", "rb");
    FILE* tp = fopen("out_label.acp", "rb");
    int rdint;
    ::fread(&rdint, sizeof(int), 1, fp);
    ::printf("Training set magic number: %d\n", ReverseInt(rdint));
    ::fread(&rdint, sizeof(int), 1, fp);
    ::printf("Training set size: %d\n", ReverseInt(rdint));
    ::fread(&rdint, sizeof(int), 1, fp);
    ::printf("Training set image height: %d\n", ReverseInt(rdint));
    ::fread(&rdint, sizeof(int), 1, fp);
    ::printf("Training set image width: %d\n", ReverseInt(rdint));
    ::fread(&rdint, sizeof(int), 1, tp);
    ::printf("Label magic number: %d\n", ReverseInt(rdint));
    ::fread(&rdint, sizeof(int), 1, tp);
    ::printf("Label count: %d\n", ReverseInt(rdint));
    unsigned char* res = new unsigned char[28 * 28];
    float** X = apply2(28, 28);
    unsigned char biaoqian; // ground-truth label
    Vector2 t2828 = Vector2(28, 28);
    for (int i = 0; i < 10; i++)
    {
        ::fread(res, sizeof(unsigned char), 28 * 28, fp);
        Toshape2(X, res, 28, 28);
        print(X, t2828);
        float* h = SGD(W1, W5, Wo, X); // run the network
        // Take the first class whose output exceeds 0.85; -1 means "not recognized"
        int c = -1;
        for (int j = 0; j < 10; j++)
        {
            if (h[j] > 0.85)
            {
                c = j;
                break;
            }
        }
        ::fread(&biaoqian, sizeof(unsigned char), 1, tp);
        ::printf("Expected \"%d\", the network predicted \"%d\"\n", biaoqian, c);
        delete[] h; // SGD returns a new[]-allocated array
    }
    delete[] res;
    Free2(X, 28);
    fclose(fp);
    fclose(tp);
    delete[] W1;
}

int main(int argc, char** argv)
{
    //train(); // call this first to train; once training has finished the saved weights can be loaded directly
    if (argc > 1)
    {
        sb(argv[1]);
        getchar();
    }
    return 0;
}

#include"TP_NNW.h"

#include<iostream>

#pragma warning(disable:4996)

void Weight::apply(int H, int W)
{
    fz = true;
    this->len.height = H;
    this->len.width = W;
    this->WG = apply2(H, W); // allocate memory
    for (int i = 0; i < H; i++)
        for (int j = 0; j < W; j++)
            this->WG[i][j] = Get_rand(); // fill with random values
}

void Weight::apply(int H, int W, float(*def)())
{
    fz = true;
    this->len.height = H;
    this->len.width = W;
    this->WG = apply2(H, W);
    for (int i = 0; i < H; i++)
        for (int j = 0; j < W; j++)
            this->WG[i][j] = def();
}

Weight::~Weight()
{
    this->release();
}

Weight::Weight(int H/*height*/, int W/*width*/)
{
    W = W <= 0 ? 1 : W; // guard against zero and negative sizes
    H = H <= 0 ? 1 : H; // guard against zero and negative sizes
    fz = true;
    this->apply(H, W);
}

Weight::Weight(int H/*height*/, int W/*width*/, float(*def)())
{
    W = W <= 0 ? 1 : W;
    H = H <= 0 ? 1 : H;
    fz = true;
    this->apply(H, W, def);
}

// Adds alpha * delta[i] * inp[j] to every element WG[i][j].
void Weight::re(float* delta, float* inp, float alpha)
{
    for (int i = 0; i < this->len.height; i++)
    {
        for (int j = 0; j < this->len.width; j++)
            this->WG[i][j] += alpha * delta[i] * inp[j];
    }
}

void Weight::save(FILE* fp)
{
    for (int i = 0; i < this->len.height; i++)
        for (int j = 0; j < this->len.width; j++)
            fwrite(&this->WG[i][j], sizeof(float), 1, fp);
}

void Weight::load(FILE* fp)
{
    for (int i = 0; i < this->len.height; i++)
        for (int j = 0; j < this->len.width; j++)
            fread(&this->WG[i][j], sizeof(float), 1, fp);
}

void Weight::release()
{
    if (this->fz)
    {
        Free2(this->WG, this->len.height);
    }
    this->fz = false;
}

// Makes temp a zero-filled matrix with the same shape as this one.
void Weight::operator >> (Weight& temp)
{
    temp.release();
    temp.apply(this->len.height, this->len.width, zeros);
}

void Weight::operator+=(Weight& temp)
{
    for (int i = 0; i < this->len.height; i++)
        for (int j = 0; j < this->len.width; j++)
            this->WG[i][j] += temp.WG[i][j];
}

void Weight::operator/=(int temp)
{
    for (int i = 0; i < this->len.height; i++)
        for (int j = 0; j < this->len.width; j++)
            this->WG[i][j] /= temp;
}

// Takes over the buffer of temp. Ownership moves to this object so that the
// buffer is freed exactly once.
void Weight::operator<<(Weight& temp)
{
    this->release();
    this->len.height = temp.len.height;
    this->len.width = temp.len.width;
    this->WG = temp.WG;
    this->fz = temp.fz;
    temp.fz = false;
}

void WD(Weight* WGS, int H, int W, int len)

{for (int i = 0; i < len; i++){WGS[i].apply(H, W);}

}

void WD(Weight* WGS, int H, int W, int len, float(*def)())

{for (int i = 0; i < len; i++){WGS[i].apply(H, W, def);}

}

float zeros()

{return 0;

}

void print(float* y, int y_len)
{
    for (int i = 0; i < y_len; i++)
    {
        printf("%0.2f ", y[i]);
        //printf("%d ", y[i]>0?1:0);
    }
    puts("");
}

void print(float* y, Vector2& vec)

{print(y, vec.height);

}void print(float** y, Vector2& vec)

{for (int i = 0; i < vec.height; i++)print(y[i], vec.width);

}void print(char* y, int y_len)

{for (int i = 0; i < y_len; i++){printf("%d ", y[i]);}puts("");

}void print(char** y, Vector2& vec)

{for (int i = 0; i < vec.height; i++)print(y[i], vec.width);

}void print(Weight& w)

{print(w.WG, w.len);

}

void print(Weight* w, int len)
{
    for (int i = 0; i < len; i++)
    {
        printf("\nLayer %d\n", i + 1);
        print(w[i]);
    }
}

// Allocates an H x W float matrix as an array of row pointers (release with Free2).
float** apply2(int H, int W)
{
    float** temp = (float**)malloc(sizeof(float*) * H);
    for (int i = 0; i < H; i++)
        temp[i] = (float*)malloc(sizeof(float) * W);
    return temp;
}

// Allocates P matrices of size H x W (release with Free3).
float*** apply3(int P, int H/*height*/, int W/*width*/)
{
    float*** temp = (float***)malloc(sizeof(float**) * P);
    for (int i = 0; i < P; i++)
        temp[i] = apply2(H, W);
    return temp;
}

char** apply2_char(int H, int W)
{
    char** temp = (char**)malloc(sizeof(char*) * H);
    for (int i = 0; i < H; i++)
        temp[i] = (char*)malloc(sizeof(char) * W);
    return temp;
}

float ones()

{return 1;

}

float*** Conv(float** X, Vector2& inp, Vector2& out, Weight* W, int W_len)

{out.height = inp.height - W[0].len.height + 1;out.width = inp.width - W[0].len.width + 1;float*** temp = (float***)malloc(sizeof(float***) * W_len);for (int k = 0; k < W_len; k++)temp[k] = conv2(X, inp, W[k].WG, W[0].len);return temp;

}
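Conv applies each of the kernels to the image with a "valid" sliding window: the kernel is not flipped and never leaves the image, so the output shrinks by the kernel size minus one. The following is a minimal sketch of that operation on `std::vector` instead of raw pointers; `Matrix` and `validCorrelate` are names made up for this illustration, and stride 1 is assumed.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<float>>;

// Hypothetical helper: slides kernel k over x (stride 1, no padding, kernel
// not flipped) and returns the (H - kh + 1) x (W - kw + 1) result.
Matrix validCorrelate(const Matrix& x, const Matrix& k) {
    assert(!x.empty() && !k.empty());
    assert(x.size() >= k.size() && x[0].size() >= k[0].size());
    const std::size_t outH = x.size() - k.size() + 1;
    const std::size_t outW = x[0].size() - k[0].size() + 1;
    Matrix out(outH, std::vector<float>(outW, 0.0f));
    for (std::size_t i = 0; i < outH; ++i)
        for (std::size_t j = 0; j < outW; ++j)
            for (std::size_t n = 0; n < k.size(); ++n)
                for (std::size_t m = 0; m < k[0].size(); ++m)
                    out[i][j] += x[i + n][j + m] * k[n][m];
    return out;
}
```

Using vectors removes the manual `malloc`/`free` bookkeeping, at the cost of differing from the pointer-based interface used in the rest of this code.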

float*** Pool(float*** y, Vector2& inp, int P, Vector2& out)

{int h = inp.height / 2, w = inp.width / 2;out.height = h;out.width = w;float*** temp = apply3(P, h, w);float** filter = apply2(2, 2);for (int i = 0; i < 2; i++)for (int j = 0; j < 2; j++)filter[i][j] = 0.25;for (int k = 0; k < P; k++){Vector2 len;Vector2 t22 = Vector2(2, 2);float** img = conv2(y[k], inp, filter, t22, &len);for (int i = 0; i < h; i++)for (int j = 0; j < w; j++)temp[k][i][j] = img[i * 2][j * 2];Free2(img, len.height);}Free2(filter, 2);return temp;

}
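Pool convolves each feature map with a 2x2 filter of 0.25 and then keeps every second row and column, which amounts to averaging non-overlapping 2x2 blocks. As a minimal sketch, the same result can be computed directly; `Matrix` and `meanPool2x2` are hypothetical names for this illustration only.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<float>>;

// Hypothetical helper: averages each non-overlapping 2x2 block of x.
// An odd trailing row or column is ignored, as with integer division by 2.
Matrix meanPool2x2(const Matrix& x) {
    assert(!x.empty());
    const std::size_t outH = x.size() / 2;
    const std::size_t outW = x[0].size() / 2;
    Matrix out(outH, std::vector<float>(outW, 0.0f));
    for (std::size_t i = 0; i < outH; ++i)
        for (std::size_t j = 0; j < outW; ++j)
            out[i][j] = 0.25f * (x[2 * i][2 * j] + x[2 * i][2 * j + 1] +
                                 x[2 * i + 1][2 * j] + x[2 * i + 1][2 * j + 1]);
    return out;
}
```

Computing the block average directly avoids the intermediate full-size convolution result that Pool allocates and frees for every feature map.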

float* apply1(int H)

{float* temp = (float*)malloc(sizeof(float*) * H);return temp;

}char* apply1_char(int H)

{char* temp = (char*)malloc(sizeof(char*) * H);return temp;

}float Get_rand()

{float temp = (float)(rand() % 10) / (float)10;return rand() % 2 == 0 ? temp : -temp;

}float Sigmoid(float x)

{return 1 / (1 + exp(-x));

}float* Sigmoid(float* x, Weight& w)

{return Sigmoid(x, w.len.height);

}float* Sigmoid(float* x, int height)

{float* y = (float*)malloc(sizeof(float*) * height);for (int i = 0; i < height; i++)y[i] = Sigmoid(x[i]);return y;

}float ReLU(float x)

{return x > 0 ? x : 0;

}float* ReLU(float* x, Weight& w)

{return ReLU(x, w.len.height);

}float* ReLU(float* x, int height)

{float* y = (float*)malloc(sizeof(float*) * height);for (int i = 0; i < height; i++)y[i] = ReLU(x[i]);return y;

}float* Softmax(float* x, Weight& w)

{return Softmax(x, w.len.height);

}float dsigmoid(float x)

{return x * (1 - x);

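}

// Returns the softmax of x in a new[]-allocated array.
float* Softmax(float* x, int height)
{
    float* t = new float[height];
    float* ex = new float[height];
    // Subtract the maximum before exponentiating so that exp() cannot overflow;
    // the result is mathematically unchanged.
    float maxv = x[0];
    for (int i = 1; i < height; i++)
        if (x[i] > maxv)
            maxv = x[i];
    float sum = 0;
    for (int i = 0; i < height; i++)
    {
        ex[i] = exp(x[i] - maxv);
        sum += ex[i];
    }
    for (int i = 0; i < height; i++)
    {
        t[i] = ex[i] / sum;
    }
    delete[] ex;
    return t;
}

float* FXCB_err(Weight& w, float* delta)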

{float* temp = (float*)malloc(sizeof(float*) * w.len.width);for (int i = 0; i < w.len.width; i++)temp[i] = 0;for (int i = 0; i < w.len.width; i++)for (int j = 0; j < w.len.height; j++)temp[i] += w.WG[j][i] * delta[j];return temp;

}float* Delta1(float* y, float* e, Weight& w)

{float* temp = (float*)malloc(sizeof(float*) * w.len.height);for (int i = 0; i < w.len.height; i++)temp[i] = y[i] * (1 - y[i]) * e[i];return temp;

}float* Delta2(float* v, float* e, Weight& w)

{float* temp = (float*)malloc(sizeof(float*) * w.len.height);for (int i = 0; i < w.len.height; i++)temp[i] = v[i] > 0 ? e[i] : 0;return temp;

}float* dot(Weight& W, float* inp, int* len)

{float* temp = (float*)malloc(sizeof(float*) * W.len.height);for (int i = 0; i < W.len.height; i++)temp[i] = 0;for (int i = 0; i < W.len.height; i++){for (int j = 0; j < W.len.width; j++)temp[i] += (W.WG[i][j] * inp[j]);}if (len != NULL)*len = W.len.height;return temp;

}

// Returns `count` distinct random indices in [0, max). The indices are stored
// as char, so max must not exceed 127, and count must not exceed max.
char* randperm(int max, int count)
{
    char* temp = new char[count] {0};
    for (int i = 0; i < count; i++)
    {
        while (1)
        {
            char t = rand() % max;
            bool nothave = true;
            for (int j = 0; j < i; j++)
                if (t == temp[j])
                {
                    nothave = false;
                    break;
                }
            if (nothave)
            {
                temp[i] = t;
                break;
            }
        }
    }
    return temp;
}

// Keeps a random (1 - ratio) share of the outputs, scaled by 1 / (1 - ratio),
// and sets the rest to zero.
void Dropout(float* y, float ratio, Weight& w)
{
    float* ym = new float[w.len.height] {0};
    float round = w.len.height * (1 - ratio);
    int num = (round - (float)(int)round >= 0.5f ? (int)round + 1 : (int)round);
    char* idx = randperm(w.len.height, num);
    for (int i = 0; i < num; i++)
    {
        ym[idx[i]] = (1 / (1 - ratio));
    }
    for (int i = 0; i < w.len.height; i++)
    {
        y[i] *= ym[i];
    }
    delete[] idx;
    delete[] ym;
}

float** conv2(float** x, Vector2& x_len, float** fiter, Vector2& fiter_len, Vector2* out_len, int flag, int distance, int fill)

{switch (flag){case Valid:return VALID(x, x_len.height, x_len.width, fiter, fiter_len.height, fiter_len.width, distance, out_len);case Same:return SAME(x, x_len.height, x_len.width, fiter, fiter_len.height, fiter_len.width, distance, fill, out_len);}return nullptr;

}

// "Valid" sliding window: the filter never leaves the input and is not flipped.
float** VALID(float** x, int x_h, int x_w, float** fiter, int fiter_h, int fiter_w, int distance, Vector2* out_len)
{
    int h = VALID_out_len(x_h, fiter_h, distance);
    int w = VALID_out_len(x_w, fiter_w, distance);
    float** temp = apply2(h, w);
    float** t = fiter;
    if (out_len != NULL)
    {
        out_len->height = h;
        out_len->width = w;
    }
    for (int i = 0; i < x_h + 1 - fiter_h; i += distance)
        for (int j = 0; j < x_w + 1 - fiter_w; j += distance)
        {
            float count = 0;
            for (int n = i; n < i + fiter_h; n++)
                for (int m = j; m < j + fiter_w; m++)
                {
                    if (n >= x_h || m >= x_w)
                        continue;
                    count += (x[n][m] * t[n - i][m - j]);
                }
            temp[(i / distance)][(j / distance)] = count;
        }
    return temp;
}

float** SAME(float** x, int x_h, int x_w, float** fiter, int fiter_h, int fiter_w, int distance, int fill, Vector2* out_len)

{return nullptr;

}int VALID_out_len(int x_len, int fiter_len, int distance)

{float temp = (float)(x_len - fiter_len) / (float)distance;int t = temp - (int)((float)temp) >= 0.5 ? (int)temp + 1 : (int)temp;t++;return t;

}void show_Weight(Weight& W)

{for (int i = 0; i < W.len.height; i++){for (int j = 0; j < W.len.width; j++){printf("%0.3f ", W.WG[i][j]);}puts("");}

}

void rot90(Weight& x)

{int h = x.len.width, w = x.len.height;x.WG = rot90(x.WG, x.len, true);x.len.width = w;x.len.height = h;

}

float** rot90(float** x, Vector2& x_len, bool release)
{
    float** temp = apply2(x_len.width, x_len.height);
    for (int i = 0; i < x_len.height; i++)
        for (int j = 0; j < x_len.width; j++)
        {
            temp[x_len.width - 1 - j][i] = x[i][j];
        }
    if (release)
    {
        Free2(x, x_len.height);
    }
    return temp;
}

float** rot180(float** x, Vector2& x_len, bool release)
{
    float** temp = apply2(x_len.height, x_len.width);
    for (int i = 0; i < x_len.height; i++)
    {
        for (int j = 0; j < x_len.width; j++)
        {
            temp[x_len.height - 1 - i][x_len.width - 1 - j] = x[i][j];
        }
    }
    if (release)
    {
        Free2(x, x_len.height);
    }
    return temp;
}

void ResizeCImage(CImage& image, int newWidth, int newHeight)
{
    // Create a new CImage with the target size
    CImage resizedImage;
    resizedImage.Create(newWidth, newHeight, image.GetBPP());
    // Draw the original image onto the new one with StretchBlt, scaling it.
    // The device context is fetched once so that it is released exactly once.
    HDC hdc = resizedImage.GetDC();
    SetStretchBltMode(hdc, HALFTONE);
    image.StretchBlt(hdc, 0, 0, newWidth, newHeight);
    // Release the device context of the new image once drawing is done
    resizedImage.ReleaseDC();
    // Move the result back into the original CImage object
    image.Destroy();
    image.Attach(resizedImage.Detach());
    resizedImage.Destroy();
}

// Loads an image, resizes it to 28x28 and returns it as grayscale values in [0, 1].
float** Get_data_by_Mat(char* filepath, Vector2& out_len)
{
    CImage mat;
    mat.Load(filepath);
    ResizeCImage(mat, 28, 28);
    out_len.height = mat.GetHeight();
    out_len.width = mat.GetWidth();
    float** temp = apply2(mat.GetHeight(), mat.GetWidth());
    unsigned char* rgb = (unsigned char*)mat.GetBits();
    int pitch = mat.GetPitch();
    int hui = 0; // gray value
    int XS = mat.GetBPP() / 8; // bytes per pixel
    for (int i = 0; i < out_len.height; i++)
        for (int j = 0; j < out_len.width; j++)
        {
            // Average the three colour channels
            hui = 0;
            for (int kkk = 0; kkk < 3; kkk++)
            {
                hui += *(rgb + (j * XS) + (i * pitch) + kkk);
            }
            hui /= 3;
            temp[i][j] = ((float)hui / (float)255);
            //temp[i][j] = 1 - temp[i][j];
        }
    mat.Destroy();
    return temp;
}

// Loads an image and binarizes it: gray values above threshold become 0, the rest 1.
char** Get_data_by_Mat_char(char* filepath, Vector2& out_len, int threshold)
{
    CImage mat;
    mat.Load(filepath);
    out_len.height = mat.GetHeight();
    out_len.width = mat.GetWidth();
    char** temp = apply2_char(out_len.height, out_len.width);
    unsigned char* rgb = (unsigned char*)mat.GetBits();
    int pitch = mat.GetPitch();
    int hui = 0; // gray value
    int XS = mat.GetBPP() / 8; // bytes per pixel
    for (int i = 0; i < out_len.height; i++)
        for (int j = 0; j < out_len.width; j++)
        {
            hui = 0;
            for (int kkk = 0; kkk < 3; kkk++)
            {
                hui += *(rgb + (j * XS) + (i * pitch) + kkk);
            }
            hui /= 3;
            temp[i][j] = hui > threshold ? 0 : 1;
        }
    mat.Destroy();
    return temp;
}

void Get_data_by_Mat(char* filepath, Weight& w)

{w.WG = Get_data_by_Mat(filepath, w.len);

}Weight Get_data_by_Mat(char* filepath)

{Weight temp;Get_data_by_Mat(filepath, temp);return temp;

}Vector2::Vector2()

{this->height = 0;this->width = 0;

}Vector2::Vector2(char height, int width)

{this->height = height;this->width = width;

}XML::XML(FILE* fp, char* name, int layer)

{this->fp = fp;this->name = name;this->layer = layer;

}void XML::showchild()

{char reader[500];while (fgets(reader, 500, this->fp)){int len = strlen(reader);int lay = 0;for (; lay < len; lay++){if (reader[lay] != '\t')break;}if (lay == this->layer){if (reader[lay + 1] == '/')continue;char show[500];memset(show, 0, 500);for (int i = lay + 1; i < len - 2; i++){if (reader[i] == '>')break;show[i - lay - 1] = reader[i];}puts(show);}}fseek(this->fp, 0, 0);

}void bit::operator=(int x)

{this->B = x;

}float* reshape(float** x, int h, int w)

{float* temp = (float*)malloc(sizeof(float*) * w * h);int count = 0;for (int i = 0; i < h; i++)for (int j = 0; j < w; j++){temp[count++] = x[i][j];}return temp;

}float* reshape(float** x, Vector2& x_len)

{return reshape(x, x_len.height, x_len.width);

}

// Flattens P matrices into one vector; optionally frees the input afterwards.
float* reshape(float*** x, Vector2& x_len, int P, bool releace)
{
    float* temp = apply1(x_len.height * x_len.width * P);
    int c = 0;
    for (int i = 0; i < P; i++)
        for (int n = 0; n < x_len.height; n++)
            for (int m = 0; m < x_len.width; m++)
                temp[c++] = x[i][n][m];
    if (releace)
        Free3(x, P, x_len.height);
    return temp;
}

int* bList(int distance, int max, int* out_len)

{int num = (max % distance != 0);int t = (int)(max / distance);t += num;if (out_len != NULL)*out_len = t;int* out = (int*)malloc(sizeof(int*) * t);for (int i = 0; i < t; i++){out[i] = i * distance;}return out;

}void Free2(float** x, int h)

{for (int i = 0; i < h; i++)free(x[i]);free(x);

}void Free3(float*** x, int p, int h)

{for (int i = 0; i < p; i++)for (int j = 0; j < h; j++)free(x[i][j]);for (int i = 0; i < p; i++)free(x[i]);free(x);

}void kron(float** out, Vector2& out_len, float** inp, Vector2& inp_len, float** filter, Vector2& filter_len)

{for (int i = 0; i < inp_len.height; i++)for (int j = 0; j < inp_len.width; j++){for (int n = i * 2; n < out_len.height && n < ((i * 2) + filter_len.height); n++)for (int m = (j * 2); m < ((j * 2) + filter_len.width) && m < out_len.width; m++){out[n][m] = inp[i][j] * filter[n - (i * 2)][m - (j * 2)] * 0.25;}}

}char** mnist::Toshape2(char* x, int h, int w)

{char** temp = apply2_char(h, w);int c = 0;for (int i = 0; i < h; i++)for (int j = 0; j < w; j++)temp[i][j] = x[c++];return temp;

}char** mnist::Toshape2(char* x, Vector2& x_len)

{return mnist::Toshape2(x, x_len.height, x_len.width);

}void mnist::Toshape2(char** out, char* x, int h, int w)

{int c = 0;for (int i = 0; i < h; i++)for (int j = 0; j < w; j++)out[i][j] = x[c++];

}void mnist::Toshape2(char** out, char* x, Vector2& x_len)

{mnist::Toshape2(out, x, x_len.height, x_len.width);

}

// The pixel bytes are read as unsigned char before scaling to [0, 1];
// a plain (signed) char would turn values above 127 into negative numbers.
float** mnist::Toshape2_F(char* x, int h, int w)
{
    float** temp = apply2(h, w);
    int c = 0;
    for (int i = 0; i < h; i++)
        for (int j = 0; j < w; j++)
            temp[i][j] = ((float)(unsigned char)x[c++] / (float)255);
    return temp;
}

float** mnist::Toshape2_F(char* x, Vector2& x_len)
{
    return mnist::Toshape2_F(x, x_len.height, x_len.width);
}

void mnist::Toshape2(float** out, char* x, int h, int w)
{
    int c = 0;
    for (int i = 0; i < h; i++)
        for (int j = 0; j < w; j++)
            out[i][j] = ((float)(unsigned char)x[c++] / (float)255);
}

void mnist::Toshape2(float** out, char* x, Vector2& x_len)
{
    mnist::Toshape2(out, x, x_len.height, x_len.width);
}

void mnist::Toshape2(float** out, unsigned char* x, int h, int w)

{int c = 0;for (int i = 0; i < h; i++)for (int j = 0; j < w; j++){out[i][j] = ((float)x[c++] / (float)255);}

}void mnist::Toshape2(float** out, unsigned char* x, Vector2& x_len)

{mnist::Toshape2(out, x, x_len.height, x_len.width);

}float*** mnist::Toshape3(float* x, int P, Vector2& x_len)

{float*** temp = apply3(P, x_len.height, x_len.width);int c = 0;for (int i = 0; i < P; i++)for (int j = 0; j < x_len.height; j++)for (int n = 0; n < x_len.width; n++)temp[i][j][n] = x[c++];return temp;

}

// Reverses the byte order of a 32-bit integer (the file headers are big-endian).
int mnist::ReverseInt(int i)
{
    unsigned char ch1, ch2, ch3, ch4;
    ch1 = i & 255;
    ch2 = (i >> 8) & 255;
    ch3 = (i >> 16) & 255;
    ch4 = (i >> 24) & 255;
    return ((int)ch1 << 24) + ((int)ch2 << 16) + ((int)ch3 << 8) + ch4;
}

#pragma once

#include<Windows.h>

#include<atlimage.h>

#define ALPHA 0.01

#define BETA 0.95

#define RATIO 0.2

void ResizeCImage(CImage& image, int newWidth, int newHeight);

struct bit

{unsigned B : 1;void operator=(int x);

};

enum Conv_flag

{
    Valid = 0,
    Same = 1

};

struct Vector2 {
    int height, width;
    Vector2();
    Vector2(char height, int width);

};

class Weight

{

private:
    void apply(int H /*height*/, int W /*width*/);
    void apply(int H /*height*/, int W /*width*/, float (*def)());

public:
    bool fz;
    Vector2 len;
    float** WG;
    ~Weight();
    Weight() { fz = false; }
    Weight(int H /*height*/, int W /*width*/);
    Weight(int H /*height*/, int W /*width*/, float (*def)());
    void re(float* delta, float* inp, float alpha = ALPHA);
    void save(FILE* fp);
    void load(FILE* fp);
    void release();
    void operator>>(Weight& temp);
    void operator+=(Weight& temp);
    //void operator/=(int &temp);
    void operator/=(int temp);
    void operator<<(Weight& temp);
    friend void WD(Weight* WGS, int H, int W, int len);
    friend void WD(Weight* WGS, int H, int W, int len, float (*def)());

};

float zeros();

float ones();

float*** Pool(float*** y, Vector2& inp, int P, Vector2& out); // pooling

float*** Conv(float** X, Vector2& inp, Vector2& out, Weight* W, int W_len); // convolution

void print(float* y, int y_len = 1);

void print(float* y, Vector2& vec);

void print(float** y, Vector2& vec);

void print(char* y, int y_len = 1);

void print(char** y, Vector2& vec);

void print(Weight& w);

void print(Weight* w, int len = 1);

float** apply2(int H /*height*/, int W /*width*/);

float*** apply3(int P, int H /*height*/, int W /*width*/);

char** apply2_char(int H /*height*/, int W /*width*/);

float* apply1(int H);

char* apply1_char(int H);

float Get_rand();

float Sigmoid(float x);

float* Sigmoid(float* x, Weight& w);

float* Sigmoid(float* x, int height);

float ReLU(float x);

float* ReLU(float* x, Weight& w);

float* ReLU(float* x, int height);

float* Softmax(float* x, Weight& w);

float dsigmoid(float x);

float* Softmax(float* x, int height);
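The header only declares `Softmax`; its definition does not appear in this part of the listing. As a rough sketch of what a function with this role usually computes, the snippet below normalizes exponentials so the outputs sum to 1. The name `softmax_sketch`, the `std::vector` return type, and the max-subtraction step for numerical stability are all assumptions made for illustration, not the author's implementation.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Hypothetical helper, NOT the Softmax declared in the header.
// Returns exp(x[i] - max) / sum_j exp(x[j] - max) for each element.
std::vector<float> softmax_sketch(const float* x, int height) {
    std::vector<float> y(height > 0 ? height : 0);
    if (height <= 0) return y;

    // Subtracting the maximum keeps exp() from overflowing on large inputs.
    const float max_v = *std::max_element(x, x + height);

    float sum = 0.0f;
    for (int i = 0; i < height; ++i) {
        y[i] = std::exp(x[i] - max_v);
        sum += y[i];
    }
    for (int i = 0; i < height; ++i) {
        y[i] /= sum;
    }
    return y;
}
```

Subtracting the maximum does not change the result mathematically, but it means the largest exponent is always `exp(0)`, so inputs such as 1000 no longer produce `inf / inf`.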

float* FXCB_err(Weight& w, float* delta);

float* Delta1(float* y, float* e, Weight& w);

float* Delta2(float* v, float* e, Weight& w);

float* dot(Weight& W /*weights*/, float* inp /*input data*/, int* len = NULL);

char* randperm(int max, int count);

void Dropout(float* y, float ratio, Weight& w);

float** conv2(float** x, Vector2& x_len, float** fiter, Vector2& fiter_len, Vector2* out_len = NULL, int flag = Valid, int distance = 1, int fill = 0);

float** VALID(float** x, int x_h, int x_w, float** fiter, int fiter_h, int fiter_w, int distance, Vector2* out_len = NULL);

float** SAME(float** x, int x_h, int x_w, float** fiter, int fiter_h, int fiter_w, int distance, int fill, Vector2* out_len = NULL);

int VALID_out_len(int x_len, int fiter_len, int distance);
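`VALID_out_len` is only declared here. For a "valid" convolution (no padding) the output length along one axis is normally `(x_len - filter_len) / stride + 1`, and the sketch below assumes that is what the function returns, with `distance` playing the role of the stride. The name `valid_out_len_sketch` and the guard for invalid arguments are hypothetical.

```cpp
// Hypothetical stand-in for VALID_out_len, assuming `distance` is the stride.
// Returns how many filter positions fit along one axis without padding.
int valid_out_len_sketch(int x_len, int filter_len, int stride) {
    if (stride <= 0 || filter_len <= 0 || filter_len > x_len) {
        return 0; // no valid position (assumed convention for bad arguments)
    }
    // Integer division floors, which drops a trailing partial window.
    return (x_len - filter_len) / stride + 1;
}
```

The comments in the training code note a convolution output of size 20 for a 28 x 28 input and a pooled size of 10. Under this formula that would be consistent with a 9-wide filter at stride 1 (`(28 - 9) / 1 + 1 = 20`) followed by 2 x 2 pooling at stride 2 (`(20 - 2) / 2 + 1 = 10`); the filter width of 9 is inferred, not stated in the listing.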

void show_Weight(Weight& W);

void rot90(Weight& x);

float** rot90(float** x, Vector2& x_len, bool release = false);

float** rot180(float** x, Vector2& x_len, bool release = false);

float** Get_data_by_Mat(char* filepath, Vector2& out_len);

char** Get_data_by_Mat_char(char* filepath, Vector2& out_len, int threshold = 127);

void Get_data_by_Mat(char* filepath, Weight& w);

Weight Get_data_by_Mat(char* filepath);

float* reshape(float** x, int h, int w);

float* reshape(float** x, Vector2& x_len);

float* reshape(float*** x, Vector2& x_len, int P, bool release = false);

namespace mnist

{
    char** Toshape2(char* x, int h, int w);
    char** Toshape2(char* x, Vector2& x_len);
    void Toshape2(char** out, char* x, int h, int w);
    void Toshape2(char** out, char* x, Vector2& x_len);
    float** Toshape2_F(char* x, int h, int w);
    float** Toshape2_F(char* x, Vector2& x_len);
    void Toshape2(float** out, char* x, int h, int w);
    void Toshape2(float** out, char* x, Vector2& x_len);
    void Toshape2(float** out, unsigned char* x, int h, int w);
    void Toshape2(float** out, unsigned char* x, Vector2& x_len);
    float*** Toshape3(float* x, int P, Vector2& x_len);
    int ReverseInt(int i);

}
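`ReverseInt` is the kind of helper typically needed because MNIST IDX files store their 32-bit header fields in big-endian order, while x86 machines are little-endian. The sketch below shows the same byte swap on an unsigned type, which avoids shifting a value into the sign bit of an `int`. The name `reverse_u32` is hypothetical and the function is an illustration, not a replacement taken from the original code.

```cpp
#include <cstdint>

// Hypothetical unsigned variant of the byte swap performed by ReverseInt.
// Byte 0 <-> byte 3 and byte 1 <-> byte 2.
std::uint32_t reverse_u32(std::uint32_t v) {
    return ((v & 0x000000FFu) << 24) |
           ((v & 0x0000FF00u) << 8)  |
           ((v & 0x00FF0000u) >> 8)  |
           ((v & 0xFF000000u) >> 24);
}
```

For example, the MNIST image file starts with the bytes `00 00 08 03`. Read directly into a 32-bit integer on a little-endian machine, that becomes `0x03080000`; after swapping it is `0x00000803`, i.e. 2051, the magic number for image files.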

struct XML

{
    char* name;
    FILE* fp;
    int layer;
    XML(FILE* fp, char* name, int layer);
    void showchild();

};

template<class T>

class hot_one

{
    bool fz; // true once `one` has been allocated

public:
    T* one;
    int num;
    int count;

    hot_one() { this->fz = false; }

    hot_one(int type_num, int set_num = 0)
    {
        type_num = type_num <= 0 ? 1 : type_num;
        if (set_num >= type_num)
            set_num = 0;
        this->count = type_num;
        this->fz = true;
        this->num = set_num;
        this->one = new T[type_num]{ 0 };
        this->one[set_num] = 1;
    }

    // Move the single 1 to index set_num.
    void re(int set_num)
    {
        this->one[num] = 0;
        this->num = set_num;
        this->one[this->num] = 1;
    }

    void release()
    {
        if (this->fz)
            delete[] one; // allocated with new T[], so it must be freed with delete[]
        this->fz = false;
    }

    ~hot_one()
    {
        this->release();
    }

};

int* bList(int distance, int max, int* out_len);

void Free2(float** x, int h);

void Free3(float*** x, int p, int h);

void kron(float** out, Vector2& out_len, float** inp, Vector2& inp_len, float** filter,Vector2& filter_len);相关文章:

C++卷积神经网络

C卷积神经网络 #include"TP_NNW.h" #include<iostream> #pragma warning(disable:4996) using namespace std; using namespace mnist;float* SGD(Weight* W1, Weight& W5, Weight& Wo, float** X) {Vector2 ve(28, 28);float* temp new float[10];V…...

go 读取yaml映射到struct

安装 go get gopkg.in/yaml.v3创建yaml Mysql:Host: 192.168.214.134Port: 3306UserName: wwPassword: wwDatabase: go_dbCharset: utf8mb4ParseTime: trueLoc: LocalListValue:- haha- test- vv JWTSecret: nidaye定义结构体 type Mysql struct {Host string yaml:&…...

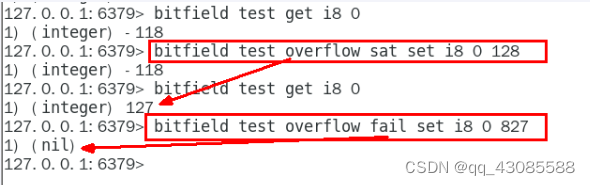

Redis 10 大数据类型

1. which 10 1. redis字符串 2. redis 列表 3. redis哈希表 4. redis集合 5. redis有序集合 6. redis地理空间 7. redis基数统计 8. redis位图 9. redis位域 10. redis流 2. 获取redis常见操作指令 官网英文:https://redis.io/commands 官网中文:https:/…...

优化生产流程:数字化工厂中的OPC UA分布式IO模块应用

背景 近年来,为了提升在全球范围内的竞争力,制造企业希望自己工厂的机器之间协同性更强,自动化设备采集到的数据能够发挥更大的价值,越来越多的传统型工业制造企业开始加入数字化工厂建设的行列,实现智能制造。 数字化…...

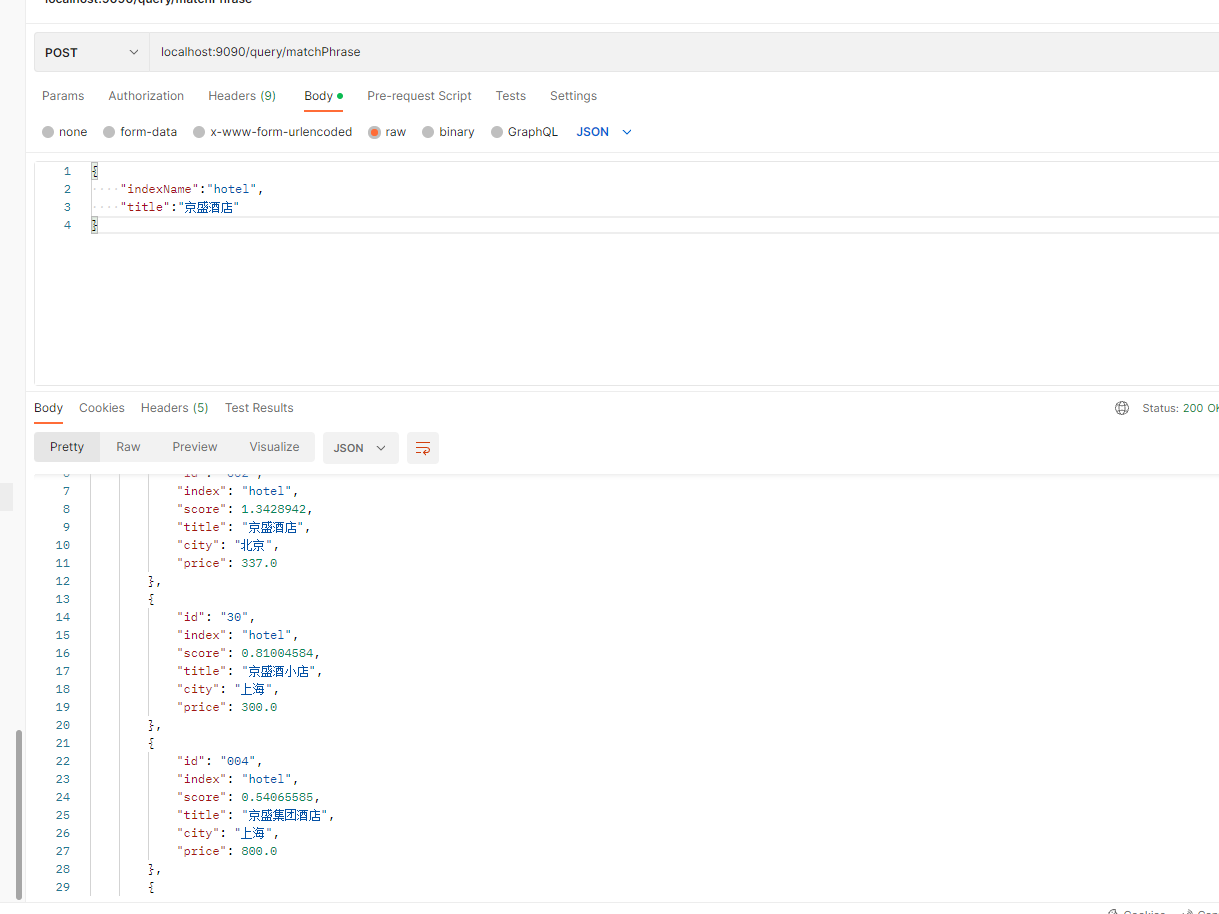

Elasticsearch(十四)搜索---搜索匹配功能⑤--全文搜索

一、前言 不同于之前的term。terms等结构化查询,全文搜索首先对查询词进行分析,然后根据查询词的分词结果构建查询。这里所说的全文指的是文本类型数据(text类型),默认的数据形式是人类的自然语言,如对话内容、图书名…...

已解决Gradle错误:“Unable to load class ‘org.gradle.api.plugins.MavenPlugin‘”

🌷🍁 博主猫头虎 带您 Go to New World.✨🍁 🦄 博客首页——猫头虎的博客🎐 🐳《面试题大全专栏》 文章图文并茂🦕生动形象🦖简单易学!欢迎大家来踩踩~🌺 &a…...

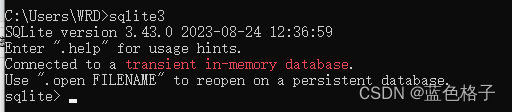

windows中安装sqlite

1. 下载文件 官网下载地址:https://www.sqlite.org/download.html 下载sqlite-dll-win64-x64-3430000.zip和sqlite-tools-win32-x86-3430000.zip文件(32位系统下载sqlite-dll-win32-x86-3430000.zip)。 2. 安装过程 解压文件 解压上一步…...

前端面试:【系统设计与架构】前端架构模式的演进

前端架构模式在现代Web开发中扮演着关键角色,它们帮助我们组织和管理前端应用的复杂性。本文将介绍一些常见的前端架构模式,包括MVC、MVVM、Flux和Redux,以及它们的演进和应用。 1. MVC(Model-View-Controller)&#x…...

【CSS】em单位的理解

1、em单位的定义 MDN的解释:它是相对于父元素的字体大小的一个单位。 例如:父元素font-size:16px;子元素的font-size:2em(也就是32px) 注:有一个误区,虽然他是一个相对…...

无涯教程-Python机器学习 - Based on human supervision函数

Python机器学习 中的 Based on human s - 无涯教程网无涯教程网提供https://www.learnfk.com/python-machine-learning/machine-learning-with-python-based-on-human-supervision.html...

【滑动窗口】leetcode209:长度最小的子数组

一.题目描述 长度最小的子数组 二.思路分析 题目要求:找出长度最小的符合要求的连续子数组,这个要求就是子数组的元素之和大于等于target。 如何确定一个连续的子数组?确定它的左右边界即可。如此一来,我们最先想到的就是暴力枚…...

C++ STL unordered_map

map hashmap 文章目录 Map、HashMap概念map、hashmap 的区别引用头文件初始化赋值unordered_map 自定义键值类型unordered_map 的 value 自定义数据类型遍历常用方法插入查找 key修改 value删除元素清空元素 unordered_map 中每一个元素都是一个 key-value 对,数据…...

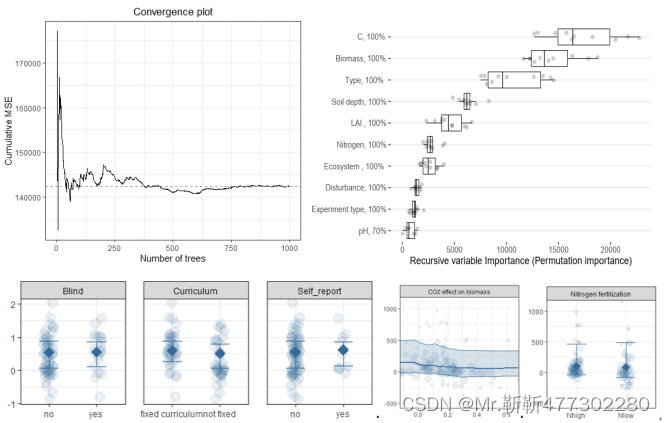

全流程R语言Meta分析核心技术应用

Meta分析是针对某一科研问题,根据明确的搜索策略、选择筛选文献标准、采用严格的评价方法,对来源不同的研究成果进行收集、合并及定量统计分析的方法,最早出现于“循证医学”,现已广泛应用于农林生态,资源环境等方面。…...

Go并发可视化解释 - Select语句

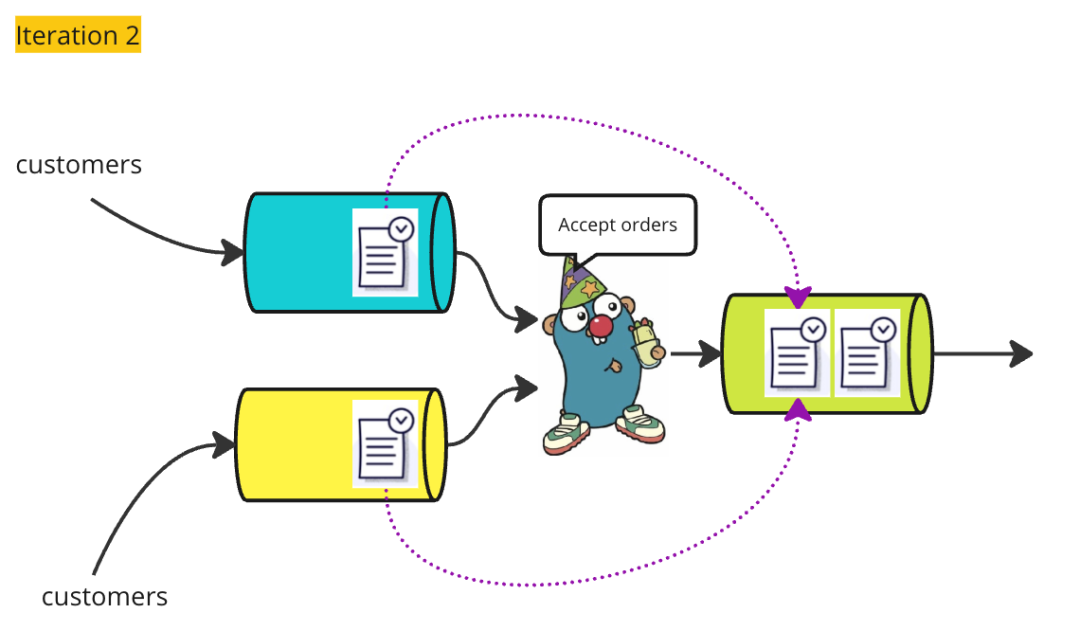

昨天,我发布了一篇文章,用可视化的方式解释了Golang中通道(Channel)的工作原理。如果你对通道的理解仍然存在困难,最好呢请在阅读本文之前先查看那篇文章。作为一个快速的复习:Partier、Candier 和 Stringe…...

在线SM4(国密)加密解密工具

在线SM4(国密)加密解密工具...

golang的类型断言语法

例子1 在 Go 中,err.(interface{ Timeout() bool }) 是一个类型断言语法。它用于检查一个接口类型的变量 err 是否实现了一个带有 Timeout() bool 方法的接口。 具体而言,该类型断言的语法如下: if v, ok : err.(interface{ Timeout() boo…...

提速换挡 | 至真科技用技术打破业务壁垒,助力出海破局增长

各个行业都在谈出海,但真正成功的又有多少? 李宁出海十年海外业务收入占比仅有1.3%,走出去战略基本失败。 京东出海业务磕磕绊绊,九年过去国际化业务至今在财报上都不配拥有姓名。 几百万砸出去买量,一点水花都没有…...

第3篇:vscode搭建esp32 arduino开发环境

第1篇:Arduino与ESP32开发板的安装方法 第2篇:ESP32 helloword第一个程序示范点亮板载LED 1.下载vscode并安装 https://code.visualstudio.com/ 运行VSCodeUserSetup-x64-1.80.1.exe 2.点击扩展,搜索arduino,并点击安装 3.点击扩展设置,配置arduino…...

Apache Shiro是什么

特点 Apache Shiro是一个强大且易用的Java安全框架,用于身份验证、授权、会话管理和加密。它的设计目标是简化应用程序的安全性实现,使开发人员能够更轻松地处理各种安全性问题,从而提高应用程序的安全性和可维护性。下面是一些Apache Shiro的关键特点和概念: 特点和概念…...

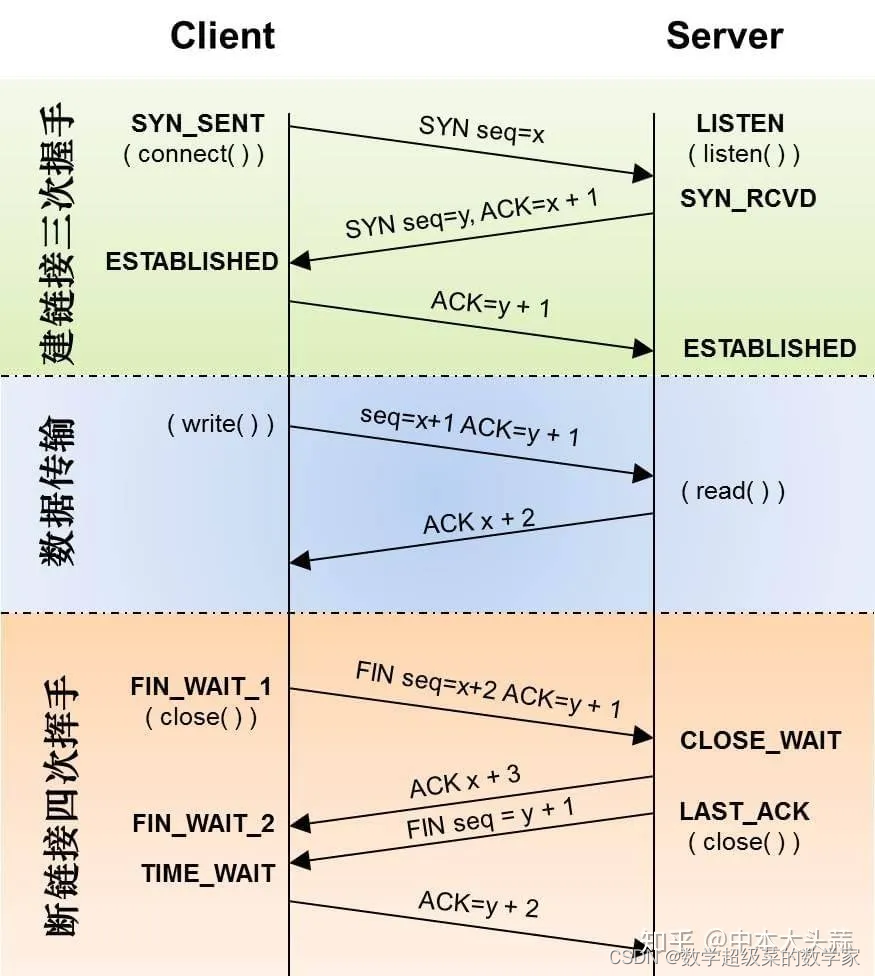

Socket基本原理

一、简单介绍 Socket,又称套接字,是Linux跨进程通信(IPC,Inter Process Communication)方式的一种。相比于其他IPC方式,Socket牛逼在于可做到同一台主机内跨进程通信,不同主机间的跨进程通信。…...

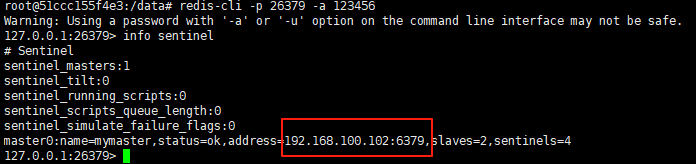

使用docker在3台服务器上搭建基于redis 6.x的一主两从三台均是哨兵模式

一、环境及版本说明 如果服务器已经安装了docker,则忽略此步骤,如果没有安装,则可以按照一下方式安装: 1. 在线安装(有互联网环境): 请看我这篇文章 传送阵>> 点我查看 2. 离线安装(内网环境):请看我这篇文章 传送阵>> 点我查看 说明:假设每台服务器已…...

51c自动驾驶~合集58

我自己的原文哦~ https://blog.51cto.com/whaosoft/13967107 #CCA-Attention 全局池化局部保留,CCA-Attention为LLM长文本建模带来突破性进展 琶洲实验室、华南理工大学联合推出关键上下文感知注意力机制(CCA-Attention),…...

树莓派超全系列教程文档--(61)树莓派摄像头高级使用方法

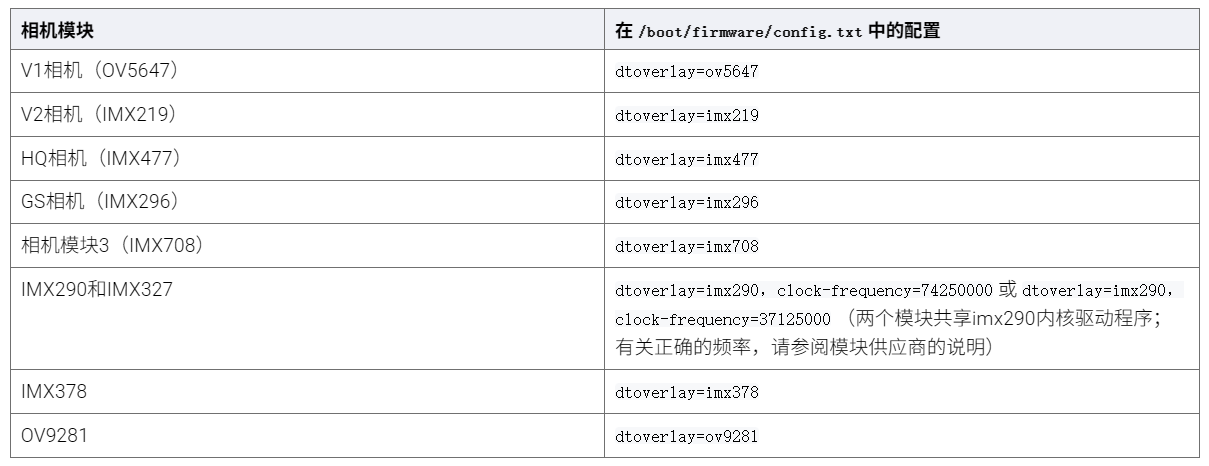

树莓派摄像头高级使用方法 配置通过调谐文件来调整相机行为 使用多个摄像头安装 libcam 和 rpicam-apps依赖关系开发包 文章来源: http://raspberry.dns8844.cn/documentation 原文网址 配置 大多数用例自动工作,无需更改相机配置。但是,一…...

2025年能源电力系统与流体力学国际会议 (EPSFD 2025)

2025年能源电力系统与流体力学国际会议(EPSFD 2025)将于本年度在美丽的杭州盛大召开。作为全球能源、电力系统以及流体力学领域的顶级盛会,EPSFD 2025旨在为来自世界各地的科学家、工程师和研究人员提供一个展示最新研究成果、分享实践经验及…...

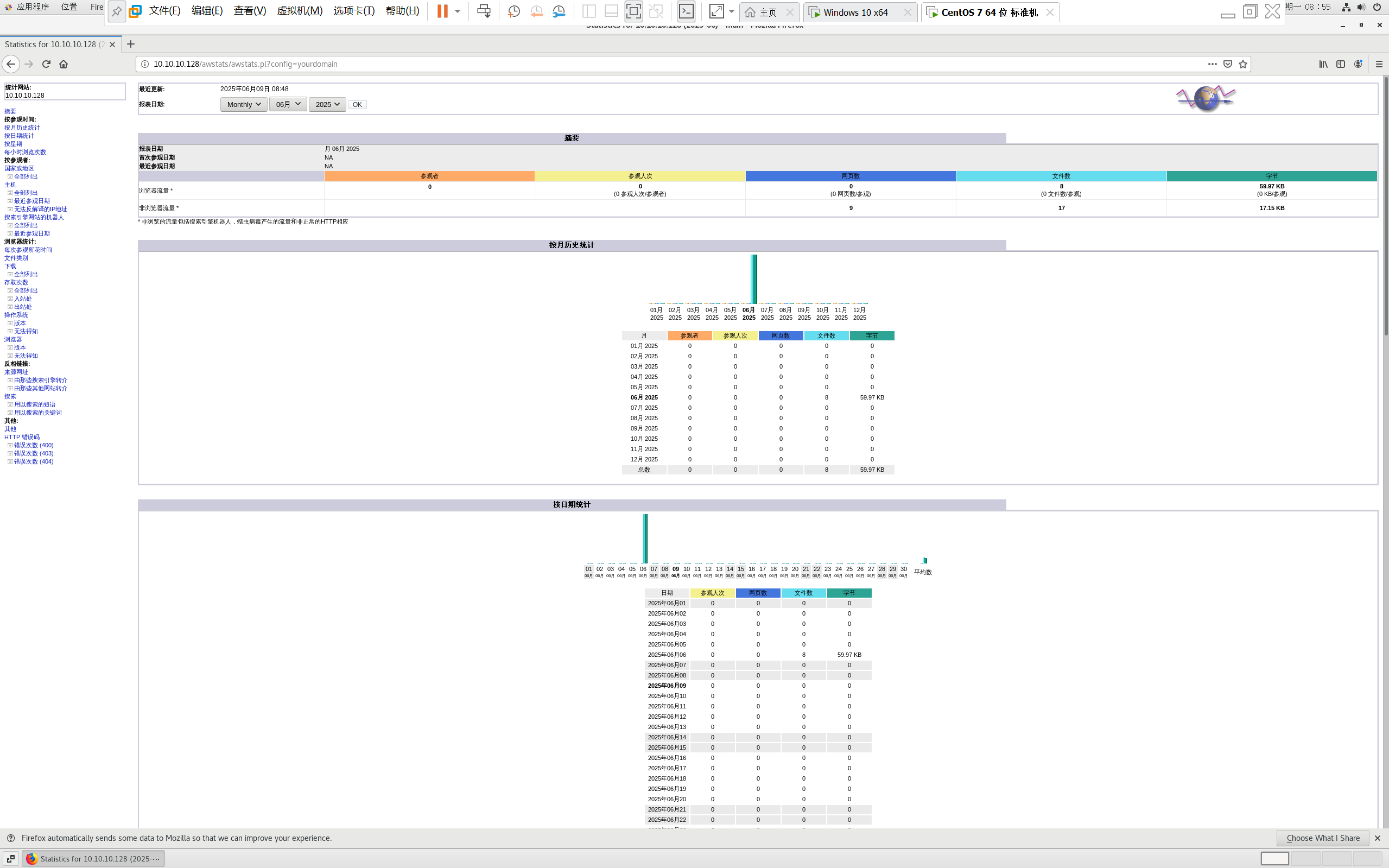

centos 7 部署awstats 网站访问检测

一、基础环境准备(两种安装方式都要做) bash # 安装必要依赖 yum install -y httpd perl mod_perl perl-Time-HiRes perl-DateTime systemctl enable httpd # 设置 Apache 开机自启 systemctl start httpd # 启动 Apache二、安装 AWStats࿰…...

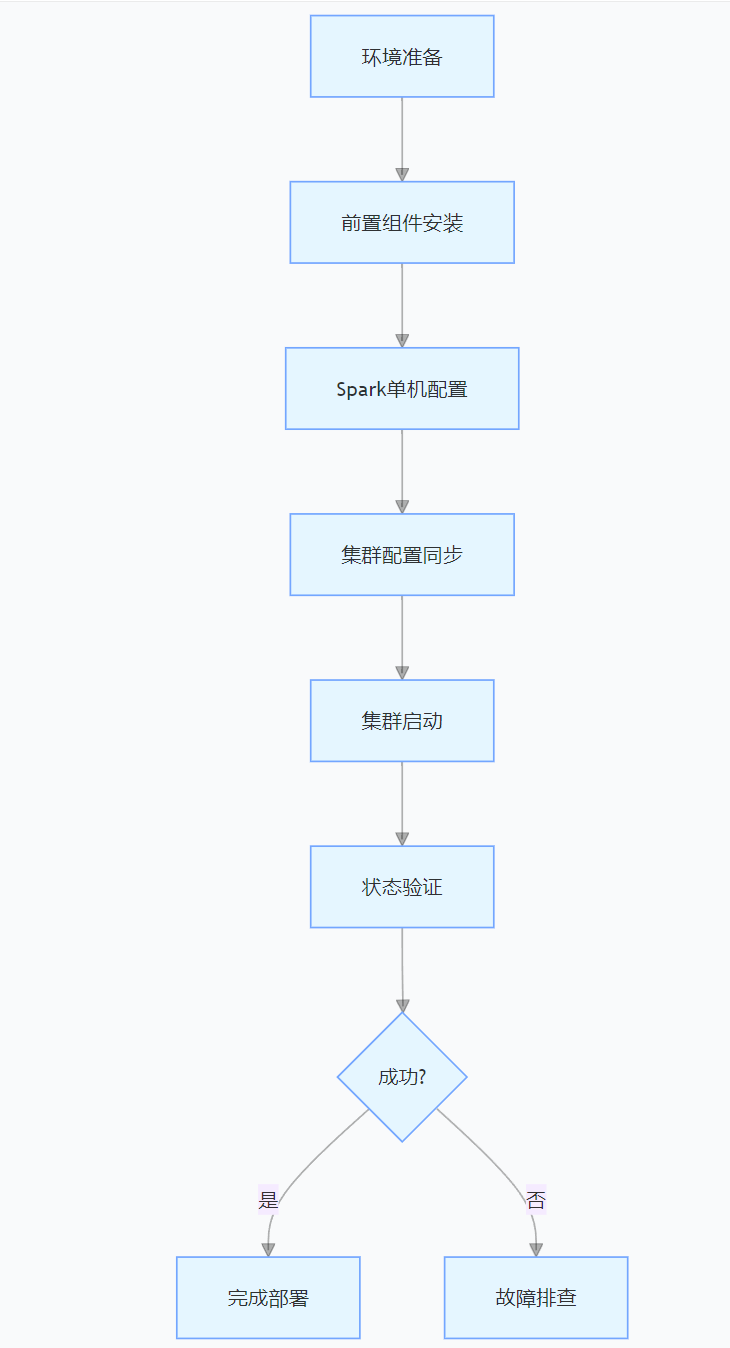

CentOS下的分布式内存计算Spark环境部署

一、Spark 核心架构与应用场景 1.1 分布式计算引擎的核心优势 Spark 是基于内存的分布式计算框架,相比 MapReduce 具有以下核心优势: 内存计算:数据可常驻内存,迭代计算性能提升 10-100 倍(文档段落:3-79…...

ETLCloud可能遇到的问题有哪些?常见坑位解析

数据集成平台ETLCloud,主要用于支持数据的抽取(Extract)、转换(Transform)和加载(Load)过程。提供了一个简洁直观的界面,以便用户可以在不同的数据源之间轻松地进行数据迁移和转换。…...

《基于Apache Flink的流处理》笔记

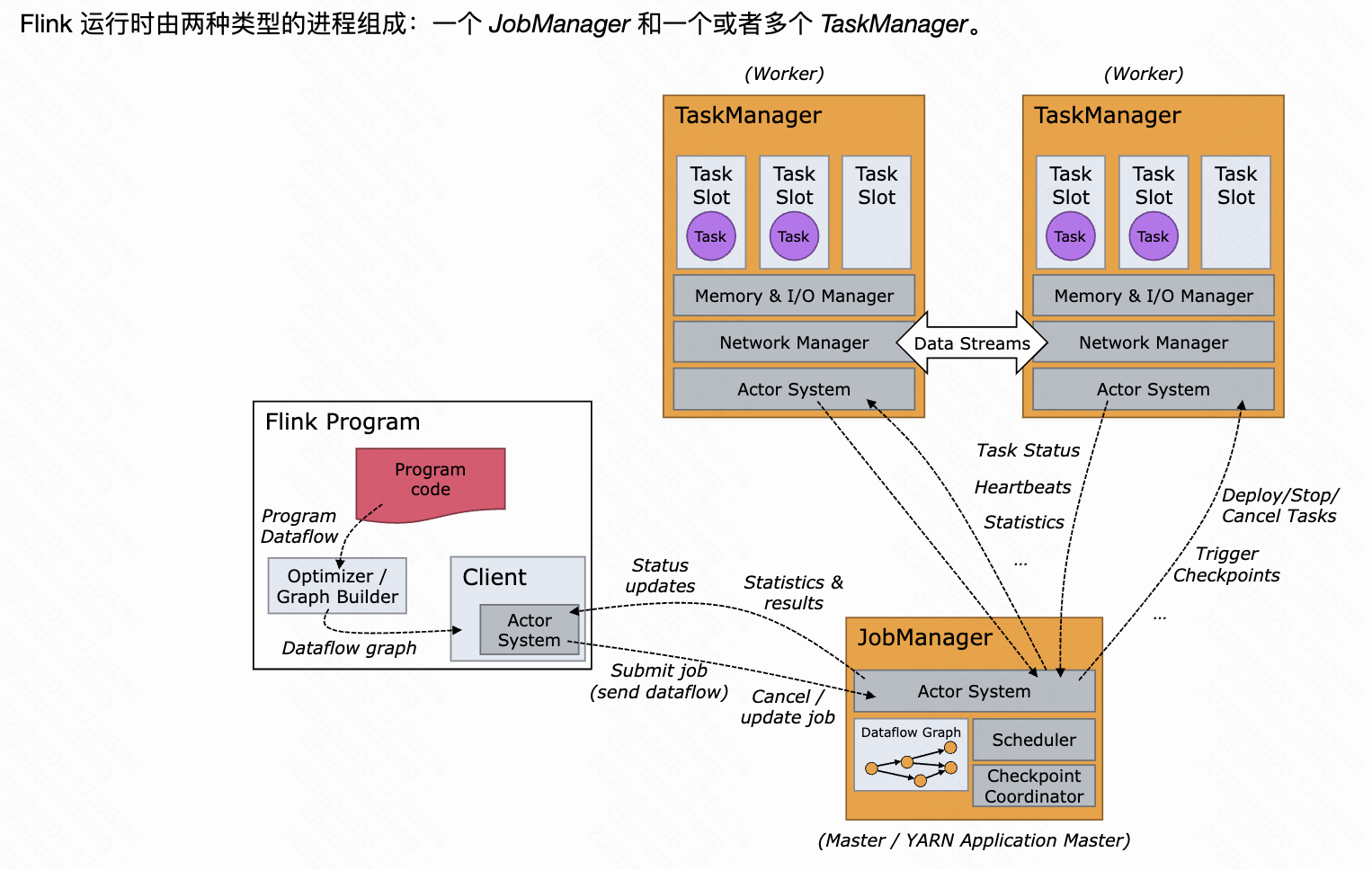

思维导图 1-3 章 4-7章 8-11 章 参考资料 源码: https://github.com/streaming-with-flink 博客 https://flink.apache.org/bloghttps://www.ververica.com/blog 聚会及会议 https://flink-forward.orghttps://www.meetup.com/topics/apache-flink https://n…...

springboot整合VUE之在线教育管理系统简介

可以学习到的技能 学会常用技术栈的使用 独立开发项目 学会前端的开发流程 学会后端的开发流程 学会数据库的设计 学会前后端接口调用方式 学会多模块之间的关联 学会数据的处理 适用人群 在校学生,小白用户,想学习知识的 有点基础,想要通过项…...

C++:多态机制详解

目录 一. 多态的概念 1.静态多态(编译时多态) 二.动态多态的定义及实现 1.多态的构成条件 2.虚函数 3.虚函数的重写/覆盖 4.虚函数重写的一些其他问题 1).协变 2).析构函数的重写 5.override 和 final关键字 1&#…...