yolov8剪枝实践

本文使用的剪枝库是torch-pruning ,实验了该库的三个剪枝算法GroupNormPruner、BNScalePruner和GrowingRegPruner。

安装使用

- 安装依赖库

pip install torch-pruning

- 把 https://github.com/VainF/Torch-Pruning/blob/master/examples/yolov8/yolov8_pruning.py,文件拷贝到yolov8的根目录下。或者使用我的剪枝代码,在原有的基础上稍作修改,保存了不同剪枝阶段的模型。

# This code is adapted from Issue [#147](https://github.com/VainF/Torch-Pruning/issues/147), implemented by @Hyunseok-Kim0.

import argparse

import math

import os

os.environ["CUDA_VISIBLE_DEVICES"] = "7"

from copy import deepcopy

from datetime import datetime

from pathlib import Path

from typing import List, Unionimport numpy as np

import torch

import torch.nn as nn

from matplotlib import pyplot as plt

from ultralytics import YOLO, __version__

from ultralytics.nn.modules import Detect, C2f, Conv, Bottleneck

from ultralytics.nn.tasks import attempt_load_one_weight

from ultralytics.yolo.engine.model import TASK_MAP

from ultralytics.yolo.engine.trainer import BaseTrainer

from ultralytics.yolo.utils import yaml_load, LOGGER, RANK, DEFAULT_CFG_DICT, DEFAULT_CFG_KEYS

from ultralytics.yolo.utils.checks import check_yaml

from ultralytics.yolo.utils.torch_utils import initialize_weights, de_parallelimport torch_pruning as tpdef save_pruning_performance_graph(x, y1, y2, y3):"""Draw performance change graphParameters----------x : ListParameter numbers of all pruning stepsy1 : ListmAPs after fine-tuning of all pruning stepsy2 : ListMACs of all pruning stepsy3 : ListmAPs after pruning (not fine-tuned) of all pruning stepsReturns-------"""try:plt.style.use("ggplot")except:passx, y1, y2, y3 = np.array(x), np.array(y1), np.array(y2), np.array(y3)y2_ratio = y2 / y2[0]# create the figure and the axis objectfig, ax = plt.subplots(figsize=(8, 6))# plot the pruned mAP and recovered mAPax.set_xlabel('Pruning Ratio')ax.set_ylabel('mAP')ax.plot(x, y1, label='recovered mAP')ax.scatter(x, y1)ax.plot(x, y3, color='tab:gray', label='pruned mAP')ax.scatter(x, y3, color='tab:gray')# create a second axis that shares the same x-axisax2 = ax.twinx()# plot the second set of dataax2.set_ylabel('MACs')ax2.plot(x, y2_ratio, color='tab:orange', label='MACs')ax2.scatter(x, y2_ratio, color='tab:orange')# add a legendlines, labels = ax.get_legend_handles_labels()lines2, labels2 = ax2.get_legend_handles_labels()ax2.legend(lines + lines2, labels + labels2, loc='best')ax.set_xlim(105, -5)ax.set_ylim(0, max(y1) + 0.05)ax2.set_ylim(0.05, 1.05)# calculate the highest and lowest points for each set of datamax_y1_idx = np.argmax(y1)min_y1_idx = np.argmin(y1)max_y2_idx = np.argmax(y2)min_y2_idx = np.argmin(y2)max_y1 = y1[max_y1_idx]min_y1 = y1[min_y1_idx]max_y2 = y2_ratio[max_y2_idx]min_y2 = y2_ratio[min_y2_idx]# add text for the highest and lowest values near the pointsax.text(x[max_y1_idx], max_y1 - 0.05, f'max mAP = {max_y1:.2f}', fontsize=10)ax.text(x[min_y1_idx], min_y1 + 0.02, f'min mAP = {min_y1:.2f}', fontsize=10)ax2.text(x[max_y2_idx], max_y2 - 0.05, f'max MACs = {max_y2 * y2[0] / 1e9:.2f}G', fontsize=10)ax2.text(x[min_y2_idx], min_y2 + 0.02, f'min MACs = {min_y2 * y2[0] / 1e9:.2f}G', fontsize=10)plt.title('Comparison of mAP and MACs with Pruning Ratio')plt.savefig('pruning_perf_change.png')def infer_shortcut(bottleneck):c1 = bottleneck.cv1.conv.in_channelsc2 = bottleneck.cv2.conv.out_channelsreturn c1 == c2 and hasattr(bottleneck, 'add') and bottleneck.addclass C2f_v2(nn.Module):# CSP Bottleneck with 2 convolutionsdef __init__(self, c1, c2, n=1, shortcut=False, g=1, e=0.5): # ch_in, ch_out, number, shortcut, groups, expansionsuper().__init__()self.c = int(c2 * e) # hidden channelsself.cv0 = Conv(c1, self.c, 1, 1)self.cv1 = Conv(c1, self.c, 1, 1)self.cv2 = Conv((2 + n) * self.c, c2, 1) # optional act=FReLU(c2)self.m = nn.ModuleList(Bottleneck(self.c, self.c, shortcut, g, k=((3, 3), (3, 3)), e=1.0) for _ in range(n))def forward(self, x):# y = list(self.cv1(x).chunk(2, 1))y = [self.cv0(x), self.cv1(x)]y.extend(m(y[-1]) for m in self.m)return self.cv2(torch.cat(y, 1))def transfer_weights(c2f, c2f_v2):c2f_v2.cv2 = c2f.cv2c2f_v2.m = c2f.mstate_dict = c2f.state_dict()state_dict_v2 = c2f_v2.state_dict()# Transfer cv1 weights from C2f to cv0 and cv1 in C2f_v2old_weight = state_dict['cv1.conv.weight']half_channels = old_weight.shape[0] // 2state_dict_v2['cv0.conv.weight'] = old_weight[:half_channels]state_dict_v2['cv1.conv.weight'] = old_weight[half_channels:]# Transfer cv1 batchnorm weights and buffers from C2f to cv0 and cv1 in C2f_v2for bn_key in ['weight', 'bias', 'running_mean', 'running_var']:old_bn = state_dict[f'cv1.bn.{bn_key}']state_dict_v2[f'cv0.bn.{bn_key}'] = old_bn[:half_channels]state_dict_v2[f'cv1.bn.{bn_key}'] = old_bn[half_channels:]# Transfer remaining weights and buffersfor key in state_dict:if not key.startswith('cv1.'):state_dict_v2[key] = state_dict[key]# Transfer all non-method attributesfor attr_name in dir(c2f):attr_value = getattr(c2f, attr_name)if not callable(attr_value) and '_' not in attr_name:setattr(c2f_v2, attr_name, attr_value)c2f_v2.load_state_dict(state_dict_v2)def replace_c2f_with_c2f_v2(module):for name, child_module in module.named_children():if isinstance(child_module, C2f):# Replace C2f with C2f_v2 while preserving its parametersshortcut = infer_shortcut(child_module.m[0])c2f_v2 = C2f_v2(child_module.cv1.conv.in_channels, child_module.cv2.conv.out_channels,n=len(child_module.m), shortcut=shortcut,g=child_module.m[0].cv2.conv.groups,e=child_module.c / child_module.cv2.conv.out_channels)transfer_weights(child_module, c2f_v2)setattr(module, name, c2f_v2)else:replace_c2f_with_c2f_v2(child_module)def save_model_v2(self: BaseTrainer):"""Disabled half precision saving. originated from ultralytics/yolo/engine/trainer.py"""ckpt = {'epoch': self.epoch,'best_fitness': self.best_fitness,'model': deepcopy(de_parallel(self.model)),'ema': deepcopy(self.ema.ema),'updates': self.ema.updates,'optimizer': self.optimizer.state_dict(),'train_args': vars(self.args), # save as dict'date': datetime.now().isoformat(),'version': __version__}# Save last, best and deletetorch.save(ckpt, self.last)if self.best_fitness == self.fitness:torch.save(ckpt, self.best)if (self.epoch > 0) and (self.save_period > 0) and (self.epoch % self.save_period == 0):torch.save(ckpt, self.wdir / f'epoch{self.epoch}.pt')del ckptdef final_eval_v2(self: BaseTrainer):"""originated from ultralytics/yolo/engine/trainer.py"""for f in self.last, self.best:if f.exists():strip_optimizer_v2(f) # strip optimizersif f is self.best:LOGGER.info(f'\nValidating {f}...')self.metrics = self.validator(model=f)self.metrics.pop('fitness', None)self.run_callbacks('on_fit_epoch_end')def strip_optimizer_v2(f: Union[str, Path] = 'best.pt', s: str = '') -> None:"""Disabled half precision saving. originated from ultralytics/yolo/utils/torch_utils.py"""x = torch.load(f, map_location=torch.device('cpu'))args = {**DEFAULT_CFG_DICT, **x['train_args']} # combine model args with default args, preferring model argsif x.get('ema'):x['model'] = x['ema'] # replace model with emafor k in 'optimizer', 'ema', 'updates': # keysx[k] = Nonefor p in x['model'].parameters():p.requires_grad = Falsex['train_args'] = {k: v for k, v in args.items() if k in DEFAULT_CFG_KEYS} # strip non-default keys# x['model'].args = x['train_args']torch.save(x, s or f)mb = os.path.getsize(s or f) / 1E6 # filesizeLOGGER.info(f"Optimizer stripped from {f},{f' saved as {s},' if s else ''} {mb:.1f}MB")def train_v2(self: YOLO, pruning=False, **kwargs):"""Disabled loading new model when pruning flag is set. originated from ultralytics/yolo/engine/model.py"""self._check_is_pytorch_model()if self.session: # Ultralytics HUB sessionif any(kwargs):LOGGER.warning('WARNING ⚠️ using HUB training arguments, ignoring local training arguments.')kwargs = self.session.train_argsoverrides = self.overrides.copy()overrides.update(kwargs)if kwargs.get('cfg'):LOGGER.info(f"cfg file passed. Overriding default params with {kwargs['cfg']}.")overrides = yaml_load(check_yaml(kwargs['cfg']))overrides['mode'] = 'train'if not overrides.get('data'):raise AttributeError("Dataset required but missing, i.e. pass 'data=coco128.yaml'")if overrides.get('resume'):overrides['resume'] = self.ckpt_pathself.task = overrides.get('task') or self.taskself.trainer = TASK_MAP[self.task][1](overrides=overrides, _callbacks=self.callbacks)if not pruning:if not overrides.get('resume'): # manually set model only if not resumingself.trainer.model = self.trainer.get_model(weights=self.model if self.ckpt else None, cfg=self.model.yaml)self.model = self.trainer.modelelse:# pruning modeself.trainer.pruning = Trueself.trainer.model = self.model# replace some functions to disable half precision savingself.trainer.save_model = save_model_v2.__get__(self.trainer)self.trainer.final_eval = final_eval_v2.__get__(self.trainer)self.trainer.hub_session = self.session # attach optional HUB sessionself.trainer.train()# Update model and cfg after trainingif RANK in (-1, 0):self.model, _ = attempt_load_one_weight(str(self.trainer.best))self.overrides = self.model.argsself.metrics = getattr(self.trainer.validator, 'metrics', None)def prune(args):# load trained yolov8 modelbase_name = 'prune/' + str(datetime.now()) + '/'model = YOLO(args.model)model.__setattr__("train_v2", train_v2.__get__(model))pruning_cfg = yaml_load(check_yaml(args.cfg))batch_size = pruning_cfg['batch']# use coco128 dataset for 10 epochs fine-tuning each pruning iteration step# this part is only for sample code, number of epochs should be included in config filepruning_cfg['data'] = "./ultralytics/datasets/soccer.yaml"pruning_cfg['epochs'] = 4model.model.train()replace_c2f_with_c2f_v2(model.model)initialize_weights(model.model) # set BN.eps, momentum, ReLU.inplacefor name, param in model.model.named_parameters():param.requires_grad = Trueexample_inputs = torch.randn(1, 3, pruning_cfg["imgsz"], pruning_cfg["imgsz"]).to(model.device)macs_list, nparams_list, map_list, pruned_map_list = [], [], [], []base_macs, base_nparams = tp.utils.count_ops_and_params(model.model, example_inputs)# do validation before pruning modelpruning_cfg['name'] = base_name+f"baseline_val"pruning_cfg['batch'] = 128validation_model = deepcopy(model)metric = validation_model.val(**pruning_cfg)init_map = metric.box.mapmacs_list.append(base_macs)nparams_list.append(100)map_list.append(init_map)pruned_map_list.append(init_map)print(f"Before Pruning: MACs={base_macs / 1e9: .5f} G, #Params={base_nparams / 1e6: .5f} M, mAP={init_map: .5f}")# prune same ratio of filter based on initial sizech_sparsity = 1 - math.pow((1 - args.target_prune_rate), 1 / args.iterative_steps)for i in range(args.iterative_steps):model.model.train()for name, param in model.model.named_parameters():param.requires_grad = Trueignored_layers = []unwrapped_parameters = []for m in model.model.modules():if isinstance(m, (Detect,)):ignored_layers.append(m)example_inputs = example_inputs.to(model.device)pruner = tp.pruner.GroupNormPruner(model.model,example_inputs,importance=tp.importance.GroupNormImportance(), # L2 norm pruning,iterative_steps=1,ch_sparsity=ch_sparsity,ignored_layers=ignored_layers,unwrapped_parameters=unwrapped_parameters)# Test regularization#output = model.model(example_inputs)#(output[0].sum() + sum([o.sum() for o in output[1]])).backward()#pruner.regularize(model.model)pruner.step()# pre fine-tuning validationpruning_cfg['name'] = base_name+f"step_{i}_pre_val"pruning_cfg['batch'] = 128validation_model.model = deepcopy(model.model)metric = validation_model.val(**pruning_cfg)pruned_map = metric.box.mappruned_macs, pruned_nparams = tp.utils.count_ops_and_params(pruner.model, example_inputs.to(model.device))current_speed_up = float(macs_list[0]) / pruned_macsprint(f"After pruning iter {i + 1}: MACs={pruned_macs / 1e9} G, #Params={pruned_nparams / 1e6} M, "f"mAP={pruned_map}, speed up={current_speed_up}")# fine-tuningfor name, param in model.model.named_parameters():param.requires_grad = Truepruning_cfg['name'] = base_name+f"step_{i}_finetune"pruning_cfg['batch'] = batch_size # restore batch sizemodel.train_v2(pruning=True, **pruning_cfg)# post fine-tuning validationpruning_cfg['name'] = base_name+f"step_{i}_post_val"pruning_cfg['batch'] = 128validation_model = YOLO(model.trainer.best)metric = validation_model.val(**pruning_cfg)current_map = metric.box.mapprint(f"After fine tuning mAP={current_map}")macs_list.append(pruned_macs)nparams_list.append(pruned_nparams / base_nparams * 100)pruned_map_list.append(pruned_map)map_list.append(current_map)# remove pruner after single iterationdel prunermodel.model.zero_grad() # Remove gradientssave_path = 'runs/detect/'+base_name+f"step_{i}_pruned_model.pth"torch.save(model.model,save_path) # without .state_dictprint('pruned model saved in',save_path)# model = torch.load('model.pth') # load the pruned modelsave_pruning_performance_graph(nparams_list, map_list, macs_list, pruned_map_list)# if init_map - current_map > args.max_map_drop:# print("Pruning early stop")# break# model.export(format='onnx')if __name__ == "__main__":parser = argparse.ArgumentParser()parser.add_argument('--model', default='runs/detect/train/weights/last.pt', help='Pretrained pruning target model file')parser.add_argument('--cfg', default='default.yaml',help='Pruning config file.'' This file should have same format with ultralytics/yolo/cfg/default.yaml')parser.add_argument('--iterative-steps', default=4, type=int, help='Total pruning iteration step')parser.add_argument('--target-prune-rate', default=0.2, type=float, help='Target pruning rate')parser.add_argument('--max-map-drop', default=1, type=float, help='Allowed maximum map drop after fine-tuning')args = parser.parse_args()prune(args)

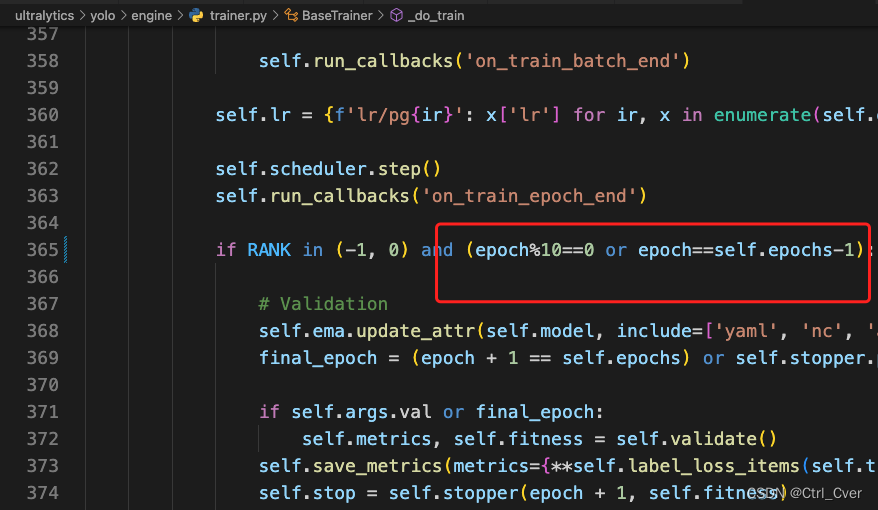

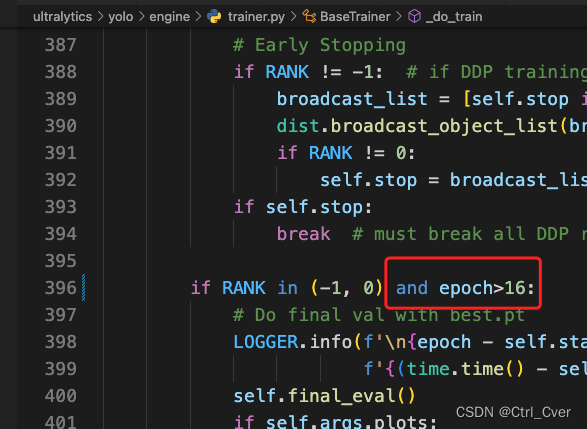

- 在代码的这些位置加上一些限制,不然它会经常的验证模型:

实验结果: 实验中,待续~

相关文章:

yolov8剪枝实践

本文使用的剪枝库是torch-pruning ,实验了该库的三个剪枝算法GroupNormPruner、BNScalePruner和GrowingRegPruner。 安装使用 安装依赖库 pip install torch-pruning 把 https://github.com/VainF/Torch-Pruning/blob/master/examples/yolov8/yolov8_pruning.py&…...

功能基础篇6——系统接口,操作系统与解释器系统

系统 os Python标准库,os模块提供Python与多种操作系统交互的接口 import os import stat# 文件夹 print(os.mkdir(r./dir)) # None 新建单级空文件夹 print(os.rmdir(r./dir)) # None 删除单级空文件夹 print(os.makedirs(r.\dir\dir\dir)) # None 递归创建空…...

由于导线材质不同绕组直流电阻不平衡率超标

实测证明, 有的变压器绕组的直流电阻偏大, 有的偏差较大, 其主要原因是某些导线的铜和银的含量低于国家标准规定限额。 有时即使采用合格的导线, 但由于导线截面尺寸偏差不同, 也可以导致绕组直流电阻不平衡率超标。 …...

选择智慧公厕解决方案,开创智慧城市公共厕所新时代

在城市建设和发展中,公厕作为一个不可或缺的城市基础设施,直接关系到城市形象的提升和居民生活品质的改善。然而,传统的公厕存在着管理不便、卫生状况差、设施陈旧等问题。为了解决这些困扰着城市发展的难题,智慧公厕源头厂家广州…...

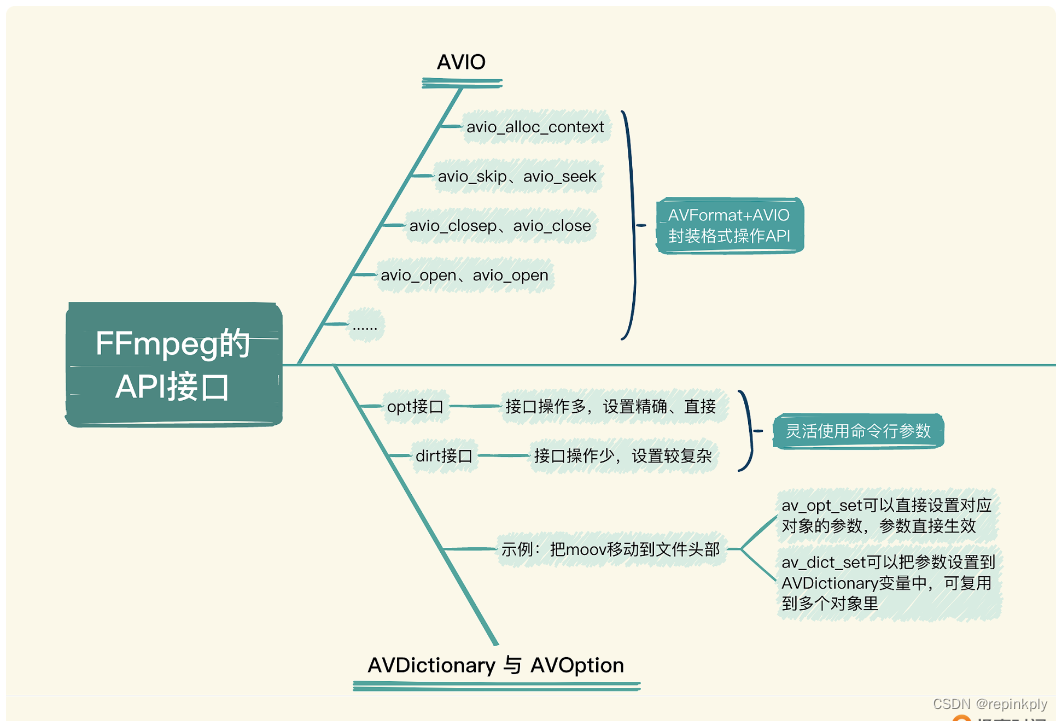

FFmpeg 基础模块:AVIO、AVDictionary 与 AVOption

目录 AVIO AVDictionary 与 AVOption 小结 思考 我们了解了 AVFormat 中的 API 接口的功能,从实际操作经验看,这些接口是可以满足大多数音视频的 mux 与 demux,或者说 remux 场景的。但是除此之外,在日常使用 API 开发应用的时…...

代数——第3章——向量空间

第三章 向量空间(Vector Spaces) fmmer mit den einfachsten Beispielen anfangen. (始终从最简单的例子开始。) ------------------------------David Hilbert 3.1 (R^n)的子空间 我们的向量空间的基础模型(本章主题)是n 维实向量空间 的子空间。我们将在本节讨论它。…...

2023年软考网工上半年下午真题

试题一: 阅读以下说明,回答问题1至问题4,将解答填入答题纸对应的解答栏内。 [说明] 某企业办公楼网络拓扑如图1-1所示。该网络中交换机Switch1-Switch 4均是二层设备,分布在办公楼的各层,上联采用干兆光纤。核心交换…...

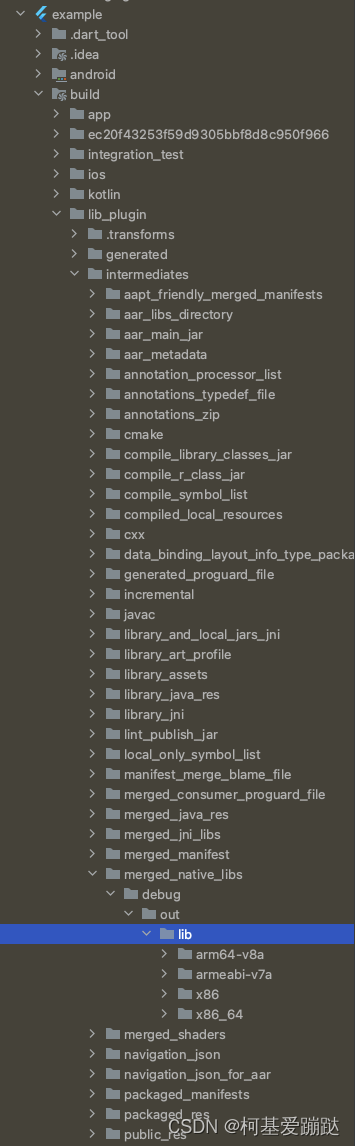

Flutter 直接调用so动态库,或调用C/C++源文件内函数

开发环境 MacBook Pro Apple M2 Pro | macOS Sonoma 14.0 Android Studio Giraffe | 2022.3.1 Patch 1 XCode Version 15.0 Flutter 3.13.2 • channel stable Tools • Dart 3.1.0 • DevTools 2.25.0 先说下历程,因为我已经使用了Flutter3的版本,起初…...

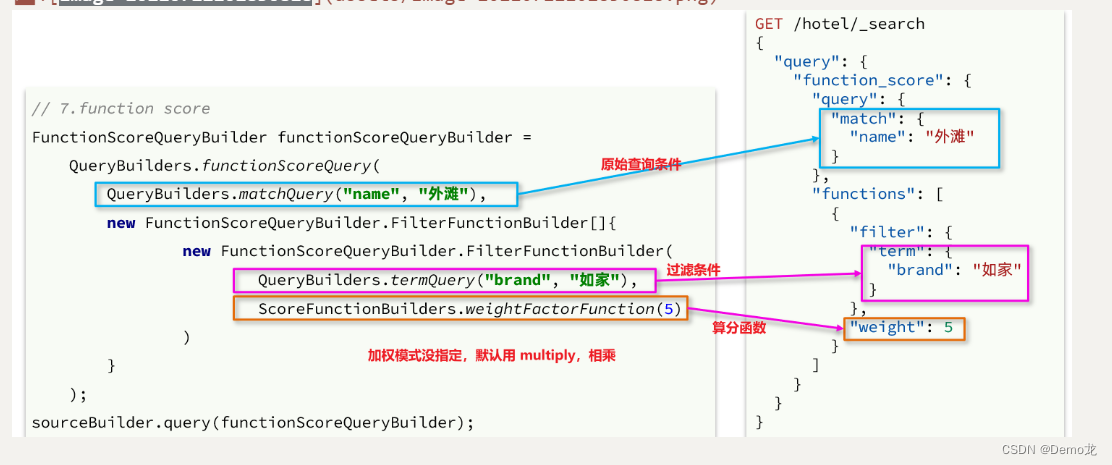

elasticsearch(ES)分布式搜索引擎03——(RestClient查询文档,ES旅游案例实战)

目录 3.RestClient查询文档3.1.快速入门3.1.1.发起查询请求3.1.2.解析响应3.1.3.完整代码3.1.4.小结 3.2.match查询3.3.精确查询3.4.布尔查询3.5.排序、分页3.6.高亮3.6.1.高亮请求构建3.6.2.高亮结果解析 4.旅游案例4.1.酒店搜索和分页4.1.1.需求分析4.1.2.定义实体类4.1.3.定…...

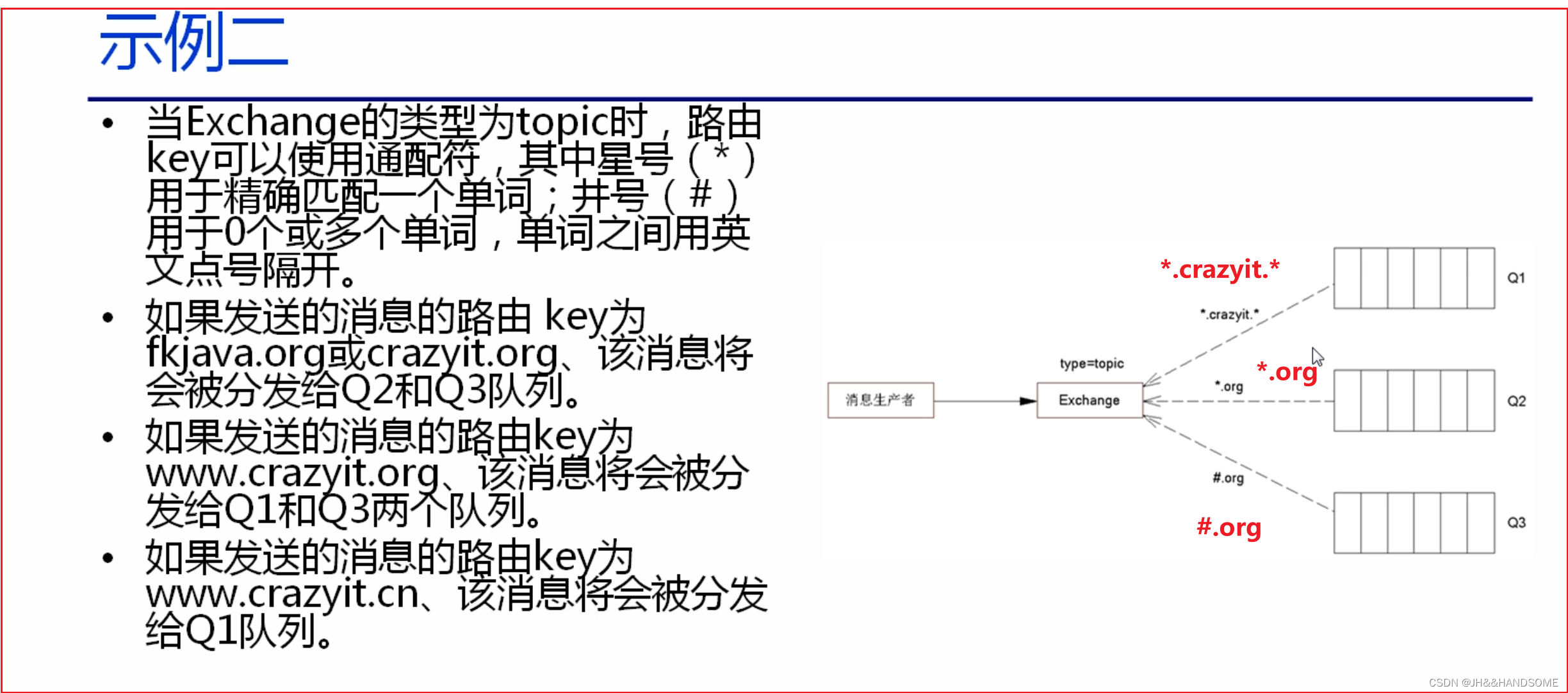

198、RabbitMQ 的核心概念 及 工作机制概述; Exchange 类型 及 该类型对应的路由规则

JMS 也是一种消息机制 AMQP ( Advanced Message Queuing Protocol ) 高级消息队列协议 ★ RabbitMQ的核心概念 Connection: 代表客户端(包括消息生产者和消费者)与RabbitMQ之间的连接。 Channel: 连接内部的Channel。 Exch…...

系统架构设计:18 论基于DSSA的软件架构设计与应用

目录 一 特定领域软件架构DSSA 1 DSSA 2 DSSA的基本活动和产物 (1)DSSA的基本活动和产物...

Android原生实现控件outline方案(API28及以上)

Android控件的Outline效果的实现方式有很多种,这里介绍一下另一种使用Canvas.drawPath()方法来绘制控件轮廓Path路径的实现方案(API28及以上)。 实现效果: 属性 添加Outline相关属性,主要包括颜色和Stroke宽度&…...

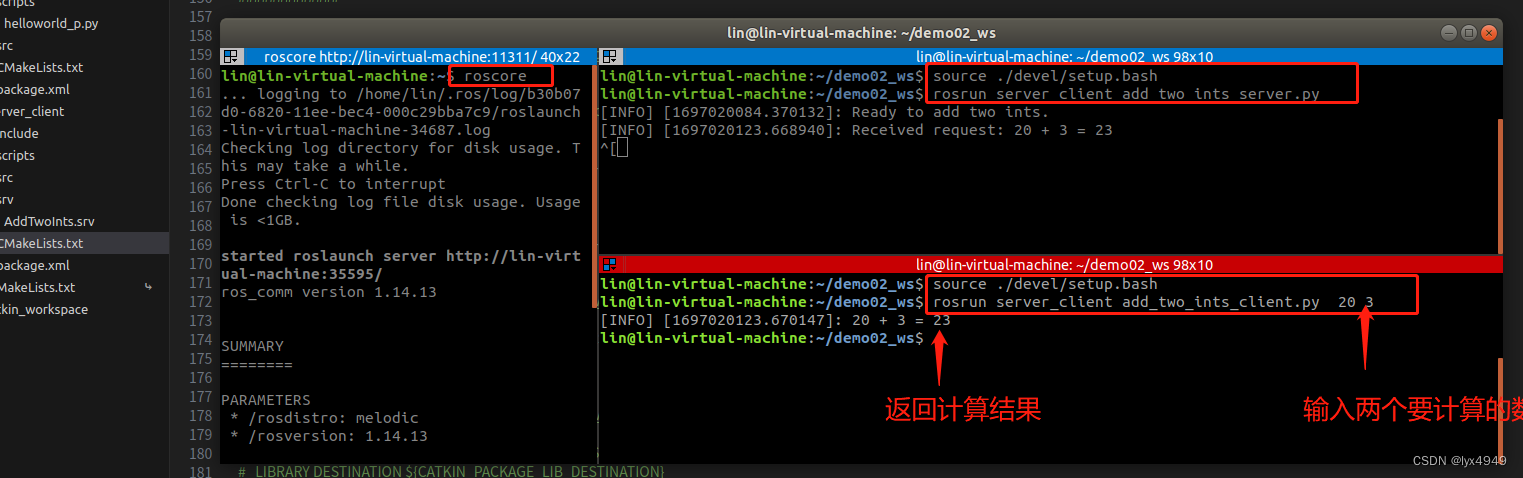

ROS学习笔记(六)---服务通信机制

1. 服务通信是什么 在ROS中,服务通信机制是一种点对点的通信方式,用于节点之间的请求和响应。它允许一个节点(服务请求方)向另一个节点(服务提供方)发送请求,并等待响应。 服务通信机制在ROS中…...

常见的C/C++开源QP问题求解器

1. qpSWIFT qpSWIFT 是面向嵌入式和机器人应用的轻量级稀疏二次规划求解器。它采用带有 Mehrotra Predictor 校正步骤和 Nesterov Todd 缩放的 Primal-Dual Interioir Point 方法。 开发语言:C文档:传送门项目:传送门 2. OSQP OSQP&#…...

前端axios发送请求,在请求头添加参数

1.在封装接口传参时,定义形参,params是正常传参,name则是我想要在请求头传参 export function getCurlList (params, name) {return request({url: ********,method: get,params,name}) } 2.接口调用 const res await getCurlList(params,…...

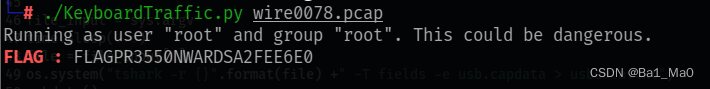

CTF Misc(3)流量分析基础以及原理

前言 流量分析在ctf比赛中也是常见的题目,参赛者通常会收到一个网络数据包的数据集,这些数据包记录了网络通信的内容和细节。参赛者的任务是通过分析这些数据包,识别出有用的信息,例如登录凭据、加密算法、漏洞利用等等 工具安装…...

Telink泰凌微TLSR8258蓝牙开发笔记(二)

在开发过程中遇到了以下问题,记录一下 1.在与ios手机连接后,手机app使能notify,设备与手机通过write和notify进行数据交换,但是在连接传输数据一端时间后,设备收到write命令后不能发出notify命令,打印错误…...

vue3+elementPlus:el-tree复制粘贴数据功能,并且有弹窗组件

在tree控件里添加contextmenu属性表示右键点击事件。 因右键自定义菜单事件需要获取当前点击的位置,所以此处绑定动态样式来控制菜单实时跟踪鼠标右键点击位置。 //html <div class"box-list"><el-tree ref"treeRef" node-key"id…...

JTS:10 Crosses

这里写目录标题 版本点与线点与面线与面线与线 版本 org.locationtech.jts:jts-core:1.19.0 链接: github public class GeometryCrosses {private final GeometryFactory geometryFactory new GeometryFactory();private static final Logger LOGGER LoggerFactory.getLog…...

MySQL中的SHOW FULL PROCESSLIST命令

在MySQL数据库管理中,理解和监控当前正在执行的进程是至关重要的一环。MySQL提供了一系列强大的工具和命令,使得这项任务变得相对容易。其中,SHOW FULL PROCESSLIST命令就是一个非常有用的工具,它可以帮助我们查看MySQL服务器中的…...

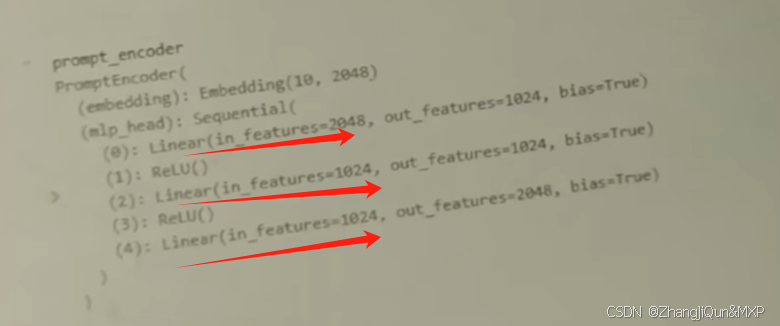

Prompt Tuning、P-Tuning、Prefix Tuning的区别

一、Prompt Tuning、P-Tuning、Prefix Tuning的区别 1. Prompt Tuning(提示调优) 核心思想:固定预训练模型参数,仅学习额外的连续提示向量(通常是嵌入层的一部分)。实现方式:在输入文本前添加可训练的连续向量(软提示),模型只更新这些提示参数。优势:参数量少(仅提…...

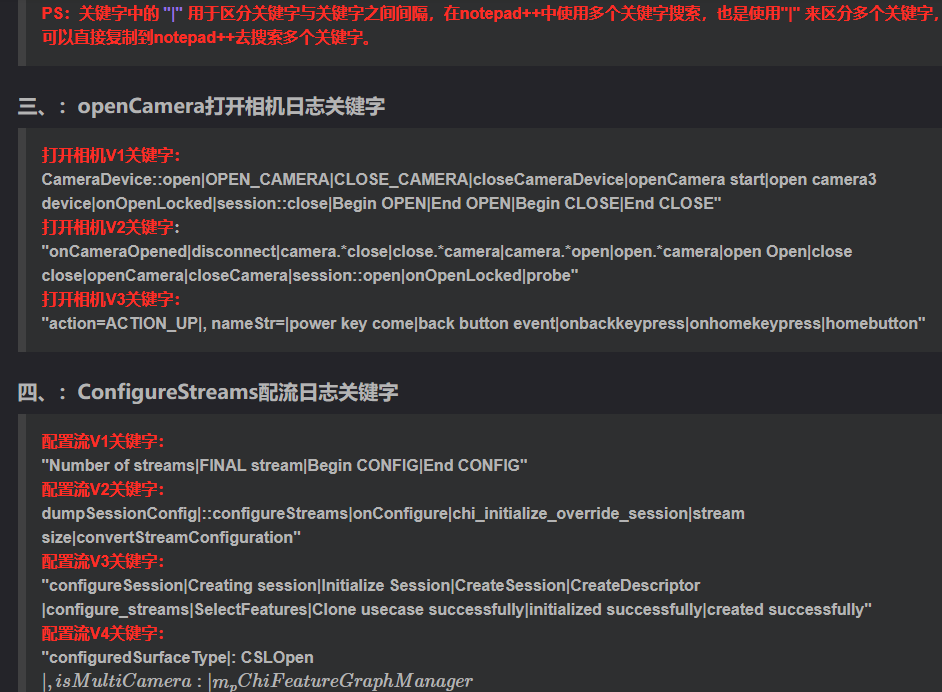

相机Camera日志实例分析之二:相机Camx【专业模式开启直方图拍照】单帧流程日志详解

【关注我,后续持续新增专题博文,谢谢!!!】 上一篇我们讲了: 这一篇我们开始讲: 目录 一、场景操作步骤 二、日志基础关键字分级如下 三、场景日志如下: 一、场景操作步骤 操作步…...

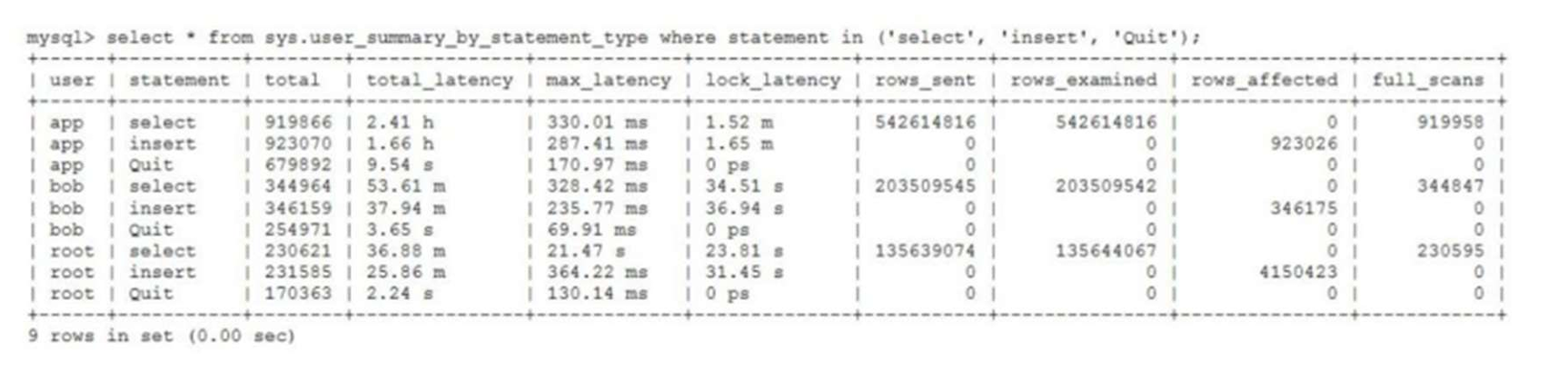

MySQL 8.0 OCP 英文题库解析(十三)

Oracle 为庆祝 MySQL 30 周年,截止到 2025.07.31 之前。所有人均可以免费考取原价245美元的MySQL OCP 认证。 从今天开始,将英文题库免费公布出来,并进行解析,帮助大家在一个月之内轻松通过OCP认证。 本期公布试题111~120 试题1…...

rnn判断string中第一次出现a的下标

# coding:utf8 import torch import torch.nn as nn import numpy as np import random import json""" 基于pytorch的网络编写 实现一个RNN网络完成多分类任务 判断字符 a 第一次出现在字符串中的位置 """class TorchModel(nn.Module):def __in…...

Razor编程中@Html的方法使用大全

文章目录 1. 基础HTML辅助方法1.1 Html.ActionLink()1.2 Html.RouteLink()1.3 Html.Display() / Html.DisplayFor()1.4 Html.Editor() / Html.EditorFor()1.5 Html.Label() / Html.LabelFor()1.6 Html.TextBox() / Html.TextBoxFor() 2. 表单相关辅助方法2.1 Html.BeginForm() …...

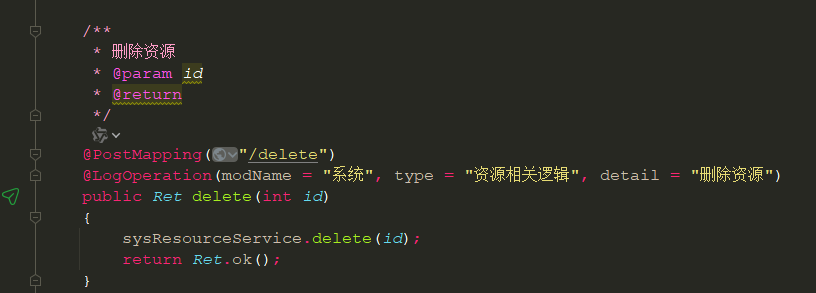

springboot 日志类切面,接口成功记录日志,失败不记录

springboot 日志类切面,接口成功记录日志,失败不记录 自定义一个注解方法 import java.lang.annotation.ElementType; import java.lang.annotation.Retention; import java.lang.annotation.RetentionPolicy; import java.lang.annotation.Target;/***…...

c# 局部函数 定义、功能与示例

C# 局部函数:定义、功能与示例 1. 定义与功能 局部函数(Local Function)是嵌套在另一个方法内部的私有方法,仅在包含它的方法内可见。 • 作用:封装仅用于当前方法的逻辑,避免污染类作用域,提升…...

ubuntu22.04有线网络无法连接,图标也没了

今天突然无法有线网络无法连接任何设备,并且图标都没了 错误案例 往上一顿搜索,试了很多博客都不行,比如 Ubuntu22.04右上角网络图标消失 最后解决的办法 下载网卡驱动,重新安装 操作步骤 查看自己网卡的型号 lspci | gre…...

k8s从入门到放弃之HPA控制器

k8s从入门到放弃之HPA控制器 Kubernetes中的Horizontal Pod Autoscaler (HPA)控制器是一种用于自动扩展部署、副本集或复制控制器中Pod数量的机制。它可以根据观察到的CPU利用率(或其他自定义指标)来调整这些对象的规模,从而帮助应用程序在负…...

python打卡第47天

昨天代码中注意力热图的部分顺移至今天 知识点回顾: 热力图 作业:对比不同卷积层热图可视化的结果 def visualize_attention_map(model, test_loader, device, class_names, num_samples3):"""可视化模型的注意力热力图,展示模…...