Building a Web Server Platform on Kubernetes

文章目录

- k8s综合项目

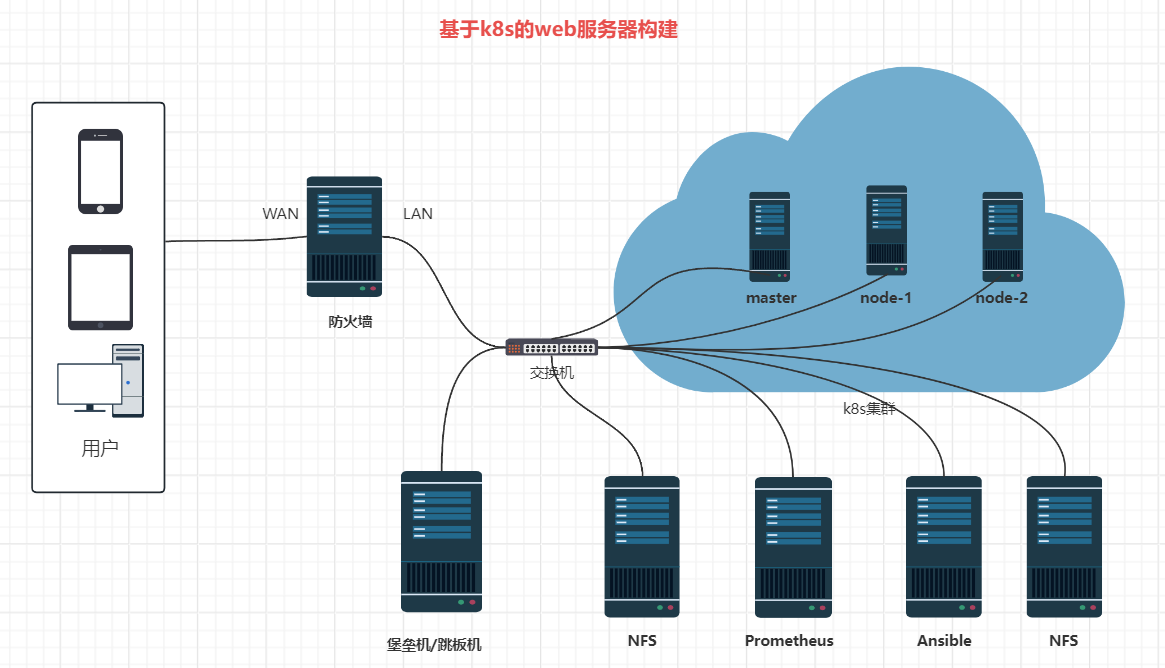

- 1、项目规划图

- 2、项目描述

- 3、项目环境

- 4、前期准备

- 4.1、环境准备

- 4.2、ip划分

- 4.3、静态配置ip地址

- 4.4、修改主机名

- 4.5、部署k8s集群

- 4.5.1、关闭防火墙和selinux

- 4.5.2、升级系统

- 4.5.3、每台主机都配置hosts文件,相互之间通过主机名互相访问

- 4.5.4、配置master和node之间的免密通道

- 4.5.5、关闭交换分区swap,提升性能(三台一起操作)

- 4.5.6、为什么要关闭swap交换分区?

- 4.5.7、修改机器内核参数(三台一起操作)

- 4.5.8、配置阿里云的repo源(三台一起)

- 4.5.9、配置时间同步(三台一起)

- 4.5.10、安装docker服务(三台一起)

- 4.5.11、安装docker的最新版本

- 4.5.12、配置镜像加速器

- 4.5.13、继续配置Kubernetes

- 4.5.14、安装初始化k8s需要的软件包(三台一起)

- 4.5.15、kubeadm初始化k8s集群

- 4.5.16、基于kubeadm.yaml文件初始化k8s

- 4.5.17、改一下node的角色为worker

- 4.5.18、安装网络插件

- 4.5.19、安装kubectl top node

- 4.5.20、让node节点也可以访问 kubectl get node

- 5、先搭建k8s里边的内容

- 5.1、搭建nfs服务器,给web 服务提供网站数据,创建好相关的pv、pvc

- 5.1.1、设置共享目录

- 5.1.2、创建共享目录

- 5.1.3、刷新nfs或者重新输出共享目录

- 5.1.4、创建一个pv使用nfs服务器共享的目录

- 5.1.5、应用一下

- 5.1.6、创建pvc使用存储类:example-nfs

- 5.1.7、创建pod启动pvc

- 5.1.8、测试

- 5.2、将自己go语言的代码镜像从harbor仓库中拉取出来

- 5.2.1、先把go语言的代码制作成镜像

- 5.2.2、然后上传到harbor仓库

- node节点拉取ghweb镜像

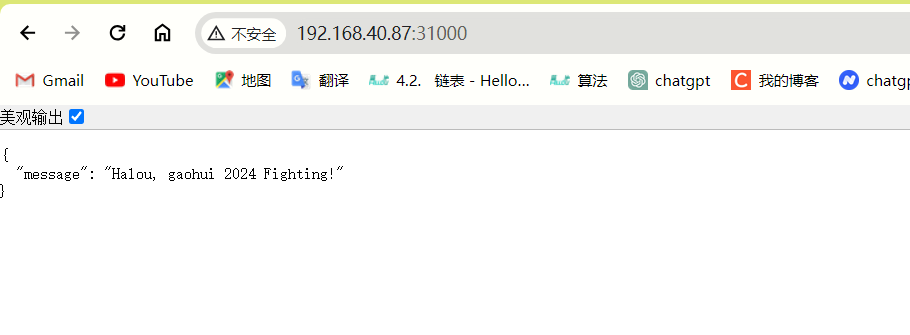

- 5.3、启动HPA功能部署自己的web pod,当cpu使用率达到50%的时候,进行水平扩缩,最小10个业务pod,最多20个业务pod。

- 5.5、使用探针(liveness、readiness、startup)的(httpget、exec)方法对web业务pod进行监控,一旦出现问题马上重启,增强业务pod的可靠性。

- 5.6、搭建ingress controller 和ingress规则,给web服务做基于域名的负载均衡

- 5.7、部署和访问 Kubernetes 仪表板(Dashboard)

- 5.8、使用ab工具对整个k8s集群里的web服务进行压力测试

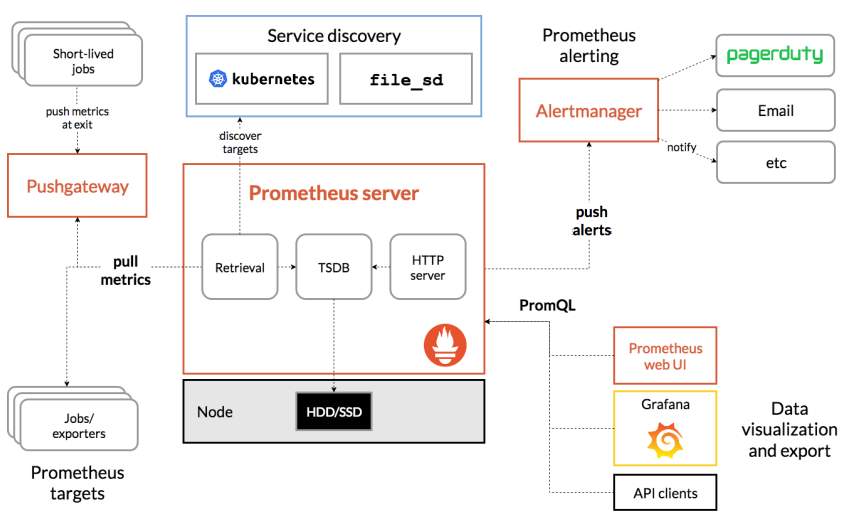

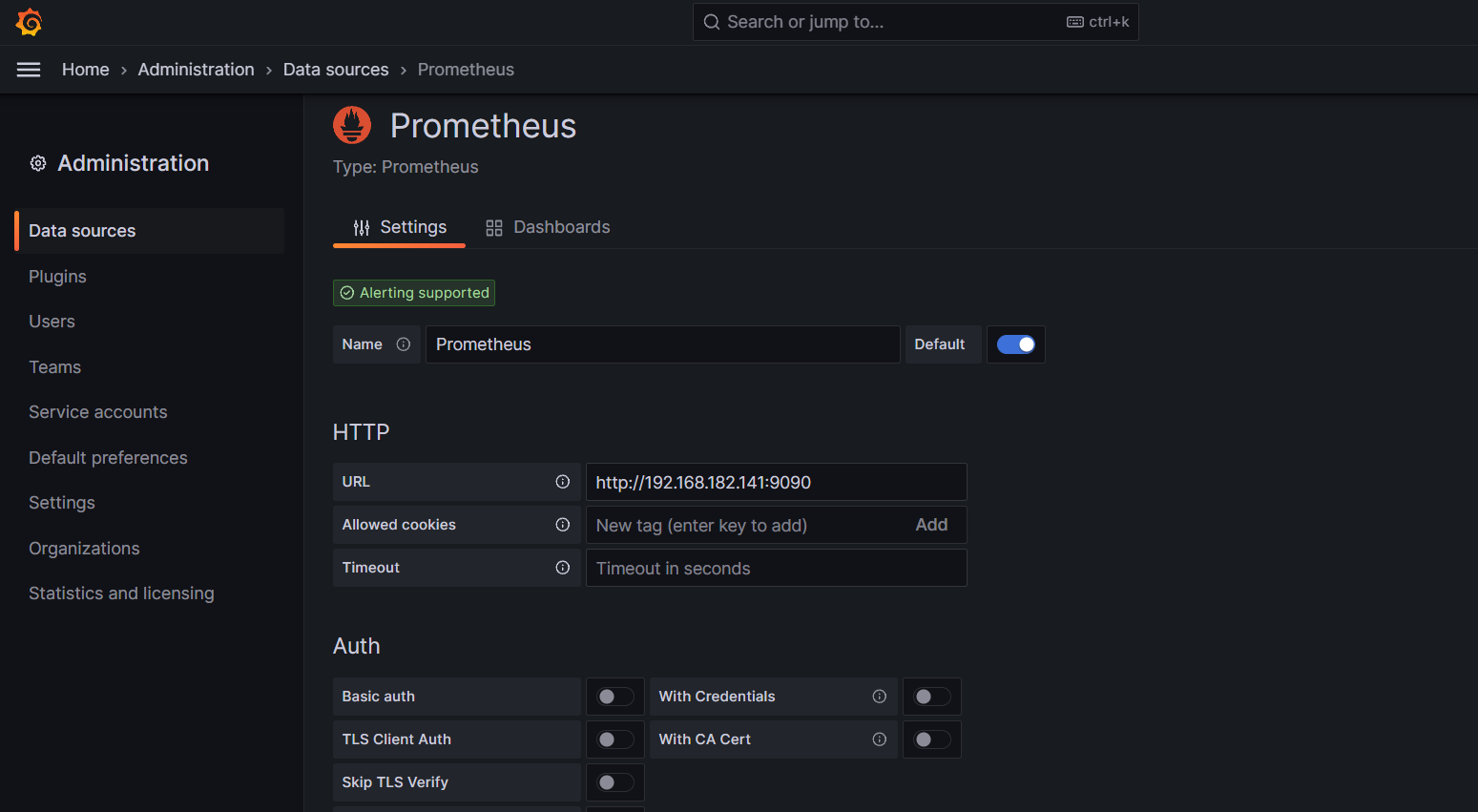

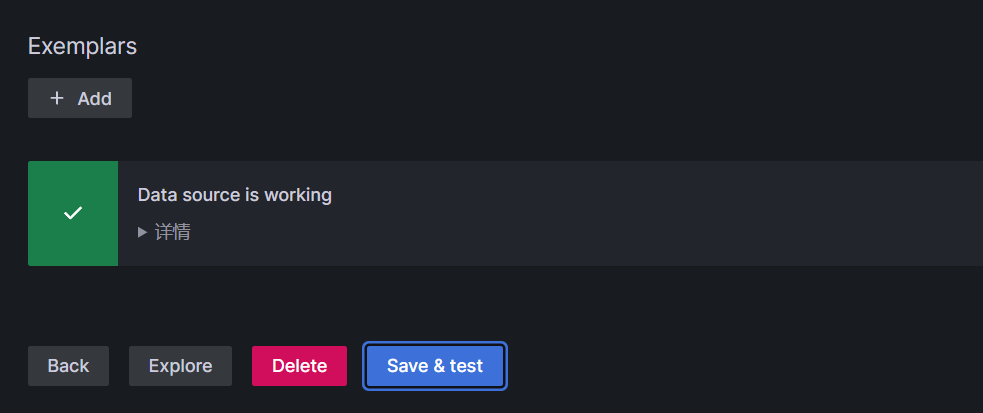

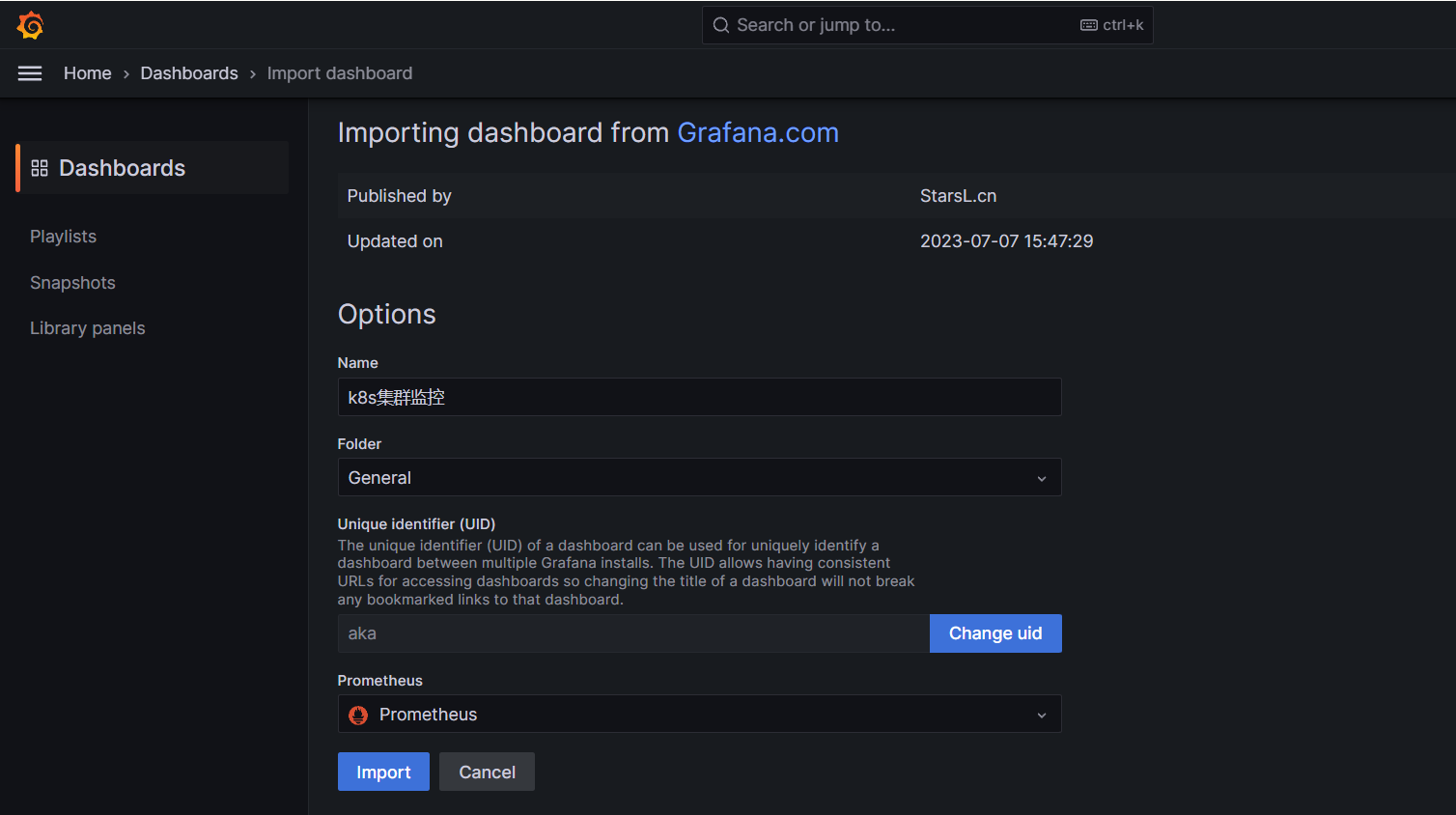

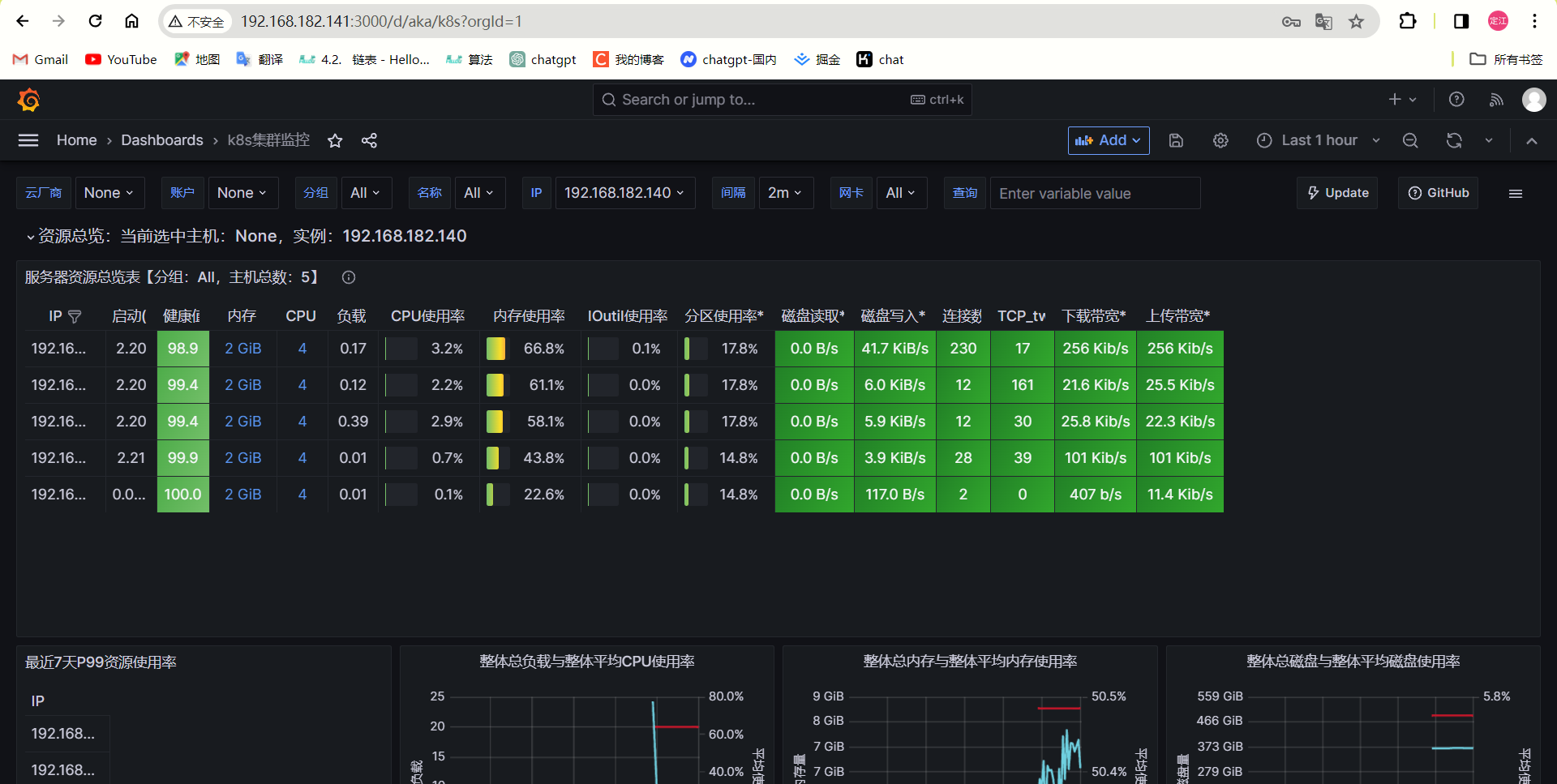

- 6、搭建Prometheus服务器

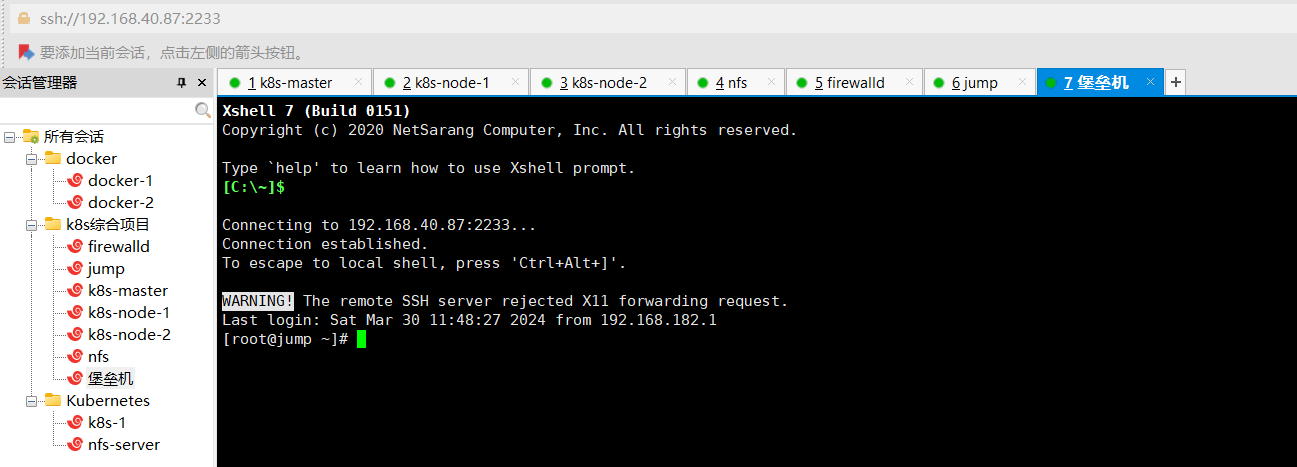

- 6.1、为了方便多台机器操作,先部署ansible在堡垒机上

- 6.2、搭建prometheus 服务器和grafana出图软件,监控所有的服务器

- 6.2.1、安装exporter

- 6.2.2、在Prometheus服务器上添加被监控的服务器

- 6.2.3、安装grafana出图展示

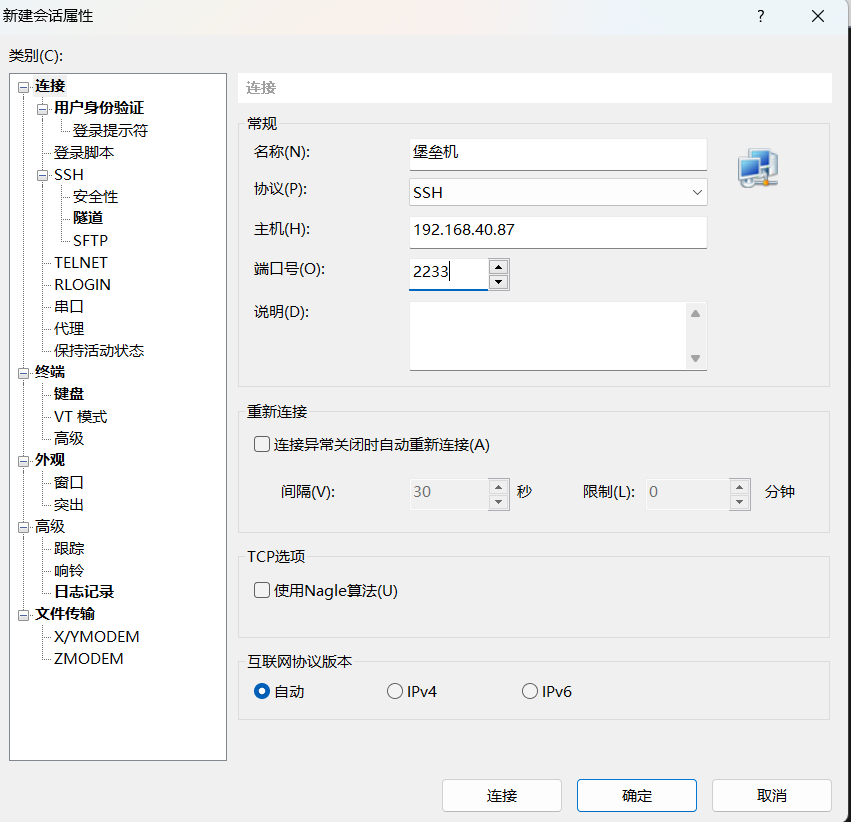

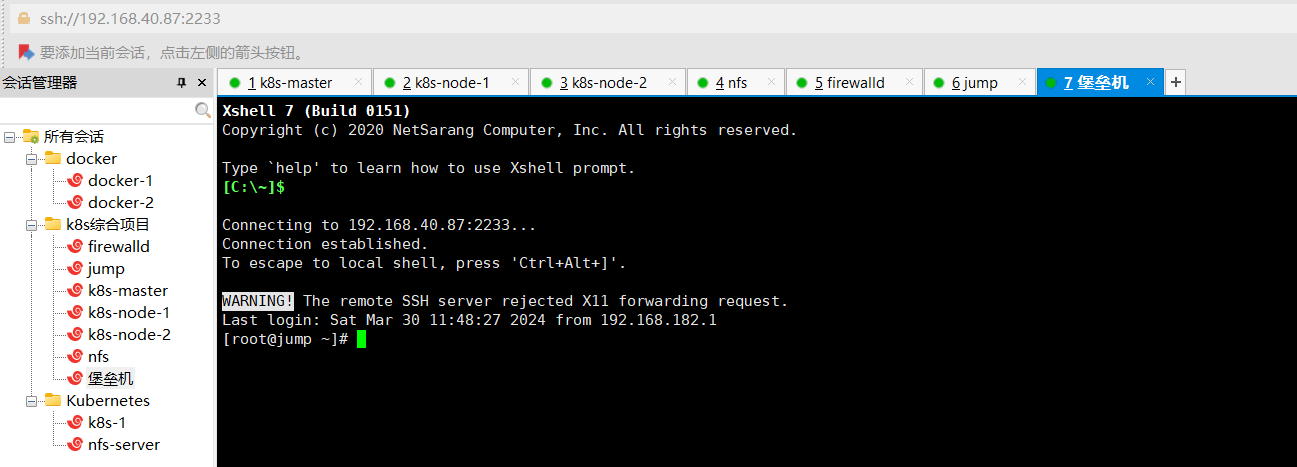

- 7、进行跳板机和防火墙的配置

- 7.1、将k8s集群里的机器还有nfs服务器,进行tcp wrappers的配置,只允许堡垒机ssh进来,拒绝其他的机器ssh过去。

- 7.2、搭建防火墙服务器

- 7.3、编写dnat和snat策略

- 7.4、将整个k8s集群里的服务器的网关设置为防火墙服务器的LAN口的ip地址(192.168.182.177)

- 7.5、测试SNAT功能

- 7.6、测试dnat功能

- 7.7、测试堡垒机发布

- 8、项目心得

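Kubernetes Comprehensive Project

1. Project architecture diagram

2. Project description

Project description and goals: simulate an enterprise k8s production environment by deploying a web application, NFS, Harbor, Prometheus, Grafana and other components, building a highly available, high-performance web system whose k8s cluster can be monitored as a whole.

3. Project environment

CentOS 7.9, Ansible 2.9.27, Docker 2.6.0.0, Docker Compose 2.18.1, Kubernetes 1.20.6, Harbor 2.1.0, NFS v4, metrics-server 0.6.0, ingress-nginx-controller v1.1.0, kube-webhook-certgen v1.1.0, Dashboard v2.5.0, Prometheus 2.44.0, Grafana 9.5.1.

4. Preparation

4.1. Environment preparation

Nine fresh Linux servers: disable firewalld and SELinux, configure static IP addresses, set the hostnames, and add hosts entries.

However, my computer has only 16 GB of memory and cannot run nine VMs, so Prometheus, Ansible and the bastion host share one server, and the NFS server and the Harbor registry share another.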

4.2. IP address plan

| Host / role | IP |
|---|---|
| Firewall | 192.168.40.87 |
| Bastion/jump host + Prometheus + Ansible | 192.168.182.141 |
| NFS server, Harbor registry | 192.168.182.140 |
| master | 192.168.182.142 |
| node-1 | 192.168.182.143 |
| node-2 | 192.168.182.144 |

4.3. Configure static IP addresses

Using master as the example:

[root@master ~]# cd /etc/sysconfig/network-scripts/

[root@master network-scripts]# ls

ifcfg-ens33 ifdown-eth ifdown-post ifdown-Team ifup-aliases ifup-ipv6 ifu

ifcfg-lo ifdown-ippp ifdown-ppp ifdown-TeamPort ifup-bnep ifup-isdn ifu

ifdown ifdown-ipv6 ifdown-routes ifdown-tunnel ifup-eth ifup-plip ifu

ifdown-bnep ifdown-isdn ifdown-sit ifup ifup-ippp ifup-plusb ifu

[root@master network-scripts]# vim ifcfg-ens33

[root@master network-scripts]# cat ifcfg-ens33

BOOTPROTO="none"

DEFROUTE="yes"

NAME="ens33"

UUID="9c5e3120-2fcf-4124-b924-f2976d52512f"

DEVICE="ens33"

ONBOOT="yes"

IPADDR=192.168.182.142

PREFIX=24

GATEWAY=192.168.182.2

DNS1=114.114.114.114

[root@master network-scripts]#

[root@master network-scripts]# service network restart

Restarting network (via systemctl): [ OK ]

[root@master network-scripts]# ping www.baidu.com

PING www.a.shifen.com (183.2.172.42) 56(84) bytes of data.

64 bytes from 183.2.172.42 (183.2.172.42): icmp_seq=1 ttl=128 time=18.1 ms

64 bytes from 183.2.172.42 (183.2.172.42): icmp_seq=2 ttl=128 time=17.7 ms

^C

--- www.a.shifen.com ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1000ms

rtt min/avg/max/mdev = 17.724/17.956/18.188/0.232 ms

4.4. Set the hostnames

Run the matching command on each machine:

hostnamectl set-hostname master && bash

hostnamectl set-hostname node-1 && bash

hostnamectl set-hostname node-2 && bash

hostnamectl set-hostname nfs && bash

hostnamectl set-hostname firewalld && bash

hostnamectl set-hostname jump && bash

4.5. Deploy the k8s cluster

4.5.1. Disable the firewall and SELinux

[root@localhost ~]# service firewalld stop

Redirecting to /bin/systemctl stop firewalld.service

[root@localhost ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@localhost ~]# setenforce 0

[root@localhost ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

[root@localhost ~]#

4.5.2. Update the system

yum update -y

4.5.3. Configure the hosts file on every host so they can reach each other by hostname

Add these three lines:

192.168.182.142 master
192.168.182.143 node-1
192.168.182.144 node-2

[root@master ~]# vim /etc/hosts

[root@master ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.182.142 master

192.168.182.143 node-1

192.168.182.144 node-2

[root@master ~]#
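Because the same three entries have to be added on every host, the edit can also be scripted instead of done in vim. The following is a minimal sketch, not the method used in this project: `add_host_entry` is a hypothetical helper name, and it assumes that a hostname already present in the file should be left untouched.

```shell
# Sketch: append "IP NAME" to a hosts file only if NAME is not already listed.
# add_host_entry is a hypothetical helper; the third argument defaults to /etc/hosts.
add_host_entry() {
    ip="$1"
    name="$2"
    file="${3:-/etc/hosts}"
    # -w matches NAME as a whole word, -F treats it as a fixed string
    if ! grep -qwF -- "$name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Usage (as root, on each machine):
#   add_host_entry 192.168.182.142 master
#   add_host_entry 192.168.182.143 node-1
#   add_host_entry 192.168.182.144 node-2
```

Running it twice is harmless, which makes it convenient to push to all machines in a loop over SSH.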

4.5.4. Set up passwordless SSH between master and the nodes

ssh-keygen

cd /root/.ssh/

ssh-copy-id -i id_rsa.pub root@node-1

ssh-copy-id -i id_rsa.pub root@node-2

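4.5.5. Disable swap to improve performance (all three machines)

[root@master .ssh]# swapoff -a

To disable swap permanently, comment out the swap mount by putting a # at the start of the swap line in /etc/fstab:

[root@master .ssh]# vim /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0

4.5.6. Why disable swap?

Swap is disk space that the system falls back on when memory runs short, and it is much slower than RAM. To keep performance predictable, k8s by default does not allow swap. kubeadm checks during initialization whether swap is off, and initialization fails if it is not. If you do not want to disable swap, you can pass --ignore-preflight-errors=Swap when installing k8s.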
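The permanent step (commenting out the swap line in /etc/fstab) can be done non-interactively as well. This is a sketch under the assumption that GNU sed is available, as it is on CentOS 7; `comment_out_swap` is a hypothetical helper name, and the file path is a parameter so it can be tried on a copy first.

```shell
# Sketch: prefix "#" to every uncommented fstab line that has a "swap" field.
# comment_out_swap is a hypothetical helper; it defaults to /etc/fstab.
comment_out_swap() {
    file="${1:-/etc/fstab}"
    # Lines already starting with "#" are skipped, so re-running is safe.
    sed -E -i 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$file"
}

# Usage (as root):
#   swapoff -a          # turn swap off for the running system
#   comment_out_swap    # keep it off after a reboot
```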

4.5.7. Adjust kernel parameters (all three machines)

[root@master .ssh]# modprobe br_netfilter

[root@master .ssh]# echo "modprobe br_netfilter" >> /etc/profile

[root@master .ssh]# cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

[root@master .ssh]# sysctl -p /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

[root@master .ssh]#

4.5.8. Configure the Aliyun repo (all three machines)

yum install -y yum-utils

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet

Configure the Aliyun repo needed to install the k8s components:

vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

4.5.9. Configure time synchronization (all three machines)

[root@master ~]# yum install ntpdate -y

[root@master ~]# ntpdate cn.pool.ntp.org

 3 Mar 10:15:12 ntpdate[73056]: adjust time server 84.16.67.12 offset 0.007718 sec

[root@master ~]#

Add it as a scheduled job:

[root@master ~]# crontab -e

no crontab for root - using an empty one

crontab: installing new crontab

[root@master ~]# crontab -l

0 */1 * * * /usr/sbin/ntpdate cn.pool.ntp.org

[root@master ~]#

[root@master ~]# service crond restart

Redirecting to /bin/systemctl restart crond.service

[root@master ~]#

4.5.10. Install Docker (all three machines)

4.5.11. Install the latest version of Docker

[root@master ~]# sudo yum install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin -y

[root@master ~]# systemctl start docker && systemctl enable docker.service

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.


[root@master ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

[root@master ~]#

4.5.12. Configure registry mirrors

[root@master ~]# vim /etc/docker/daemon.json

{
  "registry-mirrors": [
    "https://rsbud4vc.mirror.aliyuncs.com",
    "https://registry.docker-cn.com",
    "https://docker.mirrors.ustc.edu.cn",
    "https://dockerhub.azk8s.cn",
    "http://hub-mirror.c.163.com"
  ],
  "exec-opts": ["native.cgroupdriver=systemd"]
}

[root@master ~]#

[root@master ~]# systemctl daemon-reload


[root@master ~]# systemctl restart docker

[root@master ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

[root@master ~]#

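4.5.13. Continue configuring Kubernetes

4.5.14. Install the packages needed to initialize k8s (all three machines)

Starting with version 1.24, Kubernetes removed dockershim, so Docker is no longer supported directly as the underlying container runtime and containerd is typically used instead. Version 1.20.6, installed here, still works with Docker.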

[root@master ~]# yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6

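kubeadm: the tool used to initialize the k8s cluster.

kubelet: runs on every node in the cluster and starts the pods.

kubectl: the command-line client used to deploy and manage applications, inspect resources, and create, delete and update components.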

[root@master ~]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.


[root@master ~]#

4.5.15. Initialize the k8s cluster with kubeadm

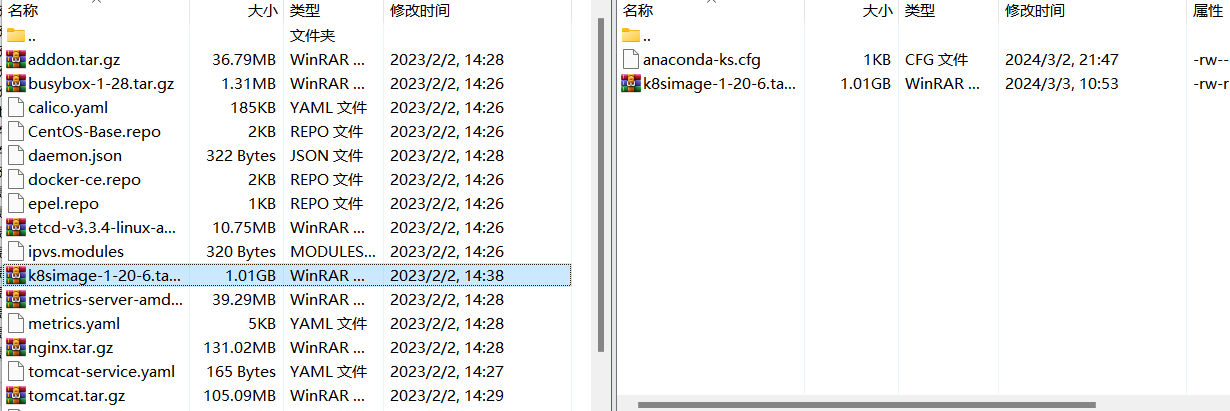

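Upload the offline image bundle needed to initialize the k8s cluster to master, node-1 and node-2, and unpack it manually.

Use Xftp to upload it to the root user's home directory on master.

Then copy it to node-1 and node-2 with scp (passwordless SSH was set up earlier).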

[root@master ~]# scp k8simage-1-20-6.tar.gz root@node-1:/root

k8simage-1-20-6.tar.gz 100% 1033MB 129.0MB/s 00:08

[root@master ~]# scp k8simage-1-20-6.tar.gz root@node-2:/root

k8simage-1-20-6.tar.gz 100% 1033MB 141.8MB/s 00:07

[root@master ~]#

Load the images (all three machines):

[root@master ~]# docker load -i k8simage-1-20-6.tar.gz

Generate a YAML config file (on master):

[root@master ~]# kubeadm config print init-defaults > kubeadm.yaml


[root@master ~]# ls

anaconda-ks.cfg k8simage-1-20-6.tar.gz kubeadm.yaml

[root@master ~]# cat kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 1.2.3.4
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: master
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: k8s.gcr.io
kind: ClusterConfiguration
kubernetesVersion: v1.20.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
scheduler: {}

[root@master ~]#

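After editing (advertiseAddress, imageRepository, podSubnet, plus the kube-proxy and kubelet sections at the end):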

[root@master ~]# cat kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.182.142
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: master
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.20.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd

[root@master ~]#

4.5.16. Initialize k8s from the kubeadm.yaml file

[root@master ~]# kubeadm init --config=kubeadm.yaml --ignore-preflight-errors=SystemVerification

[root@master ~]# mkdir -p $HOME/.kube


[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@master ~]#

Next, run the join command on node-1 and node-2:

[root@node-1 ~]# kubeadm join 192.168.182.142:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:f655c7887580b8aae5a4b510253c14c76615b0ccc2d8a84aa9759fd02d278f41

Check on master that the nodes have joined:

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 8m22s v1.20.6

node-1 NotReady <none> 67s v1.20.6

node-2 NotReady <none> 61s v1.20.6

[root@master ~]#

4.5.17. Label the nodes with the worker role

[root@master ~]# kubectl label node node-1 node-role.kubernetes.io/worker=worker

node/node-1 labeled

[root@master ~]# kubectl label node node-2 node-role.kubernetes.io/worker=worker

node/node-2 labeled

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 15m v1.20.6

node-1 NotReady worker 8m12s v1.20.6

node-2 NotReady worker 8m6s v1.20.6

[root@master ~]#

4.5.18. Install the network plugin

First upload calico.yaml to /root/ with Xftp.

[root@master ~]# kubectl apply -f calico.yaml

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 4h27m v1.20.6

node-1 Ready worker 4h25m v1.20.6

node-2 Ready worker 4h25m v1.20.6

[root@master ~]#

Once STATUS changes to Ready, the installation has succeeded.

4.5.19. Install metrics-server to enable kubectl top node

First install metrics-server, which collects CPU and memory usage for nodes and pods.

- Download the metrics-server bundle (YAML plus image) elsewhere, upload it to the VM, and unzip it:

[root@master pod]# unzip metrics-server.zip
[root@master pod]#

- Enter the metrics-server directory and copy the image tarball to the worker nodes:

[root@master metrics-server]# ls
components.yaml  metrics-server-v0.6.3.tar
[root@master metrics-server]# scp metrics-server-v0.6.3.tar node-1:/root
metrics-server-v0.6.3.tar 100% 67MB 150.8MB/s 00:00
[root@master metrics-server]# scp metrics-server-v0.6.3.tar node-2:/root
metrics-server-v0.6.3.tar 100% 67MB 151.7MB/s 00:00
[root@master metrics-server]#

- Load the image on all three machines:

[root@node-1 ~]# docker load -i metrics-server-v0.6.3.tar

- Start the metrics-server pod:

[root@master metrics-server]# kubectl apply -f components.yaml
serviceaccount/metrics-server created
clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
clusterrole.rbac.authorization.k8s.io/system:metrics-server created
rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created
service/metrics-server created
deployment.apps/metrics-server created
apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
[root@master metrics-server]#
[root@master metrics-server]# kubectl get pod -n kube-system|grep metrics
metrics-server-769f6c8464-ctxl7 1/1 Running 0 49s
[root@master metrics-server]#

- Check that it works:

[root@master metrics-server]# kubectl top node
NAME     CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
master   118m         5%     1180Mi          68%
node-1   128m         6%     985Mi           57%
node-2   60m          3%     634Mi           36%
[root@master metrics-server]#

4.5.20. Allow the worker nodes to run kubectl get node

First copy the admin kubeconfig from master to the nodes:

[root@master ~]# scp /etc/kubernetes/admin.conf node-1:/root

admin.conf 100% 5567 5.4MB/s 00:00

[root@master ~]# scp /etc/kubernetes/admin.conf node-2:/root

admin.conf 100% 5567 7.4MB/s 00:00

[root@master ~]#

Then run the following on each node:

mkdir -p $HOME/.kube

sudo cp -i /root/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@node-1 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 28m v1.20.6

node-1 Ready worker 27m v1.20.6

node-2 Ready worker 27m v1.20.6

[root@node-1 ~]#

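5. Set up the workloads inside k8s first

5.1. Set up the NFS server to provide site data to the web service, and create the PV and PVC

Install nfs-utils on every machine. All nodes in the k8s cluster should have it, because a node needs NFS client support to create the volume. On node-1 and node-2 the NFS service does not need to be started; the nodes only mount the directory shared by the NFS server.

yum install nfs-utils -y

Only the NFS server needs to run the NFS service:

[root@nfs ~]# service nfs restart

Redirecting to /bin/systemctl restart nfs.service

[root@nfs ~]#

The firewall and SELinux are both disabled on the NFS server.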

5.1.1. Configure the shared directory

[root@nfs ~]# vim /etc/exports

[root@nfs ~]# cat /etc/exports

/web 192.168.182.0/24(rw,sync,all_squash)

[root@nfs ~]#

5.1.2. Create the shared directory

[root@nfs ~]# mkdir /web

[root@nfs ~]# cd /web/

[root@nfs web]# echo "welcome to my-web" >index.html

[root@nfs web]# cat index.html

welcome to my-web

[root@nfs web]#

Set the permissions on /web so that other users can read and write it:

[root@nfs web]# chmod 777 /web

[root@nfs web]# chown nfsnobody:nfsnobody /web

[root@nfs web]# ll -d /web

drwxrwxrwx. 2 nfsnobody nfsnobody 24 Mar 27 18:21 /web

[root@nfs web]#

5.1.3. Refresh NFS or re-export the shared directory

exportfs -a  export all shared directories

exportfs -v  show the exported directories

exportfs -r  re-export all shared directories

[root@nfs web]# exportfs -rv

exporting 192.168.182.0/24:/web

[root@nfs web]#

Alternatively, restart the service:

[root@nfs web]# service nfs restart

Redirecting to /bin/systemctl restart nfs.service

[root@nfs web]#

5.1.4. Create a PV backed by the NFS share

[root@master storage]# vim nfs-pv.yaml

[root@master storage]# cat nfs-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: sc-nginx-pv-2
  labels:
    type: sc-nginx-pv-2
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany
  storageClassName: nfs        # name of the storage class
  nfs:
    path: "/web"               # directory exported by the NFS server
    server: 192.168.182.140    # IP address of the NFS server
    readOnly: false            # access mode

[root@master storage]#

5.1.5. Apply it

[root@master storage]# kubectl apply -f nfs-pv.yaml

persistentvolume/sc-nginx-pv-2 created

[root@master storage]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

sc-nginx-pv-2 5Gi RWX Retain Bound default/sc-nginx-pvc-2 nfs 5s

task-pv-volume 10Gi RWO Retain Bound default/task-pv-claim manual 6h17m

[root@master storage]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

sc-nginx-pvc-2 Bound sc-nginx-pv-2 5Gi RWX nfs 9m19s

task-pv-claim Bound task-pv-volume 10Gi RWO manual 5h58m

[root@master storage]#

5.1.6. Create a PVC that uses the storage class (nfs)

[root@master storage]# vim pvc-sc.yaml

[root@master storage]# cat pvc-sc.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:
  name: sc-nginx-pvc-2
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: nfs

[root@master storage]#

[root@master storage]# kubectl apply -f pvc-sc.yaml

persistentvolumeclaim/sc-nginx-pvc-2 created

[root@master storage]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

sc-nginx-pvc-2 Pending nfs 8s

task-pv-claim Bound task-pv-volume 10Gi RWO manual 5h49m

[root@master storage]#

5.1.7. Create a pod that uses the PVC

[root@master storage]# vim pod-nfs.yaml

[root@master storage]# cat pod-nfs.yaml

apiVersion: v1

kind: Pod

metadata:
  name: sc-pv-pod-nfs
spec:
  volumes:
  - name: sc-pv-storage-nfs
    persistentVolumeClaim:
      claimName: sc-nginx-pvc-2
  containers:
  - name: sc-pv-container-nfs
    image: nginx
    imagePullPolicy: IfNotPresent
    ports:
    - containerPort: 80
      name: "http-server"
    volumeMounts:
    - mountPath: "/usr/share/nginx/html"
      name: sc-pv-storage-nfs

[root@master storage]#

Apply it:

[root@master storage]# kubectl apply -f pod-nfs.yaml

pod/sc-pv-pod-nfs created

[root@master storage]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

sc-pv-pod-nfs 1/1 Running 0 63s 10.244.84.130 node-1 <none> <none>


[root@master storage]#

[root@master storage]#

5.1.8. Test

[root@master storage]# curl 10.244.84.130

welcome to my-web

[root@master storage]#

Edit index.html on the NFS server, then check the result from master:

[root@nfs web]# vim index.html

welcome to my-web

welcome to changsha

[root@nfs-server web]#

[root@master storage]# curl 10.244.84.130

welcome to my-web

welcome to changsha


[root@master storage]#

5.2. Pull the image of our Go application from the Harbor registry

5.2.1. Build the Go code into an image

[root@docker ~]# mkdir /go

[root@docker ~]# cd /go

[root@docker go]# ls

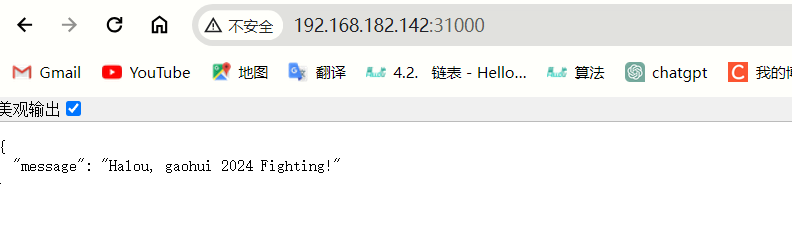

[root@docker go]# vim server.go

[root@docker go]# cat server.go

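package main

import (
	"net/http"

	"github.com/gin-gonic/gin"
)

func main() {
	r := gin.Default()
	// Answer GET / with a small JSON greeting.
	r.GET("/", func(c *gin.Context) {
		c.JSON(http.StatusOK, gin.H{
			"message": "Halou, gaohui 2024 Fighting!",
		})
	})
	// With no address given and PORT unset, gin listens on :8080.
	r.Run()
}

[root@master go]#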

[root@docker go]# go mod init web

go: creating new go.mod: module web

go: to add module requirements and sums:
	go mod tidy

[root@docker go]# go env -w GOPROXY=https://goproxy.cn,direct

[root@docker go]#

[root@docker go]# go mod tidy

[root@docker go]# go run server.go

[GIN-debug] [WARNING] Creating an Engine instance with the Logger and Recovery middleware already attached.

[GIN-debug] [WARNING] Running in "debug" mode. Switch to "release" mode in production.
 - using env:	export GIN_MODE=release
 - using code:	gin.SetMode(gin.ReleaseMode)

[GIN-debug] GET / --> main.main.func1 (3 handlers)

[GIN-debug] [WARNING] You trusted all proxies, this is NOT safe. We recommend you to set a value.

Please check https://pkg.go.dev/github.com/gin-gonic/gin#readme-don-t-trust-all-proxies for details.

[GIN-debug] Environment variable PORT is undefined. Using port :8080 by default

[GIN-debug] Listening and serving HTTP on :8080

[GIN] 2024/02/01 - 19:05:04 | 200 | 137.998µs | 192.168.153.1 | GET "/"

Compile server.go into a binary (as a test):

[root@docker go]# go build -o ghweb .

[root@docker go]# ls

ghweb go.mod go.sum server.go

[root@docker go]# ./ghweb

[GIN-debug] [WARNING] Creating an Engine instance with the Logger and Recovery middleware already attached.

[GIN-debug] [WARNING] Running in "debug" mode. Switch to "release" mode in production.
 - using env:	export GIN_MODE=release
 - using code:	gin.SetMode(gin.ReleaseMode)

[GIN-debug] GET / --> main.main.func1 (3 handlers)

[GIN-debug] [WARNING] You trusted all proxies, this is NOT safe. We recommend you to set a value.

Please check https://pkg.go.dev/github.com/gin-gonic/gin#readme-don-t-trust-all-proxies for details.

[GIN-debug] Environment variable PORT is undefined. Using port :8080 by default

[GIN-debug] Listening and serving HTTP on :8080

^C

[root@docker go]#

Write the Dockerfile:

[root@docker go]# vim Dockerfile

FROM centos:7

WORKDIR /go

COPY . /go

RUN ls /go && pwd

ENTRYPOINT ["/go/ghweb"]

[root@docker go]#

Build the image:

[root@docker go]# docker build -t ghweb:1.0 .

[+] Building 29.2s (9/9) FINISHED docker:default
 => [internal] load build definition from Dockerfile 0.0s
 => => transferring dockerfile: 117B 0.0s
 => [internal] load metadata for docker.io/library/centos:7 21.0s
 => [internal] load .dockerignore 0.0s
 => => transferring context: 2B 0.0s
 => [1/4] FROM docker.io/library/centos:7@sha256:9d4bcbbb213dfd745b58be38b13b99 7.8s
 => => resolve docker.io/library/centos:7@sha256:9d4bcbbb213dfd745b58be38b13b99 0.0s
 => => sha256:9d4bcbbb213dfd745b58be38b13b996ebb5ac315fe75711bd 1.20kB / 1.20kB 0.0s
 => => sha256:dead07b4d8ed7e29e98de0f4504d87e8880d4347859d839686a31 529B / 529B 0.0s
 => => sha256:eeb6ee3f44bd0b5103bb561b4c16bcb82328cfe5809ab675b 2.75kB / 2.75kB 0.0s
 => => sha256:2d473b07cdd5f0912cd6f1a703352c82b512407db6b05b4 76.10MB / 76.10MB 4.2s
 => => extracting sha256:2d473b07cdd5f0912cd6f1a703352c82b512407db6b05b43f25537 3.4s
 => [internal] load build context 0.0s
 => => transferring context: 11.61MB 0.0s
 => [2/4] WORKDIR /go 0.1s
 => [3/4] COPY . /go 0.1s
 => [4/4] RUN ls /go && pwd 0.3s
 => exporting to image 0.0s
 => => exporting layers 0.0s
 => => writing image sha256:59a5509da737328cc0dbe6c91a33409b7cdc5e5eeb8a46efa7d 0.0s
 => => naming to docker.io/library/ghweb:1.0 0.0s

[root@master go]#

List the image:

[root@docker go]# docker images|grep ghweb

ghweb 1.0 458531408d3b 11 seconds ago 216MB

[root@docker go]#

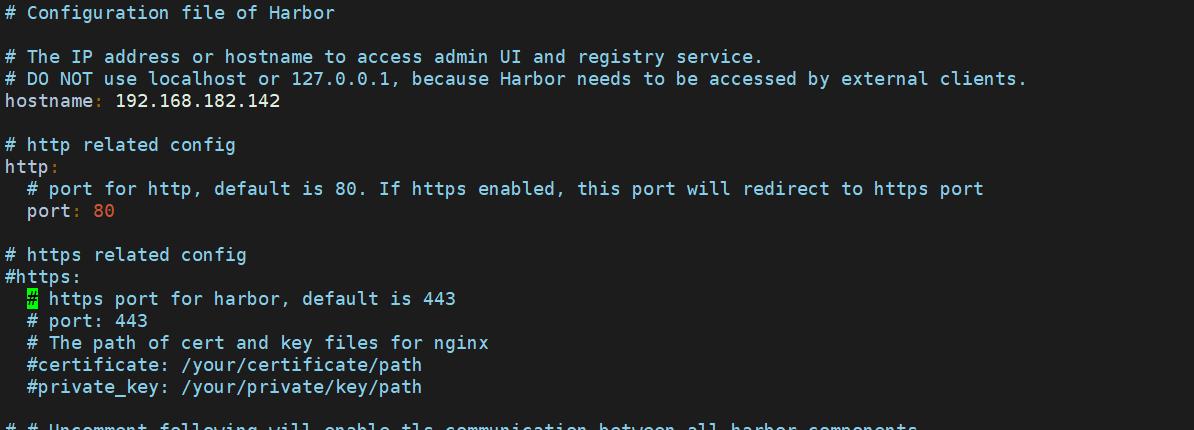

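5.2.2. Push it to the Harbor registry

First download version 2.1.0 from the official release page: https://github.com/goharbor/harbor/releases/tag/v2.1.0

Create a harbor directory, put the installer in it and unpack it: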

[root@nfs ~]# mkdir /harbor

[root@nfs ~]# cd /harbor/

[root@nfs harbor]#

[root@nfs harbor]# tar xf harbor-offline-installer-v2.1.0.tgz

[root@nfs harbor]# ls

docker-compose harbor harbor-offline-installer-v2.1.0.tgz

[root@nfs harbor]#

[root@nfs harbor]# cd harbor

[root@nfs harbor]# ls

common.sh harbor.v2.1.0.tar.gz harbor.yml.tmpl install.sh LICENSE prepare

[root@nfs harbor]# cp harbor.yml.tmpl harbor.yml

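Edit harbor.yml: set hostname and port, and comment out the https section (to keep things simple):

[root@nfs harbor]# vim harbor.yml

Then copy the docker-compose binary into the current directory and make it executable: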

[root@nfs harbor]# cp ../docker-compose .

[root@nfs harbor]# ls

common.sh docker-compose harbor.v2.1.0.tar.gz harbor.yml harbor.yml.tmpl install.sh LICENSE prepare

[root@nfs harbor]# chmod +x docker-compose

[root@nfs harbor]# cp docker-compose /usr/bin/

[root@nfs harbor]# ./install.sh

docker-compose is copied to /usr/bin because that directory is on PATH, so the command can be found.

Check that Harbor is running:

[root@nfs harbor]# docker compose ls

NAME STATUS CONFIG FILES

harbor running(9) /harbor/harbor/docker-compose.yml


[root@nfs harbor]#

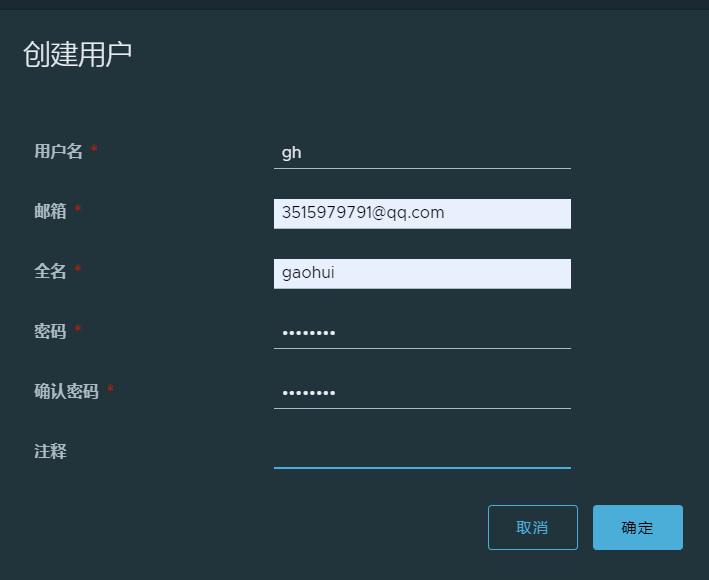

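First create a project (gao in this setup).

Then create a user:

Username: gh

Password: Sc123456

Then add the user as a member of the project and log in as gh.

Push the image to the registry: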

[root@nfs-server docker]# docker login 192.168.182.140:8089

Username: gh

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@nfs-server docker]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

192.168.182.140:8089/gao/ghweb 1.0 458531408d3b 12 minutes ago 216MB

ghweb 1.0 458531408d3b 12 minutes ago 216MB

registry.cn-beijing.aliyuncs.com/google_registry/hpa-example latest 4ca4c13a6d7c 8 years ago 481MB

[root@nfs-server docker]# docker push 192.168.182.140:8089/gao/ghweb:1.0

The push refers to repository [192.168.182.140:8089/gao/ghweb]

aed658a8d439: Pushed

3e7a541e1360: Pushed

a72a96e845e5: Pushed

174f56854903: Pushed

1.0: digest: sha256:53ad51fdfd846e8494c547609d2f45331150d2da5081c2f7867affdc65c55cfd size: 1153

[root@nfs-server docker]#

Pull the ghweb image on the nodes

Every node in the k8s cluster logs in to Harbor so that it can pull images from it.

[root@master ~]# vim /etc/docker/daemon.json


[root@master ~]# cat /etc/docker/daemon.json

{
  "registry-mirrors": [
    "https://rsbud4vc.mirror.aliyuncs.com",
    "https://registry.docker-cn.com",
    "https://docker.mirrors.ustc.edu.cn",
    "https://dockerhub.azk8s.cn",
    "http://hub-mirror.c.163.com"
  ],
  "insecure-registries": ["192.168.182.140:8089"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}

[root@master ~]#

Reload the configuration and restart Docker:

[root@master ~]# systemctl daemon-reload && systemctl restart docker

Log in to Harbor:

[root@master ~]# docker login 192.168.182.140:8089

Username: gh

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@master ~]#

Pull the image from Harbor:

[root@master ~]# docker pull 192.168.182.140:8089/gao/ghweb:1.0

1.0: Pulling from gao/ghweb

2d473b07cdd5: Pull complete

deb4bb5a3691: Pull complete

880231ee488c: Pull complete

ec220df6aef4: Pull complete

Digest: sha256:53ad51fdfd846e8494c547609d2f45331150d2da5081c2f7867affdc65c55cfd

Status: Downloaded newer image for 192.168.182.140:8089/gao/ghweb:1.0

192.168.182.140:8089/gao/ghweb:1.0

[root@master ~]# docker images|grep ghweb

192.168.182.140:8089/gao/ghweb 1.0 458531408d3b 20 minutes ago 216MB

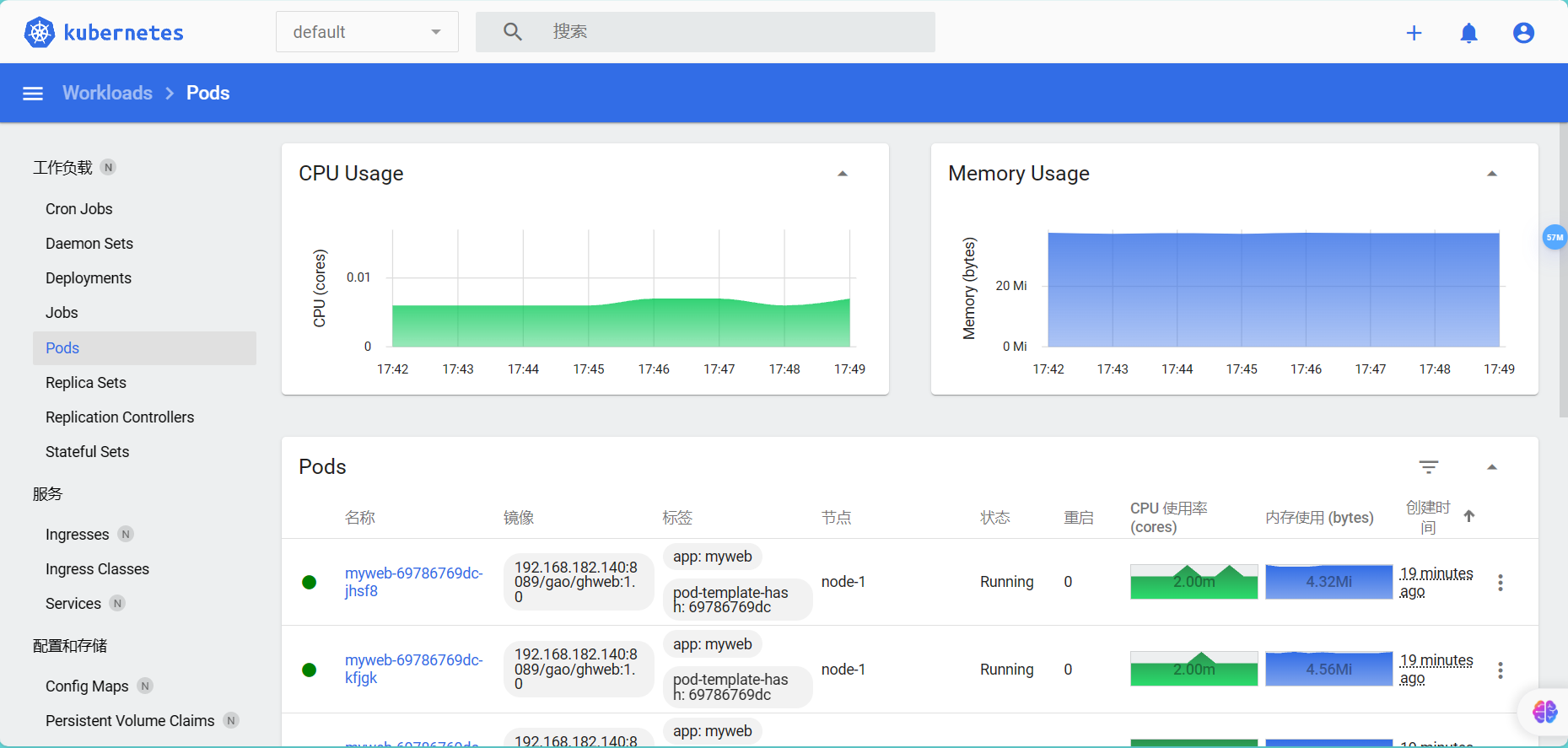

[root@master ~]#

5.3. Deploy the web pods with HPA: scale horizontally when CPU utilization reaches 50%, with a minimum of 10 and a maximum of 20 application pods

[root@master ~]# mkdir /hpa


[root@master ~]# cd /hpa

[root@master hpa]# vim my-web.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: myweb
  name: myweb
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
      - name: myweb
        image: 192.168.182.140:8089/gao/ghweb:1.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080   # gin listens on :8080 by default (see the startup log above)
        resources:
          limits:
            cpu: 300m
          requests:
            cpu: 100m
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: myweb-svc
  name: myweb-svc
spec:
  selector:
    app: myweb
  type: NodePort
  ports:
  - port: 8080
    protocol: TCP
    targetPort: 8080
    nodePort: 30001

[root@master hpa]#

[root@master hpa]# kubectl apply -f my-web.yaml

deployment.apps/myweb created

service/myweb-svc created

[root@master hpa]#

Create the HPA:

[root@master hpa]# kubectl autoscale deployment myweb --cpu-percent=50 --min=10 --max=20

horizontalpodautoscaler.autoscaling/myweb autoscaled


[root@master hpa]#

[root@master hpa]# kubectl get pod

NAME READY STATUS RESTARTS AGE

myweb-7558d9fbc4-869f5 1/1 Running 0 12s

myweb-7558d9fbc4-c5wdr 1/1 Running 0 12s

myweb-7558d9fbc4-dgdbs 1/1 Running 0 82s

myweb-7558d9fbc4-hmt62 1/1 Running 0 12s

myweb-7558d9fbc4-r84bc 1/1 Running 0 12s

myweb-7558d9fbc4-rld88 1/1 Running 0 82s

myweb-7558d9fbc4-s82vh 1/1 Running 0 82s

myweb-7558d9fbc4-sn5dp 1/1 Running 0 12s

myweb-7558d9fbc4-t9pvl 1/1 Running 0 12s

myweb-7558d9fbc4-vzlnb 1/1 Running 0 12s

sc-pv-pod-nfs 1/1 Running 1 7h27m

[root@master hpa]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

myweb Deployment/myweb 0%/50% 10 20 10 33s

[root@master hpa]#
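To make the scaling rule concrete: the Kubernetes documentation describes the HPA target as desiredReplicas = ceil(currentReplicas × currentUtilization / targetUtilization), bounded by the configured minimum and maximum, where utilization is measured against the pod's CPU request (100m here). The sketch below reproduces only that arithmetic; `hpa_desired_replicas` is a hypothetical helper name, and it deliberately ignores the controller's tolerance band and stabilization windows.

```shell
# Sketch: HPA replica arithmetic with integer math.
# Arguments: current replicas, current CPU utilization (%), target utilization (%), min, max.
hpa_desired_replicas() {
    current_replicas="$1"
    current_util="$2"
    target_util="$3"
    min="$4"
    max="$5"
    # ceil(current_replicas * current_util / target_util)
    desired=$(( (current_replicas * current_util + target_util - 1) / target_util ))
    if [ "$desired" -lt "$min" ]; then desired="$min"; fi
    if [ "$desired" -gt "$max" ]; then desired="$max"; fi
    echo "$desired"
}

# With --cpu-percent=50 --min=10 --max=20 as above:
hpa_desired_replicas 10 0 50 10 20     # idle cluster: stays at the minimum of 10
hpa_desired_replicas 10 80 50 10 20    # 80% average utilization: 16 replicas
hpa_desired_replicas 10 200 50 10 20   # heavy load: capped at the maximum of 20
```

This also explains the pod list above: the Deployment asks for 3 replicas, but the HPA minimum of 10 immediately raises the count to 10.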

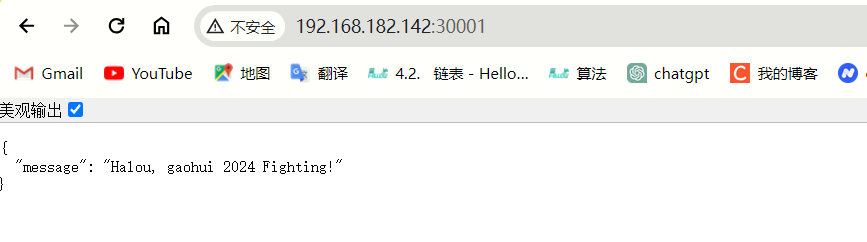

Access the service on port 30001.

5.5. Use probes (liveness, readiness, startup) with the httpGet and exec methods to monitor the web pods and restart them as soon as a problem occurs, improving their reliability

[root@master hpa]# vim my-web.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: myweb
  name: myweb
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
      - name: myweb
        image: 192.168.182.140:8089/gao/ghweb:1.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
        resources:
          limits:
            cpu: 300m
          requests:
            cpu: 100m
        livenessProbe:
          exec:
            command:
            - ls
            - /
          initialDelaySeconds: 5
          periodSeconds: 5
        readinessProbe:
          exec:
            command:
            - ls
            - /
          initialDelaySeconds: 5
          periodSeconds: 5
        startupProbe:
          exec:
            command:
            - ls
            - /
          failureThreshold: 3
          periodSeconds: 10
        lifecycle:
          postStart:
            exec:
              command: ["/bin/sh", "-c", "echo Container started"]
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: myweb-svc
  name: myweb-svc
spec:
  selector:
    app: myweb
  type: NodePort
  ports:
  - port: 8080
    protocol: TCP
    targetPort: 8080
    nodePort: 30001

[root@master hpa]#

[root@master hpa]# kubectl apply -f my-web.yaml

deployment.apps/myweb configured

service/myweb-svc unchanged

[root@master hpa]#

Liveness: exec [ls /] delay=5s timeout=1s period=5s #success=1 #failure=3

Readiness: exec [ls /] delay=5s timeout=1s period=5s #success=1 #failure=3

Startup: exec [ls /] delay=0s timeout=1s period=10s #success=1 #failure=3
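The probe settings translate into time budgets. For a startup probe, the container has roughly initialDelaySeconds + failureThreshold × periodSeconds to come up before it is restarted. The sketch below only does that arithmetic for the values shown above; `probe_budget_seconds` is a hypothetical helper name, and the result is an approximation that ignores the per-attempt timeout.

```shell
# Sketch: approximate time budget of a probe before it is considered failed.
# Arguments: failureThreshold, periodSeconds, optional initialDelaySeconds (default 0).
probe_budget_seconds() {
    failure_threshold="$1"
    period_seconds="$2"
    initial_delay="${3:-0}"
    echo $(( initial_delay + failure_threshold * period_seconds ))
}

probe_budget_seconds 3 10      # startup probe above: about 30 seconds to start
probe_budget_seconds 3 5 5     # liveness probe above: about 20 seconds counting the initial delay
```

If the Go service ever needs longer to start, raising failureThreshold on the startup probe is the usual knob, since the liveness and readiness probes only begin once the startup probe has succeeded.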

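5.6. Set up the ingress controller and ingress rules for domain-based load balancing of the web service

The ingress controller is essentially an nginx instance that performs the load balancing.

An Ingress is the k8s resource that manages the nginx configuration (nginx.conf); it is how parameters are passed to the ingress controller.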

[root@master ingress]# ls

ingress-controller-deploy.yaml nfs-pvc.yaml sc-ingress.yaml

ingress_nginx_controller.tar nfs-pv.yaml sc-nginx-svc-1.yaml

kube-webhook-certgen-v1.1.0.tar.gz nginx-deployment-nginx-svc-2.yaml

[root@master ingress]#

- ingress-controller-deploy.yaml: the YAML file used to deploy the ingress controller
- ingress_nginx_controller.tar: the ingress-nginx-controller image
- kube-webhook-certgen-v1.1.0.tar.gz: the kube-webhook-certgen image
- sc-ingress.yaml: the configuration file that creates the Ingress
- sc-nginx-svc-1.yaml: YAML that starts the sc-nginx-svc service and its pods
- nginx-deployment-nginx-svc-2.yaml: YAML that starts the sc-nginx-svc-2 service and its pods

[root@master ingress]# scp kube-webhook-certgen-v1.1.0.tar.gz node-2:/root

kube-webhook-certgen-v1.1.0.tar.gz 100% 47MB 123.6MB/s 00:00

[root@master ingress]# scp kube-webhook-certgen-v1.1.0.tar.gz node-1:/root

kube-webhook-certgen-v1.1.0.tar.gz 100% 47MB 144.4MB/s 00:00

[root@master ingress]# scp ingress_nginx_controller.tar node-1:/root

ingress_nginx_controller.tar 100% 276MB 129.2MB/s 00:02

[root@master ingress]# scp ingress_nginx_controller.tar node-2:/root

ingress_nginx_controller.tar 100% 276MB 129.8MB/s 00:02

[root@master ingress]# docker load -i ingress_nginx_controller.tar

e2eb06d8af82: Loading layer 5.865MB/5.865MB

ab1476f3fdd9: Loading layer 120.9MB/120.9MB

ad20729656ef: Loading layer 4.096kB/4.096kB

0d5022138006: Loading layer 38.09MB/38.09MB

8f757e3fe5e4: Loading layer 21.42MB/21.42MB

a933df9f49bb: Loading layer 3.411MB/3.411MB

7ce1915c5c10: Loading layer 309.8kB/309.8kB

986ee27cd832: Loading layer 6.141MB/6.141MB

b94180ef4d62: Loading layer 38.37MB/38.37MB

d36a04670af2: Loading layer 2.754MB/2.754MB

2fc9eef73951: Loading layer 4.096kB/4.096kB

1442cff66b8e: Loading layer 51.67MB/51.67MB

1da3c77c05ac: Loading layer 3.584kB/3.584kB

Loaded image: registry.cn-hangzhou.aliyuncs.com/yutao517/ingress_nginx_controller:v1.1.0

[root@master ingress]# docker load -i kube-webhook-certgen-v1.1.0.tar.gz

c0d270ab7e0d: Loading layer 3.697MB/3.697MB

ce7a3c1169b6: Loading layer 45.38MB/45.38MB

Loaded image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1

[root@master ingress]#

'Start the ingress controller with ingress-controller-deploy.yaml'

[root@master ingress]# kubectl apply -f ingress-controller-deploy.yaml

namespace/ingress-nginx created

serviceaccount/ingress-nginx created

configmap/ingress-nginx-controller created

clusterrole.rbac.authorization.k8s.io/ingress-nginx created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created

role.rbac.authorization.k8s.io/ingress-nginx created

rolebinding.rbac.authorization.k8s.io/ingress-nginx created

service/ingress-nginx-controller-admission created

service/ingress-nginx-controller created

deployment.apps/ingress-nginx-controller created

ingressclass.networking.k8s.io/nginx created

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

serviceaccount/ingress-nginx-admission created

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

role.rbac.authorization.k8s.io/ingress-nginx-admission created

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

job.batch/ingress-nginx-admission-create created

job.batch/ingress-nginx-admission-patch created

[root@master ingress]#

[root@master ingress]# kubectl get ns

NAME STATUS AGE

default Active 46h

ingress-nginx Active 26s

kube-node-lease Active 46h

kube-public Active 46h

kube-system Active 46h

[root@master ingress]# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.99.32.216 <none> 80:32351/TCP,443:32209/TCP 52s

ingress-nginx-controller-admission ClusterIP 10.108.207.217 <none> 443/TCP 52s

[root@master ingress]# kubectl get pod -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-6wrfc 0/1 Completed 0 59s

ingress-nginx-admission-patch-z4hwb 0/1 Completed 1 59s

ingress-nginx-controller-589dccc958-9cbht 1/1 Running 0 59s

ingress-nginx-controller-589dccc958-r79rt 1/1 Running 0 59s

[root@master ingress]#

Next, create the pods and the Service that exposes them

[root@master ingress]# cat sc-nginx-svc-1.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: sc-nginx-deploy
  labels:
    app: sc-nginx-feng
spec:
  replicas: 3
  selector:
    matchLabels:
      app: sc-nginx-feng
  template:
    metadata:
      labels:
        app: sc-nginx-feng
    spec:
      containers:
      - name: sc-nginx-feng
        image: nginx
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: sc-nginx-svc
  labels:
    app: sc-nginx-svc
spec:
  selector:
    app: sc-nginx-feng
  ports:
  - name: name-of-service-port
    protocol: TCP
    port: 80
    targetPort: 80

[root@master ingress]#

[root@master ingress]# kubectl apply -f sc-nginx-svc-1.yaml

deployment.apps/sc-nginx-deploy created

service/sc-nginx-svc created

[root@master ingress]#

[root@master ingress]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

sc-nginx-deploy 3/3 3 3 8m7s

[root@master ingress]#

[root@master ingress]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 47h

myweb-svc NodePort 10.98.10.240 <none> 8080:30001/TCP 22h

sc-nginx-svc ClusterIP 10.111.4.156 <none> 80/TCP 9m27s

[root@master ingress]#

[root@master ingress]# kubectl apply -f sc-ingress.yaml

ingress.networking.k8s.io/sc-ingress created

[root@master ingress]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

sc-ingress nginx www.feng.com,www.zhang.com 80 8s

[root@master ingress]#

[root@master ingress]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

sc-ingress nginx www.feng.com,www.zhang.com 192.168.182.143,192.168.182.144 80 27s

'Check whether nginx.conf inside the ingress controller contains the rules generated from the Ingress'

[root@master ingress]# kubectl get pod -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-6wrfc 0/1 Completed 0 68m

ingress-nginx-admission-patch-z4hwb 0/1 Completed 1 68m

ingress-nginx-controller-589dccc958-9cbht 1/1 Running 0 68m

ingress-nginx-controller-589dccc958-r79rt 1/1 Running 0 68m

[root@master ingress]#

[root@master ingress]# kubectl exec -it ingress-nginx-controller-589dccc958-9cbht -n ingress-nginx -- bash

bash-5.1$ cat nginx.conf|grep zhang.com

## start server www.zhang.com

server_name www.zhang.com ;

## end server www.zhang.com

bash-5.1$
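The contents of sc-ingress.yaml are not shown in this article. As a rough sketch only, the helper below prints an Ingress manifest with one host rule per `host=service` pair. The function name `render_ingress`, the backend port 80, the `/` path with `pathType: Prefix`, and the host-to-service mapping in the usage comment are assumptions for illustration, not the author's actual file.

```shell
# Sketch: print an Ingress manifest for the nginx ingress class.
# Usage: render_ingress <ingress-name> <host>=<service> [<host>=<service> ...]
# Assumption: every backend Service listens on port 80.
render_ingress() {
  local name="$1"; shift
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ${name}
spec:
  ingressClassName: nginx
  rules:
EOF
  local pair host svc
  for pair in "$@"; do
    host="${pair%%=*}"   # text before the first '='
    svc="${pair#*=}"     # text after the first '='
    cat <<EOF
  - host: ${host}
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: ${svc}
            port:
              number: 80
EOF
  done
}

# Example (not executed here):
# render_ingress sc-ingress www.feng.com=sc-nginx-svc www.zhang.com=sc-nginx-svc-2 | kubectl apply -f -
```

Printing to standard output keeps the helper free of side effects; the result can be reviewed first and then piped to kubectl apply -f - as in the comment.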

Access the sites by domain name from another host (here the NFS server) or from a Windows machine

'Add the hosts entries first'

[root@nfs ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.182.143 www.feng.com

192.168.182.144 www.zhang.com

[root@nfs-server ~]#

[root@nfs etc]# curl www.feng.com

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p><p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p><p><em>Thank you for using nginx.</em></p>

</body>

</html>

'Start the second Service and its pods, this time backed by PV + PVC + NFS'

[root@master ingress]# cat nfs-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: sc-nginx-pv
  labels:
    type: sc-nginx-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: "/web"              # directory exported by the NFS server
    server: 192.168.182.140   # IP address of the NFS server
    readOnly: false

[root@master ingress]#

[root@master ingress]# kubectl apply -f nfs-pv.yaml

persistentvolume/sc-nginx-pv created

[root@master ingress]# cat nfs-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: sc-nginx-pvc
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: nfs   # bind to a PV of the nfs storage class

[root@master ingress]# kubectl apply -f nfs-pvc.yaml

persistentvolumeclaim/sc-nginx-pvc created

[root@master ingress]#

[root@master ingress]# kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/sc-nginx-pv 10Gi RWX Retain Bound default/sc-nginx-pvc nfs 31s

persistentvolume/sc-nginx-pv-2 5Gi RWX Retain Bound default/sc-nginx-pvc-2 nfs 31h

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/sc-nginx-pvc Bound sc-nginx-pv 10Gi RWX nfs 28s

persistentvolumeclaim/sc-nginx-pvc-2 Bound sc-nginx-pv-2 5Gi RWX nfs 31h

[root@master ingress]#

Start the second Deployment and the second Service

[root@master ingress]# kubectl apply -f nginx-deployment-nginx-svc-2.yaml

deployment.apps/nginx-deployment created

service/sc-nginx-svc-2 created

[root@master ingress]#

[root@master ingress]# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.99.32.216 <none> 80:32351/TCP,443:32209/TCP 81m

ingress-nginx-controller-admission ClusterIP 10.108.207.217 <none> 443/TCP 81m

[root@master ingress]#

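The sites can be reached either through NodePort 32351 exposed on the nodes (see the Service listing above) or through port 80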

[root@nfs ~]# curl www.zhang.com

welcome to my-web

welcome to changsha

Halou-gh

[root@nfs ~]#

[root@nfs ~]# curl www.feng.com

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p><p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p><p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@nfs ~]#

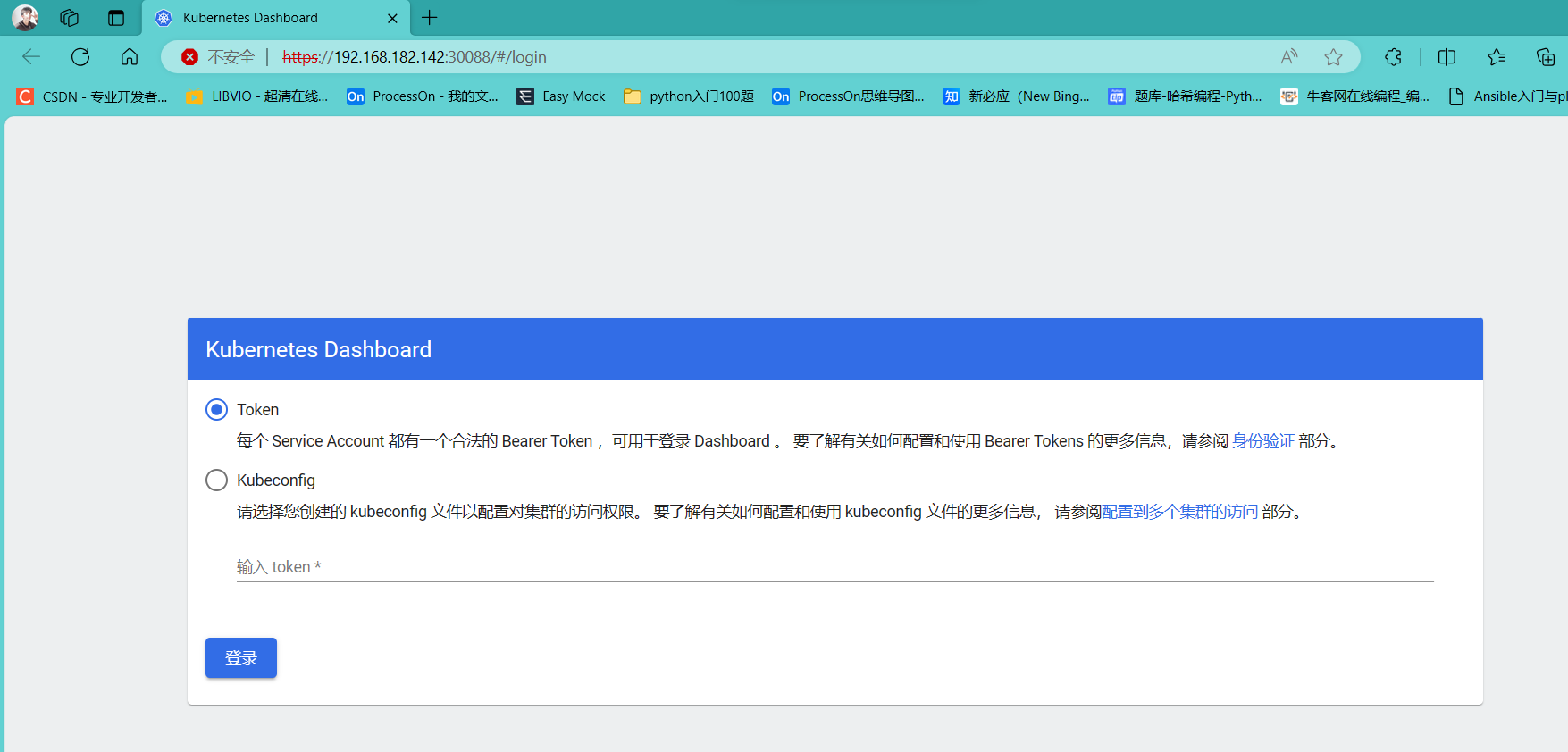

5.7 Deploy and access the Kubernetes Dashboard

'Download the YAML file' (Dashboard v2.7.0 is used here)

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

'Edit the file and set the type of the kubernetes-dashboard Service to NodePort'

[root@master dashboard]# vim recommended.yaml

---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort          # Service type
  ports:
  - port: 443
    targetPort: 8443
    nodePort: 30088       # port exposed on every node
  selector:
    k8s-app: kubernetes-dashboard
---

Leave the rest of the file unchanged, then apply it to start the Dashboard components

'Start the dashboard'

[root@master dashboard]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

[root@master dashboard]#

'Check that the dashboard pods are running'

[root@master dashboard]# kubectl get pod --all-namespaces|grep dashboard

kubernetes-dashboard dashboard-metrics-scraper-66dd8bdd86-nvwsj 1/1 Running 0 2m20s

kubernetes-dashboard kubernetes-dashboard-785c75749d-nqsm7 1/1 Running 0 2m20s

[root@master dashboard]#

Check that the Services are up

[root@master dashboard]# kubectl get svc --all-namespaces|grep dash

kubernetes-dashboard dashboard-metrics-scraper ClusterIP 10.103.48.55 <none> 8000/TCP 3m45s

kubernetes-dashboard kubernetes-dashboard NodePort 10.96.62.20 <none> 443:30088/TCP 3m45s

[root@master dashboard]#

Open the Dashboard in a browser over HTTPS on port 30088:

https://192.168.182.142:30088/

A login page appears and asks for a token.

Look up the name of the Dashboard's token secret, then describe that secret to read the token:

[root@master dashboard]# kubectl get secret -n kubernetes-dashboard|grep dashboard-token

kubernetes-dashboard-token-9hsh5 kubernetes.io/service-account-token 3 6m58s

[root@master dashboard]#

[root@master dashboard]# kubectl describe secret kubernetes-dashboard-token-9hsh5 -n kubernetes-dashboard

Name: kubernetes-dashboard-token-9hsh5

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: kubernetes-dashboard

kubernetes.io/service-account.uid: d05961ce-a39b-4445-bc1b-643439b59f41

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1066 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkRNdlRFVE9XeDFPdU95Q3FEcEtYUXJHZ0dvcnJPdlBUdEp3MEVtSzF5MHcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi05aHNoNSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImQwNTk2MWNlLWEzOWItNDQ0NS1iYzFiLTY0MzQzOWI1OWY0MSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.sbnbgil-sHV71WF1K4nOKTQKOOXNIam-NbUTFqfCdx6lBNN3IVnQFiISdXsjmDELi3q6kmVfpw000KPdavZ307Em2cGLI2F7aOy281dafcelZzIBjdMhw5KHrlzc0JkbL-jQfDvgk7t6T5zABqKfC8LsdButSsMviw8N0eFC5Iz9gSlxDieZDzzPCXVXUnCBWmAxcpOhUfJn81HyoFk6deVK71lwR5zm_KnbjCoTQAYbaCXfoB8fjn3-cyVFMtHbt0rU3mPyV5kYJEuH4WlGGYYMxQfrm0I8elQbyyENKtlI0DK_15Am_wp0I1Gw81eLg53h67FFQrSKHe9QxPx6Cw

[root@master dashboard]#
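The token printed above is a JWT, so its middle segment can be decoded to see which service account it belongs to. The helper below is a minimal sketch; the name `jwt_payload` is hypothetical and it assumes GNU `base64 -d` is available. It only decodes the payload and does not verify the signature.

```shell
# Sketch: print the decoded payload (second dot-separated segment) of a JWT.
jwt_payload() {
  local seg
  # take the second segment and convert base64url characters to plain base64
  seg="$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')"
  # base64url drops the '=' padding; restore it to a multiple of 4 characters
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do
    seg="${seg}="
  done
  printf '%s' "$seg" | base64 -d
}

# Example (not executed here): fetch the token without copying it from `describe`
# token="$(kubectl -n kubernetes-dashboard get secret kubernetes-dashboard-token-9hsh5 -o jsonpath='{.data.token}' | base64 -d)"
# jwt_payload "$token"
```

For a service account token the decoded JSON should include a `sub` claim of the form system:serviceaccount:<namespace>:<name>, which is the user name that RBAC bindings refer to.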

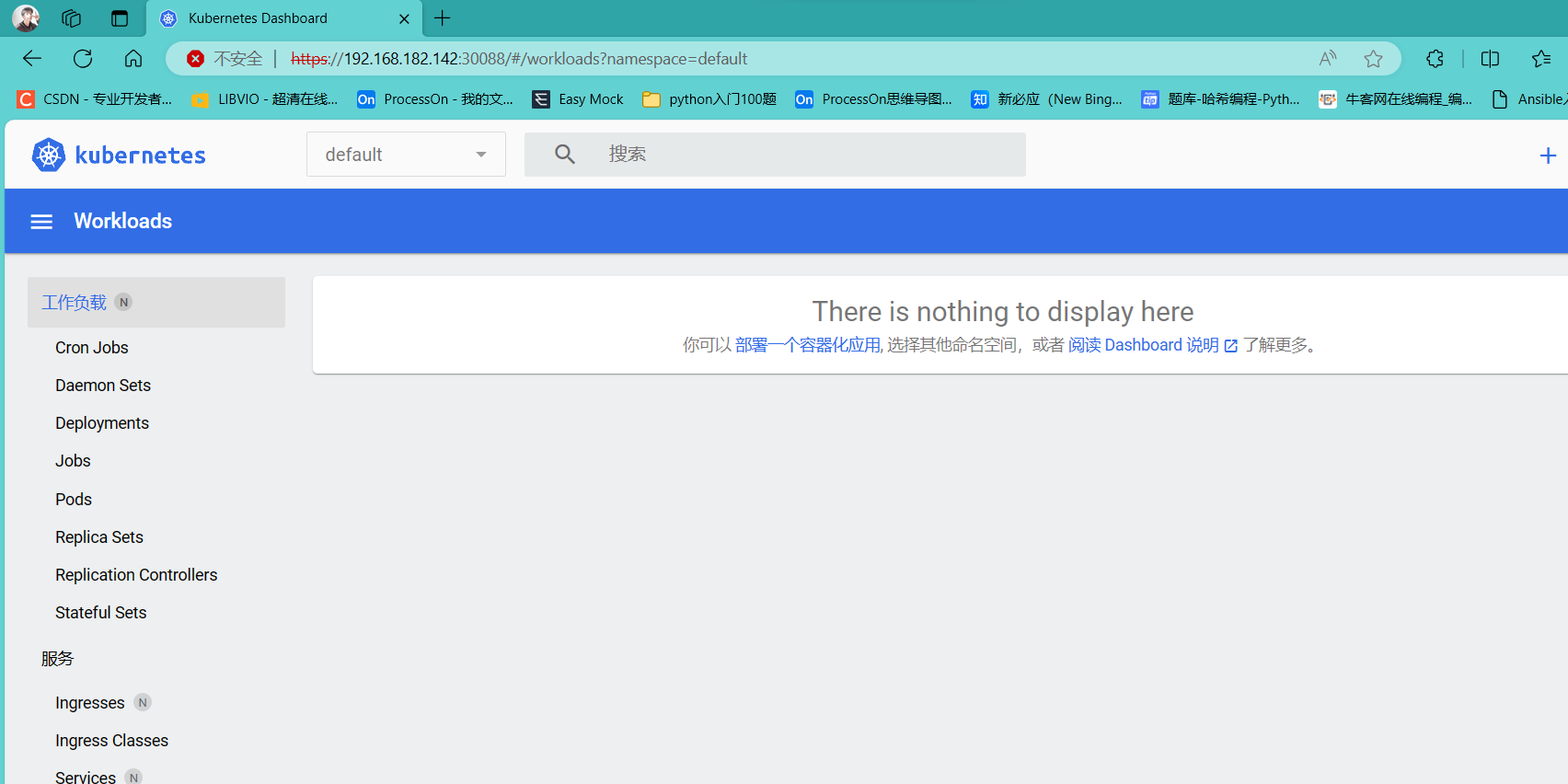

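After logging in, the Dashboard cannot show any resources because its service account has no permissions yet; access has to be granted through RBAC.

Bind the kubernetes-dashboard service account to the cluster-admin ClusterRole so that namespaces and other resources become visible (cluster-admin grants full access to the cluster, which is acceptable only in a lab environment like this one):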

[root@master dashboard]# kubectl create clusterrolebinding serviceaccount-cluster-admin --clusterrole=cluster-admin --user=system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard

clusterrolebinding.rbac.authorization.k8s.io/serviceaccount-cluster-admin created

[root@master dashboard]#

Refresh the page and the resources appear.

To remove the role binding again:

[root@master ~]# kubectl delete clusterrolebinding serviceaccount-cluster-admin

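5.8 Stress-test the web service in the k8s cluster with ab

Stress-testing tool: ab

ab (ApacheBench) ships with the Apache HTTP server and load-tests a single URL from the command line.

ab is best run on Linux.

Main options:

The two options used most are -n (total number of requests) and -c (number of concurrent requests).

Run ab -h to list the other options.

Command format: ab -n10 -c10 URL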
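When comparing runs it can help to pull a single figure out of the ab report. The filter below is a small sketch that extracts the value from the "Requests per second" line of ab's summary; the function name `ab_rps` is hypothetical.

```shell
# Sketch: read an ab report on stdin and print the requests-per-second value.
ab_rps() {
  # the summary line looks like: "Requests per second:    1234.56 [#/sec] (mean)"
  awk -F: '/^Requests per second/ { split($2, f, " "); print f[1] }'
}

# Example (not executed here):
# ab -n1000 -c50 http://192.168.182.142:31000/ | ab_rps
```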

'Write the YAML file'

[root@master hpa]# cat nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: ab-nginx
spec:
  selector:
    matchLabels:
      run: ab-nginx
  template:
    metadata:
      labels:
        run: ab-nginx
    spec:
      nodeName: node-2
      containers:
      - name: ab-nginx
        image: 192.168.182.140:8089/gao/ghweb:1.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
        resources:
          limits:
            cpu: 100m
          requests:
            cpu: 50m
---
apiVersion: v1
kind: Service
metadata:
  name: ab-nginx-svc
  labels:
    run: ab-nginx-svc
spec:
  type: NodePort
  ports:
  - port: 8080
    targetPort: 8080
    nodePort: 31000
  selector:
    run: ab-nginx
---
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: ab-nginx
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: ab-nginx
  minReplicas: 5
  maxReplicas: 20
  targetCPUUtilizationPercentage: 50

[root@master hpa]#

Apply the manifest to start the Deployment with HPA enabled

[root@master hpa]# kubectl apply -f nginx.yaml

deployment.apps/ab-nginx unchanged

service/ab-nginx-svc created

horizontalpodautoscaler.autoscaling/ab-nginx created

[root@master hpa]#

[root@master hpa]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

ab-nginx 5/5 5 5 30s

myweb 3/3 3 3 31m

nginx-deployment 3/3 3 3 44m

sc-nginx-deploy 3/3 3 3 70m

[root@master hpa]# kubectl get pod

NAME READY STATUS RESTARTS AGE

ab-nginx-6d7db4b69f-2j5dz 1/1 Running 0 27s

ab-nginx-6d7db4b69f-6dwcq 1/1 Running 0 27s

ab-nginx-6d7db4b69f-7wkkd 1/1 Running 0 27s

ab-nginx-6d7db4b69f-8mjp6 1/1 Running 0 27s

ab-nginx-6d7db4b69f-gfmsq 1/1 Running 0 43s

myweb-69786769dc-jhsf8 1/1 Running 0 31m

myweb-69786769dc-kfjgk 1/1 Running 0 31m

myweb-69786769dc-msxrf 1/1 Running 0 31m

nginx-deployment-6c685f999-dkkfg 1/1 Running 0 44m

nginx-deployment-6c685f999-khjsp 1/1 Running 0 44m

nginx-deployment-6c685f999-svcvz 1/1 Running 0 44m

sc-nginx-deploy-7bb895f9f5-pmbcd 1/1 Running 0 70m

sc-nginx-deploy-7bb895f9f5-wf55g 1/1 Running 0 70m

sc-nginx-deploy-7bb895f9f5-zbjr9 1/1 Running 0 70m

sc-pv-pod-nfs 1/1 Running 1 31h

[root@master hpa]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

ab-nginx Deployment/ab-nginx 0%/50% 5 20 5 84s

[root@master hpa]#

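The service is now reachable on NodePort 31000.

Install the ab tool on the NFS machine (do not install it inside the cluster):

[root@nfs ~]# yum install httpd-tools -y

'On the master, keep watching the HPA'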

[root@master hpa]# kubectl get hpa --watch

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

ab-nginx Deployment/ab-nginx 0%/50% 5 20 5 5m2s

'Open a second session on the master and watch the pods'

[root@master hpa]# kubectl get pod --watch

NAME READY STATUS RESTARTS AGE

ab-nginx-6d7db4b69f-2j5dz 1/1 Running 0 5m13s

ab-nginx-6d7db4b69f-6dwcq 1/1 Running 0 5m13s

ab-nginx-6d7db4b69f-7wkkd 1/1 Running 0 5m13s

ab-nginx-6d7db4b69f-8mjp6 1/1 Running 0 5m13s

ab-nginx-6d7db4b69f-gfmsq 1/1 Running 0 5m29s

myweb-69786769dc-jhsf8 1/1 Running 0 36m

myweb-69786769dc-kfjgk 1/1 Running 0 36m

myweb-69786769dc-msxrf 1/1 Running 0 36m

nginx-deployment-6c685f999-dkkfg 1/1 Running 0 49m

nginx-deployment-6c685f999-khjsp 1/1 Running 0 49m

nginx-deployment-6c685f999-svcvz 1/1 Running 0 49m

sc-nginx-deploy-7bb895f9f5-pmbcd 1/1 Running 0 75m

sc-nginx-deploy-7bb895f9f5-wf55g 1/1 Running 0 75m

sc-nginx-deploy-7bb895f9f5-zbjr9 1/1 Running 0 75m

sc-pv-pod-nfs 1/1 Running 1 31h

'Start the test'

ab -n1000 -c50 http://192.168.182.142:31000/

'CPU utilization and the replica count keep rising'

ab-nginx Deployment/ab-nginx 60%/50% 5 20 5 7m54s

ab-nginx Deployment/ab-nginx 86%/50% 5 20 6 8m9s

ab-nginx Deployment/ab-nginx 83%/50% 5 20 9 8m25s

ab-nginx Deployment/ab-nginx 69%/50% 5 20 9 8m40s

ab-nginx Deployment/ab-nginx 55%/50% 5 20 9 8m56s

ab-nginx Deployment/ab-nginx 55%/50% 5 20 10 9m11s

ab-nginx Deployment/ab-nginx 14%/50% 5 20 10 9m41s

'Once CPU utilization exceeds the 50% target, new pods are created'

ab-nginx-6d7db4b69f-zdv2h 0/1 ContainerCreating 0 0s

ab-nginx-6d7db4b69f-zdv2h 0/1 ContainerCreating 0 1s

ab-nginx-6d7db4b69f-zdv2h 1/1 Running 0 2s

ab-nginx-6d7db4b69f-l4vbw 0/1 Pending 0 0s

ab-nginx-6d7db4b69f-5qb9p 0/1 Pending 0 0s

ab-nginx-6d7db4b69f-vzcn7 0/1 Pending 0 0s

ab-nginx-6d7db4b69f-l4vbw 0/1 ContainerCreating 0 0s

ab-nginx-6d7db4b69f-5qb9p 0/1 ContainerCreating 0 0s

ab-nginx-6d7db4b69f-vzcn7 0/1 ContainerCreating 0 0s

ab-nginx-6d7db4b69f-l4vbw 0/1 ContainerCreating 0 1s

ab-nginx-6d7db4b69f-5qb9p 0/1 ContainerCreating 0 1s

ab-nginx-6d7db4b69f-vzcn7 0/1 ContainerCreating 0 2s

ab-nginx-6d7db4b69f-l4vbw 1/1 Running 0 2s

ab-nginx-6d7db4b69f-vzcn7 1/1 Running 0 2s

ab-nginx-6d7db4b69f-5qb9p 1/1 Running 0 2s

When the load test stops, the replica count slowly drops back down
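The scaling seen above follows the rule documented for the HorizontalPodAutoscaler: desired replicas = ceil(current replicas × current utilization / target utilization), limited to the min/max range. The function below is a simplified sketch of that arithmetic only; the name `hpa_desired_replicas` is hypothetical, and the real controller also applies a tolerance, ignores pods that are not ready, and delays scale-down with a stabilization window, none of which is modeled here.

```shell
# Sketch: estimate the replica count the HPA aims for.
# Usage: hpa_desired_replicas <current> <cpu-percent> <target-percent> <min> <max>
hpa_desired_replicas() {
  local current="$1" util="$2" target="$3" min="$4" max="$5"
  # integer ceiling of current * util / target
  local desired=$(( (current * util + target - 1) / target ))
  if [ "$desired" -lt "$min" ]; then desired="$min"; fi
  if [ "$desired" -gt "$max" ]; then desired="$max"; fi
  echo "$desired"
}

# 5 replicas at 60% CPU with a 50% target
hpa_desired_replicas 5 60 50 5 20
# 10 replicas at 14% CPU: the result is held at minReplicas
hpa_desired_replicas 10 14 50 5 20
```

The first call corresponds to the first step in the watch output, where 5 replicas at 60%/50% grew to 6; later steps in a live cluster can differ because metrics lag behind and new pods take time to become ready.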

6 Set up the Prometheus server

6.1 Deploy ansible on the bastion host first to simplify operating many machines

'Install ansible'

[root@jump ~]# yum install epel-release -y

[root@jump ~]# yum install ansible -y

'Edit the hosts inventory'

[root@jump ~]# cd /etc/ansible/

[root@jump ansible]# ls

ansible.cfg hosts roles

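[root@jump ansible]# vim hosts # add the machines to manage

[k8s]

192.168.182.142

192.168.182.143

192.168.182.144

[nfs]

192.168.182.140

[firewall]

192.168.182.177

'Set up passwordless SSH from the ansible server to the other servers (one-way trust)'

1. Generate a key pair

ssh-keygen

2. Copy the public key to each of the other servers (one host shown here; repeat for the rest)

ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.182.142

3. Check that the ansible server can control all of the servers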

[root@jump ansible]# ansible all -m shell -a 'ip add'
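Copying the public key has to be repeated for every machine in the inventory. As a sketch, the helper below lists the hosts from a simple INI inventory so the ssh-copy-id step can be looped. The name `inventory_hosts` is hypothetical, and it assumes one bare address per line as in the file above (no host ranges, variables, or :children sections).

```shell
# Sketch: print the unique host entries of a simple ansible INI inventory.
inventory_hosts() {
  # keep non-empty lines that are neither [group] headers nor comments
  awk 'NF && $1 !~ /^\[/ && $1 !~ /^[#;]/ { print $1 }' "$1" | sort -u
}

# Example (not executed here):
# for h in $(inventory_hosts /etc/ansible/hosts); do
#   ssh-copy-id -i /root/.ssh/id_rsa.pub "root@$h"
# done
```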

6.2 Set up the Prometheus server and Grafana to monitor all of the servers

'1. Download the required packages in advance'

[root@jump prom]# ls

grafana-enterprise-9.5.1-1.x86_64.rpm prometheus-2.44.0-rc.1.linux-amd64.tar.gz

node_exporter-1.7.0.linux-amd64.tar.gz

[root@jump prom]#

'2. Unpack the tarball'

[root@jump prom]# tar xf prometheus-2.44.0-rc.1.linux-amd64.tar.gz

'Rename the directory'

[root@jump prom]# mv prometheus-2.44.0-rc.1.linux-amd64 prometheus

[root@jump prom]# ls

grafana-enterprise-9.5.1-1.x86_64.rpm prometheus-2.44.0-rc.1.linux-amd64.tar.gz

node_exporter-1.7.0.linux-amd64.tar.gz

prometheus

'Add the Prometheus directory to PATH, both for the current shell and permanently'

[root@jump prom]# PATH=/prom/prometheus:$PATH

[root@jump prom]# echo 'PATH=/prom/prometheus:$PATH' >>/etc/profile

[root@jump prom]# which prometheus

/prom/prometheus/prometheus

'Run Prometheus as a systemd service so that it is easier to manage and maintain'

[root@jump prom]# vim /usr/lib/systemd/system/prometheus.service

[Unit]

Description=prometheus

[Service]

ExecStart=/prom/prometheus/prometheus --config.file=/prom/prometheus/prometheus.yml

ExecReload=/bin/kill -HUP $MAINPID

KillMode=process

Restart=on-failure

[Install]

WantedBy=multi-user.target

'Reload systemd so that it picks up the new Prometheus unit file'

[root@jump prom]# systemctl daemon-reload

'Start the Prometheus service'

[root@jump prom]# systemctl start prometheus

[root@jump prom]# systemctl restart prometheus

'Check that the service is running'

[root@jump prom]# ps aux|grep prome

root 17551 0.1 2.2 796920 42344 ? Ssl 13:15 0:00 /prom/prometheus/prometheus --config.file=/prom/prometheus/prometheus.yml

root 17561 0.0 0.0 112824 972 pts/0 S+ 13:16 0:00 grep --color=auto prome

'Enable start on boot'

[root@jump prom]# systemctl enable prometheus

Created symlink from /etc/systemd/system/multi-user.target.wants/prometheus.service to /usr/lib/systemd/system/prometheus.service.

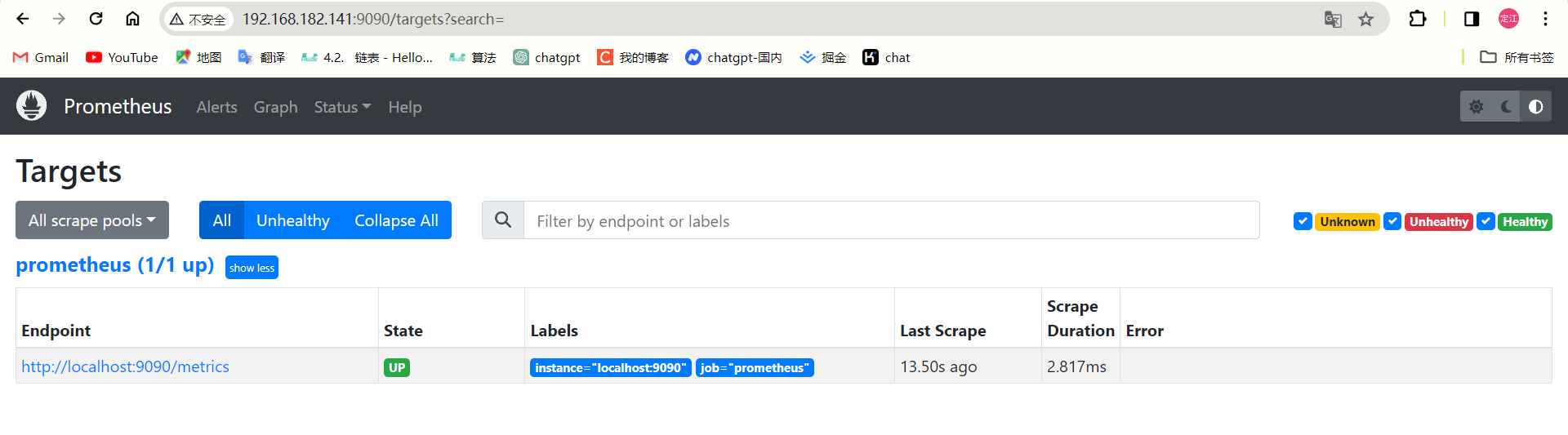

'Open port 9090 in a browser'

6.2.1 Install the exporter

'Copy node_exporter to /root on every server'

[root@jump prom]# ansible all -m copy -a 'src=node_exporter-1.7.0.linux-amd64.tar.gz dest=/root/'

'Write a script that installs node_exporter on the other machines'

[root@jump prom]# vim install_node_exporter.sh

#!/bin/bash
tar xf /root/node_exporter-1.7.0.linux-amd64.tar.gz -C /
cd /
mv node_exporter-1.7.0.linux-amd64/ node_exporter
cd /node_exporter/
PATH=/node_exporter/:$PATH
echo 'PATH=/node_exporter/:$PATH' >>/etc/profile

# generate the node_exporter.service unit file
# (the heredoc delimiter is quoted so that $MAINPID is written literally instead of being expanded by the shell)
cat >/usr/lib/systemd/system/node_exporter.service <<'EOF'
[Unit]
Description=node_exporter
[Service]
ExecStart=/node_exporter/node_exporter --web.listen-address 0.0.0.0:9090
ExecReload=/bin/kill -HUP $MAINPID
KillMode=process
Restart=on-failure
[Install]
WantedBy=multi-user.target
EOF

# make systemd pick up the node_exporter service
systemctl daemon-reload
# enable start on boot
systemctl enable node_exporter
# start node_exporter
systemctl start node_exporter

'Run the install script from the ansible server'

[root@jump prom]# ansible all -m script -a "/prom/install_node_exporter.sh"

'On one of the other servers, check that node_exporter is running'

[root@firewalld ~]# ps aux|grep node

root 24717 0.0 0.4 1240476 9200 ? Ssl 13:24 0:00 /node_exporter/node_exporter --web.listen-address 0.0.0.0:9090

root 24735 0.0 0.0 112824 972 pts/0 S+ 13:29 0:00 grep --color=auto node

[root@firewalld ~]#

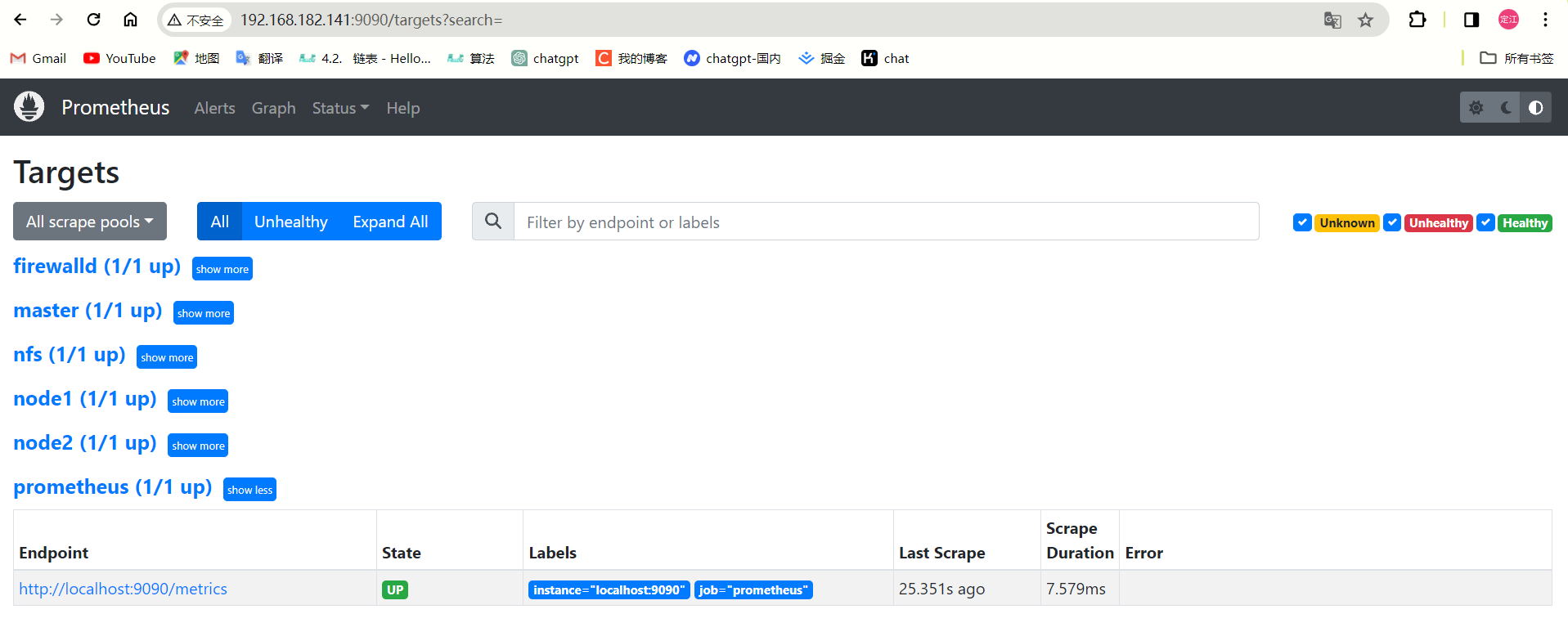

6.2.2 Add the monitored servers on the Prometheus server

'Add scrape jobs for the node servers to the Prometheus configuration; the scraped data is stored in its time-series database'

[root@jump prometheus]# ls

console_libraries consoles LICENSE NOTICE prometheus prometheus.yml promtool

[root@jump prometheus]# vim prometheus.yml

  # add the following jobs under scrape_configs
  - job_name: "master"
    static_configs:
      - targets: ["192.168.182.142:9090"]
  - job_name: "node1"
    static_configs:
      - targets: ["192.168.182.143:9090"]
  - job_name: "node2"
    static_configs:
      - targets: ["192.168.182.144:9090"]
  - job_name: "nfs"
    static_configs:
      - targets: ["192.168.182.140:9090"]
  - job_name: "firewalld"
    static_configs:
      - targets: ["192.168.182.177:9090"]

'Restart the Prometheus service'

[root@jump prometheus]# service prometheus restart

Redirecting to /bin/systemctl restart prometheus.service

[root@jump prometheus]#
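Typing one job per server by hand gets error-prone as the list grows. The sketch below prints the same kind of scrape job entries from `name=ip` pairs; the function name `scrape_jobs` is hypothetical and the output is meant to be pasted under scrape_configs, not applied automatically.

```shell
# Sketch: print one static scrape job per "name=ip" pair.
# Usage: scrape_jobs <exporter-port> <name>=<ip> [<name>=<ip> ...]
scrape_jobs() {
  local port="$1"; shift
  local pair
  for pair in "$@"; do
    printf '  - job_name: "%s"\n    static_configs:\n      - targets: ["%s:%s"]\n' \
      "${pair%%=*}" "${pair#*=}" "$port"
  done
}

# Example: the five servers monitored in this article, exporter on port 9090
scrape_jobs 9090 master=192.168.182.142 node1=192.168.182.143 node2=192.168.182.144 \
  nfs=192.168.182.140 firewalld=192.168.182.177
```

After editing prometheus.yml, the promtool binary that ships in the same directory can validate the file with promtool check config prometheus.yml before the service is restarted.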

6.2.3 Install Grafana to visualize the metrics

'Install'

[root@jump prom]# yum install grafana-enterprise-9.5.1-1.x86_64.rpm

'Start grafana'

[root@jump prom]# systemctl start grafana-server

'Enable start on boot'

[root@jump prom]# systemctl enable grafana-server

Created symlink from /etc/systemd/system/multi-user.target.wants/grafana-server.service to /usr/lib/systemd/system/grafana-server.service.

'Check that it is running'

[root@jump prom]# ps aux|grep grafana

grafana 17968 2.8 5.5 1288920 103588 ? Ssl 13:41 0:02 /usr/share/grafana/bin/grafana server --config=/etc/grafana/grafana.ini --pidfile=/var/run/grafana/grafana-server.pid --packaging=rpm cfg:default.paths.logs=/var/log/grafana cfg:default.paths.data=/var/lib/grafana cfg:default.paths.plugins=/var/lib/grafana/plugins cfg:default.paths.provisioning=/etc/grafana/provisioning

root 18030 0.0 0.0 112824 972 pts/0 S+ 13:43 0:00 grep --color=auto grafana

[root@jump prom]# netstat -antplu|grep grafana

tcp 0 0 192.168.182.141:42942 34.120.177.193:443 ESTABLISHED 17968/grafana

tcp6 0 0 :::3000 :::* LISTEN 17968/grafana

[root@jump prom]#

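Log in from a browser on port 3000; the username and password are both admin.

Add a data source:

Choose a dashboard template:

7 Configure the bastion host and the firewall

7.1 Configure TCP wrappers on the k8s machines and the NFS server so that only the bastion host can SSH in and every other machine is refused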

[root@jump ~]# cd /prom

[root@jump prom]# vim set_tcp_wrappers.sh

[root@jump prom]# cat set_tcp_wrappers.sh

#!/bin/bash
# /etc/hosts.allow: only the bastion host may reach sshd
echo 'sshd:192.168.182.141' >>/etc/hosts.allow
# also allow my Windows workstation
echo 'sshd:192.168.40.93' >>/etc/hosts.allow
# refuse sshd access from every other machine
echo 'sshd:ALL' >>/etc/hosts.deny

[root@jump prom]#

ansible k8s -m script -a "/prom/set_tcp_wrappers.sh"

ansible nfs -m script -a "/prom/set_tcp_wrappers.sh"
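Because the script above appends with >>, running it a second time through ansible adds duplicate lines to hosts.allow and hosts.deny. One way to make it safe to rerun is to append a line only when it is not already present; the helper name `append_once` below is hypothetical.

```shell
# Sketch: append a line to a file only if that exact line is not there yet.
append_once() {
  local line="$1" file="$2"
  # -x matches whole lines, -F treats the text literally, -q stays silent
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >>"$file"
}

# Example (not executed here):
# append_once 'sshd:192.168.182.141' /etc/hosts.allow
# append_once 'sshd:ALL' /etc/hosts.deny
```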

'Test that it works: only the bastion host may SSH in'

'Try to reach the master from the NFS server'

[root@nfs ~]# ssh root@192.168.182.142

ssh_exchange_identification: read: Connection reset by peer

'Now try from the jump host'

[root@jump prom]# ssh root@192.168.182.142

Last login: Sat Mar 30 13:55:24 2024 from 192.168.182.141

[root@master ~]# exit

logout

Connection to 192.168.182.142 closed.

[root@jump prom]#

7.2 Set up the firewall server

'Stop firewalld and disable SELinux'

service firewalld stop

systemctl disable firewalld

setenforce 0

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

'Configure the IP addresses'

'WAN interface: 192.168.40.87'

'LAN interface: 192.168.182.177'

[root@firewalld network-scripts]# cat ifcfg-ens33

BOOTPROTO="none"

DEFROUTE="yes"

NAME="ens33"

UUID="e3072a9e-9e43-4855-9941-cabf05360e32"

DEVICE="ens33"

ONBOOT="yes"

IPADDR=192.168.40.87

PREFIX=24

GATEWAY=192.168.40.166

DNS1=114.114.114.114

[root@firewalld network-scripts]#

[root@firewalld network-scripts]# cat ifcfg-ens34

BOOTPROTO=none

DEFROUTE=yes

NAME=ens34

UUID=0d04766d-7a98-4a68-b9a9-eb7377a4df80

DEVICE=ens34

ONBOOT=yes

IPADDR=192.168.182.177

PREFIX=24

[root@firewalld network-scripts]#

'Enable IP forwarding permanently'

[root@firewalld ~]# vim /etc/sysctl.conf

net.ipv4.ip_forward = 1

[root@firewalld ~]# sysctl -p # make the kernel reload the configuration file and enable forwarding

net.ipv4.ip_forward = 1

[root@firewalld ~]#

7.3 Write the DNAT and SNAT rules

'Write the SNAT and DNAT rules'

[root@firewalld ~]# mkdir /nat

[root@firewalld ~]# cd /nat/

[root@firewalld nat]# vim set_snat_dnat.sh

[root@firewalld nat]# cat set_snat_dnat.sh

#!/bin/bash
# enable IP forwarding
echo 1 >/proc/sys/net/ipv4/ip_forward
# to make this permanent, add the following line to /etc/sysctl.conf:
# net.ipv4.ip_forward = 1

# flush the existing firewall rules
iptables=/usr/sbin/iptables
$iptables -F
$iptables -t nat -F

# SNAT policy: masquerade LAN traffic leaving through the WAN interface
$iptables -t nat -A POSTROUTING -s 192.168.182.0/24 -o ens33 -j MASQUERADE

# DNAT policy: publish my web services
$iptables -t nat -A PREROUTING -d 192.168.40.87 -i ens33 -p tcp --dport 30001 -j DNAT --to-destination 192.168.182.142:30001
$iptables -t nat -A PREROUTING -d 192.168.40.87 -i ens33 -p tcp --dport 31000 -j DNAT --to-destination 192.168.182.142:31000

# publish the bastion host: port 2233 on the firewall is forwarded to port 22 on the bastion host
$iptables -t nat -A PREROUTING -d 192.168.40.87 -i ens33 -p tcp --dport 2233 -j DNAT --to-destination 192.168.182.141:22

[root@firewalld nat]#
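The three DNAT rules in the script differ only in the external port and the internal destination, so they can be generated from a small table. The sketch below only prints the iptables commands so they can be reviewed before anything is changed; the function names `dnat_rule` and `print_dnat_rules` are hypothetical.

```shell
# Sketch: print one DNAT rule for a published TCP port.
# Usage: dnat_rule <wan-ip> <wan-interface> <external-port> <internal-ip:port>
dnat_rule() {
  local wan_ip="$1" wan_if="$2" ext_port="$3" dest="$4"
  echo "iptables -t nat -A PREROUTING -d ${wan_ip} -i ${wan_if} -p tcp --dport ${ext_port} -j DNAT --to-destination ${dest}"
}

# Print the rules for every "external-port destination" row in the table.
print_dnat_rules() {
  local port dest
  while read -r port dest; do
    dnat_rule 192.168.40.87 ens33 "$port" "$dest"
  done <<'EOF'
30001 192.168.182.142:30001
31000 192.168.182.142:31000
2233 192.168.182.141:22
EOF
}

print_dnat_rules
```

Once the printed commands look right, they could be run by piping the output to sh on the firewall host.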

'Run it'

[root@firewalld nat]# bash set_snat_dnat.sh

'Check the result'

[root@firewalld nat]# iptables -L -t nat -n

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

DNAT tcp -- 0.0.0.0/0 192.168.40.87 tcp dpt:30001 to:192.168.182.142:30001

DNAT tcp -- 0.0.0.0/0 192.168.40.87 tcp dpt:31000 to:192.168.182.142:31000

DNAT tcp -- 0.0.0.0/0 192.168.40.87 tcp dpt:2233 to:192.168.182.141:22

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

MASQUERADE all -- 192.168.182.0/24 0.0.0.0/0

[root@firewalld nat]#

'Save the rules'

[root@firewalld nat]# iptables-save >/etc/sysconfig/iptables_rules

'Restore the SNAT and DNAT rules at boot'

[root@firewalld nat]# vim /etc/rc.local

iptables-restore </etc/sysconfig/iptables_rules

[root@firewalld nat]#

[root@firewalld nat]# chmod +x /etc/rc.d/rc.local

7.4 Set the default gateway of every server in the k8s cluster to the firewall server's LAN IP address (192.168.182.177)

'Using the master as an example'

[root@master network-scripts]# cat ifcfg-ens33

BOOTPROTO="none"

DEFROUTE="yes"

NAME="ens33"

UUID="e2cd1765-6b1c-4ff5-88e0-a2bf8bd4203e"

DEVICE="ens33"

ONBOOT="yes"

IPADDR=192.168.182.142

PREFIX=24

GATEWAY=192.168.182.177

DNS1=114.114.114.114

[root@master network-scripts]# service network restart

Restarting network (via systemctl): [ OK ]

7.5 Test SNAT

[root@master ~]# ping www.baidu.com

PING www.a.shifen.com (183.2.172.185) 56(84) bytes of data.

64 bytes from 183.2.172.185 (183.2.172.185): icmp_seq=1 ttl=50 time=40.0 ms

64 bytes from 183.2.172.185 (183.2.172.185): icmp_seq=2 ttl=50 time=33.0 ms

64 bytes from 183.2.172.185 (183.2.172.185): icmp_seq=3 ttl=50 time=34.7 ms

^C

--- www.a.shifen.com ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2003ms

rtt min/avg/max/mdev = 33.048/35.938/40.021/2.972 ms

[root@master ~]#

7.6 Test DNAT

7.7 Test publishing the bastion host

It works!

8 Lessons learned

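- While configuring SNAT and DNAT I tried switching the internal network to host-only mode, which brought the services down, so I switched back to NAT mode.

- During the project, suspending the virtual machines broke the connection between the master and the nodes, and I had to roll back to a snapshot to continue.

- Whether deploying k8s or the firewall, be careful at every step; a single missed step is painful to track down.

- Many images are hosted overseas and cannot be pulled directly.

- One workaround is to use a domestic mirror of the image.

- Another is to rent a server in Singapore, pull the image there, and export it.

- Logging in to the Kubernetes Dashboard requires a token, and the login session lasts only 15 minutes.

- To extend it, edit recommended.yaml and raise the timeout (for example through the dashboard container's --token-ttl argument).

- The master node does not run business pods because it carries a taint.

- Before starting new pods, delete pods that are no longer needed; otherwise the nodes fill up and the new pods cannot start.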

相关文章:

基于k8s的web服务器构建

文章目录 k8s综合项目1、项目规划图2、项目描述3、项目环境4、前期准备4.1、环境准备4.2、ip划分4.3、静态配置ip地址4.4、修改主机名4.5、部署k8s集群4.5.1、关闭防火墙和selinux4.5.2、升级系统4.5.3、每台主机都配置hosts文件,相互之间通过主机名互相访问4.5.4、…...

【名词解释】ImageCaption任务中的CIDEr、n-gram、TF-IDF、BLEU、METEOR、ROUGE 分别是什么?它们是怎样计算的?

CIDEr CIDEr(Consensus-based Image Description Evaluation)是一种用于自动评估图像描述(image captioning)任务性能的指标。它主要通过计算生成的描述与一组参考描述之间的相似性来评估图像描述的质量。CIDEr的独特之处在于它考…...

C++其他语法..

1.运算符重载 之前有一个案例如下所示 其中我们可以通过add方法将两个点组成一个新的点 class Point {friend Point add(Point, Point);int m_x;int m_y; public:Point(int x, int y) : m_x(x), m_y(y) {}void display() {cout << "(" << m_x <<…...

【Vue3源码学习】— CH2.6 effect.ts:详解

effect.ts:详解 1. 理解activeEffect1.1 定义1.2 通过一个例子来说明这个过程a. 副作用函数的初始化b. 执行副作用函数前c. 访问state.countd. get拦截器中的track调用e. 修改state.count时的set拦截器f. trigger函数中的依赖重新执行 1.3 实战应用1.4 activeEffect…...

C语言:文件操作(一)

目录 前言 1、为什么使用文件 2、什么是文件 2.1 程序文件 2.2 数据文件 2.3 文件名 3、文件的打开和关闭 3.1 文件指针 3.2 文件的打开和关闭 结(一) 前言 本篇文章将介绍C语言的文件操作,在后面的内容讲到:为什么使用文…...

集中进行一系列处理——函数

需要多次执行相同的处理,除了编写循环语句之外,还可以集中起来对它进行定义。 对一系列处理进行定义的做法被称为函数,步骤,子程序。 对函数进行定一后,只需要调用该函数就可以了。如果需要对处理的内容进行修正&…...

git diff

1. 如何将库文件的变化生成到patch中 git diff --binary commit1 commit2 > test.patch 打patch: git apply test.patch 2. 如何消除trailing whitespace 问题 git diff --ignore-space-at-eol commit1 commit2 > test.patch 打patch: git ap…...

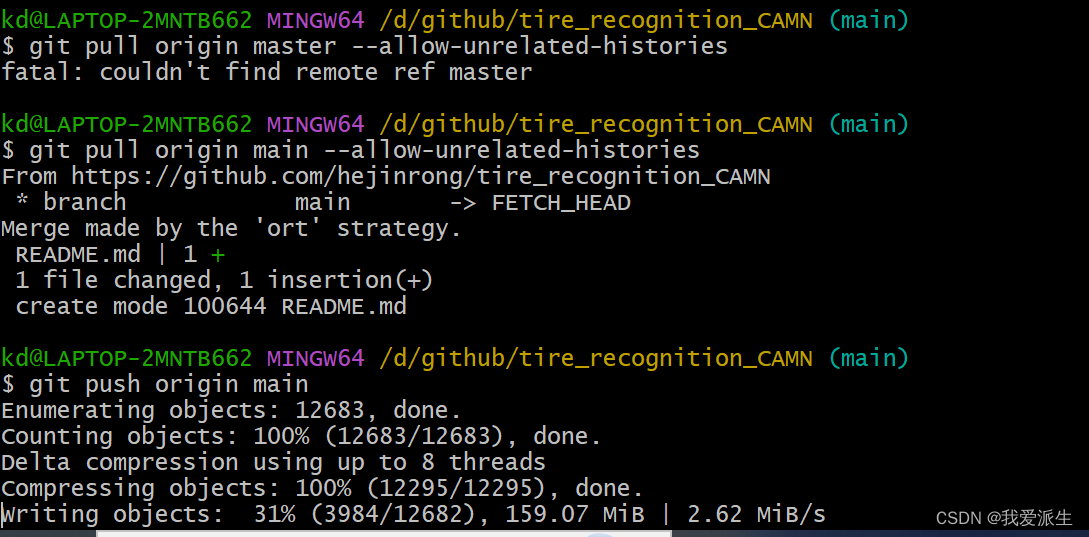

新手使用GIT上传本地项目到Github(个人笔记)

亲测下面的文章很有用处。 1. 初次使用git上传代码到github远程仓库 - 知乎 (zhihu.com) 2. 使用Git时出现refusing to merge unrelated histories的解决办法 - 知乎...

结合《人力资源管理系统》的Java基础题

1.编写一个Java方法,接受一个整数数组作为参数,返回该数组中工资高于平均工资的员工数量。假设数组中的每个元素都代表一个员工的工资。 2.设计一个Java方法,接受一个字符串数组和一个关键字作为参数,返回包含该关键字的姓名的员…...

PostgreSQL备份还原数据库

1.切换PostgreSQL bin目录 配置Postgresql环境变量后可以不用切换 pg_dump 、psql都在postgresql bin目录下,所以需要切换到bin目录执行命令 2.备份数据库 方式一 语法 pg_dump -h <ip> -U <pg_username> -p <port> -d <databaseName>…...

实现读写分离与优化查询性能:通过物化视图在MySQL、PostgreSQL和SQL Server中的应用

实现读写分离与优化查询性能:通过物化视图在MySQL、PostgreSQL和SQL Server中的应用 在数据库管理中,读写分离是一种常见的性能优化方法,它通过将读操作和写操作分发到不同的服务器或数据库实例上,来减轻单个数据库的负载&#x…...

pytest中文使用文档----10skip和xfail标记

1. 跳过测试用例的执行 1.1. pytest.mark.skip装饰器1.2. pytest.skip方法1.3. pytest.mark.skipif装饰器1.4. pytest.importorskip方法1.5. 跳过测试类1.6. 跳过测试模块1.7. 跳过指定文件或目录1.8. 总结 2. 标记用例为预期失败的 2.1. 去使能xfail标记 3. 结合pytest.param方…...

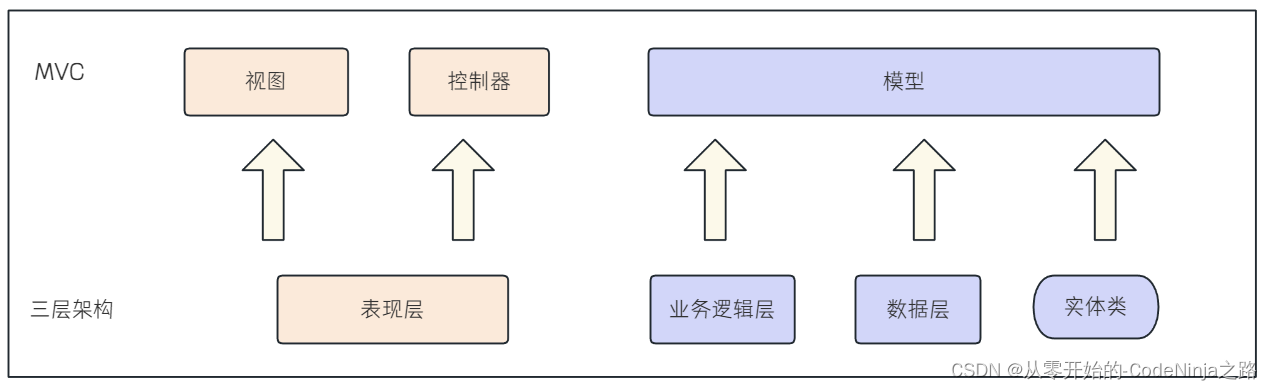

【Spring MVC】快速学习使用Spring MVC的注解及三层架构

💓 博客主页:从零开始的-CodeNinja之路 ⏩ 收录文章:【Spring MVC】快速学习使用Spring MVC的注解及三层架构 🎉欢迎大家点赞👍评论📝收藏⭐文章 目录 Spring Web MVC一: 什么是Spring Web MVC࿱…...

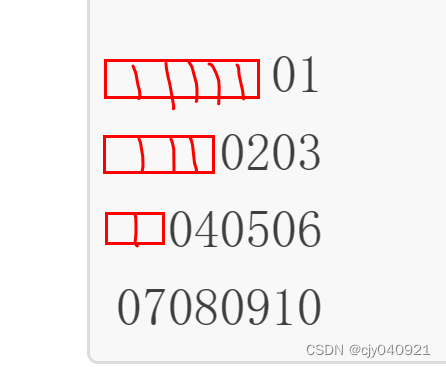

Python(乱学)

字典在转化为其他类型时,会出现是否舍弃value的操作,只有在转化为字符串的时候才不会舍弃value 注释的快捷键是ctrl/ 字符串无法与整数,浮点数,等用加号完成拼接 5不入??? 还有一种格式化的方法…...

OpenHarmony实战:轻量级系统之子系统移植概述

OpenHarmony系统功能按照“系统 > 子系统 > 部件”逐级展开,支持根据实际需求裁剪某些非必要的部件,本文以部分子系统、部件为例进行介绍。若想使用OpenHarmony系统的能力,需要对相应子系统进行适配。 OpenHarmony芯片适配常见子系统列…...

Neo4j基础知识

图数据库简介 图数据库是基于数学里图论的思想和算法而实现的高效处理复杂关系网络的新型数据库系统。它善于高效处理大量的、复杂的、互连的、多变的数据。其计算效率远远高于传统的关系型数据库。 在图形数据库当中,每个节点代表一个对象,节点之间的…...

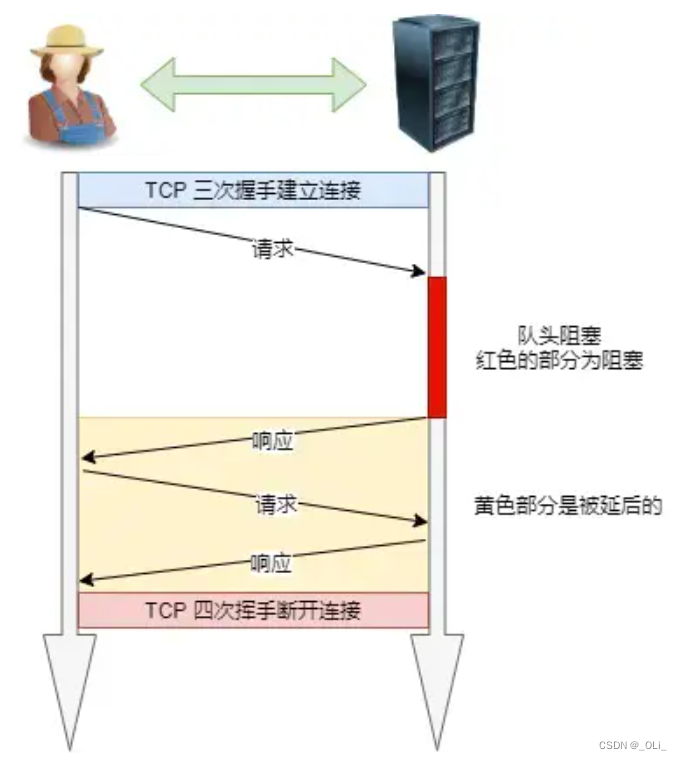

HTTP/1.1 特性(计算机网络)

HTTP/1.1 的优点有哪些? 「简单、灵活和易于扩展、应用广泛和跨平台」 1. 简单 HTTP 基本的报文格式就是 header body,头部信息也是 key-value 简单文本的形式,易于理解。 2. 灵活和易于扩展 HTTP 协议里的各类请求方法、URI/URL、状态码…...

每日一题————P5725 【深基4.习8】求三角形

题目: 题目乍一看非常的简单,属于初学者都会的问题——————————但是实际上呢,有一些小小的坑在里面。 就是三角形的打印。 平常我们在写代码的时候,遇到打印三角形的题,一般简简单单两个for循环搞定 #inclu…...

第三题:时间加法

题目描述 现在时间是 a 点 b 分,请问 t 分钟后,是几点几分? 输入描述 输入的第一行包含一个整数 a。 第二行包含一个整数 b。 第三行包含一个整数 t。 其中,0≤a≤23,0≤b≤59,0≤t, 分钟后还是在当天。 输出描…...

【RAG】内部外挂知识库搭建-本地GPT

大半年的项目告一段落了,现在自己找找感兴趣的东西学习下,看看可不可以搞出个效果不错的local GPT,自研下大模型吧 RAG是什么? 检索增强生成(RAG)是指对大型语言模型输出进行优化,使其能够在生成响应之前引用训练数据来…...

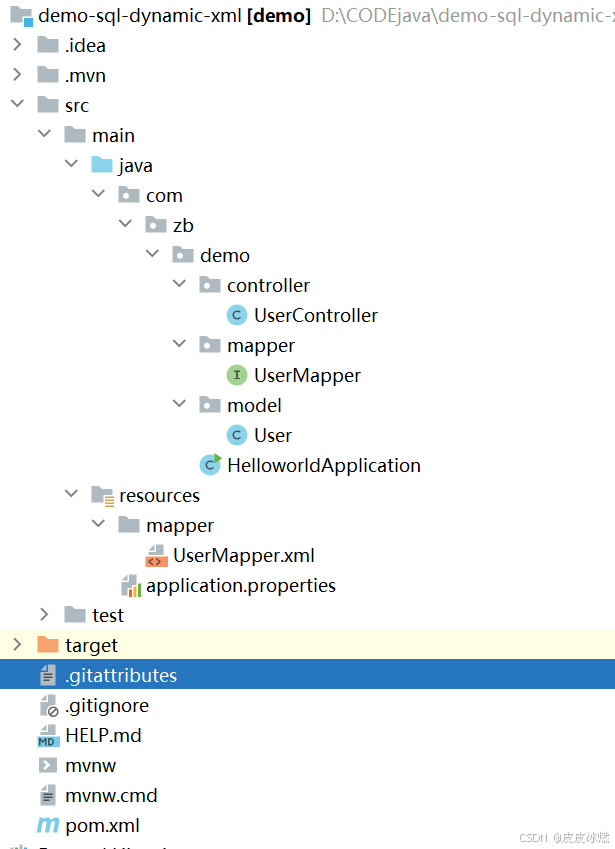

SpringBoot-17-MyBatis动态SQL标签之常用标签

文章目录 1 代码1.1 实体User.java1.2 接口UserMapper.java1.3 映射UserMapper.xml1.3.1 标签if1.3.2 标签if和where1.3.3 标签choose和when和otherwise1.4 UserController.java2 常用动态SQL标签2.1 标签set2.1.1 UserMapper.java2.1.2 UserMapper.xml2.1.3 UserController.ja…...

反向工程与模型迁移:打造未来商品详情API的可持续创新体系

在电商行业蓬勃发展的当下,商品详情API作为连接电商平台与开发者、商家及用户的关键纽带,其重要性日益凸显。传统商品详情API主要聚焦于商品基本信息(如名称、价格、库存等)的获取与展示,已难以满足市场对个性化、智能…...

Swift 协议扩展精进之路:解决 CoreData 托管实体子类的类型不匹配问题(下)

概述 在 Swift 开发语言中,各位秃头小码农们可以充分利用语法本身所带来的便利去劈荆斩棘。我们还可以恣意利用泛型、协议关联类型和协议扩展来进一步简化和优化我们复杂的代码需求。 不过,在涉及到多个子类派生于基类进行多态模拟的场景下,…...

前端导出带有合并单元格的列表

// 导出async function exportExcel(fileName "共识调整.xlsx") {// 所有数据const exportData await getAllMainData();// 表头内容let fitstTitleList [];const secondTitleList [];allColumns.value.forEach(column > {if (!column.children) {fitstTitleL…...

C++中string流知识详解和示例

一、概览与类体系 C 提供三种基于内存字符串的流,定义在 <sstream> 中: std::istringstream:输入流,从已有字符串中读取并解析。std::ostringstream:输出流,向内部缓冲区写入内容,最终取…...

用docker来安装部署freeswitch记录

今天刚才测试一个callcenter的项目,所以尝试安装freeswitch 1、使用轩辕镜像 - 中国开发者首选的专业 Docker 镜像加速服务平台 编辑下面/etc/docker/daemon.json文件为 {"registry-mirrors": ["https://docker.xuanyuan.me"] }同时可以进入轩…...

【Oracle】分区表

个人主页:Guiat 归属专栏:Oracle 文章目录 1. 分区表基础概述1.1 分区表的概念与优势1.2 分区类型概览1.3 分区表的工作原理 2. 范围分区 (RANGE Partitioning)2.1 基础范围分区2.1.1 按日期范围分区2.1.2 按数值范围分区 2.2 间隔分区 (INTERVAL Partit…...

学校时钟系统,标准考场时钟系统,AI亮相2025高考,赛思时钟系统为教育公平筑起“精准防线”

2025年#高考 将在近日拉开帷幕,#AI 监考一度冲上热搜。当AI深度融入高考,#时间同步 不再是辅助功能,而是决定AI监考系统成败的“生命线”。 AI亮相2025高考,40种异常行为0.5秒精准识别 2025年高考即将拉开帷幕,江西、…...

Mobile ALOHA全身模仿学习

一、题目 Mobile ALOHA:通过低成本全身远程操作学习双手移动操作 传统模仿学习(Imitation Learning)缺点:聚焦与桌面操作,缺乏通用任务所需的移动性和灵活性 本论文优点:(1)在ALOHA…...

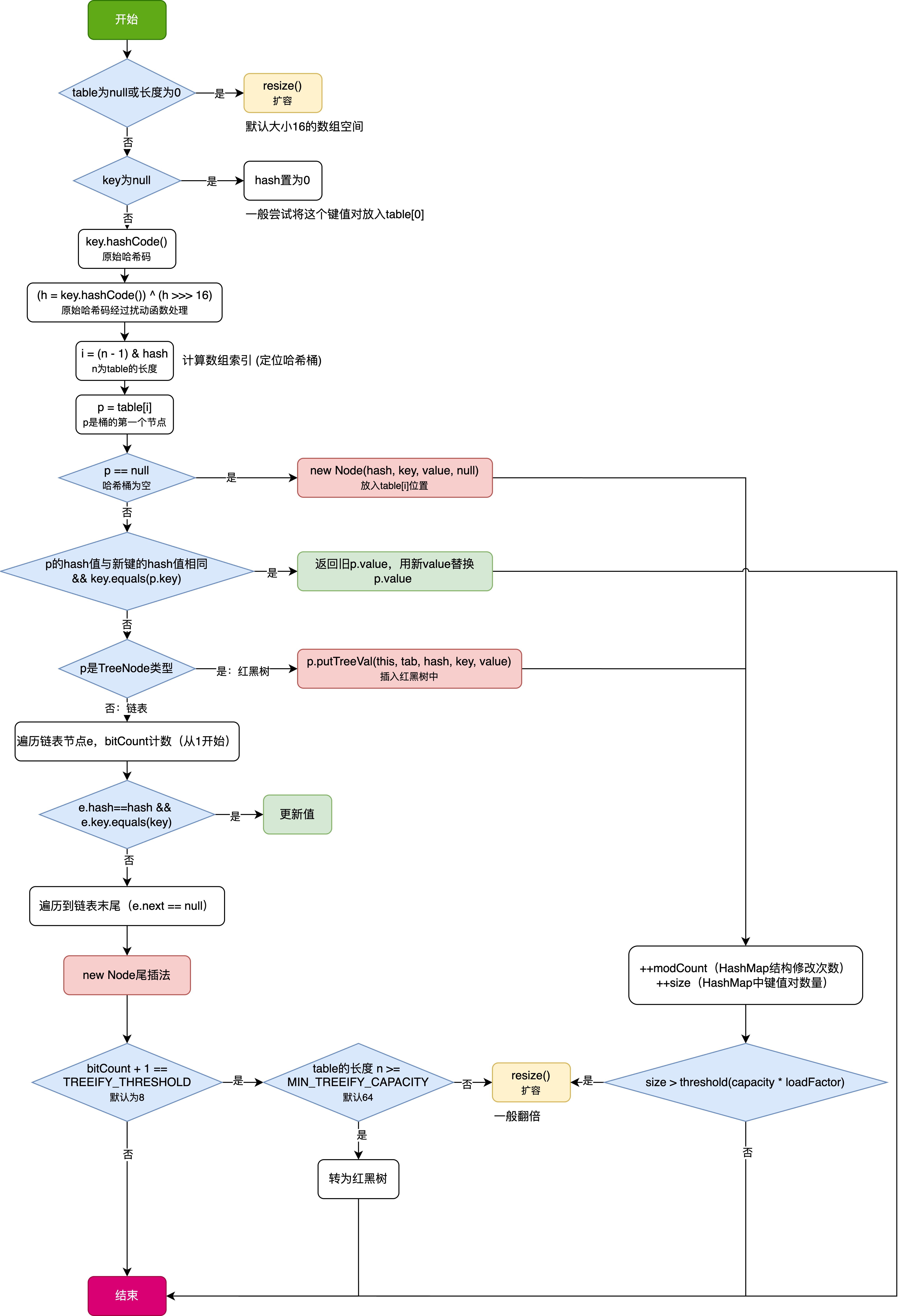

HashMap中的put方法执行流程(流程图)

1 put操作整体流程 HashMap 的 put 操作是其最核心的功能之一。在 JDK 1.8 及以后版本中,其主要逻辑封装在 putVal 这个内部方法中。整个过程大致如下: 初始判断与哈希计算: 首先,putVal 方法会检查当前的 table(也就…...