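Syncing data between HBase and MySQL with DataX

Reference article: "datax mysql 和hbase的 相互导入" (importing data between MySQL and HBase with DataX)

Contents

0. Software versions

1. Syncing HBase data to MySQL

1.1 HBase data

1.2 MySQL data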

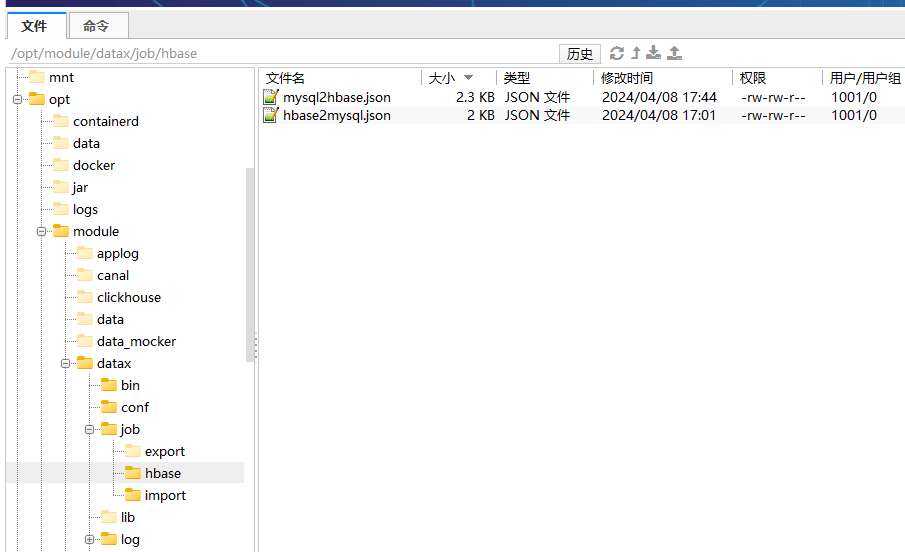

1.3 JSON job script (hbase2mysql.json)

1.4 Sync success log

2. Syncing MySQL data to HBase

2.1 HBase data

2.2 MySQL data

2.3 JSON job script (mysql2hbase.json)

2.4 Sync success log

3. Summary

0. Software versions

- HBase version: 2.0.5

- MySQL version: 8.0.34

1. Syncing HBase data to MySQL

1.1 HBase data

hbase(main):018:0> scan "bigdata:student"

ROW COLUMN+CELL

1001 column=info:age, timestamp=1712562704820, value=18

1001 column=info:name, timestamp=1712562696088, value=lisi

1002 column=info:age, timestamp=1712566667737, value=222

1002 column=info:name, timestamp=1712566689576, value=\xE5\xAE\x8B\xE5\xA3\xB9

2 row(s)

Took 0.0805 seconds

The full shell session that created and populated these tables is shown below.

[atguigu@node001 hbase]$ cd hbase-2.0.5/

[atguigu@node001 hbase-2.0.5]$ bin/hbase shell

For more on the HBase Shell, see http://hbase.apache.org/book.html

hbase(main):002:0> create_namespace 'bigdata'

Took 3.4979 seconds

hbase(main):003:0> list_namespace

NAMESPACE

EDU_REALTIME

SYSTEM

bigdata

default

hbase

5 row(s)

Took 0.1244 seconds

hbase(main):004:0> create_namespace 'bigdata2'

Took 0.5109 seconds

hbase(main):005:0> list_namespace

NAMESPACE

EDU_REALTIME

SYSTEM

bigdata

bigdata2

default

hbase

6 row(s)

Took 0.0450 seconds

hbase(main):006:0> create 'bigdata:student', {NAME => 'info', VERSIONS =>5}, {NAME => 'msg'}

Created table bigdata:student

Took 4.7854 seconds

=> Hbase::Table - bigdata:student

hbase(main):007:0> create 'bigdata2:student', {NAME => 'info', VERSIONS =>5}, {NAME => 'msg'}

Created table bigdata2:student

Took 2.4732 seconds

=> Hbase::Table - bigdata2:student

hbase(main):008:0> list

TABLE

EDU_REALTIME:DIM_BASE_CATEGORY_INFO

EDU_REALTIME:DIM_BASE_PROVINCE

EDU_REALTIME:DIM_BASE_SOURCE

EDU_REALTIME:DIM_BASE_SUBJECT_INFO

EDU_REALTIME:DIM_CHAPTER_INFO

EDU_REALTIME:DIM_COURSE_INFO

EDU_REALTIME:DIM_KNOWLEDGE_POINT

EDU_REALTIME:DIM_TEST_PAPER

EDU_REALTIME:DIM_TEST_PAPER_QUESTION

EDU_REALTIME:DIM_TEST_POINT_QUESTION

EDU_REALTIME:DIM_TEST_QUESTION_INFO

EDU_REALTIME:DIM_TEST_QUESTION_OPTION

EDU_REALTIME:DIM_USER_INFO

EDU_REALTIME:DIM_VIDEO_INFO

SYSTEM:CATALOG

SYSTEM:FUNCTION

SYSTEM:LOG

SYSTEM:MUTEX

SYSTEM:SEQUENCE

SYSTEM:STATS

bigdata2:student

bigdata:student

22 row(s)

Took 0.0711 seconds

=> ["EDU_REALTIME:DIM_BASE_CATEGORY_INFO", "EDU_REALTIME:DIM_BASE_PROVINCE", "EDU_REALTIME:DIM_BASE_SOURCE", "EDU_REALTIME:DIM_BASE_SUBJECT_INFO", "EDU_REALTIME:DIM_CHAPTER_INFO", "EDU_REALTIME:DIM_COURSE_INFO", "EDU_REALTIME:DIM_KNOWLEDGE_POINT", "EDU_REALTIME:DIM_TEST_PAPER", "EDU_REALTIME:DIM_TEST_PAPER_QUESTION", "EDU_REALTIME:DIM_TEST_POINT_QUESTION", "EDU_REALTIME:DIM_TEST_QUESTION_INFO", "EDU_REALTIME:DIM_TEST_QUESTION_OPTION", "EDU_REALTIME:DIM_USER_INFO", "EDU_REALTIME:DIM_VIDEO_INFO", "SYSTEM:CATALOG", "SYSTEM:FUNCTION", "SYSTEM:LOG", "SYSTEM:MUTEX", "SYSTEM:SEQUENCE", "SYSTEM:STATS", "bigdata2:student", "bigdata:student"]

hbase(main):009:0> put 'bigdata:student','1001','info:name','zhangsan'

Took 0.8415 seconds

hbase(main):010:0> put 'bigdata:student','1001','info:name','lisi'

Took 0.0330 seconds

hbase(main):011:0> put 'bigdata:student','1001','info:age','18'

Took 0.0201 seconds

hbase(main):012:0> get 'bigdata:student','1001'

COLUMN CELL

info:age timestamp=1712562704820, value=18

info:name timestamp=1712562696088, value=lisi

1 row(s)

Took 0.4235 seconds

hbase(main):013:0> scan student

NameError: undefined local variable or method `student' for main:Object

hbase(main):014:0> scan bigdata:student

NameError: undefined local variable or method `student' for main:Object

hbase(main):015:0> scan "bigdata:student"

ROW COLUMN+CELL

1001 column=info:age, timestamp=1712562704820, value=18

1001 column=info:name, timestamp=1712562696088, value=lisi

1 row(s)

Took 0.9035 seconds

hbase(main):016:0> put 'bigdata:student','1002','info:age','222'

Took 0.0816 seconds

hbase(main):017:0> put 'bigdata:student','1002','info:name','宋壹'

Took 0.0462 seconds

hbase(main):018:0> scan "bigdata:student"

ROW COLUMN+CELL

1001 column=info:age, timestamp=1712562704820, value=18

1001 column=info:name, timestamp=1712562696088, value=lisi

1002 column=info:age, timestamp=1712566667737, value=222

1002 column=info:name, timestamp=1712566689576, value=\xE5\xAE\x8B\xE5\xA3\xB9

2 row(s)

Took 0.0805 seconds
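The HBase shell prints non-ASCII cell values as escaped bytes, which is why the name stored for row 1002 appears as \xE5\xAE\x8B\xE5\xA3\xB9 rather than 宋壹. The short Python sketch below is not part of the original session; it only checks that those six bytes are the UTF-8 encoding of the string that was written with `put`.

```python
# Bytes exactly as the HBase shell printed them for row 1002, column info:name.
raw = b"\xE5\xAE\x8B\xE5\xA3\xB9"

# Decoding as UTF-8 recovers the string that was originally put into the table.
name = raw.decode("utf-8")
print(name)

# Encoding goes the other way and yields the same six bytes again.
round_trip = name.encode("utf-8")
print(round_trip == raw)
```

This matches the `"encoding": "utf-8"` setting used in both DataX job files, so the value should arrive in MySQL as readable text.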

1.2 MySQL data

SELECT VERSION(); -- check the MySQL version

/*
 Navicat Premium Data Transfer

 Source Server         : 大数据-node001
 Source Server Type    : MySQL
 Source Server Version : 80034 (8.0.34)
 Source Host           : node001:3306
 Source Schema         : test

 Target Server Type    : MySQL
 Target Server Version : 80034 (8.0.34)
 File Encoding         : 65001

 Date: 08/04/2024 17:11:56
*/

SET NAMES utf8mb4;
SET FOREIGN_KEY_CHECKS = 0;

-- ----------------------------
-- Table structure for student
-- ----------------------------
DROP TABLE IF EXISTS `student`;
CREATE TABLE `student` (
  `info` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,
  `msg` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL
) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci ROW_FORMAT = Dynamic;

-- ----------------------------
-- Records of student
-- ----------------------------
INSERT INTO `student` VALUES ('111', '111111');
INSERT INTO `student` VALUES ('222', '222222');
INSERT INTO `student` VALUES ('18', 'lisi');
INSERT INTO `student` VALUES ('222', '宋壹');

SET FOREIGN_KEY_CHECKS = 1;

1.3 JSON job script (hbase2mysql.json)

{
  "job": {
    "content": [
      {
        "reader": {
          "name": "hbase11xreader",
          "parameter": {
            "hbaseConfig": {
              "hbase.zookeeper.quorum": "node001:2181"
            },
            "table": "bigdata:student",
            "encoding": "utf-8",
            "mode": "normal",
            "column": [
              {"name": "info:age", "type": "string"},
              {"name": "info:name", "type": "string"}
            ],
            "range": {
              "startRowkey": "",
              "endRowkey": "",
              "isBinaryRowkey": true
            }
          }
        },
        "writer": {
          "name": "mysqlwriter",
          "parameter": {
            "column": ["info", "msg"],
            "connection": [
              {
                "jdbcUrl": "jdbc:mysql://node001:3306/test",
                "table": ["student"]
              }
            ],
            "username": "root",
            "password": "123456",
            "preSql": [],
            "session": [],
            "writeMode": "insert"
          }
        }
      }
    ],
    "setting": {
      "speed": {
        "channel": "1"
      }
    }
  }
}

1.4 Sync success log
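For orientation before the log: DataX pairs the reader's column list with the writer's column list by position, so in hbase2mysql.json `info:age` feeds the MySQL column `info` and `info:name` feeds `msg`. The Python sketch below only models that pairing. It is not DataX code, and the helper names `build_insert_sql` and `to_mysql_params` are made up for illustration. The SQL it prints has the same shape as the statement template that shows up in the log.

```python
# Column lists as configured in hbase2mysql.json (reader side, writer side).
reader_columns = ["info:age", "info:name"]
writer_columns = ["info", "msg"]


def build_insert_sql(table, columns):
    """Build a parameterized INSERT with one placeholder per writer column."""
    placeholders = ",".join("?" for _ in columns)
    return f"INSERT INTO {table} ({','.join(columns)}) VALUES({placeholders})"


def to_mysql_params(hbase_row):
    """Pick the reader columns in order; position i feeds writer column i."""
    return tuple(hbase_row[name] for name in reader_columns)


# The two rows from the scan of bigdata:student, modeled as plain dicts.
hbase_rows = [
    {"info:age": "18", "info:name": "lisi"},
    {"info:age": "222", "info:name": "宋壹"},
]

sql = build_insert_sql("student", writer_columns)
params = [to_mysql_params(row) for row in hbase_rows]
print(sql)
print(params)
```

The two parameter tuples correspond to the rows ('18', 'lisi') and ('222', '宋壹') in the MySQL dump, and to the "Total 2 records" reported by the job.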

[atguigu@node001 datax]$ python bin/datax.py job/hbase/hbase2mysql.json

DataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.

2024-04-08 17:02:00.785 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl

2024-04-08 17:02:00.804 [main] INFO Engine - the machine info =>

osInfo: Red Hat, Inc. 1.8 25.372-b07

jvmInfo: Linux amd64 3.10.0-862.el7.x86_64

cpu num: 4

totalPhysicalMemory: -0.00G

freePhysicalMemory: -0.00G

maxFileDescriptorCount: -1

currentOpenFileDescriptorCount: -1

GC Names [PS MarkSweep, PS Scavenge]

MEMORY_NAME | allocation_size | init_size

PS Eden Space | 256.00MB | 256.00MB

Code Cache | 240.00MB | 2.44MB

Compressed Class Space | 1,024.00MB | 0.00MB

PS Survivor Space | 42.50MB | 42.50MB

PS Old Gen | 683.00MB | 683.00MB

Metaspace | -0.00MB | 0.00MB

2024-04-08 17:02:00.840 [main] INFO Engine -

{"content":[{"reader":{"name":"hbase11xreader","parameter":{"column":[{"name":"info:age","type":"string"},{"name":"info:name","type":"string"}],"encoding":"utf-8","hbaseConfig":{"hbase.zookeeper.quorum":"node001:2181"},"mode":"normal","range":{"endRowkey":"","isBinaryRowkey":true,"startRowkey":""},"table":"bigdata:student"}},"writer":{"name":"mysqlwriter","parameter":{"column":["info","msg"],"connection":[{"jdbcUrl":"jdbc:mysql://node001:3306/test","table":["student"]}],"password":"******","preSql":[],"session":[],"username":"root","writeMode":"insert"}}}],"setting":{"speed":{"channel":"1"}}}

2024-04-08 17:02:00.875 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null

2024-04-08 17:02:00.881 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0

2024-04-08 17:02:00.881 [main] INFO JobContainer - DataX jobContainer starts job.

2024-04-08 17:02:00.885 [main] INFO JobContainer - Set jobId = 0

2024-04-08 17:02:03.040 [job-0] INFO OriginalConfPretreatmentUtil - table:[student] all columns:[

info,msg

].

2024-04-08 17:02:03.098 [job-0] INFO OriginalConfPretreatmentUtil - Write data [

insert INTO %s (info,msg) VALUES(?,?)

], which jdbcUrl like:[jdbc:mysql://node001:3306/test?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true]

2024-04-08 17:02:03.099 [job-0] INFO JobContainer - jobContainer starts to do prepare ...

2024-04-08 17:02:03.099 [job-0] INFO JobContainer - DataX Reader.Job [hbase11xreader] do prepare work .

2024-04-08 17:02:03.099 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] do prepare work .

2024-04-08 17:02:03.100 [job-0] INFO JobContainer - jobContainer starts to do split ...

2024-04-08 17:02:03.100 [job-0] INFO JobContainer - Job set Channel-Number to 1 channels.

四月 08, 2024 5:02:03 下午 org.apache.hadoop.util.NativeCodeLoader <clinit>

警告: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

四月 08, 2024 5:02:03 下午 org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper <init>

信息: Process identifier=hconnection-0x50313382 connecting to ZooKeeper ensemble=node001:2181

2024-04-08 17:02:03.982 [job-0] INFO ZooKeeper - Client environment:zookeeper.version=3.4.6-1569965, built on 02/20/2014 09:09 GMT

2024-04-08 17:02:03.983 [job-0] INFO ZooKeeper - Client environment:host.name=node001

2024-04-08 17:02:03.983 [job-0] INFO ZooKeeper - Client environment:java.version=1.8.0_372

2024-04-08 17:02:03.983 [job-0] INFO ZooKeeper - Client environment:java.vendor=Red Hat, Inc.

2024-04-08 17:02:03.983 [job-0] INFO ZooKeeper - Client environment:java.home=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.372.b07-1.el7_9.x86_64/jre

2024-04-08 17:02:03.983 [job-0] INFO ZooKeeper - Client environment:java.class.path=/opt/module/datax/lib/commons-io-2.4.jar:/opt/module/datax/lib/groovy-all-2.1.9.jar:/opt/module/datax/lib/datax-core-0.0.1-SNAPSHOT.jar:/opt/module/datax/lib/fluent-hc-4.4.jar:/opt/module/datax/lib/commons-beanutils-1.9.2.jar:/opt/module/datax/lib/commons-codec-1.9.jar:/opt/module/datax/lib/httpclient-4.4.jar:/opt/module/datax/lib/commons-cli-1.2.jar:/opt/module/datax/lib/commons-lang-2.6.jar:/opt/module/datax/lib/logback-core-1.0.13.jar:/opt/module/datax/lib/hamcrest-core-1.3.jar:/opt/module/datax/lib/fastjson-1.1.46.sec01.jar:/opt/module/datax/lib/commons-lang3-3.3.2.jar:/opt/module/datax/lib/commons-logging-1.1.1.jar:/opt/module/datax/lib/janino-2.5.16.jar:/opt/module/datax/lib/commons-configuration-1.10.jar:/opt/module/datax/lib/slf4j-api-1.7.10.jar:/opt/module/datax/lib/datax-common-0.0.1-SNAPSHOT.jar:/opt/module/datax/lib/datax-transformer-0.0.1-SNAPSHOT.jar:/opt/module/datax/lib/logback-classic-1.0.13.jar:/opt/module/datax/lib/httpcore-4.4.jar:/opt/module/datax/lib/commons-collections-3.2.1.jar:/opt/module/datax/lib/commons-math3-3.1.1.jar:.

2024-04-08 17:02:03.984 [job-0] INFO ZooKeeper - Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2024-04-08 17:02:03.984 [job-0] INFO ZooKeeper - Client environment:java.io.tmpdir=/tmp

2024-04-08 17:02:03.984 [job-0] INFO ZooKeeper - Client environment:java.compiler=<NA>

2024-04-08 17:02:03.984 [job-0] INFO ZooKeeper - Client environment:os.name=Linux

2024-04-08 17:02:03.984 [job-0] INFO ZooKeeper - Client environment:os.arch=amd64

2024-04-08 17:02:03.984 [job-0] INFO ZooKeeper - Client environment:os.version=3.10.0-862.el7.x86_64

2024-04-08 17:02:03.987 [job-0] INFO ZooKeeper - Client environment:user.name=atguigu

2024-04-08 17:02:03.988 [job-0] INFO ZooKeeper - Client environment:user.home=/home/atguigu

2024-04-08 17:02:03.988 [job-0] INFO ZooKeeper - Client environment:user.dir=/opt/module/datax

2024-04-08 17:02:03.990 [job-0] INFO ZooKeeper - Initiating client connection, connectString=node001:2181 sessionTimeout=90000 watcher=hconnection-0x503133820x0, quorum=node001:2181, baseZNode=/hbase

2024-04-08 17:02:04.069 [job-0-SendThread(node001:2181)] INFO ClientCnxn - Opening socket connection to server node001/192.168.10.101:2181. Will not attempt to authenticate using SASL (unknown error)

2024-04-08 17:02:04.092 [job-0-SendThread(node001:2181)] INFO ClientCnxn - Socket connection established to node001/192.168.10.101:2181, initiating session

2024-04-08 17:02:04.139 [job-0-SendThread(node001:2181)] INFO ClientCnxn - Session establishment complete on server node001/192.168.10.101:2181, sessionid = 0x200000707b70025, negotiated timeout = 40000

2024-04-08 17:02:06.334 [job-0] INFO Hbase11xHelper - HBaseReader split job into 1 tasks.

2024-04-08 17:02:06.335 [job-0] INFO JobContainer - DataX Reader.Job [hbase11xreader] splits to [1] tasks.

2024-04-08 17:02:06.336 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] splits to [1] tasks.

2024-04-08 17:02:06.366 [job-0] INFO JobContainer - jobContainer starts to do schedule ...

2024-04-08 17:02:06.394 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.

2024-04-08 17:02:06.402 [job-0] INFO JobContainer - Running by standalone Mode.

2024-04-08 17:02:06.426 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks.

2024-04-08 17:02:06.457 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated.

2024-04-08 17:02:06.458 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.

2024-04-08 17:02:06.529 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started

四月 08, 2024 5:02:06 下午 org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper <init>

信息: Process identifier=hconnection-0x3b2eec42 connecting to ZooKeeper ensemble=node001:2181

2024-04-08 17:02:06.680 [0-0-0-reader] INFO ZooKeeper - Initiating client connection, connectString=node001:2181 sessionTimeout=90000 watcher=hconnection-0x3b2eec420x0, quorum=node001:2181, baseZNode=/hbase

2024-04-08 17:02:06.740 [0-0-0-reader-SendThread(node001:2181)] INFO ClientCnxn - Opening socket connection to server node001/192.168.10.101:2181. Will not attempt to authenticate using SASL (unknown error)

2024-04-08 17:02:06.773 [0-0-0-reader-SendThread(node001:2181)] INFO ClientCnxn - Socket connection established to node001/192.168.10.101:2181, initiating session

2024-04-08 17:02:06.808 [0-0-0-reader-SendThread(node001:2181)] INFO ClientCnxn - Session establishment complete on server node001/192.168.10.101:2181, sessionid = 0x200000707b70026, negotiated timeout = 40000

2024-04-08 17:02:06.960 [0-0-0-reader] INFO HbaseAbstractTask - The task set startRowkey=[], endRowkey=[].

2024-04-08 17:02:07.262 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[738]ms

2024-04-08 17:02:07.263 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it's tasks.

2024-04-08 17:02:16.483 [job-0] INFO StandAloneJobContainerCommunicator - Total 2 records, 11 bytes | Speed 1B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.248s | Percentage 100.00%

2024-04-08 17:02:16.483 [job-0] INFO AbstractScheduler - Scheduler accomplished all tasks.

2024-04-08 17:02:16.484 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] do post work.

2024-04-08 17:02:16.485 [job-0] INFO JobContainer - DataX Reader.Job [hbase11xreader] do post work.

2024-04-08 17:02:16.485 [job-0] INFO JobContainer - DataX jobId [0] completed successfully.

2024-04-08 17:02:16.487 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: /opt/module/datax/hook

2024-04-08 17:02:16.491 [job-0] INFO JobContainer -

[total cpu info] =>

averageCpu | maxDeltaCpu | minDeltaCpu

-1.00% | -1.00% | -1.00%

[total gc info] =>

NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime

PS MarkSweep | 1 | 1 | 1 | 0.136s | 0.136s | 0.136s

PS Scavenge | 1 | 1 | 1 | 0.072s | 0.072s | 0.072s

2024-04-08 17:02:16.491 [job-0] INFO JobContainer - PerfTrace not enable!

2024-04-08 17:02:16.493 [job-0] INFO StandAloneJobContainerCommunicator - Total 2 records, 11 bytes | Speed 1B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.248s | Percentage 100.00%

2024-04-08 17:02:16.495 [job-0] INFO JobContainer -

任务启动时刻 : 2024-04-08 17:02:00

任务结束时刻 : 2024-04-08 17:02:16

任务总计耗时 : 15s

任务平均流量 : 1B/s

记录写入速度 : 0rec/s

读出记录总数 : 2

读写失败总数 : 0

2. Syncing MySQL data to HBase

2.1 HBase data

The table "bigdata2:student" is empty at first. After the DataX sync job has run, a second scan shows the newly written rows.

hbase(main):019:0> scan "bigdata2:student"

ROW COLUMN+CELL

0 row(s)

Took 1.6445 seconds

hbase(main):020:0> scan "bigdata2:student"

ROW COLUMN+CELL

111111111 column=info:age, timestamp=123456789, value=111

111111111 column=info:name, timestamp=123456789, value=111111

18lisi column=info:age, timestamp=123456789, value=18

18lisi column=info:name, timestamp=123456789, value=lisi

222222222 column=info:age, timestamp=123456789, value=222

222222222 column=info:name, timestamp=123456789, value=222222

333\xE5\xAE\x8B\xE5\xA3\xB9 column=info:age, timestamp=123456789, value=333

333\xE5\xAE\x8B\xE5\xA3\xB9 column=info:name, timestamp=123456789, value=\xE5\xAE\x8B\xE5\xA3\xB9

4 row(s)

Took 0.3075 seconds
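The row keys in this scan (111111111, 18lisi, and so on) are consistent with the `rowkeyColumn` setting in mysql2hbase.json: the writer appears to concatenate the listed source columns in order. As far as the hbase11xwriter documentation describes it, `value` is only used for constant parts whose `index` is -1, which would explain why the "_" configured on the `index: 1` entry does not show up in the keys. The Python sketch below models that rule under this assumption; `build_rowkey` is a hypothetical helper, not DataX code.

```python
def build_rowkey(record, rowkey_columns):
    """Concatenate row key parts in the order they are configured.

    Assumption: an entry with index -1 contributes its constant `value`;
    any other index contributes the source column at that position and
    ignores `value`.
    """
    parts = []
    for col in rowkey_columns:
        if col["index"] == -1:
            parts.append(col["value"])
        else:
            parts.append(record[col["index"]])
    return "".join(parts)


# rowkeyColumn exactly as written in mysql2hbase.json.
rowkey_columns = [
    {"index": 0, "type": "string"},
    {"index": 1, "type": "string", "value": "_"},
]

# (info, msg) pairs taken from the cell values in the scan output.
records = [("111", "111111"), ("18", "lisi"), ("222", "222222"), ("333", "宋壹")]
keys = [build_rowkey(r, rowkey_columns) for r in records]
print(keys)

# Variation: a constant separator needs its own entry with index -1.
with_separator = [
    {"index": 0, "type": "string"},
    {"index": -1, "type": "string", "value": "_"},
    {"index": 1, "type": "string"},
]
separated_key = build_rowkey(("18", "lisi"), with_separator)
print(separated_key)
```

The fixed timestamp 123456789 on every cell likewise matches the `versionColumn` entry, which uses `index: -1` with a constant `value`.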

2.2 MySQL data

Note that this dump (dated 17:11:56) lists ('222', '宋壹'), while the scan above shows the value 333 for that row, so the row was presumably changed before the job ran at 17:44.

SELECT VERSION(); -- check the MySQL version

/*
 Navicat Premium Data Transfer

 Source Server         : 大数据-node001
 Source Server Type    : MySQL
 Source Server Version : 80034 (8.0.34)
 Source Host           : node001:3306
 Source Schema         : test

 Target Server Type    : MySQL
 Target Server Version : 80034 (8.0.34)
 File Encoding         : 65001

 Date: 08/04/2024 17:11:56
*/

SET NAMES utf8mb4;
SET FOREIGN_KEY_CHECKS = 0;

-- ----------------------------
-- Table structure for student
-- ----------------------------
DROP TABLE IF EXISTS `student`;
CREATE TABLE `student` (
  `info` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,
  `msg` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL
) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci ROW_FORMAT = Dynamic;

-- ----------------------------
-- Records of student
-- ----------------------------
INSERT INTO `student` VALUES ('111', '111111');
INSERT INTO `student` VALUES ('222', '222222');
INSERT INTO `student` VALUES ('18', 'lisi');
INSERT INTO `student` VALUES ('222', '宋壹');

SET FOREIGN_KEY_CHECKS = 1;

2.3 JSON job script (mysql2hbase.json)

{
  "job": {
    "setting": {
      "speed": {
        "channel": 1
      }
    },
    "content": [
      {
        "reader": {
          "name": "mysqlreader",
          "parameter": {
            "column": ["info", "msg"],
            "connection": [
              {
                "jdbcUrl": ["jdbc:mysql://127.0.0.1:3306/test"],
                "table": ["student"]
              }
            ],
            "username": "root",
            "password": "123456",
            "where": ""
          }
        },
        "writer": {
          "name": "hbase11xwriter",
          "parameter": {
            "hbaseConfig": {
              "hbase.zookeeper.quorum": "node001:2181"
            },
            "table": "bigdata2:student",
            "mode": "normal",
            "rowkeyColumn": [
              {"index": 0, "type": "string"},
              {"index": 1, "type": "string", "value": "_"}
            ],
            "column": [
              {"index": 0, "name": "info:age", "type": "string"},
              {"index": 1, "name": "info:name", "type": "string"}
            ],
            "versionColumn": {
              "index": -1,
              "value": "123456789"
            },
            "encoding": "utf-8"
          }
        }
      }
    ]
  }
}

2.4 Sync success log
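When a job like this is launched from a script rather than by hand, the `StandAloneJobContainerCommunicator` summary line near the end of the log is a convenient place to check the record and error counts. The following is a minimal Python sketch, assuming the line keeps the format seen in this log; `parse_summary` is a hypothetical helper and not part of DataX.

```python
import re

# Matches the "Total N records, B bytes | ... | Error M records, E bytes" summary.
SUMMARY_RE = re.compile(
    r"Total (\d+) records, (\d+) bytes .*?Error (\d+) records, (\d+) bytes"
)


def parse_summary(line):
    """Return the counters from a DataX summary line, or None if it does not match."""
    match = SUMMARY_RE.search(line)
    if match is None:
        return None
    total, total_bytes, errors, error_bytes = (int(g) for g in match.groups())
    return {
        "total_records": total,
        "total_bytes": total_bytes,
        "error_records": errors,
        "error_bytes": error_bytes,
    }


# Summary line copied from the mysql2hbase run in this article.
line = (
    "2024-04-08 17:44:57.868 [job-0] INFO StandAloneJobContainerCommunicator - "
    "Total 4 records, 29 bytes | Speed 2B/s, 0 records/s | "
    "Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | "
    "All Task WaitReaderTime 0.000s | Percentage 100.00%"
)

summary = parse_summary(line)
print(summary)
```

A wrapper could treat the run as failed when `error_records` is non-zero or when no summary line is found at all.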

[atguigu@node001 datax]$ python bin/datax.py job/hbase/mysql2hbase.json

DataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.

2024-04-08 17:44:45.536 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl

2024-04-08 17:44:45.552 [main] INFO Engine - the machine info =>

osInfo: Red Hat, Inc. 1.8 25.372-b07

jvmInfo: Linux amd64 3.10.0-862.el7.x86_64

cpu num: 4

totalPhysicalMemory: -0.00G

freePhysicalMemory: -0.00G

maxFileDescriptorCount: -1

currentOpenFileDescriptorCount: -1

GC Names [PS MarkSweep, PS Scavenge]

MEMORY_NAME | allocation_size | init_size

PS Eden Space | 256.00MB | 256.00MB

Code Cache | 240.00MB | 2.44MB

Compressed Class Space | 1,024.00MB | 0.00MB

PS Survivor Space | 42.50MB | 42.50MB

PS Old Gen | 683.00MB | 683.00MB

Metaspace | -0.00MB | 0.00MB

2024-04-08 17:44:45.579 [main] INFO Engine -

{"content":[{"reader":{"name":"mysqlreader","parameter":{"column":["info","msg"],"connection":[{"jdbcUrl":["jdbc:mysql://127.0.0.1:3306/test"],"table":["student"]}],"password":"******","username":"root","where":""}},"writer":{"name":"hbase11xwriter","parameter":{"column":[{"index":0,"name":"info:age","type":"string"},{"index":1,"name":"info:name","type":"string"}],"encoding":"utf-8","hbaseConfig":{"hbase.zookeeper.quorum":"node001:2181"},"mode":"normal","rowkeyColumn":[{"index":0,"type":"string"},{"index":1,"type":"string","value":"_"}],"table":"bigdata2:student","versionColumn":{"index":-1,"value":"123456789"}}}}],"setting":{"speed":{"channel":1}}}

2024-04-08 17:44:45.615 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null

2024-04-08 17:44:45.618 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0

2024-04-08 17:44:45.619 [main] INFO JobContainer - DataX jobContainer starts job.

2024-04-08 17:44:45.622 [main] INFO JobContainer - Set jobId = 0

Loading class `com.mysql.jdbc.Driver'. This is deprecated. The new driver class is `com.mysql.cj.jdbc.Driver'. The driver is automatically registered via the SPI and manual loading of the driver class is generally unnecessary.

2024-04-08 17:44:47.358 [job-0] INFO OriginalConfPretreatmentUtil - Available jdbcUrl:jdbc:mysql://127.0.0.1:3306/test?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true.

2024-04-08 17:44:47.734 [job-0] INFO OriginalConfPretreatmentUtil - table:[student] has columns:[info,msg].

2024-04-08 17:44:47.761 [job-0] INFO JobContainer - jobContainer starts to do prepare ...

2024-04-08 17:44:47.762 [job-0] INFO JobContainer - DataX Reader.Job [mysqlreader] do prepare work .

2024-04-08 17:44:47.763 [job-0] INFO JobContainer - DataX Writer.Job [hbase11xwriter] do prepare work .

2024-04-08 17:44:47.764 [job-0] INFO JobContainer - jobContainer starts to do split ...

2024-04-08 17:44:47.764 [job-0] INFO JobContainer - Job set Channel-Number to 1 channels.

2024-04-08 17:44:47.773 [job-0] INFO JobContainer - DataX Reader.Job [mysqlreader] splits to [1] tasks.

2024-04-08 17:44:47.774 [job-0] INFO JobContainer - DataX Writer.Job [hbase11xwriter] splits to [1] tasks.

2024-04-08 17:44:47.815 [job-0] INFO JobContainer - jobContainer starts to do schedule ...

2024-04-08 17:44:47.821 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.

2024-04-08 17:44:47.825 [job-0] INFO JobContainer - Running by standalone Mode.

2024-04-08 17:44:47.839 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks.

2024-04-08 17:44:47.846 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated.

2024-04-08 17:44:47.846 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.

2024-04-08 17:44:47.870 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started

2024-04-08 17:44:47.876 [0-0-0-reader] INFO CommonRdbmsReader$Task - Begin to read record by Sql: [select info,msg from student

] jdbcUrl:[jdbc:mysql://127.0.0.1:3306/test?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true].

2024-04-08 17:44:48.010 [0-0-0-reader] INFO CommonRdbmsReader$Task - Finished read record by Sql: [select info,msg from student

] jdbcUrl:[jdbc:mysql://127.0.0.1:3306/test?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true].

四月 08, 2024 5:44:49 下午 org.apache.hadoop.util.NativeCodeLoader <clinit>

警告: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

四月 08, 2024 5:44:50 下午 org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper <init>

信息: Process identifier=hconnection-0x26654712 connecting to ZooKeeper ensemble=node001:2181

2024-04-08 17:44:50.143 [0-0-0-writer] INFO ZooKeeper - Client environment:zookeeper.version=3.4.6-1569965, built on 02/20/2014 09:09 GMT

2024-04-08 17:44:50.143 [0-0-0-writer] INFO ZooKeeper - Client environment:host.name=node001

2024-04-08 17:44:50.143 [0-0-0-writer] INFO ZooKeeper - Client environment:java.version=1.8.0_372

2024-04-08 17:44:50.143 [0-0-0-writer] INFO ZooKeeper - Client environment:java.vendor=Red Hat, Inc.

2024-04-08 17:44:50.143 [0-0-0-writer] INFO ZooKeeper - Client environment:java.home=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.372.b07-1.el7_9.x86_64/jre

2024-04-08 17:44:50.144 [0-0-0-writer] INFO ZooKeeper - Client environment:java.class.path=/opt/module/datax/lib/commons-io-2.4.jar:/opt/module/datax/lib/groovy-all-2.1.9.jar:/opt/module/datax/lib/datax-core-0.0.1-SNAPSHOT.jar:/opt/module/datax/lib/fluent-hc-4.4.jar:/opt/module/datax/lib/commons-beanutils-1.9.2.jar:/opt/module/datax/lib/commons-codec-1.9.jar:/opt/module/datax/lib/httpclient-4.4.jar:/opt/module/datax/lib/commons-cli-1.2.jar:/opt/module/datax/lib/commons-lang-2.6.jar:/opt/module/datax/lib/logback-core-1.0.13.jar:/opt/module/datax/lib/hamcrest-core-1.3.jar:/opt/module/datax/lib/fastjson-1.1.46.sec01.jar:/opt/module/datax/lib/commons-lang3-3.3.2.jar:/opt/module/datax/lib/commons-logging-1.1.1.jar:/opt/module/datax/lib/janino-2.5.16.jar:/opt/module/datax/lib/commons-configuration-1.10.jar:/opt/module/datax/lib/slf4j-api-1.7.10.jar:/opt/module/datax/lib/datax-common-0.0.1-SNAPSHOT.jar:/opt/module/datax/lib/datax-transformer-0.0.1-SNAPSHOT.jar:/opt/module/datax/lib/logback-classic-1.0.13.jar:/opt/module/datax/lib/httpcore-4.4.jar:/opt/module/datax/lib/commons-collections-3.2.1.jar:/opt/module/datax/lib/commons-math3-3.1.1.jar:.

2024-04-08 17:44:50.144 [0-0-0-writer] INFO ZooKeeper - Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2024-04-08 17:44:50.144 [0-0-0-writer] INFO ZooKeeper - Client environment:java.io.tmpdir=/tmp

2024-04-08 17:44:50.144 [0-0-0-writer] INFO ZooKeeper - Client environment:java.compiler=<NA>

2024-04-08 17:44:50.144 [0-0-0-writer] INFO ZooKeeper - Client environment:os.name=Linux

2024-04-08 17:44:50.144 [0-0-0-writer] INFO ZooKeeper - Client environment:os.arch=amd64

2024-04-08 17:44:50.144 [0-0-0-writer] INFO ZooKeeper - Client environment:os.version=3.10.0-862.el7.x86_64

2024-04-08 17:44:50.144 [0-0-0-writer] INFO ZooKeeper - Client environment:user.name=atguigu

2024-04-08 17:44:50.144 [0-0-0-writer] INFO ZooKeeper - Client environment:user.home=/home/atguigu

2024-04-08 17:44:50.144 [0-0-0-writer] INFO ZooKeeper - Client environment:user.dir=/opt/module/datax

2024-04-08 17:44:50.145 [0-0-0-writer] INFO ZooKeeper - Initiating client connection, connectString=node001:2181 sessionTimeout=90000 watcher=hconnection-0x266547120x0, quorum=node001:2181, baseZNode=/hbase

2024-04-08 17:44:50.256 [0-0-0-writer-SendThread(node001:2181)] INFO ClientCnxn - Opening socket connection to server node001/192.168.10.101:2181. Will not attempt to authenticate using SASL (unknown error)

2024-04-08 17:44:50.381 [0-0-0-writer-SendThread(node001:2181)] INFO ClientCnxn - Socket connection established to node001/192.168.10.101:2181, initiating session

2024-04-08 17:44:50.427 [0-0-0-writer-SendThread(node001:2181)] INFO ClientCnxn - Session establishment complete on server node001/192.168.10.101:2181, sessionid = 0x200000707b70028, negotiated timeout = 40000

2024-04-08 17:44:53.794 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[5930]ms

2024-04-08 17:44:53.795 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it's tasks.

2024-04-08 17:44:57.857 [job-0] INFO StandAloneJobContainerCommunicator - Total 4 records, 29 bytes | Speed 2B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

2024-04-08 17:44:57.858 [job-0] INFO AbstractScheduler - Scheduler accomplished all tasks.

2024-04-08 17:44:57.858 [job-0] INFO JobContainer - DataX Writer.Job [hbase11xwriter] do post work.

2024-04-08 17:44:57.859 [job-0] INFO JobContainer - DataX Reader.Job [mysqlreader] do post work.

2024-04-08 17:44:57.859 [job-0] INFO JobContainer - DataX jobId [0] completed successfully.

2024-04-08 17:44:57.862 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: /opt/module/datax/hook

2024-04-08 17:44:57.866 [job-0] INFO JobContainer -

[total cpu info] =>

averageCpu | maxDeltaCpu | minDeltaCpu

-1.00% | -1.00% | -1.00%

[total gc info] =>

NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime

PS MarkSweep | 1 | 1 | 1 | 0.120s | 0.120s | 0.120s

PS Scavenge | 1 | 1 | 1 | 0.095s | 0.095s | 0.095s

2024-04-08 17:44:57.867 [job-0] INFO JobContainer - PerfTrace not enable!

2024-04-08 17:44:57.868 [job-0] INFO StandAloneJobContainerCommunicator - Total 4 records, 29 bytes | Speed 2B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

2024-04-08 17:44:57.876 [job-0] INFO JobContainer -

任务启动时刻 : 2024-04-08 17:44:45

任务结束时刻 : 2024-04-08 17:44:57

任务总计耗时 : 12s

任务平均流量 : 2B/s

记录写入速度 : 0rec/s

读出记录总数 : 4

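读写失败总数 : 0

3. Summary

This took a whole afternoon. ヾ(◍°∇°◍)ノ゙ Keep going!

Working from the reference article "datax,mysql和hbase的相互导入" and with help from ChatGPT, it took about 4 hours to put this small example together.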

相关文章:

Datax,hbase与mysql数据相互同步

参考文章:datax mysql 和hbase的 相互导入 目录 0、软件版本说明 1、hbase数据同步至mysql 1.1、hbase数据 1.2、mysql数据 1.3、json脚本(hbase2mysql.json) 1.4、同步成功日志 2、mysql数据同步至hbase 1.1、hbase数据 1.2、mysql…...

ubuntu spdlog 封装成c++类使用

安装及编译方法:ubuntu spdlog 日志安装及使用_spdlog_logger_info-CSDN博客 h文件: #ifndef LOGGING_H #define LOGGING_H#include <iostream> #include <cstring> #include <sstream> #include <string> #include <memor…...

【C语言】——字符串函数的使用与模拟实现(上)

【C语言】——字符串函数 前言一、 s t r l e n strlen strlen 函数1.1、函数功能1.2、函数的使用1.3、函数的模拟实现(1)计数法(2)递归法(3)指针 - 指针 二、 s t r c p y strcpy strcpy 函数2.1、函数功能…...

数据库(1)

目录 1.什么是事务?事务的基本特性ACID? 2.数据库中并发一致性问题? 3.数据的隔离等级? 4.ACID靠什么保证的呢? 5.SQL优化的实践经验? 1.什么是事务?事务的基本特性ACID? 事务指…...

VirtualBox - 与 Win10 虚拟机 与 宿主机 共享文件

原文链接 https://www.cnblogs.com/xy14/p/10427353.html 1. 概述 需要在 宿主机 和 虚拟机 之间交换文件复制粘贴 貌似不太好使 2. 问题 设置了共享文件夹之后, 找不到目录 3. 环境 宿主机 OS Win10开启了 网络发现 略虚拟机 OS Win10开启了 网络发现 略Virtualbox 6 4…...

深入浅出 useEffect:React 函数组件中的副作用处理详解

useEffect 是 React 中的一个钩子函数,用于处理函数组件中的副作用操作,如发送网络请求、订阅消息、手动修改 DOM 等。下面是 useEffect 的用法总结: 基本用法 import React, { useState, useEffect } from react;function Example() {cons…...

《QT实用小工具·十九》回车跳转到不同的编辑框

1、概述 源码放在文章末尾 该项目实现通过回车键让光标从一个编辑框跳转到另一个编辑框,下面是demo演示: 项目部分代码如下: #ifndef WIDGET_H #define WIDGET_H#include <QWidget>namespace Ui { class Widget; }class Widget : p…...

基本的数据类型在16位、32位和64位机上所占的字节大小

1、目前常用的机器都是32位和64位的,但是有时候会考虑16位机。总结一下在三种位数下常用的数据类型所占的字节大小。 数据类型16位(byte)32位(byte)64位(byte)取值范围char111-128 ~ 127unsigned char1110 ~ 255short int / short222-32768~32767unsigned short222…...

关注招聘 关注招聘 关注招聘

🔥关注招聘 🔥关注招聘 🔥关注招聘 🔥开源产品: 1.农业物联网平台开源版 2.充电桩系统开源版 3.GPU池化软件(AI人工智能训练平台/推理平台) 开源版 产品销售: 1.农业物联网平台企业版 2.充电桩系统企业…...

Django框架设计原理

相信大多数的Web开发者对于MVC(Model、View、Controller)设计模式都不陌生,该设计模式已经成为Web框架中一种事实上的标准了,Django框架自然也是一个遵循MVC设计模式的框架。不过从严格意义上讲,Django框架采用了一种更…...

Linux ARM平台开发系列讲解(QEMU篇) 1.2 新添加一个Linux kernel设备树

1. 概述 上一章节我们利用QEMU成功启动了Linux kernel,但是细心的小伙伴就会发现,我们用默认的defconfig是没有找到设备树源文件的,但是又发现kernel启动时候它使用了设备树riscv-virtio,qemu,这是因为qemu用了一个默认的设备树文件,该章节呢我们就把这个默认的设备树文件…...

OSPF动态路由实验(思科)

华为设备参考: 一,技术简介 OSPF(Open Shortest Path First)是一种内部网关协议,主要用于在单一自治系统内决策路由。它是一种基于链路状态的路由协议,通过链路状态路由算法来实现动态路由选择。 OSPF的…...

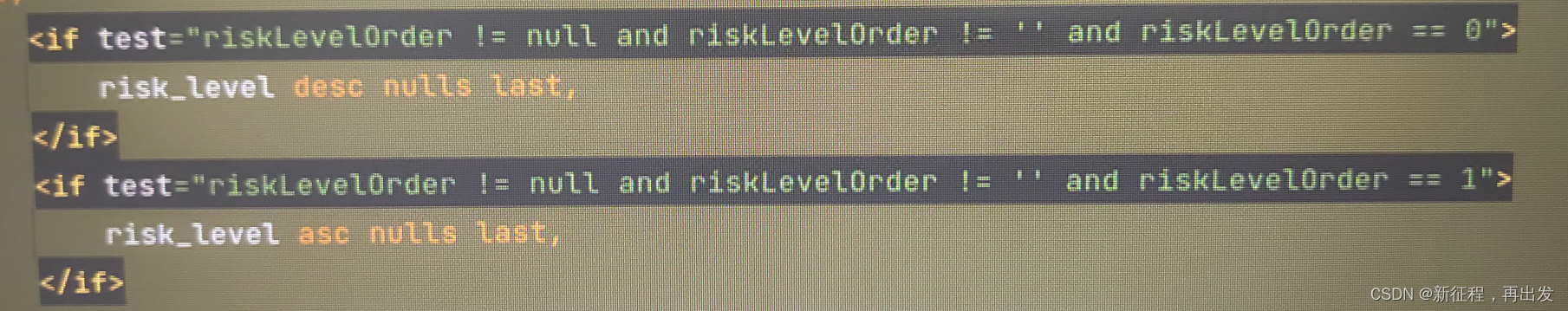

MyBatis 等类似的 XML 映射文件中,当传入的参数为空字符串时,<if> 标签可能会导致 SQL 语句中的条件判断出现意外结果。

问题 传入的参数为空字符串,但还是根据参数查询了。 原因 在 XML 中使用 标签进行条件判断时,需要明确理解其行为。在 MyBatis 等类似的 XML 映射文件中, 标签通常用于动态拼接 SQL 语句的条件部分。当传入的参数 riskLevel 为空字符串时…...

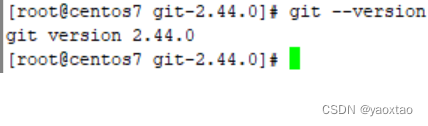

git的安装

git的安装 在CentOS系统上安装git时,我们可以选择yum安装或者源码编译安装两种方式。Yum的安装方式的好处是比较简单,直接输入”yum install git”命令即可。但是Yum的安装的话,不好控制安装git的版本。如果我们想选择安装git的版本…...

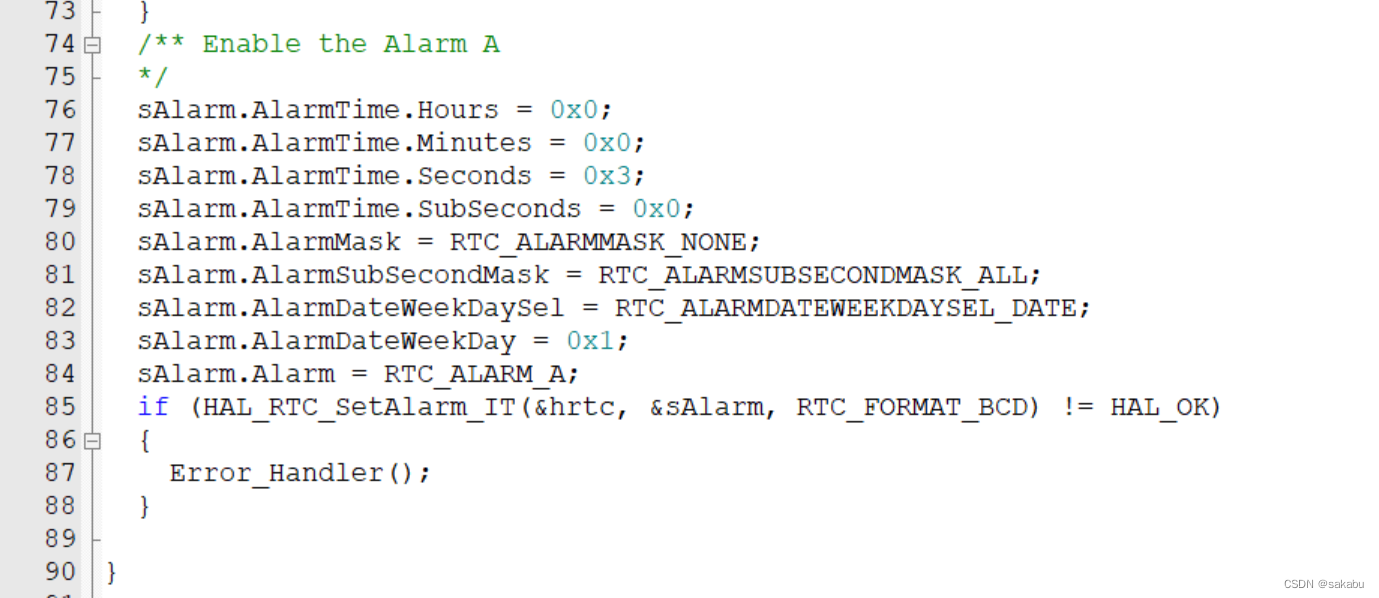

蓝桥杯嵌入式模板(cubemxkeil5)

LED 引脚PC8~PC15,默认高电平(灭)。 此外还要配置PD2为输出引脚(控制LED锁存) ,默认低电平(锁住)!!! #include "led.h"void led_disp…...

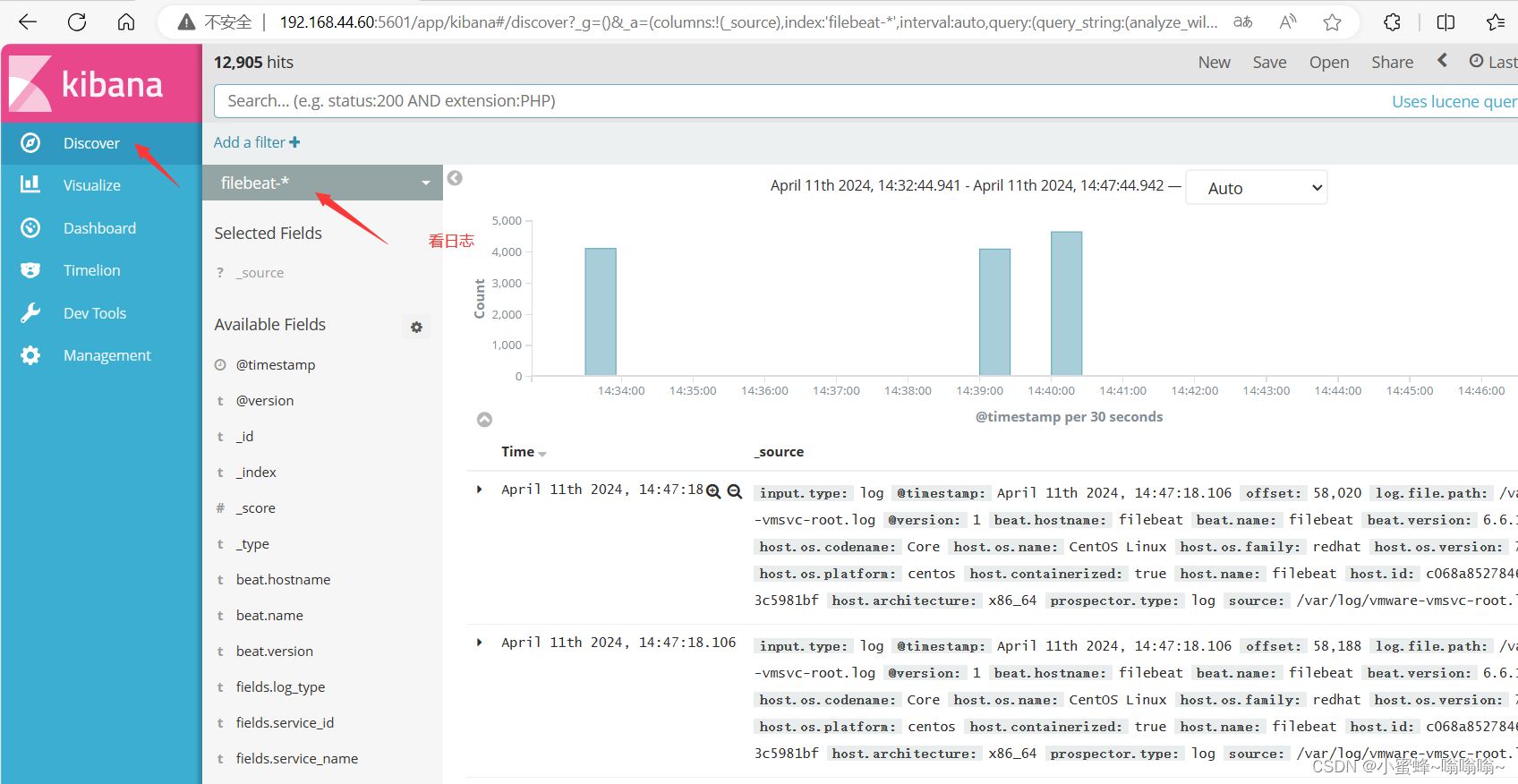

ELFK (Filebeat+ELK)日志分析系统

一. 相关介绍 Filebeat:轻量级的开源日志文件数据搜集器。通常在需要采集数据的客户端安装 Filebeat,并指定目录与日志格式,Filebeat 就能快速收集数据,并发送给 logstash 进或是直接发给 Elasticsearch 存储,性能上相…...

)

HttpClient、OKhttp、RestTemplate接口调用对比( Java HTTP 客户端)

文章目录 HttpClient、OKhttp、RestTemplate接口调用对比HttpClientOkHttprestTemplate HttpClient、OKhttp、RestTemplate接口调用对比 HttpClient、OkHttp 和 RestTemplate 是三种常用的 Java HTTP 客户端库,它们都可以用于发送 HTTP 请求和接收 HTTP 响应&#…...

[旅游] 景区排队上厕所

人有三急,急中最急是上个厕所要排队,而且人还不少!这样就需要做一个提前量的预测,万一提前量的预测,搞得不当,非得憋出膀光炎,或者尿裤子。尤其是女厕所太少!另外一点是儿童根本就没…...

三 maven的依赖管理

一 maven依赖管理 Maven 依赖管理是 Maven 软件中最重要的功能之一。Maven 的依赖管理能够帮助开发人员自动解决软件包依赖问题,使得开发人员能够轻松地将其他开发人员开发的模块或第三方框架集成到自己的应用程序或模块中,避免出现版本冲突和依赖缺失等…...

iperf3 网络性能测试

iperf3测试 1、iperf3简介 iperf3是一个主动测试网络带宽的工具,可以测试iTCP、UDP、SCTP等网络带宽;可以通过参数修改网络协议、缓冲区、测试时间、数据大小等,每个测试结果会得出吞吐量、带宽、重传数、丢包数等测试结果 2、参数详解 通…...

)

云计算——弹性云计算器(ECS)

弹性云服务器:ECS 概述 云计算重构了ICT系统,云计算平台厂商推出使得厂家能够主要关注应用管理而非平台管理的云平台,包含如下主要概念。 ECS(Elastic Cloud Server):即弹性云服务器,是云计算…...

【Linux】C语言执行shell指令

在C语言中执行Shell指令 在C语言中,有几种方法可以执行Shell指令: 1. 使用system()函数 这是最简单的方法,包含在stdlib.h头文件中: #include <stdlib.h>int main() {system("ls -l"); // 执行ls -l命令retu…...

生成 Git SSH 证书

🔑 1. 生成 SSH 密钥对 在终端(Windows 使用 Git Bash,Mac/Linux 使用 Terminal)执行命令: ssh-keygen -t rsa -b 4096 -C "your_emailexample.com" 参数说明: -t rsa&#x…...

Java 加密常用的各种算法及其选择

在数字化时代,数据安全至关重要,Java 作为广泛应用的编程语言,提供了丰富的加密算法来保障数据的保密性、完整性和真实性。了解这些常用加密算法及其适用场景,有助于开发者在不同的业务需求中做出正确的选择。 一、对称加密算法…...

HTML前端开发:JavaScript 常用事件详解

作为前端开发的核心,JavaScript 事件是用户与网页交互的基础。以下是常见事件的详细说明和用法示例: 1. onclick - 点击事件 当元素被单击时触发(左键点击) button.onclick function() {alert("按钮被点击了!&…...

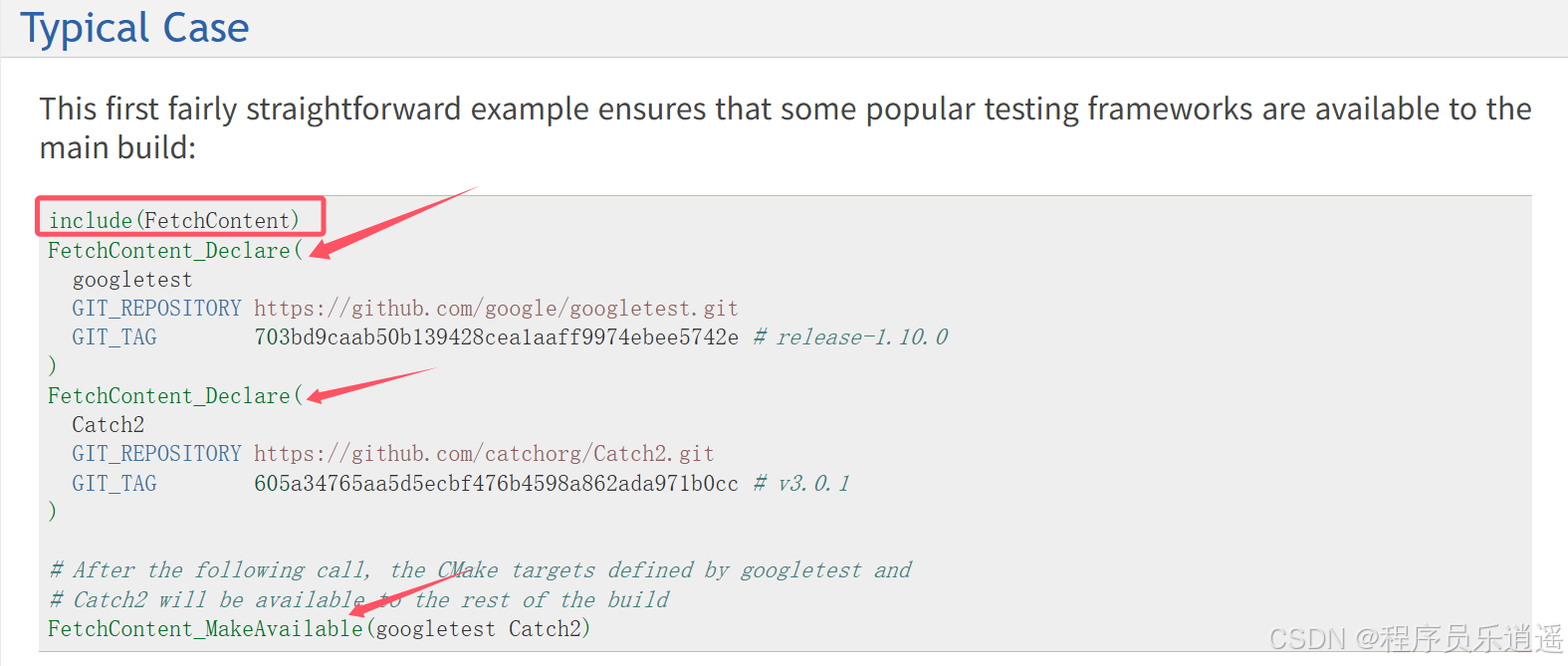

CMake 从 GitHub 下载第三方库并使用

有时我们希望直接使用 GitHub 上的开源库,而不想手动下载、编译和安装。 可以利用 CMake 提供的 FetchContent 模块来实现自动下载、构建和链接第三方库。 FetchContent 命令官方文档✅ 示例代码 我们将以 fmt 这个流行的格式化库为例,演示如何: 使用 FetchContent 从 GitH…...

在WSL2的Ubuntu镜像中安装Docker

Docker官网链接: https://docs.docker.com/engine/install/ubuntu/ 1、运行以下命令卸载所有冲突的软件包: for pkg in docker.io docker-doc docker-compose docker-compose-v2 podman-docker containerd runc; do sudo apt-get remove $pkg; done2、设置Docker…...

AspectJ 在 Android 中的完整使用指南

一、环境配置(Gradle 7.0 适配) 1. 项目级 build.gradle // 注意:沪江插件已停更,推荐官方兼容方案 buildscript {dependencies {classpath org.aspectj:aspectjtools:1.9.9.1 // AspectJ 工具} } 2. 模块级 build.gradle plu…...

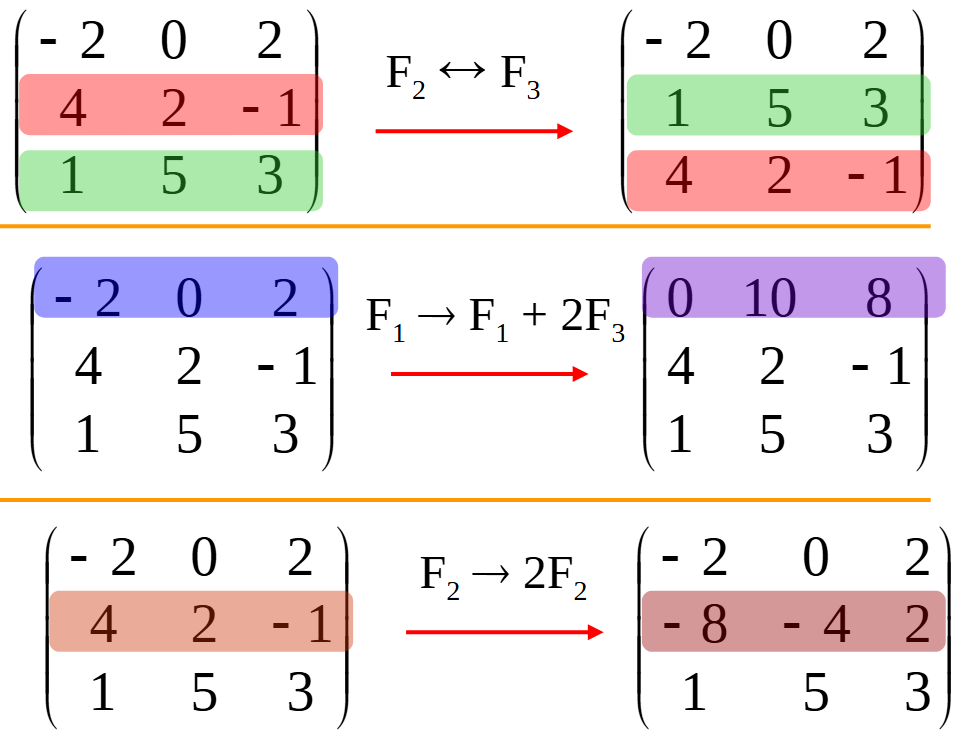

使用 SymPy 进行向量和矩阵的高级操作

在科学计算和工程领域,向量和矩阵操作是解决问题的核心技能之一。Python 的 SymPy 库提供了强大的符号计算功能,能够高效地处理向量和矩阵的各种操作。本文将深入探讨如何使用 SymPy 进行向量和矩阵的创建、合并以及维度拓展等操作,并通过具体…...

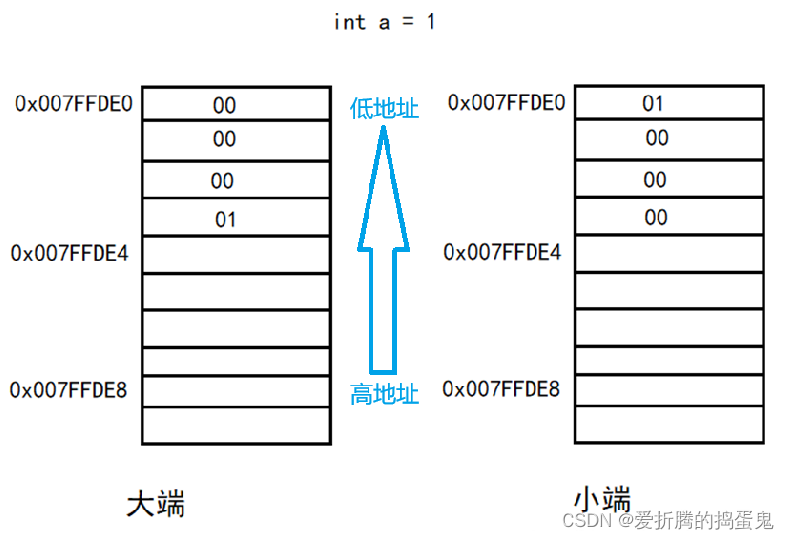

Java求职者面试指南:计算机基础与源码原理深度解析

Java求职者面试指南:计算机基础与源码原理深度解析 第一轮提问:基础概念问题 1. 请解释什么是进程和线程的区别? 面试官:进程是程序的一次执行过程,是系统进行资源分配和调度的基本单位;而线程是进程中的…...