Using ceph-csi to expose CephFS as a Kubernetes StorageClass

Background

The three-node Ceph cluster already serves as object storage. The plan is to also use CephFS to replace the existing nfs-storageclass in k8s.

Approach

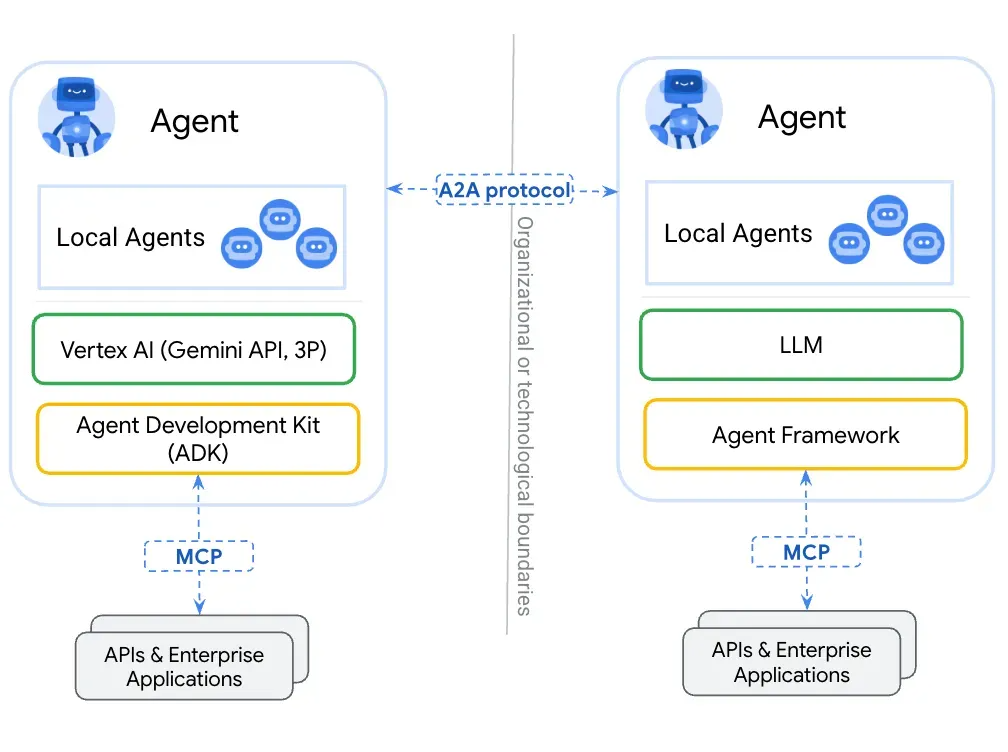

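The overall implementation follows the official Ceph CSI project. This environment is ARM-based: both Ceph and k8s run on ARM. In my testing ceph-csi works on ARM as well.

CephFS can be mounted in two ways. According to the source code, one is a user-space mount through FUSE and the other is a kernel-space mount through mount.

My kernel version is 4.15. On kernels older than 4.17, ceph-csi does not load the kernel mounter by default (the quota feature may be unsupported), but an option can force the kernel-space mount to improve performance.

The relevant source code: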

func LoadAvailableMounters(conf *util.Config) error {
	// #nosec
	fuseMounterProbe := exec.Command("ceph-fuse", "--version")
	// #nosec
	kernelMounterProbe := exec.Command("mount.ceph")

	err := kernelMounterProbe.Run()
	if err != nil {
		log.ErrorLogMsg("failed to run mount.ceph %v", err)
	} else {
		// fetch the current running kernel info
		release, kvErr := util.GetKernelVersion()
		if kvErr != nil {
			return kvErr
		}

		// This is where the code checks whether the kernel-space CephFS
		// mount has been force-enabled on an older kernel.
		if conf.ForceKernelCephFS || util.CheckKernelSupport(release, quotaSupport) {
			log.DefaultLog("loaded mounter: %s", volumeMounterKernel)
			availableMounters = append(availableMounters, volumeMounterKernel)
		} else {
			log.DefaultLog("kernel version < 4.17 might not support quota feature, hence not loading kernel client")
		}
	}

	err = fuseMounterProbe.Run()
	if err != nil {
		log.ErrorLogMsg("failed to run ceph-fuse %v", err)
	} else {
		log.DefaultLog("loaded mounter: %s", volumeMounterFuse)
		availableMounters = append(availableMounters, volumeMounterFuse)
	}

	if len(availableMounters) == 0 {
		return errors.New("no ceph mounters found on system")
	}

	return nil
}

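The ForceKernelCephFS option is described in the deployment document (deploy-cephfs.md). On the command line it is set with --forcecephkernelclient, as in the node plugin manifest later in this post.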
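To make the decision concrete, here is a minimal Go sketch of the gate that decides whether the kernel mounter is loaded. It is not the ceph-csi code: kernelMounterLoaded and parseMajorMinor are hypothetical names, and the sketch assumes that only the 4.17 threshold from the log message matters, whereas the real util.CheckKernelSupport performs a more detailed comparison.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parseMajorMinor extracts the major and minor numbers from a kernel
// release string such as "4.15.0-70-generic".
func parseMajorMinor(release string) (int, int, error) {
	parts := strings.SplitN(release, ".", 3)
	if len(parts) < 2 {
		return 0, 0, fmt.Errorf("unexpected kernel release %q", release)
	}
	major, err := strconv.Atoi(parts[0])
	if err != nil {
		return 0, 0, err
	}
	minor, err := strconv.Atoi(parts[1])
	if err != nil {
		return 0, 0, err
	}
	return major, minor, nil
}

// kernelMounterLoaded is a simplified stand-in for the condition
// conf.ForceKernelCephFS || util.CheckKernelSupport(release, quotaSupport).
// Assumption: only the 4.17 threshold is checked here.
func kernelMounterLoaded(release string, force bool) (bool, error) {
	if force {
		return true, nil
	}
	major, minor, err := parseMajorMinor(release)
	if err != nil {
		return false, err
	}
	return major > 4 || (major == 4 && minor >= 17), nil
}

func main() {
	for _, force := range []bool{false, true} {
		loaded, err := kernelMounterLoaded("4.15.0-70-generic", force)
		if err != nil {
			fmt.Println("error:", err)
			return
		}
		fmt.Printf("force=%v kernel mounter loaded=%v\n", force, loaded)
	}
}
```

With the 4.15 kernel used here, the sketch reports the kernel mounter as loaded only when the force flag is set, which matches the behaviour seen in the plugin logs.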

Environment

# OS version

root@k8s-master01:~# cat /etc/os-release

NAME="Ubuntu"

VERSION="18.04.3 LTS (Bionic Beaver)"

ID=ubuntu

ID_LIKE=debian

PRETTY_NAME="Ubuntu 18.04.3 LTS"

VERSION_ID="18.04"

HOME_URL="https://www.ubuntu.com/"

SUPPORT_URL="https://help.ubuntu.com/"

BUG_REPORT_URL="https://bugs.launchpad.net/ubuntu/"

PRIVACY_POLICY_URL="https://www.ubuntu.com/legal/terms-and-policies/privacy-policy"

VERSION_CODENAME=bionic

UBUNTU_CODENAME=bionic

# Kernel version

root@k8s-master01:~# uname -a

Linux k8s-master01 4.15.0-70-generic #79-Ubuntu SMP Tue Nov 12 10:36:10 UTC 2019 aarch64 aarch64 aarch64 GNU/Linux

# k8s version

root@k8s-master01:~# kubectl version

Client Version: v1.30.3

Kustomize Version: v5.0.4-0.20230601165947-6ce0bf390ce3

Server Version: v1.30.0

# Ceph version

root@ceph-node01:/etc/ceph-cluster# ceph -s
  cluster:
    id:     5a6fdfb7-81a1-40f6-97b7-c92f96de9ac5
    health: HEALTH_WARN
            mons are allowing insecure global_id reclaim
            too many PGs per OSD (289 > max 250)
  services:
    mon: 3 daemons, quorum ceph-node01,ceph-node02,ceph-node03 (age 2w)
    mgr: ceph-node01(active, since 12d)
    mds: 1/1 daemons up
    osd: 3 osds: 3 up (since 2w), 3 in (since 2w)
    rgw: 2 daemons active (2 hosts, 1 zones)
  data:
    volumes: 1/1 healthy
    pools:   10 pools, 289 pgs
    objects: 353 objects, 95 MiB
    usage:   3.1 GiB used, 3.0 TiB / 3.0 TiB avail
    pgs:     289 active+clean
  io:
    client:   4.0 KiB/s rd, 0 B/s wr, 3 op/s rd, 2 op/s wr

root@ceph-node01:/etc/ceph-cluster# ceph --version

ceph version 16.2.15 (618f440892089921c3e944a991122ddc44e60516) pacific (stable)

Implementation

Ceph operations

# Get the admin key

ceph auth get-key client.admin

# Create the filesystem

ceph fs new k8scephfs cephfs-metadata cephfs-data

ceph osd pool set cephfs-data bulk true

# Create the subvolumegroup

ceph fs subvolumegroup create k8scephfs csi

# List the subvolume groups (the filesystem name is required)

ceph fs subvolumegroup ls k8scephfs

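Instead of admin, you can also create your own user and grant it the required permissions.

Kubernetes operations

Clone the repository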

cd /data/k8s/ceph-fs-csi

git clone https://github.com/ceph/ceph-csi.git

Follow this document:

https://github.com/ceph/ceph-csi/blob/devel/docs/deploy-cephfs.md

I used the image quay.io/cephcsi/cephcsi:v3.12.0.

The configuration of each deployment file follows, in the order the files are applied.

Create the CSIDriver object

kubectl create -f csidriver.yaml

cat csidriver.yaml
# /!\ DO NOT MODIFY THIS FILE
#
# This file has been automatically generated by Ceph-CSI yamlgen.
# The source for the contents can be found in the api/deploy directory, make
# your modifications there.
#
---
apiVersion: storage.k8s.io/v1
kind: CSIDriver
metadata:
  name: "cephfs.csi.ceph.com"
spec:
  attachRequired: false
  podInfoOnMount: false
  fsGroupPolicy: File
  seLinuxMount: true

Create the RBAC permissions required by the node plugin and the provisioner

kubectl create -f csi-provisioner-rbac.yaml

kubectl create -f csi-nodeplugin-rbac.yaml

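Note: change every namespace: field to the namespace you are deploying into. Otherwise the later provisioner and nodeplugin deployments fail with errors such as the serviceaccount not being found.

Deploy the ConfigMap required by the CSI plugin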

kubectl create -f csi-config-map.yaml

cat csi-config-map.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: kube-system
  name: "ceph-csi-config"
# Lets see the different configuration under config.json key.
# Note: leave netNamespaceFilePath empty, otherwise the plugin reports that
# the network namespace cannot be found. Keep this note outside config.json,
# because JSON does not allow comments.
data:
  config.json: |-
    [
      {
        "clusterID": "xxx-81a1-40f6-97b7-xx",
        "monitors": [
          "10.17.x.x:6789",
          "10.17.x.x:6789",
          "10.17.x.x:6789"
        ],
        "cephFS": {
          "subvolumeGroup": "csi",
          "kernelMountOptions": "",
          "netNamespaceFilePath": "",
          "fuseMountOptions": ""
        },
        "readAffinity": {
          "enabled": false,
          "crushLocationLabels": []
        }
      }
    ]
  cluster-mapping.json: |-
    []

Most of the parameters are explained in the comments of the sample file.

Create the ceph-config ConfigMap
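Because config.json is parsed as JSON, any annotation left inside the payload makes it unreadable. The following Go sketch shows one way to sanity-check the payload before applying the ConfigMap. The clusterInfo struct and parseClusters function are hypothetical and cover only a few of the fields shown above; this is not how ceph-csi itself loads the file.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// clusterInfo mirrors only a subset of the fields used in config.json.
type clusterInfo struct {
	ClusterID string   `json:"clusterID"`
	Monitors  []string `json:"monitors"`
	CephFS    struct {
		SubvolumeGroup       string `json:"subvolumeGroup"`
		NetNamespaceFilePath string `json:"netNamespaceFilePath"`
	} `json:"cephFS"`
}

// parseClusters decodes the config.json payload and rejects entries
// that lack a clusterID or a monitor list.
func parseClusters(raw string) ([]clusterInfo, error) {
	var clusters []clusterInfo
	if err := json.Unmarshal([]byte(raw), &clusters); err != nil {
		return nil, fmt.Errorf("config.json is not valid JSON: %w", err)
	}
	for i, c := range clusters {
		if c.ClusterID == "" || len(c.Monitors) == 0 {
			return nil, fmt.Errorf("entry %d: clusterID and monitors are required", i)
		}
	}
	return clusters, nil
}

// goodConfig is a trimmed copy of the payload with placeholder values.
const goodConfig = `[{"clusterID":"xxx","monitors":["10.17.x.x:6789"],
"cephFS":{"subvolumeGroup":"csi","netNamespaceFilePath":""}}]`

// commentedConfig carries a '#' annotation inside the JSON, which is invalid.
const commentedConfig = `[{"clusterID":"xxx","monitors":["10.17.x.x:6789"],
"cephFS":{"netNamespaceFilePath":"", # leave this empty
"subvolumeGroup":"csi"}}]`

func main() {
	for name, raw := range map[string]string{"good": goodConfig, "commented": commentedConfig} {
		clusters, err := parseClusters(raw)
		if err != nil {
			fmt.Printf("%s: rejected: %v\n", name, err)
			continue
		}
		fmt.Printf("%s: %d cluster(s), subvolumeGroup=%q\n",
			name, len(clusters), clusters[0].CephFS.SubvolumeGroup)
	}
}
```

Running a check like this against the text under the config.json key catches stray comments and missing monitors before the plugin pods start.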

kubectl apply -f ceph-conf.yaml

cat ceph-conf.yaml

---

# This is a sample configmap that helps define a Ceph configuration as required

# by the CSI plugins.
# Sample ceph.conf available at
# https://github.com/ceph/ceph/blob/master/src/sample.ceph.conf Detailed
# documentation is available at
# https://docs.ceph.com/en/latest/rados/configuration/ceph-conf/
apiVersion: v1
kind: ConfigMap
data:
  ceph.conf: |
    [global]
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx
  # keyring is a required key and its value should be empty
  keyring: |
metadata:
  name: ceph-config

Create the Ceph secret

kubectl apply -f secret.yaml

cat secret.yaml

---

apiVersion: v1

kind: Secret

metadata:
  name: csi-cephfs-secret
  namespace: kube-system
stringData:
  # Required for statically provisioned volumes
  userID: k8s_cephfs_user
  userKey: AQA8DwlnoxxrepFw==
  # Required for dynamically provisioned volumes
  adminID: admin
  adminKey: AQDVCwVnKxxzVdupC5QA==
  # Encryption passphrase
  encryptionPassphrase: test_passphrase

Deploy the CSI provisioner

---
kind: Service
apiVersion: v1
metadata:
  name: csi-cephfsplugin-provisioner
  labels:
    app: csi-metrics
spec:
  selector:
    app: csi-cephfsplugin-provisioner
  ports:
    - name: http-metrics
      port: 8080
      protocol: TCP
      targetPort: 8681
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: csi-cephfsplugin-provisioner
spec:
  selector:
    matchLabels:
      app: csi-cephfsplugin-provisioner
  replicas: 3
  template:
    metadata:
      labels:
        app: csi-cephfsplugin-provisioner
    spec:
      serviceAccountName: cephfs-csi-provisioner
      priorityClassName: system-cluster-critical
      containers:
        - name: csi-cephfsplugin
          # for stable functionality replace canary with latest release version
          image: registry.xx.local/csi/cephcsi:v3.12.0
          args:
            - "--nodeid=$(NODE_ID)"
            - "--type=cephfs"
            - "--controllerserver=true"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--v=5"
            - "--drivername=cephfs.csi.ceph.com"
            - "--pidlimit=-1"
            - "--enableprofiling=false"
            - "--setmetadata=true"
          env:
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: NODE_ID
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: CSI_ENDPOINT
              value: unix:///csi/csi-provisioner.sock
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            # - name: KMS_CONFIGMAP_NAME
            #   value: encryptionConfig
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
            - name: host-sys
              mountPath: /sys
            - name: lib-modules
              mountPath: /lib/modules
              readOnly: true
            - name: host-dev
              mountPath: /dev
            - name: ceph-config
              mountPath: /etc/ceph/
            - name: ceph-csi-config
              mountPath: /etc/ceph-csi-config/
            - name: keys-tmp-dir
              mountPath: /tmp/csi/keys
        - name: csi-provisioner
          image: registry.xx.local/csi/csi-provisioner:v5.0.1
          args:
            - "--csi-address=$(ADDRESS)"
            - "--v=1"
            - "--timeout=150s"
            - "--leader-election=true"
            - "--retry-interval-start=500ms"
            - "--feature-gates=HonorPVReclaimPolicy=true"
            - "--prevent-volume-mode-conversion=true"
            - "--extra-create-metadata=true"
            - "--http-endpoint=$(POD_IP):8090"
          env:
            - name: ADDRESS
              value: unix:///csi/csi-provisioner.sock
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          imagePullPolicy: "IfNotPresent"
          ports:
            - containerPort: 8090
              name: http-endpoint
              protocol: TCP
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: csi-resizer
          image: registry.xx.local/csi/csi-resizer:v1.11.1
          args:
            - "--csi-address=$(ADDRESS)"
            - "--v=1"
            - "--timeout=150s"
            - "--leader-election"
            - "--retry-interval-start=500ms"
            - "--handle-volume-inuse-error=false"
            - "--feature-gates=RecoverVolumeExpansionFailure=true"
            - "--http-endpoint=$(POD_IP):8091"
          env:
            - name: ADDRESS
              value: unix:///csi/csi-provisioner.sock
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          imagePullPolicy: "IfNotPresent"
          ports:
            - containerPort: 8091
              name: http-endpoint
              protocol: TCP
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: csi-snapshotter
          image: registry.xx.local/csi/csi-snapshotter:v8.0.1
          args:
            - "--csi-address=$(ADDRESS)"
            - "--v=1"
            - "--timeout=150s"
            - "--leader-election=true"
            - "--extra-create-metadata=true"
            - "--enable-volume-group-snapshots=true"
            - "--http-endpoint=$(POD_IP):8092"
          env:
            - name: ADDRESS
              value: unix:///csi/csi-provisioner.sock
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          imagePullPolicy: "IfNotPresent"
          ports:
            - containerPort: 8092
              name: http-endpoint
              protocol: TCP
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: liveness-prometheus
          image: registry.xx.local/csi/cephcsi:v3.12.0
          args:
            - "--type=liveness"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--metricsport=8681"
            - "--metricspath=/metrics"
            - "--polltime=60s"
            - "--timeout=3s"
          env:
            - name: CSI_ENDPOINT
              value: unix:///csi/csi-provisioner.sock
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          ports:
            - containerPort: 8681
              name: http-metrics
              protocol: TCP
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
          imagePullPolicy: "IfNotPresent"
      volumes:
        - name: socket-dir
          emptyDir: {
            medium: "Memory"
          }
        - name: host-sys
          hostPath:
            path: /sys
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: host-dev
          hostPath:
            path: /dev
        - name: ceph-config
          configMap:
            name: ceph-config
        - name: ceph-csi-config
          configMap:
            name: ceph-csi-config
        - name: keys-tmp-dir
          emptyDir: {
            medium: "Memory"
          }

Deploy the CSI CephFS driver (node plugin)

kubectl create -f csi-cephfsplugin.yaml

cat csi-cephfsplugin.yaml
---
kind: DaemonSet
apiVersion: apps/v1
metadata:
  name: csi-cephfsplugin
spec:
  selector:
    matchLabels:
      app: csi-cephfsplugin
  template:
    metadata:
      labels:
        app: csi-cephfsplugin
    spec:
      serviceAccountName: cephfs-csi-nodeplugin
      priorityClassName: system-node-critical
      hostNetwork: true
      hostPID: true
      # to use e.g. Rook orchestrated cluster, and mons' FQDN is
      # resolved through k8s service, set dns policy to cluster first
      dnsPolicy: ClusterFirstWithHostNet
      containers:
        - name: csi-cephfsplugin
          securityContext:
            privileged: true
            capabilities:
              add: ["SYS_ADMIN"]
            allowPrivilegeEscalation: true
          # for stable functionality replace canary with latest release version
          image: registry.xx.local/csi/cephcsi:v3.12.0
          args:
            - "--nodeid=$(NODE_ID)"
            - "--type=cephfs"
            - "--nodeserver=true"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--v=5"
            - "--drivername=cephfs.csi.ceph.com"
            - "--enableprofiling=false"
            - "--forcecephkernelclient=true"  # force the kernel mounter
            # If topology based provisioning is desired, configure required
            # node labels representing the nodes topology domain
            # and pass the label names below, for CSI to consume and advertise
            # its equivalent topology domain
            # - "--domainlabels=failure-domain/region,failure-domain/zone"
            #
            # Options to enable read affinity.
            # If enabled Ceph CSI will fetch labels from kubernetes node and
            # pass `read_from_replica=localize,crush_location=type:value` during
            # CephFS mount command. refer:
            # https://docs.ceph.com/en/latest/man/8/rbd/#kernel-rbd-krbd-options
            # for more details.
            # - "--enable-read-affinity=true"
            # - "--crush-location-labels=topology.io/zone,topology.io/rack"
          env:
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: NODE_ID
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: CSI_ENDPOINT
              value: unix:///csi/csi.sock
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            # - name: KMS_CONFIGMAP_NAME
            #   value: encryptionConfig
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
            - name: mountpoint-dir
              mountPath: /var/lib/kubelet/pods
              mountPropagation: Bidirectional
            - name: plugin-dir
              mountPath: /var/lib/kubelet/plugins
              mountPropagation: "Bidirectional"
            - name: host-sys
              mountPath: /sys
            - name: etc-selinux
              mountPath: /etc/selinux
              readOnly: true
            - name: lib-modules
              mountPath: /lib/modules
              readOnly: true
            - name: host-dev
              mountPath: /dev
            - name: host-mount
              mountPath: /run/mount
            - name: ceph-config
              mountPath: /etc/ceph/
            - name: ceph-csi-config
              mountPath: /etc/ceph-csi-config/
            - name: keys-tmp-dir
              mountPath: /tmp/csi/keys
            - name: ceph-csi-mountinfo
              mountPath: /csi/mountinfo
        - name: driver-registrar
          # This is necessary only for systems with SELinux, where
          # non-privileged sidecar containers cannot access unix domain socket
          # created by privileged CSI driver container.
          securityContext:
            privileged: true
            allowPrivilegeEscalation: true
          image: registry.xx.local/csi/csi-node-driver-registrar:v2.11.1
          args:
            - "--v=1"
            - "--csi-address=/csi/csi.sock"
            - "--kubelet-registration-path=/var/lib/kubelet/plugins/cephfs.csi.ceph.com/csi.sock"
          env:
            - name: KUBE_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
            - name: registration-dir
              mountPath: /registration
        - name: liveness-prometheus
          securityContext:
            privileged: true
            allowPrivilegeEscalation: true
          image: registry.xx.local/csi/cephcsi:v3.12.0
          args:
            - "--type=liveness"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--metricsport=8681"
            - "--metricspath=/metrics"
            - "--polltime=60s"
            - "--timeout=3s"
          env:
            - name: CSI_ENDPOINT
              value: unix:///csi/csi.sock
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
          imagePullPolicy: "IfNotPresent"
      volumes:
        - name: socket-dir
          hostPath:
            path: /var/lib/kubelet/plugins/cephfs.csi.ceph.com/
            type: DirectoryOrCreate
        - name: registration-dir
          hostPath:
            path: /var/lib/kubelet/plugins_registry/
            type: Directory
        - name: mountpoint-dir
          hostPath:
            path: /var/lib/kubelet/pods
            type: DirectoryOrCreate
        - name: plugin-dir
          hostPath:
            path: /var/lib/kubelet/plugins
            type: Directory
        - name: host-sys
          hostPath:
            path: /sys
        - name: etc-selinux
          hostPath:
            path: /etc/selinux
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: host-dev
          hostPath:
            path: /dev
        - name: host-mount
          hostPath:
            path: /run/mount
        - name: ceph-config
          configMap:
            name: ceph-config
        - name: ceph-csi-config
          configMap:
            name: ceph-csi-config
        - name: keys-tmp-dir
          emptyDir: {
            medium: "Memory"
          }
        - name: ceph-csi-mountinfo
          hostPath:
            path: /var/lib/kubelet/plugins/cephfs.csi.ceph.com/mountinfo
            type: DirectoryOrCreate
---
# This is a service to expose the liveness metrics
apiVersion: v1
kind: Service
metadata:
  name: csi-metrics-cephfsplugin
  labels:
    app: csi-metrics
spec:
  ports:
    - name: http-metrics
      port: 8080
      protocol: TCP
      targetPort: 8681
  selector:
    app: csi-cephfsplugin

Verify that every component is running and that its logs look normal

root@k8s-master01:~# kubectl get csidriver

NAME ATTACHREQUIRED PODINFOONMOUNT STORAGECAPACITY TOKENREQUESTS REQUIRESREPUBLISH MODES AGE

cephfs.csi.ceph.com false false false <unset> false Persistent 12d

csi.tigera.io true true false <unset> false Ephemeral 77d

root@k8s-master01:~# kubectl get pod -o wide |grep csi-cephfs

csi-cephfs-demo-deployment-76cdd9b784-2gqwf 1/1 Running 0 107m 10.244.19.115 10.17.3.9 <none> <none>

csi-cephfs-demo-deployment-76cdd9b784-ncwbs 1/1 Running 0 107m 10.244.3.191 10.17.3.186 <none> <none>

csi-cephfs-demo-deployment-76cdd9b784-qsk75 1/1 Running 0 107m 10.244.14.119 10.17.3.4 <none> <none>

csi-cephfsplugin-4n6g5 3/3 Running 0 154m 10.17.3.3 10.17.3.3 <none> <none>

csi-cephfsplugin-4nbsm 3/3 Running 0 116m 10.17.3.186 10.17.3.186 <none> <none>

csi-cephfsplugin-5p77f 3/3 Running 0 154m 10.17.3.20 k8s-worker02 <none> <none>

csi-cephfsplugin-867dx 3/3 Running 0 154m 10.17.3.62 k8s-worker03 <none> <none>

csi-cephfsplugin-9cdzw 3/3 Running 0 154m 10.17.3.11 10.17.3.11 <none> <none>

csi-cephfsplugin-chnx5 3/3 Running 0 154m 10.17.3.5 10.17.3.5 <none> <none>

csi-cephfsplugin-chrft 3/3 Running 0 154m 10.17.3.58 k8s-worker04 <none> <none>

csi-cephfsplugin-d7nkt 3/3 Running 0 154m 192.168.204.45 192.168.204.45 <none> <none>

csi-cephfsplugin-dgzlg 3/3 Running 0 154m 10.17.3.238 k8s-worker05 <none> <none>

csi-cephfsplugin-dvckd 3/3 Running 0 154m 10.17.3.13 10.17.3.13 <none> <none>

csi-cephfsplugin-f4tqs 3/3 Running 0 154m 10.17.3.83 10.17.3.83 <none> <none>

csi-cephfsplugin-fwnbl 3/3 Running 0 154m 10.17.3.2 10.17.3.2 <none> <none>

csi-cephfsplugin-gzbff 3/3 Running 0 154m 10.17.3.7 10.17.3.7 <none> <none>

csi-cephfsplugin-h82mv 3/3 Running 0 154m 10.17.3.4 10.17.3.4 <none> <none>

csi-cephfsplugin-jfnqd 3/3 Running 0 154m 10.17.3.10 10.17.3.10 <none> <none>

csi-cephfsplugin-krgv8 3/3 Running 0 154m 10.17.3.6 10.17.3.6 <none> <none>

csi-cephfsplugin-nsf7h 3/3 Running 0 154m 10.17.3.66 k8s-worker06 <none> <none>

csi-cephfsplugin-provisioner-f96798c85-x6lr6 5/5 Running 0 159m 10.244.23.119 10.17.3.13 <none> <none>

csi-cephfsplugin-rf2v6 3/3 Running 0 154m 10.17.3.9 10.17.3.9 <none> <none>

csi-cephfsplugin-v6h6z 3/3 Running 0 154m 10.17.3.73 10.17.3.73 <none> <none>

csi-cephfsplugin-vctp6 3/3 Running 0 154m 10.17.3.230 k8s-worker01 <none> <none>

csi-cephfsplugin-w2nm9 3/3 Running 0 154m 10.17.3.8 10.17.3.8 <none> <none>

csi-cephfsplugin-x6dcf 3/3 Running 0 154m 10.17.3.12 10.17.3.12 <none> <none>

csi-cephfsplugin-provisioner pod logs

csi-provisioner container logs

2024-10-23T14:35:13.589937869+08:00 I1023 06:35:13.589736 1 feature_gate.go:254] feature gates: {map[HonorPVReclaimPolicy:true]}

2024-10-23T14:35:13.589973529+08:00 I1023 06:35:13.589858 1 csi-provisioner.go:154] Version: v5.0.1

2024-10-23T14:35:13.589979119+08:00 I1023 06:35:13.589868 1 csi-provisioner.go:177] Building kube configs for running in cluster...

2024-10-23T14:35:13.591482965+08:00 I1023 06:35:13.591422 1 common.go:143] "Probing CSI driver for readiness"

2024-10-23T14:35:13.598643852+08:00 I1023 06:35:13.598566 1 csi-provisioner.go:302] CSI driver does not support PUBLISH_UNPUBLISH_VOLUME, not watching VolumeAttachments

2024-10-23T14:35:13.598958433+08:00 I1023 06:35:13.598904 1 csi-provisioner.go:592] ServeMux listening at "10.244.23.119:8090"

2024-10-23T14:35:13.599952096+08:00 I1023 06:35:13.599868 1 leaderelection.go:250] attempting to acquire leader lease kube-system/cephfs-csi-ceph-com...

2024-10-23T14:35:33.530700409+08:00 I1023 06:35:33.530566 1 leaderelection.go:260] successfully acquired lease kube-system/cephfs-csi-ceph-com

2024-10-23T14:35:33.632098107+08:00 I1023 06:35:33.631952 1 controller.go:824] "Starting provisioner controller" component="cephfs.csi.ceph.com_csi-cephfsplugin-provisioner-f96798c85-x6lr6_eb7f43e1-88dc-44e8-ba98-ab90a1d4f049"

2024-10-23T14:35:33.632175357+08:00 I1023 06:35:33.632020 1 clone_controller.go:66] Starting CloningProtection controller

2024-10-23T14:35:33.632293238+08:00 I1023 06:35:33.632099 1 clone_controller.go:82] Started CloningProtection controller

2024-10-23T14:35:33.632324568+08:00 I1023 06:35:33.632135 1 volume_store.go:98] "Starting save volume queue"

2024-10-23T14:35:33.733294954+08:00 I1023 06:35:33.733191 1 controller.go:873] "Started provisioner controller" component="cephfs.csi.ceph.com_csi-cephfsplugin-provisioner-f96798c85-x6lr6_eb7f43e1-88dc-44e8-ba98-ab90a1d4f049"

2024-10-23T14:40:49.765586971+08:00 I1023 06:40:49.765451 1 event.go:389] "Event occurred" object="kube-system/csi-cephfs-pvc" fieldPath="" kind="PersistentVolumeClaim" apiVersion="v1" type="Normal" reason="Provisioning" message="External provisioner is provisioning volume for claim \"kube-system/csi-cephfs-pvc\""

2024-10-23T14:40:49.932347893+08:00 I1023 06:40:49.932255 1 event.go:389] "Event occurred" object="kube-system/csi-cephfs-pvc" fieldPath="" kind="PersistentVolumeClaim" apiVersion="v1" type="Normal" reason="ProvisioningSucceeded" message="Successfully provisioned volume pvc-eba8bff3-5451-42e7-b30b-3b91a8425361"

csi-cephfsplugin container logs

# Mount details and the mounter in use

2024-10-23T14:40:49.770141698+08:00 I1023 06:40:49.770027 1 utils.go:241] ID: 20 Req-ID: pvc-eba8bff3-5451-42e7-b30b-3b91a8425361 GRPC request: {"capacity_range":{"required_bytes":1073741824},"name":"pvc-eba8bff3-5451-42e7-b30b-3b91a8425361","parameters":{"clusterID":"5a6fdfb7-81a1-40f6-97b7-c92f96de9ac5","csi.storage.k8s.io/pv/name":"pvc-eba8bff3-5451-42e7-b30b-3b91a8425361","csi.storage.k8s.io/pvc/name":"csi-cephfs-pvc","csi.storage.k8s.io/pvc/namespace":"kube-system","fsName":"k8scephfs","fuseMountOptions":"debug","mounter":"kernel","pool":"cephfs-data"},"secrets":"***stripped***","volume_capabilities":[{"AccessType":{"Mount":{}},"access_mode":{"mode":5}}]}

2024-10-23T14:35:13.406897197+08:00 I1023 06:35:13.406709 1 cephcsi.go:196] Driver version: v3.12.0 and Git version: 42797edd7e5e640e755a948a018d69c9200b7e60

2024-10-23T14:35:13.406945017+08:00 I1023 06:35:13.406835 1 cephcsi.go:228] Starting driver type: cephfs with name: cephfs.csi.ceph.com

2024-10-23T14:35:13.428314917+08:00 W1023 06:35:13.428215 1 util.go:278] kernel 4.15.0-70-generic does not support required features

2024-10-23T14:35:13.428337647+08:00 I1023 06:35:13.428264 1 volumemounter.go:82] kernel version < 4.17 might not support quota feature, hence not loading kernel client

2024-10-23T14:35:13.455657438+08:00 I1023 06:35:13.454407 1 volumemounter.go:90] loaded mounter: fuse

2024-10-23T14:35:13.455676979+08:00 I1023 06:35:13.454860 1 driver.go:110] Enabling controller service capability: CREATE_DELETE_VOLUME

2024-10-23T14:35:13.455681369+08:00 I1023 06:35:13.454871 1 driver.go:110] Enabling controller service capability: CREATE_DELETE_SNAPSHOT

2024-10-23T14:35:13.455685229+08:00 I1023 06:35:13.454876 1 driver.go:110] Enabling controller service capability: EXPAND_VOLUME

2024-10-23T14:35:13.455688799+08:00 I1023 06:35:13.454881 1 driver.go:110] Enabling controller service capability: CLONE_VOLUME

2024-10-23T14:35:13.455692319+08:00 I1023 06:35:13.454885 1 driver.go:110] Enabling controller service capability: SINGLE_NODE_MULTI_WRITER

2024-10-23T14:35:13.455695719+08:00 I1023 06:35:13.454895 1 driver.go:123] Enabling volume access mode: MULTI_NODE_MULTI_WRITER

2024-10-23T14:35:13.455699429+08:00 I1023 06:35:13.454900 1 driver.go:123] Enabling volume access mode: SINGLE_NODE_WRITER

2024-10-23T14:35:13.455702909+08:00 I1023 06:35:13.454904 1 driver.go:123] Enabling volume access mode: SINGLE_NODE_MULTI_WRITER

2024-10-23T14:35:13.455706409+08:00 I1023 06:35:13.454908 1 driver.go:123] Enabling volume access mode: SINGLE_NODE_SINGLE_WRITER

2024-10-23T14:35:13.455710189+08:00 I1023 06:35:13.454915 1 driver.go:142] Enabling group controller service capability: CREATE_DELETE_GET_VOLUME_GROUP_SNAPSHOT

2024-10-23T14:35:13.455713629+08:00 I1023 06:35:13.455398 1 server.go:117] Listening for connections on address: &net.UnixAddr{Name:"//csi/csi-provisioner.sock", Net:"unix"}

2024-10-23T14:35:13.455717469+08:00 I1023 06:35:13.455472 1 server.go:114] listening for CSI-Addons requests on address: &net.UnixAddr{Name:"/tmp/csi-addons.sock", Net:"unix"}

2024-10-23T14:35:13.592268468+08:00 I1023 06:35:13.592202 1 utils.go:240] ID: 1 GRPC call: /csi.v1.Identity/Probe

2024-10-23T14:35:13.593634353+08:00 I1023 06:35:13.593568 1 utils.go:241] ID: 1 GRPC request: {}

2024-10-23T14:35:13.593683873+08:00 I1023 06:35:13.593637 1 utils.go:247] ID: 1 GRPC response: {}

2024-10-23T14:35:13.595699881+08:00 I1023 06:35:13.595609 1 utils.go:240] ID: 2 GRPC call: /csi.v1.Identity/GetPluginInfo

2024-10-23T14:35:13.595747481+08:00 I1023 06:35:13.595677 1 utils.go:241] ID: 2 GRPC request: {}

2024-10-23T14:35:13.595760301+08:00 I1023 06:35:13.595690 1 identityserver-default.go:40] ID: 2 Using default GetPluginInfo

2024-10-23T14:35:13.595796801+08:00 I1023 06:35:13.595768 1 utils.go:247] ID: 2 GRPC response: {"name":"cephfs.csi.ceph.com","vendor_version":"v3.12.0"}

2024-10-23T14:35:13.596992025+08:00 I1023 06:35:13.596922 1 utils.go:240] ID: 3 GRPC call: /csi.v1.Identity/GetPluginCapabilities

2024-10-23T14:35:13.597060556+08:00 I1023 06:35:13.596969 1 utils.go:241] ID: 3 GRPC request: {}

2024-10-23T14:35:13.597158606+08:00 I1023 06:35:13.597108 1 utils.go:247] ID: 3 GRPC response: {"capabilities":[{"Type":{"Service":{"type":1}}},{"Type":{"VolumeExpansion":{"type":1}}},{"Type":{"Service":{"type":3}}}]}

2024-10-23T14:35:13.597920769+08:00 I1023 06:35:13.597859 1 utils.go:240] ID: 4 GRPC call: /csi.v1.Controller/ControllerGetCapabilities

2024-10-23T14:35:13.597964159+08:00 I1023 06:35:13.597924 1 utils.go:241] ID: 4 GRPC request: {}

2024-10-23T14:35:13.597969969+08:00 I1023 06:35:13.597937 1 controllerserver-default.go:42] ID: 4 Using default ControllerGetCapabilities

2024-10-23T14:35:13.598166450+08:00 I1023 06:35:13.598105 1 utils.go:247] ID: 4 GRPC response: {"capabilities":[{"Type":{"Rpc":{"type":1}}},{"Type":{"Rpc":{"type":5}}},{"Type":{"Rpc":{"type":9}}},{"Type":{"Rpc":{"type":7}}},{"Type":{"Rpc":{"type":13}}}]}

2024-10-23T14:35:13.778536822+08:00 I1023 06:35:13.778466 1 utils.go:240] ID: 5 GRPC call: /csi.v1.Identity/Probe

2024-10-23T14:40:49.769752766+08:00 I1023 06:40:49.769641 1 utils.go:240] ID: 20 Req-ID: pvc-eba8bff3-5451-42e7-b30b-3b91a8425361 GRPC call: /csi.v1.Controller/CreateVolume

2024-10-23T14:40:49.794975480+08:00 I1023 06:40:49.794862 1 omap.go:89] ID: 20 Req-ID: pvc-eba8bff3-5451-42e7-b30b-3b91a8425361 got omap values: (pool="cephfs-metadata", namespace="csi", name="csi.volumes.default"): map[]

2024-10-23T14:40:49.803361272+08:00 I1023 06:40:49.803250 1 omap.go:159] ID: 20 Req-ID: pvc-eba8bff3-5451-42e7-b30b-3b91a8425361 set omap keys (pool="cephfs-metadata", namespace="csi", name="csi.volumes.default"): map[csi.volume.pvc-eba8bff3-5451-42e7-b30b-3b91a8425361:0d07c160-0f88-45a1-830e-a81fc60c8912])

2024-10-23T14:40:49.806120482+08:00 I1023 06:40:49.806011 1 omap.go:159] ID: 20 Req-ID: pvc-eba8bff3-5451-42e7-b30b-3b91a8425361 set omap keys (pool="cephfs-metadata", namespace="csi", name="csi.volume.0d07c160-0f88-45a1-830e-a81fc60c8912"): map[csi.imagename:csi-vol-0d07c160-0f88-45a1-830e-a81fc60c8912 csi.volname:pvc-eba8bff3-5451-42e7-b30b-3b91a8425361 csi.volume.owner:kube-system])

2024-10-23T14:40:49.806146432+08:00 I1023 06:40:49.806039 1 fsjournal.go:311] ID: 20 Req-ID: pvc-eba8bff3-5451-42e7-b30b-3b91a8425361 Generated Volume ID (0001-0024-5a6fdfb7-81a1-40f6-97b7-c92f96de9ac5-0000000000000001-0d07c160-0f88-45a1-830e-a81fc60c8912) and subvolume name (csi-vol-0d07c160-0f88-45a1-830e-a81fc60c8912) for request name (pvc-eba8bff3-5451-42e7-b30b-3b91a8425361)

2024-10-23T14:40:49.920503689+08:00 I1023 06:40:49.920371 1 controllerserver.go:475] ID: 20 Req-ID: pvc-eba8bff3-5451-42e7-b30b-3b91a8425361 cephfs: successfully created backing volume named csi-vol-0d07c160-0f88-45a1-830e-a81fc60c8912 for request name pvc-eba8bff3-5451-42e7-b30b-3b91a8425361

2024-10-23T14:40:49.920723069+08:00 I1023 06:40:49.920630 1 utils.go:247] ID: 20 Req-ID: pvc-eba8bff3-5451-42e7-b30b-3b91a8425361 GRPC response: {"volume":{"capacity_bytes":1073741824,"volume_context":{"clusterID":"5a6fdfb7-81a1-40f6-97b7-c92f96de9ac5","fsName":"k8scephfs","fuseMountOptions":"debug","mounter":"kernel","pool":"cephfs-data","subvolumeName":"csi-vol-0d07c160-0f88-45a1-830e-a81fc60c8912","subvolumePath":"/volumes/csi/csi-vol-0d07c160-0f88-45a1-830e-a81fc60c8912/8f9a8e9b-6e68-4bf9-929e-2d6561542bc0"},"volume_id":"0001-0024-5a6fdfb7-81a1-40f6-97b7-c92f96de9ac5-0000000000000001-0d07c160-0f88-45a1-830e-a81fc60c8912"}}
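The volume_id in the log above packs several values into one string. As far as I understand the ceph-csi encoding, it consists of a 4-digit hex version, a 4-digit hex length of the cluster ID, the cluster ID itself, a 16-digit hex location ID, and the 36-character object UUID, all separated by hyphens. The Go sketch below splits an ID under that assumption; csiID and decomposeID are hypothetical names, not the ceph-csi API.

```go
package main

import (
	"errors"
	"fmt"
	"strconv"
)

// csiID holds the parts of a volume ID as laid out in the log above.
type csiID struct {
	EncodingVersion uint64
	ClusterID       string
	LocationID      uint64
	ObjectUUID      string
}

// decomposeID splits "vvvv-llll-<clusterID>-<16 hex digits>-<36 char UUID>".
// Assumption: llll is the hexadecimal length of the cluster ID.
func decomposeID(id string) (csiID, error) {
	const (
		prefixLen   = 10 // length of "vvvv-llll-"
		locationLen = 16
		uuidLen     = 36
	)
	var out csiID
	if len(id) < prefixLen || id[4] != '-' || id[9] != '-' {
		return out, errors.New("malformed prefix")
	}
	version, err := strconv.ParseUint(id[0:4], 16, 16)
	if err != nil {
		return out, fmt.Errorf("version: %w", err)
	}
	clusterLen, err := strconv.ParseUint(id[5:9], 16, 16)
	if err != nil {
		return out, fmt.Errorf("cluster ID length: %w", err)
	}
	locStart := prefixLen + int(clusterLen) + 1
	uuidStart := locStart + locationLen + 1
	// The length check runs first, so the index expressions are in range.
	if len(id) != uuidStart+uuidLen || id[locStart-1] != '-' || id[uuidStart-1] != '-' {
		return out, errors.New("unexpected length or separators")
	}
	location, err := strconv.ParseUint(id[locStart:locStart+locationLen], 16, 64)
	if err != nil {
		return out, fmt.Errorf("location ID: %w", err)
	}
	out = csiID{
		EncodingVersion: version,
		ClusterID:       id[prefixLen : prefixLen+int(clusterLen)],
		LocationID:      location,
		ObjectUUID:      id[uuidStart:],
	}
	return out, nil
}

func main() {
	id := "0001-0024-5a6fdfb7-81a1-40f6-97b7-c92f96de9ac5-0000000000000001-0d07c160-0f88-45a1-830e-a81fc60c8912"
	parts, err := decomposeID(id)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("%+v\n", parts)
}
```

For the ID in the log, the cluster ID part matches the clusterID in the request and the UUID part matches the suffix of the subvolume name csi-vol-0d07c160-0f88-45a1-830e-a81fc60c8912.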

csi-cephfsplugin container logs on a worker node

2024-10-23T15:16:14.126912386+08:00 I1023 07:16:14.126508 12954 cephcsi.go:196] Driver version: v3.12.0 and Git version: 42797edd7e5e640e755a948a018d69c9200b7e60

2024-10-23T15:16:14.126947537+08:00 I1023 07:16:14.126857 12954 cephcsi.go:228] Starting driver type: cephfs with name: cephfs.csi.ceph.com

# The kernel mounter has been loaded

2024-10-23T15:16:14.140884138+08:00 I1023 07:16:14.140798 12954 volumemounter.go:79] loaded mounter: kernel

2024-10-23T15:16:14.154482429+08:00 I1023 07:16:14.154387 12954 volumemounter.go:90] loaded mounter: fuse

2024-10-23T15:16:14.166941296+08:00 I1023 07:16:14.166812 12954 mount_linux.go:282] Detected umount with safe 'not mounted' behavior

2024-10-23T15:16:14.167345207+08:00 I1023 07:16:14.167238 12954 server.go:114] listening for CSI-Addons requests on address: &net.UnixAddr{Name:"/tmp/csi-addons.sock", Net:"unix"}

2024-10-23T15:16:14.167380757+08:00 I1023 07:16:14.167331 12954 server.go:117] Listening for connections on address: &net.UnixAddr{Name:"//csi/csi.sock", Net:"unix"}

2024-10-23T15:16:14.260538675+08:00 I1023 07:16:14.260460 12954 utils.go:240] ID: 1 GRPC call: /csi.v1.Identity/GetPluginInfo

2024-10-23T15:16:14.261889040+08:00 I1023 07:16:14.261820 12954 utils.go:241] ID: 1 GRPC request: {}

2024-10-23T15:16:14.261897400+08:00 I1023 07:16:14.261846 12954 identityserver-default.go:40] ID: 1 Using default GetPluginInfo

2024-10-23T15:16:14.261981370+08:00 I1023 07:16:14.261930 12954 utils.go:247] ID: 1 GRPC response: {"name":"cephfs.csi.ceph.com","vendor_version":"v3.12.0"}

2024-10-23T15:16:14.871333492+08:00 I1023 07:16:14.871244 12954 utils.go:240] ID: 2 GRPC call: /csi.v1.Node/NodeGetInfo

2024-10-23T15:16:14.871428942+08:00 I1023 07:16:14.871354 12954 utils.go:241] ID: 2 GRPC request: {}

2024-10-23T15:16:14.871453492+08:00 I1023 07:16:14.871369 12954 nodeserver-default.go:45] ID: 2 Using default NodeGetInfo

2024-10-23T15:16:14.871526333+08:00 I1023 07:16:14.871470 12954 utils.go:247] ID: 2 GRPC response: {"accessible_topology":{},"node_id":"10.17.3.186"}

2024-10-23T15:16:31.092306342+08:00 I1023 07:16:31.092201 12954 utils.go:240] ID: 3 GRPC call: /csi.v1.Node/NodeGetCapabilities

2024-10-23T15:16:31.092374873+08:00 I1023 07:16:31.092277 12954 utils.go:241] ID: 3 GRPC request: {}

2024-10-23T15:16:31.092507993+08:00 I1023 07:16:31.092430 12954 utils.go:247] ID: 3 GRPC response: {"capabilities":[{"Type":{"Rpc":{"type":1}}},{"Type":{"Rpc":{"type":2}}},{"Type":{"Rpc":{"type":4}}},{"Type":{"Rpc":{"type":5}}}]}

2024-10-23T15:16:31.093512467+08:00 I1023 07:16:31.093450 12954 utils.go:240] ID: 4 GRPC call: /csi.v1.Node/NodeGetVolumeStats

2024-10-23T15:16:31.093569477+08:00 I1023 07:16:31.093532 12954 utils.go:241] ID: 4 GRPC request: {"volume_id":"0001-0024-5a6fdfb7-81a1-40f6-97b7-c92f96de9ac5-0000000000000001-0d07c160-0f88-45a1-830e-a81fc60c8912","volume_path":"/var/lib/kubelet/pods/413d299c-711d-41de-ba76-b895cb42d4f6/volumes/kubernetes.io~csi/pvc-eba8bff3-5451-42e7-b30b-3b91a8425361/mount"}

2024-10-23T15:16:31.094377050+08:00 I1023 07:16:31.094299 12954 utils.go:247] ID: 4 GRPC response: {"usage":[{"available":1043433783296,"total":1043433783296,"unit":1}],"volume_condition":{"message":"volume is in a healthy condition"}}

2024-10-23T15:17:14.479549223+08:00 I1023 07:17:14.479417 12954 utils.go:240] ID: 5 GRPC call: /csi.v1.Identity/Probe
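The "loaded mounter: ..." startup lines are the quickest way to confirm which mounters a plugin instance picked up. Below is a minimal Go sketch that extracts them from log text. The function name loadedMounters is hypothetical, and the input is assumed to be whatever kubectl logs prints for the csi-cephfsplugin container.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// loadedMounters returns the mounter names announced by
// "loaded mounter: <name>" lines, in the order they appear.
func loadedMounters(logText string) []string {
	const marker = "loaded mounter: "
	var mounters []string
	scanner := bufio.NewScanner(strings.NewReader(logText))
	for scanner.Scan() {
		line := scanner.Text()
		if idx := strings.Index(line, marker); idx >= 0 {
			mounters = append(mounters, strings.TrimSpace(line[idx+len(marker):]))
		}
	}
	return mounters
}

func main() {
	// Two lines copied from the node plugin log in this post.
	logText := `I1023 07:16:14.140798 12954 volumemounter.go:79] loaded mounter: kernel
I1023 07:16:14.154387 12954 volumemounter.go:90] loaded mounter: fuse`
	fmt.Println(loadedMounters(logText))
}
```

In this post the provisioner-side container reports only fuse, while the node plugin started with --forcecephkernelclient=true reports both kernel and fuse.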

Example

root@k8s-master01:/data/k8s/ceph-fs-csi/ceph-csi/deploy/cephfs/kubernetes/xxx-csi/example# cat pvc.yaml

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:
  name: csi-cephfs-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: csi-cephfs-sc

root@k8s-master01:/data/k8s/ceph-fs-csi/ceph-csi/deploy/cephfs/kubernetes/xxx-csi/example# cat pod.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: csi-cephfs-demo-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web-server
  template:
    metadata:
      labels:
        app: web-server
    spec:
      containers:
        - name: web-server
          image: registry.xx.local/csi/nginx:latest-arm64
          resources:
            requests:
              memory: "2Gi"
          volumeMounts:
            - name: mypvc
              mountPath: /var/lib/www
      volumes:
        - name: mypvc
          persistentVolumeClaim:
            claimName: csi-cephfs-pvc
            readOnly: false

Verification

kubectl get pod -o wide |grep demo

csi-cephfs-demo-pod 1/1 Running 0 7m24s 10.244.3.189 10.17.3.186 <none> <none>

kubectl exec -it csi-cephfs-demo-pod -- bash

root@csi-cephfs-demo-pod:/# df -Th

Filesystem Type Size Used Avail Use% Mounted on

overlay overlay 98G 26G 69G 28% /

tmpfs tmpfs 64M 0 64M 0% /dev

tmpfs tmpfs 16G 0 16G 0% /sys/fs/cgroup

/dev/vda2 ext4 98G 26G 69G 28% /etc/hosts

shm tmpfs 64M 0 64M 0% /dev/shm

10.17.3.14:6789,10.17.3.15:6789,10.17.3.16:6789:/volumes/csi/csi-vol-0d07c160-0f88-45a1-830e-a81fc60c8912/8f9a8e9b-6e68-4bf9-929e-2d6561542bc0 ceph 972G 0 972G 0% /var/lib/www

tmpfs tmpfs 32G 12K 32G 1% /run/secrets/kubernetes.io/serviceaccount

tmpfs tmpfs 16G 0 16G 0% /proc/acpi

tmpfs tmpfs 16G 0 16G 0% /proc/scsi

tmpfs tmpfs 16G 0 16G 0% /sys/firmware

root@csi-cephfs-demo-pod:/var/lib/www# touch a.txt

root@csi-cephfs-demo-pod:/var/lib/www# ls

a.txt

root@csi-cephfs-demo-pod:/var/lib/www# touch b.txt

root@csi-cephfs-demo-pod:/var/lib/www# ls

a.txt b.txt

While the following loop is running, delete the plugin pod on the node and check whether the writes are interrupted.

root@csi-cephfs-demo-pod:/var/lib/www# for i in {1..10000};do echo "${i}" >> c.txt;echo ${i};sleep 0.5;done

kubectl delete pod xxx
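One way to decide whether the loop above was interrupted is to check that c.txt still contains an unbroken run of integers starting at 1. The following is a minimal sketch, not part of ceph-csi: the helper name check_sequence is made up for illustration, and it assumes awk is available wherever the file is checked.

```shell
#!/usr/bin/env bash
# check_sequence FILE (hypothetical helper):
# succeed only if FILE holds the integers 1..N, one per line, in order.
check_sequence() {
  awk '
    # Line number NR must equal the value written on that line.
    $0 != NR {
      printf "line %d: expected %d, got %s\n", NR, NR, $0
      bad = 1
      exit
    }
    END { exit bad + 0 }
  ' "$1"
}
```

Running `check_sequence /var/lib/www/c.txt` inside the pod works even while the loop is still writing, because the prefix already written must itself be sequential. A non-zero exit status means at least one write was lost or reordered.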

Testing shared read/write

root@csi-cephfs-demo-deployment-76cdd9b784-2gqwf:/var/lib/www# tail -n 1 /etc/hosts > 1.txt

root@k8s-master01:/data/k8s/ceph-fs-csi/ceph-csi/deploy/cephfs/kubernetes/xx-csi/example# kubectl exec -it csi-cephfs-demo-deployment-76cdd9b784-ncwbs -- bash

root@csi-cephfs-demo-deployment-76cdd9b784-ncwbs:/var/lib/www# tail -n 1 /etc/hosts >> 1.txt

root@csi-cephfs-demo-deployment-76cdd9b784-ncwbs:/var/lib/www# cat 1.txt

10.244.19.115 csi-cephfs-demo-deployment-76cdd9b784-2gqwf

10.244.3.191 csi-cephfs-demo-deployment-76cdd9b784-ncwbs

root@k8s-master01:/data/k8s/ceph-fs-csi/ceph-csi/deploy/cephfs/kubernetes/xx-csi/example# kubectl exec -it csi-cephfs-demo-deployment-76cdd9b784-qsk75 -- bash

root@csi-cephfs-demo-deployment-76cdd9b784-qsk75:/var/lib/www# tail -n 1 /etc/hosts >> 1.txt

root@csi-cephfs-demo-deployment-76cdd9b784-qsk75:/var/lib/www# cat 1.txt

10.244.19.115 csi-cephfs-demo-deployment-76cdd9b784-2gqwf

10.244.3.191 csi-cephfs-demo-deployment-76cdd9b784-ncwbs

10.244.14.119 csi-cephfs-demo-deployment-76cdd9b784-qsk75
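The manual check above (each replica appends its own /etc/hosts entry to the same file) can be condensed into a small helper that confirms every expected pod name appears in the shared file. This is only a sketch: check_writers is a hypothetical name, and the pod names have to be supplied by the caller.

```shell
#!/usr/bin/env bash
# check_writers FILE POD... (hypothetical helper):
# succeed only if every POD name appears somewhere in FILE.
check_writers() {
  local file=$1
  shift
  local pod missing=0
  for pod in "$@"; do
    # -F: match the pod name literally, -q: no output, only exit status.
    if ! grep -qF -- "$pod" "$file"; then
      echo "missing entry from $pod" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

With the three replicas from this test, the call from inside any of the pods would be `check_writers 1.txt csi-cephfs-demo-deployment-76cdd9b784-2gqwf csi-cephfs-demo-deployment-76cdd9b784-ncwbs csi-cephfs-demo-deployment-76cdd9b784-qsk75`.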

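Other notes

If a node cannot mount the volume, check whether the mount.ceph command is present on it; if not, install it and load the kernel module: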

apt install ceph-common -y

modprobe ceph

lsmod|grep ceph

Verify that the kernel now lists ceph as a supported filesystem:

cat /proc/filesystems
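Rather than reading the output by eye, the same check can be scripted. The sketch below assumes the usual /proc/filesystems layout, where the filesystem name is the last field on each line. The helper name has_filesystem is made up for illustration, and the optional second argument exists only so that the function can be tried against a sample file.

```shell
#!/usr/bin/env bash
# has_filesystem NAME [FILE] (hypothetical helper):
# succeed if NAME is listed as a filesystem type in FILE.
# FILE defaults to /proc/filesystems.
has_filesystem() {
  awk -v fs="$1" '
    $NF == fs { found = 1 }   # the name is the last field, e.g. "nodev ceph"
    END { exit !found }
  ' "${2:-/proc/filesystems}"
}
```

For example, `has_filesystem ceph && echo "kernel CephFS client is loaded"` prints the message only after `modprobe ceph` has succeeded.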

References

https://github.com/ceph/ceph-csi

Version compatibility between ceph-csi and Kubernetes

https://github.com/ceph/ceph-csi/blob/devel/README.md#ceph-csi-container-images-and-release-compatibility

https://blog.csdn.net/chenhongloves/article/details/137279168

Why the external-storage mode is not used

https://elrond.wang/2021/06/19/Kubernetes%E9%9B%86%E6%88%90Ceph/#23-external-storage%E6%A8%A1%E5%BC%8F----%E7%89%88%E6%9C%AC%E9%99%88%E6%97%A7%E4%B8%8D%E5%86%8D%E4%BD%BF%E7%94%A8

相关文章:

使用ceph-csi把ceph-fs做为k8s的storageclass使用

背景 ceph三节点集群除了做为对象存储使用,计划使用cephfs替代掉k8s里面现有的nfs-storageclass。 思路 整体实现参考ceph官方的ceph csi实现,这套环境是arm架构的,即ceph和k8s都是在arm上实现。实测下来也兼容。 ceph-fs有两种两种挂载方…...

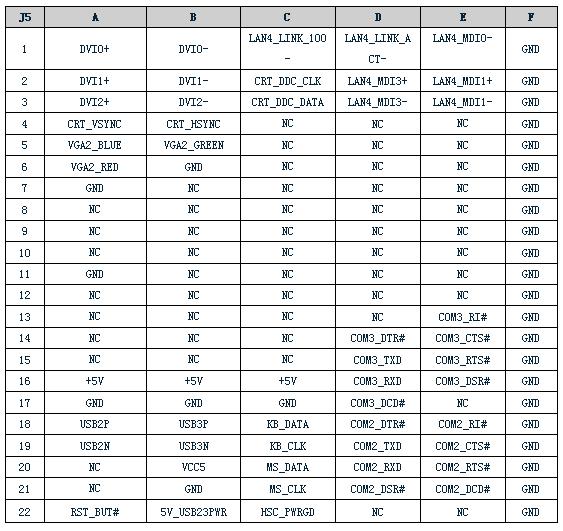

太速科技-212-RCP-601 CPCI刀片计算机

RCP-601 CPCI刀片计算机 一、产品简介 RCP-601是一款基于Intel i7双核四线程的高性能CPCI刀片式计算机,同时,将CPCI产品的欧卡结构及其可靠性、可维护性、可管理性与计算机的抗振动、抗冲击、抗宽温环境急剧变化等恶劣环境特性进行融合。产品特别…...

【解决 Windows 下 SSH “Bad owner or permissions“ 错误及端口转发问题详解】

使用 Windows 连接远程服务器出现 Bad owner or permissions 错误及解决方案 在 Windows 系统上连接远程服务器时,使用 SSH 可能会遇到以下错误: Bad owner or permissions on C:\Users\username/.ssh/config这个问题通常是由于 SSH 配置文件 .ssh/con…...

使用预训练的BERT进行金融领域问答

获取更多完整项目代码数据集,点此加入免费社区群 : 首页-置顶必看 1. 项目简介 本项目旨在开发并优化一个基于预训练BERT模型的问答系统,专注于金融领域的应用。随着金融市场信息复杂性和规模的增加,传统的信息检索方法难以高效…...

ReactOS系统中MM_REGION结构体的声明

ReactOS系统中MM_REGION结构体的声明 ReactOS系统中MM_REGION结构体的声明 文章目录 ReactOS系统中MM_REGION结构体的声明MM_REGION MM_REGION typedef struct _MM_REGION {ULONG Type;//MEM_COMMIT,MEM_RESERVEULONG Protect;//PAGE_READONLYY,PAGE_READ_WRITEULONG Length;…...

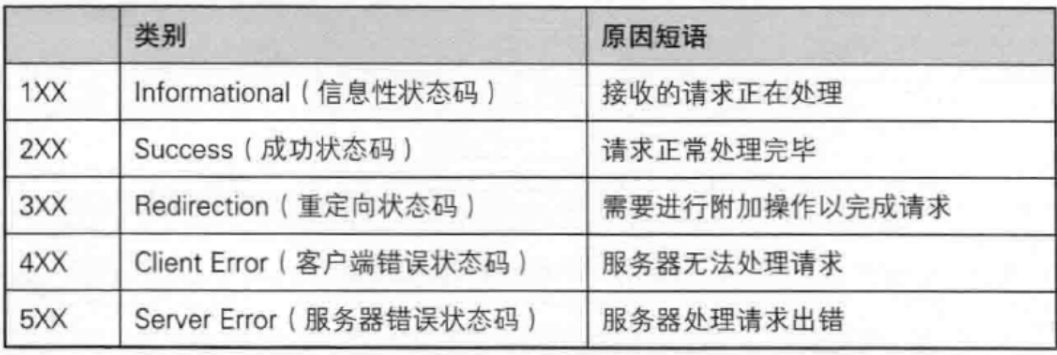

web相关知识学习笔记

一, web安全属于网络信息安全的一个分支,www即全球广域网,也叫万维网,是一个分布式图形信息系统 二, 1.①安全领域,通常将用户端(浏览器端)称为前端,服务器端称为后端 ②…...

App测试环境部署

一.JDK安装 参考以下AndroidDevTools - Android开发工具 Android SDK下载 Android Studio下载 Gradle下载 SDK Tools下载 二.SDK安装 安装地址:https://www.androiddevtools.cn/ 解压 环境变量配置 变量名:ANDROID_SDK_HOME 参考步骤: A…...

【论文阅读】Tabbed Out: Subverting the Android Custom Tab Security Model

论文链接:Tabbed Out: Subverting the Android Custom Tab Security Model | IEEE Conference Publication | IEEE Xplore 总览 “Tabbed Out: Subverting the Android Custom Tab Security Model” 由 Philipp Beer 等人撰写,发表于 2024 年 IEEE Symp…...

2025 - AI人工智能药物设计 - 中药网络药理学和毒理学的研究

中药网络药理学和毒理学的研究 TCMSP:https://old.tcmsp-e.com/tcmsp.php 然后去pubchem选择:输入Molecule Name 然后进行匹配:得到了smiles 再次通过smiles:COC1C(CC(C2C1OC(CC2O)C3CCCCC3)O)O 然后再次输入:http…...

iwebsec靶场 XSS漏洞通关笔记

目录 前言 1.反射性XSS 2.存储型XSS 3.DOM型XSS 第01关 反射型XSS漏洞 1.打开靶场 2.源码分析 3.渗透 第02关 存储型XSS漏洞 1.打开靶场 2.源码分析 4.渗透 方法1: 方法2 方法3 第03关 DOM XSS漏洞 1.打开靶场 2.源码分析 3.渗透分析 3.渗透过程…...

设计模式-单例模型(单件模式、Singleton)

单例模式是一种创建型设计模式, 让你能够保证一个类只有一个实例, 并提供一个访问该实例的全局节点。 单例模式同时解决了两个问题, 所以违反了单一职责原则: 保证一个类只有一个实例。 为什么会有人想要控制一个类所拥有的实例…...

笔记本双系统win10+Ubuntu 20.04 无法调节亮度亲测解决

sudo add-apt-repository ppa:apandada1/brightness-controller sudo apt-get update sudo apt-get install brightness-controller-simple 安装好后找到一个太阳的图标,就是这个软件,打开后调整brightness,就可以调整亮度,可…...

零基础Java第十一期:类和对象(二)

目录 一、对象的构造及初始化 1.1. 就地初始化 1.2. 默认初始化 1.3. 构造方法 二、封装 2.1. 封装的概念 2.2. 访问限定符 2.3. 封装扩展之包 三、static成员 3.1. 再谈学生类 3.2. static修饰成员变量 一、对象的构造及初始化 1.1. 就地初始化 在声明成员变…...

python笔记扩展)

NumPy包(下) python笔记扩展

9.迭代数组 nditer 是 NumPy 中的一个强大的迭代器对象,用于高效地遍历多维数组。nditer 提供了多种选项和控制参数,使得数组的迭代更加灵活和高效。 控制参数 nditer 提供了多种控制参数,用于控制迭代的行为。 1.order 参数 order 参数…...

极狐GitLab 17.5 发布 20+ 与 DevSecOps 相关的功能【一】

GitLab 是一个全球知名的一体化 DevOps 平台,很多人都通过私有化部署 GitLab 来进行源代码托管。极狐GitLab 是 GitLab 在中国的发行版,专门为中国程序员服务。可以一键式部署极狐GitLab。 学习极狐GitLab 的相关资料: 极狐GitLab 官网极狐…...

Oracle 第1章:Oracle数据库概述

在讨论Oracle数据库的入门与管理时,我们可以从以下几个方面来展开第一章的内容:“Oracle数据库概述”,包括数据库的历史与发展,Oracle数据库的特点与优势。 数据库的历史与发展 数据库技术的发展可以追溯到上世纪50年代…...

7、Nodes.js包管理工具

四、包管理工具 4.1 npm(Node Package Manager) Node.js官方内置的包管理工具。 命令行下打以下命令: npm -v如果返回版本号,则说明npm可以正常使用 4.1.1npm初始化 #在包所在目录下执行以下命令 npm init #正常初始化,手动…...

网络地址转换——NAT技术详解

网络地址转换——NAT技术详解 一、引言 随着互联网的飞速发展,IP地址资源日益紧张。为了解决IP地址资源短缺的问题,NAT(Network Address Translation,网络地址转换)技术应运而生。NAT技术允许一个私有IP地址的网络通…...

问:数据库存储过程优化实践~

存储过程优化是提高数据库性能的关键环节。通过精炼SQL语句、合理利用数据库特性、优化事务管理和错误处理,可以显著提升存储过程的执行效率和稳定性。以下是对存储过程优化实践点的阐述,结合具体示例,帮助大家更好地理解和实施这些优化策略。…...

C++ vector的使用(一)

vector vector类似于数组 遍历 这里的遍历跟string那里的遍历是一样的 1.auto(范围for) 2.迭代器遍历 3.operator void vector_test1() {vector<int> v;vector<int> v1(10, 1);//初始化10个都是1的变量vector<int> v3(v1.begin(), --…...

【JavaEE】-- HTTP

1. HTTP是什么? HTTP(全称为"超文本传输协议")是一种应用非常广泛的应用层协议,HTTP是基于TCP协议的一种应用层协议。 应用层协议:是计算机网络协议栈中最高层的协议,它定义了运行在不同主机上…...

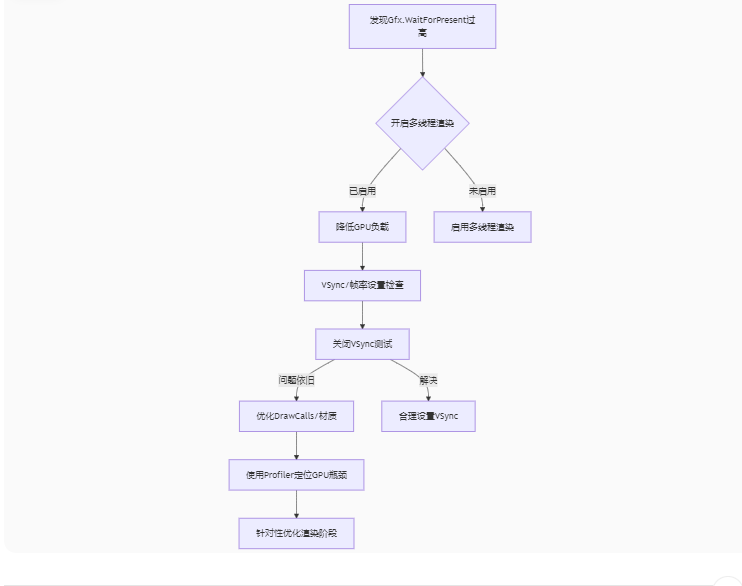

Unity3D中Gfx.WaitForPresent优化方案

前言 在Unity中,Gfx.WaitForPresent占用CPU过高通常表示主线程在等待GPU完成渲染(即CPU被阻塞),这表明存在GPU瓶颈或垂直同步/帧率设置问题。以下是系统的优化方案: 对惹,这里有一个游戏开发交流小组&…...

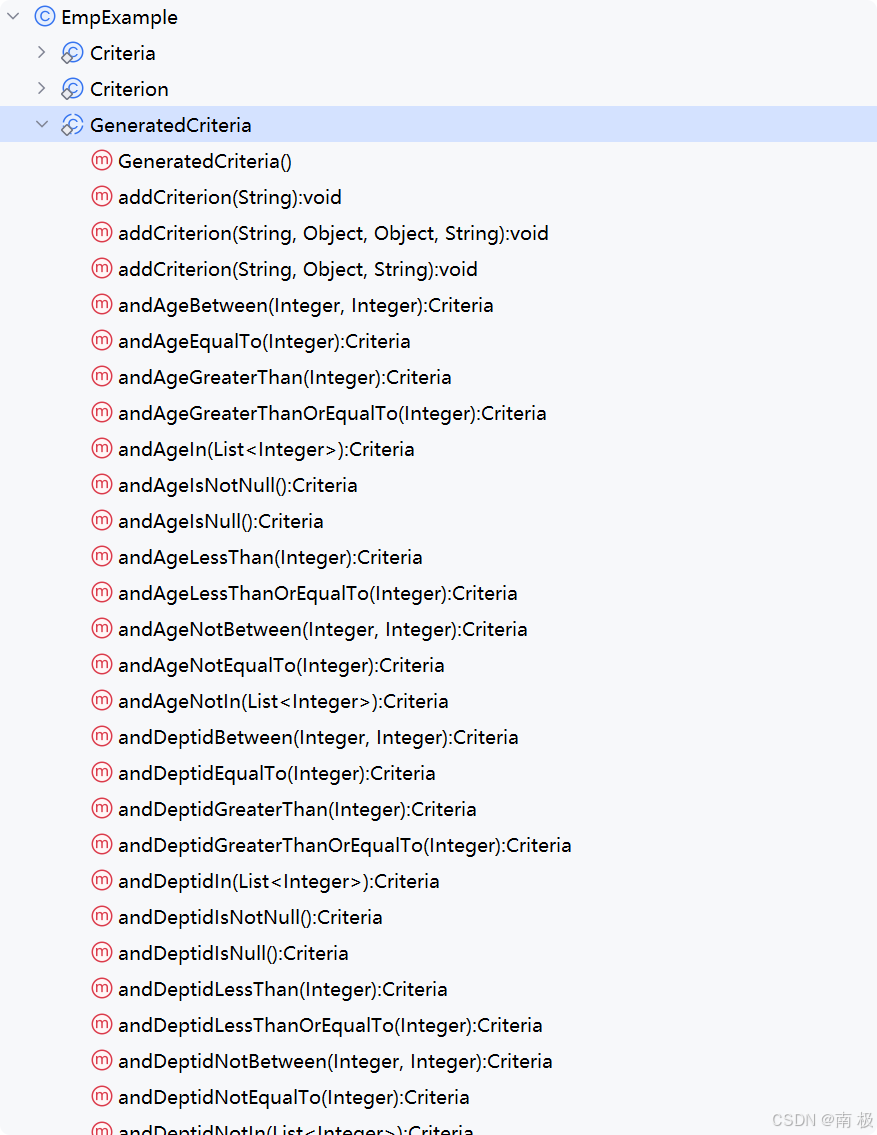

Mybatis逆向工程,动态创建实体类、条件扩展类、Mapper接口、Mapper.xml映射文件

今天呢,博主的学习进度也是步入了Java Mybatis 框架,目前正在逐步杨帆旗航。 那么接下来就给大家出一期有关 Mybatis 逆向工程的教学,希望能对大家有所帮助,也特别欢迎大家指点不足之处,小生很乐意接受正确的建议&…...

高危文件识别的常用算法:原理、应用与企业场景

高危文件识别的常用算法:原理、应用与企业场景 高危文件识别旨在检测可能导致安全威胁的文件,如包含恶意代码、敏感数据或欺诈内容的文档,在企业协同办公环境中(如Teams、Google Workspace)尤为重要。结合大模型技术&…...

第一篇:Agent2Agent (A2A) 协议——协作式人工智能的黎明

AI 领域的快速发展正在催生一个新时代,智能代理(agents)不再是孤立的个体,而是能够像一个数字团队一样协作。然而,当前 AI 生态系统的碎片化阻碍了这一愿景的实现,导致了“AI 巴别塔问题”——不同代理之间…...

C# SqlSugar:依赖注入与仓储模式实践

C# SqlSugar:依赖注入与仓储模式实践 在 C# 的应用开发中,数据库操作是必不可少的环节。为了让数据访问层更加简洁、高效且易于维护,许多开发者会选择成熟的 ORM(对象关系映射)框架,SqlSugar 就是其中备受…...

Rapidio门铃消息FIFO溢出机制

关于RapidIO门铃消息FIFO的溢出机制及其与中断抖动的关系,以下是深入解析: 门铃FIFO溢出的本质 在RapidIO系统中,门铃消息FIFO是硬件控制器内部的缓冲区,用于临时存储接收到的门铃消息(Doorbell Message)。…...

2025季度云服务器排行榜

在全球云服务器市场,各厂商的排名和地位并非一成不变,而是由其独特的优势、战略布局和市场适应性共同决定的。以下是根据2025年市场趋势,对主要云服务器厂商在排行榜中占据重要位置的原因和优势进行深度分析: 一、全球“三巨头”…...

短视频矩阵系统文案创作功能开发实践,定制化开发

在短视频行业迅猛发展的当下,企业和个人创作者为了扩大影响力、提升传播效果,纷纷采用短视频矩阵运营策略,同时管理多个平台、多个账号的内容发布。然而,频繁的文案创作需求让运营者疲于应对,如何高效产出高质量文案成…...

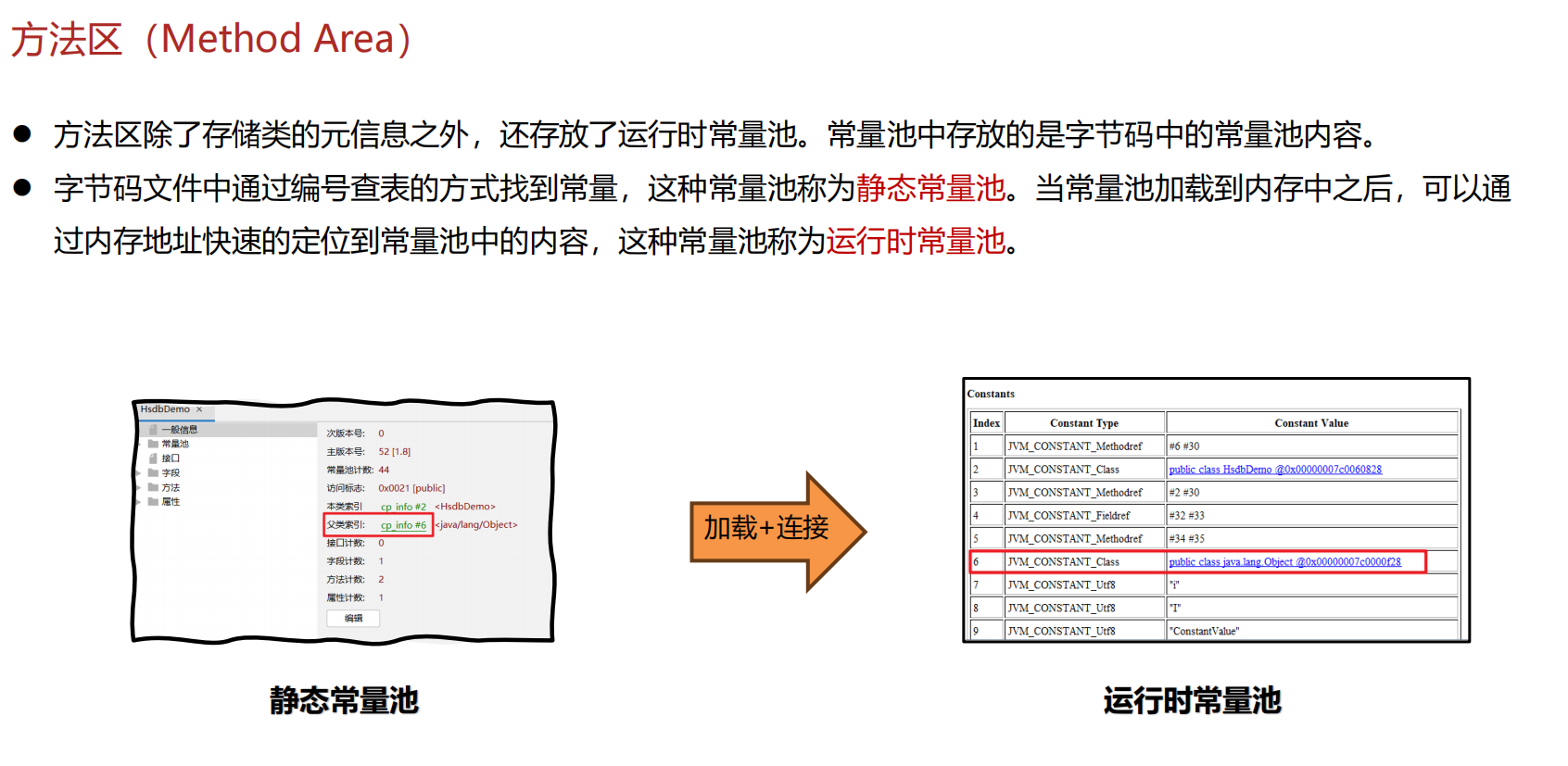

JVM 内存结构 详解

内存结构 运行时数据区: Java虚拟机在运行Java程序过程中管理的内存区域。 程序计数器: 线程私有,程序控制流的指示器,分支、循环、跳转、异常处理、线程恢复等基础功能都依赖这个计数器完成。 每个线程都有一个程序计数…...