【已解决】【hadoop】【hive】启动不成功 报错 无法与MySQL服务器建立连接 Hive连接到MetaStore失败 无法进入交互式执行环境

启动hive显示什么才是成功

当你成功启动Hive时,通常会看到一系列的日志信息输出到控制台,这些信息包括了Hive服务初始化的过程以及它与Metastore服务连接的情况等。一旦Hive完成启动并准备就绪,你将看到提示符(如 hive> ),表示你可以开始输入命令或查询了。

注意:Hive依赖于Hadoop的HDFS和YARN等组件,因此首先需要确保Hadoop服务已经成功启动。

报错

hadoop@dblab-VirtualBox:~$ start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [localhost]

localhost: namenode running as process 3184. Stop it first.

localhost: datanode running as process 3339. Stop it first.

Starting secondary namenodes [0.0.0.0]

0.0.0.0: secondarynamenode running as process 3537. Stop it first.

starting yarn daemons

resourcemanager running as process 3712. Stop it first.

localhost: nodemanager running as process 3910. Stop it first.

hadoop@dblab-VirtualBox:~$ ^C

hadoop@dblab-VirtualBox:~$ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]Logging initialized using configuration in jar:file:/usr/local/hive/lib/hive-common-2.1.0.jar!/hive-log4j2.properties Async: true

Exception in thread "main" java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:578)

at org.apache.hadoop.hive.ql.session.SessionState.beginStart(SessionState.java:518)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:705)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:641)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:226)

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:366)

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:310)

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:290)

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:266)

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:545)

... 9 more

Caused by: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1627)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:80)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:130)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:101)

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:3317)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3356)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3336)

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:3590)

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:236)

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:221)

... 14 more

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1625)

... 23 more

Caused by: javax.jdo.JDOFatalDataStoreException: Unable to open a test connection to the given database. JDBC url = jdbc:mysql://localhost:2024/hive?createDatabaseIfNotExist=true, username = root. Terminating connection pool (set lazyInit to true if you expect to start your database after your app). Original Exception: ------

com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failureThe last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.SQLError.createCommunicationsException(SQLError.java:989)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:341)

at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2251)

at com.mysql.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:2284)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2083)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:806)

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:47)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:410)

at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:328)

at java.sql.DriverManager.getConnection(DriverManager.java:664)

at java.sql.DriverManager.getConnection(DriverManager.java:208)

at com.jolbox.bonecp.BoneCP.obtainRawInternalConnection(BoneCP.java:361)

at com.jolbox.bonecp.BoneCP.<init>(BoneCP.java:416)

at com.jolbox.bonecp.BoneCPDataSource.getConnection(BoneCPDataSource.java:120)

at org.datanucleus.store.rdbms.ConnectionFactoryImpl$ManagedConnectionImpl.getConnection(ConnectionFactoryImpl.java:483)

at org.datanucleus.store.rdbms.RDBMSStoreManager.<init>(RDBMSStoreManager.java:296)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.datanucleus.plugin.NonManagedPluginRegistry.createExecutableExtension(NonManagedPluginRegistry.java:606)

at org.datanucleus.plugin.PluginManager.createExecutableExtension(PluginManager.java:301)

at org.datanucleus.NucleusContextHelper.createStoreManagerForProperties(NucleusContextHelper.java:133)

at org.datanucleus.PersistenceNucleusContextImpl.initialise(PersistenceNucleusContextImpl.java:420)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.freezeConfiguration(JDOPersistenceManagerFactory.java:821)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.createPersistenceManagerFactory(JDOPersistenceManagerFactory.java:338)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.getPersistenceManagerFactory(JDOPersistenceManagerFactory.java:217)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at javax.jdo.JDOHelper$16.run(JDOHelper.java:1965)

at java.security.AccessController.doPrivileged(Native Method)

at javax.jdo.JDOHelper.invoke(JDOHelper.java:1960)

at javax.jdo.JDOHelper.invokeGetPersistenceManagerFactoryOnImplementation(JDOHelper.java:1166)

at javax.jdo.JDOHelper.getPersistenceManagerFactory(JDOHelper.java:808)

at javax.jdo.JDOHelper.getPersistenceManagerFactory(JDOHelper.java:701)

at org.apache.hadoop.hive.metastore.ObjectStore.getPMF(ObjectStore.java:424)

at org.apache.hadoop.hive.metastore.ObjectStore.getPersistenceManager(ObjectStore.java:453)

at org.apache.hadoop.hive.metastore.ObjectStore.initialize(ObjectStore.java:327)

at org.apache.hadoop.hive.metastore.ObjectStore.setConf(ObjectStore.java:294)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:76)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:136)

at org.apache.hadoop.hive.metastore.RawStoreProxy.<init>(RawStoreProxy.java:58)

at org.apache.hadoop.hive.metastore.RawStoreProxy.getProxy(RawStoreProxy.java:67)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.newRawStore(HiveMetaStore.java:581)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:546)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:612)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:398)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:78)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:84)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:6396)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:236)

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:70)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1625)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:80)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:130)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:101)

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:3317)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3356)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3336)

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:3590)

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:236)

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:221)

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:366)

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:310)

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:290)

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:266)

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:545)

at org.apache.hadoop.hive.ql.session.SessionState.beginStart(SessionState.java:518)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:705)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:641)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

Caused by: java.net.ConnectException: 拒绝连接 (Connection refused)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:607)

at com.mysql.jdbc.StandardSocketFactory.connect(StandardSocketFactory.java:211)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:300)

... 85 more

------NestedThrowables:

java.sql.SQLException: Unable to open a test connection to the given database. JDBC url = jdbc:mysql://localhost:2024/hive?createDatabaseIfNotExist=true, username = root. Terminating connection pool (set lazyInit to true if you expect to start your database after your app). Original Exception: ------

com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failureThe last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.SQLError.createCommunicationsException(SQLError.java:989)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:341)

at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2251)

at com.mysql.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:2284)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2083)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:806)

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:47)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:410)

at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:328)

at java.sql.DriverManager.getConnection(DriverManager.java:664)

at java.sql.DriverManager.getConnection(DriverManager.java:208)

at com.jolbox.bonecp.BoneCP.obtainRawInternalConnection(BoneCP.java:361)

at com.jolbox.bonecp.BoneCP.<init>(BoneCP.java:416)

at com.jolbox.bonecp.BoneCPDataSource.getConnection(BoneCPDataSource.java:120)

at org.datanucleus.store.rdbms.ConnectionFactoryImpl$ManagedConnectionImpl.getConnection(ConnectionFactoryImpl.java:483)

at org.datanucleus.store.rdbms.RDBMSStoreManager.<init>(RDBMSStoreManager.java:296)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.datanucleus.plugin.NonManagedPluginRegistry.createExecutableExtension(NonManagedPluginRegistry.java:606)

at org.datanucleus.plugin.PluginManager.createExecutableExtension(PluginManager.java:301)

at org.datanucleus.NucleusContextHelper.createStoreManagerForProperties(NucleusContextHelper.java:133)

at org.datanucleus.PersistenceNucleusContextImpl.initialise(PersistenceNucleusContextImpl.java:420)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.freezeConfiguration(JDOPersistenceManagerFactory.java:821)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.createPersistenceManagerFactory(JDOPersistenceManagerFactory.java:338)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.getPersistenceManagerFactory(JDOPersistenceManagerFactory.java:217)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at javax.jdo.JDOHelper$16.run(JDOHelper.java:1965)

at java.security.AccessController.doPrivileged(Native Method)

at javax.jdo.JDOHelper.invoke(JDOHelper.java:1960)

at javax.jdo.JDOHelper.invokeGetPersistenceManagerFactoryOnImplementation(JDOHelper.java:1166)

at javax.jdo.JDOHelper.getPersistenceManagerFactory(JDOHelper.java:808)

at javax.jdo.JDOHelper.getPersistenceManagerFactory(JDOHelper.java:701)

at org.apache.hadoop.hive.metastore.ObjectStore.getPMF(ObjectStore.java:424)

at org.apache.hadoop.hive.metastore.ObjectStore.getPersistenceManager(ObjectStore.java:453)

at org.apache.hadoop.hive.metastore.ObjectStore.initialize(ObjectStore.java:327)

at org.apache.hadoop.hive.metastore.ObjectStore.setConf(ObjectStore.java:294)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:76)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:136)

at org.apache.hadoop.hive.metastore.RawStoreProxy.<init>(RawStoreProxy.java:58)

at org.apache.hadoop.hive.metastore.RawStoreProxy.getProxy(RawStoreProxy.java:67)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.newRawStore(HiveMetaStore.java:581)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:546)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:612)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:398)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:78)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:84)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:6396)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:236)

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:70)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1625)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:80)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:130)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:101)

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:3317)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3356)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3336)

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:3590)

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:236)

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:221)

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:366)

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:310)

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:290)

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:266)

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:545)

at org.apache.hadoop.hive.ql.session.SessionState.beginStart(SessionState.java:518)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:705)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:641)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

Caused by: java.net.ConnectException: 拒绝连接 (Connection refused)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:607)

at com.mysql.jdbc.StandardSocketFactory.connect(StandardSocketFactory.java:211)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:300)

... 85 more

------at org.datanucleus.api.jdo.NucleusJDOHelper.getJDOExceptionForNucleusException(NucleusJDOHelper.java:529)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.freezeConfiguration(JDOPersistenceManagerFactory.java:834)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.createPersistenceManagerFactory(JDOPersistenceManagerFactory.java:338)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.getPersistenceManagerFactory(JDOPersistenceManagerFactory.java:217)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at javax.jdo.JDOHelper$16.run(JDOHelper.java:1965)

at java.security.AccessController.doPrivileged(Native Method)

at javax.jdo.JDOHelper.invoke(JDOHelper.java:1960)

at javax.jdo.JDOHelper.invokeGetPersistenceManagerFactoryOnImplementation(JDOHelper.java:1166)

at javax.jdo.JDOHelper.getPersistenceManagerFactory(JDOHelper.java:808)

at javax.jdo.JDOHelper.getPersistenceManagerFactory(JDOHelper.java:701)

at org.apache.hadoop.hive.metastore.ObjectStore.getPMF(ObjectStore.java:424)

at org.apache.hadoop.hive.metastore.ObjectStore.getPersistenceManager(ObjectStore.java:453)

at org.apache.hadoop.hive.metastore.ObjectStore.initialize(ObjectStore.java:327)

at org.apache.hadoop.hive.metastore.ObjectStore.setConf(ObjectStore.java:294)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:76)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:136)

at org.apache.hadoop.hive.metastore.RawStoreProxy.<init>(RawStoreProxy.java:58)

at org.apache.hadoop.hive.metastore.RawStoreProxy.getProxy(RawStoreProxy.java:67)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.newRawStore(HiveMetaStore.java:581)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:546)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:612)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:398)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:78)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:84)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:6396)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:236)

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:70)

... 28 more

Caused by: java.sql.SQLException: Unable to open a test connection to the given database. JDBC url = jdbc:mysql://localhost:2024/hive?createDatabaseIfNotExist=true, username = root. Terminating connection pool (set lazyInit to true if you expect to start your database after your app). Original Exception: ------

com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failureThe last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.SQLError.createCommunicationsException(SQLError.java:989)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:341)

at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2251)

at com.mysql.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:2284)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2083)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:806)

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:47)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:410)

at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:328)

at java.sql.DriverManager.getConnection(DriverManager.java:664)

at java.sql.DriverManager.getConnection(DriverManager.java:208)

at com.jolbox.bonecp.BoneCP.obtainRawInternalConnection(BoneCP.java:361)

at com.jolbox.bonecp.BoneCP.<init>(BoneCP.java:416)

at com.jolbox.bonecp.BoneCPDataSource.getConnection(BoneCPDataSource.java:120)

at org.datanucleus.store.rdbms.ConnectionFactoryImpl$ManagedConnectionImpl.getConnection(ConnectionFactoryImpl.java:483)

at org.datanucleus.store.rdbms.RDBMSStoreManager.<init>(RDBMSStoreManager.java:296)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.datanucleus.plugin.NonManagedPluginRegistry.createExecutableExtension(NonManagedPluginRegistry.java:606)

at org.datanucleus.plugin.PluginManager.createExecutableExtension(PluginManager.java:301)

at org.datanucleus.NucleusContextHelper.createStoreManagerForProperties(NucleusContextHelper.java:133)

at org.datanucleus.PersistenceNucleusContextImpl.initialise(PersistenceNucleusContextImpl.java:420)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.freezeConfiguration(JDOPersistenceManagerFactory.java:821)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.createPersistenceManagerFactory(JDOPersistenceManagerFactory.java:338)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.getPersistenceManagerFactory(JDOPersistenceManagerFactory.java:217)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at javax.jdo.JDOHelper$16.run(JDOHelper.java:1965)

at java.security.AccessController.doPrivileged(Native Method)

at javax.jdo.JDOHelper.invoke(JDOHelper.java:1960)

at javax.jdo.JDOHelper.invokeGetPersistenceManagerFactoryOnImplementation(JDOHelper.java:1166)

at javax.jdo.JDOHelper.getPersistenceManagerFactory(JDOHelper.java:808)

at javax.jdo.JDOHelper.getPersistenceManagerFactory(JDOHelper.java:701)

at org.apache.hadoop.hive.metastore.ObjectStore.getPMF(ObjectStore.java:424)

at org.apache.hadoop.hive.metastore.ObjectStore.getPersistenceManager(ObjectStore.java:453)

at org.apache.hadoop.hive.metastore.ObjectStore.initialize(ObjectStore.java:327)

at org.apache.hadoop.hive.metastore.ObjectStore.setConf(ObjectStore.java:294)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:76)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:136)

at org.apache.hadoop.hive.metastore.RawStoreProxy.<init>(RawStoreProxy.java:58)

at org.apache.hadoop.hive.metastore.RawStoreProxy.getProxy(RawStoreProxy.java:67)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.newRawStore(HiveMetaStore.java:581)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:546)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:612)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:398)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:78)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:84)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:6396)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:236)

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:70)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1625)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:80)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:130)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:101)

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:3317)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3356)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3336)

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:3590)

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:236)

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:221)

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:366)

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:310)

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:290)

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:266)

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:545)

at org.apache.hadoop.hive.ql.session.SessionState.beginStart(SessionState.java:518)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:705)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:641)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

Caused by: java.net.ConnectException: 拒绝连接 (Connection refused)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:607)

at com.mysql.jdbc.StandardSocketFactory.connect(StandardSocketFactory.java:211)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:300)

... 85 more

------at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.jolbox.bonecp.PoolUtil.generateSQLException(PoolUtil.java:192)

at com.jolbox.bonecp.BoneCP.<init>(BoneCP.java:422)

at com.jolbox.bonecp.BoneCPDataSource.getConnection(BoneCPDataSource.java:120)

at org.datanucleus.store.rdbms.ConnectionFactoryImpl$ManagedConnectionImpl.getConnection(ConnectionFactoryImpl.java:483)

at org.datanucleus.store.rdbms.RDBMSStoreManager.<init>(RDBMSStoreManager.java:296)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.datanucleus.plugin.NonManagedPluginRegistry.createExecutableExtension(NonManagedPluginRegistry.java:606)

at org.datanucleus.plugin.PluginManager.createExecutableExtension(PluginManager.java:301)

at org.datanucleus.NucleusContextHelper.createStoreManagerForProperties(NucleusContextHelper.java:133)

at org.datanucleus.PersistenceNucleusContextImpl.initialise(PersistenceNucleusContextImpl.java:420)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.freezeConfiguration(JDOPersistenceManagerFactory.java:821)

... 57 more

Caused by: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failureThe last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.SQLError.createCommunicationsException(SQLError.java:989)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:341)

at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2251)

at com.mysql.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:2284)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2083)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:806)

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:47)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:410)

at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:328)

at java.sql.DriverManager.getConnection(DriverManager.java:664)

at java.sql.DriverManager.getConnection(DriverManager.java:208)

at com.jolbox.bonecp.BoneCP.obtainRawInternalConnection(BoneCP.java:361)

at com.jolbox.bonecp.BoneCP.<init>(BoneCP.java:416)

... 69 more

Caused by: java.net.ConnectException: 拒绝连接 (Connection refused)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:607)

at com.mysql.jdbc.StandardSocketFactory.connect(StandardSocketFactory.java:211)

at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:300)

... 85 more

报错分析

从日志信息来看,遇到的问题:

-

过时的启动脚本:

start-all.sh脚本已被废弃,建议使用start-dfs.sh和start-yarn.sh分别启动分布式文件系统(DFS)和Yet Another Resource Negotiator(YARN)。 -

SLF4J绑定冲突:日志中显示类路径上存在多个SLF4J绑定。根据 SLF4J的多绑定错误页面,你应该只保留一个SLF4J绑定在类路径上。可以通过检查

/usr/local/hive/lib/和/usr/local/hadoop/share/hadoop/common/lib/路径下的JAR文件来解决这个冲突,移除多余的绑定。 -

Hive MetaStore 连接问题:日志中出现了

Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient异常,这通常是因为无法连接到Hive MetaStore服务。原因可能是:-

数据库连接问题:Hive MetaStore试图连接到MySQL数据库时出现了

javax.jdo.JDOFatalDataStoreException异常。确保MySQL服务正在运行,并且Hive MetaStore配置的数据库URL、用户名和密码是正确的。 -

SSL连接问题:日志中还包含了

javax.net.ssl.SSLHandshakeException异常,这表明在尝试建立SSL连接时出现了问题。这可能是因为MySQL服务器期望默认建立SSL连接,但客户端没有正确配置。你可以通过设置useSSL=false来禁用SSL连接,或者设置useSSL=true并提供信任的证书库以进行服务器证书验证。 -

通信链路故障:

com.mysql.jdbc.exceptions.jdbc4.CommunicationsException表示与MySQL服务器的通信链路出现故障。可能是因为网络问题、MySQL服务器未运行或配置错误。

-

解决这些问题通常需要检查Hadoop和Hive的配置文件,确保所有的服务都已经正确启动,并且相关的依赖服务(如MySQL)也已经就绪并可以正常访问。你可能需要检查 hive-site.xml 配置文件中的数据库连接设置,以及Hadoop和Hive的日志文件中可能包含的更详细的错误信息。

解决

发现只需要解决以下两个问题报错就可以成功运行hive了。

1. 数据库连接问题--->确保MySQL服务运行

确保MySQL服务正在运行,并且Hive MetaStore能够连接到它:

- 运行

sudo service mysql start或sudo systemctl start mysql来启动MySQL服务。 - 使用

mysql -u root -p登录MySQL,并验证Hive使用的数据库和用户是否存在。

2. SSL连接问题--->hive-site.xml 配置文件中的数据库连接设置

编辑Hive的配置文件hive-site.xml,通过设置 useSSL=false 来禁用SSL连接:

hive-site.xml配置文件,它定义了 Hive Metastore 如何连接到 MySQL 数据库。以下是每个配置项的解释:

Connection URL:

<property><name>javax.jdo.option.ConnectionURL</name><value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true</value><description>JDBC connect string for a MySQL connection</description> </property>

name: 配置项的名称,用于在 Hive 配置中唯一标识这个配置项。value: 配置项的值,这里是 JDBC URL,用于连接到 MySQL 数据库。

jdbc:mysql://localhost:3306/hive: 指定了数据库服务器的地址(localhost),端口号(3306),以及数据库名称(hive)。?createDatabaseIfNotExist=true: 这是一个查询参数,告诉 MySQL 如果hive数据库不存在,则创建它。同时,useSSL=false禁用了 SSL 连接(MySQL 5.7.6 以后的版本默认要求 SSL 连接)。description: 配置项的描述,说明这是用于 MySQL 连接的 JDBC 连接字符串。Connection Driver Name:

<property><name>javax.jdo.option.ConnectionDriverName</name><value>com.mysql.jdbc.Driver</value><description>Driver class name for a MySQL connection</description> </property>

name: 配置项的名称。value: 配置项的值,这里是 MySQL JDBC 驱动的类名。description: 配置项的描述,说明这是用于 MySQL 连接的驱动类名。Connection User Name:

<property><name>javax.jdo.option.ConnectionUserName</name><value>hive</value><description>username to use against MySQL connection</description> </property>

name: 配置项的名称。value: 配置项的值,这里是用于连接到 MySQL 数据库的用户名(hive)。description: 配置项的描述,说明这是用于 MySQL 连接的用户名。Connection Password:

<property><name>javax.jdo.option.ConnectionPassword</name><value>hive</value><description>password to use against MySQL connection</description> </property>

name: 配置项的名称。value: 配置项的值,这里是用于连接到 MySQL 数据库的密码(hive)。description: 配置项的描述,说明这是用于 MySQL 连接的密码。

相关文章:

【已解决】【hadoop】【hive】启动不成功 报错 无法与MySQL服务器建立连接 Hive连接到MetaStore失败 无法进入交互式执行环境

启动hive显示什么才是成功 当你成功启动Hive时,通常会看到一系列的日志信息输出到控制台,这些信息包括了Hive服务初始化的过程以及它与Metastore服务连接的情况等。一旦Hive完成启动并准备就绪,你将看到提示符(如 hive> &#…...

基于架设一台NFS服务器实操作业

架设一台NFS服务器,并按照以下要求配置 首先需要关闭防火墙和SELinux 1、开放/nfs/shared目录,供所有用户查询资料 赋予所有用户只读的权限,sync将数据同步写到磁盘上 在客户端需要创建挂载点,把服务端共享的文件系统挂载到所创建…...

eachers中的树形图在点击其中某个子节点时关闭其他同级子节点

答案在代码末尾!!!!! tubiaoinit(params: any) {// 手动触发变化检测this.changeDetectorRef.detectChanges();if (this.myChart ! undefined) {this.myChart.dispose();}this.myChart echarts.init(this.pieChart?…...

Maven 介绍与核心概念解析

目录 1. pom文件解析 2. Maven坐标 3. Maven依赖范围 4. Maven 依赖传递与冲突解决 Maven,作为一个广泛应用于 Java 平台的自动化构建和依赖管理工具,其强大功能和易用性使得它在开发社区中备受青睐。本文将详细解析 Maven 的几个核心概念&a…...

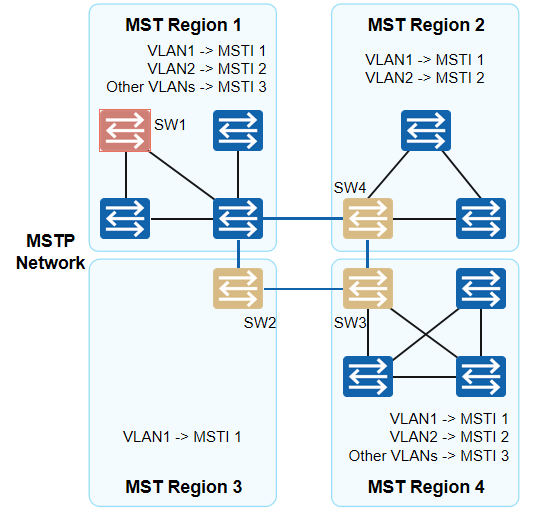

计算机网络-MSTP概述

一、RSTP/STP的缺陷与不足 前面我们学习了RSTP对于STP的一些优化与快速收敛机制。但在划分VLAN的网络中运行RSTP/STP,局域网内所有的VLAN共享一棵生成树,被阻塞后的链路将不承载任何流量,无法在VLAN间实现数据流量的负载均衡,导致…...

Redisson(三)应用场景及demo

一、基本的存储与查询 分布式环境下,为了方便多个进程之间的数据共享,可以使用RedissonClient的分布式集合类型,如List、Set、SortedSet等。 1、demo <parent><groupId>org.springframework.boot</groupId><artifact…...

1)

考研要求掌握的C语言程度(堆排序)1

含义 堆排序就是把数组的内容在心中建立为大根堆,然后每次循环把根顶和没交换过的根末进行调换,再次建立大根堆的过程 建树的几个公式 一个数组有n个元素 最后一个父亲节点是n/2-1; 假如父亲节点在数组的下标为a 那么左孩子节点在数组下标为2*a1,…...

chronyd配置了local的NTP server之后, NTP报文中出现public IP的问题

描述 客户在Rocky Linux 9.4的VM上配了一个local的NTP server(IP: 10.64.1.76)。 配置完成后, 时钟可以同步,但一段时间后客户的firewall收到告警, 拒绝了大量目标端口为123的请求, 且这些请求的目的IP并不是客户指定的NTP server的IP,客户要求解释原因…...

docker常用命令整理

文章目录 docker 常用操作命令一、镜像类操作1.构建镜像2.从容器创建镜像3.查看镜像列表4.删除镜像5. 从远程镜像仓库拉取镜像6. 将镜像推送到镜像仓库中7. 将镜像导出8. 导入镜像9. 登录镜像仓库 二、容器相关操作1. 运行容器2. 进入容器3. 查看容器的运行状态4. 查看容器的日…...

将CSDN博客转换为PDF的Python Web应用开发--Flask实战

文章目录 项目概述技术栈介绍 项目目录应用结构 功能实现单页博客转换示例: 专栏合集博客转换示例: PDF效果: 代码依赖文件requirements.txt:app.py:代码解释: /api/onepage.py:代码解释: /api/zhuanlan.py…...

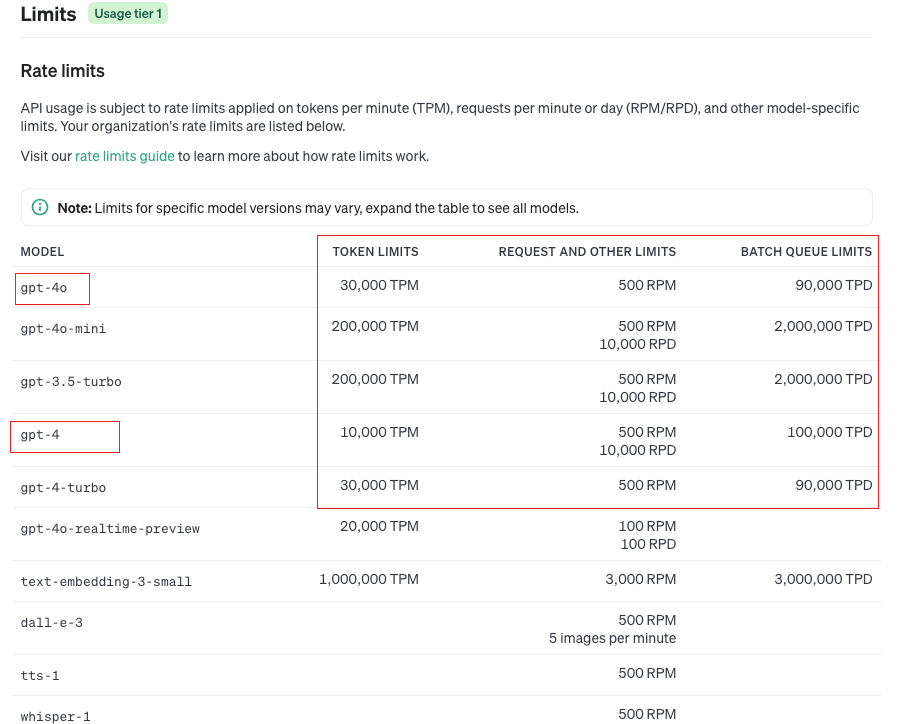

AIGC学习笔记(3)——AI大模型开发工程师

文章目录 AI大模型开发工程师002 GPT大模型开发基础1 OpenAI账户注册2 OpenAI官网介绍3 OpenAI GPT费用计算4 OpenAI Key获取与配置5 OpenAI 大模型总览6 代码演示安装依赖导入依赖初始化客户端执行代码遇到的问题 AI大模型开发工程师 002 GPT大模型开发基础 1 OpenAI账户注册…...

Windows server 2003服务器的安装

Windows server 2003服务器的安装 安装前的准备: 1.镜像SN序列号 图1-1 Windows server 2003的安装包非常人性化 2.指定一个安装位置 图1-2 选择好安装位置 3.启动虚拟机打开安装向导 图1-3 打开VMware17安装向导 图1-4 给虚拟光驱插入光盘镜像 图1-5 输入SN并…...

HTML作业

作业 复现下面的图片 复现结果 代码 <!DOCTYPE html> <html><head><meta charset"utf-8"><title></title></head><body><form action"#"method"get"enctype"text/plain"><…...

MYSQL-SQL-04-DCL(Data Control Language,数据控制语言)

DCL(数据控制语言) DCL英文全称是Data Control Language(数据控制语言),用来管理数据库用户、控制数据库的访问权限。 一、管理用户 1、查询用户 在MySQL数据库管理系统中,mysql 是一个特殊的系统数据库名称,它并不…...

多线程进阶——线程池的实现

什么是池化技术 池化技术是一种资源管理策略,它通过重复利用已存在的资源来减少资源的消耗,从而提高系统的性能和效率。在计算机编程中,池化技术通常用于管理线程、连接、数据库连接等资源。 我们会将可能使用的资源预先创建好,…...

C++网络编程之C/S模型

C网络编程之C/S模型 引言 在网络编程中,C/S(Client/Server,客户端/服务器)模型是一种最基本且广泛应用的架构模式。这种模型将应用程序分为两个部分:服务器(Server)和客户端(Clien…...

目标检测:YOLOv11(Ultralytics)环境配置,适合0基础纯小白,超详细

目录 1.前言 2. 查看电脑状况 3. 安装所需软件 3.1 Anaconda3安装 3.2 Pycharm安装 4. 安装环境 4.1 安装cuda及cudnn 4.1.1 下载及安装cuda 4.1.2 cudnn安装 4.2 创建虚拟环境 4.3 安装GPU版本 4.3.1 安装pytorch(GPU版) 4.3.2 安装ultral…...

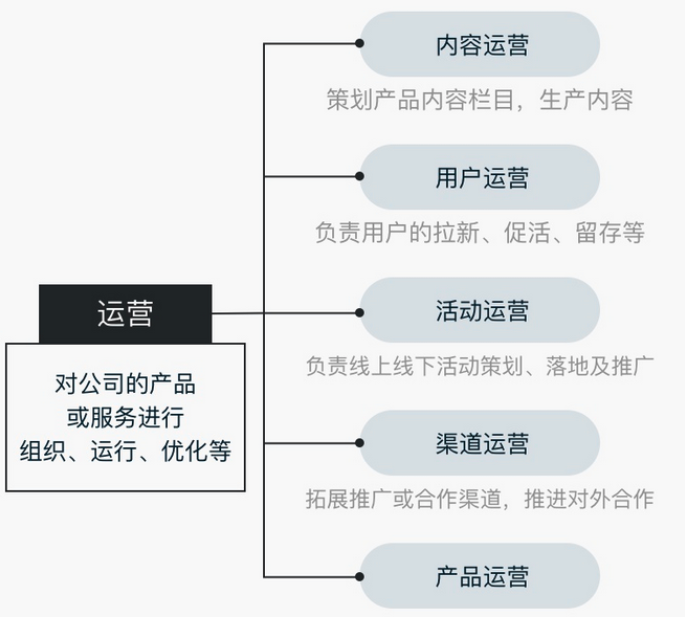

面试域——岗位职责以及工作流程

摘要 介绍互联网岗位的职责以及开发流程。在岗位职责方面,详细阐述了产品经理、前端开发工程师、后端开发工程师、测试工程师、运维工程师等的具体工作内容。产品经理负责需求收集、产品规划等;前端专注界面开发与交互;后端涉及系统架构与业…...

C#文件内容检索的功能

为了构建一个高效的文件内容检索系统,我们需要考虑更多的细节和实现策略。以下是对之前技术方案的扩展,以及一个更详细的C# demo示例,其中包含索引构建、多线程处理和文件监控的简化实现思路。 扩展后的技术方案 索引构建: 使用L…...

Redis-05 Redis发布订阅

Redis 的发布订阅(Pub/Sub)是一种消息通信模式,允许客户端订阅消息频道,以便在发布者向频道发送消息时接收消息。这种模式非常适合实现消息队列、聊天应用、实时通知等功能。 #了解即可,用的很少...

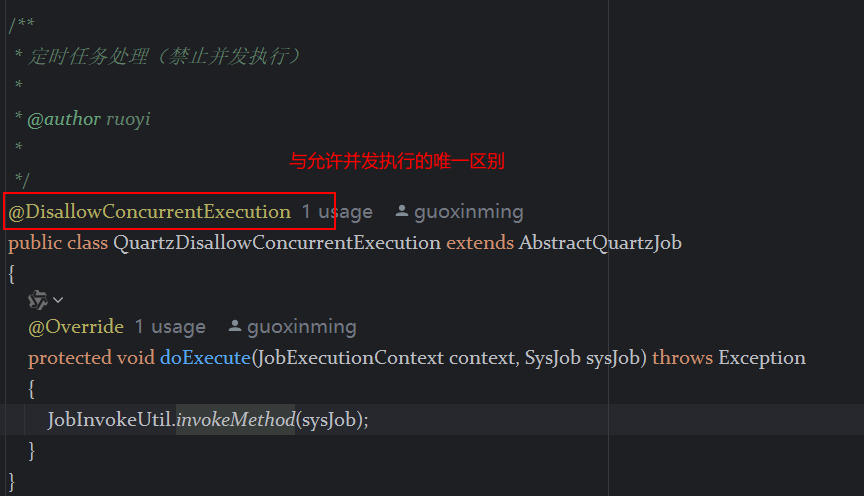

定时器任务——若依源码分析

分析util包下面的工具类schedule utils: ScheduleUtils 是若依中用于与 Quartz 框架交互的工具类,封装了定时任务的 创建、更新、暂停、删除等核心逻辑。 createScheduleJob createScheduleJob 用于将任务注册到 Quartz,先构建任务的 JobD…...

智能在线客服平台:数字化时代企业连接用户的 AI 中枢

随着互联网技术的飞速发展,消费者期望能够随时随地与企业进行交流。在线客服平台作为连接企业与客户的重要桥梁,不仅优化了客户体验,还提升了企业的服务效率和市场竞争力。本文将探讨在线客服平台的重要性、技术进展、实际应用,并…...

工业自动化时代的精准装配革新:迁移科技3D视觉系统如何重塑机器人定位装配

AI3D视觉的工业赋能者 迁移科技成立于2017年,作为行业领先的3D工业相机及视觉系统供应商,累计完成数亿元融资。其核心技术覆盖硬件设计、算法优化及软件集成,通过稳定、易用、高回报的AI3D视觉系统,为汽车、新能源、金属制造等行…...

ios苹果系统,js 滑动屏幕、锚定无效

现象:window.addEventListener监听touch无效,划不动屏幕,但是代码逻辑都有执行到。 scrollIntoView也无效。 原因:这是因为 iOS 的触摸事件处理机制和 touch-action: none 的设置有关。ios有太多得交互动作,从而会影响…...

Reasoning over Uncertain Text by Generative Large Language Models

https://ojs.aaai.org/index.php/AAAI/article/view/34674/36829https://ojs.aaai.org/index.php/AAAI/article/view/34674/36829 1. 概述 文本中的不确定性在许多语境中传达,从日常对话到特定领域的文档(例如医学文档)(Heritage 2013;Landmark、Gulbrandsen 和 Svenevei…...

智能AI电话机器人系统的识别能力现状与发展水平

一、引言 随着人工智能技术的飞速发展,AI电话机器人系统已经从简单的自动应答工具演变为具备复杂交互能力的智能助手。这类系统结合了语音识别、自然语言处理、情感计算和机器学习等多项前沿技术,在客户服务、营销推广、信息查询等领域发挥着越来越重要…...

【JVM面试篇】高频八股汇总——类加载和类加载器

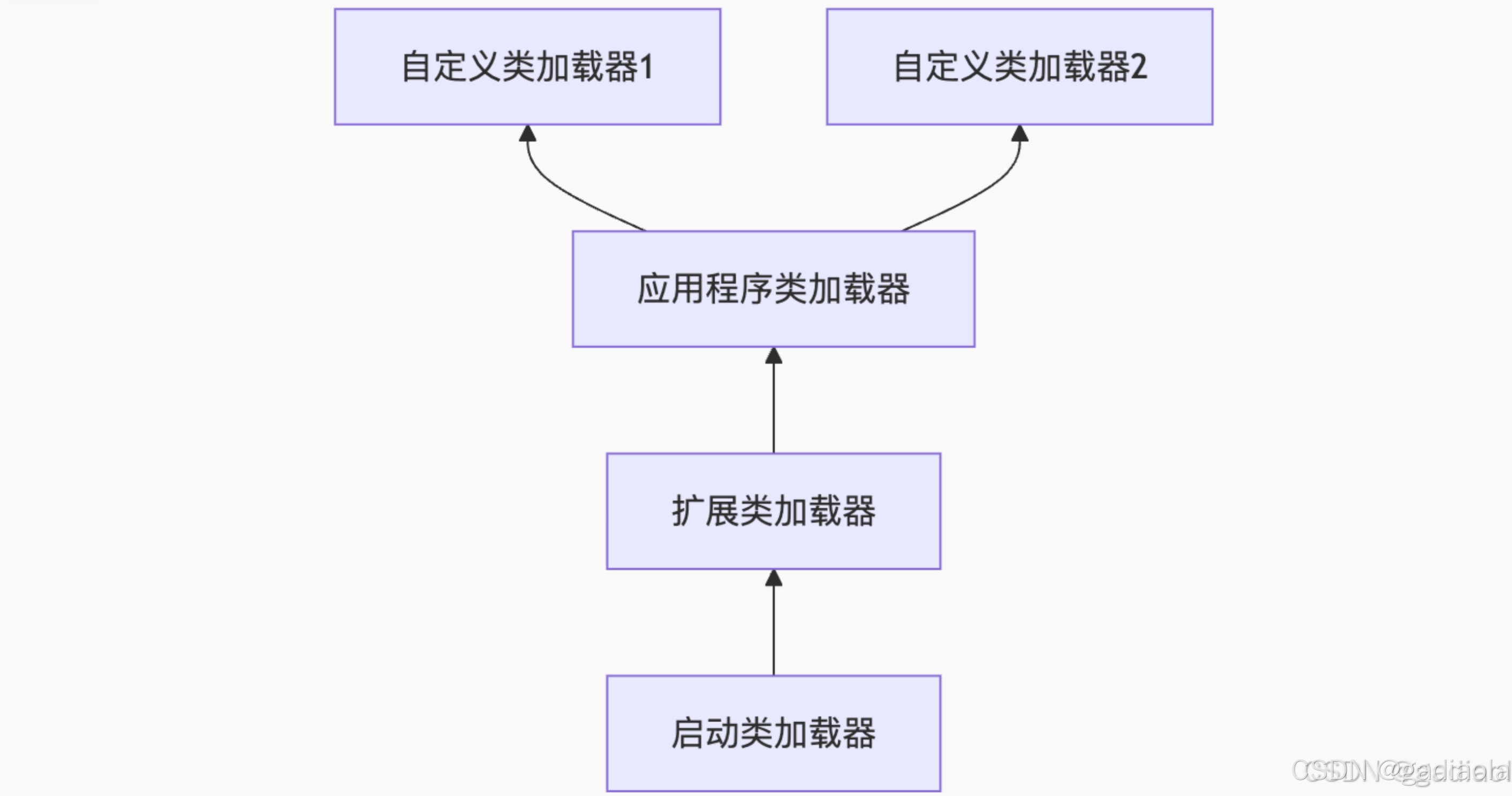

目录 1. 讲一下类加载过程? 2. Java创建对象的过程? 3. 对象的生命周期? 4. 类加载器有哪些? 5. 双亲委派模型的作用(好处)? 6. 讲一下类的加载和双亲委派原则? 7. 双亲委派模…...

宇树科技,改名了!

提到国内具身智能和机器人领域的代表企业,那宇树科技(Unitree)必须名列其榜。 最近,宇树科技的一项新变动消息在业界引发了不少关注和讨论,即: 宇树向其合作伙伴发布了一封公司名称变更函称,因…...

4. TypeScript 类型推断与类型组合

一、类型推断 (一) 什么是类型推断 TypeScript 的类型推断会根据变量、函数返回值、对象和数组的赋值和使用方式,自动确定它们的类型。 这一特性减少了显式类型注解的需要,在保持类型安全的同时简化了代码。通过分析上下文和初始值,TypeSc…...

go 里面的指针

指针 在 Go 中,指针(pointer)是一个变量的内存地址,就像 C 语言那样: a : 10 p : &a // p 是一个指向 a 的指针 fmt.Println(*p) // 输出 10,通过指针解引用• &a 表示获取变量 a 的地址 p 表示…...