第J5周:DenseNet+SE-Net实战

- 🍨 本文为🔗365天深度学习训练营 中的学习记录博客

- 🍖 原作者:K同学啊

任务:

●1. 在DenseNet系列算法中插入SE-Net通道注意力机制,并完成猴痘病识别

●2. 改进思路是否可以迁移到其他地方呢

●3. 测试集accuracy到达89%(拔高,可选)

一、介绍

可参考论文《Squeeze-and-Excitation Networks》

SE-Net 是 ImageNet 2017(ImageNet 收官赛)的冠军模型,是由WMW团队发布。具有复杂度低,参数少和计算量小的优点。且SENet 思路很简单,很容易扩展到已有网络结构如 Inception 和 ResNet 中。

已经有很多工作在空间维度上来提升网络的性能,如 Inception 等,而 SENet 将关注点放在了特征通道之间的关系上。其具体策略为:通过学习的方式来自动获取到每个特征通道的重要程度,然后依照这个重要程度去提升有用的特征并抑制对当前任务用处不大的特征,这又叫做“特征重标定”策略。具体的 SE 模块如下图所示:

二、SE 模块应用分析

SE模块的灵活性在于它可以直接应用现有的网络结构中。以 Inception 和 ResNet 为例,我们只需要在 Inception 模块或 Residual 模块后添加一个 SE 模块即可。具体如下图所示:

上图分别是将 SE 模块嵌入到 Inception 结构与 ResNet 中的示例,方框旁边的维度信息代表该层的输出,c(原文是r,不过我觉得应该是c) 表示 Excitation 操作中的降维系数。

三、SE 模型效果对比

SE 模块很容易嵌入到其它网络中,为了验证 SE 模块的作用,在其它流行网络如 ResNet 和 Inception 中引入 SE 模块,测试其在 ImageNet 上的效果,如下表所示:

首先看一下网络的深度对 SE 的影响。上表分别展示了 ResNet-50、ResNet-101、ResNet-152 和嵌入 SE 模型的结果。第一栏 Original 是原作者实现的结果,为了进行公平的比较,重新进行了实验得到 Our re-implementation 的结果。最后一栏 SE-module 是指嵌入了 SE 模块的结果,它的训练参数和第二栏 Our re-implementation 一致。括号中的红色数值是指相对于 Our re-implementation 的精度提升的幅值。

从上表可以看出,SE-ResNets 在各种深度上都远远超过了其对应的没有SE的结构版本的精度,这说明无论网络的深度如何,SE模块都能够给网络带来性能上的增益。值得一提的是,SE-ResNet-50 可以达到和ResNet-101 一样的精度;更甚,SE-ResNet-101 远远地超过了更深的ResNet-152。

上图展示了ResNet-50 和 ResNet-152 以及它们对应的嵌入SE模块的网络在ImageNet上的训练过程,可以明显地看出加入了SE模块的网络收敛到更低的错误率上。

四、SE 模块代码实现

class SELayer(nn.Module):def __init__(self,channel,reduction=16):super(SELayer,self).__init__()self.avg_pool = nn.AdaptiveAvgPool2d(1)self.fc = nn.Sequential(nn.Linear(channel,channel//reduction),nn.ReLU(inplace=True),nn.Linear(channel//reduction,channel),nn.Sigmoid())def forward(self,x):b,c,_,_ = x.size()y = self.avg_pool(x).view(b,c) # 只保留批次和通道,抛弃长宽维度y = self.fc(y).view(b,c,1,1) # 增加长宽维度(从平面变立体)return x * y五 densenet+senet

1.densenet的原模型测试

from collections import OrderedDict

import torch

import torch.nn as nn

import torch.nn.functional as F

class DenseLayer(nn.Sequential):def __init__(self, in_channel, growth_rate, bn_size, drop_rate):super(DenseLayer, self).__init__()self.add_module('norm1', nn.BatchNorm2d(in_channel))self.add_module('relu1', nn.ReLU(inplace=True))self.add_module('conv1', nn.Conv2d(in_channel, bn_size*growth_rate,kernel_size=1, stride=1, bias=False))self.add_module('norm2', nn.BatchNorm2d(bn_size*growth_rate))self.add_module('relu2', nn.ReLU(inplace=True))self.add_module('conv2', nn.Conv2d(bn_size*growth_rate, growth_rate,kernel_size=3, stride=1, padding=1, bias=False))self.drop_rate = drop_ratedef forward(self, x):new_feature = super(DenseLayer, self).forward(x)if self.drop_rate>0:new_feature = F.dropout(new_feature, p=self.drop_rate, training=self.training)return torch.cat([x, new_feature], 1)

''' DenseBlock '''

class DenseBlock(nn.Sequential):def __init__(self, num_layers, in_channel, bn_size, growth_rate, drop_rate):super(DenseBlock, self).__init__()for i in range(num_layers):layer = DenseLayer(in_channel+i*growth_rate, growth_rate, bn_size, drop_rate)self.add_module('denselayer%d'%(i+1,), layer)

''' Transition layer between two adjacent DenseBlock '''

class Transition(nn.Sequential):def __init__(self, in_channel, out_channel):super(Transition, self).__init__()self.add_module('norm', nn.BatchNorm2d(in_channel))self.add_module('relu', nn.ReLU(inplace=True))self.add_module('conv', nn.Conv2d(in_channel, out_channel,kernel_size=1, stride=1, bias=False))self.add_module('pool', nn.AvgPool2d(2, stride=2))

class DenseNet(nn.Module):def __init__(self, growth_rate=32, block_config=(6,12,24,16), init_channel=64, bn_size=4, compression_rate=0.5, drop_rate=0, num_classes=1000):''':param growth_rate: (int) number of filters used in DenseLayer, `k` in the paper:param block_config: (list of 4 ints) number of layers in eatch DenseBlock:param init_channel: (int) number of filters in the first Conv2d:param bn_size: (int) the factor using in the bottleneck layer:param compression_rate: (float) the compression rate used in Transition Layer:param drop_rate: (float) the drop rate after each DenseLayer:param num_classes: (int) 待分类的类别数'''super(DenseNet, self).__init__()# first Conv2dself.features = nn.Sequential(OrderedDict([('conv0', nn.Conv2d(3, init_channel, kernel_size=7, stride=2, padding=3, bias=False)),('norm0', nn.BatchNorm2d(init_channel)),('relu0', nn.ReLU(inplace=True)),('pool0', nn.MaxPool2d(3, stride=2, padding=1))]))# DenseBlocknum_features = init_channelfor i, num_layers in enumerate(block_config):block = DenseBlock(num_layers, num_features, bn_size, growth_rate, drop_rate)self.features.add_module('denseblock%d'%(i+1), block)num_features += num_layers*growth_rateif i != len(block_config)-1:transition = Transition(num_features, int(num_features*compression_rate))self.features.add_module('transition%d'%(i+1), transition)num_features = int(num_features*compression_rate)# final BN+ReLUself.features.add_module('norm5', nn.BatchNorm2d(num_features))self.features.add_module('relu5', nn.ReLU(inplace=True))# 分类层self.classifier = nn.Linear(num_features, num_classes)# 参数初始化for m in self.modules():if isinstance(m, nn.Conv2d):nn.init.kaiming_normal_(m.weight)elif isinstance(m, nn.BatchNorm2d):nn.init.constant_(m.bias, 0)nn.init.constant_(m.weight, 1)elif isinstance(m, nn.Linear):nn.init.constant_(m.bias, 0)def forward(self, x):x = self.features(x)x = F.avg_pool2d(x, 7, stride=1).view(x.size(0), -1)x = self.classifier(x)return xdensenet121 = DenseNet(init_channel=64,growth_rate=32,block_config=(6,12,24,16),num_classes=2) model = densenet121.to(device)

print(model)

input = torch.rand(1,3,224,224).to(device)

output = model(input)

print(output.size())

2.dense net+senet测试

class SELayer(nn.Module):def __init__(self,channel,reduction=16):super(SELayer,self).__init__()self.avg_pool = nn.AdaptiveAvgPool2d(1)self.fc = nn.Sequential(nn.Linear(channel,channel//reduction),nn.ReLU(inplace=True),nn.Linear(channel//reduction,channel),nn.Sigmoid())def forward(self,x):b,c,_,_ = x.size()y = self.avg_pool(x).view(b,c) # 只保留批次和通道,抛弃长宽维度y = self.fc(y).view(b,c,1,1) # 增加长宽维度(从平面变立体)return x * y

class DenseBlockWithSE(nn.Module):def __init__(self, num_layers, in_channel, bn_size, growth_rate, drop_rate, reduction=16):super(DenseBlockWithSE, self).__init__()self.dense_block = nn.Sequential()for i in range(num_layers):layer = DenseLayer(in_channel + i * growth_rate, growth_rate, bn_size, drop_rate)self.dense_block.add_module(f'denselayer{i+1}', layer)# 在DenseBlock后添加SE模块self.se = SELayer(in_channel + num_layers * growth_rate, reduction)def forward(self, x):# 通过DenseBlockx = self.dense_block(x)# 通过SE模块x = self.se(x)return xclass DenseNetWithSE(nn.Module):def __init__(self, growth_rate=32, block_config=(6,12,24,16), init_channel=64, bn_size=4, compression_rate=0.5, drop_rate=0, num_classes=1000, reduction=16):''':param growth_rate: (int) number of filters used in DenseLayer, `k` in the paper:param block_config: (list of 4 ints) number of layers in each DenseBlock:param init_channel: (int) number of filters in the first Conv2d:param bn_size: (int) the factor using in the bottleneck layer:param compression_rate: (float) the compression rate used in Transition Layer:param drop_rate: (float) the drop rate after each DenseLayer:param num_classes: (int) number of classes for classification'''super(DenseNetWithSE, self).__init__()# First Conv2dself.features = nn.Sequential(OrderedDict([('conv0', nn.Conv2d(3, init_channel, kernel_size=7, stride=2, padding=3, bias=False)),('norm0', nn.BatchNorm2d(init_channel)),('relu0', nn.ReLU(inplace=True)),('pool0', nn.MaxPool2d(3, stride=2, padding=1))]))num_features = init_channelfor i, num_layers in enumerate(block_config):block = DenseBlockWithSE(num_layers, num_features, bn_size, growth_rate, drop_rate, reduction)self.features.add_module(f'denseblock{i+1}', block)num_features += num_layers * growth_rateif i != len(block_config) - 1:transition = Transition(num_features, int(num_features * compression_rate))self.features.add_module(f'transition{i+1}', transition)num_features = int(num_features * compression_rate)# Final BN + ReLUself.features.add_module('norm5', nn.BatchNorm2d(num_features))self.features.add_module('relu5', nn.ReLU(inplace=True))# Classifierself.classifier = nn.Linear(num_features, num_classes)# Parameter Initializationfor m in self.modules():if isinstance(m, nn.Conv2d):nn.init.kaiming_normal_(m.weight)elif isinstance(m, nn.BatchNorm2d):nn.init.constant_(m.bias, 0)nn.init.constant_(m.weight, 1)elif isinstance(m, nn.Linear):nn.init.constant_(m.bias, 0)def forward(self, x):x = self.features(x)x = F.avg_pool2d(x, 7, stride=1).view(x.size(0), -1)x = self.classifier(x)return x

densenet121_with_se = DenseNetWithSE(init_channel=64,growth_rate=32,block_config=(6, 12, 24, 16),num_classes=2,reduction=16) model = densenet121_with_se.to(device)

print(model)

input = torch.rand(1, 3, 224, 224).to(device)

output = model(input)

print(output.size()) # 检查输出形状模块的输出:

DenseNetWithSE((features): Sequential((conv0): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)(norm0): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu0): ReLU(inplace=True)(pool0): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)(denseblock1): DenseBlockWithSE((dense_block): Sequential((denselayer1): DenseLayer((norm1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(64, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer2): DenseLayer((norm1): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(96, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer3): DenseLayer((norm1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer4): DenseLayer((norm1): BatchNorm2d(160, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(160, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer5): DenseLayer((norm1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(192, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer6): DenseLayer((norm1): BatchNorm2d(224, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(224, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)))(se): SELayer((avg_pool): AdaptiveAvgPool2d(output_size=1)(fc): Sequential((0): Linear(in_features=256, out_features=16, bias=True)(1): ReLU(inplace=True)(2): Linear(in_features=16, out_features=256, bias=True)(3): Sigmoid())))(transition1): Transition((norm): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(pool): AvgPool2d(kernel_size=2, stride=2, padding=0))(denseblock2): DenseBlockWithSE((dense_block): Sequential((denselayer1): DenseLayer((norm1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer2): DenseLayer((norm1): BatchNorm2d(160, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(160, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer3): DenseLayer((norm1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(192, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer4): DenseLayer((norm1): BatchNorm2d(224, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(224, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer5): DenseLayer((norm1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer6): DenseLayer((norm1): BatchNorm2d(288, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(288, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer7): DenseLayer((norm1): BatchNorm2d(320, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(320, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer8): DenseLayer((norm1): BatchNorm2d(352, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(352, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer9): DenseLayer((norm1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(384, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer10): DenseLayer((norm1): BatchNorm2d(416, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(416, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer11): DenseLayer((norm1): BatchNorm2d(448, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(448, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer12): DenseLayer((norm1): BatchNorm2d(480, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(480, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)))(se): SELayer((avg_pool): AdaptiveAvgPool2d(output_size=1)(fc): Sequential((0): Linear(in_features=512, out_features=32, bias=True)(1): ReLU(inplace=True)(2): Linear(in_features=32, out_features=512, bias=True)(3): Sigmoid())))(transition2): Transition((norm): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(pool): AvgPool2d(kernel_size=2, stride=2, padding=0))(denseblock3): DenseBlockWithSE((dense_block): Sequential((denselayer1): DenseLayer((norm1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer2): DenseLayer((norm1): BatchNorm2d(288, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(288, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer3): DenseLayer((norm1): BatchNorm2d(320, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(320, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer4): DenseLayer((norm1): BatchNorm2d(352, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(352, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer5): DenseLayer((norm1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(384, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer6): DenseLayer((norm1): BatchNorm2d(416, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(416, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer7): DenseLayer((norm1): BatchNorm2d(448, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(448, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer8): DenseLayer((norm1): BatchNorm2d(480, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(480, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer9): DenseLayer((norm1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer10): DenseLayer((norm1): BatchNorm2d(544, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(544, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer11): DenseLayer((norm1): BatchNorm2d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(576, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer12): DenseLayer((norm1): BatchNorm2d(608, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(608, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer13): DenseLayer((norm1): BatchNorm2d(640, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(640, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer14): DenseLayer((norm1): BatchNorm2d(672, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(672, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer15): DenseLayer((norm1): BatchNorm2d(704, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(704, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer16): DenseLayer((norm1): BatchNorm2d(736, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(736, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer17): DenseLayer((norm1): BatchNorm2d(768, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(768, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer18): DenseLayer((norm1): BatchNorm2d(800, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(800, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer19): DenseLayer((norm1): BatchNorm2d(832, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(832, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer20): DenseLayer((norm1): BatchNorm2d(864, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(864, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer21): DenseLayer((norm1): BatchNorm2d(896, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(896, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer22): DenseLayer((norm1): BatchNorm2d(928, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(928, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer23): DenseLayer((norm1): BatchNorm2d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(960, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer24): DenseLayer((norm1): BatchNorm2d(992, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(992, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)))(se): SELayer((avg_pool): AdaptiveAvgPool2d(output_size=1)(fc): Sequential((0): Linear(in_features=1024, out_features=64, bias=True)(1): ReLU(inplace=True)(2): Linear(in_features=64, out_features=1024, bias=True)(3): Sigmoid())))(transition3): Transition((norm): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(conv): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(pool): AvgPool2d(kernel_size=2, stride=2, padding=0))(denseblock4): DenseBlockWithSE((dense_block): Sequential((denselayer1): DenseLayer((norm1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer2): DenseLayer((norm1): BatchNorm2d(544, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(544, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer3): DenseLayer((norm1): BatchNorm2d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(576, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer4): DenseLayer((norm1): BatchNorm2d(608, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(608, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer5): DenseLayer((norm1): BatchNorm2d(640, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(640, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer6): DenseLayer((norm1): BatchNorm2d(672, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(672, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer7): DenseLayer((norm1): BatchNorm2d(704, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(704, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer8): DenseLayer((norm1): BatchNorm2d(736, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(736, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer9): DenseLayer((norm1): BatchNorm2d(768, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(768, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer10): DenseLayer((norm1): BatchNorm2d(800, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(800, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer11): DenseLayer((norm1): BatchNorm2d(832, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(832, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer12): DenseLayer((norm1): BatchNorm2d(864, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(864, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer13): DenseLayer((norm1): BatchNorm2d(896, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(896, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer14): DenseLayer((norm1): BatchNorm2d(928, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(928, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer15): DenseLayer((norm1): BatchNorm2d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(960, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False))(denselayer16): DenseLayer((norm1): BatchNorm2d(992, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu1): ReLU(inplace=True)(conv1): Conv2d(992, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu2): ReLU(inplace=True)(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)))(se): SELayer((avg_pool): AdaptiveAvgPool2d(output_size=1)(fc): Sequential((0): Linear(in_features=1024, out_features=64, bias=True)(1): ReLU(inplace=True)(2): Linear(in_features=64, out_features=1024, bias=True)(3): Sigmoid())))(norm5): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu5): ReLU(inplace=True))(classifier): Linear(in_features=1024, out_features=2, bias=True)

)

torch.Size([1, 2])六个人总结

由于只更改了模块。数据的处理,训练和验证,测试和原先的还是一样的,并且服务器显卡有限,这里就不做重复训练了。

SE模块通过自适应地调整通道权重来增强重要特征,从而提高网络的表现。将SE模块应用到每个DenseBlock的输出中。

步骤:

1.在DenseBlock的输出中插入SE模块:在每个DenseBlock的输出后面加上SELayer,以便通过SE模块进行通道加权。

2.调整DenseBlock:在DenseBlock的输出后面添加一个SE模块,然后再将其传递到下一个层。

相关文章:

第J5周:DenseNet+SE-Net实战

🍨 本文为🔗365天深度学习训练营 中的学习记录博客🍖 原作者:K同学啊 任务: ●1. 在DenseNet系列算法中插入SE-Net通道注意力机制,并完成猴痘病识别 ●2. 改进思路是否可以迁移到其他地方呢 ●3. 测试集acc…...

Intern大模型训练营(五):书生大模型全链路开源体系笔记

观看视频,可以比较详细地了解到书生大模型全链路开源体系。 其中有几个印象比较深的点: 这张图讲述了书生浦语大模型开源的发展史,同时与主流的llama和Chatgpt模型进行比较,可以看出在参数上,InterLM在努力追赶甚至超…...

聚观早报 | 比亚迪腾势D9登陆泰国;苹果 iOS 18.2 将发布

聚观早报每日整理最值得关注的行业重点事件,帮助大家及时了解最新行业动态,每日读报,就读聚观365资讯简报。 整理丨Cutie 11月5日消息 比亚迪腾势D9登陆泰国 苹果 iOS 18.2 将发布 真我GT7 Pro防尘防水细节 小米15 Ultra最快明年登场 …...

微信小程序开发,诗词鉴赏app,诗词搜索实现(三)

微信小程序开发,诗词鉴赏app(一): https://blog.csdn.net/jky_yihuangxing/article/details/143501681微信小程序开发,诗词鉴赏app,诗词推荐实现(二):https://blog.csdn.net/jky_yih…...

Kotlin 协程使用及其详解

Kotlin协程,好用,但是上限挺高的,我一直感觉自己就处于会用,知其然不知其所以然的地步。 做点小总结,比较浅显。后面自己再继续补充吧。 一、什么是协程? Kotlin 协程是一种轻量级的并发编程方式&#x…...

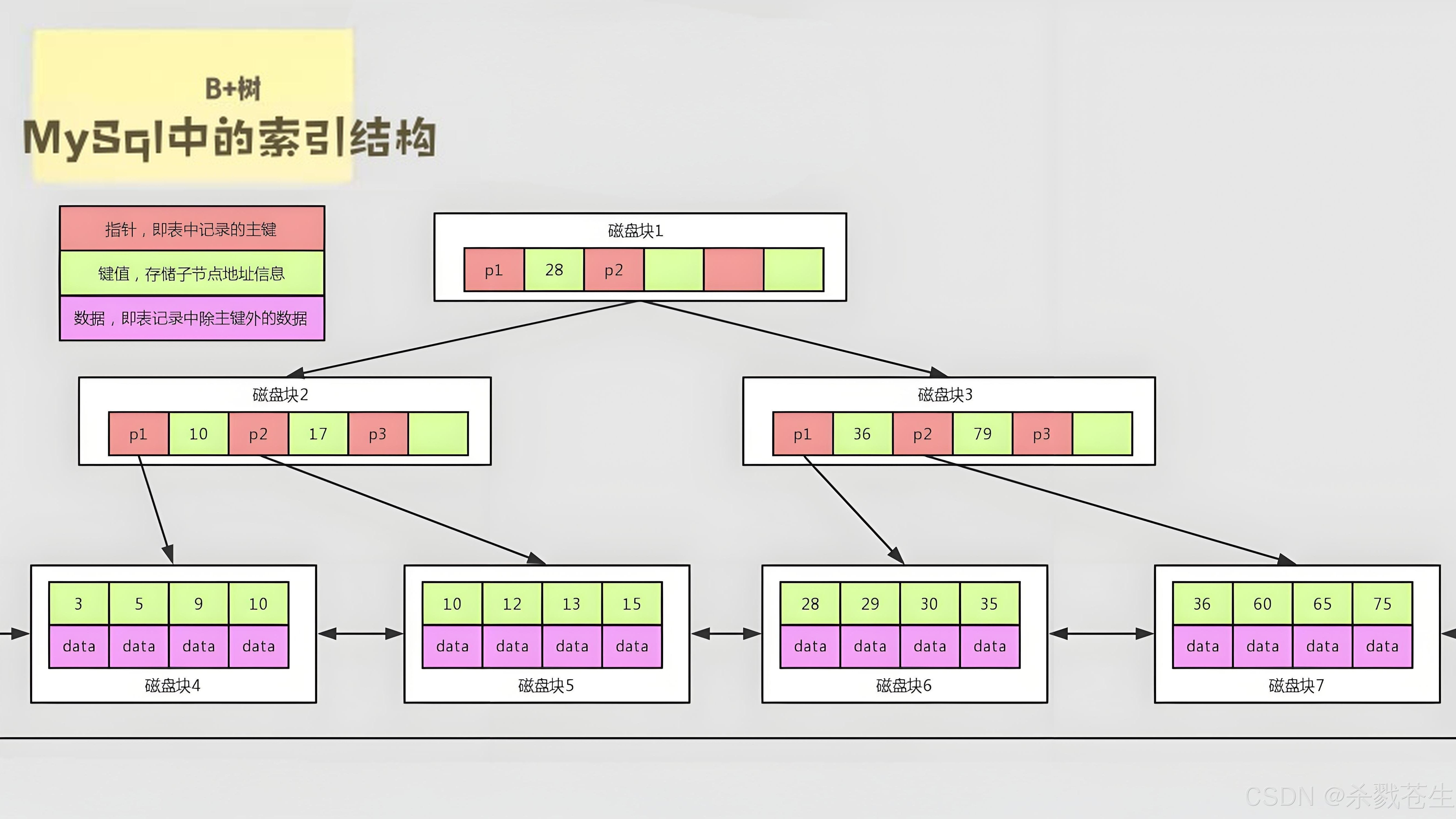

计算机组成原理--三章四章

这里写目录标题 第三章:存储系统3.1 存储系统基本概念引入存储器的层次结构简介产品 存储器的分类按层次分类按照介质分类按照存取方式分类按照信息的可更改性按照信息的可保护性 存储器的性能指标存储容量单位成本存储速度 总结 3.2主存储器的基本组成半导体元器件…...

单片机工程使用链接优化-flto找不到定义_链接静态库

IDE: CLion HOST: Windows 11 MinGW:x86_64-14.2.0-release-posix-seh-ucrt-rt_v12-rev0 GCC: arm-gnu-toolchain-13.3.rel1-mingw-w64-i686-arm-none-eabi 示例工程:https://github.com/ichliebedich-DaCapo/STM…...

UniTask/Unity的PlayerLoopTiming触发顺序

开始尝试在项目中使用UniTask,发现其中的UniTask.Yield确实很好用,还可以传入PlayerLoopTiming来更细致的调整代码时机,不过平常在Mono中接触的只有Awake,Start,Update等常用Timing,其他的就没怎么接触了&a…...

【报错记录】Steam迁移(移动)游戏报:移动以下应用的内容失败:XXX: 磁盘写入错误

前言 由于黑神话悟空,导致我的2TB的SSD系统盘快满了,我又买了一块4TB的SSD用来存放游戏,我就打算把之前C盘里的游戏移动到D盘,结果Steam移动游戏居然报错了,报的还是“磁盘写入错误”,如下图所示ÿ…...

C 语言学习-04【结构化程序设计】

1、单分支结构语句 用单分支结构进行奇偶判断: #include <stdio.h>int main() {int num;printf("Please enter an integer: ");scanf("%d", &num);if (num % 2 ! 0) {printf("%d is odd! \n", num);}if (num % 2 0) {prin…...

机器视觉:轮廓匹配算法原理

轮廓匹配的模板变量主要包括模板图像(Template)和待检测图像(Source Image) 在轮廓匹配中,模板变量主要包括一下几个关键部分: 模板图像(Template):这是进行匹配的…...

动力商城-02 环境搭建

1.父工程必须满足:1.1删除src目录 1.2pom 2.依赖继承 //里面的依赖,后代无条件继承<dependencies></dependencies>//里面的依赖,后代想要继承,得自己声明需要使用,可以不写版本号,自动继承&l…...

【react】Redux基础用法

1. Redux基础用法 Redux 是一个用于 JavaScript 应用的状态管理库,它不依赖于任何 UI库,但常用于与 React 框架配合使用。它提供了一种集中式的状态管理方式,将应用的所有状态保存在一个单一的全局 Store(存储)中&…...

使用Python分析股票价格数据并计算移动平均线的实用指南

使用Python分析股票价格数据并计算移动平均线的实用指南 在金融市场中,移动平均线(Moving Average, MA)是一种常用的技术分析工具,用于平滑价格数据,帮助投资者识别趋势。本文将详细介绍如何使用Python分析股票价格数据,并计算移动平均线。我们将通过一个实际的案例来演…...

如何解决FPS低的问题?代码优化方法有哪些?

如果你是一名游戏开发者,或者对电脑性能有所追求的用户,那么你一定遇到过帧率(FPS)低的问题。帧率低会导致游戏卡顿、画面不流畅等问题,极大地影响了用户体验。本文将从代码层面探讨FPS低的原因,并提供一些…...

QT信号和槽与自定义的信号和槽

QT信号和槽与自定义的信号和槽 1.概述 这篇文章介绍下QT信号和槽的入门知识,通过一个案例介绍如何创建信号和槽,并调用他们。 2.信号和槽使用 下面通过点击按钮关闭窗口的案例介绍如何使用信号和槽。 创建按钮 在widget.cpp文件中创建按钮代码如下 …...

LC:二分查找——杂记

文章目录 268. 丢失的数字162. 寻找峰值 268. 丢失的数字 LC将此题归类为二分查找,并且为简单题,下面记一下自己对这道题目的思考。 题目链接:268.丢失的数字 第一次看到这个题目,虽然标注的为简单,但肯定不能直接排…...

GA/T1400视图库平台EasyCVR多品牌摄像机视频平台前端监控摄像头镜头的基础知识

在现代安全监控系统中,摄像机镜头作为捕捉图像的关键组件,其选择和应用直接影响到监控图像的质量和系统的整体性能。随着技术的发展,摄像机镜头的种类和功能也在不断扩展,以适应各种复杂的监控环境和需求。对于相机成像来讲&#…...

【C++】踏上C++的学习之旅(六):深入“类和对象“世界,掌握编程的黄金法则(一)

文章目录 前言1. "面向过程"和"面向对象"的碰撞1.1 面向过程1.2 面向对象 2. "类"的引入3. "类"的定义3.1 🍉语法展示:3.2 "类"的两种定义方式3.3 "类"的命名规则 4. 类的访问限定符以及封…...

【物联网技术】ESP8266 WIFI模块在STA模式下作为TCP客户端上电自动进入透传数据模式

前言:讲解如何在ESP8266 WIFI模块在STA模式下作为TCP客户端与网络调试助手(TCP服务器)上电自动进入透传数据模式,而不需重新再发指令配置进入透传模式。 演示视频: ESP8266 WIFI模块在STA模式下作为TCP客户端上电自动进入透传数据模式 wifi模块在STA模式下作为TCP客户端相…...

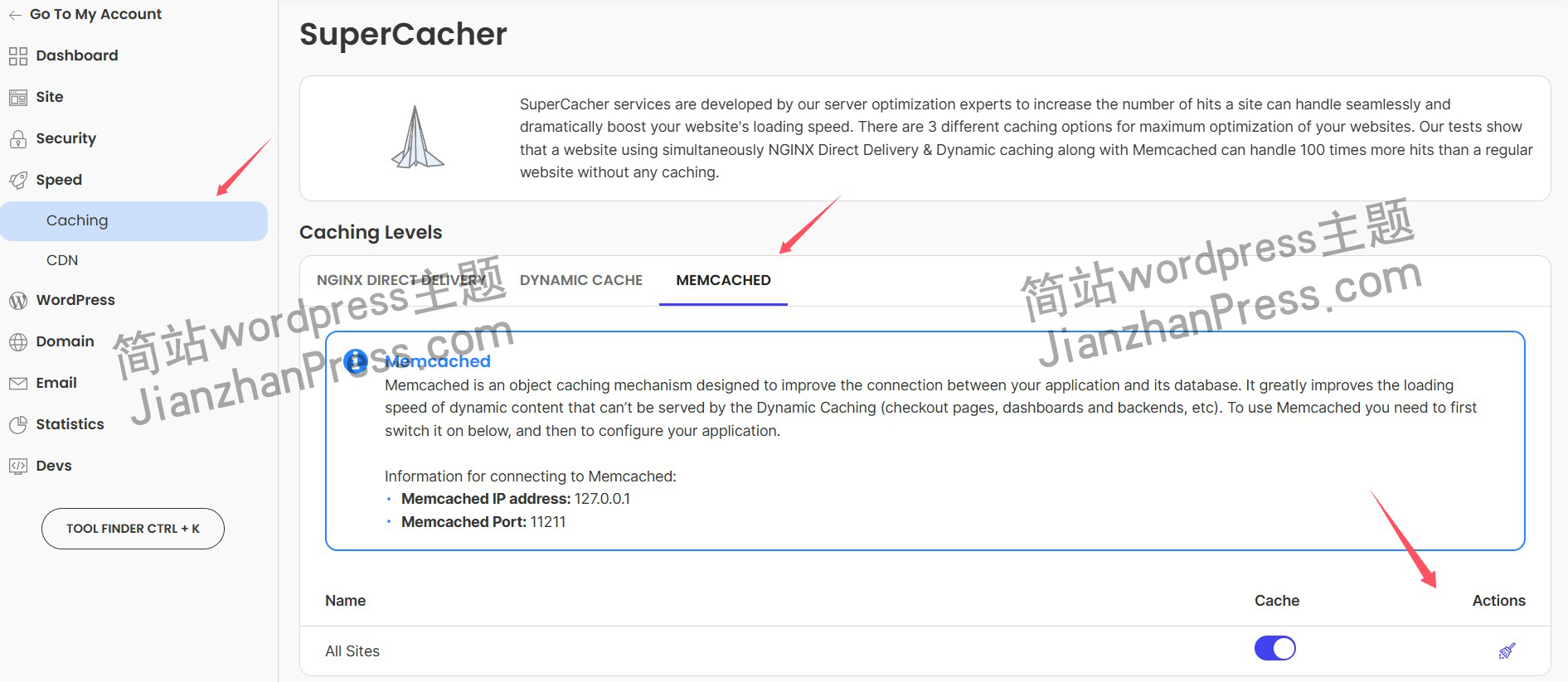

wordpress后台更新后 前端没变化的解决方法

使用siteground主机的wordpress网站,会出现更新了网站内容和修改了php模板文件、js文件、css文件、图片文件后,网站没有变化的情况。 不熟悉siteground主机的新手,遇到这个问题,就很抓狂,明明是哪都没操作错误&#x…...

【大模型RAG】拍照搜题技术架构速览:三层管道、两级检索、兜底大模型

摘要 拍照搜题系统采用“三层管道(多模态 OCR → 语义检索 → 答案渲染)、两级检索(倒排 BM25 向量 HNSW)并以大语言模型兜底”的整体框架: 多模态 OCR 层 将题目图片经过超分、去噪、倾斜校正后,分别用…...

Vue记事本应用实现教程

文章目录 1. 项目介绍2. 开发环境准备3. 设计应用界面4. 创建Vue实例和数据模型5. 实现记事本功能5.1 添加新记事项5.2 删除记事项5.3 清空所有记事 6. 添加样式7. 功能扩展:显示创建时间8. 功能扩展:记事项搜索9. 完整代码10. Vue知识点解析10.1 数据绑…...

逻辑回归:给不确定性划界的分类大师

想象你是一名医生。面对患者的检查报告(肿瘤大小、血液指标),你需要做出一个**决定性判断**:恶性还是良性?这种“非黑即白”的抉择,正是**逻辑回归(Logistic Regression)** 的战场&a…...

Java 8 Stream API 入门到实践详解

一、告别 for 循环! 传统痛点: Java 8 之前,集合操作离不开冗长的 for 循环和匿名类。例如,过滤列表中的偶数: List<Integer> list Arrays.asList(1, 2, 3, 4, 5); List<Integer> evens new ArrayList…...

DBAPI如何优雅的获取单条数据

API如何优雅的获取单条数据 案例一 对于查询类API,查询的是单条数据,比如根据主键ID查询用户信息,sql如下: select id, name, age from user where id #{id}API默认返回的数据格式是多条的,如下: {&qu…...

ElasticSearch搜索引擎之倒排索引及其底层算法

文章目录 一、搜索引擎1、什么是搜索引擎?2、搜索引擎的分类3、常用的搜索引擎4、搜索引擎的特点二、倒排索引1、简介2、为什么倒排索引不用B+树1.创建时间长,文件大。2.其次,树深,IO次数可怕。3.索引可能会失效。4.精准度差。三. 倒排索引四、算法1、Term Index的算法2、 …...

重启Eureka集群中的节点,对已经注册的服务有什么影响

先看答案,如果正确地操作,重启Eureka集群中的节点,对已经注册的服务影响非常小,甚至可以做到无感知。 但如果操作不当,可能会引发短暂的服务发现问题。 下面我们从Eureka的核心工作原理来详细分析这个问题。 Eureka的…...

论文笔记——相干体技术在裂缝预测中的应用研究

目录 相关地震知识补充地震数据的认识地震几何属性 相干体算法定义基本原理第一代相干体技术:基于互相关的相干体技术(Correlation)第二代相干体技术:基于相似的相干体技术(Semblance)基于多道相似的相干体…...

在Ubuntu24上采用Wine打开SourceInsight

1. 安装wine sudo apt install wine 2. 安装32位库支持,SourceInsight是32位程序 sudo dpkg --add-architecture i386 sudo apt update sudo apt install wine32:i386 3. 验证安装 wine --version 4. 安装必要的字体和库(解决显示问题) sudo apt install fonts-wqy…...