An Analysis of the Implementation, Effect, and Role of PConv in FasterNet

Published: March 7, 2023

Paper: https://arxiv.org/abs/2303.03667

Code: https://github.com/JierunChen/FasterNet

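According to the paper, FasterNet-T0 is 2.8×, 3.3×, and 2.4× faster than MobileViT-XXS on GPU, CPU, and ARM processors, respectively, while being 2.9% more accurate. The large FasterNet-L reaches an impressive 83.5% top-1 accuracy, on par with the emerging Swin-B, while delivering 36% higher inference throughput on GPU and saving 37% compute time on CPU. The FasterNet authors attribute this mainly to the PConv module. PConv reduces FLOPs by cutting redundant computation (like GhostNet, it assumes that convolutional feature maps are highly redundant), and it also reduces memory access (MAC), because most input channels pass straight through to the output. As a result, FasterNet achieves high throughput (FPS) on GPU and the lowest latency on CPU and ARM devices. This post therefore takes a closer look at the design and implementation of PConv.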

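1. Paper Overview

1.1 Module Design

Compared with regular and group convolution, PConv applies a dense (regular) convolution to only a small fraction of the input channels and passes the remaining channels straight through to the output. This sharply reduces computation: for example, if the input channels are split into 4 parts and only one part is convolved (the other 3 go straight to the next layer), the FLOPs drop to (1/4)² = 1/16 of a regular conv. It also reduces memory access: with C_in = 400 and only a quarter of the channels convolved, the feature-map memory access is 100hw (read) + 100hw (write) = 200hw, which is 1/4 of the 800hw a regular conv needs.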
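To check these ratios numerically, here is a small cost calculator. It is my own sketch, not code from the FasterNet repo: the 56×56 feature map size is an assumption (the text above gives no h, w), FLOPs are counted as multiply-adds, and memory access is split into the feature-map term (the paper's h×w×2c_p) and the weight term.

```python
def conv_cost(h, w, c_in, c_out, k=3):
    """Return (FLOPs, feature-map access, weight access) of a dense k x k conv.
    FLOPs are multiply-adds, i.e. h*w*k^2*c_in*c_out."""
    flops = h * w * k * k * c_in * c_out
    act_mac = h * w * (c_in + c_out)   # read the input map + write the output map
    weight_mac = k * k * c_in * c_out  # read the kernel once
    return flops, act_mac, weight_mac


def pconv_cost(h, w, c, r=0.25, k=3):
    """PConv runs a dense conv on only c_p = r * c channels; the rest pass through."""
    c_p = int(c * r)
    return conv_cost(h, w, c_p, c_p, k)


h = w = 56      # assumed feature map size
c = 400         # the C_in = 400 example from the text
conv = conv_cost(h, w, c, c)
pconv = pconv_cost(h, w, c)

flops_ratio = pconv[0] / conv[0]
act_ratio = pconv[1] / conv[1]
total_ratio = (pconv[1] + pconv[2]) / (conv[1] + conv[2])
print(f"FLOPs ratio:                {flops_ratio:.4f}")   # 1/16
print(f"feature-map access ratio:   {act_ratio:.4f}")     # 1/4
print(f"access ratio incl. weights: {total_ratio:.4f}")
```

The weight term is why the total ratio lands between 1/16 and 1/4 here: the 1/4 figure holds for the feature-map traffic, which dominates only when h×w is large relative to k²c.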

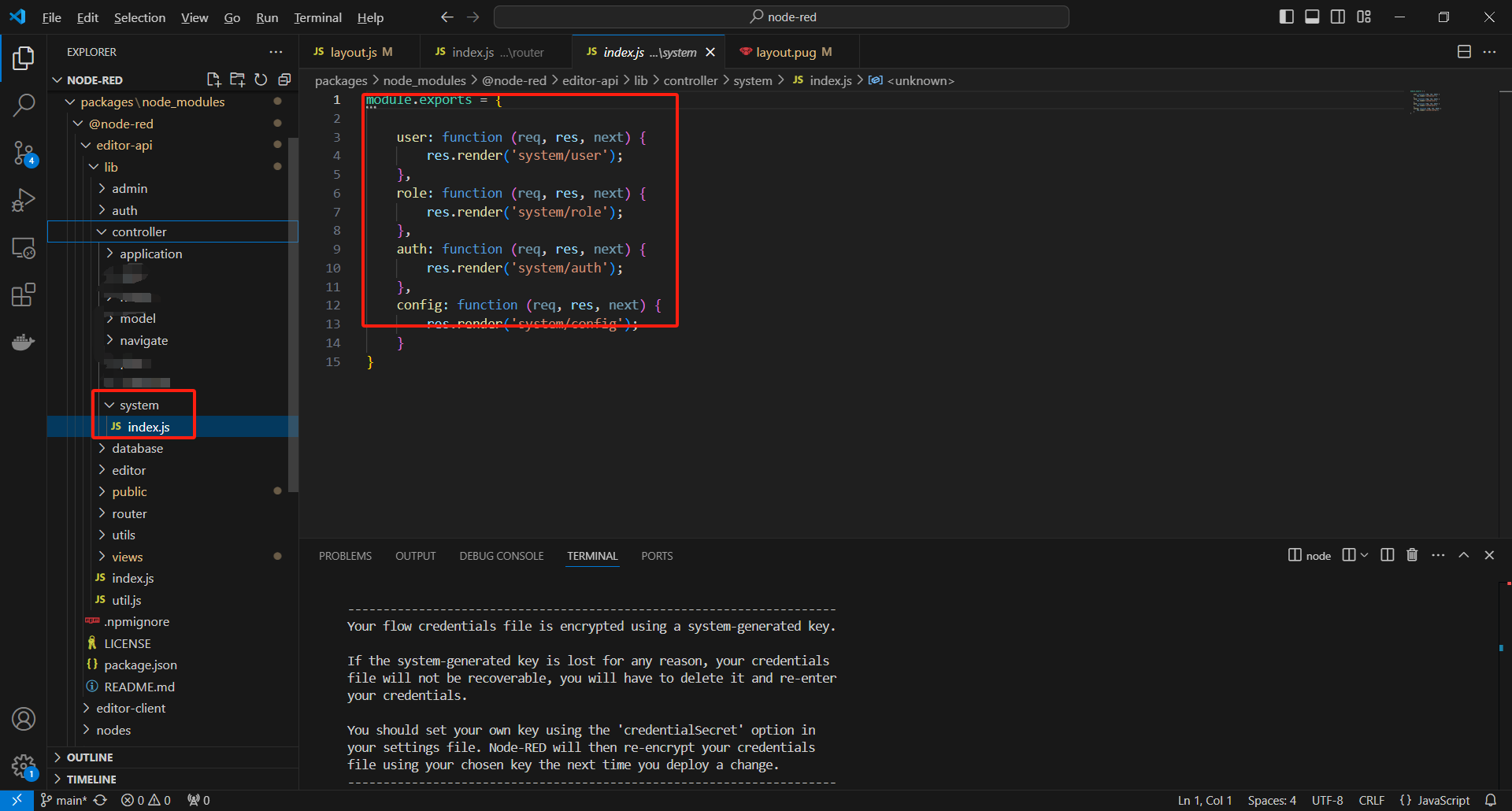

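The corresponding PConv code is shown below. It is simply split → conv → cat; the repo also provides an equivalent in-place `slicing` variant intended for inference.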

```python
import torch
import torch.nn as nn
from torch import Tensor


class Partial_conv3(nn.Module):
    def __init__(self, dim, n_div, forward):
        super().__init__()
        self.dim_conv3 = dim // n_div               # channels that get the 3x3 conv
        self.dim_untouched = dim - self.dim_conv3   # channels passed straight through
        self.partial_conv3 = nn.Conv2d(self.dim_conv3, self.dim_conv3, 3, 1, 1, bias=False)

        if forward == 'slicing':
            self.forward = self.forward_slicing
        elif forward == 'split_cat':
            self.forward = self.forward_split_cat
        else:
            raise NotImplementedError

    def forward_slicing(self, x: Tensor) -> Tensor:
        # only for inference
        x = x.clone()   # !!! Keep the original input intact for the residual connection later
        x[:, :self.dim_conv3, :, :] = self.partial_conv3(x[:, :self.dim_conv3, :, :])
        return x

    def forward_split_cat(self, x: Tensor) -> Tensor:
        # for training/inference
        x1, x2 = torch.split(x, [self.dim_conv3, self.dim_untouched], dim=1)
        x1 = self.partial_conv3(x1)
        x = torch.cat((x1, x2), 1)
        return x
```

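The paper also discusses combining PConv with PWConv, i.e., the "T-shaped Conv", but at the code level this has nothing to do with PConv itself: the FasterNet Block simply places Conv1x1 layers after PConv, and those Conv1x1 layers provide the cross-channel information exchange.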
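The "T-shaped" description can be made concrete. PConv followed by a 1×1 PWConv is still a linear operator, so it can be folded into one 3×3 kernel: for the c_p convolved input channels the kernel keeps its full 3×3 footprint, while for the untouched channels only the center tap is non-zero. Below is a sketch of that folding; `merge_pconv_pwconv` is my own hypothetical helper (not part of the FasterNet repo), the sizes are arbitrary, and float64 is used so the comparison only differs by rounding.

```python
import torch
import torch.nn.functional as F


def merge_pconv_pwconv(w3: torch.Tensor, pw: torch.Tensor) -> torch.Tensor:
    """Fold PConv (3x3 conv on the first c_p channels, identity on the rest) followed by
    a 1x1 PWConv into one equivalent 3x3 kernel of shape (c_out, c, 3, 3).

    w3: PConv weight, shape (c_p, c_p, 3, 3); pw: PWConv weight, shape (c_out, c, 1, 1).
    """
    c_p = w3.shape[0]
    p = pw[:, :, 0, 0]                                        # (c_out, c)
    k = torch.zeros(p.shape[0], p.shape[1], 3, 3, dtype=w3.dtype)
    # Convolved channels: the 1x1 mixing composed with the 3x3 kernel (full 3x3 support).
    k[:, :c_p] = torch.einsum("oj,jikl->oikl", p[:, :c_p], w3)
    # Untouched channels: identity then 1x1 mixing, so only the center tap is non-zero.
    k[:, c_p:, 1, 1] = p[:, c_p:]
    return k


torch.manual_seed(0)
c, c_p, c_out = 8, 2, 16
x = torch.randn(1, c, 10, 10, dtype=torch.float64)
w3 = torch.randn(c_p, c_p, 3, 3, dtype=torch.float64)
pw = torch.randn(c_out, c, 1, 1, dtype=torch.float64)

# Two-step reference: PConv (split -> conv -> cat), then PWConv.
y = torch.cat([F.conv2d(x[:, :c_p], w3, padding=1), x[:, c_p:]], dim=1)
ref = F.conv2d(y, pw)

# One 3x3 conv with the merged, T-shaped kernel.
merged = merge_pconv_pwconv(w3, pw)
out = F.conv2d(x, merged, padding=1)
print(torch.allclose(ref, out))  # True
```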

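1.2 Model Architecture

In the FasterNet architecture, PConv is only a small part. The authors combine PConv with Conv1x1 + BN + ReLU + Conv1x1 and a residual connection to form the FasterNet Block, and the FasterNet Block is the main component of the model. The model also borrows several ideas from ViT-style design (e.g., PatchEmbed and the MLP), but it contains no Transformer module.

PatchEmbed is the model's input layer, and the MLP is simply the Conv1x1 + BN + ReLU + Conv1x1 that follows PConv.

The model comes in several variants (t0, t1, t2, s, m, l). In all of them, the input is downsampled by 4× right after the Embedding layer; each subsequent Stage (a stack of FasterNet Blocks) only extracts features without changing the resolution; and the Merging layers between stages (a 2×2 conv with stride 2, plus BN) downsample the features.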
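The resulting feature map sizes can be traced with a few lines. This sketch assumes the FasterNet constructor defaults shown later (embed_dim=96, patch_stride=4, patch_stride2=2, four stages) and a 224×224 input; each PatchMerging halves the resolution and doubles the channels.

```python
def stage_shapes(img_size=224, embed_dim=96, num_stages=4, patch_stride=4, merge_stride=2):
    """Trace (channels, height, width) at the output of each FasterNet stage."""
    c, s = embed_dim, img_size // patch_stride   # PatchEmbed: 4x downsampling
    shapes = [(c, s, s)]
    for _ in range(num_stages - 1):              # PatchMerging between stages
        c, s = c * 2, s // merge_stride          # channels x2, resolution /2
        shapes.append((c, s, s))
    return shapes


print(stage_shapes())  # [(96, 56, 56), (192, 28, 28), (384, 14, 14), (768, 7, 7)]
```

The last stage's 768 channels match `num_features = embed_dim * 2 ** (num_stages - 1)` in the model code and the (1280, 768, 1, 1) head weight in the FLOPs tables below.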

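1.3 Structure Comparison

Module performance comparison: the paper compares Conv, group convolution (GConv), depthwise separable convolution (DWConv), and PConv. From one feature map size to the next, the number of elements halves (e.g., 192×28×28 has half as many elements as 96×56×56), yet only DWConv's FLOPs halve accordingly; the FLOPs of the other operators stay the same. Judging by FPS and latency, DWConv is the most cost-effective structure and PConv comes second; only on ARM (Cortex-A72, single thread) does PConv beat DWConv.

Note 1: With r = 1/4, PConv has the same FLOPs as a group convolution with 16 groups, but their memory access differs.

Note 2: DWConv here means depthwise separable convolution: a depthwise convolution (kernel size 3, number of groups equal to the number of channels, mixing only spatial information) followed by a pointwise convolution (kernel size 1, mixing channel information).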
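The FLOPs trend above follows directly from the formulas. A quick sketch, assuming the four FasterNet feature map sizes (96×56×56 through 768×7×7), a 3×3 kernel, and 16 groups for GConv as in Note 1; it only counts FLOPs and feature-map access and says nothing about measured FPS or latency.

```python
def conv3x3_flops(s, c, groups=1, k=3):
    """Multiply-adds of a k x k conv on an s x s map with c in/out channels in `groups` groups."""
    return s * s * k * k * c * c // groups


# (channels, spatial size) of the four FasterNet stages
stages = [(96, 56), (192, 28), (384, 14), (768, 7)]
rows = []
for c, s in stages:
    rows.append({
        "shape": f"{c}x{s}x{s}",
        "conv": conv3x3_flops(s, c),
        "gconv16": conv3x3_flops(s, c, groups=16),
        "dwconv": conv3x3_flops(s, c, groups=c),   # depthwise 3x3 part only
        "pconv": conv3x3_flops(s, c // 4),         # r = 1/4: dense conv on c/4 channels
        # feature-map access (read + write), weights ignored
        "gconv16_act": s * s * 2 * c,
        "pconv_act": s * s * 2 * (c // 4),
    })

for r in rows:
    print(f"{r['shape']:>10}: conv={r['conv'] / 1e6:7.2f}M  gconv16={r['gconv16'] / 1e6:6.2f}M  "
          f"dwconv={r['dwconv'] / 1e6:5.2f}M  pconv={r['pconv'] / 1e6:6.2f}M")
```

Conv, GConv, and PConv stay constant because c doubles while s×s shrinks by 4×; PConv and the 16-group GConv have identical FLOPs, but GConv touches 4× more feature-map memory. The pointwise half of a depthwise separable conv would add s×s×c² multiply-adds, which, like Conv, stays constant across stages; the halving applies to the depthwise part.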

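The authors also fit the output of a regular Conv with each of these operators and found that PConv reaches the lowest loss. Since neither GConv nor PConv provides global cross-channel interaction, each needs a PWConv after it; for a fair comparison, DWConv is also paired with a PWConv. The loss values differ by only 0.001–0.002, so in practice there is no real difference; for scale, compare the output discrepancies that appear when fusing branches in ddb_conv or RepConv.

Memory access cost comparison: Eq. (2) gives PConv's memory access and Eq. (3) gives Conv's. Because c_p is 1/4 of c, PConv's memory access is 1/4 of Conv's. The factor 2c assumes the input and output both have c channels; otherwise the term is (c_in + c_out).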
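Written out with the paper's symbols (an h×w feature map, a k×k kernel, c channels, and c_p = r·c convolved channels), the costs behind this comparison are as follows. The "≈" drops the weight term, which is only safe when the feature map is large relative to the kernel and channel count (2hw ≫ k²c):

$$
\begin{aligned}
\mathrm{FLOPs}_{\mathrm{PConv}} &= h \times w \times k^2 \times c_p^2, &\qquad \mathrm{FLOPs}_{\mathrm{Conv}} &= h \times w \times k^2 \times c^2,\\
\mathrm{MAC}_{\mathrm{PConv}} &= h \times w \times 2c_p + k^2 \times c_p^2 \approx h \times w \times 2c_p, &\qquad \mathrm{MAC}_{\mathrm{Conv}} &= h \times w \times 2c + k^2 \times c^2 \approx h \times w \times 2c,\\
\frac{\mathrm{FLOPs}_{\mathrm{PConv}}}{\mathrm{FLOPs}_{\mathrm{Conv}}} &= r^2 = \tfrac{1}{16}, &\qquad \frac{\mathrm{MAC}_{\mathrm{PConv}}}{\mathrm{MAC}_{\mathrm{Conv}}} &\approx r = \tfrac{1}{4}.
\end{aligned}
$$

With different input and output widths, the feature-map term becomes h × w × (c_in + c_out) and the weight term k² × c_in × c_out.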

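1.4 Model Results

In the paper's macro comparison, FasterNet achieves the highest FPS on GPU and the lowest latency on CPU and ARM.

The paper's charts also show FasterNet achieving the best performance among both lightweight and large models.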

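2. Code Implementation and Analysis

2.1 PConv Code

A simplified version of the PConv code is shown below. It is just a split-conv-merge over the channel dimension (written here with in-place slicing instead of split + cat). In 2023 I tried something similar myself (replacing all convs with PConv) and did not get good training results.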

```python
import torch.nn as nn
from torch import Tensor


class Partial_conv3(nn.Module):
    def __init__(self, dim, n_div, forward):   # `forward` is unused in this simplified version
        super().__init__()
        self.dim_conv3 = dim // n_div
        self.dim_untouched = dim - self.dim_conv3
        self.partial_conv3 = nn.Conv2d(self.dim_conv3, self.dim_conv3, 3, 1, 1, bias=False)

    def forward(self, x: Tensor) -> Tensor:
        # only for inference
        x = x.clone()   # !!! Keep the original input intact for the residual connection later
        x[:, :self.dim_conv3, :, :] = self.partial_conv3(x[:, :self.dim_conv3, :, :])
        return x
```
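Why does the slicing version clone first? A small check (my own sketch, with a random 3×3 conv on 2 of 8 channels; the sizes are arbitrary): the slicing and split_cat paths give the same result, and skipping `clone()` would overwrite the tensor that the residual connection still needs.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
dim, dim_conv = 8, 2
conv = nn.Conv2d(dim_conv, dim_conv, 3, 1, 1, bias=False)
x = torch.randn(1, dim, 5, 5)

with torch.no_grad():
    # split_cat path (training/inference)
    x1, x2 = torch.split(x, [dim_conv, dim - dim_conv], dim=1)
    y_split = torch.cat((conv(x1), x2), dim=1)

    # slicing path (inference): clone first, then overwrite the first channels
    y_slice = x.clone()
    y_slice[:, :dim_conv] = conv(y_slice[:, :dim_conv])

    # without clone: the caller's tensor (the shortcut) is modified in place
    shortcut = x.clone()
    x_no_clone = shortcut            # same storage, as if forward received `shortcut`
    x_no_clone[:, :dim_conv] = conv(x_no_clone[:, :dim_conv])

print(torch.allclose(y_split, y_slice))   # True
print(torch.equal(shortcut, x))           # False: the shortcut was overwritten
```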

2.2 Faster Block Code

The spatial_mixing attribute is the PConv layer.

The mlp attribute holds the non-PConv layers of the Faster Block.

The forward code is as follows:

```python
def forward(self, x: Tensor) -> Tensor:
    shortcut = x
    x = self.spatial_mixing(x)                    # PConv
    x = shortcut + self.drop_path(self.mlp(x))    # Conv1x1 + BN + ReLU + Conv1x1, plus residual
    return x
```

The complete implementation is as follows:

```python
class MLPBlock(nn.Module):

    def __init__(self, dim, n_div, mlp_ratio, drop_path, layer_scale_init_value,
                 act_layer, norm_layer, pconv_fw_type):
        super().__init__()
        self.dim = dim
        self.mlp_ratio = mlp_ratio
        self.drop_path = DropPath(drop_path) if drop_path > 0. else nn.Identity()
        self.n_div = n_div

        mlp_hidden_dim = int(dim * mlp_ratio)
        mlp_layer: List[nn.Module] = [
            nn.Conv2d(dim, mlp_hidden_dim, 1, bias=False),
            norm_layer(mlp_hidden_dim),
            act_layer(),
            nn.Conv2d(mlp_hidden_dim, dim, 1, bias=False)
        ]
        self.mlp = nn.Sequential(*mlp_layer)

        self.spatial_mixing = Partial_conv3(dim, n_div, pconv_fw_type)

        if layer_scale_init_value > 0:
            self.layer_scale = nn.Parameter(layer_scale_init_value * torch.ones((dim)), requires_grad=True)
            self.forward = self.forward_layer_scale
        else:
            self.forward = self.forward

    def forward(self, x: Tensor) -> Tensor:
        shortcut = x
        x = self.spatial_mixing(x)
        x = shortcut + self.drop_path(self.mlp(x))
        return x

    def forward_layer_scale(self, x: Tensor) -> Tensor:
        shortcut = x
        x = self.spatial_mixing(x)
        x = shortcut + self.drop_path(
            self.layer_scale.unsqueeze(-1).unsqueeze(-1) * self.mlp(x))
        return x
```

There is also a BasicStage class, which mainly stacks multiple MLPBlocks (i.e., Faster Blocks).

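2.3 PatchEmbed and PatchMerging

PatchEmbed is similar to patchification in ViT models: it cuts the image into non-overlapping patches and moves spatial information into the channel dimension.

PatchMerging uses a strided conv to reduce the feature map resolution while doubling the number of channels.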
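A sketch showing that the PatchEmbed projection really is "cut into patches + one linear layer": a conv with kernel_size = stride = 4 gives the same result as `torch.nn.functional.unfold` followed by a matrix multiply with the reshaped conv weight. The 32×32 input and batch size 2 are arbitrary choices for illustration.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
in_chans, embed_dim, p = 3, 96, 4
x = torch.randn(2, in_chans, 32, 32)

# PatchEmbed-style projection: kernel_size == stride == patch size, so patches do not overlap.
proj = nn.Conv2d(in_chans, embed_dim, kernel_size=p, stride=p, bias=False)
with torch.no_grad():
    y_conv = proj(x)                                       # (2, 96, 8, 8)

    # The same computation as "cut into patches, flatten, apply a linear layer".
    patches = F.unfold(x, kernel_size=p, stride=p)         # (2, 3*4*4, 64)
    w = proj.weight.reshape(embed_dim, -1)                 # (96, 48)
    y_linear = (w @ patches).reshape(2, embed_dim, 32 // p, 32 // p)

print(y_conv.shape, torch.allclose(y_conv, y_linear, atol=1e-4))
```

PatchMerging's 2×2, stride-2 conv can be read the same way, with 2×2 patches and an output width of 2×dim.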

```python
class PatchEmbed(nn.Module):

    def __init__(self, patch_size, patch_stride, in_chans, embed_dim, norm_layer):
        super().__init__()
        self.proj = nn.Conv2d(in_chans, embed_dim, kernel_size=patch_size, stride=patch_stride, bias=False)
        if norm_layer is not None:
            self.norm = norm_layer(embed_dim)
        else:
            self.norm = nn.Identity()

    def forward(self, x: Tensor) -> Tensor:
        x = self.norm(self.proj(x))
        return x


class PatchMerging(nn.Module):

    def __init__(self, patch_size2, patch_stride2, dim, norm_layer):
        super().__init__()
        self.reduction = nn.Conv2d(dim, 2 * dim, kernel_size=patch_size2, stride=patch_stride2, bias=False)
        if norm_layer is not None:
            self.norm = norm_layer(2 * dim)
        else:
            self.norm = nn.Identity()

    def forward(self, x: Tensor) -> Tensor:
        x = self.norm(self.reduction(x))
        return x
```

2.4 Model Code

```python
# Imports and the other classes: see the full code in Section 2.5.
class FasterNet(nn.Module):

    def __init__(self,
                 in_chans=3,
                 num_classes=1000,
                 embed_dim=96,
                 depths=(1, 2, 8, 2),
                 mlp_ratio=2.,
                 n_div=4,
                 patch_size=4,
                 patch_stride=4,
                 patch_size2=2,  # for subsequent layers
                 patch_stride2=2,
                 patch_norm=True,
                 feature_dim=1280,
                 drop_path_rate=0.1,
                 layer_scale_init_value=0,
                 norm_layer='BN',
                 act_layer='RELU',
                 fork_feat=False,
                 init_cfg=None,
                 pretrained=None,
                 pconv_fw_type='split_cat',
                 **kwargs):
        super().__init__()

        if norm_layer == 'BN':
            norm_layer = nn.BatchNorm2d
        else:
            raise NotImplementedError

        if act_layer == 'GELU':
            act_layer = nn.GELU
        elif act_layer == 'RELU':
            act_layer = partial(nn.ReLU, inplace=True)
        else:
            raise NotImplementedError

        if not fork_feat:
            self.num_classes = num_classes
        self.num_stages = len(depths)
        self.embed_dim = embed_dim
        self.patch_norm = patch_norm
        self.num_features = int(embed_dim * 2 ** (self.num_stages - 1))
        self.mlp_ratio = mlp_ratio
        self.depths = depths

        # split image into non-overlapping patches
        self.patch_embed = PatchEmbed(
            patch_size=patch_size,
            patch_stride=patch_stride,
            in_chans=in_chans,
            embed_dim=embed_dim,
            norm_layer=norm_layer if self.patch_norm else None
        )

        # stochastic depth decay rule
        dpr = [x.item() for x in torch.linspace(0, drop_path_rate, sum(depths))]

        # build layers
        stages_list = []
        for i_stage in range(self.num_stages):
            stage = BasicStage(dim=int(embed_dim * 2 ** i_stage),
                               n_div=n_div,
                               depth=depths[i_stage],
                               mlp_ratio=self.mlp_ratio,
                               drop_path=dpr[sum(depths[:i_stage]):sum(depths[:i_stage + 1])],
                               layer_scale_init_value=layer_scale_init_value,
                               norm_layer=norm_layer,
                               act_layer=act_layer,
                               pconv_fw_type=pconv_fw_type)
            stages_list.append(stage)

            # patch merging layer
            if i_stage < self.num_stages - 1:
                stages_list.append(
                    PatchMerging(patch_size2=patch_size2,
                                 patch_stride2=patch_stride2,
                                 dim=int(embed_dim * 2 ** i_stage),
                                 norm_layer=norm_layer)
                )

        self.stages = nn.Sequential(*stages_list)

        self.fork_feat = fork_feat

        if self.fork_feat:
            self.forward = self.forward_det
            # add a norm layer for each output
            self.out_indices = [0, 2, 4, 6]
            for i_emb, i_layer in enumerate(self.out_indices):
                if i_emb == 0 and os.environ.get('FORK_LAST3', None):
                    raise NotImplementedError
                else:
                    layer = norm_layer(int(embed_dim * 2 ** i_emb))
                layer_name = f'norm{i_layer}'
                self.add_module(layer_name, layer)
        else:
            self.forward = self.forward_cls
            # Classifier head
            self.avgpool_pre_head = nn.Sequential(
                nn.AdaptiveAvgPool2d(1),
                nn.Conv2d(self.num_features, feature_dim, 1, bias=False),
                act_layer()
            )
            self.head = nn.Linear(feature_dim, num_classes) \
                if num_classes > 0 else nn.Identity()

        self.apply(self.cls_init_weights)
        self.init_cfg = copy.deepcopy(init_cfg)
        if self.fork_feat and (self.init_cfg is not None or pretrained is not None):
            self.init_weights(pretrained)

    def cls_init_weights(self, m):
        if isinstance(m, nn.Linear):
            trunc_normal_(m.weight, std=.02)
            if isinstance(m, nn.Linear) and m.bias is not None:
                nn.init.constant_(m.bias, 0)
        elif isinstance(m, (nn.Conv1d, nn.Conv2d)):
            trunc_normal_(m.weight, std=.02)
            if m.bias is not None:
                nn.init.constant_(m.bias, 0)
        elif isinstance(m, (nn.LayerNorm, nn.GroupNorm)):
            nn.init.constant_(m.bias, 0)
            nn.init.constant_(m.weight, 1.0)

    # init for mmdetection by loading imagenet pre-trained weights
    def init_weights(self, pretrained=None):
        logger = get_root_logger()
        if self.init_cfg is None and pretrained is None:
            logger.warning(f'No pre-trained weights for '
                           f'{self.__class__.__name__}, '
                           f'training start from scratch')
        else:
            if self.init_cfg is not None:
                assert 'checkpoint' in self.init_cfg, f'Only support ' \
                                                      f'specify `Pretrained` in ' \
                                                      f'`init_cfg` in ' \
                                                      f'{self.__class__.__name__} '
                ckpt_path = self.init_cfg['checkpoint']
            else:
                ckpt_path = pretrained

            ckpt = _load_checkpoint(ckpt_path, logger=logger, map_location='cpu')
            if 'state_dict' in ckpt:
                _state_dict = ckpt['state_dict']
            elif 'model' in ckpt:
                _state_dict = ckpt['model']
            else:
                _state_dict = ckpt

            state_dict = _state_dict
            missing_keys, unexpected_keys = self.load_state_dict(state_dict, False)

            # show for debug
            print('missing_keys: ', missing_keys)
            print('unexpected_keys: ', unexpected_keys)

    def forward_cls(self, x):
        # output only the features of last layer for image classification
        x = self.patch_embed(x)
        x = self.stages(x)
        x = self.avgpool_pre_head(x)  # B C 1 1
        x = torch.flatten(x, 1)
        x = self.head(x)
        return x

    def forward_det(self, x: Tensor) -> Tensor:
        # output the features of four stages for dense prediction
        x = self.patch_embed(x)
        outs = []
        for idx, stage in enumerate(self.stages):
            x = stage(x)
            if self.fork_feat and idx in self.out_indices:
                norm_layer = getattr(self, f'norm{idx}')
                x_out = norm_layer(x)
                outs.append(x_out)
        return outs
```

2.5 Full Model Code

The full model code is used only for the FLOPs analysis in Section 3.2.

```python
# Copyright (c) Microsoft Corporation.
# Licensed under the MIT License.
import copy
import os
from functools import partial
from typing import List

import torch
import torch.nn as nn
from torch import Tensor
# newer timm versions also expose these under timm.layers
from timm.models.layers import DropPath, to_2tuple, trunc_normal_

try:
    from mmdet.models.builder import BACKBONES as det_BACKBONES
    from mmdet.utils import get_root_logger
    from mmcv.runner import _load_checkpoint
    has_mmdet = True
except ImportError:
    print("If for detection, please install mmdetection first")
    has_mmdet = False


class Partial_conv3(nn.Module):

    def __init__(self, dim, n_div, forward):
        super().__init__()
        self.dim_conv3 = dim // n_div
        self.dim_untouched = dim - self.dim_conv3
        self.partial_conv3 = nn.Conv2d(self.dim_conv3, self.dim_conv3, 3, 1, 1, bias=False)

        if forward == 'slicing':
            self.forward = self.forward_slicing
        elif forward == 'split_cat':
            self.forward = self.forward_split_cat
        else:
            raise NotImplementedError

    def forward_slicing(self, x: Tensor) -> Tensor:
        # only for inference
        x = x.clone()   # !!! Keep the original input intact for the residual connection later
        x[:, :self.dim_conv3, :, :] = self.partial_conv3(x[:, :self.dim_conv3, :, :])
        return x

    def forward_split_cat(self, x: Tensor) -> Tensor:
        # for training/inference
        x1, x2 = torch.split(x, [self.dim_conv3, self.dim_untouched], dim=1)
        x1 = self.partial_conv3(x1)
        x = torch.cat((x1, x2), 1)
        return x


class MLPBlock(nn.Module):

    def __init__(self, dim, n_div, mlp_ratio, drop_path, layer_scale_init_value,
                 act_layer, norm_layer, pconv_fw_type):
        super().__init__()
        self.dim = dim
        self.mlp_ratio = mlp_ratio
        self.drop_path = DropPath(drop_path) if drop_path > 0. else nn.Identity()
        self.n_div = n_div

        mlp_hidden_dim = int(dim * mlp_ratio)
        mlp_layer: List[nn.Module] = [
            nn.Conv2d(dim, mlp_hidden_dim, 1, bias=False),
            norm_layer(mlp_hidden_dim),
            act_layer(),
            nn.Conv2d(mlp_hidden_dim, dim, 1, bias=False)
        ]
        self.mlp = nn.Sequential(*mlp_layer)

        self.spatial_mixing = Partial_conv3(dim, n_div, pconv_fw_type)

        if layer_scale_init_value > 0:
            self.layer_scale = nn.Parameter(layer_scale_init_value * torch.ones((dim)), requires_grad=True)
            self.forward = self.forward_layer_scale
        else:
            self.forward = self.forward

    def forward(self, x: Tensor) -> Tensor:
        shortcut = x
        x = self.spatial_mixing(x)
        x = shortcut + self.drop_path(self.mlp(x))
        return x

    def forward_layer_scale(self, x: Tensor) -> Tensor:
        shortcut = x
        x = self.spatial_mixing(x)
        x = shortcut + self.drop_path(
            self.layer_scale.unsqueeze(-1).unsqueeze(-1) * self.mlp(x))
        return x


class BasicStage(nn.Module):

    def __init__(self, dim, depth, n_div, mlp_ratio, drop_path, layer_scale_init_value,
                 norm_layer, act_layer, pconv_fw_type):
        super().__init__()
        blocks_list = [
            MLPBlock(dim=dim,
                     n_div=n_div,
                     mlp_ratio=mlp_ratio,
                     drop_path=drop_path[i],
                     layer_scale_init_value=layer_scale_init_value,
                     norm_layer=norm_layer,
                     act_layer=act_layer,
                     pconv_fw_type=pconv_fw_type)
            for i in range(depth)
        ]
        self.blocks = nn.Sequential(*blocks_list)

    def forward(self, x: Tensor) -> Tensor:
        x = self.blocks(x)
        return x


class PatchEmbed(nn.Module):

    def __init__(self, patch_size, patch_stride, in_chans, embed_dim, norm_layer):
        super().__init__()
        self.proj = nn.Conv2d(in_chans, embed_dim, kernel_size=patch_size, stride=patch_stride, bias=False)
        if norm_layer is not None:
            self.norm = norm_layer(embed_dim)
        else:
            self.norm = nn.Identity()

    def forward(self, x: Tensor) -> Tensor:
        x = self.norm(self.proj(x))
        return x


class PatchMerging(nn.Module):

    def __init__(self, patch_size2, patch_stride2, dim, norm_layer):
        super().__init__()
        self.reduction = nn.Conv2d(dim, 2 * dim, kernel_size=patch_size2, stride=patch_stride2, bias=False)
        if norm_layer is not None:
            self.norm = norm_layer(2 * dim)
        else:
            self.norm = nn.Identity()

    def forward(self, x: Tensor) -> Tensor:
        x = self.norm(self.reduction(x))
        return x


class FasterNet(nn.Module):

    def __init__(self,
                 in_chans=3,
                 num_classes=1000,
                 embed_dim=96,
                 depths=(1, 2, 8, 2),
                 mlp_ratio=2.,
                 n_div=4,
                 patch_size=4,
                 patch_stride=4,
                 patch_size2=2,  # for subsequent layers
                 patch_stride2=2,
                 patch_norm=True,
                 feature_dim=1280,
                 drop_path_rate=0.1,
                 layer_scale_init_value=0,
                 norm_layer='BN',
                 act_layer='RELU',
                 fork_feat=False,
                 init_cfg=None,
                 pretrained=None,
                 pconv_fw_type='split_cat',
                 **kwargs):
        super().__init__()

        if norm_layer == 'BN':
            norm_layer = nn.BatchNorm2d
        else:
            raise NotImplementedError

        if act_layer == 'GELU':
            act_layer = nn.GELU
        elif act_layer == 'RELU':
            act_layer = partial(nn.ReLU, inplace=True)
        else:
            raise NotImplementedError

        if not fork_feat:
            self.num_classes = num_classes
        self.num_stages = len(depths)
        self.embed_dim = embed_dim
        self.patch_norm = patch_norm
        self.num_features = int(embed_dim * 2 ** (self.num_stages - 1))
        self.mlp_ratio = mlp_ratio
        self.depths = depths

        # split image into non-overlapping patches
        self.patch_embed = PatchEmbed(
            patch_size=patch_size,
            patch_stride=patch_stride,
            in_chans=in_chans,
            embed_dim=embed_dim,
            norm_layer=norm_layer if self.patch_norm else None
        )

        # stochastic depth decay rule
        dpr = [x.item() for x in torch.linspace(0, drop_path_rate, sum(depths))]

        # build layers
        stages_list = []
        for i_stage in range(self.num_stages):
            stage = BasicStage(dim=int(embed_dim * 2 ** i_stage),
                               n_div=n_div,
                               depth=depths[i_stage],
                               mlp_ratio=self.mlp_ratio,
                               drop_path=dpr[sum(depths[:i_stage]):sum(depths[:i_stage + 1])],
                               layer_scale_init_value=layer_scale_init_value,
                               norm_layer=norm_layer,
                               act_layer=act_layer,
                               pconv_fw_type=pconv_fw_type)
            stages_list.append(stage)

            # patch merging layer
            if i_stage < self.num_stages - 1:
                stages_list.append(
                    PatchMerging(patch_size2=patch_size2,
                                 patch_stride2=patch_stride2,
                                 dim=int(embed_dim * 2 ** i_stage),
                                 norm_layer=norm_layer)
                )

        self.stages = nn.Sequential(*stages_list)

        self.fork_feat = fork_feat

        if self.fork_feat:
            self.forward = self.forward_det
            # add a norm layer for each output
            self.out_indices = [0, 2, 4, 6]
            for i_emb, i_layer in enumerate(self.out_indices):
                if i_emb == 0 and os.environ.get('FORK_LAST3', None):
                    raise NotImplementedError
                else:
                    layer = norm_layer(int(embed_dim * 2 ** i_emb))
                layer_name = f'norm{i_layer}'
                self.add_module(layer_name, layer)
        else:
            self.forward = self.forward_cls
            # Classifier head
            self.avgpool_pre_head = nn.Sequential(
                nn.AdaptiveAvgPool2d(1),
                nn.Conv2d(self.num_features, feature_dim, 1, bias=False),
                act_layer()
            )
            self.head = nn.Linear(feature_dim, num_classes) \
                if num_classes > 0 else nn.Identity()

        self.apply(self.cls_init_weights)
        self.init_cfg = copy.deepcopy(init_cfg)
        if self.fork_feat and (self.init_cfg is not None or pretrained is not None):
            self.init_weights(pretrained)

    def cls_init_weights(self, m):
        if isinstance(m, nn.Linear):
            trunc_normal_(m.weight, std=.02)
            if isinstance(m, nn.Linear) and m.bias is not None:
                nn.init.constant_(m.bias, 0)
        elif isinstance(m, (nn.Conv1d, nn.Conv2d)):
            trunc_normal_(m.weight, std=.02)
            if m.bias is not None:
                nn.init.constant_(m.bias, 0)
        elif isinstance(m, (nn.LayerNorm, nn.GroupNorm)):
            nn.init.constant_(m.bias, 0)
            nn.init.constant_(m.weight, 1.0)

    # init for mmdetection by loading imagenet pre-trained weights
    def init_weights(self, pretrained=None):
        logger = get_root_logger()
        if self.init_cfg is None and pretrained is None:
            logger.warning(f'No pre-trained weights for '
                           f'{self.__class__.__name__}, '
                           f'training start from scratch')
        else:
            if self.init_cfg is not None:
                assert 'checkpoint' in self.init_cfg, f'Only support ' \
                                                      f'specify `Pretrained` in ' \
                                                      f'`init_cfg` in ' \
                                                      f'{self.__class__.__name__} '
                ckpt_path = self.init_cfg['checkpoint']
            else:
                ckpt_path = pretrained

            ckpt = _load_checkpoint(ckpt_path, logger=logger, map_location='cpu')
            if 'state_dict' in ckpt:
                _state_dict = ckpt['state_dict']
            elif 'model' in ckpt:
                _state_dict = ckpt['model']
            else:
                _state_dict = ckpt

            state_dict = _state_dict
            missing_keys, unexpected_keys = self.load_state_dict(state_dict, False)

            # show for debug
            print('missing_keys: ', missing_keys)
            print('unexpected_keys: ', unexpected_keys)

    def forward_cls(self, x):
        # output only the features of last layer for image classification
        x = self.patch_embed(x)
        x = self.stages(x)
        x = self.avgpool_pre_head(x)  # B C 1 1
        x = torch.flatten(x, 1)
        x = self.head(x)
        return x

    def forward_det(self, x: Tensor) -> Tensor:
        # output the features of four stages for dense prediction
        x = self.patch_embed(x)
        outs = []
        for idx, stage in enumerate(self.stages):
            x = stage(x)
            if self.fork_feat and idx in self.out_indices:
                norm_layer = getattr(self, f'norm{idx}')
                x_out = norm_layer(x)
                outs.append(x_out)
        return outs
```

3. Analysis

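3.1 Can PConv replace Conv?

No. PConv is only a drop-in substitute for conv when C_in equals C_out. Moreover, it only mixes local spatial information within a subset of channels, while most channels are passed straight to the output, so the input data propagates directly into deeper layers. A dense convolution that connects all channels is therefore still needed for cross-channel information exchange.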
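To make "most channels pass straight through" concrete, here is a small probe: perturb one input channel at a time and record which output channels change. This is my own sketch; `PConv` and `channel_dependency` are hypothetical names (the `PConv` class mirrors `forward_split_cat` above), and the sizes are arbitrary.

```python
import torch
import torch.nn as nn


class PConv(nn.Module):
    """Minimal PConv: split -> 3x3 conv on dim // n_div channels -> cat."""

    def __init__(self, dim: int, n_div: int = 4):
        super().__init__()
        self.dim_conv = dim // n_div
        self.conv = nn.Conv2d(self.dim_conv, self.dim_conv, 3, 1, 1, bias=False)

    def forward(self, x):
        x1, x2 = torch.split(x, [self.dim_conv, x.shape[1] - self.dim_conv], dim=1)
        return torch.cat((self.conv(x1), x2), dim=1)


def channel_dependency(module: nn.Module, dim: int) -> torch.Tensor:
    """dep[o, i] is True if output channel o changes when input channel i is perturbed."""
    x = torch.randn(1, dim, 8, 8)
    base = module(x)
    dep = torch.zeros(dim, dim, dtype=torch.bool)
    for i in range(dim):
        x2 = x.clone()
        x2[:, i] += 1.0
        diff = (module(x2) - base).abs().amax(dim=(0, 2, 3))   # max change per output channel
        dep[:, i] = diff > 1e-6
    return dep


torch.manual_seed(0)
dim = 8
with torch.no_grad():
    pconv = PConv(dim)
    dep_pconv = channel_dependency(pconv, dim)
    block = nn.Sequential(pconv, nn.Conv2d(dim, dim, 1, bias=False))   # PConv + PWConv
    dep_block = channel_dependency(block, dim)

print(dep_pconv.int())
print(dep_block.all().item())
```

For PConv alone the dependency matrix is a dense 2×2 block plus an identity on the 6 untouched channels; only after adding the 1×1 conv does every output channel depend on every input channel.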

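The paper's experiments also lack a comparison in which PConv inside FasterNet is replaced by a regular Conv. FasterNet's advantage may come mainly from its macro design (especially the PatchEmbed input layer, which reduces the spatial size to 1/16 of the original); that is, even with Conv instead of PConv it might still hold an edge in accuracy and latency.

Likewise, there is no equivalent comparison for DWConv (depthwise + pointwise, as in Note 2 above): replacing PConv in FasterNet with it might bring a further performance gain. After all, in the authors' own experiments DWConv is faster than PConv on GPU, and its fitting ability is on par with PConv's.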

3.2 FLOPs Distribution in FasterNet

Using the code below (appended to the full model code in Section 2.5), I built a small FasterNet model and printed the FLOPs of each layer:

```python
if __name__ == "__main__":
    from fvcore.nn import flop_count_table, FlopCountAnalysis, ActivationCountAnalysis

    model = FasterNet(depths=(1, 1, 1, 1))
    x = torch.randn(1, 3, 256, 256)
    print(f'params: {sum(p.numel() for p in model.parameters())}')
    print(flop_count_table(FlopCountAnalysis(model, x), activations=ActivationCountAnalysis(model, x)))
    output = model(x)
    print(output.shape)
```

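The output is shown below. Within the key FasterBlock module, most FLOPs come from blocks.0.mlp, while spatial_mixing.partial_conv3 (i.e., PConv) accounts for only about 12% of the block's computation: 21.2M FLOPs (e.g., 21.234M out of 0.176G in stage 0).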

| module | #parameters or shape | #flops | #activations |
|:--------------------------------------------------|:-----------------------|:-----------|:---------------|
| model | 7.4M | 0.948G | 3.136M |
| patch_embed | 4.8K | 20.84M | 0.393M |
| patch_embed.proj | 4.608K | 18.874M | 0.393M |
| patch_embed.proj.weight | (96, 3, 4, 4) | | |
| patch_embed.norm | 0.192K | 1.966M | 0 |
| patch_embed.norm.weight | (96,) | | |
| patch_embed.norm.bias | (96,) | | |
| stages | 5.131M | 0.924G | 2.74M |
| stages.0.blocks.0 | 42.432K | 0.176G | 1.278M |
| stages.0.blocks.0.mlp | 37.248K | 0.155G | 1.18M |
| stages.0.blocks.0.spatial_mixing.partial_conv3 | 5.184K | 21.234M | 98.304K |
| stages.1 | 74.112K | 76.481M | 0.197M |
| stages.1.reduction | 73.728K | 75.497M | 0.197M |
| stages.1.norm | 0.384K | 0.983M | 0 |
| stages.2.blocks.0 | 0.169M | 0.174G | 0.639M |
| stages.2.blocks.0.mlp | 0.148M | 0.153G | 0.59M |
| stages.2.blocks.0.spatial_mixing.partial_conv3 | 20.736K | 21.234M | 49.152K |
| stages.3 | 0.296M | 75.989M | 98.304K |
| stages.3.reduction | 0.295M | 75.497M | 98.304K |
| stages.3.norm | 0.768K | 0.492M | 0 |
| stages.4.blocks.0 | 0.674M | 0.173G | 0.319M |
| stages.4.blocks.0.mlp | 0.591M | 0.152G | 0.295M |
| stages.4.blocks.0.spatial_mixing.partial_conv3 | 82.944K | 21.234M | 24.576K |
| stages.5 | 1.181M | 75.743M | 49.152K |
| stages.5.reduction | 1.18M | 75.497M | 49.152K |
| stages.5.norm | 1.536K | 0.246M | 0 |
| stages.6.blocks.0 | 2.694M | 0.173G | 0.16M |
| stages.6.blocks.0.mlp | 2.362M | 0.151G | 0.147M |
| stages.6.blocks.0.spatial_mixing.partial_conv3 | 0.332M | 21.234M | 12.288K |
| avgpool_pre_head | 0.983M | 1.032M | 1.28K |
| avgpool_pre_head.1 | 0.983M | 0.983M | 1.28K |
| avgpool_pre_head.1.weight | (1280, 768, 1, 1) | | |
| avgpool_pre_head.0 | | 49.152K | 0 |
| head | 1.281M | 1.28M | 1K |
| head.weight | (1000, 1280) | | |
| head.bias | (1000,) | | |
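The stage-0 numbers in this table can be reproduced by hand, which also shows why PConv's FLOPs stay at 21.234M in every stage (c_p doubles while h×w shrinks by 4×). A sketch assuming the settings used above (embed_dim=96, mlp_ratio=2, n_div=4, a 256×256 input, so stage 0 runs at 64×64) and fvcore's convention of counting one multiply-add as one flop; the 5-flops-per-element BN cost is inferred from the table itself (patch_embed.norm: 1.966M = 5 × 96 × 64 × 64), not from fvcore's documentation.

```python
def conv_params(c_in, c_out, k):
    """Weights of a bias-free conv."""
    return c_in * c_out * k * k


def conv_flops(h, w, c_in, c_out, k):
    """Multiply-adds of a stride-1 'same' conv (one multiply-add counted as one flop)."""
    return h * w * c_in * c_out * k * k


dim, mlp_ratio, n_div = 96, 2, 4
h = w = 256 // 4               # stage-0 resolution after the stride-4 PatchEmbed
hidden = dim * mlp_ratio       # 192
c_p = dim // n_div             # 24 channels go through the 3x3 conv

# Parameters
pconv_params = conv_params(c_p, c_p, 3)
mlp_params = conv_params(dim, hidden, 1) + 2 * hidden + conv_params(hidden, dim, 1)  # BN: weight + bias

# FLOPs
pconv_flops = conv_flops(h, w, c_p, c_p, 3)
bn_flops = 5 * hidden * h * w  # inferred from the BN rows of the table
mlp_flops = conv_flops(h, w, dim, hidden, 1) + bn_flops + conv_flops(h, w, hidden, dim, 1)
full_conv_flops = conv_flops(h, w, dim, dim, 3)   # the Section 3.3 replacement

print(pconv_params, mlp_params)   # 5184 37248
print(pconv_flops, mlp_flops)     # 21233664 154927104
print(f"PConv share of the block: {pconv_flops / (pconv_flops + mlp_flops):.1%}")
print(f"Conv / PConv FLOPs: {full_conv_flops / pconv_flops:.0f}x")   # 16x
```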

3.3 FLOPs Change When Replacing PConv with Conv

Replace the original Partial_conv3 class with the following code:

```python
class Partial_conv3(nn.Module):
    def __init__(self, dim, n_div, forward):
        super().__init__()
        # a full 3x3 conv over all `dim` channels; n_div and forward are ignored
        self.conv = nn.Conv2d(dim, dim, 3, 1, 1, bias=False)

    def forward(self, x: Tensor) -> Tensor:
        # x is not modified in place, so no clone is needed for the residual branch
        x = self.conv(x)
        return x
```

Then run the same code again:

```python
if __name__ == "__main__":
    from fvcore.nn import flop_count_table, FlopCountAnalysis, ActivationCountAnalysis

    model = FasterNet(depths=(1, 1, 1, 1))
    x = torch.randn(1, 3, 256, 256)
    print(f'params: {sum(p.numel() for p in model.parameters())}')
    print(flop_count_table(FlopCountAnalysis(model, x), activations=ActivationCountAnalysis(model, x)))
    output = model(x)
    print(output.shape)
```

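The total FLOPs are now 2.22G, about 2.3× the original 0.948G. In the new FasterBlock, spatial_mixing.conv accounts for about 70% of the block's FLOPs (0.34G out of 0.495G in stage 0), 16× the original 21.2M.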

| module | #parameters or shape | #flops | #activations |
|:-----------------------------------------|:-----------------------|:-----------|:---------------|
| model | 14.009M | 2.222G | 3.689M |
| patch_embed | 4.8K | 20.84M | 0.393M |
| patch_embed.proj | 4.608K | 18.874M | 0.393M |
| patch_embed.proj.weight | (96, 3, 4, 4) | | |
| patch_embed.norm | 0.192K | 1.966M | 0 |
| patch_embed.norm.weight | (96,) | | |
| patch_embed.norm.bias | (96,) | | |
| stages | 11.74M | 2.199G | 3.293M |
| stages.0.blocks.0 | 0.12M | 0.495G | 1.573M |
| stages.0.blocks.0.mlp | 37.248K | 0.155G | 1.18M |
| stages.0.blocks.0.spatial_mixing.conv | 82.944K | 0.34G | 0.393M |
| stages.1 | 74.112K | 76.481M | 0.197M |
| stages.1.reduction | 73.728K | 75.497M | 0.197M |
| stages.1.norm | 0.384K | 0.983M | 0 |
| stages.2.blocks.0 | 0.48M | 0.493G | 0.786M |
| stages.2.blocks.0.mlp | 0.148M | 0.153G | 0.59M |
| stages.2.blocks.0.spatial_mixing.conv | 0.332M | 0.34G | 0.197M |
| stages.3 | 0.296M | 75.989M | 98.304K |
| stages.3.reduction | 0.295M | 75.497M | 98.304K |
| stages.3.norm | 0.768K | 0.492M | 0 |
| stages.4.blocks.0 | 1.918M | 0.492G | 0.393M |
| stages.4.blocks.0.mlp | 0.591M | 0.152G | 0.295M |
| stages.4.blocks.0.spatial_mixing.conv | 1.327M | 0.34G | 98.304K |
| stages.5 | 1.181M | 75.743M | 49.152K |
| stages.5.reduction | 1.18M | 75.497M | 49.152K |
| stages.5.norm | 1.536K | 0.246M | 0 |
| stages.6.blocks.0 | 7.671M | 0.491G | 0.197M |
| stages.6.blocks.0.mlp | 2.362M | 0.151G | 0.147M |
| stages.6.blocks.0.spatial_mixing.conv | 5.308M | 0.34G | 49.152K |
| avgpool_pre_head | 0.983M | 1.032M | 1.28K |
| avgpool_pre_head.1 | 0.983M | 0.983M | 1.28K |
| avgpool_pre_head.1.weight | (1280, 768, 1, 1) | | |
| avgpool_pre_head.0 | | 49.152K | 0 |
| head | 1.281M | 1.28M | 1K |
| head.weight | (1000, 1280) | | |
| head.bias | (1000,) | | |

torch.Size([1, 1000])

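3.4 Overall Conclusions

Based on the analysis in 3.1-3.3, PConv cannot directly replace every conv layer in a model, but it can replace individual FLOPs-heavy convs in some layers. PConv is just one way to approximate a conv, and it works within FasterNet's architecture; swapping it directly into other models is very likely to be a poor fit (extra PWConv layers are needed for information exchange).

That said, FasterNet does give us a strong backbone: it reaches the fastest speed at the best accuracy among both lightweight and large models, and it can be used for image classification and object detection. In our own experiments, it may be worth replacing PConv in FasterNet with DWConv, which might further improve the backbone. After all, the authors did not report this comparison; it is even possible that they found PConv inferior to DWConv and left those results out.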
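If you want to try the DWConv swap, a minimal sketch is a module with the same constructor signature as Partial_conv3, so it can be plugged into MLPBlock in place of `Partial_conv3(dim, n_div, pconv_fw_type)`. `DWConvMixing` is a hypothetical name; it is just a 3×3 depthwise conv over all channels and ignores n_div and forward. The 96-channel, 64×64 setting is the stage-0 configuration from Section 3.2.

```python
import torch
import torch.nn as nn
from torch import Tensor


class DWConvMixing(nn.Module):
    """Hypothetical spatial-mixing module: a 3x3 depthwise conv over all channels.
    Same (dim, n_div, forward) signature as Partial_conv3; n_div and forward are ignored."""

    def __init__(self, dim: int, n_div: int = 4, forward: str = "split_cat"):
        super().__init__()
        self.dwconv = nn.Conv2d(dim, dim, 3, 1, 1, groups=dim, bias=False)

    def forward(self, x: Tensor) -> Tensor:
        return self.dwconv(x)


dim, h, w = 96, 64, 64
mixer = DWConvMixing(dim)
x = torch.randn(1, dim, h, w)
with torch.no_grad():
    y = mixer(x)

dw_params = sum(p.numel() for p in mixer.parameters())   # 96 * 3 * 3
dw_flops = h * w * 9 * dim                  # depthwise: 9 multiply-adds per output element
pconv_flops = h * w * 9 * (dim // 4) ** 2   # PConv with n_div=4, for comparison
print(y.shape, dw_params, dw_flops, pconv_flops)
```

On paper DWConv needs only 1/6 of PConv's FLOPs in this setting, but the FasterNet paper's central argument is that DWConv runs at low FLOPS on real hardware because of its memory access pattern, so only a latency benchmark can tell whether the swap actually pays off.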
