Improving Instance Segmentation by Learning Wider Data Distribution with More Diverse Generative Data

These notes are compiled in English, as practice for writing future SCI journal papers.

Abstract

Instance segmentation is data-hungry, and as model capacity increases, data scale becomes crucial for improving the accuracy. Most instance segmentation datasets today require costly manual annotation, limiting their data scale. Models trained on such data are prone to overfitting on the training set, especially for those rare categories. While recent works have delved into exploiting generative models to create synthetic datasets for data augmentation, these approaches do not efficiently harness the full potential of generative models.

To address these issues, we introduce a more efficient strategy to construct generative datasets for data augmentation, termed DiverGen. First, we explain the role of generative data from the perspective of distribution discrepancy by investigating the impact of different data on the distribution learned by the model. We argue that generative data can expand the data distribution that the model can learn, thus mitigating overfitting. Additionally, we find that the diversity of generative data is crucial for improving model performance, and we enhance it through various strategies, including category diversity, prompt diversity, and generative model diversity. With these strategies, we can scale the data to millions while maintaining the trend of model performance improvement. On the LVIS dataset, DiverGen significantly outperforms the strong model X-Paste, achieving +1.1 box AP and +1.1 mask AP across all categories, and +1.9 box AP and +2.5 mask AP for rare categories. Our code is available at https://github.com/aim-uofa/DiverGen.

1 Introduction

Instance segmentation [9, 4, 2] is a challenging computer vision task that requires predicting masks and categories for the instances in an image, and it serves as the foundation for numerous visual applications. As models’ learning capabilities improve, the demand for training data increases. However, current instance segmentation datasets rely heavily on manual annotation, which is time-consuming and costly, so their scale cannot meet the training needs of models. Although the automatically annotated dataset SA-1B [12] has recently emerged, it lacks category annotations and therefore does not meet the requirements of instance segmentation. Meanwhile, the ongoing development of generative models has greatly improved the controllability and realism of generated samples. For example, recent text2image diffusion models [22, 24] can generate high-quality images corresponding to input prompts. Current methods [34, 28, 27] therefore use generative models for data augmentation, generating datasets that supplement training on real datasets and improve model performance. Although these methods propose various strategies for making generative data boost model performance, some limitations remain:

1) Existing methods have not fully exploited the potential of generative models. First, some methods [34] use not only generative data but also images crawled from the internet, which makes it significantly challenging to obtain large-scale data. The content of crawled data is also uncontrollable and needs extra checking. Second, existing methods do not fully use the controllability of generative models: they often adopt manually designed templates to construct prompts, limiting the potential output of generative models.

2) Existing methods [28, 27] often explain the role of generative data from the perspective of class imbalance or data scarcity, without considering the discrepancy between real-world data and generative data. Moreover, these methods typically show improved model performance only in scenarios with a limited number of real samples, and the effectiveness of generative data on existing large-scale real datasets, such as LVIS [8], has not been thoroughly investigated.

In this paper, we first explore the role of generative data from the perspective of distribution discrepancy, addressing two main questions: 1) Why does generative data augmentation enhance model performance? 2) What types of generative data are beneficial for improving model performance? First, we find that there are discrepancies between the distribution the model learns from the limited real training data and the distribution of real-world data. We visualize the data and find that, compared to the real-world data, generative data can expand the data distribution that the model can learn. Furthermore, we find that adding generative data alleviates the bias of the real training data, effectively mitigating overfitting to the training data. Second, we find that there are also discrepancies between the distribution of the generative data and the real-world data distribution. If these discrepancies are not handled properly, the full potential of the generative model cannot be utilized. Through several experiments, we find that using diverse generative data enables models to better adapt to these discrepancies, improving model performance.

Based on the above analysis, we propose an efficient strategy for enhancing data diversity, namely, Generative Data Diversity Enhancement. We design various diversity enhancement strategies to increase data diversity from the perspectives of category diversity, prompt diversity, and generative model diversity. For category diversity, we observe that models trained with generative data covering all categories adapt better to distribution discrepancy than models trained with partial categories. Therefore, we introduce not only categories from LVIS [8] but also extra categories from ImageNet-1K [23] to enhance category diversity in data generation, thereby reinforcing the model’s adaptability to distribution discrepancy. For prompt diversity, we find that as the scale of the generative dataset increases, manually designed prompts cannot scale up to the corresponding level, limiting the diversity of output images from the generative model. Thus, we design a set of diverse prompt generation strategies to use large language models, like ChatGPT, for prompt generation, requiring the large language models to output maximally diverse prompts under constraints. By combining manually designed prompts and ChatGPT designed prompts, we effectively enrich prompt diversity and further improve generative data diversity. For generative model diversity, we find that data from different generative models also exhibit distribution discrepancies. Exposing models to data from different generative models during training can enhance adaptability to different distributions. Therefore, we employ Stable Diffusion [22] and DeepFloyd-IF [24] to generate images for all categories separately and mix the two types of data during training to increase data diversity.

At the same time, we optimize the data generation workflow and propose a four-stage generative pipeline consisting of instance generation, instance annotation, instance filtration, and instance augmentation. In the instance generation stage, we employ our proposed Generative Data Diversity Enhancement to enhance data diversity, producing diverse raw data. In the instance annotation stage, we introduce an annotation strategy called SAM-background. This strategy obtains high-quality annotations by using background points as input prompts for SAM [12], obtaining the annotations of raw data. In the instance filtration stage, we introduce a metric called CLIP inter-similarity. Utilizing the CLIP [21] image encoder, we extract embeddings from generative and real data, and then compute their similarity. A lower similarity indicates lower data quality. After filtration, we obtain the final generative dataset. In the instance augmentation stage, we use the instance paste strategy [34] to increase model learning efficiency on generative data.

Experiments demonstrate that our designed data diversity strategies can effectively improve model performance and maintain the trend of performance gains as the data scale increases to the million level, which enables large-scale generative data for data augmentation. On the LVIS dataset, DiverGen significantly outperforms the strong model X-Paste [34], achieving +1.1 box AP [8] and +1.1 mask AP across all categories, and +1.9 box AP and +2.5 mask AP for rare categories.

In summary, our main contributions are as follows:

- We explain the role of generative data from the perspective of distribution discrepancy. We find that generative data can expand the data distribution that the model can learn, mitigating overfitting to the training set, and that the diversity of generative data is crucial for improving model performance.

- We propose the Generative Data Diversity Enhancement strategy to increase data diversity from the aspects of category diversity, prompt diversity, and generative model diversity. By enhancing data diversity, we can scale the data to millions while maintaining the trend of model performance improvement.

- We optimize the data generation pipeline. We propose an annotation strategy, SAM-background, to obtain higher-quality annotations. We also introduce a filtration metric called CLIP inter-similarity to filter data and further improve the quality of the generative dataset.

2 Related Work

Instance segmentation. Instance segmentation is an important task in the field of computer vision and has been extensively studied. Unlike semantic segmentation, instance segmentation not only classifies each pixel but also distinguishes different instances of the same category. Previously, instance segmentation research has focused primarily on the design of model structures. Mask R-CNN [9] unifies the tasks of object detection and instance segmentation. Subsequently, Mask2Former [4] further unified the tasks of semantic segmentation and instance segmentation by leveraging the structure of DETR [2].

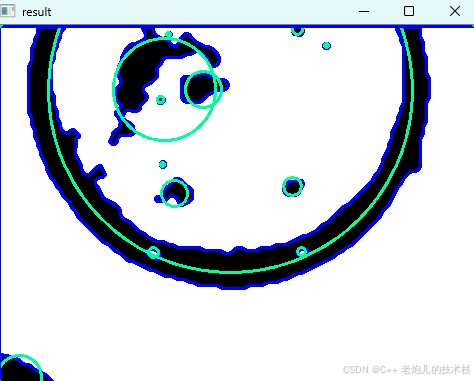

Figure 1: Visualization of data distributions from different sources. Compared to real-world data (LVIS train and LVIS val), generative data (Stable Diffusion and IF) can expand the data distribution that the model can learn.

Orthogonal to these studies focusing on model architecture, our work primarily investigates how to better utilize generated data for this task. We focus on the challenging long-tail dataset LVIS [8] because it is only the long-tailed categories that face the issue of limited real data and require generative images for augmentation, making it more practically meaningful.

Generative data augmentation. The use of generative models to synthesize training data for assisting perception tasks such as classification [6, 32], detection [34, 3], segmentation [14, 28, 27], etc. has received widespread attention from researchers. In the field of segmentation, early works [33, 13] utilize generative adversarial networks (GANs) to synthesize additional training samples. With the rise of diffusion models, there have been numerous efforts [34, 14, 28, 27, 30] to utilize text2image diffusion models, such as Stable Diffusion [22], to boost the segmentation performance. Li et al. [14] combine the Stable Diffusion model with a novel grounding module and establish an automatic pipeline for constructing a segmentation dataset. DiffuMask [28] exploits the potential of cross-attention maps between text and images to synthesize accurate semantic labels. More recently, FreeMask [30] uses a mask-to-image generation model to generate images conditioned on the provided semantic masks. However, the aforementioned work is only applicable to semantic segmentation. The most relevant work to ours is X-Paste [34], which promotes instance segmentation through copy-pasting the generative images and a filter strategy based on CLIP [21].

In summary, most methods only demonstrate significant advantages when training data is extremely limited. They consider generating data as a means to compensate for data scarcity or class imbalance. However, in this work, we take a further step to examine and analyze this problem from the perspective of data distribution. We propose a pipeline that enhances diversity from multiple levels to alleviate the impact of data distribution discrepancies. This provides new insights and inspirations for further advancements in this field.

3 Our Proposed DiverGen

3.1 Analysis of Data Distribution

Existing methods [34, 28, 29] often attribute the role of generative data to addressing class imbalance or data scarcity. In this paper, we provide an explanation for two main questions from the perspective of distribution discrepancy.

Why does generative data augmentation enhance model performance? We argue that there are discrepancies between the distribution the model learns from the limited real training data and the distribution of real-world data. Adding generative data alleviates the bias of the real training data, effectively mitigating overfitting to the training data.

First, to intuitively understand the discrepancies between different data sources, we use CLIP [21] image encoder to extract the embeddings of images from different data sources, and then use UMAP [18] to reduce dimensions for visualization. Visualization of data distributions on different sources is shown in Figure 1. Real-world data (LVIS [8] train and LVIS val) cluster near the center, while generative data (Stable Diffusion [22] and IF [24]) are more dispersed, indicating that generative data can expand the data distribution that the model can learn.

Then, to characterize the distribution learned by the model, we employ the free energy formulation used by Joseph et al. [10]. This formulation transforms the logits outputted by the classification head into an energy function. The formulation is shown below:

$$F(\boldsymbol{q}; h) = -\tau \log \sum_{c=1}^{n} \exp\left(\frac{h_c(\boldsymbol{q})}{\tau}\right). \tag{1}$$

Here, $\boldsymbol{q}$ is the feature of an instance, $h_c(\boldsymbol{q})$ is the $c$-th logit output by the classification head $h(\cdot)$, $n$ is the number of categories, and $\tau$ is the temperature parameter. We train one model using only the LVIS train set ($\theta_{\text{train}}$), and another model using LVIS train with generative data ($\theta_{\text{gen}}$). Both models are evaluated on the LVIS val set, and we use instances that are successfully matched by both models to obtain energy values. Additionally, we train another model using LVIS val ($\theta_{\text{val}}$), treating it as representative of the real-world data distribution. Then, we fit Gaussian distributions to the histograms of energy values to obtain the mean $\mu$ and standard deviation $\sigma$ for each model and compute the KL divergence [11] between them. $D_{\mathrm{KL}}(p_{\theta_{\text{train}}} \,\|\, p_{\theta_{\text{val}}})$ is 0.063, and $D_{\mathrm{KL}}(p_{\theta_{\text{gen}}} \,\|\, p_{\theta_{\text{val}}})$ is 0.019. The latter is lower, indicating that using generative data mitigates the bias of limited real training data.
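The energy-based comparison above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the Gaussian fit uses simple moment matching on the raw energy values (the text fits Gaussians to histograms, and the exact fitting procedure is not given), and the function names `free_energy`, `fit_gaussian`, and `kl_gaussian` as well as the toy logits are hypothetical.

```python
import math

def free_energy(logits, tau=1.0):
    """F(q; h) = -tau * log(sum_c exp(h_c(q) / tau)), computed stably."""
    scaled = [z / tau for z in logits]
    m = max(scaled)  # subtract the max before exponentiating to avoid overflow
    log_sum = m + math.log(sum(math.exp(s - m) for s in scaled))
    return -tau * log_sum

def fit_gaussian(values):
    """Moment-matching fit (an assumption): mean and population std."""
    mu = sum(values) / len(values)
    var = sum((v - mu) ** 2 for v in values) / len(values)
    return mu, math.sqrt(var)

def kl_gaussian(mu_p, sigma_p, mu_q, sigma_q):
    """Closed-form KL(p || q) between two univariate Gaussians."""
    return (math.log(sigma_q / sigma_p)
            + (sigma_p ** 2 + (mu_p - mu_q) ** 2) / (2 * sigma_q ** 2)
            - 0.5)

# Toy logits for three matched instances under two hypothetical models.
logits_a = [[2.0, 0.1, -1.0], [1.5, 0.3, 0.0], [3.0, -0.5, 0.2]]
logits_b = [[1.8, 0.2, -0.8], [1.4, 0.5, 0.1], [2.6, -0.2, 0.3]]

energies_a = [free_energy(l) for l in logits_a]
energies_b = [free_energy(l) for l in logits_b]
divergence = kl_gaussian(*fit_gaussian(energies_a), *fit_gaussian(energies_b))
print(f"KL(a || b) = {divergence:.4f}")
```

A smaller divergence to the reference model's energy distribution is read, as in the text, as a smaller bias relative to real-world data.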

Moreover, we also analyze the role of generative data from a metric perspective. We randomly select up to five images per category to form a minitrain set and then run inference using $\theta_{\text{train}}$ and $\theta_{\text{gen}}$. Then, we define a metric, termed train-val gap (TVG), which is formulated as follows:

$$\mathrm{TVG}_{w}^{k} = \mathrm{AP}_{w,\text{minitrain}}^{k} - \mathrm{AP}_{w,\text{val}}^{k}. \tag{2}$$

Here, $\mathrm{TVG}_{w}^{k}$ is the train-val gap of category group $w$ on task $k$, and $\mathrm{AP}_{w,d}^{k}$ is the AP [8] of category group $w$ on task $k$ obtained on dataset $d$, where $w \in \{f, c, r\}$, with $f$, $c$, $r$ standing for frequent, common, and rare [8] respectively, and $k \in \{\text{box}, \text{mask}\}$, with box and mask referring to object detection and instance segmentation. The train-val gap measures the disparity in the model’s performance between the training and validation sets. A larger gap indicates a higher degree of overfitting to the training set. The results in Table 1 show that the TVG values for rare categories are consistently larger than those for frequent and common categories. This observation suggests that the model tends to overfit more on the rare categories, which have fewer examples. With the augmentation of generative data, all TVG values of $\theta_{\text{gen}}$ are lower than those of $\theta_{\text{train}}$, showing that adding generative data can effectively alleviate overfitting to the training data.

| Data Source | $\mathrm{TVG}_f^{\text{box}}$ | $\mathrm{TVG}_f^{\text{mask}}$ | $\mathrm{TVG}_c^{\text{box}}$ | $\mathrm{TVG}_c^{\text{mask}}$ | $\mathrm{TVG}_r^{\text{box}}$ | $\mathrm{TVG}_r^{\text{mask}}$ |
| --- | --- | --- | --- | --- | --- | --- |
| LVIS | 13.16 | 10.71 | 21.80 | 16.80 | 39.59 | 31.68 |
| LVIS + Gen | 9.64 | 8.38 | 15.64 | 12.69 | 29.39 | 22.49 |

Table 1: Results of train-val gap on different data sources. With the augmentation of generative data, all TVG values of LVIS + Gen are lower than those of LVIS, showing that adding generative data can effectively alleviate overfitting to the training data.
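As a small worked example, the reduction in train-val gap can be recomputed directly from the Table 1 values. The helper `train_val_gap` simply restates Eq. (2); the dictionary layout and variable names are illustrative choices, not part of the original method.

```python
def train_val_gap(ap_minitrain, ap_val):
    """Eq. (2): AP on the minitrain set minus AP on the val set."""
    return ap_minitrain - ap_val

# TVG values copied from Table 1, keyed by (category group, task).
tvg_lvis = {
    ("f", "box"): 13.16, ("f", "mask"): 10.71,
    ("c", "box"): 21.80, ("c", "mask"): 16.80,
    ("r", "box"): 39.59, ("r", "mask"): 31.68,
}
tvg_lvis_gen = {
    ("f", "box"): 9.64, ("f", "mask"): 8.38,
    ("c", "box"): 15.64, ("c", "mask"): 12.69,
    ("r", "box"): 29.39, ("r", "mask"): 22.49,
}

# How much the gap shrinks when generative data is added.
reduction = {key: round(tvg_lvis[key] - tvg_lvis_gen[key], 2) for key in tvg_lvis}
for (group, task), value in reduction.items():
    print(f"{group}/{task}: gap reduced by {value:.2f}")
```

The gap shrinks in every column, and the largest reductions fall on the rare group, which is also where the gap was largest to begin with.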

What types of generative data are beneficial for improving model performance? We argue that there are also discrepancies between the distribution of the generative data and the real-world data distribution. If these discrepancies are not properly addressed, the full potential of the generative model cannot be attained.

We divide the generative data into ‘frequent’, ‘common’, and ‘rare’ [8] groups, and train three models using each group of data as instance paste source. The inference results are shown in Table 2. We find that the metrics on the corresponding category subset are lowest when training with only one group of data. We consider model performance to be primarily influenced by the quality and diversity of data. Given that the quality of generative data is relatively consistent, we contend insufficient diversity in the data can mislead the distribution that the model can learn and a more comprehensive understanding is obtained by the model from a diverse set of data. Therefore, we believe that using diverse generative data enables models to better adapt to these discrepancies, improving model performance.

| # Gen Category | $\mathrm{AP}_f^{\text{box}}$ | $\mathrm{AP}_f^{\text{mask}}$ | $\mathrm{AP}_c^{\text{box}}$ | $\mathrm{AP}_c^{\text{mask}}$ | $\mathrm{AP}_r^{\text{box}}$ | $\mathrm{AP}_r^{\text{mask}}$ |
| --- | --- | --- | --- | --- | --- | --- |
| none | 50.14 | 43.84 | 47.54 | 43.12 | 41.39 | 36.83 |
| f | **50.81** | **44.24** | 47.96 | 43.51 | 41.51 | 37.92 |
| c | 51.86 | 45.22 | **47.69** | **42.79** | 42.32 | 37.30 |
| r | 51.46 | 44.90 | 48.24 | 43.51 | **32.67** | **29.04** |
| all | 52.10 | 45.45 | 50.29 | 44.87 | 46.03 | 41.86 |

Table 2: Results of using different category subsets of generative data for training. The metrics on the corresponding category subset are lowest when training with only one group of data, showing that insufficient diversity in the data can mislead the distribution that the model can learn. Bold marks the lowest value among models using generative data.

3.2 Generative Data Diversity Enhancement

Through the analysis above, we find that the diversity of generative data is crucial for improving model performance. Therefore, we design a series of strategies to enhance data diversity at three levels: category diversity, prompt diversity, and generative model diversity, which help the model to better adapt to the distribution discrepancy between generative data and real data.

Category diversity. The above experiments show that including data from partial categories results in lower performance than incorporating data from all categories. We believe that, akin to human learning, the model can learn features beneficial to the current category from some other categories. Therefore, we consider increasing the diversity of data by adding extra categories. First, we select some extra categories besides LVIS from ImageNet-1K [23] categories based on WordNet [5] similarity. Then, the generative data from LVIS and extra categories are mixed for training, requiring the model to learn to distinguish all categories. Finally, we truncate the parameters in the classification head corresponding to the extra categories during inference, ensuring that the inferred category range remains within LVIS.
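The truncation step at inference can be illustrated with a toy linear classification head. This is a minimal sketch, assuming the LVIS categories occupy the first rows of the head and the extra ImageNet-1K categories are appended after them; the function names and the tiny weight matrix are hypothetical and do not reflect the detector's real head.

```python
def truncate_classifier(weights, biases, num_lvis):
    """Keep only the first num_lvis output rows of a linear head."""
    if not (len(weights) == len(biases) >= num_lvis):
        raise ValueError("head is smaller than the number of LVIS categories")
    return weights[:num_lvis], biases[:num_lvis]

def classify(feature, weights, biases):
    """Return the index of the highest-scoring category."""
    logits = [sum(w * x for w, x in zip(row, feature)) + b
              for row, b in zip(weights, biases)]
    return max(range(len(logits)), key=logits.__getitem__)

# Toy head: rows 0-2 stand for LVIS categories, rows 3-4 for extra categories.
weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 2.0], [-1.0, 0.0]]
biases = [0.0, 0.0, 0.0, 0.0, 0.0]
feature = [1.0, 1.0]

full_prediction = classify(feature, weights, biases)        # may be an extra category
lvis_weights, lvis_biases = truncate_classifier(weights, biases, num_lvis=3)
lvis_prediction = classify(feature, lvis_weights, lvis_biases)  # always within LVIS
print(full_prediction, lvis_prediction)
```

During training the model must separate all categories, while at inference the truncated head can only answer with an LVIS category.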

Prompt diversity. The output images of a text2image generative model typically depend on the input prompts. Existing methods [34] usually generate prompts by manually designing templates, such as “a photo of a single {category_name}.” When the data scale is small, designing prompts manually is convenient and fast. However, when generating data at a large scale, it is challenging to scale the number of manually designed prompts correspondingly. Intuitively, it is essential to diversify the prompts to enhance data diversity. To easily generate a large number of prompts, we use a large language model, such as ChatGPT, to enhance prompt diversity. We have three requirements for the large language model: 1) each prompt should be as different as possible; 2) each prompt should ensure that there is only one object in the image; 3) prompts should describe different attributes of the category. For example, if the category is food, prompts should cover attributes like color, brand, size, freshness, packaging type, packaging color, etc. Limited by the inference cost of ChatGPT, we use the manually designed prompts as the base and only use ChatGPT to enhance the prompt diversity for a subset of categories. Moreover, we also leverage the controllability of the generative model, adding the constraint “in a white background” after each prompt to make the background of output images simple and clear, which reduces the difficulty of mask annotation.
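Combining the manual template with language-model prompts and the background constraint might look roughly like the sketch below. The function `build_prompts`, the comma used to join the suffix, and the two example prompts are assumptions for illustration; the actual prompts produced by ChatGPT are not given in the text.

```python
MANUAL_TEMPLATE = "a photo of a single {category_name}"
BACKGROUND_SUFFIX = "in a white background"

def build_prompts(category_name, llm_prompts=()):
    """Merge the manual template with optional LLM-written prompts,
    drop duplicates, and append the background constraint to each."""
    prompts = [MANUAL_TEMPLATE.format(category_name=category_name)]
    for prompt in llm_prompts:
        prompt = prompt.strip().rstrip(".")
        if prompt and prompt not in prompts:
            prompts.append(prompt)
    return [f"{prompt}, {BACKGROUND_SUFFIX}" for prompt in prompts]

# Illustrative LLM outputs (made up for this example).
llm_outputs = [
    "a shiny red apple with a green leaf.",
    "a small bruised green apple",
    "a small bruised green apple",  # duplicate, will be dropped
]
for prompt in build_prompts("apple", llm_outputs):
    print(prompt)
```

Keeping the manual prompt as the first entry mirrors the idea of using manually designed prompts as the base and adding language-model prompts on top.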

Generative model diversity. The quality and style of output images vary across generative models, and the data distribution learned solely from one generative model’s data is limited. Therefore, we introduce multiple generative models to enhance the diversity of data, allowing the model to learn from wider data distributions. We selected two commonly used generative models, Stable Diffusion [22] (SD) and DeepFloyd-IF [24] (IF). We use Stable Diffusion V1.5, generating images with a resolution of 512 × 512, and use images output from Stage II of IF with a resolution of 256 × 256. For each category in LVIS, we generated 1k images using two models separately. Examples from different generative models are shown in Figure 2.

Figure 2: Examples of various generative models. The samples generated by different generative models vary, even within the same category.

Figure 3: Overview of the DiverGen pipeline. In instance generation, we enhance data diversity at three levels: category diversity, prompt diversity, and generative model diversity. Next, we use SAM-background to obtain high-quality masks. Then, we use CLIP inter-similarity to filter out low-quality data. Finally, we use the instance paste strategy to increase model learning efficiency on generative data.

3.3 Generative Pipeline

The generative pipeline of DiverGen is built upon X-Paste [34]. It can be divided into four stages: instance generation, instance annotation, instance filtration and instance augmentation. The overview of DiverGen is illustrated in Figure 3.

Instance generation. Instance generation is a crucial stage for enhancing data diversity. In this stage, we employ our proposed Generative Data Diversity Enhancement (GDDE), as mentioned in Sec 3.2. In category diversity enhancement, we utilize the category information from LVIS [8] categories and extra categories selected from ImageNet-1K [23]. In prompt diversity enhancement, we utilize manually designed prompts and ChatGPT designed prompts to enhance prompt diversity. In model diversity enhancement, we employ two generative models, SD and IF.

Instance annotation. We employ SAM [12] as our annotation model. SAM is a class-agnostic promptable segmenter that outputs corresponding masks based on input prompts, such as points, boxes, etc. In instance generation, leveraging the controllability of the generative model, the generative images have two characteristics: 1) each image predominantly contains only one foreground object; 2) the background of the images is relatively simple. Therefore, we introduce a SAM-background (SAM-bg) annotation strategy. SAM-bg takes the four corner points of an image as input prompts for SAM to obtain the background mask, then inverts the background mask as the mask of the foreground object. Due to the conditional constraints during the instance generation stage, this strategy is simple but effective in producing high-quality masks.
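The SAM-bg idea (prompt with the four corners, take the resulting background mask, invert it) can be sketched independently of SAM itself. In the sketch below, `segment_from_points` is a hypothetical callable standing in for a promptable segmenter, and `toy_segmenter` is a simple region-growing stand-in so the example runs without any model; neither is the real SAM API.

```python
from collections import deque

def corner_points(height, width):
    """Four image corners as (x, y) pixel coordinates."""
    return [(0, 0), (width - 1, 0), (0, height - 1), (width - 1, height - 1)]

def sam_background_mask(image, segment_from_points):
    """Segment the background from the corner prompts, then invert it."""
    height, width = len(image), len(image[0])
    background = segment_from_points(image, corner_points(height, width))
    return [[not pixel for pixel in row] for row in background]

def toy_segmenter(image, points):
    """Stand-in for SAM: grow a region from each point over equal-valued pixels."""
    height, width = len(image), len(image[0])
    mask = [[False] * width for _ in range(height)]
    queue = deque()
    for x, y in points:
        if not mask[y][x]:
            mask[y][x] = True
            queue.append((x, y))
    while queue:
        x, y = queue.popleft()
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            inside = 0 <= nx < width and 0 <= ny < height
            if inside and not mask[ny][nx] and image[ny][nx] == image[y][x]:
                mask[ny][nx] = True
                queue.append((nx, ny))
    return mask

# 0 = white background, 1 = the single generated object.
image = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
foreground = sam_background_mask(image, toy_segmenter)
for row in foreground:
    print("".join("#" if pixel else "." for pixel in row))
```

The approach relies on the two properties stated above: a single foreground object and a simple background, so that the corners reliably land on background pixels.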

Instance filtration. In the instance filtration stage, X-Paste utilizes the CLIP score (similarity between images and text) as the metric for image filtering. However, we observe that the CLIP score is ineffective in filtering low-quality images. In contrast to the similarity between images and text, we think the similarity between images can better filter out low-quality images. Therefore, we propose a new metric called CLIP inter-similarity. We use the image encoder of CLIP [21] to extract image embeddings for objects in the training set and generative images, then calculate the similarity between them. If the similarity is too low, it indicates a significant disparity between the generative and real images, suggesting that it is probably a poor-quality image and needs to be filtered.
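A minimal sketch of image-to-image filtering is shown below. It assumes the embeddings have already been extracted by an image encoder and are plain lists of floats; averaging the cosine similarity over the real instances and the threshold value of 0.6 are assumptions made for illustration, since the text does not specify how similarities are aggregated or which threshold is used.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def inter_similarity(gen_embedding, real_embeddings):
    """Mean similarity of one generated image to the real instances (assumed aggregation)."""
    total = sum(cosine_similarity(gen_embedding, real) for real in real_embeddings)
    return total / len(real_embeddings)

def filter_generated(gen_embeddings, real_embeddings, threshold):
    """Return indices of generated images whose similarity reaches the threshold."""
    return [index for index, embedding in enumerate(gen_embeddings)
            if inter_similarity(embedding, real_embeddings) >= threshold]

# Toy 2-D embeddings; real encoders produce much higher-dimensional vectors.
real = [[1.0, 0.0], [0.9, 0.1]]
generated = [[1.0, 0.05], [0.0, 1.0]]
kept = filter_generated(generated, real, threshold=0.6)  # hypothetical threshold
print(kept)
```

Here the first generated embedding lies close to the real ones and is kept, while the second points in a different direction and is discarded.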

Instance augmentation. We use the augmentation strategy proposed by X-Paste [34], but we use only the generative data as the paste data source, without the data retrieved from the internet or the instances in the LVIS [8] training set.

4 Experiments

4.1 Settings

Datasets. We choose LVIS [8] for our experiments. LVIS is a large-scale instance segmentation dataset, containing 164k images with approximately two million high-quality annotations of instance segmentation and object detection. LVIS dataset uses images from COCO 2017 [15] dataset, but redefines the train/val/test splits, with around 100k images in the training set and around 20k images in the validation set. The annotations in LVIS cover 1,203 categories, with a typical long-tailed distribution of categories, so LVIS further divides the categories into frequent, common, and rare based on the frequency of each category in the dataset. We use the official LVIS training split and the validation split.

Evaluation metrics. The evaluation metrics are LVIS box average precision ($\mathrm{AP}^{\text{box}}$) and mask average precision ($\mathrm{AP}^{\text{mask}}$). We also report the average precision on rare categories ($\mathrm{AP}_r^{\text{box}}$ and $\mathrm{AP}_r^{\text{mask}}$). The maximum number of detections per image is 300.

Implementation details. We use CenterNet2 [35] as the baseline and Swin-L [16] as the backbone. In the training process, we initialize the parameters with the pre-trained Swin-L weights provided by Liu et al. [16]. The training size is 896 and the batch size is 16. The maximum number of training iterations is 180,000, with an initial learning rate of 0.0001. We use the instance paste strategy provided by Zhao et al. [34].
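For quick reference, the settings above can be collected in a small configuration dictionary. The key names are hypothetical and do not correspond to any particular framework's config schema; the epoch estimate uses the approximate training-set size of 100k images mentioned in the dataset description.

```python
# Hyperparameters as stated in the settings; key names are illustrative only.
TRAIN_CONFIG = {
    "detector": "CenterNet2",
    "backbone": "Swin-L",
    "train_size": 896,
    "batch_size": 16,
    "max_iterations": 180_000,
    "base_lr": 1e-4,
    "max_detections_per_image": 300,
}

# Rough training budget implied by these numbers.
images_seen = TRAIN_CONFIG["batch_size"] * TRAIN_CONFIG["max_iterations"]
approx_train_images = 100_000  # "around 100k images in the training set"
approx_epochs = images_seen / approx_train_images
print(f"{images_seen:,} images seen, roughly {approx_epochs:.1f} passes over LVIS train")
```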

4.2 Main Results

Data diversity is more important than quantity. To investigate the impact of different scales of generative data, we use generative data of varying scales as paste data sources. We construct three datasets using only DeepFloyd-IF [24] with manually designed prompts, all containing the original 1,203 LVIS categories, but with per-category quantities of 0.25k, 0.5k, and 1k, resulting in total dataset scales of 300k, 600k, and 1,200k. As shown in Table 3, using generative data improves model performance compared to the baseline. However, as the dataset scale increases, model performance first improves and then declines: the model trained with 1,200k images performs worse than the one trained with 600k. Because the number of manually designed prompts is limited, the generative model produces similar data, as shown in Figure 4(a), so the model cannot benefit from more data. In contrast, with our proposed Generative Data Diversity Enhancement (GDDE), the increased data diversity lets the model trained with 1,200k images outperform the models trained with 600k images, improving on the 600k model without GDDE by 1.21 box AP and 1.04 mask AP. Moreover, at the same data scale of 600k, using GDDE increases box AP by 0.64 and mask AP by 0.55 compared to not using it. The results demonstrate that data diversity is more important than quantity. When the scale of data is small, increasing the quantity of data can improve model performance, which we consider an indirect way of increasing data diversity. However, this simplistic approach of solely increasing quantity to increase diversity has an upper limit. Once this limit is reached, explicit data diversity enhancement strategies become necessary to maintain the trend of model performance improvement.

| # Gen Data | GDDE | $\mathrm{AP}^{\text{box}}$ | $\mathrm{AP}^{\text{mask}}$ | $\mathrm{AP}_r^{\text{box}}$ | $\mathrm{AP}_r^{\text{mask}}$ |
| --- | --- | --- | --- | --- | --- |
| 0 | | 47.50 | 42.32 | 41.39 | 36.83 |
| 300k | | 49.65 | 44.01 | 45.68 | 41.11 |
| 600k | | 50.03 | 44.44 | 47.15 | 41.96 |
| 1,200k | | 49.44 | 43.75 | 42.96 | 37.91 |
| 600k | ✓ | 50.67 | 44.99 | 48.52 | 43.63 |
| 1,200k | ✓ | 51.24 | 45.48 | 50.07 | 45.85 |

Table 3: Results of different scales of generative data. When using the same data scale, models using our proposed GDDE can achieve higher performance than those without it, showing that data diversity is more important than quantity.
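The differences between rows of Table 3 can be recomputed with a few lines of Python, which makes it easy to see which pair of rows a quoted gain refers to. The dictionary layout and the helper `gain` are illustrative; the numbers are copied from the table.

```python
# (generated images in thousands, uses GDDE) -> (AP box, AP mask, APr box, APr mask)
table3 = {
    (0, False): (47.50, 42.32, 41.39, 36.83),
    (300, False): (49.65, 44.01, 45.68, 41.11),
    (600, False): (50.03, 44.44, 47.15, 41.96),
    (1200, False): (49.44, 43.75, 42.96, 37.91),
    (600, True): (50.67, 44.99, 48.52, 43.63),
    (1200, True): (51.24, 45.48, 50.07, 45.85),
}

def gain(row, reference):
    """Per-metric difference between two rows of Table 3."""
    return tuple(round(a - b, 2) for a, b in zip(table3[row], table3[reference]))

print("1,200k vs 600k, no GDDE:     ", gain((1200, False), (600, False)))
print("600k, GDDE vs no GDDE:       ", gain((600, True), (600, False)))
print("1,200k GDDE vs 600k no GDDE: ", gain((1200, True), (600, False)))
print("1,200k GDDE vs 600k GDDE:    ", gain((1200, True), (600, True)))
```

Without GDDE, doubling the data from 600k to 1,200k lowers every metric, whereas with GDDE the same doubling raises every metric.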

(a) Images of manually designed prompts.

(b) Images of ChatGPT designed prompts.

Figure 4: Examples of generative data using different prompts. By using prompts designed by ChatGPT, the diversity of generated images in terms of shapes, textures, etc. can be significantly improved.

Comparison with previous methods. We compare DiverGen with previous data-augmentation methods in Table 4. Compared to the baseline CenterNet2 [35], our method improves significantly, increasing box AP by +3.7 and mask AP by +3.2. On rare categories, our method surpasses the baseline by +8.7 box AP and +9.0 mask AP. Compared to the previous strong model X-Paste [34], we outperform it by +1.1 box AP and +1.1 mask AP across all categories, and by +1.9 box AP and +2.5 mask AP on rare categories. It is worth mentioning that X-Paste utilizes both generative data and web-retrieved data as paste data sources during training, while our method exclusively uses generative data as the paste data source. We achieve this by designing diversity enhancement strategies, further unlocking the potential of generative models.

| Method | Backbone | $\mathrm{AP}^{\text{box}}$ | $\mathrm{AP}^{\text{mask}}$ | $\mathrm{AP}_r^{\text{box}}$ | $\mathrm{AP}_r^{\text{mask}}$ |
| --- | --- | --- | --- | --- | --- |
| Copy-Paste [7] | EfficientNet-B7 | 41.6 | 38.1 | - | 32.1 |
| Tan et al. [26] | ResNeSt-269 | - | 41.5 | - | 30.0 |
| Detic [36] | Swin-B | 46.9 | 41.7 | 45.9 | 41.7 |
| CenterNet2 [35] | Swin-L | 47.5 | 42.3 | 41.4 | 36.8 |
| X-Paste [34] | Swin-L | 50.1 | 44.4 | 48.2 | 43.3 |
| DiverGen (Ours) | Swin-L | 51.2 | 45.5 | 50.1 | 45.8 |
| | | (+1.1) | (+1.1) | (+1.9) | (+2.5) |

Table 4: Comparison with previous methods on LVIS val set.

4.3 Ablation Studies

We analyze the effects of the proposed strategies in DiverGen through a series of ablation studies using the Swin-L [16] backbone.

Effect of category diversity. We select 50, 250, and 566 extra categories from ImageNet-1K [23], and generate 0.5k images for each category, which are added to the baseline. The baseline only uses the 1,203 categories of LVIS [8] to generate data. We show the results in Table 5. Generally, as the number of extra categories increases, model performance first improves and then declines, peaking at 250 extra categories. The trend suggests that using extra categories to enhance category diversity can improve the model’s generalization capabilities, but too many extra categories may mislead the model, leading to a decrease in performance.

| # Extra Category | $\mathrm{AP}^{\text{box}}$ | $\mathrm{AP}^{\text{mask}}$ | $\mathrm{AP}_r^{\text{box}}$ | $\mathrm{AP}_r^{\text{mask}}$ |
| --- | --- | --- | --- | --- |
| 0 | 49.44 | 43.75 | 42.96 | 37.91 |
| 50 | 49.92 | 44.17 | 44.94 | 39.86 |
| 250 | 50.59 | 44.77 | 47.99 | 42.91 |
| 566 | 50.35 | 44.63 | 47.68 | 42.53 |

Table 5: Ablation of the number of extra categories during training. Using extra categories to enhance category diversity can improve the model’s generalization capabilities, but too many extra categories may mislead the model, leading to a decrease in performance.

Effect of prompt diversity. We select a subset of categories and use ChatGPT to generate 32 and 128 prompts for each category, with each prompt being used to generate 8 and 2 images, respectively, so that the image count for each category stays at about 0.25k (32 × 8 = 128 × 2 = 256). The baseline uses only one prompt per category to generate 0.25k images. The regenerated images replace the corresponding categories in the baseline to keep the final data scale consistent. The results are presented in Table 6. As prompt diversity increases, overall box AP and mask AP improve continuously, indicating that prompt diversity is indeed beneficial for enhancing model performance.

| # Prompts | AP^box | AP^mask | AP_r^box | AP_r^mask |
| --- | --- | --- | --- | --- |
| 1 | 49.65 | 44.01 | 45.68 | 41.11 |
| 32 | 50.03 | 44.39 | 45.83 | 41.32 |
| 128 | 50.27 | 44.50 | 46.49 | 41.25 |

Table 6: Ablation of the number of prompts used to generate data. Model performance improves overall as prompt diversity increases, indicating that prompt diversity is beneficial for enhancing model performance.
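The fixed-budget design of this ablation (more prompts, fewer images per prompt) can be sketched as follows. This is a minimal illustration and not the authors' pipeline: it assumes that "0.25k" means 256 images per category, and the function names and example prompts are hypothetical.

```python
def images_per_prompt(images_per_category, num_prompts):
    """Split a fixed per-category image budget evenly across prompts."""
    if images_per_category % num_prompts != 0:
        raise ValueError("budget must be divisible by the number of prompts")
    return images_per_category // num_prompts


def build_generation_plan(category, prompts, images_per_category=256):
    """Return (category, prompt, image_count) triples for one category."""
    count = images_per_prompt(images_per_category, len(prompts))
    return [(category, prompt, count) for prompt in prompts]


BUDGET = 256  # assumption: "0.25k" images per category is taken as 256

for num_prompts in (1, 32, 128):
    per_prompt = images_per_prompt(BUDGET, num_prompts)
    print(f"{num_prompts} prompts -> {per_prompt} images per prompt")

# Hypothetical prompts; in the ablation they are produced by ChatGPT.
prompts = [f"a photo of a zebra, variant {i}" for i in range(32)]
plan = build_generation_plan("zebra", prompts, BUDGET)
print(len(plan), "prompts,", sum(count for _, _, count in plan), "images in total")
```

Holding the budget fixed isolates the effect of prompt diversity from the effect of simply adding more data.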

Effect of generative model diversity. We choose two commonly used generative models, Stable Diffusion [22] (SD) and DeepFloyd-IF [24] (IF). For each generative model, we generate 1k images per category, totaling 1,200k images. For the mixed dataset (SD + IF), we take 600k images from SD and 600k from IF (about 0.5k per category from each), so that the total dataset scale stays the same. The baseline does not use any generative data (none). As shown in Table 7, using data generated by either SD or IF alone improves performance, and mixing the generative data of both leads to further, significant gains. This demonstrates that increasing generative model diversity is beneficial for improving model performance.

| Model | AP^box | AP^mask | AP_r^box | AP_r^mask |
| --- | --- | --- | --- | --- |
| none | 47.50 | 42.32 | 41.39 | 36.83 |
| SD [22] | 48.13 | 42.82 | 43.68 | 39.15 |
| IF [24] | 49.44 | 43.75 | 42.96 | 37.91 |
| SD + IF | 50.78 | 45.27 | 48.94 | 44.35 |

Table 7: Ablation of different generative models. Increasing generative model diversity is beneficial for improving model performance.
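One simple way to mix two generators while keeping the data scale fixed is to sample an equal share from each source for every category. The sketch below assumes that reading of the ablation; the function name, file names, and the equal-share rule are illustrative and are not taken from the DiverGen code.

```python
import random


def mix_sources(images_by_source, per_category_total, seed=0):
    """Sample an equal share from each source so the category total stays fixed."""
    sources = sorted(images_by_source)
    share, remainder = divmod(per_category_total, len(sources))
    if remainder:
        raise ValueError("per_category_total must divide evenly among sources")
    rng = random.Random(seed)  # fixed seed keeps the subset reproducible
    mixed = []
    for name in sources:
        pool = images_by_source[name]
        # random.sample draws without replacement, so no image is duplicated.
        mixed.extend((name, image) for image in rng.sample(pool, share))
    return mixed


# Hypothetical file names for one category: 1,000 images per generator.
pools = {
    "SD": [f"sd_{i:04d}.png" for i in range(1000)],
    "IF": [f"if_{i:04d}.png" for i in range(1000)],
}
mixed = mix_sources(pools, per_category_total=1000)
sd_count = sum(1 for source, _ in mixed if source == "SD")
print(len(mixed), "images,", sd_count, "from SD,", len(mixed) - sd_count, "from IF")
```

Because the mixed set has the same size as each single-model set, any gain in Table 7 can be attributed to diversity rather than to extra data.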

Effect of annotation strategy. X-Paste [34] uses four models (U2Net [20], SelfReformer [31], UFO [25], and CLIPseg [17]) to generate masks and selects the one with the highest CLIP score. We compare our proposed annotation strategy (SAM-bg) with the one proposed by X-Paste (max CLIP). As shown in Table 8, SAM-bg outperforms the max CLIP strategy on all metrics, indicating that our strategy produces better annotations and thereby improves model performance. As shown in Figure 5, SAM-bg unlocks the potential of SAM, obtaining precise and refined masks.

Figure 5: Examples of object masks produced by different annotation strategies. SAM-bg can obtain more complete and refined masks.

| Strategy | AP^box | AP^mask | AP_r^box | AP_r^mask |
| --- | --- | --- | --- | --- |
| max CLIP [34] | 49.10 | 43.45 | 42.75 | 37.55 |
| SAM-bg | 49.44 | 43.75 | 42.96 | 37.91 |

Table 8: Ablation of different annotation strategies. Our proposed SAM-bg can produce better annotations, improving model performance.

Effect of CLIP inter-similarity. We compare our proposed CLIP inter-similarity with the CLIP score [34] as a filtering criterion. The results are shown in Table 9. Training on data filtered by CLIP inter-similarity yields higher performance than training on data filtered by CLIP score, demonstrating that CLIP inter-similarity filters low-quality images more effectively.

| Strategy | AP^box | AP^mask | AP_r^box | AP_r^mask |
| --- | --- | --- | --- | --- |
| none | 49.44 | 43.75 | 42.96 | 37.91 |
| CLIP score [34] | 49.84 | 44.27 | 44.83 | 40.82 |
| CLIP inter-similarity | 50.07 | 44.44 | 45.53 | 41.16 |

Table 9: Ablation of different filtration strategies. Our proposed CLIP inter-similarity can filter low-quality images more effectively.
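A similarity-based filter of this kind can be sketched as below. This is a rough illustration under stated assumptions, not the authors' implementation: it assumes the metric compares CLIP image embeddings of generated images against embeddings of real training objects of the same category, aggregates them with a mean cosine similarity, and uses an illustrative threshold of 0.6. Toy 3-dimensional vectors stand in for real CLIP embeddings, and all function names are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def inter_similarity(gen_embedding, real_embeddings):
    """Mean cosine similarity between one generated image and real references."""
    total = sum(cosine(gen_embedding, real) for real in real_embeddings)
    return total / len(real_embeddings)


def filter_generated(generated, real_embeddings, threshold):
    """Keep generated images whose inter-similarity reaches the threshold."""
    return [
        name
        for name, embedding in generated.items()
        if inter_similarity(embedding, real_embeddings) >= threshold
    ]


# Toy vectors standing in for CLIP image embeddings (assumption).
real = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]]
generated = {
    "close_to_real.png": [1.0, 0.05, 0.0],
    "off_category.png": [0.0, 0.0, 1.0],
}
kept = filter_generated(generated, real, threshold=0.6)
print(kept)
```

In practice the embeddings would come from a CLIP image encoder, and the threshold would need to be tuned on held-out data.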

5 Conclusions

In this paper, we explain the role of generative data augmentation from the perspective of data distribution discrepancy and find that generative data can expand the data distribution that the model can learn, mitigating overfitting to the training set. Furthermore, we find that the diversity of generative data is crucial for improving model performance. We therefore design an efficient strategy, Generative Data Diversity Enhancement, which increases data diversity in three respects: category diversity, prompt diversity, and generative model diversity. Finally, we optimize the data generation pipeline by designing the annotation strategy SAM-background to obtain higher-quality annotations and by introducing the metric CLIP inter-similarity to filter data, which further improves the quality of the generative dataset. With these strategies, our proposed method significantly outperforms existing strong models. We hope DiverGen can provide new insights and inspiration for future research on the effectiveness and efficiency of generative data augmentation.

相关文章:

通过学习更多样化的生成数据进行更广泛的数据分发来改进实例分割

大家读完觉得有帮助记得关注和点赞!!! 本次使用的英文整理的一些记录,练习一下为后续SCI发表论文打好基础 Improving Instance Segmentation by Learning Wider Data Distribution with More Diverse Generative Data Abstract In…...

NVIDIA视频编解码

开源了两个项目:英伟达显卡视频编解码、jetson视频编解码。都是基于官方SDK进行的封装,由于官方自带的demo晦涩难懂并且每块都是独立的,我对SDK进行二次封装并形成了一套较为完整的视频编解码流程,调用简单,有完整的测…...

GCC支持Objective C的故事?Objective-C?GCC只能编译C语言吗?Objective-C 1.0和2.0有什么区别?

GCC支持Objective C的故事 Objective-C 主要由 Stepstone 公司的Brad Cox和 Tom Love 在1980 年左右发明。乔布斯离开苹果公司后成立了NeXT STEP公司, 买下了Objective-C 语言的授权。GCC对Objective-C语言的支持是在1992年加入的,具体是在GCC 1.3版本中…...

详解深度学习中的Dropout

Dropout是一种在神经网络训练中常用的正则化技术,其操作是在每次训练迭代中随机“丢弃”一部分神经元(即将其输出置为零)。以下是对这一操作的详细解释: 一、基本思想 Dropout的基本思想是减少神经元之间的复杂共适应关系&#…...

SQL-杂记1

PIVOT的使用: 行转列IIF()的使用:IIF( boolean_expression, true_value, false_value)多个字段使用MX()函数 SELECTD.ID,字段1,字段2,字段3,字段4,字段5,X.MinDateValue FROM 表名 D WITH(NOLOCK) OUTER APPLY (SELECT MIN(DateValue) AS MinDateValueFROM (VALUES (字段1),(字…...

Python(十七)excel指定列自动翻译成英文

前言 本章主要讲述在excel的指定列后面添加一列,并翻译成英文 一、效果图 二、代码 实际需求: # -*- codeing utf-8 -*- # time: 2025/1/16 16:32 # Author : Mikasa # # Aim:自动将客户发的货物清单里的商品名称,翻译成英文…...

Ubuntu20.04取消root账号自动登录的方法,触觉智能RK3568开发板演示

Ubuntu20.04默认情况下为root账号自动登录,本文介绍如何取消root账号自动登录,改为通过输入账号密码登录,使用触觉智能EVB3568鸿蒙开发板演示,搭载瑞芯微RK3568,四核A55处理器,主频2.0Ghz,1T算力…...

诡异的Spring @RequestBody驼峰命名字段映射失败为null问题记录

问题 一个非常常规的Spring Controller,代码如下: import lombok.RequiredArgsConstructor;Slf4j RestController RequiredArgsConstructor RequestMapping("/api/v1/config") public class ConfigController {private final ConfigService …...

YOLOv10改进,YOLOv10检测头融合RFAConv卷积,添加小目标检测层(四头检测)+CA注意机制,全网首发

摘要 空间注意力已广泛应用于提升卷积神经网络(CNN)的性能,但它存在一定的局限性。作者提出了一个新的视角,认为空间注意力机制本质上解决了卷积核参数共享的问题。然而,空间注意力生成的注意力图信息对于大尺寸卷积核来说是不足够的。因此,提出了一种新型的注意力机制—…...

)

周末总结(2024/01/18)

工作 人际关系核心实践: 要学会随时回应别人的善意,执行时间控制在5分钟以内 坚持每天早会打招呼 遇到接不住的话题时拉低自己,抬高别人(无阴阳气息) 朋友圈点赞控制在5min以内,职场社交不要放在5min以外 职场的人际关系在面对利…...

LLM - 大模型 ScallingLaws 的迁移学习与混合训练(PLM) 教程(3)

欢迎关注我的CSDN:https://spike.blog.csdn.net/ 本文地址:https://spike.blog.csdn.net/article/details/145212097 免责声明:本文来源于个人知识与公开资料,仅用于学术交流,欢迎讨论,不支持转载。 Scalin…...

【软件开发过程管理规范】需求管理,需求分析,设计开发管理,测试管理(Word)

一、需求管理规程 1 简介 2 过程总体描述 2.1 过程概述 2.2 过程流程图 3 过程元素描述 3.1 准备阶段 3.2 需求调研 3.3 需求分析 软件开发人员及用户往往容易忽略信息沟通,这导致软件开发出来后不能很好地满足用户的需要,从而造成返工。而返工不仅在技术…...

计算机网络 (49)网络安全问题概述

前言 计算机网络安全问题是一个复杂且多维的领域,它涉及到网络系统的硬件、软件以及数据的安全保护,确保这些元素不因偶然的或恶意的原因而遭到破坏、更改或泄露。 一、计算机网络安全的定义 计算机网络安全是指利用网络管理控制和技术措施,保…...

RPA编程实践:Electron实践开始

文章目录 前言闲话少叙,打开官网版本发布安装在 Windows 上安装在 macOS 上安装在 Linux (Ubuntu) 上安装 前言 上回说道,我们electron适合于熟悉web开发,但想要研发桌面应用的人。 但我觉得这个需求应该不是很多。 因为使用electron&#…...

ORB-SLAM2源码学习:MapPoint.cc④: 新增地图点总结

前言 让我们来总结ORB-SLAM2 中的新增地图点。 1.在第一阶段跟踪中的恒速模型跟踪中新增地图点 针对双目相机或RGB-D相机,找出上一帧中具有有效深度值且不是地图点的特征点,将其中较近的点作为上一帧新的临时地图点, 并记录在向扯mlpTempo…...

2025西湖论剑-babytrace

前言 就做了下题目,pwn1/3 都是签到,pwn2 后面绕 ptrace 有点意思,简单记录一下 漏洞分析 子进程中的读/写功能没有检查负数的情况,存在越界读写: void __fastcall get_value(__int64 *int64_arr) {__int64 ll; //…...

绘图专用,26个常见流程图符号及其解释

关注作者 当您设计网站、构建应用程序或绘制业务系统时,您需要一种方法来清晰地绘制步骤和用户流程。虽然您可以使用流程图来概述这些过程,但箭头和方框只能帮助您到目前为止。为了清楚地表达您的意思,您需要流程图符号。 为了帮助解释每个流…...

是什么?)

【个人学习记录】软件开发生命周期(SDLC)是什么?

软件开发生命周期(Software Development Life Cycle,SDLC)是一个用于规划、创建、测试和部署信息系统的结构化过程。它包含以下主要阶段: 需求分析(Requirements Analysis) 收集并分析用户需求定义系统目标…...

自学SpringBoot笔记

概念 什么是SpringBoot? Spring Boot 是基于 Spring Framework 的一款开源框架,主要用于简化 Spring 应用程序的开发。它通过提供一系列的 开箱即用的功能 和 自动配置,让开发者可以快速构建生产级别的独立应用程序,而无需手动配…...

03JavaWeb——Ajax-Vue-Element(项目实战)

1 Ajax 1.1 Ajax介绍 1.1.1 Ajax概述 我们前端页面中的数据,如下图所示的表格中的学生信息,应该来自于后台,那么我们的后台和前端是互不影响的2个程序,那么我们前端应该如何从后台获取数据呢?因为是2个程序…...

利用最小二乘法找圆心和半径

#include <iostream> #include <vector> #include <cmath> #include <Eigen/Dense> // 需安装Eigen库用于矩阵运算 // 定义点结构 struct Point { double x, y; Point(double x_, double y_) : x(x_), y(y_) {} }; // 最小二乘法求圆心和半径 …...

【杂谈】-递归进化:人工智能的自我改进与监管挑战

递归进化:人工智能的自我改进与监管挑战 文章目录 递归进化:人工智能的自我改进与监管挑战1、自我改进型人工智能的崛起2、人工智能如何挑战人类监管?3、确保人工智能受控的策略4、人类在人工智能发展中的角色5、平衡自主性与控制力6、总结与…...

uni-app学习笔记二十二---使用vite.config.js全局导入常用依赖

在前面的练习中,每个页面需要使用ref,onShow等生命周期钩子函数时都需要像下面这样导入 import {onMounted, ref} from "vue" 如果不想每个页面都导入,需要使用node.js命令npm安装unplugin-auto-import npm install unplugin-au…...

:滤镜命令)

ffmpeg(四):滤镜命令

FFmpeg 的滤镜命令是用于音视频处理中的强大工具,可以完成剪裁、缩放、加水印、调色、合成、旋转、模糊、叠加字幕等复杂的操作。其核心语法格式一般如下: ffmpeg -i input.mp4 -vf "滤镜参数" output.mp4或者带音频滤镜: ffmpeg…...

Axios请求超时重发机制

Axios 超时重新请求实现方案 在 Axios 中实现超时重新请求可以通过以下几种方式: 1. 使用拦截器实现自动重试 import axios from axios;// 创建axios实例 const instance axios.create();// 设置超时时间 instance.defaults.timeout 5000;// 最大重试次数 cons…...

涂鸦T5AI手搓语音、emoji、otto机器人从入门到实战

“🤖手搓TuyaAI语音指令 😍秒变表情包大师,让萌系Otto机器人🔥玩出智能新花样!开整!” 🤖 Otto机器人 → 直接点明主体 手搓TuyaAI语音 → 强调 自主编程/自定义 语音控制(TuyaAI…...

DeepSeek 技术赋能无人农场协同作业:用 AI 重构农田管理 “神经网”

目录 一、引言二、DeepSeek 技术大揭秘2.1 核心架构解析2.2 关键技术剖析 三、智能农业无人农场协同作业现状3.1 发展现状概述3.2 协同作业模式介绍 四、DeepSeek 的 “农场奇妙游”4.1 数据处理与分析4.2 作物生长监测与预测4.3 病虫害防治4.4 农机协同作业调度 五、实际案例大…...

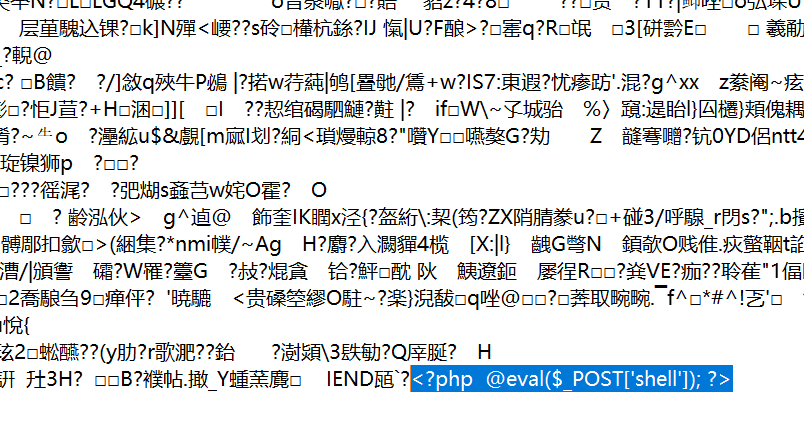

零基础在实践中学习网络安全-皮卡丘靶场(第九期-Unsafe Fileupload模块)(yakit方式)

本期内容并不是很难,相信大家会学的很愉快,当然对于有后端基础的朋友来说,本期内容更加容易了解,当然没有基础的也别担心,本期内容会详细解释有关内容 本期用到的软件:yakit(因为经过之前好多期…...

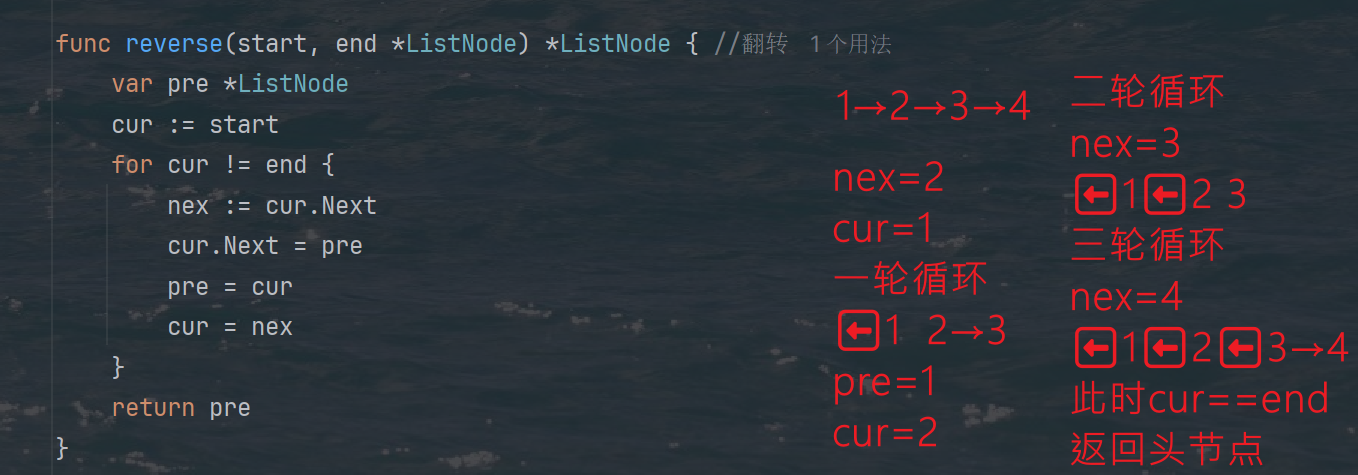

力扣热题100 k个一组反转链表题解

题目: 代码: func reverseKGroup(head *ListNode, k int) *ListNode {cur : headfor i : 0; i < k; i {if cur nil {return head}cur cur.Next}newHead : reverse(head, cur)head.Next reverseKGroup(cur, k)return newHead }func reverse(start, end *ListNode) *ListN…...

Python+ZeroMQ实战:智能车辆状态监控与模拟模式自动切换

目录 关键点 技术实现1 技术实现2 摘要: 本文将介绍如何利用Python和ZeroMQ消息队列构建一个智能车辆状态监控系统。系统能够根据时间策略自动切换驾驶模式(自动驾驶、人工驾驶、远程驾驶、主动安全),并通过实时消息推送更新车…...