ELK Stack: Log Collection Case Study

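Because of the limits of the lab environment, Filebeat and Logstash are deployed on tomcat-server-nodeX; Redis and the Logstash instance that writes to the ES cluster are deployed on redis-server; HAProxy and Keepalived are deployed on tomcat-server-nodeX; and Kibana is deployed on the ES cluster hosts.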

Environment:

| Hostname | CPU cores | RAM | IP | Services |
|---|---|---|---|---|
| es-server-node1 | 2 | 4G | 192.168.100.142 | Elasticsearch, Kibana, Head, Cerebro |
| es-server-node2 | 2 | 4G | 192.168.100.144 | Elasticsearch, Kibana |
| es-server-node3 | 2 | 4G | 192.168.100.146 | Elasticsearch, Kibana |
| tomcat-server-node1 | 2 | 2G | 192.168.100.150 | Logstash, Filebeat, HAProxy, Tomcat |
| tomcat-server-node2 | 2 | 2G | 192.168.100.152 | Logstash, Filebeat, HAProxy, nginx, Tomcat |
| redis-server | 2 | 2G | 192.168.100.154 | Redis, Logstash, MySQL |

1. Basic environment

1.1 ES and Logstash require a Java environment

Prerequisites: disable the firewall and SELinux, and synchronize the system time.

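ES installation notes

For the dependencies and notes that apply to each ES version, see the official documentation: ES installation notes.

Logstash installation notes

For the dependencies and notes that apply to each Logstash version, see the official documentation: Logstash installation notes.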

1.2 Install the basic build tools for HAProxy, Redis and nginx

On Ubuntu:

apt -y purge ufw lxd lxd-client lxcfs liblxc-common

apt -y install iproute2 ntpdate tcpdump telnet traceroute nfs-kernel-server nfs-common lrzsz tree openssl libssl-dev libpcre3 libpcre3-dev zlib1g-dev gcc make openssh-server iotop unzip zip

On CentOS 7:

yum -y install vim-enhanced tcpdump lrzsz tree telnet bash-completion net-tools wget bzip2 lsof tmux man-pages zip unzip nfs-utils gcc make gcc-c++ glibc glibc-devel pcre pcre-devel openssl openssl-devel systemd-devel zlib-devel

2. Configure Filebeat to write data to Redis via Logstash

Log flow:

tomcat-server:filebeat --> tomcat-server:logstash --> redis-server:redis
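Redis acts as a buffer between the two Logstash tiers. With data_type => "list", the producer side appends each event to the tail of a list and the consumer side later removes events from the head of the same list, so Redis behaves like a FIFO queue. The sketch below models that with an in-memory deque instead of a real Redis server (an assumption so it runs standalone); the method names mirror the Redis commands RPUSH, LPOP and LLEN, and ListQueue, ship and drain are hypothetical helpers, not Logstash APIs.

```python
import json
from collections import deque


class ListQueue:
    """In-memory stand-in for one Redis list key (hypothetical helper)."""

    def __init__(self):
        self._items = deque()

    def rpush(self, value):
        # Producer side: append to the tail, like RPUSH; returns the new length.
        self._items.append(value)
        return len(self._items)

    def lpop(self):
        # Consumer side: remove from the head, like LPOP; None when empty.
        return self._items.popleft() if self._items else None

    def llen(self):
        return len(self._items)


def ship(queue, lines, source_name):
    """Tomcat-side Logstash: wrap each line as a JSON event and enqueue it."""
    for line in lines:
        event = {"message": line, "fields": {"name": source_name}}
        queue.rpush(json.dumps(event))


def drain(queue):
    """redis-server-side Logstash: pop events until the list is empty."""
    events = []
    while (raw := queue.lpop()) is not None:
        events.append(json.loads(raw))
    return events


if __name__ == "__main__":
    q = ListQueue()
    ship(q, ["line 1", "line 2", "line 3"], "syslog_from_filebeat_150")
    print(q.llen())  # events buffered, like LLEN syslog_150
    out = drain(q)
    print([e["message"] for e in out], q.llen())
```

FIFO order is preserved, and once the consumer has drained everything the length drops to 0. In real Redis an empty list key is deleted, which is why KEYS * eventually returns (empty list or set).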

2.1 Filebeat configuration

2.1.1 tomcat-server-node1

filebeat: logstash-output doc

root@tomcat-server-node1:~# ip addr show eth0 | grep "inet "
    inet 192.168.100.150/24 brd 192.168.100.255 scope global eth0
root@tomcat-server-node1:~# vim /etc/filebeat/filebeat.yml
...
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/syslog
  document_type: system-log
  exclude_lines: ['^DBG']
  fields:
    # Logstash uses this value to tell log sources apart, so it can route them to
    # different outputs or create different indices (when the output is Elasticsearch)
    name: syslog_from_filebeat_150

output.logstash:
  hosts: ["192.168.100.150:5044"]
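exclude_lines takes a list of regular expressions, and Filebeat drops every line that matches any of them before shipping; lines that survive carry the custom fields.name value. The Python sketch below approximates that per-line decision with the re module. Filebeat itself uses Go regular expressions, so this is only an illustration for simple patterns such as '^DBG'; to_events is a hypothetical helper and the sample lines are invented.

```python
import re

EXCLUDE_LINES = [r"^DBG"]                       # same pattern as in filebeat.yml
FIELDS = {"name": "syslog_from_filebeat_150"}   # same custom field as above

_patterns = [re.compile(p) for p in EXCLUDE_LINES]


def to_events(lines):
    """Drop lines matching any exclude pattern; tag the rest with the custom fields."""
    events = []
    for line in lines:
        if any(p.search(line) for p in _patterns):
            continue  # excluded: never shipped to Logstash
        events.append({"message": line, "fields": dict(FIELDS)})
    return events


if __name__ == "__main__":
    sample = [
        "DBG noisy debug line",
        "Mar 22 13:52:00 tomcat-server-node1 kernel: ok",
        "INFO DBG appears mid-line",  # kept: ^DBG only matches at line start
    ]
    for e in to_events(sample):
        print(e["fields"]["name"], e["message"])
```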

2.1.2 tomcat-server-node2

root@tomcat-server-node2:~# ip addr show eth0 | grep "inet "
    inet 192.168.100.152/24 brd 192.168.100.255 scope global eth0
root@tomcat-server-node2:~# vim /etc/filebeat/filebeat.yml
...
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/syslog
  document_type: system-log
  exclude_lines: ['^DBG']
  fields:
    name: syslog_from_filebeat_152

output.logstash:
  hosts: ["192.168.100.152:5044"]
...

2.2 Logstash configuration

logstash filebeat input plugin

logstash redis output plugin

2.2.1 tomcat-server-node1

root@tomcat-server-node1:/etc/logstash/conf.d# cat syslog_from_filebeat.conf

input {
  beats {
    host => "192.168.100.150"
    port => "5044"
  }
}

output {
  redis {
    host => "192.168.100.154"
    port => "6379"
    db => "1"
    key => "syslog_150"
    data_type => "list"
    password => "stevenux"
  }
}

Restart Logstash:

~# systemctl restart logstash

2.2.2 tomcat-server-node2

root@tomcat-server-node2:/etc/logstash/conf.d# cat syslog_from_filebeat.conf

input {
  beats {
    host => "192.168.100.152"
    port => "5044"
  }
}

output {
  redis {
    host => "192.168.100.154"
    port => "6379"
    db => "1"
    key => "syslog_152"
    data_type => "list"
    password => "stevenux"
  }
}

Restart Logstash:

~# systemctl restart logstash

2.3 Redis configuration

2.3.1 Disable RDB and AOF persistence

root@redis-server:/etc/logstash/conf.d# cat /usr/local/redis/redis.conf

...
bind 0.0.0.0
port 6379
daemonize yes
supervised no
pidfile /var/run/redis_6379.pid
loglevel notice
databases 16
# Set a password:
# requirepass foobared
# Disable RDB by commenting out every save line:
#save 900 1
#save 300 10
#save 60 10000
#dbfilename dump.rdb
# Disable AOF (comments must be on their own line in redis.conf):
appendonly no
...

2.3.2 Set a password

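Start Redis with the configuration file above (daemonize is set to yes, so it runs in the background):

root@redis-server:/etc/logstash/conf.d# /usr/local/redis/src/redis-server /usr/local/redis/redis.conf

Then set the password with redis-cli. A password set this way is lost when Redis restarts:

127.0.0.1:6379> CONFIG SET requirepass 'stevenux'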

2.4 Check the Logstash and Filebeat logs and the Redis data

2.4.1 Redis data

root@redis-server:~# redis-cli

127.0.0.1:6379> AUTH stevenux

OK

127.0.0.1:6379> SELECT 1

OK

127.0.0.1:6379[1]> KEYS *

1) "syslog_152"

2) "syslog_150"

127.0.0.1:6379[1]> LLEN syslog_152

(integer) 3760

127.0.0.1:6379[1]> LLEN syslog_150

(integer) 4125

127.0.0.1:6379[1]> LPOP syslog_152

"{\"agent\":{\"hostname\":\"tomcat-server-node2\",\"id\":\"93f937e9-e692-4434-8b83-7562f95ef976\",\"type\":\"filebeat\",\"version\":\"7.6.1\",\"ephemeral_id\":\"5cf51f37-15b3-44b7-bd01-293f0290774b\"},\"host\":{\"name\":\"tomcat-server-node2\",\"hostname\":\"tomcat-server-node2\",\"id\":\"e96c1092201442a4aeb7f67c5c417605\",\"architecture\":\"x86_64\",\"containerized\":false,\"os\":{\"codename\":\"bionic\",\"name\":\"Ubuntu\",\"platform\":\"ubuntu\",\"kernel\":\"4.15.0-55-generic\",\"family\":\"debian\",\"version\":\"18.04.3 LTS (Bionic Beaver)\"}},\"input\":{\"type\":\"log\"},\"@timestamp\":\"2020-03-22T05:52:07.572Z\",\"ecs\":{\"version\":\"1.4.0\"},\"tags\":[\"beats_input_codec_plain_applied\"],\"log\":{\"offset\":21760382,\"file\":{\"path\":\"/var/log/syslog\"}},\"message\":\"Mar 22 13:52:00 tomcat-server-node2 filebeat[1136]: 2020-03-22T13:52:00.256+0800#011ERROR#011pipeline/output.go:100#011Failed to connect to backoff(async(tcp://192.168.100.152:5044)): dial tcp 192.168.100.152:5044: connect: connection refused\",\"@version\":\"1\",\"fields\":{\"name\":\"syslog_from_filebeat_152\"}}"

127.0.0.1:6379[1]> LPOP syslog_150

"{\"@timestamp\":\"2020-03-22T05:46:08.122Z\",\"tags\":[\"beats_input_codec_plain_applied\"],\"fields\":{\"name\":\"syslog_from_filebeat_150\"},\"@version\":\"1\",\"agent\":{\"hostname\":\"tomcat-server-node1\",\"id\":\"93f937e9-e692-4434-8b83-7562f95ef976\",\"version\":\"7.6.1\",\"type\":\"filebeat\",\"ephemeral_id\":\"a03ec121-e70b-4039-a696-3e7ccefcb510\"},\"host\":{\"name\":\"tomcat-server-node1\",\"os\":{\"name\":\"Ubuntu\",\"platform\":\"ubuntu\",\"family\":\"debian\",\"kernel\":\"4.15.0-55-generic\",\"version\":\"18.04.3 LTS (Bionic Beaver)\",\"codename\":\"bionic\"},\"containerized\":false,\"architecture\":\"x86_64\",\"id\":\"e96c1092201442a4aeb7f67c5c417605\",\"hostname\":\"tomcat-server-node1\"},\"input\":{\"type\":\"log\"},\"ecs\":{\"version\":\"1.4.0\"},\"message\":\"Mar 22 13:11:57 tomcat-server-node1 kernel: [ 0.000000] 2 disabled\",\"log\":{\"offset\":21567440,\"file\":{\"path\":\"/var/log/syslog\"}}}"

127.0.0.1:6379[1]>
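Each list element is one event serialized as a JSON string, so it can be inspected by decoding it. The sketch below uses a trimmed copy of the syslog_150 element shown above (most keys removed for brevity) and pulls out the values the next stage relies on; summarize is a hypothetical helper. Note that @timestamp is in UTC, so 05:46:08Z corresponds to 13:46:08 in the servers' UTC+8 time zone.

```python
import json

# Trimmed copy of the element returned by LPOP syslog_150 above.
RAW = ('{"@timestamp":"2020-03-22T05:46:08.122Z",'
       '"fields":{"name":"syslog_from_filebeat_150"},'
       '"host":{"name":"tomcat-server-node1"},'
       '"log":{"offset":21567440,"file":{"path":"/var/log/syslog"}},'
       '"message":"Mar 22 13:11:57 tomcat-server-node1 kernel: [ 0.000000] 2 disabled"}')


def summarize(raw_event):
    """Decode one queued event and return the values used for routing and display."""
    event = json.loads(raw_event)
    return {
        "source": event["fields"]["name"],   # set via fields.name in filebeat.yml
        "host": event["host"]["name"],
        "file": event["log"]["file"]["path"],
        "timestamp": event["@timestamp"],    # UTC, note the trailing Z
    }


if __name__ == "__main__":
    print(summarize(RAW))
```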

2.4.2 Logstash log

root@tomcat-server-node1:/etc/logstash/conf.d# tail /var/log/logstash/logstash-plain.log -n66

...

[2020-03-22T13:49:33,428][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"7.6.1"}

[2020-03-22T13:49:35,044][INFO ][org.reflections.Reflections] Reflections took 35 ms to scan 1 urls, producing 20 keys and 40 values

[2020-03-22T13:49:35,573][WARN ][org.logstash.instrument.metrics.gauge.LazyDelegatingGauge][main] A gauge metric of an unknown type (org.jruby.RubyArray) has been create for key: cluster_uuids. This may result in invalid serialization. It is recommended to log an issue to the responsible developer/development team.

[2020-03-22T13:49:35,603][INFO ][logstash.javapipeline ][main] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>250, "pipeline.sources"=>["/etc/logstash/conf.d/syslog_from_filebeat.conf"], :thread=>"#<Thread:0xacd988b run>"}

[2020-03-22T13:49:36,337][INFO ][logstash.inputs.beats ][main] Beats inputs: Starting input listener {:address=>"192.168.100.150:5044"}

[2020-03-22T13:49:36,354][INFO ][logstash.javapipeline ][main] Pipeline started {"pipeline.id"=>"main"}

[2020-03-22T13:49:36,426][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2020-03-22T13:49:36,482][INFO ][org.logstash.beats.Server][main] Starting server on port: 5044

[2020-03-22T13:49:36,728][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

2.4.3 Filebeat log

root@tomcat-server-node1:/etc/logstash/conf.d# tail /var/log/syslog -n2

Mar 22 13:53:35 tomcat-server-node1 filebeat[1162]: 2020-03-22T13:53:35.082+0800#011INFO#011[monitoring]#011log/log.go:145#011Non-zero metrics in the last 30s#011{"monitoring": {"metrics": {"beat":{"cpu":{"system":{"ticks":990,"time":{"ms":6}},"total":{"ticks":1440,"time":{"ms":6},"value":1440},"user":{"ticks":450}},"handles":{"limit":{"hard":4096,"soft":1024},"open":11},"info":{"ephemeral_id":"a03ec121-e70b-4039-a696-3e7ccefcb510","uptime":{"ms":450355}},"memstats":{"gc_next":14313856,"memory_alloc":11135512,"memory_total":142802472},"runtime":{"goroutines":29}},"filebeat":{"events":{"added":1,"done":1},"harvester":{"files":{"cf3638b8-1dfe-4b35-acd1-d3ec67a6780e":{"last_event_published_time":"2020-03-22T13:53:07.351Z","last_event_timestamp":"2020-03-22T13:53:07.351Z","read_offset":1259,"size":1259}},"open_files":1,"running":1}},"libbeat":{"config":{"module":{"running":0}},"output":{"events":{"acked":1,"batches":1,"total":1},"read":{"bytes":6},"write":{"bytes":1134}},"pipeline":{"clients":1,"events":{"active":0,"published":1,"total":1},"queue":{"acked":1}}},"registrar":{"states":{"current":2,"update":1},"writes":{"success":1,"total":1}},"system":{"load":{"1":0.03,"15":0.41,"5":0.56,"norm":{"1":0.015,"15":0.205,"5":0.28}}}}}}

Mar 22 13:54:05 tomcat-server-node1 filebeat[1162]: 2020-03-22T13:54:05.082+0800#011INFO#011[monitoring]#011log/log.go:145#011Non-zero metrics in the last 30s#011{"monitoring": {"metrics": {"beat":{"cpu":{"system":{"ticks":990,"time":{"ms":4}},"total":{"ticks":1450,"time":{"ms":9},"value":1450},"user":{"ticks":460,"time":{"ms":5}}},"handles":{"limit":{"hard":4096,"soft":1024},"open":11},"info":{"ephemeral_id":"a03ec121-e70b-4039-a696-3e7ccefcb510","uptime":{"ms":480354}},"memstats":{"gc_next":14313856,"memory_alloc":12851080,"memory_total":144518040},"runtime":{"goroutines":29}},"filebeat":{"events":{"added":1,"done":1},"harvester":{"files":{"cf3638b8-1dfe-4b35-acd1-d3ec67a6780e":{"last_event_published_time":"2020-03-22T13:53:42.357Z","last_event_timestamp":"2020-03-22T13:53:42.357Z","read_offset":1245,"size":1245}},"open_files":1,"running":1}},"libbeat":{"config":{"module":{"running":0}},"output":{"events":{"acked":1,"batches":1,"total":1},"read":{"bytes":6},"write":{"bytes":1130}},"pipeline":{"clients":1,"events":{"active":0,"published":1,"total":1},"queue":{"acked":1}}},"registrar":{"states":{"current":2,"update":1},"writes":{"success":1,"total":1}},"system":{"load":{"1":0.02,"15":0.39,"5":0.5,"norm":{"1":0.01,"15":0.195,"5":0.25}}}}}}

3. Configure Logstash to read data from Redis and write it to the ES cluster

Log data flow:

redis-server: redis --> redis-server: logstash --> es-server-nodeX: elasticsearch

3.1 Logstash configuration

root@redis-server:/etc/logstash/conf.d# vim syslog_redis_to_es.conf

root@redis-server:/etc/logstash/conf.d# cat syslog_redis_to_es.conf

input {
  redis {
    host => "192.168.100.154"
    port => "6379"
    data_type => "list"
    db => "1"
    key => "syslog_150"
    password => "stevenux"
  }
  redis {
    host => "192.168.100.154"
    port => "6379"
    data_type => "list"
    db => "1"
    key => "syslog_152"
    password => "stevenux"
  }
}

output {
  # The value tested here is defined in the Filebeat configuration file (fields.name)
  if [fields][name] == "syslog_from_filebeat_150" {
    elasticsearch {
      hosts => ["192.168.100.144:9200"]
      index => "syslog_from_filebeat_150-%{+YYYY.MM.dd}"
    }
  }
  if [fields][name] == "syslog_from_filebeat_152" {
    elasticsearch {
      hosts => ["192.168.100.144:9200"]
      index => "syslog_from_filebeat_152-%{+YYYY.MM.dd}"
    }
  }
}

Test the syntax:

root@redis-server:/etc/logstash/conf.d# pwd

/etc/logstash/conf.d

root@redis-server:/etc/logstash/conf.d# /usr/share/logstash/bin/logstash -f syslog_redis_to_es.conf -t

Start Logstash:

root@redis-server:/etc/logstash/conf.d# systemctl restart logstash
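The output section routes each event by [fields][name] and builds the index name from the %{+YYYY.MM.dd} date reference, which Logstash formats from the event's @timestamp in UTC. One consequence in a UTC+8 environment: events produced between 00:00 and 08:00 local time land in the previous day's index. The sketch below reproduces the routing rule in Python; the ROUTES table is copied from the config, index_for is a hypothetical helper, and returning None mirrors the fact that the config has no else branch, so unmatched events are not written to Elasticsearch.

```python
from datetime import datetime, timezone

# fields.name -> index prefix, as in syslog_redis_to_es.conf
ROUTES = {
    "syslog_from_filebeat_150": "syslog_from_filebeat_150",
    "syslog_from_filebeat_152": "syslog_from_filebeat_152",
}


def index_for(event):
    """Return the target index name, or None when no if-branch matches."""
    prefix = ROUTES.get(event.get("fields", {}).get("name"))
    if prefix is None:
        return None  # no matching conditional: the event is not sent to ES
    ts = datetime.strptime(event["@timestamp"], "%Y-%m-%dT%H:%M:%S.%fZ")
    ts = ts.replace(tzinfo=timezone.utc)  # @timestamp is always UTC
    return f"{prefix}-{ts:%Y.%m.%d}"


if __name__ == "__main__":
    ev = {"@timestamp": "2020-03-22T05:46:08.122Z",
          "fields": {"name": "syslog_from_filebeat_150"}}
    print(index_for(ev))  # syslog_from_filebeat_150-2020.03.22
```

For example, an event at 00:30 on 2020-03-23 in UTC+8 has @timestamp 2020-03-22T16:30:00Z and therefore still goes to the 2020.03.22 index.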

Check whether the data in Redis has been consumed:

127.0.0.1:6379[1]> KEYS *

1) "syslog_152"

2) "syslog_150"

127.0.0.1:6379[1]> llen syslog_152

(integer) 3804

127.0.0.1:6379[1]> llen syslog_152

(integer) 3804

127.0.0.1:6379[1]> KEYS *

1) "syslog_152"

2) "syslog_150"

127.0.0.1:6379[1]> llen syslog_152

(integer) 3805

127.0.0.1:6379[1]> llen syslog_152

(integer) 3808

127.0.0.1:6379[1]> KEYS *

1) "syslog_152"

2) "syslog_150"

127.0.0.1:6379[1]> KEYS *

1) "syslog_152"

2) "syslog_150"

127.0.0.1:6379[1]> KEYS *

1) "syslog_152"

2) "syslog_150"

127.0.0.1:6379[1]> KEYS *

(empty list or set) # gone

127.0.0.1:6379[1]> KEYS *

(empty list or set)

127.0.0.1:6379[1]> KEYS *

(empty list or set)

4. Write logs to MySQL

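Logs are written to a database to persist important data, such as status codes, client IP addresses and client browser versions, so that statistics can be computed later, for example per month.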

4.1 Install MySQL

Install with apt:

root@redis-server:~# apt install mysql-server

root@redis-server:~# mysql

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 2

Server version: 5.7.29-0ubuntu0.18.04.1 (Ubuntu)

...

mysql> ALTER USER user() IDENTIFIED BY 'stevenux'; # change the root password
Query OK, 0 rows affected (0.00 sec)

mysql> FLUSH PRIVILEGES;
Query OK, 0 rows affected (0.00 sec)

mysql>

4.2 Create the logstash user and grant privileges

4.2.1 Create the database and grant privileges

Grant the logstash user access to the newly created database log_data, where the data will be stored:

...

mysql> CREATE DATABASE log_data CHARACTER SET utf8 COLLATE utf8_bin;
Query OK, 1 row affected (0.00 sec)

mysql> GRANT ALL PRIVILEGES ON log_data.* TO logstash@"%" IDENTIFIED BY 'stevenux';
Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> FLUSH PRIVILEGES;
Query OK, 0 rows affected (0.00 sec)

mysql>

4.2.2 Test the logstash user's connection to the database

root@redis-server:~# mysql -ulogstash -p

Enter password: (stevenux)

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 5

Server version: 5.7.29-0ubuntu0.18.04.1 (Ubuntu)

...
mysql> SHOW DATABASES;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| log_data           |
+--------------------+
2 rows in set (0.00 sec)

mysql>

4.3 Configure Logstash to connect to the database

To connect to MySQL, Logstash needs MySQL Connector/J, the official MySQL JDBC driver. JDBC (Java Database Connectivity) is a Java API for executing SQL statements; it provides uniform access to many relational databases and consists of a set of classes and interfaces written in Java.

4.3.1 Download the driver jar

root@redis-server:/usr/local/src# pwd

/usr/local/src

root@redis-server:/usr/local/src# wget https://downloads.mysql.com/archives/get/p/3/file/mysql-connector-java-5.1.42.zip

4.3.2 Install the jar into Logstash

# Unzip the archive

root@redis-server:/usr/local/src# unzip mysql-connector-java-5.1.42.zip

root@redis-server:/usr/local/src# ll mysql-connector-java-5.1.42

total 1468

drwxr-xr-x 4 root root 4096 Apr 17 2017 ./

drwxr-xr-x 5 root root 4096 Mar 22 15:05 ../

-rw-r--r-- 1 root root 91463 Apr 17 2017 build.xml

-rw-r--r-- 1 root root 244278 Apr 17 2017 CHANGES

-rw-r--r-- 1 root root 18122 Apr 17 2017 COPYING

drwxr-xr-x 2 root root 4096 Apr 17 2017 docs/

-rw-r--r-- 1 root root 996444 Apr 17 2017 mysql-connector-java-5.1.42-bin.jar

-rw-r--r-- 1 root root 61407 Apr 17 2017 README

-rw-r--r-- 1 root root 63658 Apr 17 2017 README.txt

drwxr-xr-x 8 root root 4096 Apr 17 2017 src/

# Create the directory where Logstash expects the driver
root@redis-server:/usr/local/src# mkdir -pv /usr/share/logstash/vendor/jar/jdbc
mkdir: created directory '/usr/share/logstash/vendor/jar'
mkdir: created directory '/usr/share/logstash/vendor/jar/jdbc'

# Copy the jar there
root@redis-server:/usr/local/src# cp mysql-connector-java-5.1.42/mysql-connector-java-5.1.42-bin.jar /usr/share/logstash/vendor/jar/jdbc/

# Change ownership
root@redis-server:/usr/local/src# chown -R logstash:logstash /usr/share/logstash/vendor/jar

root@redis-server:/usr/local/src# ll /usr/share/logstash/vendor/jar/

total 12

drwxr-xr-x 3 logstash logstash 4096 Mar 22 15:07 ./

drwxrwxr-x 5 logstash logstash 4096 Mar 22 15:07 ../

drwxr-xr-x 2 logstash logstash 4096 Mar 22 15:09 jdbc/

root@redis-server:/usr/local/src# ll /usr/share/logstash/vendor/jar/jdbc/

total 984

drwxr-xr-x 2 logstash logstash 4096 Mar 22 15:09 ./

drwxr-xr-x 3 logstash logstash 4096 Mar 22 15:07 ../

-rw-r--r-- 1 logstash logstash 996444 Mar 22 15:09 mysql-connector-java-5.1.42-bin.jar

4.4 Configure the Logstash output plugin

4.4.1 Configure the gem source

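logstash-output-jdbc is the Logstash output plugin for SQL databases. It is written in shell and Ruby scripts, so the Ruby package manager gem and a gem source are needed.

Because of network conditions, the default overseas gem source is slow and unstable when accessed from mainland China, and installations often fail. For a while many people used Taobao's domestic mirror https://ruby.taobao.org/; it still works but is no longer maintained or updated (see its official announcement). The commands below switch to the Ruby China mirror https://gems.ruby-china.com/.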

root@redis-server:/usr/local/src# snap install ruby --classic
root@redis-server:/usr/local/src# apt install gem

# Switch the gem source
root@redis-server:/usr/local/src# gem sources --add https://gems.ruby-china.com/ --remove https://rubygems.org/
https://gems.ruby-china.com/ added to sources
https://rubygems.org/ removed from sources

# List the sources
root@redis-server:/usr/local/src# gem sources -l
*** CURRENT SOURCES ***

https://gems.ruby-china.com/    # make sure only ruby-china is listed

4.4.2 Install the logstash-output-jdbc plugin

This plugin sends Logstash data to SQL databases over JDBC. Install it with the tool bundled with Logstash, /usr/share/logstash/bin/logstash-plugin.

# List the installed plugins

root@redis-server:~# /usr/share/logstash/bin/logstash-plugin list

...

logstash-codec-avro

logstash-codec-cef

logstash-codec-collectd

logstash-codec-dots

logstash-codec-edn

logstash-codec-edn_lines

logstash-codec-es_bulk

logstash-codec-fluent

logstash-codec-graphite

logstash-codec-json

...

# Install logstash-output-jdbc

root@redis-server:~# /usr/share/logstash/bin/logstash-plugin install logstash-output-jdbc

...

Validating logstash-output-jdbc

Installing logstash-output-jdbc

Installation successful

4.5 Create the table schema in advance

Collect the clientip, status, AgentVersion, method and access-time values from the Tomcat access log. The time column uses the system time.

root@redis-server:~# mysql -ulogstash -p

Enter password:

...

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| log_data |

+--------------------+

2 rows in set (0.00 sec)

mysql> select CURRENT_TIMESTAMP;

+---------------------+

| CURRENT_TIMESTAMP |

+---------------------+

| 2020-03-22 16:36:33 |

+---------------------+

1 row in set (0.00 sec)

mysql> USE log_data;

Database changed

mysql> CREATE TABLE tom_log (host varchar(128), status int(32), clientip varchar(50), AgentVersion varchar(512), time timestamp default current_timestamp);

Query OK, 0 rows affected (0.02 sec)

mysql> show tables;

+--------------------+

| Tables_in_log_data |

+--------------------+

| tom_log |

+--------------------+

1 row in set (0.00 sec)

mysql> desc tom_log;

+--------------+--------------+------+-----+-------------------+-------+

| Field | Type | Null | Key | Default | Extra |

+--------------+--------------+------+-----+-------------------+-------+

| host | varchar(128) | YES | | NULL | |

| status | int(32) | YES | | NULL | |

| clientip | varchar(50) | YES | | NULL | |

| AgentVersion | varchar(512) | YES | | NULL | |

| time | timestamp | NO | | CURRENT_TIMESTAMP | | # time field

+--------------+--------------+------+-----+-------------------+-------+

5 rows in set (0.02 sec)
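The tom_log table exists to support the monthly statistics mentioned at the start of section 4. As an illustration, the sketch below counts requests per month and status code. It uses Python's built-in sqlite3 with an in-memory database as a stand-in for MySQL (an assumption so the example runs anywhere) and a simplified copy of the tom_log schema; build_sample_db and its rows are hypothetical. On MySQL the grouping expression would be DATE_FORMAT(time, '%Y-%m') instead of SQLite's strftime('%Y-%m', time).

```python
import sqlite3


def build_sample_db():
    """Create an in-memory tom_log table (simplified schema) with invented rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("""CREATE TABLE tom_log (
        host TEXT, status INTEGER, clientip TEXT, AgentVersion TEXT,
        time TIMESTAMP DEFAULT CURRENT_TIMESTAMP)""")
    rows = [  # hypothetical sample data
        ("tomcat-server-node2", 200, "192.168.100.146", "curl/7.58.0", "2020-03-22 21:05:42"),
        ("tomcat-server-node2", 404, "192.168.100.144", "curl/7.58.0", "2020-03-22 21:06:10"),
        ("tomcat-server-node1", 200, "192.168.100.150", "curl/7.58.0", "2020-04-01 09:00:00"),
    ]
    conn.executemany("INSERT INTO tom_log VALUES (?, ?, ?, ?, ?)", rows)
    return conn


def monthly_status_counts(conn):
    """Count requests per (month, status) in tom_log."""
    sql = """
        SELECT strftime('%Y-%m', time) AS month, status, COUNT(*) AS hits
        FROM tom_log
        GROUP BY month, status
        ORDER BY month, status
    """
    return conn.execute(sql).fetchall()


if __name__ == "__main__":
    for month, status, hits in monthly_status_counts(build_sample_db()):
        print(month, status, hits)
```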

4.6 Switch from collecting syslog to collecting the Tomcat access log

4.6.1 Make sure Tomcat writes its access log as JSON

root@tomcat-server-node1:~# cat /usr/local/tomcat/conf/server.xml

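...
<Server>
  ...
  <Service>
    ...
    <Engine>
      ...
      <Host>
        ...
        <Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"
               prefix="tomcat_access_log" suffix=".log"
               pattern="{&quot;clientip&quot;:&quot;%h&quot;,&quot;ClientUser&quot;:&quot;%l&quot;,&quot;authenticated&quot;:&quot;%u&quot;,&quot;AccessTime&quot;:&quot;%t&quot;,&quot;method&quot;:&quot;%r&quot;,&quot;status&quot;:&quot;%s&quot;,&quot;SendBytes&quot;:&quot;%b&quot;,&quot;Query?string&quot;:&quot;%q&quot;,&quot;partner&quot;:&quot;%{Referer}i&quot;,&quot;AgentVersion&quot;:&quot;%{User-Agent}i&quot;}" />
      </Host>
    </Engine>
  </Service>
</Server>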
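With this pattern each access-log line is a single JSON object, which is what lets the pipeline pick out clientip, status and AgentVersion. The line in the sketch is hypothetical (the values are invented, the keys follow the pattern). Two details of the AccessLogValve codes are worth knowing: %t is rendered in brackets in Common Log Format, and %r is the whole request line, so the "method" key holds something like GET / HTTP/1.1 rather than just the verb. extract is a hypothetical helper; it returns None for a line that is not valid JSON instead of raising.

```python
import json

# Hypothetical line shaped by the AccessLogValve pattern above.
SAMPLE = ('{"clientip":"192.168.100.146","ClientUser":"-","authenticated":"-",'
          '"AccessTime":"[22/Mar/2020:21:05:42 +0800]","method":"GET / HTTP/1.1",'
          '"status":"200","SendBytes":"11215","Query?string":"","partner":"-",'
          '"AgentVersion":"curl/7.58.0"}')


def extract(line):
    """Return the fields of interest, or None if the line is not valid JSON."""
    try:
        rec = json.loads(line)
    except json.JSONDecodeError:
        return None
    verb = rec.get("method", "").split(" ", 1)[0]  # %r is the full request line
    status = rec.get("status", "")
    return {
        "clientip": rec.get("clientip"),
        "status": int(status) if status.isdigit() else None,
        "AgentVersion": rec.get("AgentVersion"),
        "method": verb,
    }


if __name__ == "__main__":
    print(extract(SAMPLE))
```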

4.6.2 Filebeat configuration

tomcat-server-node1

root@tomcat-server-node1:~# cat /etc/filebeat/filebeat.yml

...
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/syslog
  document_type: system-log
  exclude_lines: ['^DBG']
  #include_lines: ['^ERR', '^WARN']
  fields:
    name: syslog_from_filebeat_150   # custom field

# Second input: added to the same filebeat.inputs list, because a second
# filebeat.inputs key would be a duplicate YAML key
- type: log
  enabled: true
  paths:
    - /usr/local/tomcat/logs/tomcat_access_log.2020-03-22.log
  document_type: tomcat-log
  exclude_lines: ['^DBG']
  fields:
    name: tom_from_filebeat_150

# Logstash output
output.logstash:
  hosts: ["192.168.100.150:5044"]

tomcat-server-node2

root@tomcat-server-node2:~# cat /etc/filebeat/filebeat.yml

...
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/syslog
  document_type: system-log
  exclude_lines: ['^DBG']
  fields:
    name: syslog_from_filebeat_152   # custom field

# Second input: added to the same filebeat.inputs list, because a second
# filebeat.inputs key would be a duplicate YAML key
- type: log
  enabled: true
  paths:
    - /usr/local/tomcat/logs/tomcat_access_log.2020-03-22.log
  document_type: tomcat-log
  exclude_lines: ['^DBG']
  fields:
    name: tom_from_filebeat_152

# Logstash output
output.logstash:
  hosts: ["192.168.100.152:5044"]

4.6.3 Logstash configuration

tomcat-server-node1

root@tomcat-server-node1:~# cat /etc/logstash/conf.d/tom_from_filebeat.conf

input {
  beats {
    host => "192.168.100.150"
    port => "5044"
  }
}

output {
  if [fields][name] == "tom_from_filebeat_150" {
    redis {
      host => "192.168.100.154"
      port => "6379"
      db => "1"
      key => "tomlog_150"
      data_type => "list"
      password => "stevenux"
    }
  }
}

tomcat-server-node2

root@tomcat-server-node2:~# cat /etc/logstash/conf.d/tomlog_from_filebeat.conf

input {
  beats {
    host => "192.168.100.152"
    port => "5044"
  }
}

output {
  if [fields][name] == "tom_from_filebeat_152" {
    redis {
      host => "192.168.100.154"
      port => "6379"
      db => "1"
      key => "tomlog_152"
      data_type => "list"
      password => "stevenux"
    }
  }
}

4.6.4 Check the Redis data

root@redis-server:/etc/logstash/conf.d# redis-cli

127.0.0.1:6379> auth stevenux

OK

127.0.0.1:6379> select 1

OK

127.0.0.1:6379[1]> KEYS *

(empty list or set)

127.0.0.1:6379[1]> KEYS *

(empty list or set)

127.0.0.1:6379[1]> KEYS *

(empty list or set)

1) "tomlog_150"

127.0.0.1:6379[1]> KEYS *

1) "tomlog_150"

127.0.0.1:6379[1]> KEYS *

1) "tomlog_150"

127.0.0.1:6379[1]> KEYS *

1) "tomlog_150"

127.0.0.1:6379[1]> KEYS *

1) "tomlog_150"

.....

127.0.0.1:6379[1]>

127.0.0.1:6379[1]>

127.0.0.1:6379[1]>

127.0.0.1:6379[1]> KEYS *

1) "tomlog_150"

2) "tomlog_152"

127.0.0.1:6379[1]>

4.6.5 Configure Logstash to output to MySQL

Log data flow:

redis-server:redis --> redis-server: logstash --> redis-server: MySQL

An example Logstash configuration using this plugin:

input {
  stdin { }
}

output {
  jdbc {
    driver_class => "com.mysql.jdbc.Driver"
    connection_string => "jdbc:mysql://HOSTNAME/DATABASE?user=USER&password=PASSWORD"
    statement => [ "INSERT INTO log (host, timestamp, message) VALUES(?, CAST(? AS timestamp), ?)", "host", "@timestamp", "message" ]
  }
}
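In connection_string the user name and password are passed as URL query parameters, so a password containing characters such as &, = or # has to be percent-encoded, or the URL is split in the wrong place. The sketch below builds the string with urllib.parse.urlencode; jdbc_mysql_url is a hypothetical helper, and it assumes the JDBC driver percent-decodes parameter values, which is worth verifying for the Connector/J version in use. The password stevenux needs no encoding, so the connection string used in this setup comes out unchanged.

```python
from urllib.parse import quote, urlencode


def jdbc_mysql_url(host, database, user, password, port=None):
    """Build a jdbc:mysql:// URL with user/password as percent-encoded query parameters."""
    authority = f"{host}:{port}" if port else host
    # quote_via=quote encodes every reserved character, e.g. & -> %26, = -> %3D, # -> %23
    query = urlencode({"user": user, "password": password}, quote_via=quote)
    return f"jdbc:mysql://{authority}/{database}?{query}"


if __name__ == "__main__":
    print(jdbc_mysql_url("192.168.100.154", "log_data", "logstash", "stevenux"))
    print(jdbc_mysql_url("HOSTNAME", "DATABASE", "USER", "p&ss=w#rd"))
```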

Fixing database connection errors:

~# mysql -ulogstash -h192.168.100.154 -pstevenux

mysql: [Warning] Using a password on the command line interface can be insecure.

# If the following error appears, make MySQL listen on all addresses
ERROR 2003 (HY000): Can't connect to MySQL server on '192.168.100.154' (111)

root@redis-server:/etc/logstash/conf.d# vim /etc/mysql/mysql.conf.d/mysqld.cnf
...
bind-address = 0.0.0.0
...

# Restart MySQL
root@redis-server:/etc/logstash/conf.d# systemctl restart mysql

# Try connecting again
root@redis-server:/etc/logstash/conf.d# mysql -ulogstash -h192.168.100.154 -p
Enter password:
mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| log_data |

+--------------------+

2 rows in set (0.01 sec)

Make sure the plugin is installed in Logstash:

root@redis-server:/etc/logstash/conf.d# /usr/share/logstash/bin/logstash-plugin list | grep jdbc

...

logstash-integration-jdbc
 ├── logstash-input-jdbc
 ├── logstash-filter-jdbc_streaming
 └── logstash-filter-jdbc_static
logstash-output-jdbc

root@redis-server:~# ll /usr/share/logstash/vendor/jar/jdbc/mysql-connector-java-5.1.42-bin.jar

-rw-r--r-- 1 logstash logstash 996444 Mar 22 15:09 /usr/share/logstash/vendor/jar/jdbc/mysql-connector-java-5.1.42-bin.jar

Configure Logstash:

root@redis-server:~# cat /etc/logstash/conf.d/tomlog_from_redis_to_mysql.conf

input {
  redis {
    host => "192.168.100.154"
    port => "6379"
    db => "1"
    data_type => "list"
    key => "tomlog_150"
    password => "stevenux"
  }
  redis {
    host => "192.168.100.154"
    port => "6379"
    db => "1"
    data_type => "list"
    key => "tomlog_152"
    password => "stevenux"
  }
}

output {
  jdbc {
    driver_class => "com.mysql.jdbc.Driver"
    connection_string => "jdbc:mysql://192.168.100.154/log_data?user=logstash&password=stevenux"
    statement => [ "INSERT INTO tom_log (host, status, clientip, AgentVersion, time) VALUES(?, ?, ?, ?, ?)", "host", "status", "clientip", "AgentVersion", "time" ]
  }
}

Check the syntax:

root@redis-server:~# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/tomlog_from_redis_to_mysql.conf -t

...

[INFO ] 2020-03-22 17:13:34.157 [LogStash::Runner] Reflections - Reflections took 41 ms to scan 1 urls, producing 20 keys and 40 values

Configuration OK

[INFO ] 2020-03-22 17:13:34.593 [LogStash::Runner] runner - Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

4.7 Test

4.7.1 Send a few requests

root@es-server-node2:~# curl 192.168.100.152:8080

root@es-server-node2:~# curl 192.168.100.152:8080/not_exists

root@es-server-node3:~# curl 192.168.100.152:8080

root@es-server-node3:~# curl 192.168.100.152:8080/not_exists

4.7.2 Check the data

root@redis-server:/etc/logstash/conf.d# mysql -ulogstash -h192.168.100.154 -p

Enter password:

...

mysql> SHOW DATABASES;

+--------------------+

| Database |

+--------------------+

| information_schema |

| log_data |

+--------------------+

2 rows in set (0.00 sec)

mysql> USE log_data;
Reading table information for completion of table and column names
You can turn off this feature to get a quicker startup with -A

Database changed

mysql> SHOW TABLES;

+--------------------+

| Tables_in_log_data |

+--------------------+

| tom_log |

+--------------------+

1 row in set (0.00 sec)

mysql> SELECT COUNT(*) FROM tom_log;

+----------+

| COUNT(*) |

+----------+

| 2072 |

+----------+

1 row in set (0.00 sec)

mysql> SELECT * FROM tom_log ORDER BY time DESC LIMIT 5 \G
*************************** 1. row ***************************
        host: tomcat-server-node2
      status: 200
    clientip: 192.168.100.146
AgentVersion: curl/7.58.0
        time: 2020-03-22 21:05:42
*************************** 2. row ***************************
        host: tomcat-server-node2
      status: 200
    clientip: 192.168.100.152
AgentVersion: curl/7.58.0
        time: 2020-03-22 21:05:42
*************************** 3. row ***************************
        host: tomcat-server-node2
      status: 200
    clientip: 192.168.100.150
AgentVersion: curl/7.58.0
        time: 2020-03-22 21:05:42
*************************** 4. row ***************************
        host: tomcat-server-node2
      status: 200
    clientip: 192.168.100.144
AgentVersion: curl/7.58.0
        time: 2020-03-22 21:05:42
*************************** 5. row ***************************
        host: tomcat-server-node2
      status: 200
    clientip: 192.168.100.150
AgentVersion: curl/7.58.0
        time: 2020-03-22 21:05:42
5 rows in set (0.01 sec)

4.7.3 Logstash log of the MySQL pipeline

root@redis-server:/etc/logstash/conf.d# tail /var/log/logstash/logstash-plain.log -n12

[2020-03-22T20:48:54,555][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"7.6.1"}

[2020-03-22T20:48:56,366][INFO ][org.reflections.Reflections] Reflections took 33 ms to scan 1 urls, producing 20 keys and 40 values

[2020-03-22T20:48:56,825][INFO ][logstash.outputs.jdbc ][main] JDBC - Starting up

[2020-03-22T20:48:56,892][INFO ][com.zaxxer.hikari.HikariDataSource][main] HikariPool-1 - Starting...

[2020-03-22T20:48:57,242][INFO ][com.zaxxer.hikari.HikariDataSource][main] HikariPool-1 - Start completed.

[2020-03-22T20:48:57,326][WARN ][org.logstash.instrument.metrics.gauge.LazyDelegatingGauge][main] A gauge metric of an unknown type (org.jruby.RubyArray) has been create for key: cluster_uuids. This may result in invalid serialization. It is recommended to log an issue to the responsible developer/development team.

[2020-03-22T20:48:57,331][INFO ][logstash.javapipeline ][main] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>250, "pipeline.sources"=>["/etc/logstash/conf.d/tomlog_from_redis_to_mysql.conf"], :thread=>"#<Thread:0x752a3c2 run>"}

[2020-03-22T20:48:58,173][INFO ][logstash.inputs.redis ][main] Registering Redis {:identity=>"redis://<password>@192.168.100.154:6379/1 list:tomlog_150"}

[2020-03-22T20:48:58,178][INFO ][logstash.inputs.redis ][main] Registering Redis {:identity=>"redis://<password>@192.168.100.154:6379/1 list:tomlog_152"}

[2020-03-22T20:48:58,193][INFO ][logstash.javapipeline ][main] Pipeline started {"pipeline.id"=>"main"}

[2020-03-22T20:48:58,313][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2020-03-22T20:48:58,813][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

5. Proxy Kibana through HAProxy

5.1 Deploy Kibana

5.1.1 es-server-node1

# Install

root@es-server-node1:/usr/local/src# dpkg -i kibana-7.6.1-amd64.deb

Selecting previously unselected package kibana.

(Reading database ... 85899 files and directories currently installed.)

Preparing to unpack kibana-7.6.1-amd64.deb ...

Unpacking kibana (7.6.1) ...

Setting up kibana (7.6.1) ...

Processing triggers for ureadahead (0.100.0-21) ...

Processing triggers for systemd (237-3ubuntu10.24) ...

# Configuration file
root@es-server-node1:/usr/local/src# grep "^[a-zA-Z]" /etc/kibana/kibana.yml

server.port: 5601

server.host: "0.0.0.0"

server.name: "kibana-demo-node1"

elasticsearch.hosts: ["http://192.168.100.144:9200"]

5.1.2 es-server-node2

# Install

root@es-server-node2:/usr/local/src# dpkg -i kibana-7.6.1-amd64.deb

Selecting previously unselected package kibana.

(Reading database ... 85899 files and directories currently installed.)

Preparing to unpack kibana-7.6.1-amd64.deb ...

Unpacking kibana (7.6.1) ...

Setting up kibana (7.6.1) ...

Processing triggers for ureadahead (0.100.0-21) ...

Processing triggers for systemd (237-3ubuntu10.24) ...

# Configuration file
root@es-server-node2:/usr/local/src# grep "^[a-zA-Z]" /etc/kibana/kibana.yml

server.port: 5601

server.host: "0.0.0.0"

server.name: "kibana-demo-node2"

elasticsearch.hosts: ["http://192.168.100.144:9200"]

5.1.3 es-server-node3

Copy the previously prepared Kibana configuration file to node1 and node2:

root@es-server-node3:~# scp /etc/kibana/kibana.yml 192.168.100.142:/etc/kibana/

root@es-server-node3:~# scp /etc/kibana/kibana.yml 192.168.100.144:/etc/kibana/

5.2 HAProxy and Keepalived configuration

5.2.1 Keepalived configuration

tomcat-server-node1

~# apt install keepalived -y

~# vim /etc/keepalived/keepalived.conf

root@tomcat-server-node1:~# cat /etc/keepalived/keepalived.conf

global_defs {
   notification_email {
     root@localhost
   }
   notification_email_from keepalived@localhost
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id ha1.example.com
   vrrp_skip_check_adv_addr
   vrrp_strict
   vrrp_garp_interval 0
   vrrp_gna_interval 0
   vrrp_mcast_group4 224.0.0.18
   #vrrp_iptables
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 80
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass stevenux
    }
    virtual_ipaddress {
        192.168.100.200 dev eth0 label eth0:0
    }
}

root@tomcat-server-node1:~# systemctl start keepalived
root@tomcat-server-node1:~# ip addr show eth0 | grep inet
    inet 192.168.100.150/24 brd 192.168.100.255 scope global eth0
    inet 192.168.100.200/32 scope global eth0:0
    inet6 fe80::20c:29ff:fe64:9fdf/64 scope link

tomcat-server-node2

~# apt install keepalived -y

~# vim /etc/keepalived/keepalived.conf
root@tomcat-server-node2:/etc/logstash/conf.d# cat /etc/keepalived/keepalived.conf
global_defs {
   notification_email {
     root@localhost
   }
   notification_email_from keepalived@localhost
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id ha1.example.com
   vrrp_skip_check_adv_addr
   vrrp_strict
   vrrp_garp_interval 0
   vrrp_gna_interval 0
   vrrp_mcast_group4 224.0.0.18
   #vrrp_iptables
}

vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 80
    priority 80
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass stevenux
    }
    virtual_ipaddress {
        192.168.100.200 dev eth0 label eth0:0
    }
}

root@tomcat-server-node2:~# systemctl start keepalived
root@tomcat-server-node2:/etc/logstash/conf.d# ip addr show eth0 | grep eth0
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    inet 192.168.100.152/24 brd 192.168.100.255 scope global eth0
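Both nodes use virtual_router_id 80; the live node with the highest priority becomes MASTER and holds 192.168.100.200, which is why only node1 shows eth0:0. The sketch below models just that election rule (highest priority wins, and on a tie VRRP prefers the higher primary IP address); it ignores advertisements, timers and preemption, so treat it as a simplified illustration. elect_master and the NODES table are hypothetical. A practical consequence for the HAProxy step: the BACKUP node does not hold the VIP, so HAProxy there can only bind 192.168.100.200:80 if the kernel allows non-local binds (net.ipv4.ip_nonlocal_bind = 1).

```python
from ipaddress import IPv4Address


def elect_master(nodes):
    """Return the name of the node that would hold the VIP, or None if none is alive.

    nodes: list of dicts with keys name, ip, priority, alive.
    """
    alive = [n for n in nodes if n["alive"]]
    if not alive:
        return None
    # Highest priority first; equal priorities fall back to the higher IP address.
    best = max(alive, key=lambda n: (n["priority"], IPv4Address(n["ip"])))
    return best["name"]


NODES = [
    {"name": "tomcat-server-node1", "ip": "192.168.100.150", "priority": 100, "alive": True},
    {"name": "tomcat-server-node2", "ip": "192.168.100.152", "priority": 80, "alive": True},
]

if __name__ == "__main__":
    print(elect_master(NODES))                                   # node1 holds the VIP
    print(elect_master([dict(NODES[0], alive=False), NODES[1]]))  # failover to node2
```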

5.2.2 HAProxy configuration

tomcat-server-node1

root@tomcat-server-node1:~# cat /etc/haproxy/haproxy.cfg

global
    log /dev/log local0
    log /dev/log local1 notice
    chroot /var/lib/haproxy
    stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners
    stats timeout 30s
    user haproxy
    group haproxy
    daemon
    log 127.0.0.1 local6 info
    ca-base /etc/ssl/certs
    crt-base /etc/ssl/private
    ssl-default-bind-ciphers ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:RSA+AESGCM:RSA+AES:!aNULL:!MD5:!DSS
    ssl-default-bind-options no-sslv3

defaults
    log global
    mode http
    option httplog
    option dontlognull
    timeout connect 5000
    timeout client 50000
    timeout server 50000
    errorfile 400 /etc/haproxy/errors/400.http
    errorfile 403 /etc/haproxy/errors/403.http
    errorfile 408 /etc/haproxy/errors/408.http
    errorfile 500 /etc/haproxy/errors/500.http
    errorfile 502 /etc/haproxy/errors/502.http
    errorfile 503 /etc/haproxy/errors/503.http
    errorfile 504 /etc/haproxy/errors/504.http

listen stats
    mode http
    bind 0.0.0.0:9999
    stats enable
    log global
    stats uri /haproxy-status
    stats auth haadmin:stevenux

listen elasticsearch_cluster
    mode http
    balance roundrobin
    bind 192.168.100.200:80
    server 192.168.100.142 192.168.100.142:5601 check inter 3s fall 3 rise 5
    server 192.168.100.144 192.168.100.144:5601 check inter 3s fall 3 rise 5
    server 192.168.100.146 192.168.100.146:5601 check inter 3s fall 3 rise 5

root@tomcat-server-node1:~# systemctl restart haproxy

tomcat-server-node2

root@tomcat-server-node2:~# cat /etc/haproxy/haproxy.cfg

global
    log /dev/log local0
    log /dev/log local1 notice
    chroot /var/lib/haproxy
    stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners
    stats timeout 30s
    user haproxy
    group haproxy
    daemon
    log 127.0.0.1 local6 info
    ca-base /etc/ssl/certs
    crt-base /etc/ssl/private
    ssl-default-bind-ciphers ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:RSA+AESGCM:RSA+AES:!aNULL:!MD5:!DSS
    ssl-default-bind-options no-sslv3

defaults
    log global
    mode http
    option httplog
    option dontlognull
    timeout connect 5000
    timeout client 50000
    timeout server 50000
    errorfile 400 /etc/haproxy/errors/400.http
    errorfile 403 /etc/haproxy/errors/403.http
    errorfile 408 /etc/haproxy/errors/408.http
    errorfile 500 /etc/haproxy/errors/500.http
    errorfile 502 /etc/haproxy/errors/502.http
    errorfile 503 /etc/haproxy/errors/503.http
    errorfile 504 /etc/haproxy/errors/504.http

listen stats
    mode http
    bind 0.0.0.0:9999
    stats enable
    log global
    stats uri /haproxy-status
    stats auth haadmin:stevenux

listen elasticsearch_cluster
    mode http
    balance roundrobin
    bind 192.168.100.200:80
    server 192.168.100.142 192.168.100.142:5601 check inter 3s fall 3 rise 5
    server 192.168.100.144 192.168.100.144:5601 check inter 3s fall 3 rise 5
    server 192.168.100.146 192.168.100.146:5601 check inter 3s fall 3 rise 5

root@tomcat-server-node2:~# systemctl restart haproxy.service

Both nodes bind HAProxy to the VIP 192.168.100.200, but only the current Keepalived MASTER actually holds that address. If it has not been enabled earlier, set net.ipv4.ip_nonlocal_bind = 1 (for example with sysctl -w net.ipv4.ip_nonlocal_bind=1, and persist it in /etc/sysctl.conf); otherwise HAProxy on the node without the VIP cannot bind the socket and fails to start.
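In the `server` lines, `check inter 3s fall 3 rise 5` means a health check every 3 seconds; a server is marked DOWN after 3 consecutive failed checks and UP again after 5 consecutive successful ones, and `balance roundrobin` hands requests to the UP servers in turn. The following is a simplified Python model of that behaviour, not HAProxy's own code: HAProxy's internal bookkeeping differs in detail, server weights are ignored, and the class names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    fall: int = 3       # consecutive failures before DOWN
    rise: int = 5       # consecutive successes before UP
    up: bool = True
    streak: int = 0     # consecutive results contradicting the current state

    def record_check(self, ok: bool) -> None:
        """Apply one health-check result (ok=True means the check passed)."""
        if ok == self.up:
            self.streak = 0  # result agrees with the current state
            return
        self.streak += 1
        limit = self.fall if self.up else self.rise
        if self.streak >= limit:
            self.up = not self.up
            self.streak = 0


class RoundRobin:
    """Cycle through the servers, skipping any that are currently DOWN."""

    def __init__(self, servers):
        self.servers = servers
        self.next_index = 0

    def pick(self):
        n = len(self.servers)
        for _ in range(n):
            server = self.servers[self.next_index]
            self.next_index = (self.next_index + 1) % n
            if server.up:
                return server.name
        return None  # all servers DOWN; HAProxy would answer 503
```

With the three Kibana backends, three failed checks on 192.168.100.144 take it out of the rotation, and it only returns after five passing checks, which damps flapping caused by a briefly slow Kibana instance.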

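VI. Proxying Kibana through nginx

Put nginx in front of Kibana as a reverse proxy and add login authentication, so that unauthorized users cannot freely open the Kibana pages.

6.1 nginx configuration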

root@tomcat-server-node1:~# cat /apps/nginx/conf/nginx.conf
worker_processes 1;

events {
    worker_connections 1024;
}

http {
    include mime.types;
    default_type application/octet-stream;

    log_format access_json '{"@timestamp":"$time_iso8601",'
        '"host":"$server_addr",'
        '"clientip":"$remote_addr",'
        '"size":$body_bytes_sent,'
        '"responsetime":$request_time,'
        '"upstreamtime":"$upstream_response_time",'
        '"upstreamhost":"$upstream_addr",'
        '"http_host":"$host",'
        '"url":"$uri",'
        '"domain":"$host",'
        '"xff":"$http_x_forwarded_for",'
        '"referer":"$http_referer",'
        '"status":"$status"}';
    access_log logs/access.log access_json;

    sendfile on;
    keepalive_timeout 65;

    upstream kibana_server {
        server 192.168.100.142:5601 weight=1 max_fails=3 fail_timeout=60;
    }

    server {
        listen 80;
        server_name 192.168.100.150;

        location / {
            proxy_pass http://kibana_server;
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection 'upgrade';
            proxy_set_header Host $host;
            proxy_cache_bypass $http_upgrade;
        }
    }
}

root@tomcat-server-node1:~# nginx -t
nginx: the configuration file /apps/nginx/conf/nginx.conf syntax is ok
nginx: configuration file /apps/nginx/conf/nginx.conf test is successful
root@tomcat-server-node1:~# nginx -s reload
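The `access_json` format writes each request as one JSON object, so Filebeat or Logstash can decode it without grok patterns. `$body_bytes_sent` and `$request_time` are left unquoted and arrive as numbers, while `$upstream_response_time` is quoted because it can be `-` or a list separated by commas and colons when several upstreams were tried. A minimal Python sketch of decoding one line; the sample line and the function name are made up for illustration:

```python
import json
import re

# Hypothetical log line in the access_json format (values are illustrative).
SAMPLE = ('{"@timestamp":"2020-01-01T12:00:00+08:00","host":"192.168.100.150",'
          '"clientip":"192.168.100.1","size":612,"responsetime":0.005,'
          '"upstreamtime":"0.004","upstreamhost":"192.168.100.142:5601",'
          '"http_host":"192.168.100.150","url":"/app/kibana",'
          '"domain":"192.168.100.150","xff":"-","referer":"-","status":"200"}')


def parse_access_json(line):
    """Decode one access_json line and normalise a few string fields."""
    rec = json.loads(line)
    rec["status"] = int(rec["status"])  # logged as a quoted string
    raw = rec.get("upstreamtime", "-")
    # "-" means no upstream was contacted; multiple attempts are separated
    # by commas (or colons for internal redirects).
    rec["upstreamtime"] = [
        float(t) for t in re.split(r"[,:]\s*", raw) if t.strip() not in ("", "-")
    ]
    return rec
```

One caveat: without the `escape=json` parameter (nginx 1.11.8 and later), nginx escapes a double quote inside, say, the referer as `\x22`, which is not valid JSON; declaring the format as `log_format access_json escape=json '...'` avoids broken events.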

6.3 Login authentication configuration

6.3.1 Create the password file

# CentOS
~# yum install httpd-tools

# Ubuntu
root@tomcat-server-node1:~# apt install apache2-utils
root@tomcat-server-node1:~# htpasswd -bc /apps/nginx/conf/htpasswd.users stevenux stevenux
Adding password for user stevenux
root@tomcat-server-node1:~# cat /apps/nginx/conf/htpasswd.users
stevenux:$apr1$xYOszdHs$b2GX4zCBNv6tuNj427WoT1
root@tomcat-server-node1:~# htpasswd -b /apps/nginx/conf/htpasswd.users jack stevenux
Adding password for user jack
root@tomcat-server-node1:~# cat /apps/nginx/conf/htpasswd.users
stevenux:$apr1$xYOszdHs$b2GX4zCBNv6tuNj427WoT1
jack:$apr1$hfqwuymq$J4G86iNOyjUA08yMPhVU8.
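Each entry has the form `user:$apr1$<salt>$<digest>`, Apache's MD5-based crypt variant, which nginx's `auth_basic_user_file` accepts. At login nginx re-hashes the submitted password with the stored salt and compares the result. The Python sketch below reimplements APR1 from the classic md5crypt description to show that step; the function names are hypothetical, and it is for understanding only, not a replacement for `htpasswd` (MD5 crypt is weak by modern standards).

```python
import hashlib
import hmac

ITOA64 = "./0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz"
MAGIC = b"$apr1$"


def _to64(value, length):
    """Encode `length` 6-bit groups of `value`, least significant first."""
    out = ""
    for _ in range(length):
        out += ITOA64[value & 0x3F]
        value >>= 6
    return out


def apr1_md5(password, salt):
    pw = password.encode()
    salt_b = salt.encode()[:8]  # APR1 uses at most 8 salt characters
    ctx = pw + MAGIC + salt_b
    alt = hashlib.md5(pw + salt_b + pw).digest()
    for remaining in range(len(pw), 0, -16):
        ctx += alt[:min(16, remaining)]
    i = len(pw)
    while i:  # one byte per bit of the password length
        ctx += b"\x00" if i & 1 else pw[:1]
        i >>= 1
    final = hashlib.md5(ctx).digest()
    for i in range(1000):  # 1000 extra rounds to slow down brute force
        block = pw if i & 1 else final
        if i % 3:
            block += salt_b
        if i % 7:
            block += pw
        block += final if i & 1 else pw
        final = hashlib.md5(block).digest()
    f = final
    encoded = (_to64((f[0] << 16) | (f[6] << 8) | f[12], 4)
               + _to64((f[1] << 16) | (f[7] << 8) | f[13], 4)
               + _to64((f[2] << 16) | (f[8] << 8) | f[14], 4)
               + _to64((f[3] << 16) | (f[9] << 8) | f[15], 4)
               + _to64((f[4] << 16) | (f[10] << 8) | f[5], 4)
               + _to64(f[11], 2))
    return "$apr1$" + salt_b.decode() + "$" + encoded


def verify(password, stored_hash):
    """Re-hash `password` with the salt from `stored_hash` and compare."""
    parts = stored_hash.split("$")  # ["", "apr1", salt, digest]
    if len(parts) != 4 or parts[1] != "apr1":
        raise ValueError("not an $apr1$ hash")
    return hmac.compare_digest(apr1_md5(password, parts[2]), stored_hash)


def load_htpasswd(text):
    """Parse htpasswd file contents into {user: hash}."""
    users = {}
    for line in text.splitlines():
        if line.strip() and not line.startswith("#"):
            user, _, hashed = line.partition(":")
            users[user] = hashed
    return users
```

To try it, load the htpasswd.users contents shown above with `load_htpasswd` and call `verify` with the password that was passed to `htpasswd`.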

6.3.2 nginx configuration

root@tomcat-server-node1:~# vim /apps/nginx/conf/nginx.conf
...
    server {
        listen 80;
        server_name 192.168.100.150;
        auth_basic "Restricted Access";                        # add these two lines
        auth_basic_user_file /apps/nginx/conf/htpasswd.users;

        location / {
            proxy_pass http://kibana_server;
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection 'upgrade';
            proxy_set_header Host $host;
            proxy_cache_bypass $http_upgrade;
        }
...

root@tomcat-server-node1:~# nginx -t
nginx: the configuration file /apps/nginx/conf/nginx.conf syntax is ok
nginx: configuration file /apps/nginx/conf/nginx.conf test is successful
root@tomcat-server-node1:~# nginx -s reload
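With `auth_basic` enabled, the browser sends `Authorization: Basic <base64 of user:password>` with each request, and nginx decodes it and checks the password against htpasswd.users. Because this is only base64 encoding, not encryption, credentials sent to port 80 travel in clear text, so serving the proxy over HTTPS is advisable. A minimal Python sketch of building and parsing that header (the function names are hypothetical):

```python
import base64
import binascii


def basic_auth_header(user, password):
    """Build the Authorization header value a browser sends to nginx."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def parse_basic_auth(header_value):
    """Split an Authorization header into (user, password), or None if malformed."""
    scheme, _, token = header_value.partition(" ")
    if scheme.lower() != "basic" or not token:
        return None
    try:
        decoded = base64.b64decode(token, validate=True).decode("utf-8")
    except (binascii.Error, UnicodeDecodeError):
        return None
    # The password may itself contain ":", so split only on the first one.
    user, sep, password = decoded.partition(":")
    return (user, password) if sep else None
```

From the command line, `curl -u stevenux:stevenux http://192.168.100.150/` sends the same header; without `-u`, nginx answers 401.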

相关文章:

ELKstack-日志收集案例

由于实验环境限制,将 filebeat 和 logstash 部署在 tomcat-server-nodeX,将 redis 和 写 ES 集群的 logstash 部署在 redis-server,将 HAproxy 和 Keepalived 部署在 tomcat-server-nodeX。将 Kibana 部署在 ES 集群主机。 环境:…...

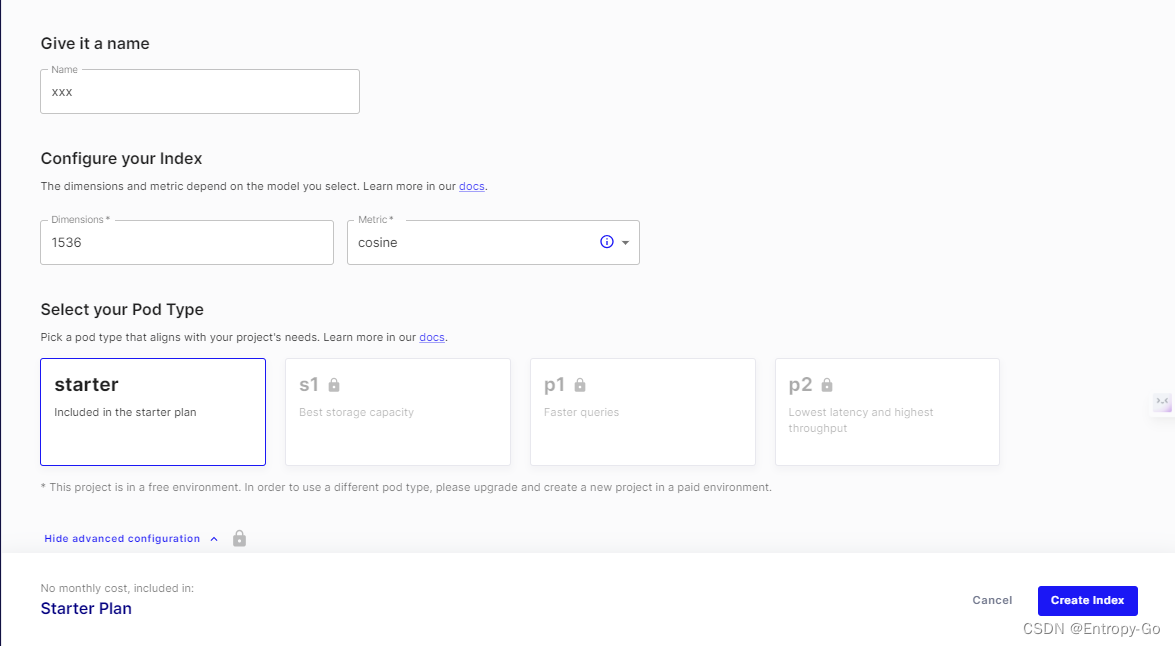

基于GPT-4和LangChain构建云端定制化PDF知识库AI聊天机器人

参考: GitHub - mayooear/gpt4-pdf-chatbot-langchain: GPT4 & LangChain Chatbot for large PDF docs 1.摘要: 使用新的GPT-4 api为多个大型PDF文件构建chatGPT聊天机器人。 使用的技术栈包括LangChain, Pinecone, Typescript, Openai和Next.js…...

Python可视化工具分享

今天和大家分享几个实用的纯python构建可视化界面服务,比如日常写了脚本但是不希望给别人代码,可以利用这些包快速构建好看的界面作为服务提供他人使用。有关于库的最新更新时间和当前star数量。 streamlit (23.3k Updated 2 hours ago) Streamlit 可让…...

ethers.js:构建ERC-20代币交易的不同方法

在这篇文章中,我们将探讨如何使用ethers.js将ERC-20令牌从一个地址转移到另一个地址 Ethers是一个非常酷的JavaScript库,它能够发送EIP-1559事务,而无需手动指定气体属性。它将确定gasLimit,并默认使用1.5 Gwei的maxPriorityFeePerGas,从v5.6.0开始。 此外,如果您使用签名…...

[实践篇]13.23 QNX环境变量profile

一,profile简介 /etc/profile或/system/etc/profile是qnx侧的设置环境变量的文件,该文件适用于所有用户,它可以用作以下情形: 设置HOMENAME和SYSNAME环境变量设置PATH环境变量设置TMPDIR环境变量(/tmp)设置PCI以及IFS_BASE等环境变量等文件内容示例如下: /etc/profile…...

HDLBits-Verilog学习记录 | Getting Started

Getting Started problem: Build a circuit with no inputs and one output. That output should always drive 1 (or logic high). 答案不唯一,仅共参考: module top_module( output one );// Insert your code hereassign one 1;endmodule相关解释…...

flask模型部署教程

搭建python flask服务的步骤 1、安装相关的包 具体参考https://blog.csdn.net/weixin_42126327/article/details/127642279 1、安装conda环境和相关包 # 一、安装conda # 1、首先,前往Anaconda官网(https://www.anaconda.com/products/individual&am…...

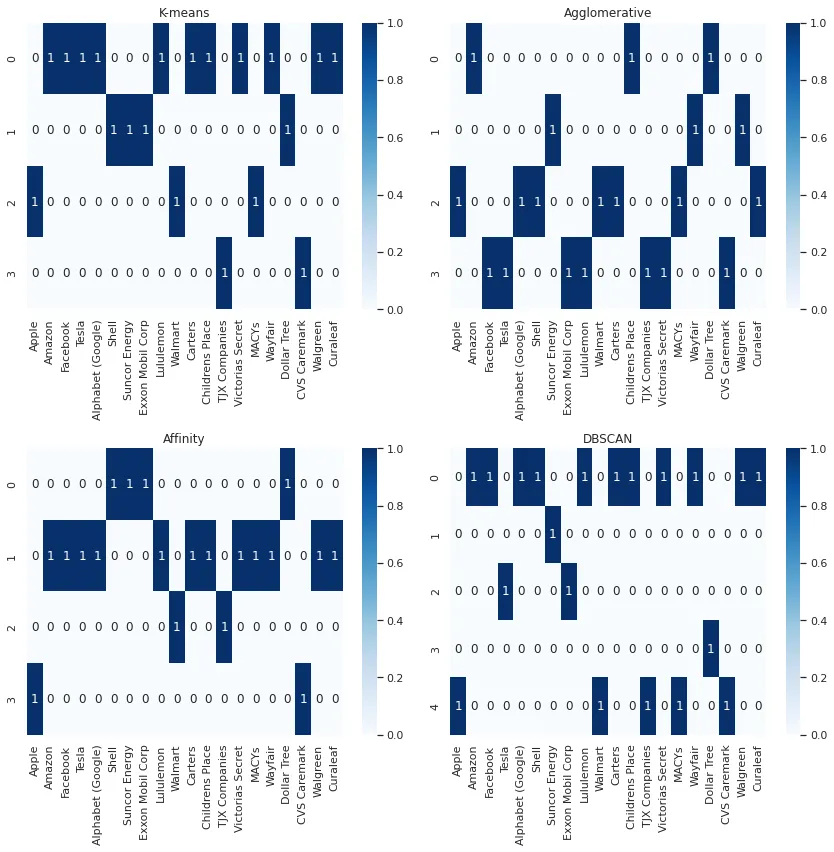

一文详解4种聚类算法及可视化(Python)

在这篇文章中,基于20家公司的股票价格时间序列数据。根据股票价格之间的相关性,看一下对这些公司进行聚类的四种不同方式。 苹果(AAPL),亚马逊(AMZN),Facebook(META&…...

SpringBoot---内置Tomcat 配置和切换

😀前言 本篇博文是关于内置Tomcat 配置和切换,希望你能够喜欢 🏠个人主页:晨犀主页 🧑个人简介:大家好,我是晨犀,希望我的文章可以帮助到大家,您的满意是我的动力&#x…...

Qt 显示git版本信息

项目场景: 项目需要在APP中显示当前的版本号,考虑到git共同开发,显示git版本,查找bug或恢复设置更为便捷。 使用需求: 显示的内容包括哪个分支编译的,版本号多少,编译时间,以及是否…...

Mysql的视图和管理

MySQL 视图(view) 视图是一个虚拟表,其内容由查询定义,同真实的表一样,视图包含列,其数据来自对应的真实表(基表) create view 视图名 as select语句alter view 视图名 as select语句 --更新成新的视图SHOW CREATE VIEW 视图名d…...

uniapp 顶部头部样式

<u-navbartitle"商城":safeAreaInsetTop"true"><view slot"left"><image src"/static/logo.png" mode"" class"u-w-50 u-h-50"></image></view></u-navbar>...

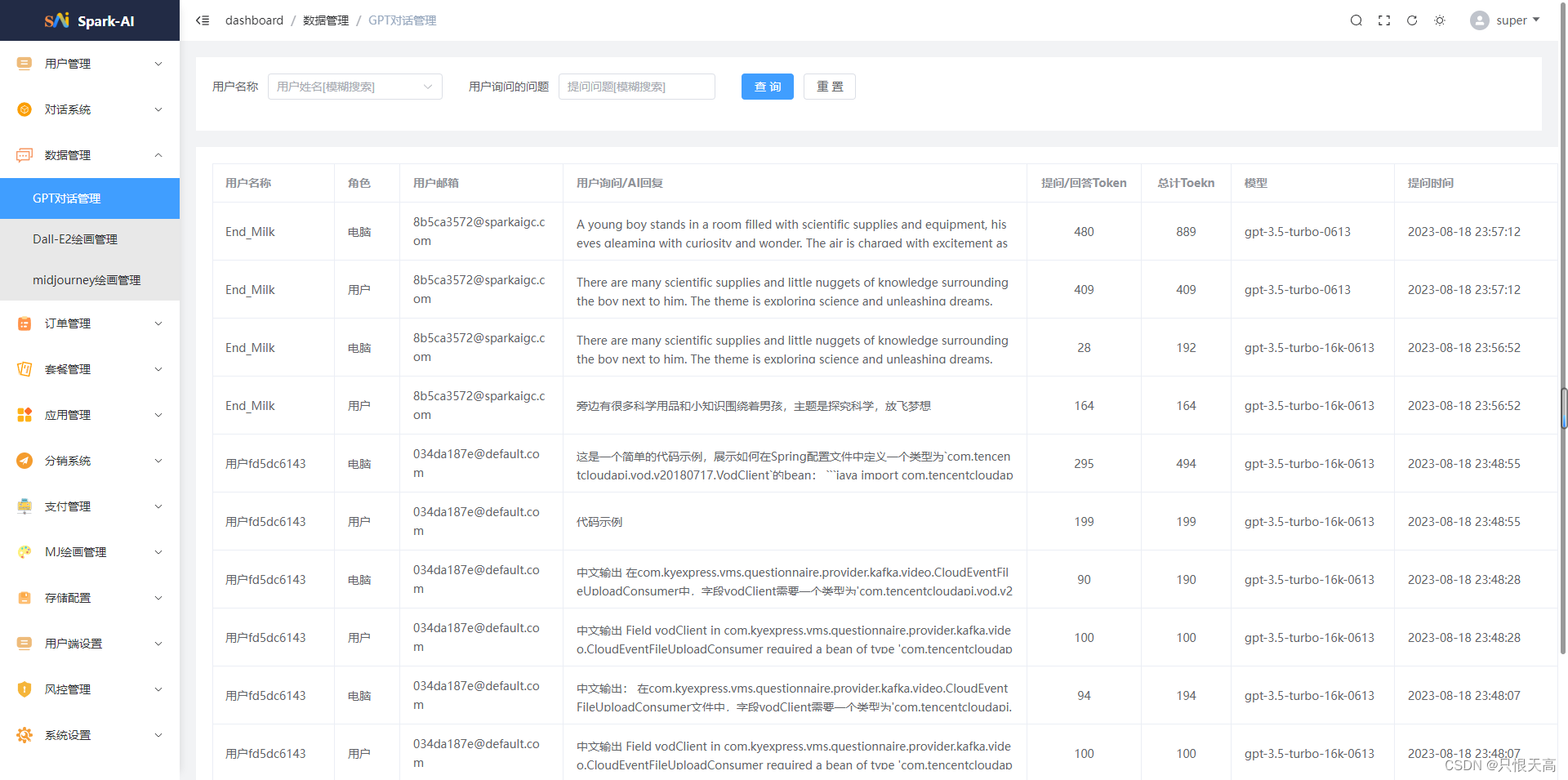

最新ai系统ChatGPT程序源码+详细搭建教程+mj以图生图+Dall-E2绘画+支持GPT4+AI绘画+H5端+Prompt知识库

目录 一、前言 二、系统演示 三、功能模块 3.1 GPT模型提问 3.2 应用工作台 3.3 Midjourney专业绘画 3.4 mind思维导图 四、源码系统 4.1 前台演示站点 4.2 SparkAi源码下载 4.3 SparkAi系统文档 五、详细搭建教程 5.1 基础env环境配置 5.2 env.env文件配置 六、环境…...

FairyGUI-Unity 自定义UIShader

FairyGUI中给组件更换Shader,最简单的方式就是找到组件中的Shader字段进行赋值。需要注意的是,对于自定的shader效果需要将目标图片进行单独发布,也就是一个目标图片占用一张图集。(应该会有更好的解决办法,但目前还是…...

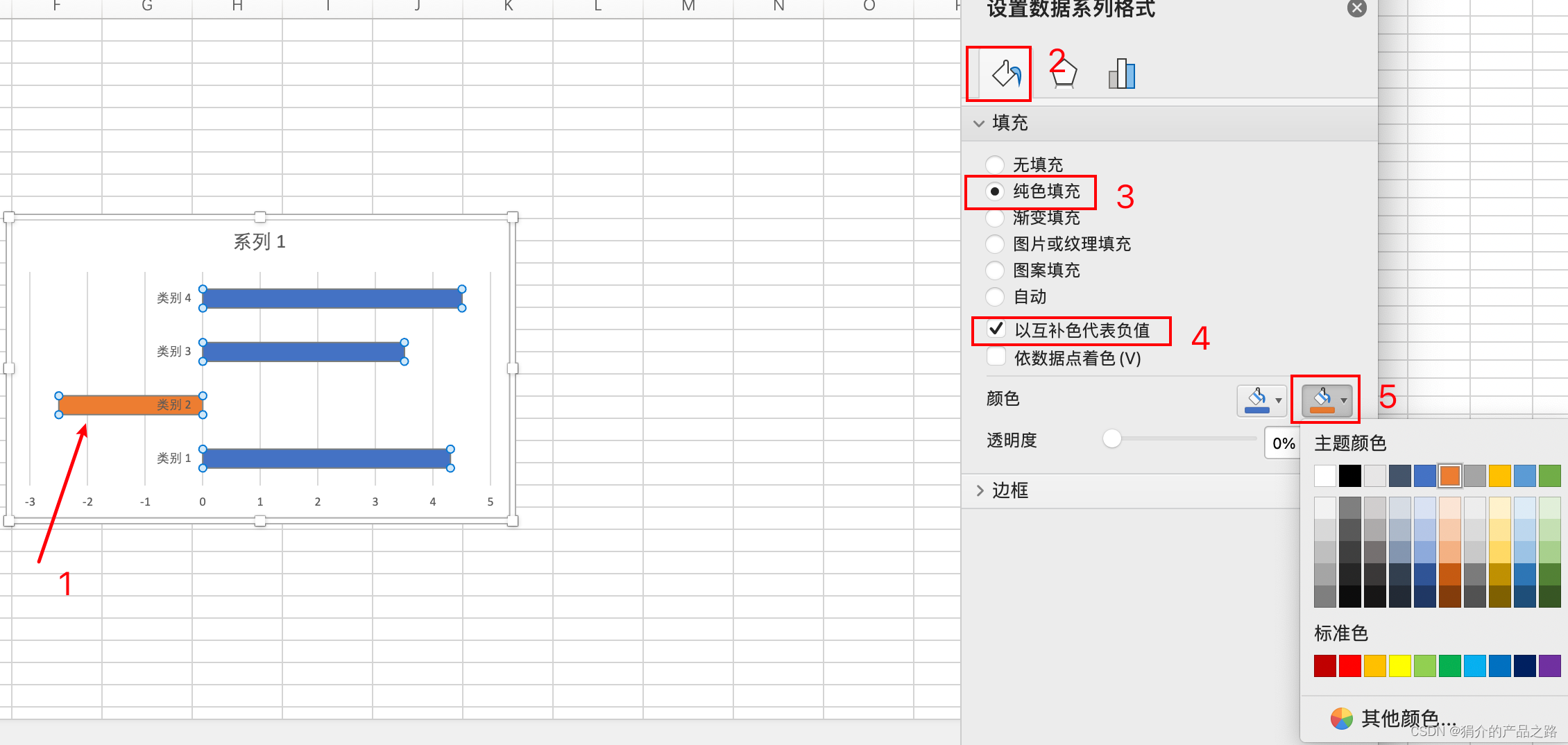

Excel/PowerPoint柱状图条形图负值设置补色

原始数据: 列1系列 1类别 14.3类别 2-2.5类别 33.5类别 44.5 默认作图 解决方案 1、选中柱子,双击,按如下顺序操作 2、这时候颜色会由一个变成两个 3、对第二个颜色进行设置,即为负值的颜色 条形图的设置方法相同...

el-date-picker 时间区域选择,type=daterange,form表单校验+数据回显问题

情景问题:新增表单有时间区域选择,选择了时间,还是提示必填的校验提示语,且修改时,通过 号赋值法,重新选择此时间范围无效。 解决方法:(重点) widthHoldTime:[]…...

LeetCode 面试题 01.02. 判定是否互为字符重排

文章目录 一、题目二、C# 题解 一、题目 给定两个由小写字母组成的字符串 s1 和 s2,请编写一个程序,确定其中一个字符串的字符重新排列后,能否变成另一个字符串,点击此处跳转。 示例 1: 输入: s1 “abc”, s2 “…...

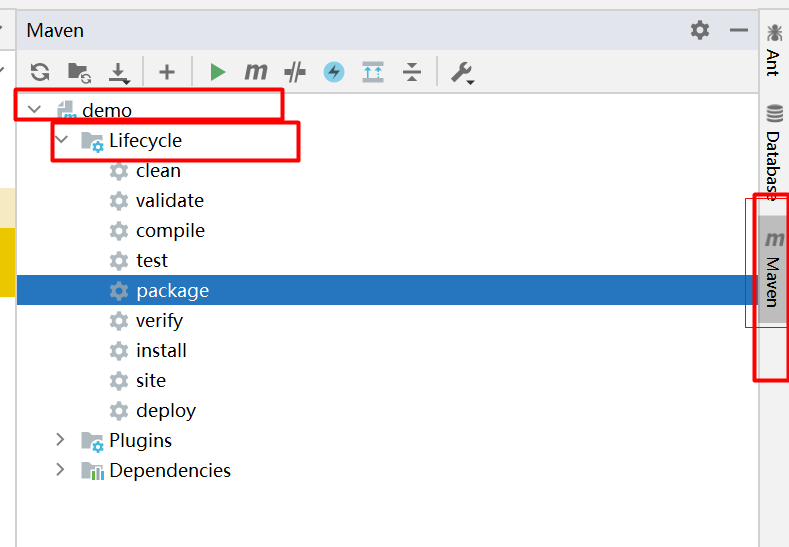

学习maven工具

文章目录 🐒个人主页🏅JavaEE系列专栏📖前言:🏨maven工具产生的背景🦓maven简介🪀pom.xml文件(project object Model 项目对象模型) 🪂maven工具安装步骤两个前提:下载 m…...

手机直播源码开发,协议讨论篇(三):RTMP实时消息传输协议

实时消息传输协议RTMP简介 RTMP又称实时消息传输协议,是一种实时通信协议。在当今数字化时代,手机直播源码平台为全球用户进行服务,如何才能增加用户,提升用户黏性?就需要让一对一直播平台能够为用户提供优质的体验。…...

【JavaEE基础学习打卡05】JDBC之基本入门就可以了

目录 前言一、JDBC学习前说明1.Java SE中JDBC2.JDBC版本 二、JDBC基本概念1.JDBC原理2.JDBC组件 三、JDBC基本编程步骤1.JDBC操作的数据库准备2.JDBC操作数据库表步骤 四、代码优化1.简单优化2.with-resources探讨 总结 前言 📜 本系列教程适用于JavaWeb初学者、爱好…...

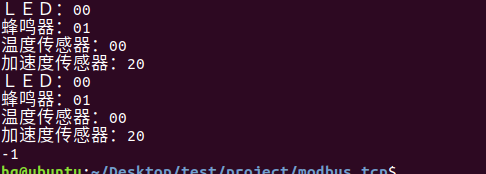

网络编程(Modbus进阶)

思维导图 Modbus RTU(先学一点理论) 概念 Modbus RTU 是工业自动化领域 最广泛应用的串行通信协议,由 Modicon 公司(现施耐德电气)于 1979 年推出。它以 高效率、强健性、易实现的特点成为工业控制系统的通信标准。 包…...

DeepSeek 赋能智慧能源:微电网优化调度的智能革新路径

目录 一、智慧能源微电网优化调度概述1.1 智慧能源微电网概念1.2 优化调度的重要性1.3 目前面临的挑战 二、DeepSeek 技术探秘2.1 DeepSeek 技术原理2.2 DeepSeek 独特优势2.3 DeepSeek 在 AI 领域地位 三、DeepSeek 在微电网优化调度中的应用剖析3.1 数据处理与分析3.2 预测与…...

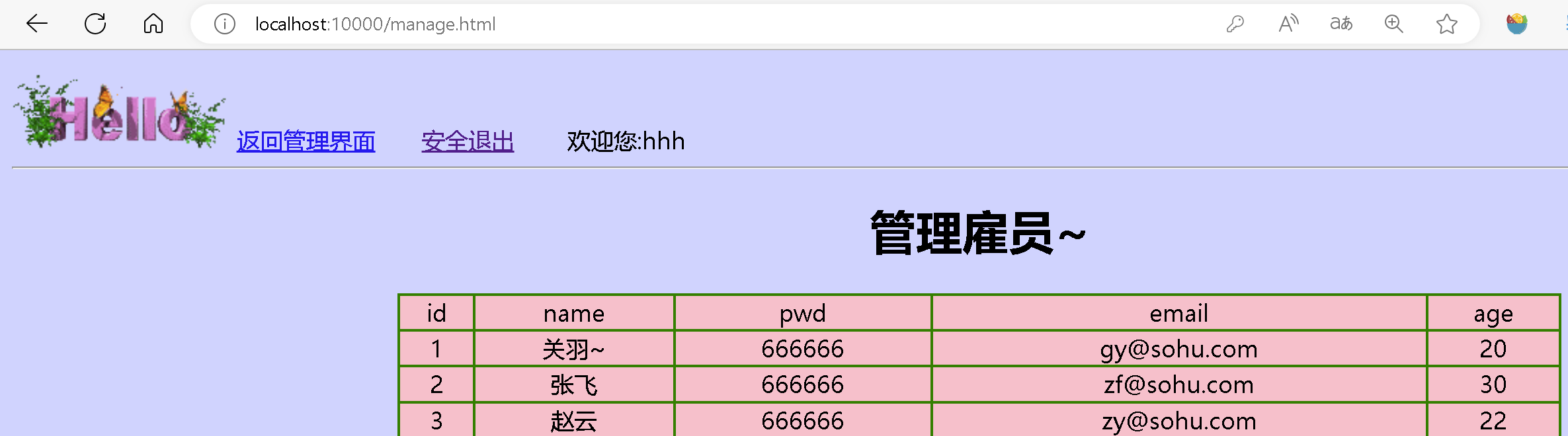

基于ASP.NET+ SQL Server实现(Web)医院信息管理系统

医院信息管理系统 1. 课程设计内容 在 visual studio 2017 平台上,开发一个“医院信息管理系统”Web 程序。 2. 课程设计目的 综合运用 c#.net 知识,在 vs 2017 平台上,进行 ASP.NET 应用程序和简易网站的开发;初步熟悉开发一…...

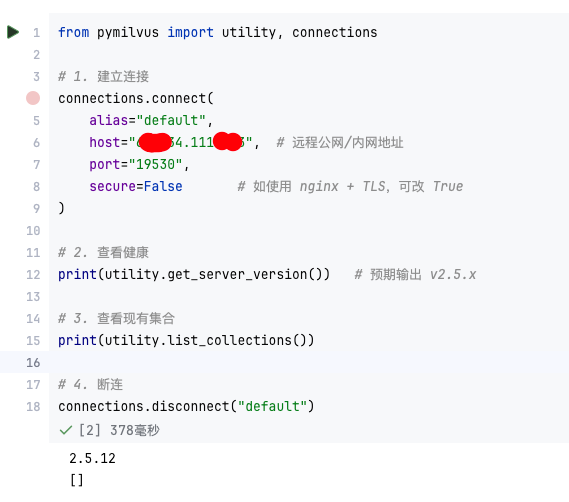

【大模型RAG】Docker 一键部署 Milvus 完整攻略

本文概要 Milvus 2.5 Stand-alone 版可通过 Docker 在几分钟内完成安装;只需暴露 19530(gRPC)与 9091(HTTP/WebUI)两个端口,即可让本地电脑通过 PyMilvus 或浏览器访问远程 Linux 服务器上的 Milvus。下面…...

解决本地部署 SmolVLM2 大语言模型运行 flash-attn 报错

出现的问题 安装 flash-attn 会一直卡在 build 那一步或者运行报错 解决办法 是因为你安装的 flash-attn 版本没有对应上,所以报错,到 https://github.com/Dao-AILab/flash-attention/releases 下载对应版本,cu、torch、cp 的版本一定要对…...

unix/linux,sudo,其发展历程详细时间线、由来、历史背景

sudo 的诞生和演化,本身就是一部 Unix/Linux 系统管理哲学变迁的微缩史。来,让我们拨开时间的迷雾,一同探寻 sudo 那波澜壮阔(也颇为实用主义)的发展历程。 历史背景:su的时代与困境 ( 20 世纪 70 年代 - 80 年代初) 在 sudo 出现之前,Unix 系统管理员和需要特权操作的…...

docker 部署发现spring.profiles.active 问题

报错: org.springframework.boot.context.config.InvalidConfigDataPropertyException: Property spring.profiles.active imported from location class path resource [application-test.yml] is invalid in a profile specific resource [origin: class path re…...

Pinocchio 库详解及其在足式机器人上的应用

Pinocchio 库详解及其在足式机器人上的应用 Pinocchio (Pinocchio is not only a nose) 是一个开源的 C 库,专门用于快速计算机器人模型的正向运动学、逆向运动学、雅可比矩阵、动力学和动力学导数。它主要关注效率和准确性,并提供了一个通用的框架&…...

AI病理诊断七剑下天山,医疗未来触手可及

一、病理诊断困局:刀尖上的医学艺术 1.1 金标准背后的隐痛 病理诊断被誉为"诊断的诊断",医生需通过显微镜观察组织切片,在细胞迷宫中捕捉癌变信号。某省病理质控报告显示,基层医院误诊率达12%-15%,专家会诊…...

淘宝扭蛋机小程序系统开发:打造互动性强的购物平台

淘宝扭蛋机小程序系统的开发,旨在打造一个互动性强的购物平台,让用户在购物的同时,能够享受到更多的乐趣和惊喜。 淘宝扭蛋机小程序系统拥有丰富的互动功能。用户可以通过虚拟摇杆操作扭蛋机,实现旋转、抽拉等动作,增…...