Common Ceph commands

Common bucket commands

View the realm

radosgw-admin realm list

Output

{
    "default_info": "43c462f5-5634-496e-ad4e-978d28c2x9090",
    "realms": [
        "myrgw"
    ]
}

radosgw-admin realm get

{
    "id": "2cfc7b36-43b6-4a9b-a89e-2a2264f54733",
    "name": "mys3",
    "current_period": "4999b859-83e2-42f9-8d3c-c7ae4b9685ff",
    "epoch": 2
}

View zonegroups

radosgw-admin zonegroups list

or

radosgw-admin zonegroup list

Output

{
    "default_info": "f3a96381-12e2-4e7e-8221-c1d79708bc59",
    "zonegroups": [
        "myrgw"
    ]
}

radosgw-admin zonegroup get

{
    "id": "ad97bbae-61f1-41cb-a585-d10dd54e86e4",
    "name": "mys3",
    "api_name": "mys3",
    "is_master": "true",
    "endpoints": [
        "http://rook-ceph-rgw-mys3.rook-ceph.svc:80"
    ],
    "hostnames": [],
    "hostnames_s3website": [],
    "master_zone": "9b5c0c9f-541d-4176-8527-89b4dae02ac2",
    "zones": [
        {
            "id": "9b5c0c9f-541d-4176-8527-89b4dae02ac2",
            "name": "mys3",
            "endpoints": [
                "http://rook-ceph-rgw-mys3.rook-ceph.svc:80"
            ],
            "log_meta": "false",
            "log_data": "false",
            "bucket_index_max_shards": 11,
            "read_only": "false",
            "tier_type": "",
            "sync_from_all": "true",
            "sync_from": [],
            "redirect_zone": ""
        }
    ],
    "placement_targets": [
        {
            "name": "default-placement",
            "tags": [],
            "storage_classes": [
                "STANDARD"
            ]
        }
    ],
    "default_placement": "default-placement",
    "realm_id": "2cfc7b36-43b6-4a9b-a89e-2a2264f54733",
    "sync_policy": {
        "groups": []
    }
}

View zones

radosgw-admin zone list

{
    "default_info": "9b5c0c9f-541d-4176-8527-89b4dae02ac2",
    "zones": [
        "mys3",
        "default"
    ]
}

radosgw-admin zone get

{
    "id": "9b5c0c9f-541d-4176-8527-89b4dae02ac2",
    "name": "mys3",
    "domain_root": "mys3.rgw.meta:root",
    "control_pool": "mys3.rgw.control",
    "gc_pool": "mys3.rgw.log:gc",
    "lc_pool": "mys3.rgw.log:lc",
    "log_pool": "mys3.rgw.log",
    "intent_log_pool": "mys3.rgw.log:intent",
    "usage_log_pool": "mys3.rgw.log:usage",
    "roles_pool": "mys3.rgw.meta:roles",
    "reshard_pool": "mys3.rgw.log:reshard",
    "user_keys_pool": "mys3.rgw.meta:users.keys",
    "user_email_pool": "mys3.rgw.meta:users.email",
    "user_swift_pool": "mys3.rgw.meta:users.swift",
    "user_uid_pool": "mys3.rgw.meta:users.uid",
    "otp_pool": "mys3.rgw.otp",
    "system_key": {
        "access_key": "",
        "secret_key": ""
    },
    "placement_pools": [
        {
            "key": "default-placement",
            "val": {
                "index_pool": "mys3.rgw.buckets.index",
                "storage_classes": {
                    "STANDARD": {
                        "data_pool": "mys3.rgw.buckets.data"
                    }
                },
                "data_extra_pool": "mys3.rgw.buckets.non-ec",
                "index_type": 0,
                "inline_data": "true"
            }
        }
    ],
    "realm_id": "",
    "notif_pool": "mys3.rgw.log:notif"
}
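The `placement_pools` section is what ties a placement target to concrete RADOS pools; the same pool names (`mys3.rgw.buckets.index`, `mys3.rgw.buckets.data`, `mys3.rgw.buckets.non-ec`) reappear in the `rados df` output further down. As a minimal sketch, assuming you feed it the JSON printed by `radosgw-admin zone get` (the embedded sample is trimmed to the fields the function reads), the mapping can be pulled out like this:

```python
import json

# Trimmed copy of the `radosgw-admin zone get` output above; only the
# fields read by placement_layout() are kept.
SAMPLE_ZONE = """
{"name": "mys3",
 "placement_pools": [{"key": "default-placement",
   "val": {"index_pool": "mys3.rgw.buckets.index",
           "storage_classes": {"STANDARD": {"data_pool": "mys3.rgw.buckets.data"}},
           "data_extra_pool": "mys3.rgw.buckets.non-ec"}}]}
"""


def placement_layout(zone: dict) -> dict:
    """Map each placement target to its index, extra and per-storage-class data pools."""
    layout = {}
    for entry in zone.get("placement_pools", []):
        val = entry["val"]
        layout[entry["key"]] = {
            "index_pool": val["index_pool"],
            "data_extra_pool": val.get("data_extra_pool", ""),
            "data_pools": {
                storage_class: cfg["data_pool"]
                for storage_class, cfg in val.get("storage_classes", {}).items()
            },
        }
    return layout


if __name__ == "__main__":
    # In practice, replace SAMPLE_ZONE with the real command output.
    for target, pools in placement_layout(json.loads(SAMPLE_ZONE)).items():
        print(target, pools)
```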

List bucket names

radosgw-admin bucket list

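View the details of a single bucket

Note: the output includes the bucket ID, the number of objects, space usage and quota (storage limit) settings, among other details.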

radosgw-admin bucket stats --bucket=ceph-bkt-9

{
    "bucket": "ceph-bkt-9",
    "num_shards": 9973,
    "tenant": "",
    "zonegroup": "f3a96381-12e2-4e7e-8221-c1d79708bc59",
    "placement_rule": "default-placement",
    "explicit_placement": {
        "data_pool": "",
        "data_extra_pool": "",
        "index_pool": ""
    },
    "id": "af3f8be9-99ee-44b7-9d17-5b616dca80ff.45143.53",
    "marker": "af3f8be9-99ee-44b7-9d17-5b616dca80ff.45143.53",
    "index_type": "Normal",
    "owner": "mys3-juicefs",
    "ver": "0#536,1#475,...",
    "master_ver": "0#0,1#0,2#0,3#0,4#0,...",
    "mtime": "0.000000",
    "creation_time": "2023-11-03T16:58:09.692764Z",
    "max_marker": "0#,1#,2#,3#,...",
    "usage": {
        "rgw.main": {
            "size": 88057775893,
            "size_actual": 99102711808,
            "size_utilized": 88057775893,
            "size_kb": 85993922,
            "size_kb_actual": 96779992,
            "size_kb_utilized": 85993922,
            "num_objects": 4209803
        }
    },
    "bucket_quota": {
        "enabled": false,
        "check_on_raw": false,
        "max_size": -1,
        "max_size_kb": 0,
        "max_objects": -1
    }
}
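A few ratios make the raw `bucket stats` numbers easier to judge. In the output above `size_actual` (99102711808) is an exact multiple of 4096, which is consistent with each object's size being rounded up to 4 KiB for that figure. The sketch below is illustrative only: it reads just the `rgw.main` usage category and uses the numbers from `ceph-bkt-9`.

```python
import json

# Numbers copied from the `radosgw-admin bucket stats --bucket=ceph-bkt-9` output above.
SAMPLE_STATS = {
    "num_shards": 9973,
    "usage": {"rgw.main": {"size": 88057775893,
                           "size_actual": 99102711808,
                           "num_objects": 4209803}},
}


def bucket_summary(stats: dict) -> dict:
    """Derive a few ratios from `radosgw-admin bucket stats` JSON.

    Only the "rgw.main" usage category is read; any other categories
    are ignored in this sketch. Missing fields count as zero.
    """
    main = stats.get("usage", {}).get("rgw.main", {})
    objects = main.get("num_objects", 0)
    size = main.get("size", 0)
    shards = stats.get("num_shards", 0)
    return {
        # Mean logical object size in bytes.
        "avg_object_bytes": size / objects if objects else 0.0,
        # size_actual rounds each object up, so this ratio is >= 1.
        "rounding_overhead": main.get("size_actual", 0) / size if size else 0.0,
        # Integer objects-per-shard figure (num_objects // num_shards).
        "objects_per_shard": objects // shards if shards else objects,
    }


if __name__ == "__main__":
    print(json.dumps(bucket_summary(SAMPLE_STATS), indent=2))
```

For this bucket the average object is roughly 20.9 KB, `size_actual` is about 12.5% larger than `size`, and each of the 9973 index shards holds about 422 entries.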

View bucket index sharding information, such as the number of index shards per bucket

radosgw-admin bucket limit check

Note: the output is long, so only the first 50 lines are shown.

radosgw-admin bucket limit check | head -50

[
    {
        "user_id": "dashboard-admin",
        "buckets": []
    },
    {
        "user_id": "obc-default-ceph-bkt-openbayes-juicefs-6a2b2c57-d393-4529-8620-c0af6c9c30f8",
        "buckets": [
            {
                "bucket": "ceph-bkt-20d5f58a-7501-4084-baca-98d9e68a7e57",
                "tenant": "",
                "num_objects": 355,
                "num_shards": 11,
                "objects_per_shard": 32,
                "fill_status": "OK"
            }
        ]
    },
    {
        "user_id": "rgw-admin-ops-user",
        "buckets": []
    },
    {
        "user_id": "mys3-user",
        "buckets": [
            {
                "bucket": "ceph-bkt-caa8a9d1-c278-4015-ba2d-354e142c0",
                "tenant": "",
                "num_objects": 80,
                "num_shards": 11,
                "objects_per_shard": 7,
                "fill_status": "OK"
            },
            {
                "bucket": "ceph-bkt-caa8a9d1-c278-4015-ba2d-354e142c1",
                "tenant": "",
                "num_objects": 65,
                "num_shards": 11,
                "objects_per_shard": 5,
                "fill_status": "OK"
            },
            {
                "bucket": "ceph-bkt-caa8a9d1-c278-4015-ba2d-354e142c10",
                "tenant": "",
                "num_objects": 83,
                "num_shards": 11,
                "objects_per_shard": 7,
                "fill_status": "OK"
            },
            {
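The `objects_per_shard` column above is integer division of `num_objects` by `num_shards` (355 // 11 = 32, 83 // 11 = 7), and `fill_status` compares that figure with a per-shard limit. The sketch below assumes the commonly documented defaults `rgw_max_objs_per_shard = 100000` and `rgw_shard_warning_threshold = 90` (percent); the exact OK/WARN/OVER boundaries and wording are an approximation and may differ between releases.

```python
from typing import Tuple

# Assumed defaults; check the values on your cluster, e.g. with
#   ceph config help rgw_max_objs_per_shard
#   ceph config help rgw_shard_warning_threshold
MAX_OBJS_PER_SHARD = 100_000
SHARD_WARN_PCT = 90


def shard_fill(num_objects: int, num_shards: int,
               max_per_shard: int = MAX_OBJS_PER_SHARD,
               warn_pct: int = SHARD_WARN_PCT) -> Tuple[int, str]:
    """Approximate the (objects_per_shard, fill_status) pair of `bucket limit check`."""
    per_shard = num_objects // num_shards if num_shards else num_objects
    fill_pct = per_shard * 100 // max_per_shard  # percent of the per-shard limit
    if fill_pct > 100:
        status = f"OVER {fill_pct}%"
    elif fill_pct >= warn_pct:
        status = f"WARN {fill_pct}%"
    else:
        status = "OK"
    return per_shard, status


if __name__ == "__main__":
    # (num_objects, num_shards) pairs taken from the output above.
    for objs, shards in [(355, 11), (80, 11), (65, 11), (83, 11)]:
        print(objs, shards, shard_fill(objs, shards))
```

A bucket that drifts into WARN or OVER is a candidate for resharding; with 11 shards the buckets above are nowhere near the limit.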

Commands for checking storage usage

ceph df

Output

--- RAW STORAGE ---
CLASS     SIZE    AVAIL    USED  RAW USED  %RAW USED
hdd    900 GiB  834 GiB  66 GiB    66 GiB       7.29
TOTAL  900 GiB  834 GiB  66 GiB    66 GiB       7.29

--- POOLS ---
POOL                 ID  PGS   STORED  OBJECTS     USED  %USED  MAX AVAIL
.mgr                  1    1  449 KiB        2  1.3 MiB      0    255 GiB
replicapool           2   32   19 GiB    5.87k   56 GiB   6.79    255 GiB
myfs-metadata         3   16   34 MiB       33  103 MiB   0.01    255 GiB
myfs-replicated       4   32  1.9 MiB        9  5.8 MiB      0    255 GiB
.rgw.root            26    8  5.6 KiB       20  152 KiB      0    383 GiB
default.rgw.log      27   32    182 B        2   24 KiB      0    255 GiB
default.rgw.control  28   32      0 B        8      0 B      0    255 GiB
default.rgw.meta     29   32      0 B        0      0 B      0    255 GiB
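In the POOLS table, STORED is the logical data written by clients, while USED is the raw capacity consumed across all copies. For the larger pools the USED/STORED ratio lands close to 3, which suggests those pools keep three replicas; for tiny pools such as `.rgw.root`, per-object allocation granularity dominates and the ratio is not meaningful. A small sketch, assuming the binary units (B, KiB, MiB, GiB, TiB) that `ceph df` prints above:

```python
# Binary unit multipliers as printed by `ceph df`.
UNITS = {"B": 1, "KiB": 1024, "MiB": 1024 ** 2, "GiB": 1024 ** 3, "TiB": 1024 ** 4}


def parse_size(text: str) -> float:
    """Convert a `ceph df` size such as "1.3 MiB" into bytes."""
    value, unit = text.split()
    return float(value) * UNITS[unit]


def used_to_stored(stored: str, used: str) -> float:
    """Ratio of raw space consumed (USED) to logical data (STORED) for one pool."""
    stored_bytes = parse_size(stored)
    return parse_size(used) / stored_bytes if stored_bytes else float("nan")


if __name__ == "__main__":
    # (pool, STORED, USED) copied from the POOLS table above.
    for pool, stored, used in [(".mgr", "449 KiB", "1.3 MiB"),
                               ("replicapool", "19 GiB", "56 GiB"),
                               ("myfs-metadata", "34 MiB", "103 MiB"),
                               (".rgw.root", "5.6 KiB", "152 KiB")]:
        print(f"{pool:15} {used_to_stored(stored, used):6.2f}")
```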

ceph osd df

Output

ID  CLASS   WEIGHT  REWEIGHT     SIZE  RAW USE     DATA     OMAP     META    AVAIL   %USE   VAR  PGS  STATUS
 0    hdd  0.09769   1.00000  100 GiB  6.4 GiB  4.8 GiB  3.2 MiB  1.5 GiB   94 GiB   6.35  0.87   89      up
 3    hdd  0.19530   1.00000  200 GiB   15 GiB   14 GiB   42 MiB  1.1 GiB  185 GiB   7.61  1.04  152      up
 1    hdd  0.09769   1.00000  100 GiB  7.3 GiB  5.3 GiB  1.5 MiB  1.9 GiB   93 GiB   7.27  1.00   78      up
 4    hdd  0.19530   1.00000  200 GiB   15 GiB   14 GiB  4.2 MiB  1.1 GiB  185 GiB   7.32  1.00  157      up
 2    hdd  0.09769   1.00000  100 GiB  9.9 GiB  7.6 GiB  1.2 MiB  2.3 GiB   90 GiB   9.94  1.36   73      up
 5    hdd  0.19530   1.00000  200 GiB   12 GiB   11 GiB   43 MiB  1.1 GiB  188 GiB   6.18  0.85  158      up
                       TOTAL  900 GiB   66 GiB   57 GiB   95 MiB  9.1 GiB  834 GiB   7.31
MIN/MAX VAR: 0.85/1.36  STDDEV: 1.24
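VAR is each OSD's utilisation relative to the cluster as a whole, and STDDEV indicates how unevenly data is spread. The figures above are consistent with VAR = %USE / TOTAL %USE and with STDDEV being the population standard deviation of %USE around TOTAL %USE. This is inferred from the numbers rather than taken from Ceph's source, so treat the sketch as an approximation:

```python
import math
from typing import List, Tuple

# %USE per OSD and the TOTAL %USE from the `ceph osd df` output above.
USE_PCTS = [6.35, 7.61, 7.27, 7.32, 9.94, 6.18]  # osd.0, osd.3, osd.1, osd.4, osd.2, osd.5
TOTAL_PCT = 7.31


def var_and_stddev(use_pcts: List[float], total_pct: float) -> Tuple[List[float], float]:
    """Return each OSD's VAR (%USE / total %USE) and the population std-dev of %USE."""
    var = [u / total_pct for u in use_pcts]
    stddev = math.sqrt(sum((u - total_pct) ** 2 for u in use_pcts) / len(use_pcts))
    return var, stddev


if __name__ == "__main__":
    var, stddev = var_and_stddev(USE_PCTS, TOTAL_PCT)
    print("VAR:", [round(v, 2) for v in var])
    print(f"MIN/MAX VAR: {min(var):.2f}/{max(var):.2f}  STDDEV: {stddev:.2f}")
```

Because the inputs are already rounded to two decimals, individual VAR values can differ from Ceph's by about 0.01 (osd.1 comes out as 0.99 here rather than 1.00), while MIN/MAX VAR and STDDEV match.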

rados df

POOL_NAME                    USED  OBJECTS  CLONES  COPIES  MISSING_ON_PRIMARY  UNFOUND  DEGRADED    RD_OPS       RD     WR_OPS       WR  USED COMPR  UNDER COMPR
.mgr                      1.3 MiB        2       0       6                   0        0         0   2696928  5.5 GiB     563117   29 MiB         0 B          0 B
.rgw.root                 152 KiB       20       0      40                   0        0         0       428  443 KiB         10    7 KiB         0 B          0 B
default.rgw.control           0 B        8       0      24                   0        0         0         0      0 B          0      0 B         0 B          0 B
default.rgw.log            24 KiB        2       0       6                   0        0         0         0      0 B          0      0 B         0 B          0 B
default.rgw.meta              0 B        0       0       0                   0        0         0         0      0 B          0      0 B         0 B          0 B
myfs-metadata             103 MiB       33       0      99                   0        0         0  18442579   10 GiB     272672  194 MiB         0 B          0 B
myfs-replicated           5.8 MiB        9       0      27                   0        0         0        24   24 KiB         33  1.9 MiB         0 B          0 B
mys3.rgw.buckets.data     307 MiB    18493       0   36986                   0        0         0    767457  942 MiB    2713288  1.2 GiB         0 B          0 B
mys3.rgw.buckets.index     20 MiB     2827       0    5654                   0        0         0   7299856  6.2 GiB    1208180  598 MiB         0 B          0 B
mys3.rgw.buckets.non-ec       0 B        0       0       0                   0        0         0         0      0 B          0      0 B         0 B          0 B
mys3.rgw.control              0 B        8       0      16                   0        0         0         0      0 B          0      0 B         0 B          0 B
mys3.rgw.log               76 MiB      342       0     684                   0        0         0   4944901  4.5 GiB    3764847  1.1 GiB         0 B          0 B
mys3.rgw.meta             4.3 MiB      526       0    1052                   0        0         0   4617928  3.8 GiB     658074  321 MiB         0 B          0 B
mys3.rgw.otp                  0 B        0       0       0                   0        0         0         0      0 B          0      0 B         0 B          0 B
replicapool                56 GiB     5873       0   17619                   0        0         0   4482521   65 GiB  132312964  1.3 TiB         0 B          0 B

total_objects    28143
total_used       65 GiB
total_avail      835 GiB
total_space      900 GiB
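For a replicated pool, COPIES should equal OBJECTS multiplied by the pool's replica count, so COPIES / OBJECTS gives a quick estimate: 3 for `.mgr` and `replicapool`, 2 for `.rgw.root` and the `mys3.rgw.*` pools. A size of 2 would also explain why `.rgw.root` reports a larger MAX AVAIL (383 GiB vs 255 GiB) in `ceph df`. The authoritative value comes from `ceph osd pool get <pool> size`; the sketch below only parses the whitespace-separated text layout shown above.

```python
from typing import Optional, Tuple


def replica_count(row: str) -> Tuple[str, Optional[float]]:
    """Estimate a pool's replica count from one `rados df` row as COPIES / OBJECTS.

    Assumes the plain-text layout above: POOL_NAME, USED (number + unit),
    OBJECTS, CLONES, COPIES, ... Returns None for empty pools.
    """
    fields = row.split()
    name, objects, copies = fields[0], int(fields[3]), int(fields[5])
    return name, (copies / objects if objects else None)


if __name__ == "__main__":
    rows = [
        ".mgr 1.3 MiB 2 0 6 0 0 0 2696928 5.5 GiB 563117 29 MiB 0 B 0 B",
        ".rgw.root 152 KiB 20 0 40 0 0 0 428 443 KiB 10 7 KiB 0 B 0 B",
        "mys3.rgw.buckets.data 307 MiB 18493 0 36986 0 0 0 767457 942 MiB 2713288 1.2 GiB 0 B 0 B",
        "replicapool 56 GiB 5873 0 17619 0 0 0 4482521 65 GiB 132312964 1.3 TiB 0 B 0 B",
        "default.rgw.meta 0 B 0 0 0 0 0 0 0 0 B 0 0 B 0 B 0 B",
    ]
    for row in rows:
        print(replica_count(row))
```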

ceph osd tree

ID  CLASS  WEIGHT   TYPE NAME        STATUS  REWEIGHT  PRI-AFF
-1         0.87895  root default
-5         0.29298      host node01
 0    hdd  0.09769          osd.0        up   1.00000  1.00000
 3    hdd  0.19530          osd.3        up   1.00000  1.00000
-3         0.29298      host node02
 1    hdd  0.09769          osd.1        up   1.00000  1.00000
 4    hdd  0.19530          osd.4        up   1.00000  1.00000
-7         0.29298      host node03
 2    hdd  0.09769          osd.2        up   1.00000  1.00000
 5    hdd  0.19530          osd.5        up   1.00000  1.00000
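Reading the tree programmatically helps with spotting down OSDs or lopsided hosts on larger clusters. The sketch below assumes that `ceph osd tree -f json` returns a `nodes` list whose entries carry `id`, `name`, `type`, `children` (for buckets) and `status` (for OSDs); verify the field names on your release. The sample is hand-written to mirror the tree above and is not captured output.

```python
from typing import Dict, List

# Hand-written sample in the assumed `ceph osd tree -f json` shape,
# mirroring the tree above (three hosts, two OSDs each, all up).
SAMPLE_TREE = {
    "nodes": [
        {"id": -1, "name": "default", "type": "root", "children": [-7, -3, -5]},
        {"id": -5, "name": "node01", "type": "host", "children": [3, 0]},
        {"id": -3, "name": "node02", "type": "host", "children": [4, 1]},
        {"id": -7, "name": "node03", "type": "host", "children": [5, 2]},
    ] + [
        {"id": i, "name": f"osd.{i}", "type": "osd", "status": "up"} for i in range(6)
    ],
    "stray": [],
}


def osds_by_host(tree: dict) -> Dict[str, List[str]]:
    """Map each CRUSH host bucket to the (sorted) names of the OSDs beneath it."""
    by_id = {node["id"]: node for node in tree["nodes"]}
    return {
        node["name"]: sorted(by_id[c]["name"] for c in node.get("children", []) if c in by_id)
        for node in tree["nodes"]
        if node["type"] == "host"
    }


def down_osds(tree: dict) -> List[str]:
    """Names of OSDs whose status is anything other than "up"."""
    return [n["name"] for n in tree["nodes"] if n["type"] == "osd" and n.get("status") != "up"]


if __name__ == "__main__":
    print(osds_by_host(SAMPLE_TREE))
    print("down:", down_osds(SAMPLE_TREE))
```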

ceph osd find 1

Note: 1 is the OSD ID.

{
    "osd": 1,
    "addrs": {
        "addrvec": [
            {
                "type": "v2",
                "addr": "10.96.12.109:6800",
                "nonce": 701714258
            },
            {
                "type": "v1",
                "addr": "10.96.12.109:6801",
                "nonce": 701714258
            }
        ]
    },
    "osd_fsid": "9b165ff1-1116-4dd8-ab04-59abb6e5e3b5",
    "host": "node02",
    "pod_name": "rook-ceph-osd-1-5cd7b7fd9b-pq76v",
    "pod_namespace": "rook-ceph",
    "crush_location": {
        "host": "node02",
        "root": "default"
    }
}

Common PG commands

ceph pg ls-by-osd 0 | head -20

PG OBJECTS DEGRADED MISPLACED UNFOUND BYTES OMAP_BYTES* OMAP_KEYS* LOG STATE SINCE VERSION REPORTED UP ACTING SCRUB_STAMP DEEP_SCRUB_STAMP LAST_SCRUB_DURATION SCRUB_SCHEDULING

2.5 185 0 0 0 682401810 75 8 4202 active+clean 7h 89470'3543578 89474:3900145 [2,4,0]p2 [2,4,0]p2 2023-11-15T01:09:50.285147+0000 2023-11-15T01:09:50.285147+0000 4 periodic scrub scheduled @ 2023-11-16T12:33:31.356087+0000

2.9 178 0 0 0 633696256 423 13 2003 active+clean 117m 89475'2273592 89475:2445503 [4,0,5]p4 [4,0,5]p4 2023-11-15T06:22:49.007240+0000 2023-11-12T21:00:41.277161+0000 1 periodic scrub scheduled @ 2023-11-16T13:29:45.298222+0000

2.c 171 0 0 0 607363106 178 12 4151 active+clean 14h 89475'4759653 89475:4985220 [2,4,0]p2 [2,4,0]p2 2023-11-14T17:41:46.959311+0000 2023-11-13T07:10:45.084379+0000 1 periodic scrub scheduled @ 2023-11-15T23:58:48.840924+0000

2.f 174 0 0 0 641630226 218 8 4115 active+clean 12h 89475'4064519 89475:4177515 [2,0,4]p2 [2,0,4]p2 2023-11-14T20:11:34.002882+0000 2023-11-13T13:19:50.306895+0000 1 periodic scrub scheduled @ 2023-11-16T02:52:50.646390+0000

2.11 172 0 0 0 637251602 0 0 3381 active+clean 7h 89475'4535730 89475:4667861 [0,4,5]p0 [0,4,5]p0 2023-11-15T00:41:28.325584+0000 2023-11-08T22:50:59.120985+0000 1 periodic scrub scheduled @ 2023-11-16T05:10:15.810837+0000

2.13 198 0 0 0 762552338 347 19 1905 active+clean 5h 89475'6632536 89475:6895777 [5,0,4]p5 [5,0,4]p5 2023-11-15T03:06:33.483129+0000 2023-11-15T03:06:33.483129+0000 5 periodic scrub scheduled @ 2023-11-16T10:29:19.975736+0000

2.16 181 0 0 0 689790976 75 8 3427 active+clean 18h 89475'5897648 89475:6498260 [0,2,1]p0 [0,2,1]p0 2023-11-14T14:07:00.475337+0000 2023-11-13T08:59:03.104478+0000 1 periodic scrub scheduled @ 2023-11-16T01:55:30.581835+0000

2.1b 181 0 0 0 686268416 437 16 1956 active+clean 5h 89475'4001434 89475:4376306 [5,0,4]p5 [5,0,4]p5 2023-11-15T02:36:36.002761+0000 2023-11-15T02:36:36.002761+0000 4 periodic scrub scheduled @ 2023-11-16T09:15:09.271395+0000

3.2 0 0 0 0 0 0 0 68 active+clean 4h 67167'68 89474:84680 [4,5,0]p4 [4,5,0]p4 2023-11-15T04:01:14.378817+0000 2023-11-15T04:01:14.378817+0000 1 periodic scrub scheduled @ 2023-11-16T09:26:55.350003+0000

3.3 2 0 0 0 34 4880 10 71 active+clean 6h 71545'71 89474:97438 [0,4,5]p0 [0,4,5]p0 2023-11-15T01:55:57.633258+0000 2023-11-12T07:28:22.391454+0000 1 periodic scrub scheduled @ 2023-11-16T02:46:05.613867+0000

3.6 1 0 0 0 0 0 0 1987 active+clean 91m 89475'54154 89475:145435 [4,0,5]p4 [4,0,5]p4 2023-11-15T06:48:38.818739+0000 2023-11-08T20:05:08.257800+0000 1 periodic scrub scheduled @ 2023-11-16T15:08:59.546203+0000

3.8 0 0 0 0 0 0 0 44 active+clean 16h 83074'44 89474:84245 [5,1,0]p5 [5,1,0]p5 2023-11-14T15:26:04.057142+0000 2023-11-13T03:51:42.271364+0000 1 periodic scrub scheduled @ 2023-11-15T19:49:15.168863+0000

3.b 3 0 0 0 8388608 0 0 2369 active+clean 24h 29905'26774 89474:3471652 [4,0,5]p4 [4,0,5]p4 2023-11-14T07:50:38.682896+0000 2023-11-10T20:06:19.530705+0000 1 periodic scrub scheduled @ 2023-11-15T12:35:50.298157+0000

3.f 4 0 0 0 4194880 0 0 4498 active+clean 15h 42287'15098 89474:905369 [0,5,4]p0 [0,5,4]p0 2023-11-14T17:15:38.681549+0000 2023-11-10T14:00:49.535978+0000 1 periodic scrub scheduled @ 2023-11-15T22:26:56.705010+0000

4.6 0 0 0 0 0 0 0 380 active+clean 2h 20555'380 89474:84961 [5,1,0]p5 [5,1,0]p5 2023-11-15T05:29:28.833076+0000 2023-11-09T09:41:36.198863+0000 1 periodic scrub scheduled @ 2023-11-16T11:28:34.901957+0000

4.a 0 0 0 0 0 0 0 274 active+clean 16h 20555'274 89474:91274 [0,1,2]p0 [0,1,2]p0 2023-11-14T16:09:50.743410+0000 2023-11-14T16:09:50.743410+0000 1 periodic scrub scheduled @ 2023-11-15T18:12:35.709178+0000

4.b 0 0 0 0 0 0 0 352 active+clean 6h 20555'352 89474:85072 [4,0,5]p4 [4,0,5]p4 2023-11-15T01:49:06.361454+0000 2023-11-12T12:44:50.143887+0000 7 periodic scrub scheduled @ 2023-11-16T03:42:06.193542+0000

4.10 1 0 0 0 2474 0 0 283 active+clean 17h 20555'283 89474:89904 [4,2,0]p4 [4,2,0]p4 2023-11-14T14:57:49.174637+0000 2023-11-09T18:56:58.241925+0000 1 periodic scrub scheduled @ 2023-11-16T02:56:36.556523+0000

4.14 0 0 0 0 0 0 0 304 active+clean 33h 20555'304 89474:85037 [5,1,0]p5 [5,1,0]p5 2023-11-13T22:55:24.034723+0000 2023-11-11T09:51:00.248512+0000 1 periodic scrub scheduled @ 2023-11-15T09:18:55.094605+0000
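The UP and ACTING columns encode the OSD set followed by `p` and the primary OSD, e.g. `[2,4,0]p2`. In the listing above OSD 0 appears in every ACTING set, and the rows whose primary is 0 (2.11, 2.16, 3.3, 3.f and 4.a) are the same PGs that open the `ceph pg ls-by-primary 0` listing further down. A small parser, written against the format shown here:

```python
import re
from typing import List, Tuple

# UP/ACTING values look like "[2,4,0]p2": the OSD set, then "p" and the primary OSD id.
_OSD_SET = re.compile(r"^\[([0-9,]*)\]p(\d+)$")


def parse_osd_set(field: str) -> Tuple[List[int], int]:
    """Split an UP/ACTING value into (list of OSD ids, primary OSD id)."""
    match = _OSD_SET.match(field)
    if match is None:
        raise ValueError(f"unexpected OSD set: {field!r}")
    osds = [int(x) for x in match.group(1).split(",") if x]
    return osds, int(match.group(2))


if __name__ == "__main__":
    # PG id -> ACTING column, copied from a few rows above.
    acting = {"2.5": "[2,4,0]p2", "2.11": "[0,4,5]p0", "2.16": "[0,2,1]p0",
              "3.3": "[0,4,5]p0", "4.6": "[5,1,0]p5", "4.a": "[0,1,2]p0"}
    # PGs that OSD 0 participates in at all.
    print(sorted(pg for pg, f in acting.items() if 0 in parse_osd_set(f)[0]))
    # PGs for which OSD 0 is the primary.
    print(sorted(pg for pg, f in acting.items() if parse_osd_set(f)[1] == 0))
```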

ceph pg ls-by-pool myfs-replicated | head -10

PG OBJECTS DEGRADED MISPLACED UNFOUND BYTES OMAP_BYTES* OMAP_KEYS* LOG STATE SINCE VERSION REPORTED UP ACTING SCRUB_STAMP DEEP_SCRUB_STAMP LAST_SCRUB_DURATION SCRUB_SCHEDULING

4.0 0 0 0 0 0 0 0 294 active+clean 14m 20555'294 89474:89655 [4,3,5]p4 [4,3,5]p4 2023-11-15T08:06:30.504646+0000 2023-11-11T14:10:37.423797+0000 1 periodic scrub scheduled @ 2023-11-16T20:00:48.189584+0000

4.1 0 0 0 0 0 0 0 282 active+clean 19h 20555'282 89474:91316 [2,3,4]p2 [2,3,4]p2 2023-11-14T13:11:39.095045+0000 2023-11-08T02:29:45.827302+0000 1 periodic deep scrub scheduled @ 2023-11-15T23:05:45.143337+0000

4.2 0 0 0 0 0 0 0 228 active+clean 30h 20555'228 89474:84866 [5,3,4]p5 [5,3,4]p5 2023-11-14T01:51:16.091750+0000 2023-11-14T01:51:16.091750+0000 1 periodic scrub scheduled @ 2023-11-15T13:37:08.420266+0000

4.3 0 0 0 0 0 0 0 228 active+clean 12h 20555'228 89474:91622 [2,3,1]p2 [2,3,1]p2 2023-11-14T19:23:46.585302+0000 2023-11-07T22:06:51.216573+0000 1 periodic deep scrub scheduled @ 2023-11-16T02:02:54.588932+0000

4.4 1 0 0 0 2474 0 0 236 active+clean 18h 20555'236 89474:35560 [1,5,3]p1 [1,5,3]p1 2023-11-14T13:42:45.498057+0000 2023-11-10T13:03:03.664431+0000 1 periodic scrub scheduled @ 2023-11-15T22:08:15.399060+0000

4.5 0 0 0 0 0 0 0 171 active+clean 23h 20555'171 89474:88153 [3,5,1]p3 [3,5,1]p3 2023-11-14T09:01:04.687468+0000 2023-11-09T23:45:29.913888+0000 6 periodic scrub scheduled @ 2023-11-15T13:08:21.849161+0000

4.6 0 0 0 0 0 0 0 380 active+clean 2h 20555'380 89474:84961 [5,1,0]p5 [5,1,0]p5 2023-11-15T05:29:28.833076+0000 2023-11-09T09:41:36.198863+0000 1 periodic scrub scheduled @ 2023-11-16T11:28:34.901957+0000

4.7 0 0 0 0 0 0 0 172 active+clean 18h 20555'172 89474:77144 [1,5,3]p1 [1,5,3]p1 2023-11-14T13:52:17.458837+0000 2023-11-09T16:56:57.755836+0000 17 periodic scrub scheduled @ 2023-11-16T01:10:07.099940+0000

4.8 0 0 0 0 0 0 0 272 active+clean 15h 20555'272 89474:84994 [5,3,4]p5 [5,3,4]p5 2023-11-14T17:14:47.534009+0000 2023-11-14T17:14:47.534009+0000 1 periodic scrub scheduled @ 2023-11-15T19:30:59.254042+0000
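A PG ID has the form `<pool ID>.<PG number in hex>`. Pool 4 is `myfs-replicated` in the `ceph df` listing, which is why `ls-by-pool myfs-replicated` returns only `4.x` PGs; with 32 PGs its numbers run from 0 to 0x1f, and likewise `3.f` is the last of the 16 PGs of `myfs-metadata` (pool 3). A minimal helper:

```python
from typing import Tuple


def parse_pgid(pgid: str) -> Tuple[int, int]:
    """Split a PG id such as "4.1b" into (pool id, PG number); the part after the dot is hex."""
    pool, seed = pgid.split(".", 1)
    return int(pool), int(seed, 16)


if __name__ == "__main__":
    for pgid in ["4.0", "4.8", "2.1b", "3.f", "26.3"]:
        print(pgid, parse_pgid(pgid))
```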

ceph pg ls-by-primary 0 | head -10

PG OBJECTS DEGRADED MISPLACED UNFOUND BYTES OMAP_BYTES* OMAP_KEYS* LOG STATE SINCE VERSION REPORTED UP ACTING SCRUB_STAMP DEEP_SCRUB_STAMP LAST_SCRUB_DURATION SCRUB_SCHEDULING

2.11 172 0 0 0 637251602 0 0 3375 active+clean 7h 89475'4536024 89475:4668155 [0,4,5]p0 [0,4,5]p0 2023-11-15T00:41:28.325584+0000 2023-11-08T22:50:59.120985+0000 1 periodic scrub scheduled @ 2023-11-16T05:10:15.810837+0000

2.16 181 0 0 0 689790976 75 8 3380 active+clean 18h 89475'5898101 89475:6498713 [0,2,1]p0 [0,2,1]p0 2023-11-14T14:07:00.475337+0000 2023-11-13T08:59:03.104478+0000 1 periodic scrub scheduled @ 2023-11-16T01:55:30.581835+0000

3.3 2 0 0 0 34 4880 10 71 active+clean 6h 71545'71 89474:97438 [0,4,5]p0 [0,4,5]p0 2023-11-15T01:55:57.633258+0000 2023-11-12T07:28:22.391454+0000 1 periodic scrub scheduled @ 2023-11-16T02:46:05.613867+0000

3.f 4 0 0 0 4194880 0 0 4498 active+clean 15h 42287'15098 89474:905369 [0,5,4]p0 [0,5,4]p0 2023-11-14T17:15:38.681549+0000 2023-11-10T14:00:49.535978+0000 1 periodic scrub scheduled @ 2023-11-15T22:26:56.705010+0000

4.a 0 0 0 0 0 0 0 274 active+clean 16h 20555'274 89474:91274 [0,1,2]p0 [0,1,2]p0 2023-11-14T16:09:50.743410+0000 2023-11-14T16:09:50.743410+0000 1 periodic scrub scheduled @ 2023-11-15T18:12:35.709178+0000

4.1b 0 0 0 0 0 0 0 188 active+clean 9h 20572'188 89474:60345 [0,4,5]p0 [0,4,5]p0 2023-11-14T22:45:32.243017+0000 2023-11-09T15:22:58.954604+0000 15 periodic scrub scheduled @ 2023-11-16T05:26:22.970008+0000

26.0 4 0 0 0 2055 0 0 4 active+clean 16h 74696'14 89474:22375 [0,5]p0 [0,5]p0 2023-11-14T16:07:57.126669+0000 2023-11-09T12:57:29.272721+0000 1 periodic scrub scheduled @ 2023-11-15T17:12:43.441862+0000

26.3 1 0 0 0 104 0 0 1 active+clean 10h 74632'8 89474:22487 [0,4]p0 [0,4]p0 2023-11-14T21:43:19.284917+0000 2023-11-11T13:26:08.679346+0000 1 periodic scrub scheduled @ 2023-11-16T01:39:45.617371+0000

27.5 1 0 0 0 154 0 0 2 active+clean 23h 69518'2 89474:22216 [0,4,2]p0 [0,4,2]p0 2023-11-14T08:56:33.324158+0000 2023-11-10T23:46:33.688281+0000 1 periodic scrub scheduled @ 2023-11-15T20:32:30.759743+0000

ceph osd perf

osd  commit_latency(ms)  apply_latency(ms)
  5                   2                  2
  4                   2                  2
  3                   2                  2
  2                   0                  0
  0                   0                  0
  1                   1                  1
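`ceph osd perf` reports recent commit and apply latency per OSD in milliseconds. On a larger cluster a small filter over the text output makes outliers easier to spot; the 1 ms threshold used below is arbitrary and purely for illustration.

```python
from typing import Dict, List, Tuple

# `ceph osd perf` output as shown above.
SAMPLE_PERF = """\
osd  commit_latency(ms)  apply_latency(ms)
  5                   2                  2
  4                   2                  2
  3                   2                  2
  2                   0                  0
  0                   0                  0
  1                   1                  1
"""


def parse_osd_perf(text: str) -> Dict[int, Tuple[int, int]]:
    """Map OSD id -> (commit_latency_ms, apply_latency_ms); the header line is skipped."""
    perf = {}
    for line in text.strip().splitlines()[1:]:
        osd, commit_ms, apply_ms = line.split()
        perf[int(osd)] = (int(commit_ms), int(apply_ms))
    return perf


def slow_osds(perf: Dict[int, Tuple[int, int]], threshold_ms: int) -> List[int]:
    """Sorted OSD ids whose commit or apply latency exceeds threshold_ms."""
    return sorted(osd for osd, (commit, apply_) in perf.items() if max(commit, apply_) > threshold_ms)


if __name__ == "__main__":
    print(slow_osds(parse_osd_perf(SAMPLE_PERF), threshold_ms=1))
```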

相关文章:

ceph 常用命令

bucket 常用命令 查看 realm (区域) radosgw-admin realm list输出 {"default_info": "43c462f5-5634-496e-ad4e-978d28c2x9090","realms": ["myrgw"] }radosgw-admin realm get{"id": "2cfc…...

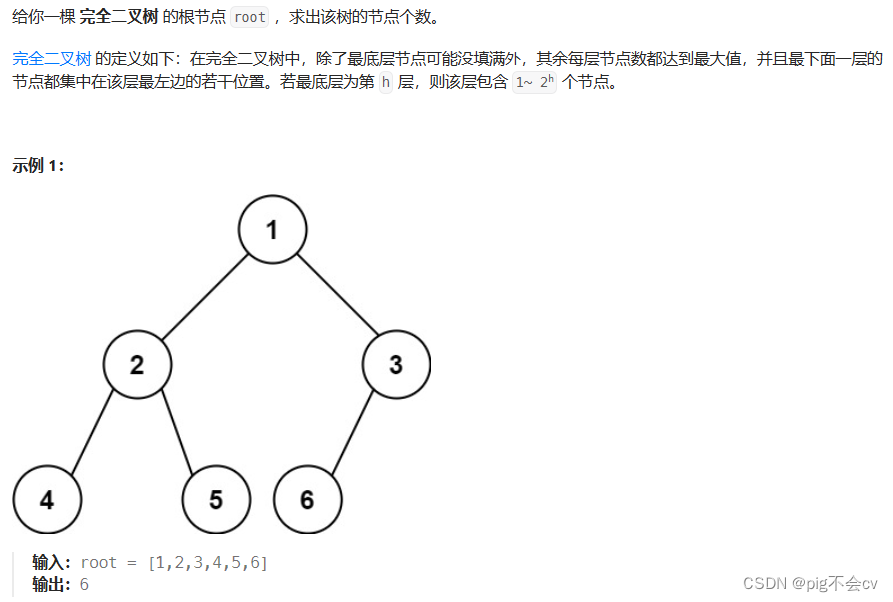

6.8完全二叉树的节点个数(LC222-E)

算法: 如果不考虑完全二叉树的特性,直接把完全二叉树当作普通二叉树求节点数,其实也很简单。 递归法: 用什么顺序遍历都可以。 比如后序遍历(LRV):不断遍历左右子树的节点数,最后…...

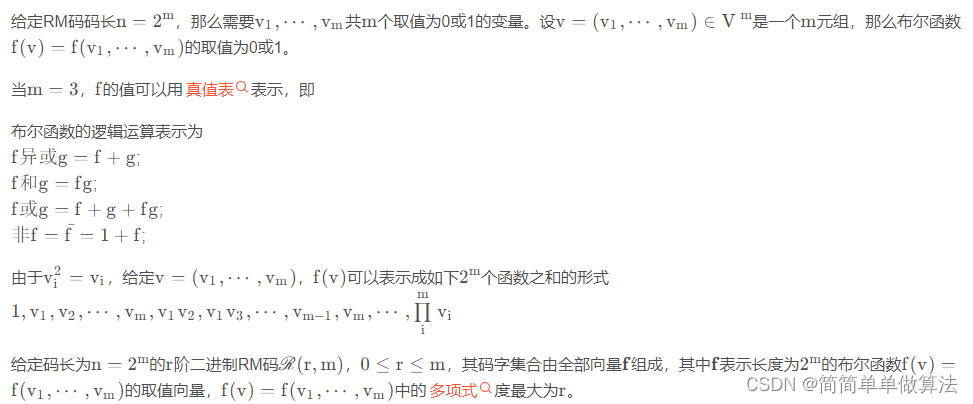

基于协作mimo系统的RM编译码误码率matlab仿真,对比硬判决译码和软判决译码

目录 1.算法运行效果图预览 2.算法运行软件版本 3.部分核心程序 4.算法理论概述 5.算法完整程序工程 1.算法运行效果图预览 2.算法运行软件版本 matlab2022a 3.部分核心程序 ..................................................................... while(Err < TL…...

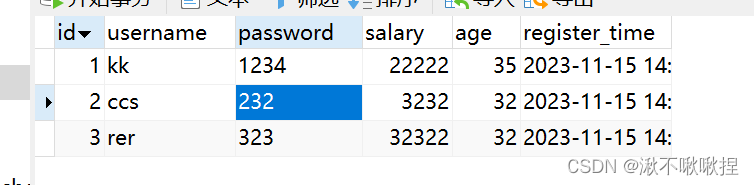

Django模型层

模型层 与数据库相关的,用于定义数据模型和数据库表结构。 在Django应用程序中,模型层是数据库和应用程序之间的接口,它负责处理所有与数据库相关的操作,例如创建、读取、更新和删除记录。Django的模型层还提供了一些高级功能 首…...

计算机视觉的应用18-一键抠图人像与更换背景的项目应用,可扩展批量抠图与背景替换

大家好,我是微学AI,今天给大家介绍一下计算机视觉的应用18-一键抠图人像与更换背景的项目应用,可扩展批量抠图与背景替换。该项目能够让你轻松地处理和编辑图片。这个项目的核心功能是一键抠图和更换背景。这个项目能够自动识别图片中的主体&…...

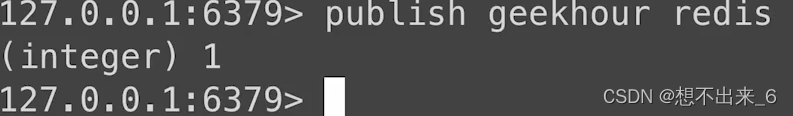

Redis(哈希Hash和发布订阅模式)

哈希是一个字符类型字段和值的映射表。 在Redis中,哈希是一种数据结构,用于存储键值对的集合。哈希可以理解为一个键值对的集合,其中每个键都对应一个值。哈希在Redis中的作用主要有以下几点: 1. 存储对象:哈希可以用…...

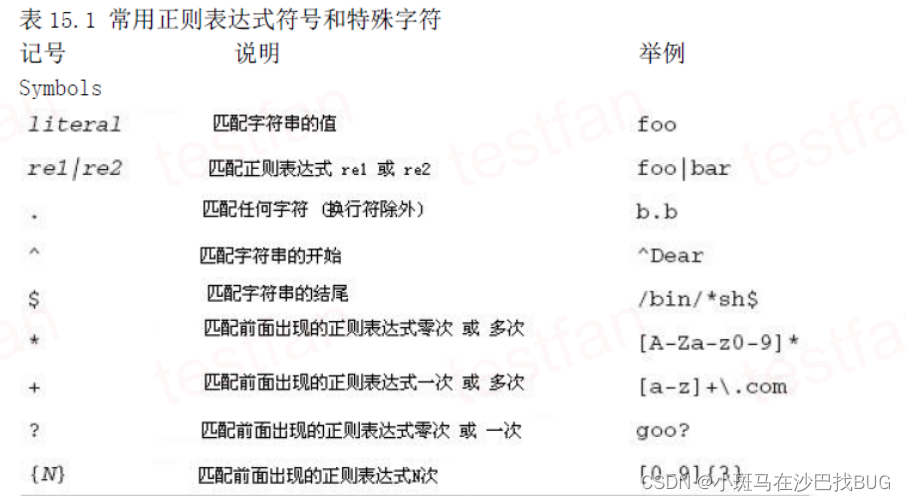

php正则表达式汇总

php正则表达式有"/pattern/“、”“、”$“、”.“、”[]“、”[]“、”[a-z]“、”[A-Z]“、”[0-9]“、”\d"、“\D”、“\w”、“\W”、“\s”、“\S”、“\b”、“*”、“”、“?”、“{n}”、“{n,}”、“{n,m}”、“\bword\b”、“(pattern)”、“x|y"和…...

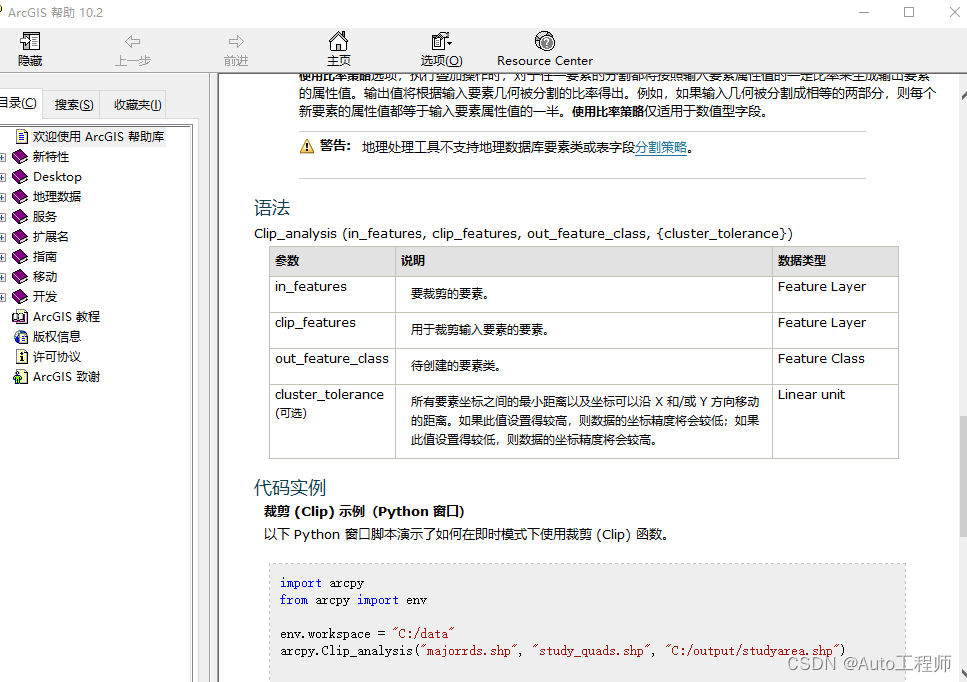

Python与ArcGIS系列(八)通过python执行地理处理工具

目录 0 简述1 脚本执行地理处理工具2 在地理处理工具间建立联系0 简述 arcgis包含数百种可以通过python脚本执行的地理处理工具,这样就通过python可以处理复杂的工作和批处理。本篇将介绍如何利用arcpy实现执行地理处理工具以及在地理处理工具间建立联系。 1 脚本执行地理处理…...

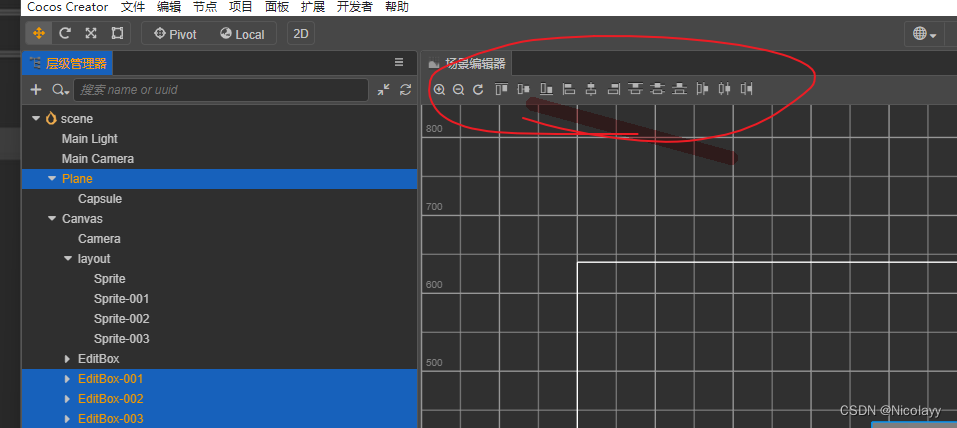

cocos----刚体

刚体(Rigidbody) 刚体(Rigidbody)是运动学(Kinematic)中的一个概念,指在运动中和受力作用后,形状和大小不变,而且内部各点的相对位置不变的物体。在 Unity3D 中ÿ…...

【SAP-HCM】--HR人员信息导入函数

人员基本信息导入函数:HR_MAINTAIN_MASTERDATA 人员其他信息类型导入函数:HR_INFOTYPE_OPERATION 不逼逼,直接上代码,这两个函数还是相对简单易懂的 *根据操作类型查找对应的T529A 操作类型对应的值IF gt_alv IS NOT INITIAL.S…...

【开源】基于JAVA的大学兼职教师管理系统

项目编号: S 004 ,文末获取源码。 \color{red}{项目编号:S004,文末获取源码。} 项目编号:S004,文末获取源码。 目录 一、摘要1.1 项目介绍1.2 项目录屏 二、研究内容三、界面展示3.1 登录注册3.2 学生教师管…...

Pyhon函数

import time # # for i in range(1,10): # j1 # for j in range(1,i1): # print(f"{i}x{j}{i*j} " ,end) # print() #复用,代码,精简,复用度高def j99(n1,max10): for i in range(n,max):jifor j in ran…...

使用vuex完成小黑记事本案例

使用vuex完成小黑记事本案例 App.vue <template><div id"app"><TodoHeader></TodoHeader><TodoMain ></TodoMain><TodoFooter></TodoFooter></div> </template><script> import TodoMain from …...

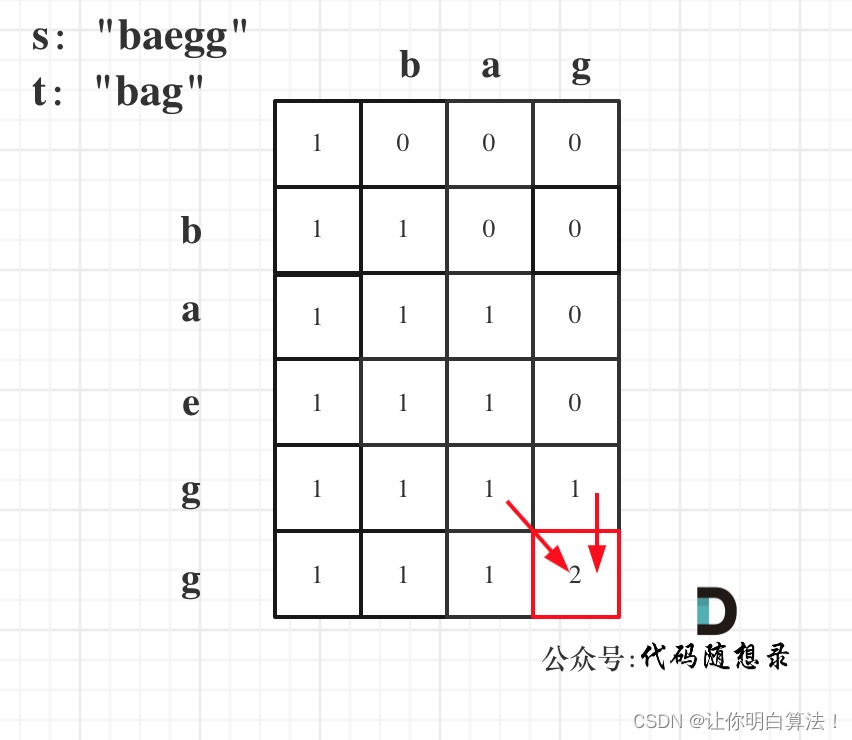

进阶理解:leetcode115.不同的子序列(细节深度)

这道题是困难题,本章是针对于动态规划解决,对于思路进行一个全面透彻的讲解,但是并不是对于基础讲解思路,而是渗透到递推式和dp填数的详解,如果有读者不清楚基本的解题思路,请看我的这篇文章算法训练营DAY5…...

数据结构-哈希表(C语言)

哈希表的概念 哈希表就是: “将记录的存储位置与它的关键字之间建立一个对应关系,使每个关键字和一个唯一的存储位置对 应。” 哈希表又称:“散列法”、“杂凑法”、“关键字:地址法”。 哈希表思想 基本思想是在关键字和存…...

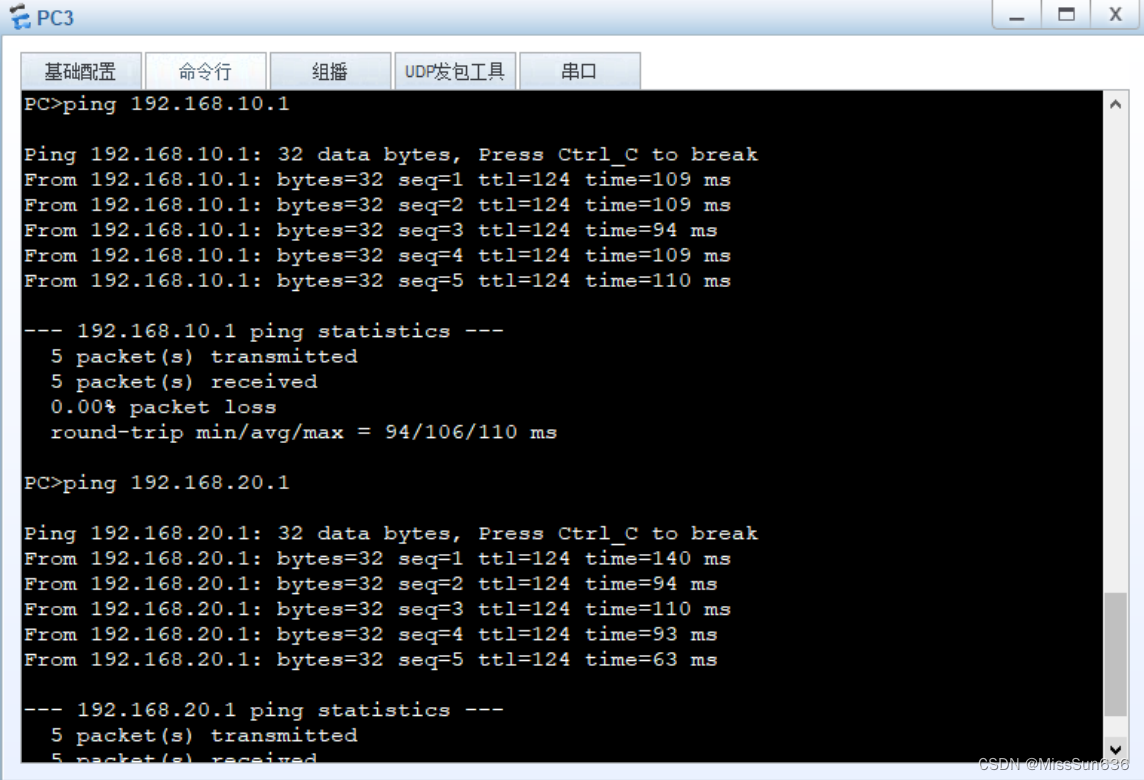

HCIA-综合实验(三)

综合实验(三) 1 实验拓扑2 IP 规划3 实验需求一、福州思博网络规划如下:二、上海思博网络规划如下:三、福州思博与上海思博网络互联四、网络优化 4 配置思路4.1 福州思博配置在 SW1、SW2、SW3 上配置交换网络SW1、SW2、SW3 运行 S…...

Java程序员的成长路径

熟悉JAVA语言基础语法。 学习JAVA基础知识,推荐阅读书单中的经典书籍。 理解并掌握面向对象的特性,比如继承,多态,覆盖,重载等含义,并正确运用。 熟悉SDK中常见类和API的使用,比如࿱…...

几种常用的排序

int[] arr new int[]{1, 2,8, 7, 5};这是提前准备好的数组 冒泡排序 public static void bubbleSort(int[] arr) {int len arr.length;for (int i 0; i < len - 1; i) {for (int j 0; j < len - i - 1; j) {if (arr[j] > arr[j1]) {int temp arr[j];arr[j] ar…...

性能测试【第三篇】Jmeter的使用

线程数:10 ,设置10个并发 Ramp-Up时间(秒):所有线程在多少时间内启动,如果设置5,那么每秒启动2个线程 循环次数:请求的重复次数,如果勾选"永远"将一直发送请求 持续时间时间:设置场景运行的时间 启动延迟:设置场景延迟启动时间 响应断言 响应断言模式匹配规则 包括…...

业务:业务系统检查项参考

名录明细云平台摸底1.原有云平台体系:VMware、openstack、ovirt、k8s、docker、混合云系列及版本 2.原有云平台规模,物理机数量、虚拟机数量、迁移业务系统所占配额 3.待补充系统摸底 (适用于物理主机)每一台虚拟机或物理机: 1.系统全局参数…...

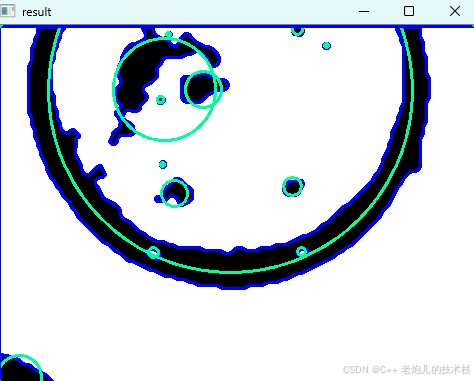

利用最小二乘法找圆心和半径

#include <iostream> #include <vector> #include <cmath> #include <Eigen/Dense> // 需安装Eigen库用于矩阵运算 // 定义点结构 struct Point { double x, y; Point(double x_, double y_) : x(x_), y(y_) {} }; // 最小二乘法求圆心和半径 …...

[2025CVPR]DeepVideo-R1:基于难度感知回归GRPO的视频强化微调框架详解

突破视频大语言模型推理瓶颈,在多个视频基准上实现SOTA性能 一、核心问题与创新亮点 1.1 GRPO在视频任务中的两大挑战 安全措施依赖问题 GRPO使用min和clip函数限制策略更新幅度,导致: 梯度抑制:当新旧策略差异过大时梯度消失收敛困难:策略无法充分优化# 传统GRPO的梯…...

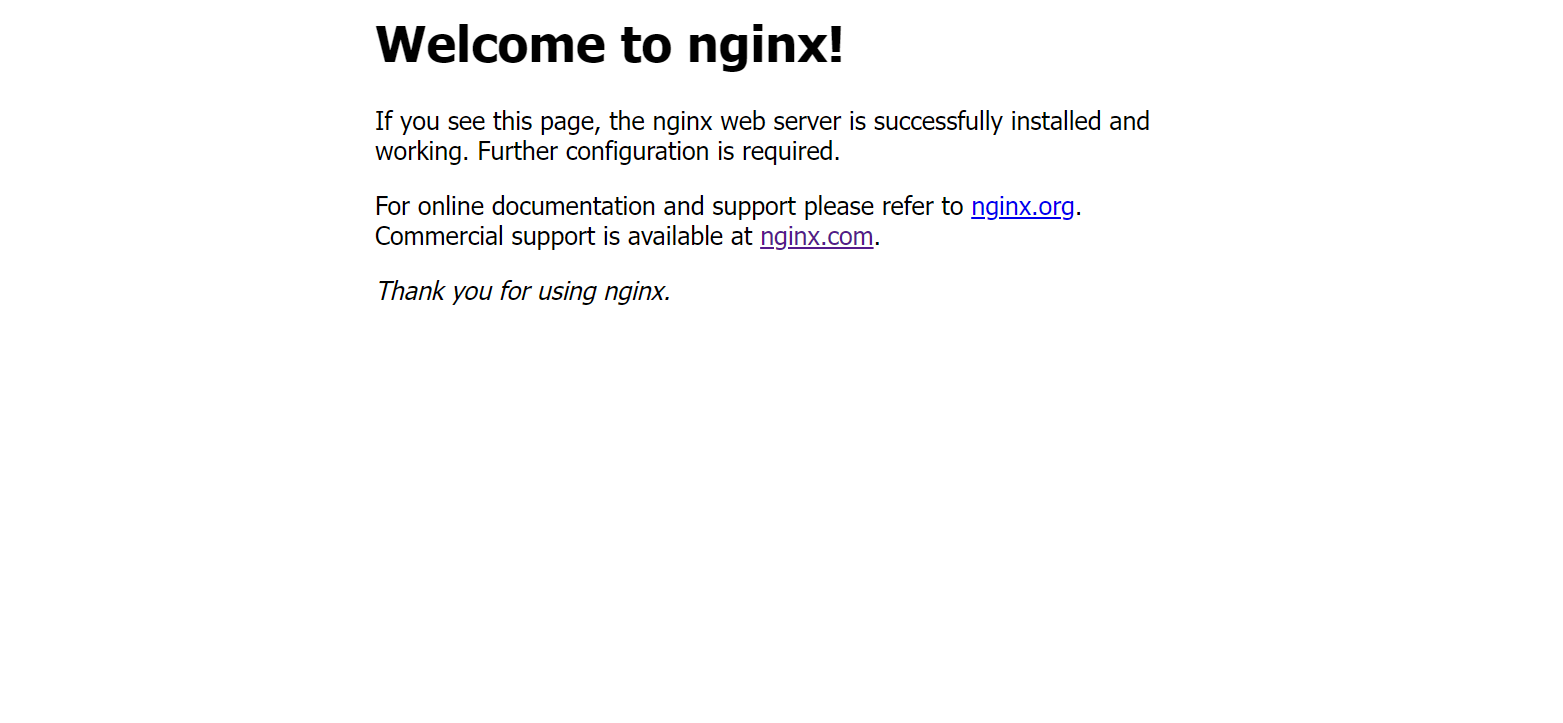

linux之kylin系统nginx的安装

一、nginx的作用 1.可做高性能的web服务器 直接处理静态资源(HTML/CSS/图片等),响应速度远超传统服务器类似apache支持高并发连接 2.反向代理服务器 隐藏后端服务器IP地址,提高安全性 3.负载均衡服务器 支持多种策略分发流量…...

Java多线程实现之Callable接口深度解析

Java多线程实现之Callable接口深度解析 一、Callable接口概述1.1 接口定义1.2 与Runnable接口的对比1.3 Future接口与FutureTask类 二、Callable接口的基本使用方法2.1 传统方式实现Callable接口2.2 使用Lambda表达式简化Callable实现2.3 使用FutureTask类执行Callable任务 三、…...

如何为服务器生成TLS证书

TLS(Transport Layer Security)证书是确保网络通信安全的重要手段,它通过加密技术保护传输的数据不被窃听和篡改。在服务器上配置TLS证书,可以使用户通过HTTPS协议安全地访问您的网站。本文将详细介绍如何在服务器上生成一个TLS证…...

基于Docker Compose部署Java微服务项目

一. 创建根项目 根项目(父项目)主要用于依赖管理 一些需要注意的点: 打包方式需要为 pom<modules>里需要注册子模块不要引入maven的打包插件,否则打包时会出问题 <?xml version"1.0" encoding"UTF-8…...

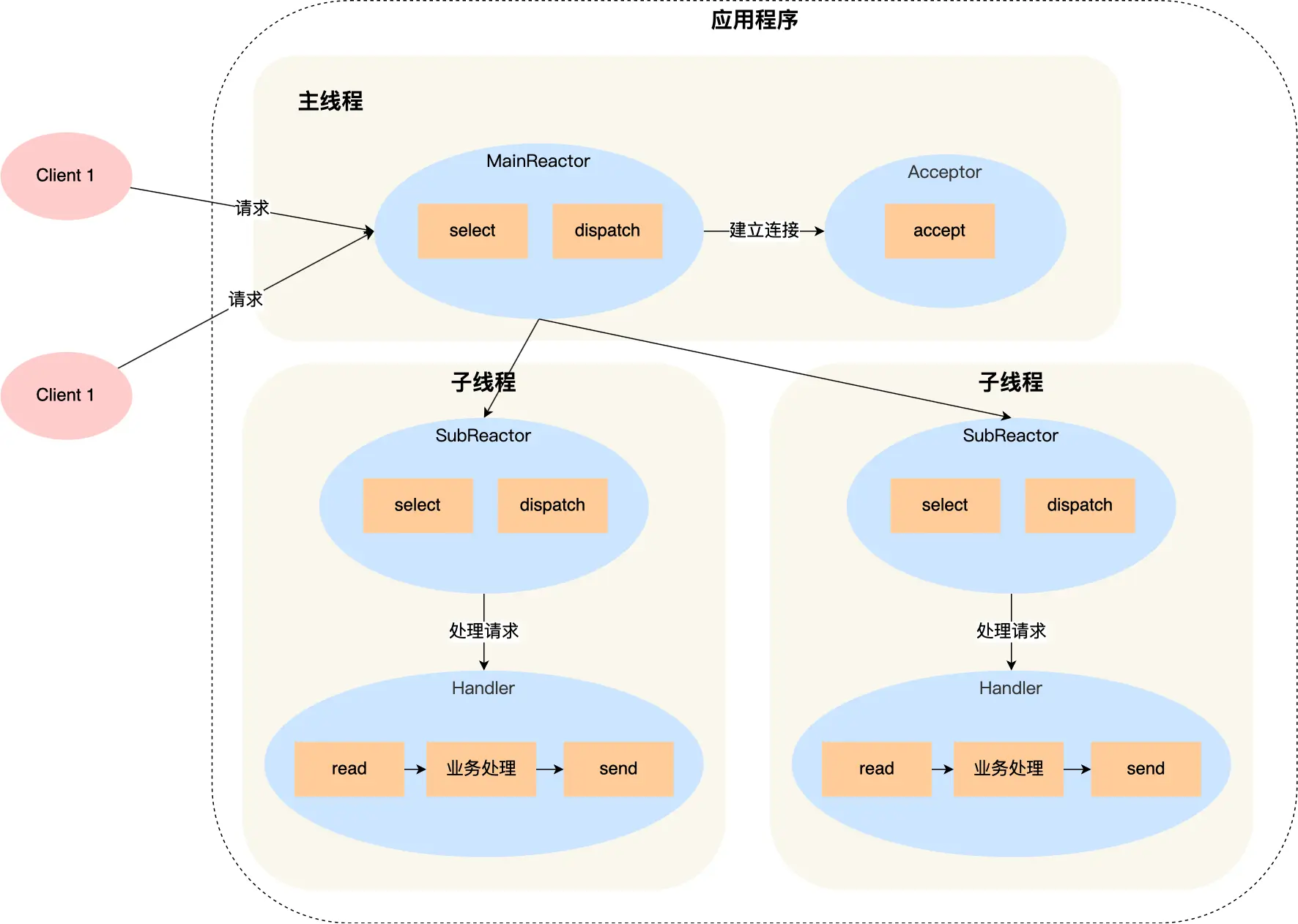

select、poll、epoll 与 Reactor 模式

在高并发网络编程领域,高效处理大量连接和 I/O 事件是系统性能的关键。select、poll、epoll 作为 I/O 多路复用技术的代表,以及基于它们实现的 Reactor 模式,为开发者提供了强大的工具。本文将深入探讨这些技术的底层原理、优缺点。 一、I…...

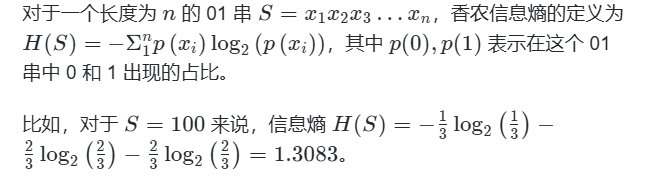

蓝桥杯3498 01串的熵

问题描述 对于一个长度为 23333333的 01 串, 如果其信息熵为 11625907.5798, 且 0 出现次数比 1 少, 那么这个 01 串中 0 出现了多少次? #include<iostream> #include<cmath> using namespace std;int n 23333333;int main() {//枚举 0 出现的次数//因…...

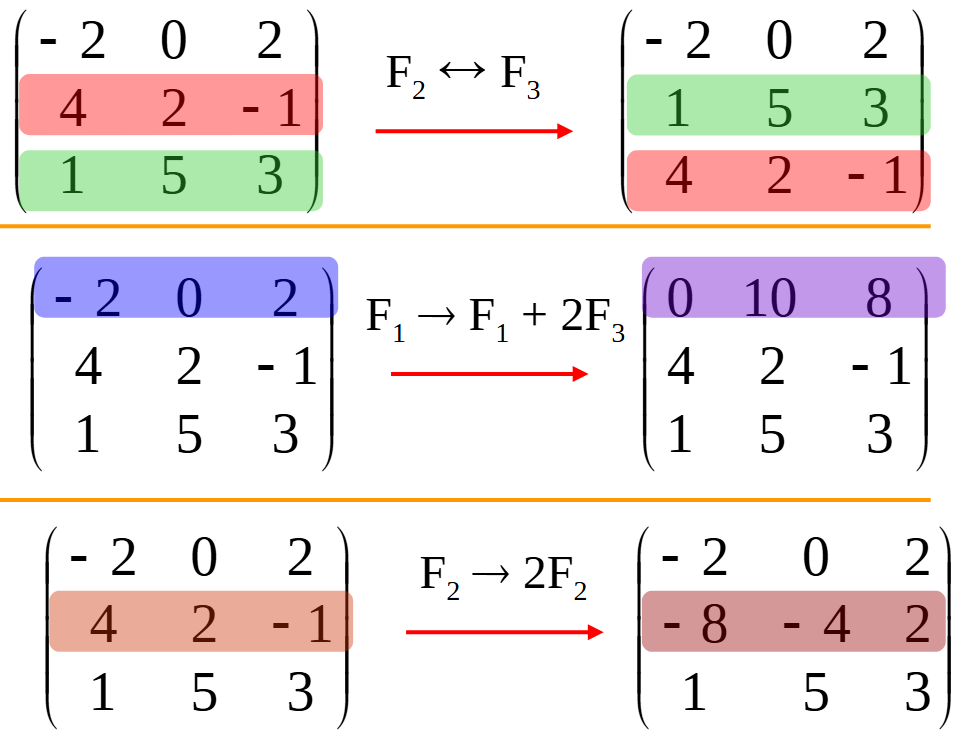

使用 SymPy 进行向量和矩阵的高级操作

在科学计算和工程领域,向量和矩阵操作是解决问题的核心技能之一。Python 的 SymPy 库提供了强大的符号计算功能,能够高效地处理向量和矩阵的各种操作。本文将深入探讨如何使用 SymPy 进行向量和矩阵的创建、合并以及维度拓展等操作,并通过具体…...

Mobile ALOHA全身模仿学习

一、题目 Mobile ALOHA:通过低成本全身远程操作学习双手移动操作 传统模仿学习(Imitation Learning)缺点:聚焦与桌面操作,缺乏通用任务所需的移动性和灵活性 本论文优点:(1)在ALOHA…...