Installing the GPU Driver, CUDA and cuDNN on a GPU Server


- 1. Installing the GPU driver
- 2. Installing CUDA
- 3. Installing cuDNN
- 4. Installing Docker
- 5. Installing nvidia-docker2
  - 5.1 On Ubuntu
  - 5.2 On CentOS
- 6. Testing GPU access from a Docker container

1. Installing the GPU driver

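- Check the GPU model first: `lspci | grep -i vga`. Data-center cards such as the Tesla T4 are listed as "3D controller" rather than "VGA compatible controller", so on a server `lspci | grep -i nvidia` is the more reliable filter.

- GPU driver download page: https://www.nvidia.cn/Download/index.aspx?lang=cn

- Once the driver is installed, `nvidia-smi` shows the driver version and the state of every GPU. On this server it lists eight Tesla T4 cards: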

```
root@hk-MZ32-AR0-00:~# nvidia-smi
Fri Feb 10 17:27:58 2023
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 460.106.00   Driver Version: 460.106.00   CUDA Version: 11.2     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  Tesla T4            Off  | 00000000:04:00.0 Off |                    0 |
| N/A   46C    P0    27W /  70W |      0MiB / 15109MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   1  Tesla T4            Off  | 00000000:06:00.0 Off |                    0 |
| N/A   43C    P0    28W /  70W |      0MiB / 15109MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   2  Tesla T4            Off  | 00000000:0D:00.0 Off |                    0 |
| N/A   48C    P0    28W /  70W |      0MiB / 15109MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   3  Tesla T4            Off  | 00000000:0F:00.0 Off |                    0 |
| N/A   45C    P0    26W /  70W |      0MiB / 15109MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   4  Tesla T4            Off  | 00000000:17:00.0 Off |                    0 |
| N/A   48C    P0    27W /  70W |      0MiB / 15109MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   5  Tesla T4            Off  | 00000000:19:00.0 Off |                    0 |
| N/A   48C    P0    28W /  70W |      0MiB / 15109MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   6  Tesla T4            Off  | 00000000:21:00.0 Off |                    0 |
| N/A   45C    P0    26W /  70W |      0MiB / 15109MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   7  Tesla T4            Off  | 00000000:23:00.0 Off |                    0 |
| N/A   45C    P0    27W /  70W |      0MiB / 15109MiB |      4%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
```
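The same information can also be pulled in a script-friendly form. A minimal sketch, assuming `lspci` (pciutils) and `nvidia-smi` are installed; the three function names are hypothetical helpers, and `count_gpus` simply counts the `GPU <n>:` lines that `nvidia-smi -L` prints, one per card:

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking the GPUs after the driver install.
# Assumes pciutils (lspci) and the NVIDIA driver (nvidia-smi) are installed.

# Tesla-class cards show up as "3D controller", not "VGA compatible controller",
# so filter on the vendor name instead of the device class.
list_nvidia_pci() {
  lspci | grep -i nvidia
}

# Reads `nvidia-smi -L` output on stdin and prints the number of GPUs.
# Each GPU appears on its own line as "GPU <index>: <model> (UUID: ...)".
count_gpus() {
  grep -c '^GPU [0-9][0-9]*:'
}

# One CSV line per GPU: index, model, driver version, total memory.
query_gpus() {
  nvidia-smi --query-gpu=index,name,driver_version,memory.total --format=csv,noheader
}
```

On the server above, `nvidia-smi -L | count_gpus` should print 8, and `query_gpus` prints one line per Tesla T4.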

2. Installing CUDA

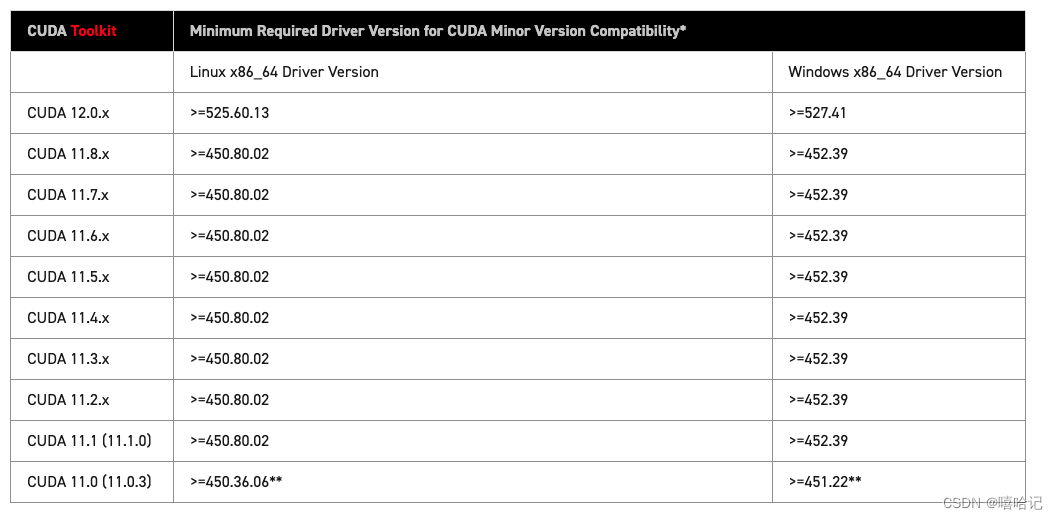

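- Which CUDA version you can install depends on the GPU driver: every CUDA release requires a minimum driver version.

- Reference document: see the screenshot above.

- The driver on this test machine is 460.106.00. The "CUDA Version: 11.2" in the nvidia-smi header is the newest CUDA release this driver supports, so any release up to 11.2 will work; I chose CUDA 11.0. The 11.0.2 runfile bundles driver 450.51.05, which is also the minimum driver version for that release.

- Download archive: https://developer.nvidia.com/cuda-toolkit-archive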
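The driver check in the list above, and the install step that the download transcript below stops short of, can be scripted. A sketch under these assumptions: GNU `sort -V` is available for version comparison, the minimum driver for CUDA 11.0.2 is 450.51.05 as in NVIDIA's compatibility table, and because a newer driver is already installed, the runfile is run with `--silent --toolkit` so its bundled 450.51.05 driver is skipped. All function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: compare the installed driver with a CUDA release's minimum driver version,
# then install only the toolkit from the runfile. Function names are hypothetical.

# Succeeds if dotted version $1 >= $2: the pair is already in ascending
# version order exactly when $2 <= $1 (GNU sort -V -C checks order quietly).
version_ge() {
  printf '%s\n%s\n' "$2" "$1" | sort -V -C
}

# Driver version of the first GPU, e.g. 460.106.00.
driver_version() {
  nvidia-smi --query-gpu=driver_version --format=csv,noheader | head -n 1
}

# Usage: check_driver_for_cuda 450.51.05
check_driver_for_cuda() {
  local required="$1" installed
  installed=$(driver_version)
  if [ -z "$installed" ]; then
    echo "could not read the driver version from nvidia-smi" >&2
    return 1
  fi
  if version_ge "$installed" "$required"; then
    echo "driver $installed >= $required: OK"
  else
    echo "driver $installed < $required: upgrade the driver or pick an older CUDA" >&2
    return 1
  fi
}

# Toolkit-only silent install (run as root), leaving the existing driver untouched.
install_cuda_toolkit() {
  sh "$1" --silent --toolkit
}

# Afterwards, per the post-installation steps of the CUDA install guide, add to ~/.bashrc:
#   export PATH=/usr/local/cuda/bin${PATH:+:${PATH}}
#   export LD_LIBRARY_PATH=/usr/local/cuda/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}
```

For example, after the download below: `check_driver_for_cuda 450.51.05 && install_cuda_toolkit cuda_11.0.2_450.51.05_linux.run`.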

```
root@hk-MZ32-AR0-00:~# wget http://developer.download.nvidia.com/compute/cuda/11.0.2/local_installers/cuda_11.0.2_450.51.05_linux.run
--2023-01-29 19:55:42-- http://developer.download.nvidia.com/compute/cuda/11.0.2/local_installers/cuda_11.0.2_450.51.05_linux.run
Resolving developer.download.nvidia.com (developer.download.nvidia.com)... 152.199.39.144
Connecting to developer.download.nvidia.com (developer.download.nvidia.com)|152.199.39.144|:80... connected.
HTTP request sent, awaiting response... 301 Moved Permanently
Location: https://developer.download.nvidia.com/compute/cuda/11.0.2/local_installers/cuda_11.0.2_450.51.05_linux.run [following]
--2023-01-29 19:55:43-- https://developer.download.nvidia.com/compute/cuda/11.0.2/local_installers/cuda_11.0.2_450.51.05_linux.run
Connecting to developer.download.nvidia.com (developer.download.nvidia.com)|152.199.39.144|:443... connected.
HTTP request sent, awaiting response... 301 Moved Permanently
Location: https://developer.download.nvidia.cn/compute/cuda/11.0.2/local_installers/cuda_11.0.2_450.51.05_linux.run [following]
--2023-01-29 19:55:44-- https://developer.download.nvidia.cn/compute/cuda/11.0.2/local_installers/cuda_11.0.2_450.51.05_linux.run
Resolving developer.download.nvidia.cn (developer.download.nvidia.cn)... 125.64.2.195, 125.64.2.196, 150.138.231.66, ...
Connecting to developer.download.nvidia.cn (developer.download.nvidia.cn)|125.64.2.195|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 3066694836 (2.9G) [application/octet-stream]
Saving to: ‘cuda_11.0.2_450.51.05_linux.run’

100%[=====================================================================================================================================>] 3,066,694,836 11.3MB/s in 4m 25s

2023-01-29 20:00:15 (11.0 MB/s) - ‘cuda_11.0.2_450.51.05_linux.run’ saved [3066694836/3066694836]
```

3. Installing cuDNN

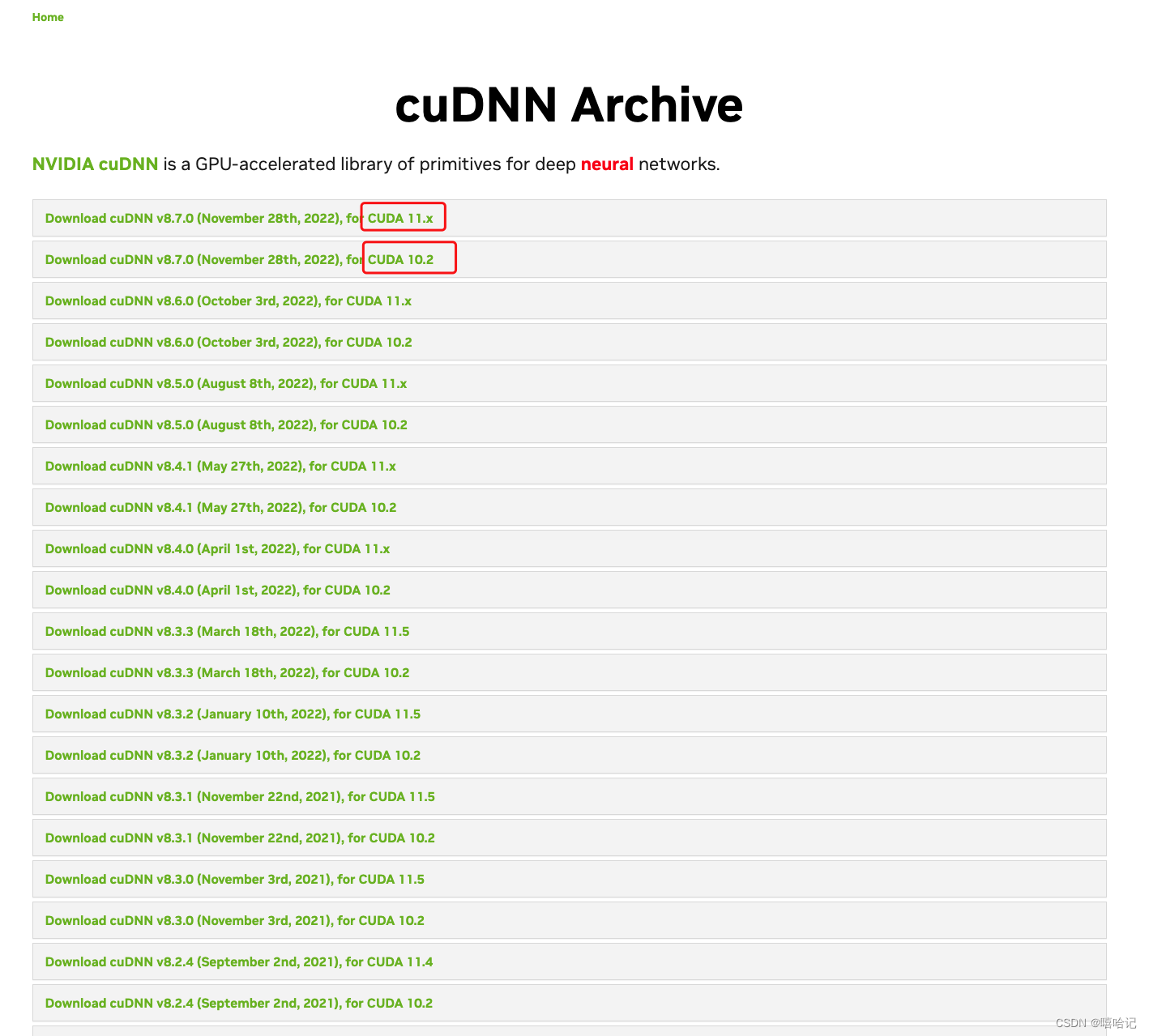

- Download archive: https://developer.nvidia.com/rdp/cudnn-archive

- The cuDNN build must also match the CUDA version; the version to pick is marked by the red box in the image above.
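A quick way to catch a mismatch before copying anything is to compare the CUDA major version in the archive name with the one `nvcc` reports. A sketch, assuming the `_cuda<major>-archive` naming used by this download and `nvcc --version` output of the form `Cuda compilation tools, release 11.0, V11.0.194`; `nvcc` lives in /usr/local/cuda/bin, which may not be on PATH yet. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: check that a cuDNN tarball targets the installed CUDA major version.

# cudnn-linux-x86_64-8.7.0.84_cuda11-archive.tar.xz -> 11 (empty if the name does not match)
cudnn_archive_cuda_major() {
  printf '%s\n' "$1" | sed -n 's/.*_cuda\([0-9][0-9]*\)-archive.*/\1/p'
}

# Reads `nvcc --version` output on stdin:
# "Cuda compilation tools, release 11.0, V11.0.194" -> 11
cuda_major_from_nvcc() {
  sed -n 's/.*release \([0-9][0-9]*\)\..*/\1/p'
}

installed_cuda_major() {
  nvcc --version | cuda_major_from_nvcc
}

# Usage: check_cudnn_archive cudnn-linux-x86_64-8.7.0.84_cuda11-archive.tar.xz
check_cudnn_archive() {
  local want have
  want=$(cudnn_archive_cuda_major "$1")
  have=$(installed_cuda_major)
  if [ -n "$want" ] && [ "$want" = "$have" ]; then
    echo "OK: $1 targets CUDA $want"
  else
    echo "mismatch: archive targets CUDA '$want', installed CUDA is '$have'" >&2
    return 1
  fi
}
```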

Upload the archive to the server (here with `rz` from lrzsz, over ZMODEM), then extract it:

```
root@hk-MZ32-AR0-00:~# rz
ZMODEM Session started
------------------------
Sent cudnn-linux-x86_64-8.7.0.84_cuda11-archive.tar.xz
```

```
root@hk-MZ32-AR0-00:~# tar -xvf cudnn-linux-x86_64-8.7.0.84_cuda11-archive.tar.xz
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_adv_infer_static.a
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_adv_infer_static_v8.a
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_adv_train_static.a
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_adv_train_static_v8.a
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_cnn_infer_static.a
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_cnn_infer_static_v8.a
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_cnn_train_static.a
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_cnn_train_static_v8.a
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_ops_infer_static.a
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_ops_infer_static_v8.a
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_ops_train_static.a
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_ops_train_static_v8.a
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn.so.8
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn.so
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn.so.8.7.0
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_adv_infer.so.8
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_adv_infer.so
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_adv_infer.so.8.7.0
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_adv_train.so.8
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_adv_train.so
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_adv_train.so.8.7.0
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_cnn_infer.so
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_cnn_infer.so.8
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_cnn_infer.so.8.7.0
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_cnn_train.so
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_cnn_train.so.8.7.0
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_cnn_train.so.8
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_ops_infer.so.8.7.0
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_ops_infer.so
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_ops_infer.so.8
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_ops_train.so.8.7.0
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_ops_train.so
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/libcudnn_ops_train.so.8
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/cudnn_v8.h
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/cudnn_adv_infer_v8.h
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/cudnn_adv_train_v8.h
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/cudnn_backend_v8.h
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/cudnn_cnn_infer_v8.h
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/cudnn_cnn_train_v8.h
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/cudnn_ops_infer_v8.h
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/cudnn_ops_train_v8.h
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/cudnn_version_v8.h
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/cudnn.h
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/cudnn_adv_infer.h
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/cudnn_adv_train.h
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/cudnn_backend.h
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/cudnn_cnn_infer.h
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/cudnn_cnn_train.h
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/cudnn_ops_infer.h
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/cudnn_ops_train.h
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/cudnn_version.h
cudnn-linux-x86_64-8.7.0.84_cuda11-archive/LICENSE
```

```
root@hk-MZ32-AR0-00:~# ll cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/
total 2520176
drwxr-xr-x 2 25503 2174 4096 Nov 22 04:14 ./
drwxr-xr-x 4 25503 2174 4096 Nov 22 04:14 ../
lrwxrwxrwx 1 25503 2174 23 Nov 22 03:58 libcudnn_adv_infer.so -> libcudnn_adv_infer.so.8*
lrwxrwxrwx 1 25503 2174 27 Nov 22 03:58 libcudnn_adv_infer.so.8 -> libcudnn_adv_infer.so.8.7.0*
-rwxr-xr-x 1 25503 2174 130381904 Nov 22 03:58 libcudnn_adv_infer.so.8.7.0*
-rw-r--r-- 1 25503 2174 132979922 Nov 22 03:58 libcudnn_adv_infer_static.a
lrwxrwxrwx 1 25503 2174 27 Nov 22 03:58 libcudnn_adv_infer_static_v8.a -> libcudnn_adv_infer_static.a
lrwxrwxrwx 1 25503 2174 23 Nov 22 03:58 libcudnn_adv_train.so -> libcudnn_adv_train.so.8*
lrwxrwxrwx 1 25503 2174 27 Nov 22 03:58 libcudnn_adv_train.so.8 -> libcudnn_adv_train.so.8.7.0*
-rwxr-xr-x 1 25503 2174 121095120 Nov 22 03:58 libcudnn_adv_train.so.8.7.0*
-rw-r--r-- 1 25503 2174 123566296 Nov 22 03:58 libcudnn_adv_train_static.a
lrwxrwxrwx 1 25503 2174 27 Nov 22 03:58 libcudnn_adv_train_static_v8.a -> libcudnn_adv_train_static.a
lrwxrwxrwx 1 25503 2174 23 Nov 22 03:58 libcudnn_cnn_infer.so -> libcudnn_cnn_infer.so.8*
lrwxrwxrwx 1 25503 2174 27 Nov 22 03:58 libcudnn_cnn_infer.so.8 -> libcudnn_cnn_infer.so.8.7.0*
-rwxr-xr-x 1 25503 2174 639185544 Nov 22 03:58 libcudnn_cnn_infer.so.8.7.0*
-rw-r--r-- 1 25503 2174 829548950 Nov 22 03:58 libcudnn_cnn_infer_static.a
lrwxrwxrwx 1 25503 2174 27 Nov 22 03:58 libcudnn_cnn_infer_static_v8.a -> libcudnn_cnn_infer_static.a
lrwxrwxrwx 1 25503 2174 23 Nov 22 03:58 libcudnn_cnn_train.so -> libcudnn_cnn_train.so.8*
lrwxrwxrwx 1 25503 2174 27 Nov 22 03:58 libcudnn_cnn_train.so.8 -> libcudnn_cnn_train.so.8.7.0*
-rwxr-xr-x 1 25503 2174 102197000 Nov 22 03:58 libcudnn_cnn_train.so.8.7.0*
-rw-r--r-- 1 25503 2174 153525776 Nov 22 03:58 libcudnn_cnn_train_static.a
lrwxrwxrwx 1 25503 2174 27 Nov 22 03:58 libcudnn_cnn_train_static_v8.a -> libcudnn_cnn_train_static.a
lrwxrwxrwx 1 25503 2174 23 Nov 22 03:58 libcudnn_ops_infer.so -> libcudnn_ops_infer.so.8*
lrwxrwxrwx 1 25503 2174 27 Nov 22 03:58 libcudnn_ops_infer.so.8 -> libcudnn_ops_infer.so.8.7.0*
-rwxr-xr-x 1 25503 2174 97489336 Nov 22 03:58 libcudnn_ops_infer.so.8.7.0*
-rw-r--r-- 1 25503 2174 100636906 Nov 22 03:58 libcudnn_ops_infer_static.a
lrwxrwxrwx 1 25503 2174 27 Nov 22 03:58 libcudnn_ops_infer_static_v8.a -> libcudnn_ops_infer_static.a
lrwxrwxrwx 1 25503 2174 23 Nov 22 03:58 libcudnn_ops_train.so -> libcudnn_ops_train.so.8*
lrwxrwxrwx 1 25503 2174 27 Nov 22 03:58 libcudnn_ops_train.so.8 -> libcudnn_ops_train.so.8.7.0*
-rwxr-xr-x 1 25503 2174 74703096 Nov 22 03:58 libcudnn_ops_train.so.8.7.0*
-rw-r--r-- 1 25503 2174 75156862 Nov 22 03:58 libcudnn_ops_train_static.a
lrwxrwxrwx 1 25503 2174 27 Nov 22 03:58 libcudnn_ops_train_static_v8.a -> libcudnn_ops_train_static.a
lrwxrwxrwx 1 25503 2174 13 Nov 22 03:58 libcudnn.so -> libcudnn.so.8*
lrwxrwxrwx 1 25503 2174 17 Nov 22 03:58 libcudnn.so.8 -> libcudnn.so.8.7.0*
-rwxr-xr-x 1 25503 2174 150200 Nov 22 03:58 libcudnn.so.8.7.0*
root@hk-MZ32-AR0-00:~# ll cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/
total 448
drwxr-xr-x 2 25503 2174 4096 Nov 22 04:14 ./
drwxr-xr-x 4 25503 2174 4096 Nov 22 04:14 ../
-rw-r--r-- 1 25503 2174 29025 Nov 22 03:58 cudnn_adv_infer.h
-rw-r--r-- 1 25503 2174 29025 Nov 22 03:58 cudnn_adv_infer_v8.h
-rw-r--r-- 1 25503 2174 27700 Nov 22 03:58 cudnn_adv_train.h
-rw-r--r-- 1 25503 2174 27700 Nov 22 03:58 cudnn_adv_train_v8.h
-rw-r--r-- 1 25503 2174 24727 Nov 22 03:58 cudnn_backend.h
-rw-r--r-- 1 25503 2174 24727 Nov 22 03:58 cudnn_backend_v8.h
-rw-r--r-- 1 25503 2174 29083 Nov 22 03:58 cudnn_cnn_infer.h
-rw-r--r-- 1 25503 2174 29083 Nov 22 03:58 cudnn_cnn_infer_v8.h
-rw-r--r-- 1 25503 2174 10217 Nov 22 03:58 cudnn_cnn_train.h
-rw-r--r-- 1 25503 2174 10217 Nov 22 03:58 cudnn_cnn_train_v8.h
-rw-r--r-- 1 25503 2174 2968 Nov 22 03:58 cudnn.h
-rw-r--r-- 1 25503 2174 49631 Nov 22 03:58 cudnn_ops_infer.h
-rw-r--r-- 1 25503 2174 49631 Nov 22 03:58 cudnn_ops_infer_v8.h
-rw-r--r-- 1 25503 2174 25733 Nov 22 03:58 cudnn_ops_train.h
-rw-r--r-- 1 25503 2174 25733 Nov 22 03:58 cudnn_ops_train_v8.h
-rw-r--r-- 1 25503 2174 2968 Nov 22 03:58 cudnn_v8.h
-rw-r--r-- 1 25503 2174 3113 Nov 22 03:58 cudnn_version.h
-rw-r--r-- 1 25503 2174 3113 Nov 22 03:58 cudnn_version_v8.h
```

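Copy the libraries and headers into the CUDA installation (`-P` keeps the symlinks as symlinks instead of copying the files they point to):

```
root@hk-MZ32-AR0-00:~# cp -P cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/* /usr/local/cuda/lib64/
root@hk-MZ32-AR0-00:~# cp -P cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/* /usr/local/cuda/include/
```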
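NVIDIA's cuDNN 8 installation guide performs the same two copies for the tar-file install and then runs `chmod a+r` on the copied headers and libraries so that non-root users can read them. A sketch wrapping those steps into one hypothetical function; its optional second argument (the CUDA root, defaulting to /usr/local/cuda) is my own addition, not part of the guide:

```shell
#!/usr/bin/env bash
# Sketch of the cuDNN tar-file install (hypothetical helper).
# $1: extracted archive directory; $2: CUDA install root (default /usr/local/cuda).
install_cudnn_tarball() {
  local src="$1" cuda_home="${2:-/usr/local/cuda}"
  if [ ! -d "$src/include" ] || [ ! -d "$src/lib" ]; then
    echo "not an extracted cuDNN archive: $src" >&2
    return 1
  fi
  mkdir -p "$cuda_home/include" "$cuda_home/lib64" || return 1
  # Headers are plain files.
  cp "$src"/include/cudnn*.h "$cuda_home/include/" || return 1
  # -P copies libcudnn.so -> libcudnn.so.8 -> libcudnn.so.8.7.0 as symlinks, not three full copies.
  cp -P "$src"/lib/libcudnn* "$cuda_home/lib64/" || return 1
  # Make the headers and libraries readable by every user (chmod follows the symlinks).
  chmod a+r "$cuda_home"/include/cudnn*.h "$cuda_home"/lib64/libcudnn*
}
```

Run as root, `install_cudnn_tarball cudnn-linux-x86_64-8.7.0.84_cuda11-archive` reproduces the two copies above plus the permission fix.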

Check that the files are now in /usr/local/cuda; the entry counts match the archive (33 library entries, 18 headers):

```
root@hk-MZ32-AR0-00:~# ll /usr/local/cuda/lib64/libcudnn*
lrwxrwxrwx 1 root root 23 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_adv_infer.so -> libcudnn_adv_infer.so.8*
lrwxrwxrwx 1 root root 27 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_adv_infer.so.8 -> libcudnn_adv_infer.so.8.7.0*
-rwxr-xr-x 1 root root 130381904 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_adv_infer.so.8.7.0*
-rw-r--r-- 1 root root 132979922 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_adv_infer_static.a
lrwxrwxrwx 1 root root 27 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_adv_infer_static_v8.a -> libcudnn_adv_infer_static.a
lrwxrwxrwx 1 root root 23 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_adv_train.so -> libcudnn_adv_train.so.8*
lrwxrwxrwx 1 root root 27 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_adv_train.so.8 -> libcudnn_adv_train.so.8.7.0*
-rwxr-xr-x 1 root root 121095120 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_adv_train.so.8.7.0*
-rw-r--r-- 1 root root 123566296 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_adv_train_static.a
lrwxrwxrwx 1 root root 27 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_adv_train_static_v8.a -> libcudnn_adv_train_static.a
lrwxrwxrwx 1 root root 23 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_cnn_infer.so -> libcudnn_cnn_infer.so.8*
lrwxrwxrwx 1 root root 27 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_cnn_infer.so.8 -> libcudnn_cnn_infer.so.8.7.0*
-rwxr-xr-x 1 root root 639185544 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_cnn_infer.so.8.7.0*
-rw-r--r-- 1 root root 829548950 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_cnn_infer_static.a
lrwxrwxrwx 1 root root 27 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_cnn_infer_static_v8.a -> libcudnn_cnn_infer_static.a
lrwxrwxrwx 1 root root 23 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_cnn_train.so -> libcudnn_cnn_train.so.8*
lrwxrwxrwx 1 root root 27 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_cnn_train.so.8 -> libcudnn_cnn_train.so.8.7.0*
-rwxr-xr-x 1 root root 102197000 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_cnn_train.so.8.7.0*
-rw-r--r-- 1 root root 153525776 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_cnn_train_static.a
lrwxrwxrwx 1 root root 27 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_cnn_train_static_v8.a -> libcudnn_cnn_train_static.a
lrwxrwxrwx 1 root root 23 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_ops_infer.so -> libcudnn_ops_infer.so.8*
lrwxrwxrwx 1 root root 27 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_ops_infer.so.8 -> libcudnn_ops_infer.so.8.7.0*
-rwxr-xr-x 1 root root 97489336 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_ops_infer.so.8.7.0*
-rw-r--r-- 1 root root 100636906 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_ops_infer_static.a
lrwxrwxrwx 1 root root 27 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_ops_infer_static_v8.a -> libcudnn_ops_infer_static.a
lrwxrwxrwx 1 root root 23 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_ops_train.so -> libcudnn_ops_train.so.8*
lrwxrwxrwx 1 root root 27 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_ops_train.so.8 -> libcudnn_ops_train.so.8.7.0*
-rwxr-xr-x 1 root root 74703096 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_ops_train.so.8.7.0*
-rw-r--r-- 1 root root 75156862 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_ops_train_static.a
lrwxrwxrwx 1 root root 27 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn_ops_train_static_v8.a -> libcudnn_ops_train_static.a
lrwxrwxrwx 1 root root 13 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn.so -> libcudnn.so.8*
lrwxrwxrwx 1 root root 17 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn.so.8 -> libcudnn.so.8.7.0*
-rwxr-xr-x 1 root root 150200 Feb 10 17:39 /usr/local/cuda/lib64/libcudnn.so.8.7.0*
root@hk-MZ32-AR0-00:~# ll /usr/local/cuda/lib64/libcudnn* | wc -l
33
root@hk-MZ32-AR0-00:~# ll cudnn-linux-x86_64-8.7.0.84_cuda11-archive/
include/ lib/ LICENSE
root@hk-MZ32-AR0-00:~# ll cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib/* |wc -l
33
root@hk-MZ32-AR0-00:~# ll /usr/local/cuda/include/cudn*
-rw-r--r-- 1 root root 29025 Feb 10 17:39 /usr/local/cuda/include/cudnn_adv_infer.h
-rw-r--r-- 1 root root 29025 Feb 10 17:39 /usr/local/cuda/include/cudnn_adv_infer_v8.h
-rw-r--r-- 1 root root 27700 Feb 10 17:39 /usr/local/cuda/include/cudnn_adv_train.h
-rw-r--r-- 1 root root 27700 Feb 10 17:39 /usr/local/cuda/include/cudnn_adv_train_v8.h
-rw-r--r-- 1 root root 24727 Feb 10 17:39 /usr/local/cuda/include/cudnn_backend.h
-rw-r--r-- 1 root root 24727 Feb 10 17:39 /usr/local/cuda/include/cudnn_backend_v8.h
-rw-r--r-- 1 root root 29083 Feb 10 17:39 /usr/local/cuda/include/cudnn_cnn_infer.h
-rw-r--r-- 1 root root 29083 Feb 10 17:39 /usr/local/cuda/include/cudnn_cnn_infer_v8.h
-rw-r--r-- 1 root root 10217 Feb 10 17:39 /usr/local/cuda/include/cudnn_cnn_train.h
-rw-r--r-- 1 root root 10217 Feb 10 17:39 /usr/local/cuda/include/cudnn_cnn_train_v8.h
-rw-r--r-- 1 root root 2968 Feb 10 17:39 /usr/local/cuda/include/cudnn.h
-rw-r--r-- 1 root root 49631 Feb 10 17:39 /usr/local/cuda/include/cudnn_ops_infer.h
-rw-r--r-- 1 root root 49631 Feb 10 17:39 /usr/local/cuda/include/cudnn_ops_infer_v8.h
-rw-r--r-- 1 root root 25733 Feb 10 17:39 /usr/local/cuda/include/cudnn_ops_train.h
-rw-r--r-- 1 root root 25733 Feb 10 17:39 /usr/local/cuda/include/cudnn_ops_train_v8.h
-rw-r--r-- 1 root root 2968 Feb 10 17:39 /usr/local/cuda/include/cudnn_v8.h
-rw-r--r-- 1 root root 3113 Feb 10 17:39 /usr/local/cuda/include/cudnn_version.h
-rw-r--r-- 1 root root 3113 Feb 10 17:39 /usr/local/cuda/include/cudnn_version_v8.h
root@hk-MZ32-AR0-00:~# ll /usr/local/cuda/include/cudn* |wc -l
18
root@hk-MZ32-AR0-00:~# ll cudnn-linux-x86_64-8.7.0.84_cuda11-archive/include/* | wc -l
18
```
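Matching `wc -l` counts show that the same number of entries exist, not that they are identical. A stricter sketch compares every file byte-for-byte (and every symlink's target), then reads the installed version from `cudnn_version.h`, where cuDNN 8 defines `CUDNN_MAJOR`, `CUDNN_MINOR` and `CUDNN_PATCHLEVEL`. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical verification helpers for the cuDNN copy.

# verify_copy SRC DST: every entry of SRC must exist in DST with the same
# content (regular files) or the same link target (symlinks).
verify_copy() {
  local src="$1" dst="$2" f name rc=0
  for f in "$src"/*; do
    name=$(basename "$f")
    if [ -L "$f" ]; then
      if [ ! -L "$dst/$name" ] || [ "$(readlink "$f")" != "$(readlink "$dst/$name")" ]; then
        echo "symlink differs: $name"
        rc=1
      fi
    elif ! cmp -s "$f" "$dst/$name"; then
      # cmp also fails when the destination file is missing.
      echo "content differs: $name"
      rc=1
    fi
  done
  return "$rc"
}

# Prints MAJOR.MINOR.PATCHLEVEL from a cuDNN 8 version header; fails if none is found.
cudnn_version() {
  awk '$1 == "#define" && $2 == "CUDNN_MAJOR"      { major = $3 }
       $1 == "#define" && $2 == "CUDNN_MINOR"      { minor = $3 }
       $1 == "#define" && $2 == "CUDNN_PATCHLEVEL" { patch = $3 }
       END { if (major == "") exit 1; print major "." minor "." patch }' \
    "${1:-/usr/local/cuda/include/cudnn_version.h}"
}
```

For example, `verify_copy cudnn-linux-x86_64-8.7.0.84_cuda11-archive/lib /usr/local/cuda/lib64 && cudnn_version` prints nothing for the copy check and then 8.7.0 for this archive.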

4. Installing Docker

```
root@hk-MZ32-AR0-00:~# curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -
root@hk-MZ32-AR0-00:~# add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"
root@hk-MZ32-AR0-00:~# apt-get -y install docker-ce
```
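The three commands above can be gathered into one function. This is a sketch that assumes a root shell on Ubuntu and adds two steps the transcript does not show: the prerequisite packages commonly listed in mirror install instructions, and enabling the service at boot. `apt-key` still works on 18.04/20.04 but is deprecated on newer releases. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of the Docker CE install via the Aliyun mirror used above (run as root on Ubuntu).
# Helper names are hypothetical.

DOCKER_MIRROR=https://mirrors.aliyun.com/docker-ce/linux/ubuntu

# apt source line for an Ubuntu codename ("bionic", "focal", ...) and an architecture.
docker_repo_line() {
  echo "deb [arch=${2:-amd64}] $DOCKER_MIRROR $1 stable"
}

install_docker_ce() {
  apt-get update
  # Packages needed to fetch the key and use an HTTPS repository.
  apt-get install -y apt-transport-https ca-certificates curl software-properties-common
  curl -fsSL "$DOCKER_MIRROR/gpg" | apt-key add -
  add-apt-repository "$(docker_repo_line "$(lsb_release -cs)")"
  apt-get update
  apt-get install -y docker-ce
  # Start Docker now and on every boot.
  systemctl enable --now docker
}
```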

5. Installing nvidia-docker2

5.1 On Ubuntu
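The transcript below starts by writing the repository list. NVIDIA's legacy nvidia-docker install instructions also import the repository's GPG key first; the `apt-get update` output below shows no signature errors, so the key had presumably already been added on this machine. A sketch of the whole sequence, run as root; `distribution_id`, `install_nvidia_docker2` and `has_nvidia_runtime` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch following NVIDIA's legacy nvidia-docker2 steps for Ubuntu (run as root).

# Builds the "ubuntu18.04"-style id used in the repository URLs from an os-release file.
# Sourcing happens in a subshell so ID/VERSION_ID do not leak into the caller.
distribution_id() {
  ( . "${1:-/etc/os-release}"; echo "$ID$VERSION_ID" )
}

install_nvidia_docker2() {
  local dist
  dist=$(distribution_id)
  curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | apt-key add -
  curl -s -L "https://nvidia.github.io/nvidia-docker/$dist/nvidia-docker.list" \
    > /etc/apt/sources.list.d/nvidia-docker.list
  apt-get update
  apt-get install -y nvidia-docker2
  # nvidia-docker2 registers the "nvidia" runtime in /etc/docker/daemon.json; restart to load it.
  systemctl restart docker
}

# Succeeds if `docker info` lists the nvidia runtime.
has_nvidia_runtime() {
  docker info 2>/dev/null | grep -i 'runtimes:' | grep -qw nvidia
}
```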

```
root@hk-MZ32-AR0-00:~# curl -s -L https://nvidia.github.io/nvidia-docker/$(. /etc/os-release;echo $ID$VERSION_ID)/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list
deb https://nvidia.github.io/libnvidia-container/stable/ubuntu18.04/$(ARCH) /
#deb https://nvidia.github.io/libnvidia-container/experimental/ubuntu18.04/$(ARCH) /
deb https://nvidia.github.io/nvidia-container-runtime/stable/ubuntu18.04/$(ARCH) /
#deb https://nvidia.github.io/nvidia-container-runtime/experimental/ubuntu18.04/$(ARCH) /
deb https://nvidia.github.io/nvidia-docker/ubuntu18.04/$(ARCH) /
root@hk-MZ32-AR0-00:~# apt-get update
Hit:1 http://mirrors.aliyun.com/ubuntu bionic InRelease
Hit:2 https://mirrors.aliyun.com/docker-ce/linux/ubuntu focal InRelease
Get:3 http://mirrors.aliyun.com/ubuntu bionic-security InRelease [88.7 kB]
Hit:4 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic InRelease
Get:5 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates InRelease [88.7 kB]
Get:6 http://mirrors.aliyun.com/ubuntu bionic-updates InRelease [88.7 kB]
Get:7 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-backports InRelease [83.3 kB]
Get:8 https://nvidia.github.io/libnvidia-container/stable/ubuntu18.04/amd64 InRelease [1,484 B]
Hit:9 https://packages.microsoft.com/ubuntu/18.04/prod bionic InRelease
Get:10 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-security InRelease [88.7 kB]
Get:11 http://mirrors.aliyun.com/ubuntu bionic-proposed InRelease [242 kB]
Get:12 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-proposed InRelease [242 kB]
Hit:13 http://ppa.launchpad.net/graphics-drivers/ppa/ubuntu focal InRelease
Hit:14 https://linux.teamviewer.com/deb stable InRelease
Get:15 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main i386 Packages [1,604 kB]
Get:16 http://mirrors.aliyun.com/ubuntu bionic-backports InRelease [83.3 kB]
Get:17 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 Packages [2,909 kB]
Get:18 http://mirrors.aliyun.com/ubuntu bionic-security/main amd64 DEP-11 Metadata [76.8 kB]
Get:19 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/main amd64 DEP-11 Metadata [297 kB]
Get:20 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/universe amd64 DEP-11 Metadata [302 kB]
Get:21 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-updates/multiverse amd64 DEP-11 Metadata [2,468 B]
Get:22 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-backports/main amd64 DEP-11 Metadata [8,108 B]
Get:23 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-backports/universe amd64 DEP-11 Metadata [10.0 kB]
Get:24 https://nvidia.github.io/libnvidia-container/stable/ubuntu22.04/amd64 InRelease [1,484 B]
Get:25 http://mirrors.aliyun.com/ubuntu bionic-security/universe amd64 DEP-11 Metadata [61.0 kB]
Get:26 http://mirrors.aliyun.com/ubuntu bionic-security/multiverse amd64 DEP-11 Metadata [2,464 B]
Get:27 http://mirrors.aliyun.com/ubuntu bionic-updates/main amd64 Packages [2,909 kB]
Get:28 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-security/main amd64 DEP-11 Metadata [76.8 kB]
Get:29 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-security/universe amd64 DEP-11 Metadata [61.1 kB]
Get:30 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-security/multiverse amd64 DEP-11 Metadata [2,464 B]
Get:31 https://nvidia.github.io/nvidia-container-runtime/stable/ubuntu22.04/amd64 InRelease [1,481 B]
Get:32 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-proposed/main Sources [81.3 kB]
Get:33 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-proposed/main Translation-en [38.8 kB]
Get:34 https://nvidia.github.io/nvidia-docker/ubuntu22.04/amd64 InRelease [1,474 B]
Get:35 https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic-proposed/main amd64 DEP-11 Metadata [6,552 B]
Get:36 https://nvidia.github.io/libnvidia-container/stable/ubuntu18.04/amd64 Packages [22.3 kB]
Get:37 https://nvidia.github.io/libnvidia-container/stable/ubuntu22.04/amd64 Packages [22.3 kB]
Get:38 https://nvidia.github.io/nvidia-container-runtime/stable/ubuntu22.04/amd64 Packages [7,416 B]
Get:39 https://nvidia.github.io/nvidia-docker/ubuntu22.04/amd64 Packages [4,488 B]
Get:40 http://mirrors.aliyun.com/ubuntu bionic-updates/main i386 Packages [1,604 kB]
Get:41 http://mirrors.aliyun.com/ubuntu bionic-updates/main amd64 DEP-11 Metadata [297 kB]
Get:42 http://mirrors.aliyun.com/ubuntu bionic-updates/universe amd64 DEP-11 Metadata [302 kB]
Get:43 http://mirrors.aliyun.com/ubuntu bionic-updates/multiverse amd64 DEP-11 Metadata [2,468 B]
Get:44 http://mirrors.aliyun.com/ubuntu bionic-proposed/main Sources [81.3 kB]
Get:45 http://mirrors.aliyun.com/ubuntu bionic-proposed/main Translation-en [38.8 kB]
Get:46 http://mirrors.aliyun.com/ubuntu bionic-proposed/main amd64 DEP-11 Metadata [6,516 B]
Get:47 http://mirrors.aliyun.com/ubuntu bionic-backports/main amd64 DEP-11 Metadata [8,092 B]
Get:48 http://mirrors.aliyun.com/ubuntu bionic-backports/universe amd64 DEP-11 Metadata [10.1 kB]
Fetched 11.9 MB in 11s (1,115 kB/s)
Reading package lists... Done
```

```
root@test:/etc/apt/sources.list.d#
root@test:/etc/apt/sources.list.d# apt-get install nvidia-docker2
Reading package lists... Done
Building dependency tree
Reading state information... Done
The following packages were automatically installed and are no longer required:
  libevent-2.1-7 libnatpmp1 libxvmc1 transmission-common
Use 'apt autoremove' to remove them.
The following additional packages will be installed:
  libnvidia-container-tools libnvidia-container1 nvidia-container-toolkit nvidia-container-toolkit-base
The following NEW packages will be installed:
  libnvidia-container-tools libnvidia-container1 nvidia-container-toolkit nvidia-container-toolkit-base nvidia-docker2
0 upgraded, 5 newly installed, 0 to remove and 80 not upgraded.
Need to get 3,773 kB of archives.
After this operation, 14.6 MB of additional disk space will be used.
Do you want to continue? [Y/n] y
Get:1 https://nvidia.github.io/libnvidia-container/stable/ubuntu18.04/amd64 libnvidia-container1 1.12.0-1 [927 kB]
Get:2 https://nvidia.github.io/libnvidia-container/stable/ubuntu18.04/amd64 libnvidia-container-tools 1.12.0-1 [24.5 kB]
Get:3 https://nvidia.github.io/libnvidia-container/stable/ubuntu18.04/amd64 nvidia-container-toolkit-base 1.12.0-1 [2,066 kB]
Get:4 https://nvidia.github.io/libnvidia-container/stable/ubuntu18.04/amd64 nvidia-container-toolkit 1.12.0-1 [750 kB]
Get:5 https://nvidia.github.io/libnvidia-container/stable/ubuntu18.04/amd64 nvidia-docker2 2.12.0-1 [5,544 B]
Fetched 3,773 kB in 2min 13s (28.3 kB/s)
Selecting previously unselected package libnvidia-container1:amd64.
(Reading database ... 202374 files and directories currently installed.)
Preparing to unpack .../libnvidia-container1_1.12.0-1_amd64.deb ...
Unpacking libnvidia-container1:amd64 (1.12.0-1) ...
Selecting previously unselected package libnvidia-container-tools.
Preparing to unpack .../libnvidia-container-tools_1.12.0-1_amd64.deb ...
Unpacking libnvidia-container-tools (1.12.0-1) ...
Selecting previously unselected package nvidia-container-toolkit-base.
Preparing to unpack .../nvidia-container-toolkit-base_1.12.0-1_amd64.deb ...
Unpacking nvidia-container-toolkit-base (1.12.0-1) ...
Selecting previously unselected package nvidia-container-toolkit.
Preparing to unpack .../nvidia-container-toolkit_1.12.0-1_amd64.deb ...
Unpacking nvidia-container-toolkit (1.12.0-1) ...
Selecting previously unselected package nvidia-docker2.
Preparing to unpack .../nvidia-docker2_2.12.0-1_all.deb ...
Unpacking nvidia-docker2 (2.12.0-1) ...
Setting up nvidia-container-toolkit-base (1.12.0-1) ...
Setting up libnvidia-container1:amd64 (1.12.0-1) ...
Setting up libnvidia-container-tools (1.12.0-1) ...
Setting up nvidia-container-toolkit (1.12.0-1) ...
Setting up nvidia-docker2 (2.12.0-1) ...
Processing triggers for libc-bin (2.31-0ubuntu9.7) ...
root@hk-MZ32-AR0-00:~# systemctl restart docker
```

5.2 On CentOS
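The yum command below assumes the nvidia-docker repository file is already in place (its output fetches signatures for the libnvidia-container, nvidia-container-runtime and nvidia-docker repos), but the transcript does not show that step. A sketch of it following NVIDIA's legacy instructions, plus the Docker restart that the Ubuntu section performs, run as root; the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch for CentOS 7 (run as root). Helper names are hypothetical.

# Repository-file URL for a distribution id such as "centos7".
nvidia_docker_repo_url() {
  echo "https://nvidia.github.io/nvidia-docker/$1/nvidia-docker.repo"
}

install_nvidia_docker2_centos() {
  local dist
  # Command substitution runs in a subshell, so sourcing os-release does not leak variables.
  dist=$(. /etc/os-release; echo "$ID$VERSION_ID")
  curl -s -L "$(nvidia_docker_repo_url "$dist")" > /etc/yum.repos.d/nvidia-docker.repo
  yum install -y nvidia-docker2
  # Reload Docker so it picks up the nvidia runtime.
  systemctl restart docker
}
```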

[root@bj ~]# sudo yum install -y nvidia-docker2

Loaded plugins: fastestmirror, product-id, search-disabled-repos, subscription-managerThis system is not registered with an entitlement server. You can use subscription-manager to register.Loading mirror speeds from cached hostfile

epel/x86_64/metalink | 6.2 kB 00:00:00 * base: mirrors.163.com* epel: mirrors.bfsu.edu.cn* extras: mirrors.ustc.edu.cn* updates: mirrors.ustc.edu.cn

base | 3.6 kB 00:00:00

docker-ce-stable | 3.5 kB 00:00:00

extras | 2.9 kB 00:00:00

libnvidia-container/x86_64/signature | 833 B 00:00:00

Retrieving key from https://nvidia.github.io/libnvidia-container/gpgkey

Importing GPG key 0xF796ECB0:Userid : "NVIDIA CORPORATION (Open Source Projects) <cudatools@nvidia.com>"Fingerprint: c95b 321b 61e8 8c18 09c4 f759 ddca e044 f796 ecb0From : https://nvidia.github.io/libnvidia-container/gpgkey

libnvidia-container/x86_64/signature | 2.1 kB 00:00:00 !!!

nvidia-container-runtime/x86_64/signature | 833 B 00:00:00

Retrieving key from https://nvidia.github.io/nvidia-container-runtime/gpgkey

Importing GPG key 0xF796ECB0:Userid : "NVIDIA CORPORATION (Open Source Projects) <cudatools@nvidia.com>"Fingerprint: c95b 321b 61e8 8c18 09c4 f759 ddca e044 f796 ecb0From : https://nvidia.github.io/nvidia-container-runtime/gpgkey

nvidia-container-runtime/x86_64/signature | 2.1 kB 00:00:00 !!!

nvidia-docker/x86_64/signature | 833 B 00:00:00

Retrieving key from https://nvidia.github.io/nvidia-docker/gpgkey

Importing GPG key 0xF796ECB0:Userid : "NVIDIA CORPORATION (Open Source Projects) <cudatools@nvidia.com>"Fingerprint: c95b 321b 61e8 8c18 09c4 f759 ddca e044 f796 ecb0From : https://nvidia.github.io/nvidia-docker/gpgkey

nvidia-docker/x86_64/signature | 2.1 kB 00:00:00 !!!

teamviewer/x86_64/signature | 867 B 00:00:00

teamviewer/x86_64/signature | 2.5 kB 00:00:00 !!!

updates | 2.9 kB 00:00:00

(1/3): nvidia-container-runtime/x86_64/primary | 11 kB 00:00:01

(2/3): nvidia-docker/x86_64/primary | 8.0 kB 00:00:01

(3/3): libnvidia-container/x86_64/primary | 27 kB 00:00:03

libnvidia-container 171/171

nvidia-container-runtime 71/71

nvidia-docker 54/54

Resolving Dependencies

--> Running transaction check

---> Package nvidia-docker2.noarch 0:2.11.0-1 will be installed

--> Processing Dependency: nvidia-container-toolkit >= 1.10.0-1 for package: nvidia-docker2-2.11.0-1.noarch

--> Running transaction check

---> Package nvidia-container-toolkit.x86_64 0:1.11.0-1 will be installed

--> Processing Dependency: nvidia-container-toolkit-base = 1.11.0-1 for package: nvidia-container-toolkit-1.11.0-1.x86_64

--> Processing Dependency: libnvidia-container-tools < 2.0.0 for package: nvidia-container-toolkit-1.11.0-1.x86_64

--> Processing Dependency: libnvidia-container-tools >= 1.11.0-1 for package: nvidia-container-toolkit-1.11.0-1.x86_64

--> Running transaction check

---> Package libnvidia-container-tools.x86_64 0:1.11.0-1 will be installed

--> Processing Dependency: libnvidia-container1(x86-64) >= 1.11.0-1 for package: libnvidia-container-tools-1.11.0-1.x86_64

--> Processing Dependency: libnvidia-container.so.1(NVC_1.0)(64bit) for package: libnvidia-container-tools-1.11.0-1.x86_64

--> Processing Dependency: libnvidia-container.so.1()(64bit) for package: libnvidia-container-tools-1.11.0-1.x86_64

---> Package nvidia-container-toolkit-base.x86_64 0:1.11.0-1 will be installed

--> Running transaction check

---> Package libnvidia-container1.x86_64 0:1.11.0-1 will be installed

--> Finished Dependency ResolutionDependencies Resolved=================================================================================================================================================================================Package Arch Version Repository Size

=================================================================================================================================================================================

Installing:nvidia-docker2 noarch 2.11.0-1 libnvidia-container 8.7 k

Installing for dependencies:libnvidia-container-tools x86_64 1.11.0-1 libnvidia-container 50 klibnvidia-container1 x86_64 1.11.0-1 libnvidia-container 1.0 Mnvidia-container-toolkit x86_64 1.11.0-1 libnvidia-container 780 knvidia-container-toolkit-base x86_64 1.11.0-1 libnvidia-container 2.5 MTransaction Summary

=================================================================================================================================================================================

Install 1 Package (+4 Dependent packages)Total download size: 4.3 M

Installed size: 12 M

Downloading packages:

(1/5): libnvidia-container-tools-1.11.0-1.x86_64.rpm | 50 kB 00:00:01

(2/5): libnvidia-container1-1.11.0-1.x86_64.rpm | 1.0 MB 00:00:03

(3/5): nvidia-container-toolkit-1.11.0-1.x86_64.rpm | 780 kB 00:00:03

(4/5): nvidia-docker2-2.11.0-1.noarch.rpm | 8.7 kB 00:00:00

(5/5): nvidia-container-toolkit-base-1.11.0-1.x86_64.rpm | 2.5 MB 00:00:43

---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Total 94 kB/s | 4.3 MB 00:00:46

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : nvidia-container-toolkit-base-1.11.0-1.x86_64 1/5

Installing : libnvidia-container1-1.11.0-1.x86_64 2/5

Installing : libnvidia-container-tools-1.11.0-1.x86_64 3/5

Installing : nvidia-container-toolkit-1.11.0-1.x86_64 4/5

Installing : nvidia-docker2-2.11.0-1.noarch 5/5

Verifying : libnvidia-container1-1.11.0-1.x86_64 1/5

Verifying : nvidia-container-toolkit-base-1.11.0-1.x86_64 2/5

Verifying : nvidia-container-toolkit-1.11.0-1.x86_64 3/5

Verifying : libnvidia-container-tools-1.11.0-1.x86_64 4/5

Verifying : nvidia-docker2-2.11.0-1.noarch 5/5

Installed:

nvidia-docker2.noarch 0:2.11.0-1

Dependency Installed:

libnvidia-container-tools.x86_64 0:1.11.0-1 libnvidia-container1.x86_64 0:1.11.0-1 nvidia-container-toolkit.x86_64 0:1.11.0-1 nvidia-container-toolkit-base.x86_64 0:1.11.0-1

Complete!

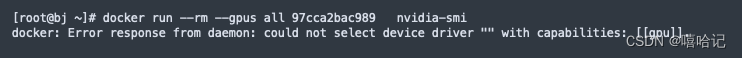

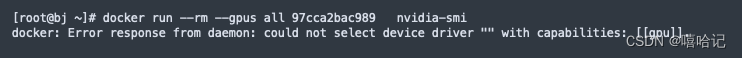
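After installing nvidia-docker2 and restarting Docker (`systemctl restart docker`), one quick check is whether Docker now lists a runtime named `nvidia`; as far as I know, nvidia-docker2 registers that runtime in `/etc/docker/daemon.json`. The sketch below assumes a Docker version whose `docker info --format` template exposes `.Runtimes`, and the helper name `nvidia_runtime_registered` is hypothetical. The helper only parses text, so it can be tried without a GPU.

```shell
#!/usr/bin/env bash
# Sketch: check whether Docker lists a runtime named "nvidia".
# Assumption: nvidia-docker2 registers that runtime in /etc/docker/daemon.json,
# and the Docker daemon only picks it up after `systemctl restart docker`.
# nvidia_runtime_registered is a hypothetical helper name.

# Reads the JSON printed by `docker info --format '{{json .Runtimes}}'` on stdin
# and succeeds only if it contains an "nvidia" key.
nvidia_runtime_registered() {
  grep -q '"nvidia"[[:space:]]*:'
}

# Usage on the GPU host (requires a running Docker daemon):
#   docker info --format '{{json .Runtimes}}' | nvidia_runtime_registered \
#     && echo "nvidia runtime registered" \
#     || echo "nvidia runtime missing: check /etc/docker/daemon.json, then restart docker"
```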

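- On CentOS, nvidia-docker2 must first be installed with yum as shown above. Although that already pulls in nvidia-container-toolkit as a dependency, using the GPU inside a container still failed with an error here, so nvidia-container-toolkit was then updated: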
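The repository file fetched in the commands that follow is chosen from `/etc/os-release`: `$ID$VERSION_ID` expands to `centos7` on CentOS 7, which matches the `centos7` path in the printed `baseurl`. The sketch below wraps that lookup in a helper so the URL can be inspected before `tee` writes anything into `/etc/yum.repos.d/`; `nvidia_repo_url` is a hypothetical name, not part of any NVIDIA tool.

```shell
#!/usr/bin/env bash
# Sketch: build the libnvidia-container repo URL the same way as the
# `distribution=$(. /etc/os-release;echo $ID$VERSION_ID)` one-liner.
# nvidia_repo_url is a hypothetical helper name.

# $1: path to an os-release file (defaults to /etc/os-release)
nvidia_repo_url() {
  (
    # Source in a subshell so ID/VERSION_ID do not leak into the caller;
    # `exit` here only leaves the subshell.
    . "${1:-/etc/os-release}" || exit 1
    echo "https://nvidia.github.io/libnvidia-container/${ID}${VERSION_ID}/libnvidia-container.repo"
  )
}

# Usage: print the URL, then look at the repo file before installing it
#   url=$(nvidia_repo_url) && echo "$url"
#   curl -s -L "$url"
```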

# Set up the yum repository: nvidia-container-toolkit.repo

[root@bj ~]# distribution=$(. /etc/os-release;echo $ID$VERSION_ID) \

> && curl -s -L https://nvidia.github.io/libnvidia-container/$distribution/libnvidia-container.repo | tee /etc/yum.repos.d/nvidia-container-toolkit.repo

[libnvidia-container]

name=libnvidia-container

baseurl=https://nvidia.github.io/libnvidia-container/stable/centos7/$basearch

repo_gpgcheck=1

gpgcheck=0

enabled=1

gpgkey=https://nvidia.github.io/libnvidia-container/gpgkey

sslverify=1

sslcacert=/etc/pki/tls/certs/ca-bundle.crt

[libnvidia-container-experimental]

name=libnvidia-container-experimental

baseurl=https://nvidia.github.io/libnvidia-container/experimental/centos7/$basearch

repo_gpgcheck=1

gpgcheck=0

enabled=0

gpgkey=https://nvidia.github.io/libnvidia-container/gpgkey

sslverify=1

sslcacert=/etc/pki/tls/certs/ca-bundle.crt

[root@bj ~]# yum install -y nvidia-container-toolkit

Loaded plugins: fastestmirror, product-id, search-disabled-repos, subscription-manager

This system is not registered with an entitlement server. You can use subscription-manager to register.

Repository libnvidia-container is listed more than once in the configuration

Repository libnvidia-container-experimental is listed more than once in the configuration

Loading mirror speeds from cached hostfile

 * base: mirrors.ustc.edu.cn

 * epel: mirrors.ustc.edu.cn

 * extras: mirrors.ustc.edu.cn

 * updates: mirrors.ustc.edu.cn

Resolving Dependencies

--> Running transaction check

---> Package nvidia-container-toolkit.x86_64 0:1.11.0-1 will be updated

---> Package nvidia-container-toolkit.x86_64 0:1.12.0-0.1.rc.3 will be an update

--> Processing Dependency: nvidia-container-toolkit-base = 1.12.0-0.1.rc.3 for package: nvidia-container-toolkit-1.12.0-0.1.rc.3.x86_64

--> Processing Dependency: libnvidia-container-tools >= 1.12.0-0.1.rc.3 for package: nvidia-container-toolkit-1.12.0-0.1.rc.3.x86_64

--> Running transaction check

---> Package libnvidia-container-tools.x86_64 0:1.11.0-1 will be updated

---> Package libnvidia-container-tools.x86_64 0:1.12.0-0.1.rc.3 will be an update

--> Processing Dependency: libnvidia-container1(x86-64) >= 1.12.0-0.1.rc.3 for package: libnvidia-container-tools-1.12.0-0.1.rc.3.x86_64

---> Package nvidia-container-toolkit-base.x86_64 0:1.11.0-1 will be updated

---> Package nvidia-container-toolkit-base.x86_64 0:1.12.0-0.1.rc.3 will be an update

--> Running transaction check

---> Package libnvidia-container1.x86_64 0:1.11.0-1 will be updated

---> Package libnvidia-container1.x86_64 0:1.12.0-0.1.rc.3 will be an update

--> Finished Dependency Resolution

Dependencies Resolved

=================================================================================================================================================================================

Package Arch Version Repository Size

=================================================================================================================================================================================

Updating:

nvidia-container-toolkit x86_64 1.12.0-0.1.rc.3 libnvidia-container-experimental 797 k

Updating for dependencies:

libnvidia-container-tools x86_64 1.12.0-0.1.rc.3 libnvidia-container-experimental 50 k

libnvidia-container1 x86_64 1.12.0-0.1.rc.3 libnvidia-container-experimental 1.0 M

nvidia-container-toolkit-base x86_64 1.12.0-0.1.rc.3 libnvidia-container-experimental 3.4 M

Transaction Summary

=================================================================================================================================================================================

Upgrade 1 Package (+3 Dependent packages)

Total download size: 5.2 M

Downloading packages:

Delta RPMs disabled because /usr/bin/applydeltarpm not installed.

(1/4): libnvidia-container-tools-1.12.0-0.1.rc.3.x86_64.rpm | 50 kB 00:00:00

(2/4): nvidia-container-toolkit-1.12.0-0.1.rc.3.x86_64.rpm | 797 kB 00:00:00

(3/4): libnvidia-container1-1.12.0-0.1.rc.3.x86_64.rpm | 1.0 MB 00:00:02

(4/4): nvidia-container-toolkit-base-1.12.0-0.1.rc.3.x86_64.rpm | 3.4 MB 00:00:00

---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Total 2.0 MB/s | 5.2 MB 00:00:02

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Updating : nvidia-container-toolkit-base-1.12.0-0.1.rc.3.x86_64 1/8

Updating : libnvidia-container1-1.12.0-0.1.rc.3.x86_64 2/8

Updating : libnvidia-container-tools-1.12.0-0.1.rc.3.x86_64 3/8

Updating : nvidia-container-toolkit-1.12.0-0.1.rc.3.x86_64 4/8

Cleanup : nvidia-container-toolkit-1.11.0-1.x86_64 5/8

Cleanup : libnvidia-container-tools-1.11.0-1.x86_64 6/8

Cleanup : libnvidia-container1-1.11.0-1.x86_64 7/8

Cleanup : nvidia-container-toolkit-base-1.11.0-1.x86_64 8/8

Verifying : libnvidia-container1-1.12.0-0.1.rc.3.x86_64 1/8

Verifying : nvidia-container-toolkit-base-1.12.0-0.1.rc.3.x86_64 2/8

Verifying : libnvidia-container-tools-1.12.0-0.1.rc.3.x86_64 3/8

Verifying : nvidia-container-toolkit-1.12.0-0.1.rc.3.x86_64 4/8

Verifying : libnvidia-container-tools-1.11.0-1.x86_64 5/8

Verifying : nvidia-container-toolkit-base-1.11.0-1.x86_64 6/8

Verifying : nvidia-container-toolkit-1.11.0-1.x86_64 7/8

Verifying : libnvidia-container1-1.11.0-1.x86_64 8/8

Updated:

nvidia-container-toolkit.x86_64 0:1.12.0-0.1.rc.3

Dependency Updated:

libnvidia-container-tools.x86_64 0:1.12.0-0.1.rc.3 libnvidia-container1.x86_64 0:1.12.0-0.1.rc.3 nvidia-container-toolkit-base.x86_64 0:1.12.0-0.1.rc.3

Complete!

[root@bj ~]# systemctl restart docker
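Two details in the yum output above are worth checking afterwards. The warning `Repository libnvidia-container is listed more than once in the configuration` means two `.repo` files under `/etc/yum.repos.d/` define the same section, presumably the file added earlier for nvidia-docker2 and the new `nvidia-container-toolkit.repo`. The update was also resolved from `libnvidia-container-experimental`, i.e. a release candidate (`1.12.0-0.1.rc.3`), although the newly written file sets `enabled=0` for that section, so the active definition is presumably the other one. The sketch below lists duplicated repo sections and checks that the four container-toolkit packages share one version-release. That second check is a heuristic and stricter than yum itself: the output only requires `nvidia-container-toolkit-base` to match exactly, with `>=` for the libraries. Both helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: post-update sanity checks for the NVIDIA container packages on CentOS.
# duplicate_repo_ids and toolkit_versions_consistent are hypothetical helper names.

# Print every [section] id that appears more than once across the .repo files
# in a directory (default /etc/yum.repos.d). Duplicates are what trigger
# "Repository ... is listed more than once in the configuration".
duplicate_repo_ids() {
  local dir="${1:-/etc/yum.repos.d}"
  grep -h '^\[' "$dir"/*.repo | sed 's/^\[\([^]]*\)].*$/\1/' | sort | uniq -d
}

# Read "NAME VERSION-RELEASE" lines on stdin and succeed only if no package is
# missing and every package except nvidia-docker2 reports the same version.
# (nvidia-docker2 is versioned separately, e.g. 2.11.0-1.)
toolkit_versions_consistent() {
  awk '
    $1 == "package" { missing = 1; next }   # rpm: "package X is not installed"
    $1 != "nvidia-docker2" { seen[$2] = 1 }
    END {
      n = 0
      for (v in seen) n++
      exit ((missing || n != 1) ? 1 : 0)
    }'
}

# Usage on the CentOS host:
#   duplicate_repo_ids
#   rpm -q --qf '%{NAME} %{VERSION}-%{RELEASE}\n' nvidia-docker2 \
#       nvidia-container-toolkit nvidia-container-toolkit-base \
#       libnvidia-container-tools libnvidia-container1 \
#     | toolkit_versions_consistent && echo "toolkit packages consistent"
```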

6. Test GPU access from a Docker container

root@hk-MZ32-AR0-00:~# docker run --rm --gpus all 97cca2bac989 nvidia-smi

Sat Feb 11 07:13:48 2023

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 460.106.00 Driver Version: 460.106.00 CUDA Version: 11.2 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 Tesla T4 Off | 00000000:04:00.0 Off | 0 |

| N/A 47C P0 27W / 70W | 0MiB / 15109MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 1 Tesla T4 Off | 00000000:06:00.0 Off | 0 |

| N/A 43C P0 28W / 70W | 0MiB / 15109MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 2 Tesla T4 Off | 00000000:0D:00.0 Off | 0 |

| N/A 49C P0 28W / 70W | 0MiB / 15109MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 3 Tesla T4 Off | 00000000:0F:00.0 Off | 0 |

| N/A 45C P0 26W / 70W | 0MiB / 15109MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 4 Tesla T4 Off | 00000000:17:00.0 Off | 0 |

| N/A 48C P0 27W / 70W | 0MiB / 15109MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 5 Tesla T4 Off | 00000000:19:00.0 Off | 0 |

| N/A 49C P0 28W / 70W | 0MiB / 15109MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 6 Tesla T4 Off | 00000000:21:00.0 Off | 0 |

| N/A 45C P0 26W / 70W | 0MiB / 15109MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 7 Tesla T4 Off | 00000000:23:00.0 Off | 0 |

| N/A 45C P0 28W / 70W | 0MiB / 15109MiB | 5% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+
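The container sees all eight Tesla T4s (GPUs 0 to 7). To script this test, the sketch below counts the `GPU <n>:` lines printed by `nvidia-smi -L` on the host and inside a container, then compares them. `count_gpus` and `IMAGE` are hypothetical names; `IMAGE` stands in for whatever image you test with (this article used a local image ID, `97cca2bac989`). The `--gpus 2` and `--gpus '"device=0,1"'` forms in the usage comments follow Docker's documented `--gpus` syntax for exposing only some GPUs.

```shell
#!/usr/bin/env bash
# Sketch: compare the GPU count on the host with the count inside a container.
# count_gpus and IMAGE are hypothetical names used for illustration.

# Count the "GPU <index>: ..." lines that `nvidia-smi -L` prints on stdin.
count_gpus() {
  # grep -c prints 0 and exits 1 when nothing matches; keep the 0, ignore the status
  grep -c '^GPU [0-9]' || true
}

# Usage on the GPU host:
#   IMAGE=97cca2bac989   # placeholder: the local image ID used in this article
#   host=$(nvidia-smi -L | count_gpus)
#   ctr=$(docker run --rm --gpus all "$IMAGE" nvidia-smi -L | count_gpus)
#   [ "$host" -eq "$ctr" ] && echo "container sees all $host GPUs"
#
# Exposing only some GPUs to the container:
#   docker run --rm --gpus 2 "$IMAGE" nvidia-smi -L               # any two GPUs
#   docker run --rm --gpus '"device=0,1"' "$IMAGE" nvidia-smi -L  # GPUs 0 and 1
```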

相关文章:

GPU服务器安装显卡驱动、CUDA和cuDNN

GPU服务器安装cuda和cudnn1. 服务器驱动安装2. cuda安装3. cudNN安装4. 安装docker环境5. 安装nvidia-docker25.1 ubuntu系统安装5.2 centos系统安装6. 测试docker容调用GPU服务1. 服务器驱动安装 显卡驱动下载地址https://www.nvidia.cn/Download/index.aspx?langcn显卡驱动…...

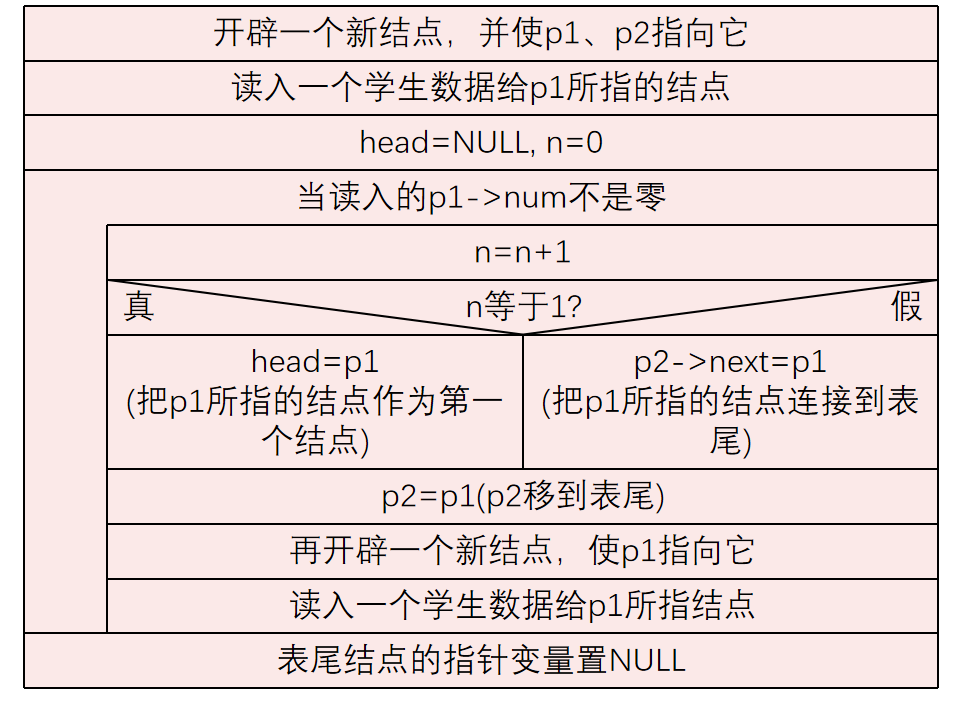

结构体变量

C语言允许用户自己建立由不同类型数据组成的组合型的数据结构,它称为结构体(structre)。 在程序中建立一个结构体类型: 1.结构体 建立结构体 struct Student { int num; //学号为整型 char name[20]; //姓名为字符串 char se…...

Java 多态

文章目录1、多态的介绍2、多态的格式3、对象的强制类型转换4、instanceof 运算符5、案例:笔记本USB接口1、多态的介绍 多态(Polymorphism)按字面意思理解就是“多种形态”,即一个对象拥有多种形态。 即同一种方法可以根据发送对…...

九龙证券|一夜暴跌36%,美股走势分化,标普指数创近2月最差周度表现

当地时间2月10日,美股三大指数收盘涨跌纷歧。道指涨0.5%,标普500指数涨0.22%,纳指跌0.61%。 受国际油价明显上升影响,动力板块领涨,埃克森美孚、康菲石油涨超4%。大型科技股走低,特斯拉、英伟达跌约5%。热门…...

【数据库】 mysql用户授权详解

目录 MySQL用户授权 一,密码策略 1,查看临时密码 2,查看数据库当前密码策略: 二, 用户授权和撤销授权 1、创建用户 2,删除用户 3,授权和回收权限 MySQL用户授权 一,密码策略…...

【性能】性能测试理论篇_学习笔记_2023/2/11

性能测试的目的验证系统是否能满足用户提出的性能指标发现性能瓶颈,优化系统整体性能性能测试的分类注:这些测试类型其实是密切相关,甚至无法区别的,例如几乎所有的测试都有并发测试。在实际中不用纠结具体的概念。而是要明确测试…...

C语言(输入printf()函数)

printf()的细节操作很多,对于现阶段的朋友来说,主要还是以理解为主。因为很多的确很难用到。 目录 一.转换说明(占位符) 二.printf()转换说明修饰符 1.数字 2.%数字1.数字2 3.整型转换字符补充 4.标记 -符号 符号 空格符…...

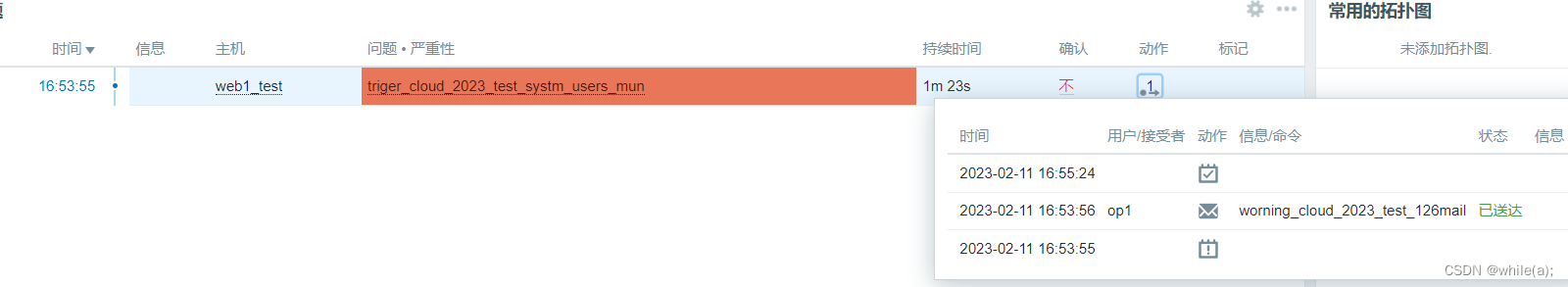

Zabbix 构建监控告警平台(四)

Zabbix ActionZabbix Macros1.Zabbix Action 1.1动作Action简介 当某个触发器状态发生改变(如Problem、OK),可以采取相应的动作,如: 执行远程命令 邮件,短信,微信告警,电话 1.2告警实验简介 1. 创建告警media type&…...

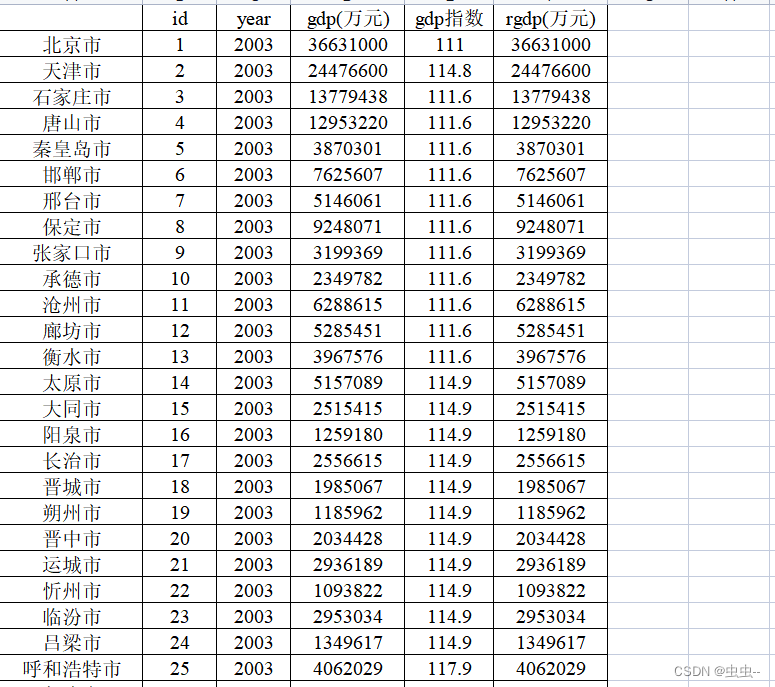

2004-2019年285个地级市实际GDP与名义GDP

2004-2019年285个地级市实际GDP和名义GDP 1、时间:2004-2019年 2、范围:285个地级市 3、说明:GDP平减指数采用地级市所在省份当年平减指数 4、代码: "gen rgdp gdp if year 2003 gen rgdp gdp if year 2003" re…...

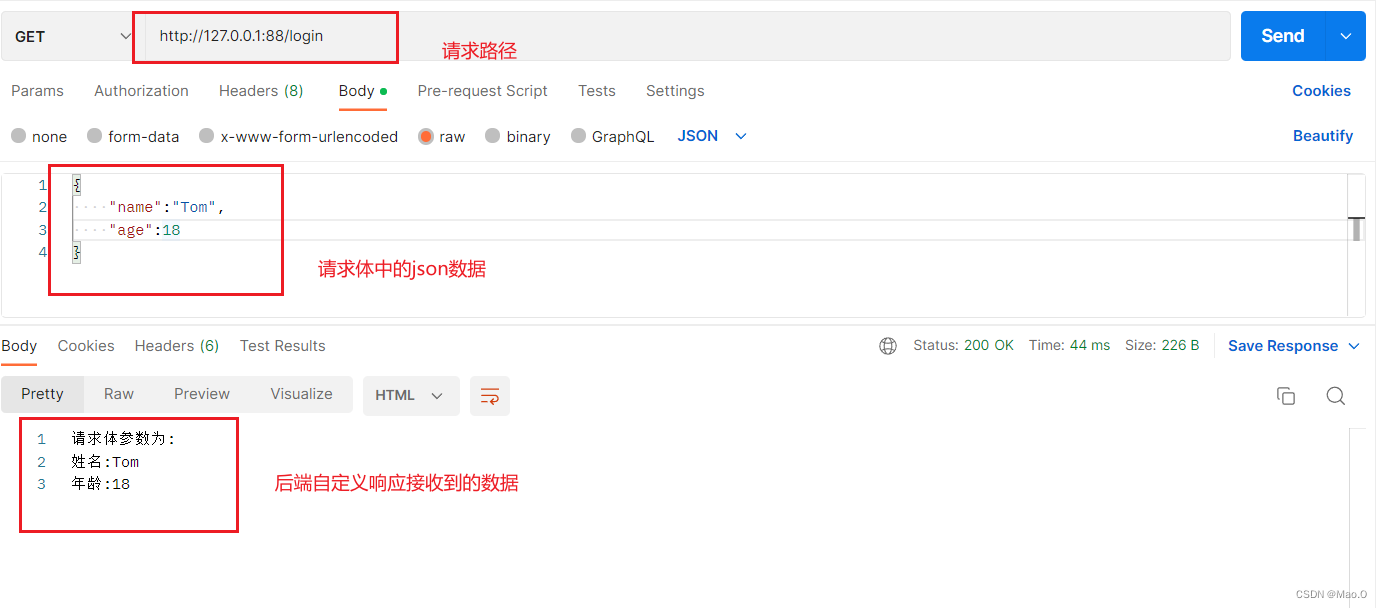

Node.js笔记-Express(基于Node.js的web开发框架)

目录 Express概述 Express安装 基本使用 创建服务器 编写请求接口 接收请求参数 获取路径参数(/login/2) 静态资源托管-express.static(内置中间件) 什么是静态资源托管? express.static() 应用举例 托管多个静态资源 挂载路径前缀…...

力扣sql简单篇练习(十五)

力扣sql简单篇练习(十五) 1 直线上的最近距离 1.1 题目内容 1.1.1 基本题目信息 1.1.2 示例输入输出 1.2 示例sql语句 SELECT min(abs(p1.x-p2.x)) shortest FROM point p1 INNER JOIN point p2 ON p1.x <>p2.x1.3 运行截图 2 只出现一次的最大数字 2.1 题目内容 2…...

浅谈动态代理

什么是动态代理?以下为个人理解:动态代理就是在程序运行的期间,动态地针对对象的方法进行增强操作。并且这个动作的执行者已经不是"this"对象了,而是我们创建的代理对象,这个代理对象就是类似中间人的角色,帮…...

Idea超好用的管理工具ToolBox(附带idea工具)

文章目录为什么要用ToolBox总结idea管理安装、更新、卸载寻找ide配置、根路径idea使用准备工作配置为什么要用ToolBox 快速轻松地更新,轻松管理您的 JetBrains 工具 安装自动更新同时更新插件和 IDE回滚和降级通过下载补丁或一组补丁而不是整个包,节省维护 IDE 的…...

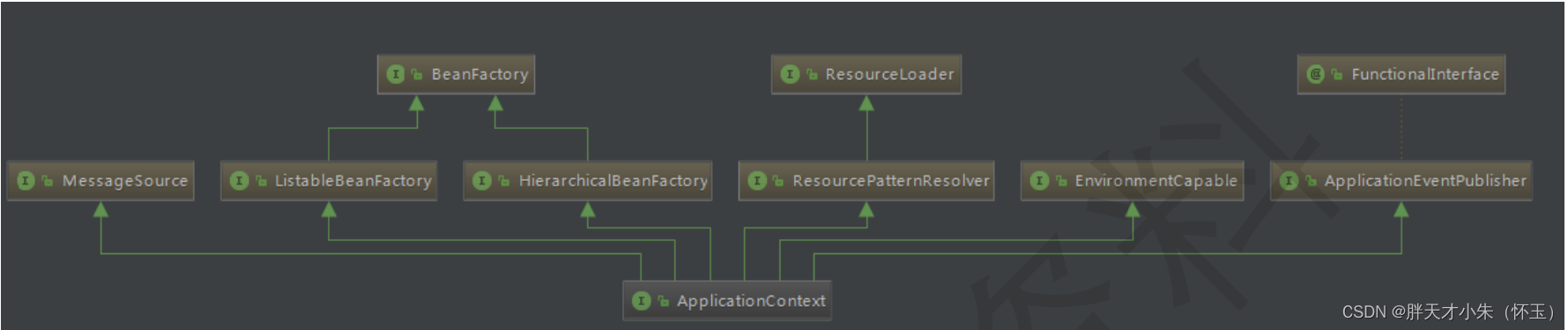

Spring 中 ApplicationContext 和 BeanFactory 的区别

文章目录类图包目录不同国际化强大的事件机制(Event)底层资源的访问延迟加载常用容器类图 包目录不同 spring-beans.jar 中 org.springframework.beans.factory.BeanFactoryspring-context.jar 中 org.springframework.context.ApplicationContext 国际…...

情人节有哪些数码好物值得送礼?情人节实用性强的数码好物推荐

转瞬间,情人节快到了,大家还在为送什么礼物而烦恼?在这个以科技为主的时代,人们正在享受着科技带来的便利,其中,数码产品也成为了日常生活中必不可少的存在。接下来,我来给大家推荐几款比较实用…...

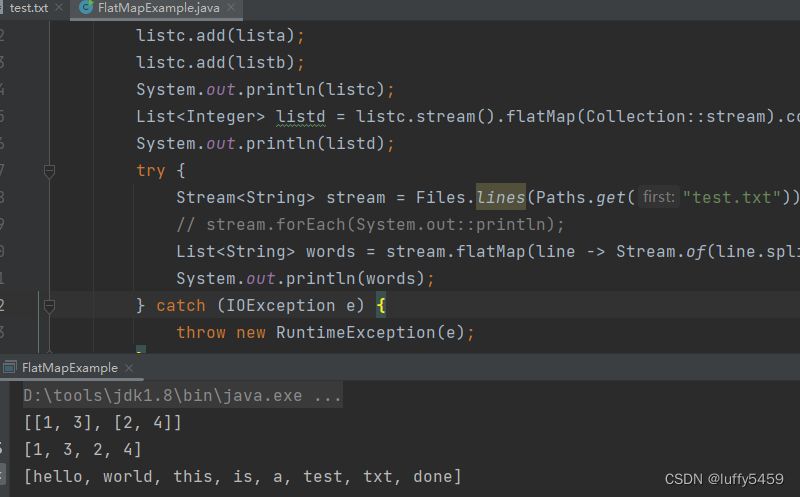

java中flatMap用法

java中map是把集合每个元素重新映射,元素个数不变,但是元素值发生了变化。而flatMap从字面上来说是压平这个映射,实际作用就是将每个元素进行一个一对多的拆分,细分成更小的单元,返回一个新的Stream流,新的…...

)

【MySQL Shell】8.9.2 InnoDB ClusterSet 集群中的不一致事务集(GTID集)

AdminAPI 的 clusterSet.status() 命令警告您,如果 InnoDB 集群的 GTID 集与 InnoDB ClusterSet 中主集群上的 GTID 集不一致。与 InnoDB ClusterSet 中的其他集群相比,处于此状态的集群具有额外的事务,并且具有全局状态 OK_NOT_CONSISTENT 。…...

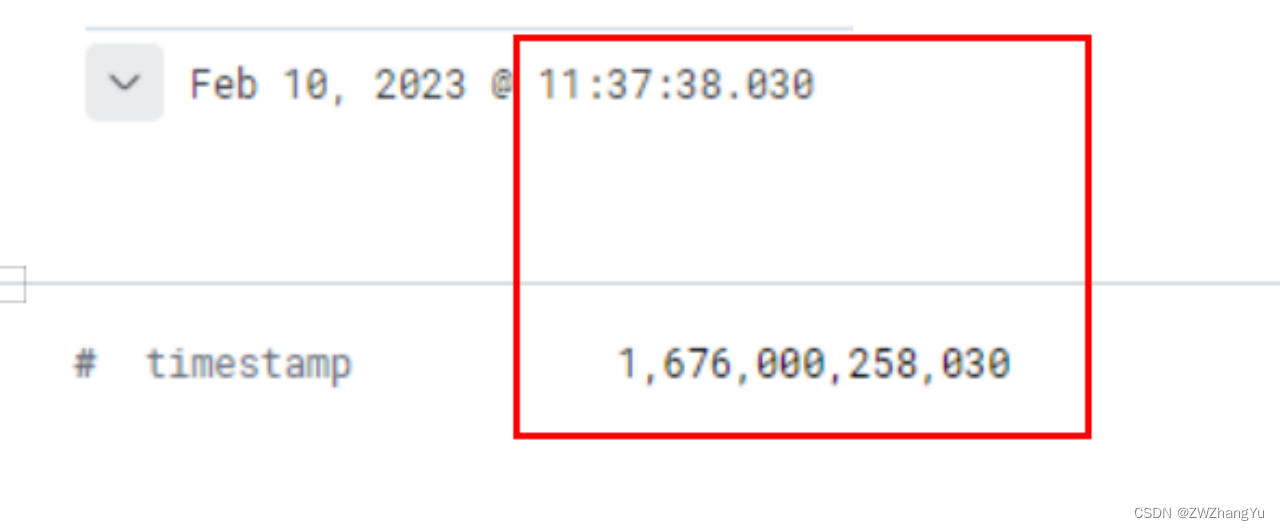

logstash毫秒时间戳转日期以及使用业务日志时间戳替换原始@timestamp

文章目录问题解决方式参考问题 在使用Kibana观察日志排查问题时发现存在很多组的timestamp 数据一样,如下所示 详细观察内部数据发现其中日志数据有一个timestamp字段保存的是业务日志的毫秒级时间戳,经过和timestamp数据对比发现二者的时间不匹配。经…...

【C语言】qsort——回调函数

目录 1.回调函数 2.qsort函数 //整形数组排序 //结构体排序 3.模拟实现qsort //整型数组排序 //结构体排序 1.回调函数 回调函数就是一个通过函数指针调用的函数。如果你把函数的指针(地址)作为参数传递给另一个函数,当这个指针被用来…...

8年软件测试工程师经验感悟

不知不觉在软件测试行业,野蛮生长了8年之久。这一路上拥有了非常多的感受。有迷茫,有踩过坑,有付出有收获, 有坚持! 我一直都在软件测试行业奋战, 毕业时一起入职的好友已经公司内部转岗,去选择…...

Cursor实现用excel数据填充word模版的方法

cursor主页:https://www.cursor.com/ 任务目标:把excel格式的数据里的单元格,按照某一个固定模版填充到word中 文章目录 注意事项逐步生成程序1. 确定格式2. 调试程序 注意事项 直接给一个excel文件和最终呈现的word文件的示例,…...

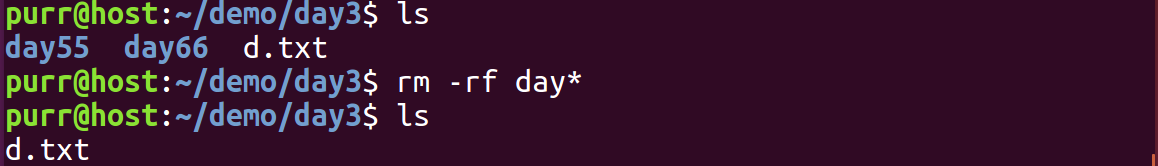

Linux 文件类型,目录与路径,文件与目录管理

文件类型 后面的字符表示文件类型标志 普通文件:-(纯文本文件,二进制文件,数据格式文件) 如文本文件、图片、程序文件等。 目录文件:d(directory) 用来存放其他文件或子目录。 设备…...

PL0语法,分析器实现!

简介 PL/0 是一种简单的编程语言,通常用于教学编译原理。它的语法结构清晰,功能包括常量定义、变量声明、过程(子程序)定义以及基本的控制结构(如条件语句和循环语句)。 PL/0 语法规范 PL/0 是一种教学用的小型编程语言,由 Niklaus Wirth 设计,用于展示编译原理的核…...

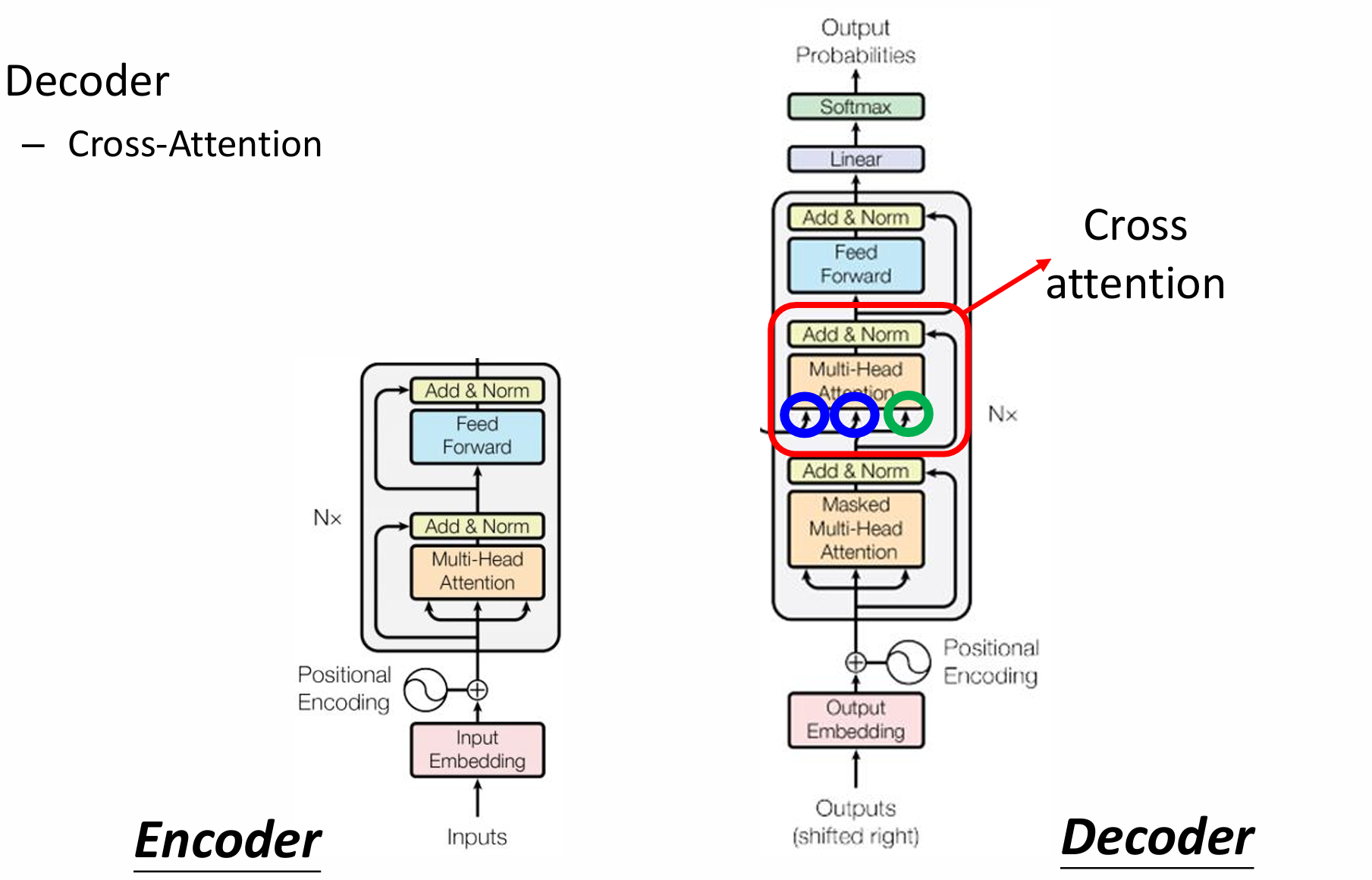

自然语言处理——Transformer

自然语言处理——Transformer 自注意力机制多头注意力机制Transformer 虽然循环神经网络可以对具有序列特性的数据非常有效,它能挖掘数据中的时序信息以及语义信息,但是它有一个很大的缺陷——很难并行化。 我们可以考虑用CNN来替代RNN,但是…...

uniapp中使用aixos 报错

问题: 在uniapp中使用aixos,运行后报如下错误: AxiosError: There is no suitable adapter to dispatch the request since : - adapter xhr is not supported by the environment - adapter http is not available in the build 解决方案&…...

NPOI操作EXCEL文件 ——CAD C# 二次开发

缺点:dll.版本容易加载错误。CAD加载插件时,没有加载所有类库。插件运行过程中用到某个类库,会从CAD的安装目录找,找不到就报错了。 【方案2】让CAD在加载过程中把类库加载到内存 【方案3】是发现缺少了哪个库,就用插件程序加载进…...

在鸿蒙HarmonyOS 5中使用DevEco Studio实现企业微信功能

1. 开发环境准备 安装DevEco Studio 3.1: 从华为开发者官网下载最新版DevEco Studio安装HarmonyOS 5.0 SDK 项目配置: // module.json5 {"module": {"requestPermissions": [{"name": "ohos.permis…...

springboot 日志类切面,接口成功记录日志,失败不记录

springboot 日志类切面,接口成功记录日志,失败不记录 自定义一个注解方法 import java.lang.annotation.ElementType; import java.lang.annotation.Retention; import java.lang.annotation.RetentionPolicy; import java.lang.annotation.Target;/***…...

【深尚想】TPS54618CQRTERQ1汽车级同步降压转换器电源芯片全面解析

1. 元器件定义与技术特点 TPS54618CQRTERQ1 是德州仪器(TI)推出的一款 汽车级同步降压转换器(DC-DC开关稳压器),属于高性能电源管理芯片。核心特性包括: 输入电压范围:2.95V–6V,输…...

开疆智能Ethernet/IP转Modbus网关连接鸣志步进电机驱动器配置案例

在工业自动化控制系统中,常常会遇到不同品牌和通信协议的设备需要协同工作的情况。本案例中,客户现场采用了 罗克韦尔PLC,但需要控制的变频器仅支持 ModbusRTU 协议。为了实现PLC 对变频器的有效控制与监控,引入了开疆智能Etherne…...