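Apache HBase (Part 2)

Contents

I. Apache HBase

1. HBase Shell Operations

1.1 DDL: Creating and Altering Tables

1. Create a namespace and tables

2. View tables

3. Alter a table

4. Drop a table

1.2 DML: Writing and Reading Data

1. Write data

2. Read data

3. Delete data

2. Starting the Big Data Software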

I. Apache HBase

1. HBase Shell Operations

Start HBase first, then run the shell commands below.

1. Enter the HBase shell

[root@node1 hbase-3.0.0]# bin/hbase shell

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/export/server/hadoop-3.3.6/share/hadoop/common/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/export/server/hbase-3.0.0/lib/client-facing-thirdparty/log4j-slf4j-impl-2.17.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Reload4jLoggerFactory]

HBase Shell

Use "help" to get list of supported commands.

Use "exit" to quit this interactive shell.

For Reference, please visit: http://hbase.apache.org/book.html#shell

Version 3.0.0-beta-1, r119d11c808aefa82d22fef6cd265981506b9dc09, Tue Dec 26 07:40:00 UTC 2023

Took 0.0014 seconds

hbase:001:0>

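2. View the help output

The help command lists every command available in the HBase shell. The most frequently used groups are the namespace commands, DDL (creating and altering tables), and DML (writing and reading data).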

hbase:001:0> help

HBase Shell, version 3.0.0-beta-1, r119d11c808aefa82d22fef6cd265981506b9dc09, Tue Dec 26 07:40:00 UTC 2023

Type 'help "COMMAND"', (e.g. 'help "get"' -- the quotes are necessary) for help on a specific command.

Commands are grouped. Type 'help "COMMAND_GROUP"', (e.g. 'help "general"') for help on a command group.

COMMAND GROUPS:

Group name: general
Commands: processlist, status, table_help, version, whoami

Group name: ddl
Commands: alter, alter_async, alter_status, clone_table_schema, create, describe, disable, disable_all, drop, drop_all, enable, enable_all, exists, get_table, is_disabled, is_enabled, list, list_disabled_tables, list_enabled_tables, list_regions, locate_region, show_filters

Group name: namespace
Commands: alter_namespace, create_namespace, describe_namespace, drop_namespace, list_namespace, list_namespace_tables

Group name: dml
Commands: append, count, delete, deleteall, get, get_counter, get_splits, incr, put, scan, truncate, truncate_preserve

Group name: tools
Commands: assign, balance_switch, balancer, balancer_enabled, catalogjanitor_enabled, catalogjanitor_run, catalogjanitor_switch, cleaner_chore_enabled, cleaner_chore_run, cleaner_chore_switch, clear_block_cache, clear_compaction_queues, clear_deadservers, clear_slowlog_responses, close_region, compact, compact_rs, compaction_state, compaction_switch, decommission_regionservers, flush, flush_master_store, get_balancer_decisions, get_balancer_rejections, get_largelog_responses, get_slowlog_responses, hbck_chore_run, is_in_maintenance_mode, list_deadservers, list_decommissioned_regionservers, list_liveservers, list_unknownservers, major_compact, merge_region, move, normalize, normalizer_enabled, normalizer_switch, recommission_regionserver, regioninfo, rit, snapshot_cleanup_enabled, snapshot_cleanup_switch, split, splitormerge_enabled, splitormerge_switch, stop_master, stop_regionserver, trace, truncate_region, unassign, wal_roll, zk_dump

Group name: replication
Commands: add_peer, append_peer_exclude_namespaces, append_peer_exclude_tableCFs, append_peer_namespaces, append_peer_tableCFs, disable_peer, disable_table_replication, enable_peer, enable_table_replication, get_peer_config, list_peer_configs, list_peers, list_replicated_tables, peer_modification_enabled, peer_modification_switch, remove_peer, remove_peer_exclude_namespaces, remove_peer_exclude_tableCFs, remove_peer_namespaces, remove_peer_tableCFs, set_peer_bandwidth, set_peer_exclude_namespaces, set_peer_exclude_tableCFs, set_peer_namespaces, set_peer_replicate_all, set_peer_serial, set_peer_tableCFs, show_peer_tableCFs, transit_peer_sync_replication_state, update_peer_config

Group name: snapshots
Commands: clone_snapshot, delete_all_snapshot, delete_snapshot, delete_table_snapshots, list_snapshots, list_table_snapshots, restore_snapshot, snapshot

Group name: configuration
Commands: update_all_config, update_config, update_rsgroup_config

Group name: quotas
Commands: disable_exceed_throttle_quota, disable_rpc_throttle, enable_exceed_throttle_quota, enable_rpc_throttle, list_quota_snapshots, list_quota_table_sizes, list_quotas, list_snapshot_sizes, set_quota

Group name: security
Commands: grant, list_security_capabilities, revoke, user_permission

Group name: procedures
Commands: list_locks, list_procedures

Group name: visibility labels
Commands: add_labels, clear_auths, get_auths, list_labels, set_auths, set_visibility

Group name: rsgroup
Commands: add_rsgroup, alter_rsgroup_config, balance_rsgroup, get_namespace_rsgroup, get_rsgroup, get_server_rsgroup, get_table_rsgroup, list_rsgroups, move_namespaces_rsgroup, move_servers_namespaces_rsgroup, move_servers_rsgroup, move_servers_tables_rsgroup, move_tables_rsgroup, remove_rsgroup, remove_servers_rsgroup, rename_rsgroup, show_rsgroup_config

Group name: storefiletracker
Commands: change_sft, change_sft_all

SHELL USAGE:

Quote all names in HBase Shell such as table and column names. Commas delimit
command parameters. Type <RETURN> after entering a command to run it.
Dictionaries of configuration used in the creation and alteration of tables are
Ruby Hashes. They look like this:

  {'key1' => 'value1', 'key2' => 'value2', ...}

and are opened and closed with curley-braces. Key/values are delimited by the
'=>' character combination. Usually keys are predefined constants such as
NAME, VERSIONS, COMPRESSION, etc. Constants do not need to be quoted. Type
'Object.constants' to see a (messy) list of all constants in the environment.

If you are using binary keys or values and need to enter them in the shell, use
double-quote'd hexadecimal representation. For example:

  hbase> get 't1', "key\x03\x3f\xcd"
  hbase> get 't1', "key\003\023\011"
  hbase> put 't1', "test\xef\xff", 'f1:', "\x01\x33\x40"

The HBase shell is the (J)Ruby IRB with the above HBase-specific commands added.

For more on the HBase Shell, see http://hbase.apache.org/book.html

hbase:002:0>
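The SHELL USAGE notes above mention two Ruby conventions that are easy to trip over: configuration dictionaries are ordinary Ruby Hashes, and binary keys must be written in double quotes so that the escape sequences are interpreted. The following is a minimal plain-Ruby sketch of both points. It runs in any Ruby interpreter without a cluster, and the variable names are made up for illustration.

```ruby
# Plain Ruby, no HBase required. Variable names are illustrative only.

# A configuration dictionary is an ordinary Ruby Hash.
config = { 'key1' => 'value1', 'key2' => 'value2' }
puts config['key1']            # value1

# Double quotes interpret \x (hex) and \0.. (octal) escapes as raw bytes.
hex_key   = "key\x03\x3f\xcd"
octal_key = "key\003\023\011"
p hex_key.bytes                # [107, 101, 121, 3, 63, 205]
p octal_key.bytes              # [107, 101, 121, 3, 19, 9]

# Single quotes keep the backslash sequence as literal characters.
literal = 'key\x03'
p literal.bytesize             # 7
```

This is why the help text insists on double quotes for binary values: with single quotes the shell would send the seven characters `key\x03` rather than a four-byte key.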

To see how a specific command is used, take create_namespace as an example:

hbase:002:0> help 'create_namespace'

Create namespace; pass namespace name,

and optionally a dictionary of namespace configuration.

Examples:

  hbase> create_namespace 'ns1'

  hbase> create_namespace 'ns1', {'PROPERTY_NAME'=>'PROPERTY_VALUE'}

hbase:003:0>

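The list command among those shown above is the counterpart of the SHOW command in a relational database.

Problem: creating a namespace fails with an error!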

hbase:002:0> create_namespace 'lwztest'

2024-03-24 23:23:21,813 INFO [RPCClient-NioEventLoopGroup-1-1] Configuration.deprecation (Configuration.java:logDeprecation(1395)) - hbase.client.pause.cqtbe is deprecated. Instead, use hbase.client.pause.server.overloaded

2024-03-24 23:23:24,628 WARN [RPCClient-NioEventLoopGroup-1-2] client.AsyncRpcRetryingCaller (AsyncRpcRetryingCaller.java:onError(177)) - Call to master failed, tries = 6, maxAttempts = 7, timeout = 1200000 ms, time elapsed = 2692 ms

org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet
    at org.apache.hadoop.hbase.master.HMaster.checkServiceStarted(HMaster.java:3288)
    at org.apache.hadoop.hbase.master.HMaster.checkInitialized(HMaster.java:3294)
    at org.apache.hadoop.hbase.master.HMaster.createNamespace(HMaster.java:3471)
    at org.apache.hadoop.hbase.master.MasterRpcServices.createNamespace(MasterRpcServices.java:770)
    at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)
    at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:437)
    at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:124)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:102)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:82)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
    at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
    at org.apache.hadoop.hbase.ipc.RemoteWithExtrasException.instantiateException(RemoteWithExtrasException.java:110)
    at org.apache.hadoop.hbase.ipc.RemoteWithExtrasException.unwrapRemoteException(RemoteWithExtrasException.java:100)
    at org.apache.hadoop.hbase.client.ConnectionUtils.translateException(ConnectionUtils.java:217)
    at org.apache.hadoop.hbase.client.AsyncRpcRetryingCaller.onError(AsyncRpcRetryingCaller.java:165)
    at org.apache.hadoop.hbase.client.AsyncMasterRequestRpcRetryingCaller.lambda$null$4(AsyncMasterRequestRpcRetryingCaller.java:76)
    at org.apache.hadoop.hbase.util.FutureUtils.lambda$addListener$0(FutureUtils.java:71)
    at java.util.concurrent.CompletableFuture.uniWhenComplete(CompletableFuture.java:760)
    at java.util.concurrent.CompletableFuture$UniWhenComplete.tryFire(CompletableFuture.java:736)
    at java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:474)
    at java.util.concurrent.CompletableFuture.completeExceptionally(CompletableFuture.java:1977)
    at org.apache.hadoop.hbase.client.RawAsyncHBaseAdmin$1.run(RawAsyncHBaseAdmin.java:463)
    at org.apache.hbase.thirdparty.com.google.protobuf.RpcUtil$1.run(RpcUtil.java:79)
    at org.apache.hbase.thirdparty.com.google.protobuf.RpcUtil$1.run(RpcUtil.java:70)
    at org.apache.hadoop.hbase.ipc.AbstractRpcClient.onCallFinished(AbstractRpcClient.java:396)
    at org.apache.hadoop.hbase.ipc.AbstractRpcClient.access$100(AbstractRpcClient.java:93)
    at org.apache.hadoop.hbase.ipc.AbstractRpcClient$3.run(AbstractRpcClient.java:429)
    at org.apache.hadoop.hbase.ipc.AbstractRpcClient$3.run(AbstractRpcClient.java:424)
    at org.apache.hadoop.hbase.ipc.Call.callComplete(Call.java:117)
    at org.apache.hadoop.hbase.ipc.Call.setException(Call.java:132)
    at org.apache.hadoop.hbase.ipc.NettyRpcDuplexHandler.readResponse(NettyRpcDuplexHandler.java:199)
    at org.apache.hadoop.hbase.ipc.NettyRpcDuplexHandler.channelRead(NettyRpcDuplexHandler.java:220)
    at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:442)
    at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:420)
    at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:412)
    at org.apache.hbase.thirdparty.io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:346)
    at org.apache.hbase.thirdparty.io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:318)
    at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:444)
    at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:420)
    at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:412)
    at org.apache.hbase.thirdparty.io.netty.handler.timeout.IdleStateHandler.channelRead(IdleStateHandler.java:286)
    at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:442)
    at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:420)
    at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:412)
    at org.apache.hbase.thirdparty.io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410)
    at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:440)
    at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:420)
    at org.apache.hbase.thirdparty.io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919)
    at org.apache.hbase.thirdparty.io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:166)
    at org.apache.hbase.thirdparty.io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:788)
    at org.apache.hbase.thirdparty.io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:724)
    at org.apache.hbase.thirdparty.io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:650)
    at org.apache.hbase.thirdparty.io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:562)
    at org.apache.hbase.thirdparty.io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:997)
    at org.apache.hbase.thirdparty.io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)
    at org.apache.hbase.thirdparty.io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)
    at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.hadoop.hbase.ipc.RemoteWithExtrasException(org.apache.hadoop.hbase.ipc.ServerNotRunningYetException): org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet
    at org.apache.hadoop.hbase.master.HMaster.checkServiceStarted(HMaster.java:3288)
    at org.apache.hadoop.hbase.master.HMaster.checkInitialized(HMaster.java:3294)
    at org.apache.hadoop.hbase.master.HMaster.createNamespace(HMaster.java:3471)
    at org.apache.hadoop.hbase.master.MasterRpcServices.createNamespace(MasterRpcServices.java:770)
    at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)
    at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:437)
    at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:124)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:102)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:82)
    at org.apache.hadoop.hbase.ipc.AbstractRpcClient.onCallFinished(AbstractRpcClient.java:391)
    ... 32 more

2024-03-24 23:23:26,673 WARN [RPCClient-NioEventLoopGroup-1-2] client.AsyncRpcRetryingCaller (AsyncRpcRetryingCaller.java:onError(177)) - Call to master failed, tries = 7, maxAttempts = 7, timeout = 1200000 ms, time elapsed = 4739 ms

2024-03-24 23:23:26,693 INFO [RPCClient-NioEventLoopGroup-1-2] client.AsyncHBaseAdmin (RawAsyncHBaseAdmin.java:onError(2730)) - Operation: CREATE_NAMESPACE, Namespace: lwztest failed with Failed after attempts=7, exceptions:

2024-03-24T15:23:22.437Z, org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet
    at org.apache.hadoop.hbase.master.HMaster.checkServiceStarted(HMaster.java:3288)
    at org.apache.hadoop.hbase.master.HMaster.checkInitialized(HMaster.java:3294)
    at org.apache.hadoop.hbase.master.HMaster.createNamespace(HMaster.java:3471)
    at org.apache.hadoop.hbase.master.MasterRpcServices.createNamespace(MasterRpcServices.java:770)
    at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)
    at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:437)
    at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:124)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:102)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:82)

2024-03-24T15:23:22.561Z, org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet
    at org.apache.hadoop.hbase.master.HMaster.checkServiceStarted(HMaster.java:3288)
    at org.apache.hadoop.hbase.master.HMaster.checkInitialized(HMaster.java:3294)
    at org.apache.hadoop.hbase.master.HMaster.createNamespace(HMaster.java:3471)
    at org.apache.hadoop.hbase.master.MasterRpcServices.createNamespace(MasterRpcServices.java:770)
    at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)
    at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:437)
    at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:124)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:102)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:82)

2024-03-24T15:23:22.777Z, org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet
    at org.apache.hadoop.hbase.master.HMaster.checkServiceStarted(HMaster.java:3288)
    at org.apache.hadoop.hbase.master.HMaster.checkInitialized(HMaster.java:3294)
    at org.apache.hadoop.hbase.master.HMaster.createNamespace(HMaster.java:3471)
    at org.apache.hadoop.hbase.master.MasterRpcServices.createNamespace(MasterRpcServices.java:770)
    at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)
    at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:437)
    at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:124)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:102)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:82)

2024-03-24T15:23:23.084Z, org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet
    at org.apache.hadoop.hbase.master.HMaster.checkServiceStarted(HMaster.java:3288)
    at org.apache.hadoop.hbase.master.HMaster.checkInitialized(HMaster.java:3294)
    at org.apache.hadoop.hbase.master.HMaster.createNamespace(HMaster.java:3471)
    at org.apache.hadoop.hbase.master.MasterRpcServices.createNamespace(MasterRpcServices.java:770)
    at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)
    at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:437)
    at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:124)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:102)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:82)

2024-03-24T15:23:23.599Z, org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet
    at org.apache.hadoop.hbase.master.HMaster.checkServiceStarted(HMaster.java:3288)
    at org.apache.hadoop.hbase.master.HMaster.checkInitialized(HMaster.java:3294)
    at org.apache.hadoop.hbase.master.HMaster.createNamespace(HMaster.java:3471)
    at org.apache.hadoop.hbase.master.MasterRpcServices.createNamespace(MasterRpcServices.java:770)
    at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)
    at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:437)
    at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:124)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:102)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:82)

2024-03-24T15:23:24.637Z, org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet
    at org.apache.hadoop.hbase.master.HMaster.checkServiceStarted(HMaster.java:3288)
    at org.apache.hadoop.hbase.master.HMaster.checkInitialized(HMaster.java:3294)
    at org.apache.hadoop.hbase.master.HMaster.createNamespace(HMaster.java:3471)
    at org.apache.hadoop.hbase.master.MasterRpcServices.createNamespace(MasterRpcServices.java:770)
    at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)
    at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:437)
    at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:124)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:102)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:82)

2024-03-24T15:23:26.676Z, org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet
    at org.apache.hadoop.hbase.master.HMaster.checkServiceStarted(HMaster.java:3288)
    at org.apache.hadoop.hbase.master.HMaster.checkInitialized(HMaster.java:3294)
    at org.apache.hadoop.hbase.master.HMaster.createNamespace(HMaster.java:3471)
    at org.apache.hadoop.hbase.master.MasterRpcServices.createNamespace(MasterRpcServices.java:770)
    at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)
    at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:437)
    at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:124)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:102)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:82)

ERROR: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet
    at org.apache.hadoop.hbase.master.HMaster.checkServiceStarted(HMaster.java:3288)
    at org.apache.hadoop.hbase.master.HMaster.checkInitialized(HMaster.java:3294)
    at org.apache.hadoop.hbase.master.HMaster.createNamespace(HMaster.java:3471)
    at org.apache.hadoop.hbase.master.MasterRpcServices.createNamespace(MasterRpcServices.java:770)
    at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)
    at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:437)
    at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:124)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:102)
    at org.apache.hadoop.hbase.ipc.RpcHandler.run(RpcHandler.java:82)

For usage try 'help "create_namespace"'

Solution:

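1. Stop the HBase cluster.

2. Add the following property to hbase-site.xml, then distribute the file to the other machines in the cluster.

<property>
  <name>hbase.wal.provider</name>
  <value>filesystem</value>
</property>

3. Restart the HBase cluster.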
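Because the edited file has to reach every node, it can help to confirm that each copy really contains the new property before restarting. The following is a rough plain-Ruby sketch of such a check. The helper name `property_value` is hypothetical, the regular expression is only a quick text match and not a real XML parser, and on a real node you would read the file yourself, for example with `File.read` on your own `hbase-site.xml` path.

```ruby
# Hypothetical helper: returns the value of a property in hbase-site.xml-style
# text, or nil when the property is absent. A quick text check, not an XML parser.
def property_value(xml_text, name)
  pattern = %r{<property>\s*<name>#{Regexp.escape(name)}</name>\s*<value>(.*?)</value>}m
  match = xml_text.match(pattern)
  match && match[1].strip
end

# Sample text standing in for the contents of hbase-site.xml.
sample = <<~XML
  <configuration>
    <property>
      <name>hbase.wal.provider</name>
      <value>filesystem</value>
    </property>
  </configuration>
XML

puts property_value(sample, 'hbase.wal.provider')    # filesystem
p property_value(sample, 'hbase.some.other.key')     # nil
```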

1.1 DDL: Creating and Altering Tables

1. Create a namespace and tables

This time the operation succeeds!

hbase:001:0> create_namespace 'lwztest'

2024-03-24 23:33:51,354 INFO [RPCClient-NioEventLoopGroup-1-1] Configuration.deprecation (Configuration.java:logDeprecation(1395)) - hbase.client.pause.cqtbe is deprecated. Instead, use hbase.client.pause.server.overloaded

2024-03-24 23:33:51,653 INFO [RPCClient-NioEventLoopGroup-1-2] client.AsyncHBaseAdmin (RawAsyncHBaseAdmin.java:onFinished(2725)) - Operation: CREATE_NAMESPACE, Namespace: lwztest completed

Took 1.3931 seconds

hbase:002:0> list

TABLE

0 row(s)

Took 0.0251 seconds

=> []

hbase:003:0> list_namespace

NAMESPACE

default

hbase

lwztest

3 row(s)

Took 0.0534 seconds

hbase:004:0> help 'create'

Creates a table. Pass a table name, and a set of column family

specifications (at least one), and, optionally, table configuration.

Column specification can be a simple string (name), or a dictionary

(dictionaries are described below in main help output), necessarily

including NAME attribute.

Examples:

Create a table with namespace=ns1 and table qualifier=t1

  hbase> create 'ns1:t1', {NAME => 'f1', VERSIONS => 5}

Create a table with namespace=default and table qualifier=t1

  hbase> create 't1', {NAME => 'f1'}, {NAME => 'f2'}, {NAME => 'f3'}
  hbase> # The above in shorthand would be the following:
  hbase> create 't1', 'f1', 'f2', 'f3'
  hbase> create 't1', {NAME => 'f1', VERSIONS => 1, TTL => 2592000, BLOCKCACHE => true}
  hbase> create 't1', {NAME => 'f1', CONFIGURATION => {'hbase.hstore.blockingStoreFiles' => '10'}}
  hbase> create 't1', {NAME => 'f1', IS_MOB => true, MOB_THRESHOLD => 1000000, MOB_COMPACT_PARTITION_POLICY => 'weekly'}

Table configuration options can be put at the end.

Examples:

  hbase> create 'ns1:t1', 'f1', SPLITS => ['10', '20', '30', '40']
  hbase> create 't1', 'f1', SPLITS => ['10', '20', '30', '40']
  hbase> create 't1', 'f1', SPLITS_FILE => 'splits.txt'
  hbase> create 't1', {NAME => 'f1', VERSIONS => 5}, METADATA => { 'mykey' => 'myvalue' }
  hbase> # Optionally pre-split the table into NUMREGIONS, using
  hbase> # SPLITALGO ("HexStringSplit", "UniformSplit" or classname)
  hbase> create 't1', 'f1', {NUMREGIONS => 15, SPLITALGO => 'HexStringSplit'}
  hbase> create 't1', 'f1', {NUMREGIONS => 15, SPLITALGO => 'HexStringSplit', REGION_REPLICATION => 2, CONFIGURATION => {'hbase.hregion.scan.loadColumnFamiliesOnDemand' => 'true'}}
  hbase> create 't1', 'f1', {SPLIT_ENABLED => false, MERGE_ENABLED => false}
  hbase> create 't1', {NAME => 'f1', DFS_REPLICATION => 1}

You can also keep around a reference to the created table:

  hbase> t1 = create 't1', 'f1'

Which gives you a reference to the table named 't1', on which you can then

call methods.

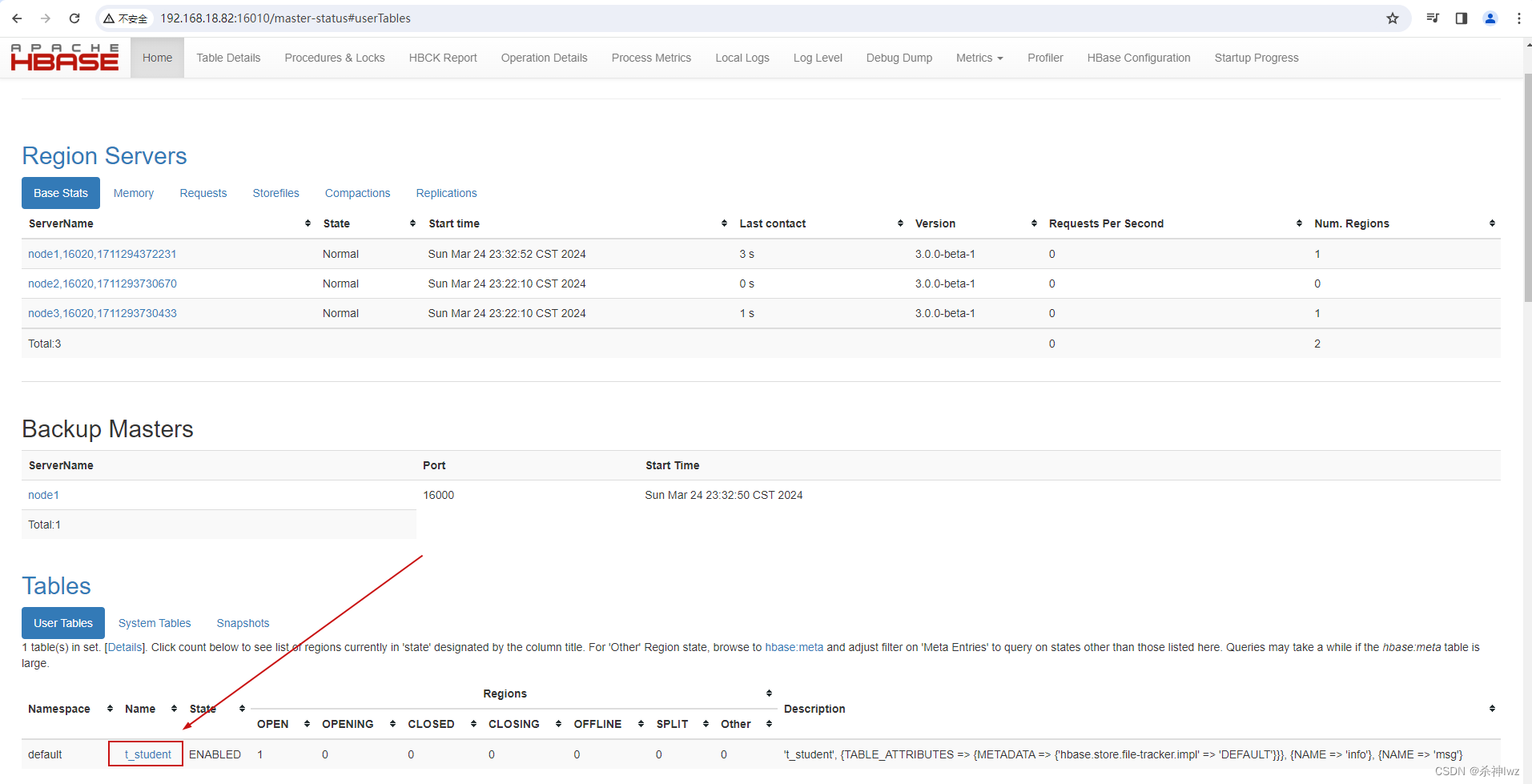

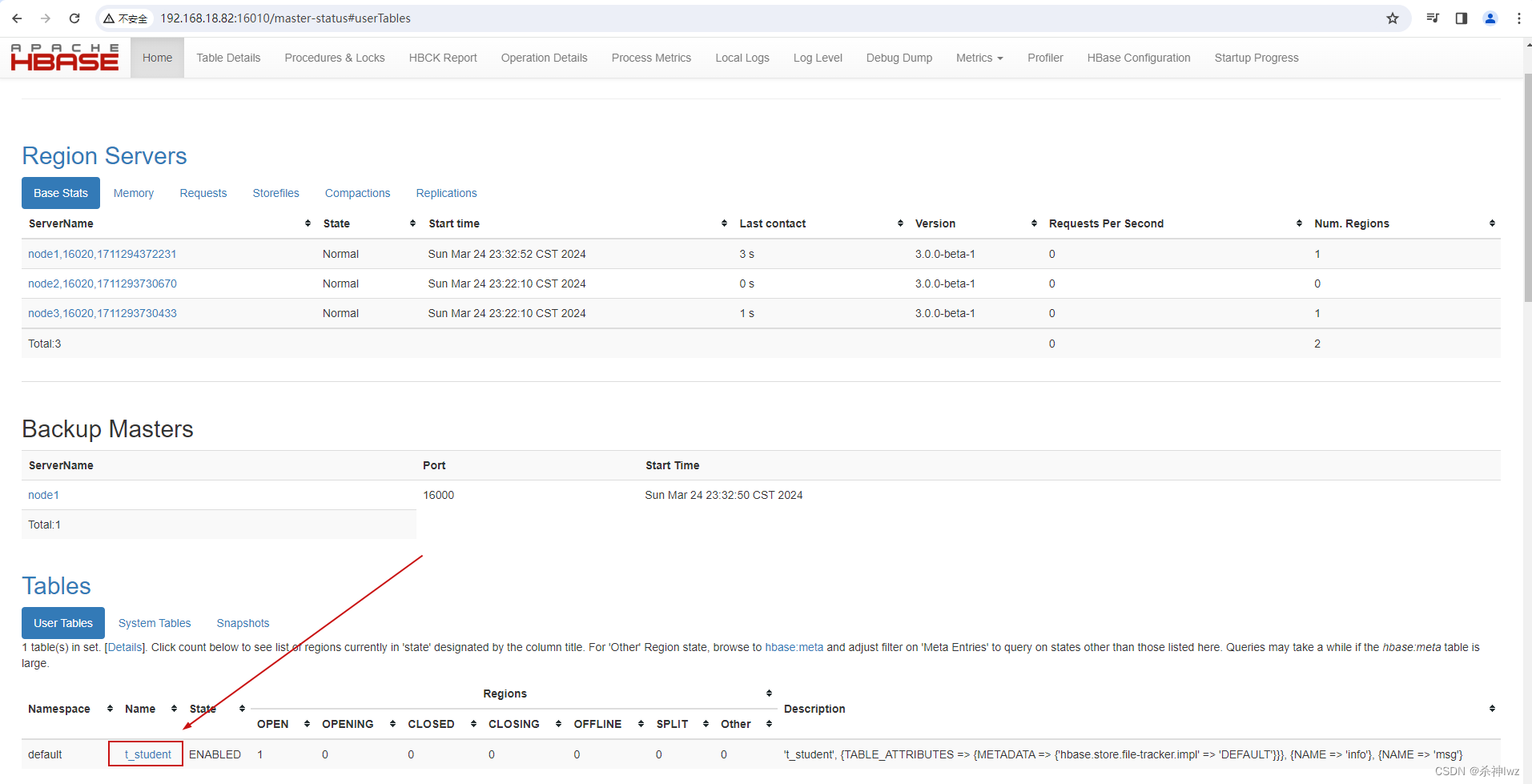

# Create a table

hbase:005:0> create 't_student','info','msg'

Created table t_student

2024-03-25 00:01:27,754 INFO [RPCClient-NioEventLoopGroup-1-3] client.AsyncHBaseAdmin (RawAsyncHBaseAdmin.java:onFinished(2701)) - Operation: CREATE, Table Name: default:t_student completed

Took 1.2346 seconds

=> Hbase::Table - t_student

hbase:006:0> list

TABLE

t_student

1 row(s)

Took 0.0084 seconds

=> ["t_student"]
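The create command above uses the shorthand form, where each column family is given as a plain string. According to the help text, this is equivalent to passing one dictionary per family. The following plain-Ruby sketch models that expansion. It is not HBase's own code: `expand_family_specs` is a hypothetical helper, and `NAME` is defined here only as a stand-in for the constant that the HBase shell already provides.

```ruby
# Stand-in for the shell's predefined constant; inside the HBase shell NAME already exists.
NAME = 'NAME' unless defined?(NAME)

# Hypothetical helper: expand shorthand column-family specs (plain strings)
# into dictionary form, leaving dictionaries untouched.
def expand_family_specs(*specs)
  specs.map { |spec| spec.is_a?(Hash) ? spec : { NAME => spec } }
end

shorthand  = expand_family_specs('info', 'msg')
dictionary = expand_family_specs({ NAME => 'info' }, { NAME => 'msg' })

puts shorthand == dictionary   # true
```

Under this model, `create 't_student','info','msg'` and `create 't_student', {NAME => 'info'}, {NAME => 'msg'}` describe the same two column families. The dictionary form is only needed when a family requires extra attributes such as VERSIONS.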

2. View tables

# Create a table

hbase:003:0> create 'lwztest:t_person', {NAME => 'info', VERSIONS => 5}, {NAME => 'msg', VERSIONS => 5}

Created table lwztest:t_person

2024-03-27 23:38:15,261 INFO [RPCClient-NioEventLoopGroup-1-3] client.AsyncHBaseAdmin (RawAsyncHBaseAdmin.java:onFinished(2701)) - Operation: CREATE, Table Name: lwztest:t_person completed

Took 1.2760 seconds

=> Hbase::Table - lwztest:t_person

# View the table description

hbase:005:0> describe 'lwztest:t_person'

Table lwztest:t_person is ENABLED

lwztest:t_person, {TABLE_ATTRIBUTES => {METADATA => {'hbase.store.file-tracker.impl' => 'DEFAULT'}}}

COLUMN FAMILIES DESCRIPTION

{NAME => 'info', INDEX_BLOCK_ENCODING => 'NONE', VERSIONS => '5', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536 B (64KB)'}

{NAME => 'msg', INDEX_BLOCK_ENCODING => 'NONE', VERSIONS => '5', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536 B (64KB)'}

2 row(s)

Quota is disabled

Took 0.1988 seconds

hbase:006:0>

3. Alter a table

alter

# Alter the table

hbase:006:0> alter 't_student',{NAME =>'info',VERSIONS => 3}

Updating all regions with the new schema...

2024-03-27 23:45:24,377 INFO [RPCClient-NioEventLoopGroup-1-8] client.AsyncHBaseAdmin (RawAsyncHBaseAdmin.java:onFinished(2701)) - Operation: MODIFY, Table Name: default:t_student completed

Took 0.9728 seconds

hbase:008:0> describe 't_student'

Table t_student is ENABLED

t_student, {TABLE_ATTRIBUTES => {METADATA => {'hbase.store.file-tracker.impl' => 'DEFAULT'}}}

COLUMN FAMILIES DESCRIPTION

{NAME => 'info', INDEX_BLOCK_ENCODING => 'NONE', VERSIONS => '3', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536 B (64KB)'}

{NAME => 'msg', INDEX_BLOCK_ENCODING => 'NONE', VERSIONS => '1', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536 B (64KB)'}

2 row(s)

Quota is disabled

Took 0.0436 seconds

# Deleting a column family uses a special syntax

hbase:009:0> alter 't_student',NAME => 'msg',METHOD => 'delete'

Updating all regions with the new schema...

2024-03-27 23:47:58,313 INFO [RPCClient-NioEventLoopGroup-1-8] client.AsyncHBaseAdmin (RawAsyncHBaseAdmin.java:onFinished(2701)) - Operation: MODIFY, Table Name: default:t_student completed

Took 0.8596 seconds

hbase:010:0> describe 't_student'

Table t_student is ENABLED

t_student, {TABLE_ATTRIBUTES => {METADATA => {'hbase.store.file-tracker.impl' => 'DEFAULT'}}}

COLUMN FAMILIES DESCRIPTION

{NAME => 'info', INDEX_BLOCK_ENCODING => 'NONE', VERSIONS => '3', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536 B (64KB)'}

1 row(s)

Quota is disabled

Took 0.0453 seconds

# Alternative syntax for deleting a column family (equivalent to the METHOD => 'delete' form)

hbase:011:0> alter 't_student','delete' => 'msg'

4. Drop a table

To drop a table from the shell, you must first disable it.

# Disable the table

hbase:011:0> disable 't_student'

2024-03-27 23:51:11,305 INFO [RPCClient-NioEventLoopGroup-1-8] client.AsyncHBaseAdmin (RawAsyncHBaseAdmin.java:onFinished(2701)) - Operation: DISABLE, Table Name: default:t_student completed

Took 0.6848 seconds

hbase:012:0> describe 't_student'

Table t_student is DISABLED

t_student, {TABLE_ATTRIBUTES => {METADATA => {'hbase.store.file-tracker.impl' => 'DEFAULT'}}}

COLUMN FAMILIES DESCRIPTION

{NAME => 'info', INDEX_BLOCK_ENCODING => 'NONE', VERSIONS => '3', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536 B (64KB)'}

1 row(s)

Quota is disabled

Took 0.0353 seconds

# Drop the table

hbase:013:0> drop 't_student'

2024-03-27 23:51:58,684 INFO [RPCClient-NioEventLoopGroup-1-8] client.AsyncHBaseAdmin (RawAsyncHBaseAdmin.java:onFinished(2701)) - Operation: DELETE, Table Name: default:t_student completed

Took 0.3649 seconds

hbase:014:0>
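The DDL steps in this section can also be replayed without an interactive prompt. The following is a minimal sketch, assuming the `hbase` launcher is on `PATH` and using `hbase shell -n` (non-interactive mode, reading commands from standard input). The table name `t_demo` and the function name `ddl_cmds` are hypothetical, chosen so that existing tables are not touched.

```shell
#!/bin/bash
# Sketch: replay create / describe / alter / disable / drop in one pass.
# 't_demo' is a hypothetical table name, not one used elsewhere in this article.
ddl_cmds() {
cat <<'EOF'
create 't_demo', {NAME => 'info', VERSIONS => 5}, {NAME => 'msg'}
describe 't_demo'
alter 't_demo', {NAME => 'info', VERSIONS => 3}
alter 't_demo', NAME => 'msg', METHOD => 'delete'
disable 't_demo'
drop 't_demo'
EOF
}

# Only talk to HBase when the launcher is available; otherwise just print the commands.
if command -v hbase >/dev/null 2>&1; then
  ddl_cmds | hbase shell -n
else
  ddl_cmds
fi
```

Keeping the commands in a function makes it easy to review them before they are piped into the shell.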

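1.2. DML: writing and reading data

1. Write data

In HBase, data can only be written at the lowest level of the structure, the cell. You can pass a timestamp explicitly to set the cell's version, but it is recommended to omit it, in which case the current system time is used by default.

# Write data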

hbase:001:0> put 'lwztest:t_person','1001','info:name','zhangsan'

2024-03-28 23:11:11,460 INFO [RPCClient-NioEventLoopGroup-1-1] Configuration.deprecation (Configuration.java:logDeprecation(1395)) - hbase.client.pause.cqtbe is deprecated. Instead, use hbase.client.pause.server.overloaded

Took 0.2648 seconds

hbase:002:0> put 'lwztest:t_person','1001','info:name','zhangsan'

Took 0.0190 seconds

hbase:003:0> put 'lwztest:t_person','1001','info:name','lisi'

Took 0.0212 seconds

hbase:004:0> put 'lwztest:t_person','1001','info:age','20'

Took 0.0139 seconds
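As noted above, `put` accepts an optional timestamp as its last argument. The sketch below shows that form together with a `get` that asks for exactly that version. The row key `1002`, the value `wangwu`, the timestamp and the function name `put_cmds` are illustrative assumptions and do not come from the session above.

```shell
#!/bin/bash
# Sketch: write a cell with an explicit version timestamp, then read that version back.
# Row key, value and TS are hypothetical examples.
TS=1711638000000   # epoch milliseconds, chosen arbitrarily

put_cmds() {
cat <<EOF
put 'lwztest:t_person','1002','info:name','wangwu',${TS}
get 'lwztest:t_person','1002',{COLUMN => 'info:name', TIMESTAMP => ${TS}}
EOF
}

# Only talk to HBase when the launcher is available; otherwise just print the commands.
if command -v hbase >/dev/null 2>&1; then
  put_cmds | hbase shell -n
else
  put_cmds
fi
```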

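2. Read data

There are two ways to read data: get and scan.

get reads at most one row. It can also filter by column, and the result is printed as one line per cell. scan reads a range of rows, or the whole table when no range is given.

# Read data with get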

hbase:006:0> get 'lwztest:t_person','1001'

COLUMN               CELL
 info:age            timestamp=2024-03-28T23:13:30.749, value=20
 info:name           timestamp=2024-03-28T23:13:11.586, value=lisi

1 row(s)

Took 0.1166 seconds

# Read data with scan

hbase:007:0> scan 'lwztest:t_person'

ROW                  COLUMN+CELL
 1001                column=info:age, timestamp=2024-03-28T23:13:30.749, value=20
 1001                column=info:name, timestamp=2024-03-28T23:13:11.586, value=lisi

1 row(s)

Took 0.0394 seconds
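The column filtering mentioned for `get`, and a bounded `scan`, might look like the sketch below. It reuses the `lwztest:t_person` table and row key `1001` from this article. The stop row `1002`, the limit of 10 and the function name `read_cmds` are assumptions made for illustration. `STARTROW` is inclusive and `STOPROW` is exclusive.

```shell
#!/bin/bash
# Sketch: narrower reads with get (one column, several versions) and scan (row range, column list).
read_cmds() {
cat <<'EOF'
get 'lwztest:t_person','1001',{COLUMN => 'info:name'}
get 'lwztest:t_person','1001',{COLUMN => 'info:name', VERSIONS => 5}
scan 'lwztest:t_person',{STARTROW => '1001', STOPROW => '1002'}
scan 'lwztest:t_person',{COLUMNS => ['info:name'], LIMIT => 10}
EOF
}

# Only talk to HBase when the launcher is available; otherwise just print the commands.
if command -v hbase >/dev/null 2>&1; then
  read_cmds | hbase shell -n
else
  read_cmds
fi
```

Because the `info` family of `lwztest:t_person` was created with `VERSIONS => 5`, the second `get` can return up to five stored versions of `info:name`.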

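3. Delete data

There are two ways to delete data: delete and deleteall.

delete removes a single version of the data, that is, one cell. If no version (timestamp) is given, the latest version is deleted.

deleteall removes all versions of the data, that is, every cell of the given column in the given row. (Running the command only marks the data as deleted. The data is not physically removed right away; it is removed later, when HBase cleans up its files on disk during a major compaction.)

# Delete data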

hbase:008:0> delete 'lwztest:t_person','1001','info:name'

Took 0.0254 seconds

hbase:009:0> scan 'lwztest:t_person'

ROW                  COLUMN+CELL
 1001                column=info:age, timestamp=2024-03-28T23:13:30.749, value=20
 1001                column=info:name, timestamp=2024-03-28T23:12:52.937, value=zhangsan

1 row(s)

Took 0.0130 seconds

# Delete all versions

hbase:010:0> deleteall 'lwztest:t_person','1001','info:name'

Took 0.0107 seconds

hbase:011:0> scan 'lwztest:t_person'

ROW                  COLUMN+CELL
 1001                column=info:age, timestamp=2024-03-28T23:13:30.749, value=20

1 row(s)

Took 0.0083 seconds

hbase:012:0>
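To see that deletes only mark data rather than removing it immediately, a raw scan can be used, since `RAW => true` asks the scanner to return delete markers and not-yet-collected deleted cells as well. The sketch below assumes the `lwztest:t_person` table from this article still exists; the function name `raw_cmds` is hypothetical. The flush and major compaction are only requests, and the compaction runs asynchronously, so a raw scan issued right afterwards may still show the markers.

```shell
#!/bin/bash
# Sketch: inspect delete markers with a raw scan, then request a flush and a major compaction.
raw_cmds() {
cat <<'EOF'
scan 'lwztest:t_person', {RAW => true, VERSIONS => 10}
flush 'lwztest:t_person'
major_compact 'lwztest:t_person'
EOF
}

# Only talk to HBase when the launcher is available; otherwise just print the commands.
if command -v hbase >/dev/null 2>&1; then
  raw_cmds | hbase shell -n
else
  raw_cmds
fi
```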

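2. Starting the big data software stack

A big data stack involves many components, so starting and stopping them one by one is tedious. A simple fix is to write one script that brings everything up, and another that shuts everything down. Adapt the scripts to your own environment.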
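The scripts that follow run ZooKeeper on each node by repeating one ssh here-document per host. As a hedged alternative, the same step can be written as a loop. This sketch assumes passwordless ssh as `root` to `node1`, `node2` and `node3` (including from `node1` to itself) and that `zkServer.sh` is on the `PATH` of a login shell on each node. The names `zk_all`, `ZK_NODES`, `REMOTE_USER` and `DRY_RUN` are hypothetical and were introduced here for illustration.

```shell
#!/bin/bash
# Sketch: run one ZooKeeper action on every node instead of repeating the ssh block.
ZK_NODES="node1 node2 node3"   # hosts taken from this article's cluster layout
REMOTE_USER="root"
DRY_RUN="${DRY_RUN:-1}"        # hypothetical switch: 1 only prints the ssh commands

zk_all() {
  local action="$1"            # start | stop | status
  local host
  for host in $ZK_NODES; do
    if [ "$DRY_RUN" = "1" ]; then
      echo "ssh ${REMOTE_USER}@${host} bash -lc 'zkServer.sh ${action}'"
    else
      # bash -lc starts a login shell so that the remote PATH is set up.
      ssh "${REMOTE_USER}@${host}" "bash -lc 'zkServer.sh ${action}'"
    fi
  done
}

zk_all start
```

With the default `DRY_RUN=1` the script only prints what it would run; set `DRY_RUN=0` to execute the commands against the cluster.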

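lwz-start.sh

#!/bin/bash
# Start Hadoop (HDFS and YARN)
echo "hadoop startup..."
start-all.sh

# Start ZooKeeper on this node, then on node2 and node3 over ssh
echo "zookeeper startup..."
zkServer.sh start
ssh root@node2 << eeooff
zkServer.sh start
exit
eeooff
ssh root@node3 << eeooff
zkServer.sh start
exit
eeooff

# Start the Hive metastore and HiveServer2 in the background
echo "hive startup..."
nohup /export/server/hive-3.1.3/bin/hive --service metastore &
nohup /export/server/hive-3.1.3/bin/hive --service hiveserver2 &

# Start HBase
echo "HBase startup..."
start-hbase.sh

echo done!

lwz-stop.sh

#!/bin/bash
# Stop Hadoop (HDFS and YARN)
echo "hadoop stop..."
stop-all.sh

# Stop ZooKeeper on this node, then on node2 and node3 over ssh
echo "zookeeper stop..."
zkServer.sh stop
ssh root@node2 << eeooff
zkServer.sh stop
exit
eeooff
ssh root@node3 << eeooff
zkServer.sh stop
exit
eeooff

# Note: this script only prints a message for Hive; it does not stop the Hive processes.
echo "hive stop..."

# Stop the HBase master and region server on this node
echo "HBase stop..."
hbase-daemon.sh stop master
hbase-daemon.sh stop regionserver

echo done!

3. HBase API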

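Create a Maven project and add the following dependencies to pom.xml.

Note: the build reports that the javax.el package does not exist. It is a test-only dependency, so this does not affect normal use.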

<dependencies>
    <dependency>
        <groupId>org.apache.hbase</groupId>
        <artifactId>hbase-server</artifactId>
        <version>3.0.0-beta-1</version>
        <exclusions>
            <exclusion>
                <groupId>org.glassfish</groupId>
                <artifactId>javax.el</artifactId>
            </exclusion>
        </exclusions>
    </dependency>
    <dependency>
        <groupId>org.apache.hbase</groupId>
        <artifactId>hbase-client</artifactId>
        <version>3.0.0-beta-1</version>
    </dependency>
    <dependency>
        <groupId>org.glassfish</groupId>
        <artifactId>javax.el</artifactId>
        <version>3.0.1-b12</version>
    </dependency>
</dependencies>

Apache HBase (1)

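Remember: your present results were decided by your past, and the effort you make now will pay off in the future.

Only by learning continuously can we keep improving. Practice, and keep honing your skills! Learning stays with us for life.

Though born as small as an ant, aim as high as a swan; though fate is as thin as paper, keep an unyielding heart.

While nothing is settled, you and I are both dark horses; and even if it were settled, who says I cannot turn things around?

Keep working hard. Opportunity always goes to those who are prepared; otherwise, when it comes and you lack the ability, you can only watch it slip away.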

相关文章:

Apache HBase(二)

目录 一、Apache HBase 1、HBase Shell操作 1.1、DDL创建修改表格 1、创建命名空间和表格 2、查看表格 3、修改表 4、删除表 1.2、DML写入读取数据 1、写入数据 2、读取数据 3、删除数据 2、大数据软件启动 一、Apache HBase 1、HBase Shell操作 先启动HBase。再…...

【设计模式】原型模式详解

概述 用一个已经创建的实例作为原型,通过复制该原型对象来创建一个和原型对象相同的新对象 结构 抽象原型类:规定了具体原型对象必须实现的clone()方法具体原型类:实现抽象原型类的clone()方法,它是可以被复制的对象。访问类&…...

企微侧边栏开发(内部应用内嵌H5)

一、背景 公司的业务需要用企业微信和客户进行沟通,而客户的个人信息基本都存储在内部CRM系统中,对于销售来说需要一边看企微,一边去内部CRM系统查询,比较麻烦,希望能在企微增加一个侧边栏展示客户的详细信息…...

如何确定最优的石油管道位置

如何确定最优的石油管道位置 一、前言二、问题概述三、理解问题的几何性质四、转化为数学问题五、寻找最优解六、算法设计6.1伪代码6.2 C代码七算法效率和实际应用7.1时间效率分析7.2 空间效率分析结论一、前言 当我们面对建设大型输油管道的复杂任务时,确保效率和成本效益是…...

FPGA 图像边缘检测(Canny算子)

1 顶层代码 timescale 1ns / 1ps //边缘检测二阶微分算子:canny算子module image_canny_edge_detect (input clk,input reset, //复位高电平有效input [10:0] img_width,input [ 9:0] img_height,input [ 7:0] low_threshold,input [ 7:0] high_threshold,input va…...

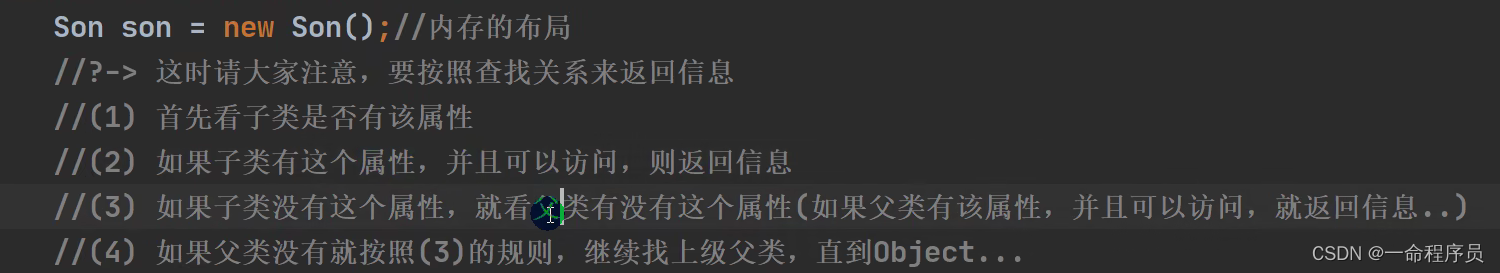

2024.3.28学习笔记

今日学习韩顺平java0200_韩顺平Java_对象机制练习_哔哩哔哩_bilibili 今日学习p286-p294 继承 继承可以解决代码复用,让我们的编程更加靠近人类思维,当多个类存在相同的属性和方法时,可以从这些类中抽象出父类,在父类中定义这些…...

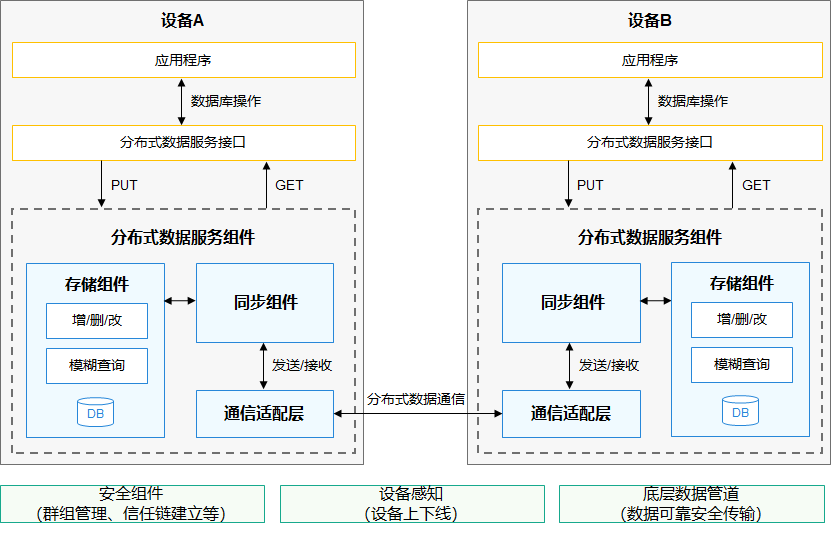

33.HarmonyOS App(JAVA)鸿蒙系统app数据库增删改查

33.HarmonyOS App(JAVA)鸿蒙系统app数据库增删改查 关系数据库 关系对象数据库(ORM) 应用偏好数据库 分布式数据库 关系型数据库(Relational Database,RDB)是一种基于关系模型来管理数据的数据库。HarmonyOS关系型…...

寄主机显示器被快递搞坏了怎么办?怎么破?

大家好,我是平泽裕也。 最近,我在社区里看到很多关于开学后弟弟寄来的电脑显示器被快递损坏的帖子。 看到它真的让我感到难过。 如果有人的数码产品被快递损坏了,我会伤心很久。 那么今天就跟大家聊聊寄快递的一些小技巧。 作为一名曾经的…...

python爬虫-bs4

python爬虫-bs4 目录 python爬虫-bs4说明安装导入 基础用法解析对象获取文本Tag对象获取HTML中的标签内容find参数获取标签属性获取所有标签获取标签名嵌套获取子节点和父节点 说明 BeautifulSoup 是一个HTML/XML的解析器,主要的功能也是如何解析和提取 HTML/XML 数…...

SpringBoot学习之ElasticSearch下载安装和启动(Mac版)(三十一)

本篇是接上一篇Windows版本,需要Windows版本的请看上一篇,这里我们继续把Elasticsearch简称为ES,以下都是这样。 一、下载 登录Elasticsearch官网,地址是:Download Elasticsearch | Elastic 进入以后,网页会自动识别系统给你提示Mac版本的下载链接按钮 二、安装 下载…...

OC对象 - Block解决循环引用

文章目录 OC对象 - Block解决循环引用前言1. 循环引用示例1.1 分析 2. 解决思路3. ARC下3.1 __weak3.2 __unsafe_unretained3.3 __block 4. MRC下4.1 __unsafe_unretain....4.1 __block 5. 总结5.1 ARC下5.2 MRC下 OC对象 - Block解决循环引用 前言 本章将会通过一个循环引用…...

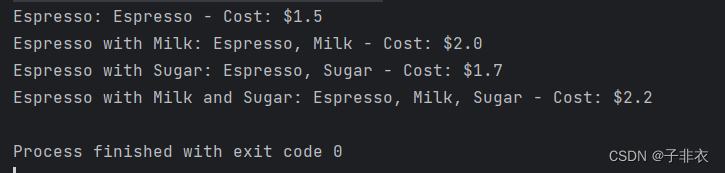

Java设计模式之装饰器模式

装饰器模式是一种结构型设计模式,它允许动态地将责任附加到对象上。装饰器模式是通过创建一个包装对象,也就是装饰器,来包裹真实对象,从而实现对真实对象的功能增强。装饰器模式可以在不修改原有对象的情况下,动态地添…...

)

Java基础知识总结(25)

代理模式 什么是代理模式? 代理模式是指,为其他对象提供一种代理以控制这个对象的访问。一个对象不适合或者不能直接引用另一个对象,而代理对象可以在客户和目标对象之间起到中介的作用。换句话说,代理模式,是在不修…...

Vue3 实现基于token 用户登录

前后端分离情况下,实现的大致思路 1 第一次登录的时候,前端调用后端的登录接口,发送用户名与密码 2 后端收到请求,验证用户名和密码,验证成功 给前端返回一个token 3 前段拿到token 将token 存储进localStorage 和…...

在word中显示Euclid Math One公式的问题及解决(latex公式,无需插件)

问题:想要在word中显示形如latex中的花体字母 网上大多解决办法是安装Euclid Math One。安装后发现单独的符号插入可行,但是公式中选择该字体时依然显示默认字体。 解决办法:插入公式后,勾选左上角的latex 在公式块中键入latex代码…...

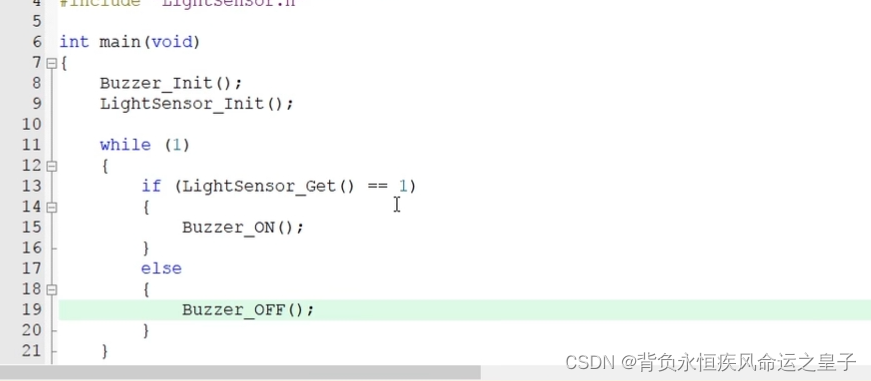

江协科技STM32:按键控制LED光敏传感器控制蜂鸣器

按键控制LED LED模块 左上角PA0用上拉输入模式,如果此时引脚悬空,PA0就是高电平,这种方式下,按下按键,引脚为低电平,松下按键,引脚为高电平 右上角PA0,把上拉电阻想象成弹簧 当按键…...

最佳矢量绘图设计软件Sketch for Mac v99.5 最新中文激活版

Sketch for Mac是一款功能强大的矢量绘图软件,它提供了简单易用的界面和丰富的工具,让用户能够轻松创建精美的设计作品。 软件下载:Sketch for Mac v99.5 最新中文激活版 Sketch具有直观的布局和智能的工具,使得设计师能够快速实现…...

【IntelliJ IDEA】运行测试报错解决方案(附图)

IntelliJ IDEA 版本 2023.3.4 (Ultimate Edition) 测试报错信息 命令行过长。 通过 JAR 清单或通过类路径文件缩短命令行,然后重新运行 解决方案 修改运行配置,里面如果没有缩短命令行,需要再修改选项里面勾选缩短命令行让其显示&#x…...

【Kotlin】List、Set、Map简介

1 List Java 的 List、Set、Map 介绍见 → Java容器及其常用方法汇总。 1.1 创建 List 1.1.1 emptyList var list emptyList<String>() // 创建空List 1.1.2 List 构造函数 var list1 List(3) { "abc" } // [abc, abc, abc] var list2 ArrayList<In…...

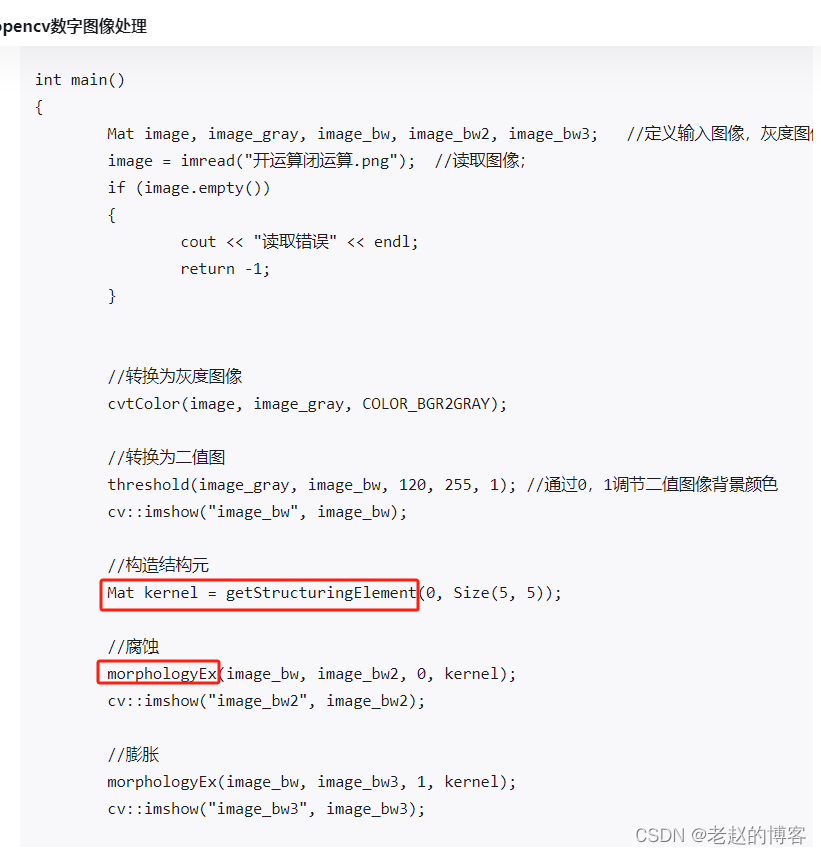

OpenCV 形态学处理函数

四、形态学处理(膨胀,腐蚀,开闭运算)_getstructuringelement()函数作用-CSDN博客 数字图像处理(c opencv):形态学图像处理-morphologyEx函数实现腐蚀膨胀、开闭运算、击中-击不中变换、形态学梯度、顶帽黑帽变换 - 知乎…...

详解)

后进先出(LIFO)详解

LIFO 是 Last In, First Out 的缩写,中文译为后进先出。这是一种数据结构的工作原则,类似于一摞盘子或一叠书本: 最后放进去的元素最先出来 -想象往筒状容器里放盘子: (1)你放进的最后一个盘子(…...

.Net框架,除了EF还有很多很多......

文章目录 1. 引言2. Dapper2.1 概述与设计原理2.2 核心功能与代码示例基本查询多映射查询存储过程调用 2.3 性能优化原理2.4 适用场景 3. NHibernate3.1 概述与架构设计3.2 映射配置示例Fluent映射XML映射 3.3 查询示例HQL查询Criteria APILINQ提供程序 3.4 高级特性3.5 适用场…...

生成 Git SSH 证书

🔑 1. 生成 SSH 密钥对 在终端(Windows 使用 Git Bash,Mac/Linux 使用 Terminal)执行命令: ssh-keygen -t rsa -b 4096 -C "your_emailexample.com" 参数说明: -t rsa&#x…...

工业自动化时代的精准装配革新:迁移科技3D视觉系统如何重塑机器人定位装配

AI3D视觉的工业赋能者 迁移科技成立于2017年,作为行业领先的3D工业相机及视觉系统供应商,累计完成数亿元融资。其核心技术覆盖硬件设计、算法优化及软件集成,通过稳定、易用、高回报的AI3D视觉系统,为汽车、新能源、金属制造等行…...

重启Eureka集群中的节点,对已经注册的服务有什么影响

先看答案,如果正确地操作,重启Eureka集群中的节点,对已经注册的服务影响非常小,甚至可以做到无感知。 但如果操作不当,可能会引发短暂的服务发现问题。 下面我们从Eureka的核心工作原理来详细分析这个问题。 Eureka的…...

作为测试我们应该关注redis哪些方面

1、功能测试 数据结构操作:验证字符串、列表、哈希、集合和有序的基本操作是否正确 持久化:测试aof和aof持久化机制,确保数据在开启后正确恢复。 事务:检查事务的原子性和回滚机制。 发布订阅:确保消息正确传递。 2、性…...

React父子组件通信:Props怎么用?如何从父组件向子组件传递数据?

系列回顾: 在上一篇《React核心概念:State是什么?》中,我们学习了如何使用useState让一个组件拥有自己的内部数据(State),并通过一个计数器案例,实现了组件的自我更新。这很棒&#…...

:LSM Tree 概述)

从零手写Java版本的LSM Tree (一):LSM Tree 概述

🔥 推荐一个高质量的Java LSM Tree开源项目! https://github.com/brianxiadong/java-lsm-tree java-lsm-tree 是一个从零实现的Log-Structured Merge Tree,专为高并发写入场景设计。 核心亮点: ⚡ 极致性能:写入速度超…...

FOPLP vs CoWoS

以下是 FOPLP(Fan-out panel-level packaging 扇出型面板级封装)与 CoWoS(Chip on Wafer on Substrate)两种先进封装技术的详细对比分析,涵盖技术原理、性能、成本、应用场景及市场趋势等维度: 一、技术原…...

Appium下载安装配置保姆教程(图文详解)

目录 一、Appium软件介绍 1.特点 2.工作原理 3.应用场景 二、环境准备 安装 Node.js 安装 Appium 安装 JDK 安装 Android SDK 安装Python及依赖包 三、安装教程 1.Node.js安装 1.1.下载Node 1.2.安装程序 1.3.配置npm仓储和缓存 1.4. 配置环境 1.5.测试Node.j…...