深度学习Day-20:DenseNet算法实战 乳腺癌识别

🍨 本文为:[🔗365天深度学习训练营] 中的学习记录博客

🍖 原作者:[K同学啊 | 接辅导、项目定制]

一、 基础配置

- 语言环境:Python3.8

- 编译器选择:Pycharm

- 深度学习环境:

-

- torch==1.12.1+cu113

- torchvision==0.13.1+cu113

二、 前期准备

1.设置GPU

import torch

import torch.nn as nn

from torchvision import transforms,datasets

import pathlib,warningswarnings.filterwarnings("ignore")device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')2. 导入数据

本项目所采用的数据集未收录于公开数据中,故需要自己在文件目录中导入相应数据集合,并设置对应文件目录,以供后续学习过程中使用。

运行下述代码:

data_dir = "./data/J3-data"

data_dir = pathlib.Path(data_dir)data_path = list(data_dir.glob('*'))

classNames = [str(path).split('\\')[2] for path in data_path]

print(classNames)

得到如下输出:

['0', '1']接下来,我们通过transforms.Compose对整个数据集进行预处理:

train_transforms = transforms.Compose([transforms.Resize([224, 224]), # 将输入图片resize成统一尺寸# transforms.RandomHorizontalFlip(), # 随机水平翻转transforms.ToTensor(), # 将PIL Image或numpy.ndarray转换为tensor,并归一化到[0,1]之间transforms.Normalize( # 标准化处理-->转换为标准正太分布(高斯分布),使模型更容易收敛mean=[0.485, 0.456, 0.406],std=[0.229, 0.224, 0.225]) # 其中 mean=[0.485,0.456,0.406]与std=[0.229,0.224,0.225] 从数据集中随机抽样计算得到的。

])total_dataset = datasets.ImageFolder(data_dir,transform=transforms)

print(total_dataset.class_to_idx)

得到如下输出:

{'0': 0, '1': 1}3. 划分数据集

此处数据集需要做按比例划分的操作:

train_size = int(0.8*len(total_dataset))

test_size = len(total_dataset) - train_size

train_dataset,test_dataset = torch.utils.data.random_split(total_dataset,[train_size,test_size])接下来,根据划分得到的训练集和验证集对数据集进行包装:

batch_size = 32train_dl = torch.utils.data.DataLoader(train_dataset,batch_size = batch_size,shuffle = True,num_workers = 0)test_dl = torch.utils.data.DataLoader(test_dataset,batch_size = batch_size,shuffle = True,num_workers = 0)并通过:

for X,y in test_dl:print('Shape of X:',X.shape)print('shape of y:',y.shape,y.dtype)break输出测试数据集的数据分布情况:

Shape of X: torch.Size([32, 3, 224, 224])

shape of y: torch.Size([32]) torch.int644.搭建模型

首先,导入搭建模型所依赖的库用于后续模型的搭建过程:

import torch.nn.functional as F

from collections import OrderedDict1.DenseLayer

class DenseLayer(nn.Sequential):def __init__(self, in_channel, growth_rate, bn_size, drop_rate):super(DenseLayer, self).__init__()self.add_module('norm1', nn.BatchNorm2d(in_channel))self.add_module('relu1', nn.ReLU(inplace=True))self.add_module('conv1', nn.Conv2d(in_channel, bn_size * growth_rate, kernel_size=1, stride=1))self.add_module('norm2', nn.BatchNorm2d(bn_size * growth_rate))self.add_module('relu2', nn.ReLU(inplace=True))self.add_module('conv2', nn.Conv2d(bn_size * growth_rate, growth_rate, kernel_size=3, stride=1, padding=1))self.drop_rate = drop_ratedef forward(self, x):new_feature = super(DenseLayer, self).forward(x)if self.drop_rate > 0:new_feature = F.dropout(new_feature, p=self.drop_rate, training=self.training)return torch.cat([x, new_feature], 1)2.DenseBlock

class DenseBlock(nn.Sequential):def __init__(self, num_layers, in_channel, bn_size, growth_rate, drop_rate):super(DenseBlock, self).__init__()for i in range(num_layers):layer = DenseLayer(in_channel + i * growth_rate, growth_rate, bn_size, drop_rate)self.add_module('denselayer%d' % (i + 1,), layer)3.Transition

class Transition(nn.Sequential):def __init__(self, in_channel, out_channel):super(Transition, self).__init__()self.add_module('norm', nn.BatchNorm2d(in_channel))self.add_module('relu', nn.ReLU(inplace=True))self.add_module('conv', nn.Conv2d(in_channel, out_channel, kernel_size=1, stride=1))self.add_module('pool', nn.AvgPool2d(kernel_size=2, stride=2))4.搭建DenseNet

class DenseNet(nn.Module):def __init__(self, growth_rate=32, block_config=(6, 12, 24, 16), init_channel=64, bn_size=4,compression_rate=0.5, drop_rate=0, num_classes=1000):super(DenseNet, self).__init__()self.features = nn.Sequential(OrderedDict([('conv0', nn.Conv2d(3, init_channel, kernel_size=7, stride=2, padding=3)),('norm0', nn.BatchNorm2d(init_channel)),('relu0', nn.ReLU(inplace=True)),('pool0', nn.MaxPool2d(kernel_size=3, stride=2, padding=1))]))num_features = init_channelfor i, num_layers in enumerate(block_config):block = DenseBlock(num_layers, num_features, bn_size=bn_size, growth_rate=growth_rate, drop_rate=drop_rate)self.features.add_module('denseblock%d' % (i + 1), block)num_features += num_layers * growth_rateif i != len(block_config) - 1:transition = Transition(num_features, int(num_features * compression_rate))self.features.add_module('transition%d' % (i + 1), transition)num_features = int(num_features * compression_rate)self.features.add_module('norm5', nn.BatchNorm2d(num_features))self.features.add_module('relu5', nn.ReLU(inplace=True))self.classifier = nn.Linear(num_features, num_classes)for m in self.modules():if isinstance(m, nn.Conv2d):nn.init.kaiming_normal_(m.weight)elif isinstance(m, nn.BatchNorm2d):nn.init.constant_(m.bias, 0)nn.init.constant_(m.weight, 1)elif isinstance(m, nn.Linear):nn.init.constant_(m.bias, 0)def forward(self, x):x = self.features(x)x = F.avg_pool2d(x, 7, stride=1).view(x.size(0), -1)x = self.classifier(x)return x5.利用DenseNet搭建DenseNet121

densenet121 = DenseNet(init_channel=64,growth_rate=32,block_config=(6,12,24,16),num_classes=len(classNames))

model = densenet121.to(device)

2.查看模型信息

import torchsummary as summary

summary.summary(model, (3, 224, 224))得到如下输出:

----------------------------------------------------------------Layer (type) Output Shape Param #

================================================================Conv2d-1 [-1, 64, 112, 112] 9,472BatchNorm2d-2 [-1, 64, 112, 112] 128ReLU-3 [-1, 64, 112, 112] 0MaxPool2d-4 [-1, 64, 56, 56] 0BatchNorm2d-5 [-1, 64, 56, 56] 128ReLU-6 [-1, 64, 56, 56] 0Conv2d-7 [-1, 128, 56, 56] 8,320BatchNorm2d-8 [-1, 128, 56, 56] 256ReLU-9 [-1, 128, 56, 56] 0Conv2d-10 [-1, 32, 56, 56] 36,896BatchNorm2d-11 [-1, 96, 56, 56] 192ReLU-12 [-1, 96, 56, 56] 0Conv2d-13 [-1, 128, 56, 56] 12,416BatchNorm2d-14 [-1, 128, 56, 56] 256ReLU-15 [-1, 128, 56, 56] 0Conv2d-16 [-1, 32, 56, 56] 36,896BatchNorm2d-17 [-1, 128, 56, 56] 256ReLU-18 [-1, 128, 56, 56] 0Conv2d-19 [-1, 128, 56, 56] 16,512BatchNorm2d-20 [-1, 128, 56, 56] 256ReLU-21 [-1, 128, 56, 56] 0Conv2d-22 [-1, 32, 56, 56] 36,896BatchNorm2d-23 [-1, 160, 56, 56] 320ReLU-24 [-1, 160, 56, 56] 0Conv2d-25 [-1, 128, 56, 56] 20,608BatchNorm2d-26 [-1, 128, 56, 56] 256ReLU-27 [-1, 128, 56, 56] 0Conv2d-28 [-1, 32, 56, 56] 36,896BatchNorm2d-29 [-1, 192, 56, 56] 384ReLU-30 [-1, 192, 56, 56] 0Conv2d-31 [-1, 128, 56, 56] 24,704BatchNorm2d-32 [-1, 128, 56, 56] 256ReLU-33 [-1, 128, 56, 56] 0Conv2d-34 [-1, 32, 56, 56] 36,896BatchNorm2d-35 [-1, 224, 56, 56] 448ReLU-36 [-1, 224, 56, 56] 0Conv2d-37 [-1, 128, 56, 56] 28,800BatchNorm2d-38 [-1, 128, 56, 56] 256ReLU-39 [-1, 128, 56, 56] 0Conv2d-40 [-1, 32, 56, 56] 36,896BatchNorm2d-41 [-1, 256, 56, 56] 512ReLU-42 [-1, 256, 56, 56] 0Conv2d-43 [-1, 128, 56, 56] 32,896AvgPool2d-44 [-1, 128, 28, 28] 0BatchNorm2d-45 [-1, 128, 28, 28] 256ReLU-46 [-1, 128, 28, 28] 0Conv2d-47 [-1, 128, 28, 28] 16,512BatchNorm2d-48 [-1, 128, 28, 28] 256ReLU-49 [-1, 128, 28, 28] 0Conv2d-50 [-1, 32, 28, 28] 36,896BatchNorm2d-51 [-1, 160, 28, 28] 320ReLU-52 [-1, 160, 28, 28] 0Conv2d-53 [-1, 128, 28, 28] 20,608BatchNorm2d-54 [-1, 128, 28, 28] 256ReLU-55 [-1, 128, 28, 28] 0Conv2d-56 [-1, 32, 28, 28] 36,896BatchNorm2d-57 [-1, 192, 28, 28] 384ReLU-58 [-1, 192, 28, 28] 0Conv2d-59 [-1, 128, 28, 28] 24,704BatchNorm2d-60 [-1, 128, 28, 28] 256ReLU-61 [-1, 128, 28, 28] 0Conv2d-62 [-1, 32, 28, 28] 36,896BatchNorm2d-63 [-1, 224, 28, 28] 448ReLU-64 [-1, 224, 28, 28] 0Conv2d-65 [-1, 128, 28, 28] 28,800BatchNorm2d-66 [-1, 128, 28, 28] 256ReLU-67 [-1, 128, 28, 28] 0Conv2d-68 [-1, 32, 28, 28] 36,896BatchNorm2d-69 [-1, 256, 28, 28] 512ReLU-70 [-1, 256, 28, 28] 0Conv2d-71 [-1, 128, 28, 28] 32,896BatchNorm2d-72 [-1, 128, 28, 28] 256ReLU-73 [-1, 128, 28, 28] 0Conv2d-74 [-1, 32, 28, 28] 36,896BatchNorm2d-75 [-1, 288, 28, 28] 576ReLU-76 [-1, 288, 28, 28] 0Conv2d-77 [-1, 128, 28, 28] 36,992BatchNorm2d-78 [-1, 128, 28, 28] 256ReLU-79 [-1, 128, 28, 28] 0Conv2d-80 [-1, 32, 28, 28] 36,896BatchNorm2d-81 [-1, 320, 28, 28] 640ReLU-82 [-1, 320, 28, 28] 0Conv2d-83 [-1, 128, 28, 28] 41,088BatchNorm2d-84 [-1, 128, 28, 28] 256ReLU-85 [-1, 128, 28, 28] 0Conv2d-86 [-1, 32, 28, 28] 36,896BatchNorm2d-87 [-1, 352, 28, 28] 704ReLU-88 [-1, 352, 28, 28] 0Conv2d-89 [-1, 128, 28, 28] 45,184BatchNorm2d-90 [-1, 128, 28, 28] 256ReLU-91 [-1, 128, 28, 28] 0Conv2d-92 [-1, 32, 28, 28] 36,896BatchNorm2d-93 [-1, 384, 28, 28] 768ReLU-94 [-1, 384, 28, 28] 0Conv2d-95 [-1, 128, 28, 28] 49,280BatchNorm2d-96 [-1, 128, 28, 28] 256ReLU-97 [-1, 128, 28, 28] 0Conv2d-98 [-1, 32, 28, 28] 36,896BatchNorm2d-99 [-1, 416, 28, 28] 832ReLU-100 [-1, 416, 28, 28] 0Conv2d-101 [-1, 128, 28, 28] 53,376BatchNorm2d-102 [-1, 128, 28, 28] 256ReLU-103 [-1, 128, 28, 28] 0Conv2d-104 [-1, 32, 28, 28] 36,896BatchNorm2d-105 [-1, 448, 28, 28] 896ReLU-106 [-1, 448, 28, 28] 0Conv2d-107 [-1, 128, 28, 28] 57,472BatchNorm2d-108 [-1, 128, 28, 28] 256ReLU-109 [-1, 128, 28, 28] 0Conv2d-110 [-1, 32, 28, 28] 36,896BatchNorm2d-111 [-1, 480, 28, 28] 960ReLU-112 [-1, 480, 28, 28] 0Conv2d-113 [-1, 128, 28, 28] 61,568BatchNorm2d-114 [-1, 128, 28, 28] 256ReLU-115 [-1, 128, 28, 28] 0Conv2d-116 [-1, 32, 28, 28] 36,896BatchNorm2d-117 [-1, 512, 28, 28] 1,024ReLU-118 [-1, 512, 28, 28] 0Conv2d-119 [-1, 256, 28, 28] 131,328AvgPool2d-120 [-1, 256, 14, 14] 0BatchNorm2d-121 [-1, 256, 14, 14] 512ReLU-122 [-1, 256, 14, 14] 0Conv2d-123 [-1, 128, 14, 14] 32,896BatchNorm2d-124 [-1, 128, 14, 14] 256ReLU-125 [-1, 128, 14, 14] 0Conv2d-126 [-1, 32, 14, 14] 36,896BatchNorm2d-127 [-1, 288, 14, 14] 576ReLU-128 [-1, 288, 14, 14] 0Conv2d-129 [-1, 128, 14, 14] 36,992BatchNorm2d-130 [-1, 128, 14, 14] 256ReLU-131 [-1, 128, 14, 14] 0Conv2d-132 [-1, 32, 14, 14] 36,896BatchNorm2d-133 [-1, 320, 14, 14] 640ReLU-134 [-1, 320, 14, 14] 0Conv2d-135 [-1, 128, 14, 14] 41,088BatchNorm2d-136 [-1, 128, 14, 14] 256ReLU-137 [-1, 128, 14, 14] 0Conv2d-138 [-1, 32, 14, 14] 36,896BatchNorm2d-139 [-1, 352, 14, 14] 704ReLU-140 [-1, 352, 14, 14] 0Conv2d-141 [-1, 128, 14, 14] 45,184BatchNorm2d-142 [-1, 128, 14, 14] 256ReLU-143 [-1, 128, 14, 14] 0Conv2d-144 [-1, 32, 14, 14] 36,896BatchNorm2d-145 [-1, 384, 14, 14] 768ReLU-146 [-1, 384, 14, 14] 0Conv2d-147 [-1, 128, 14, 14] 49,280BatchNorm2d-148 [-1, 128, 14, 14] 256ReLU-149 [-1, 128, 14, 14] 0Conv2d-150 [-1, 32, 14, 14] 36,896BatchNorm2d-151 [-1, 416, 14, 14] 832ReLU-152 [-1, 416, 14, 14] 0Conv2d-153 [-1, 128, 14, 14] 53,376BatchNorm2d-154 [-1, 128, 14, 14] 256ReLU-155 [-1, 128, 14, 14] 0Conv2d-156 [-1, 32, 14, 14] 36,896BatchNorm2d-157 [-1, 448, 14, 14] 896ReLU-158 [-1, 448, 14, 14] 0Conv2d-159 [-1, 128, 14, 14] 57,472BatchNorm2d-160 [-1, 128, 14, 14] 256ReLU-161 [-1, 128, 14, 14] 0Conv2d-162 [-1, 32, 14, 14] 36,896BatchNorm2d-163 [-1, 480, 14, 14] 960ReLU-164 [-1, 480, 14, 14] 0Conv2d-165 [-1, 128, 14, 14] 61,568BatchNorm2d-166 [-1, 128, 14, 14] 256ReLU-167 [-1, 128, 14, 14] 0Conv2d-168 [-1, 32, 14, 14] 36,896BatchNorm2d-169 [-1, 512, 14, 14] 1,024ReLU-170 [-1, 512, 14, 14] 0Conv2d-171 [-1, 128, 14, 14] 65,664BatchNorm2d-172 [-1, 128, 14, 14] 256ReLU-173 [-1, 128, 14, 14] 0Conv2d-174 [-1, 32, 14, 14] 36,896BatchNorm2d-175 [-1, 544, 14, 14] 1,088ReLU-176 [-1, 544, 14, 14] 0Conv2d-177 [-1, 128, 14, 14] 69,760BatchNorm2d-178 [-1, 128, 14, 14] 256ReLU-179 [-1, 128, 14, 14] 0Conv2d-180 [-1, 32, 14, 14] 36,896BatchNorm2d-181 [-1, 576, 14, 14] 1,152ReLU-182 [-1, 576, 14, 14] 0Conv2d-183 [-1, 128, 14, 14] 73,856BatchNorm2d-184 [-1, 128, 14, 14] 256ReLU-185 [-1, 128, 14, 14] 0Conv2d-186 [-1, 32, 14, 14] 36,896BatchNorm2d-187 [-1, 608, 14, 14] 1,216ReLU-188 [-1, 608, 14, 14] 0Conv2d-189 [-1, 128, 14, 14] 77,952BatchNorm2d-190 [-1, 128, 14, 14] 256ReLU-191 [-1, 128, 14, 14] 0Conv2d-192 [-1, 32, 14, 14] 36,896BatchNorm2d-193 [-1, 640, 14, 14] 1,280ReLU-194 [-1, 640, 14, 14] 0Conv2d-195 [-1, 128, 14, 14] 82,048BatchNorm2d-196 [-1, 128, 14, 14] 256ReLU-197 [-1, 128, 14, 14] 0Conv2d-198 [-1, 32, 14, 14] 36,896BatchNorm2d-199 [-1, 672, 14, 14] 1,344ReLU-200 [-1, 672, 14, 14] 0Conv2d-201 [-1, 128, 14, 14] 86,144BatchNorm2d-202 [-1, 128, 14, 14] 256ReLU-203 [-1, 128, 14, 14] 0Conv2d-204 [-1, 32, 14, 14] 36,896BatchNorm2d-205 [-1, 704, 14, 14] 1,408ReLU-206 [-1, 704, 14, 14] 0Conv2d-207 [-1, 128, 14, 14] 90,240BatchNorm2d-208 [-1, 128, 14, 14] 256ReLU-209 [-1, 128, 14, 14] 0Conv2d-210 [-1, 32, 14, 14] 36,896BatchNorm2d-211 [-1, 736, 14, 14] 1,472ReLU-212 [-1, 736, 14, 14] 0Conv2d-213 [-1, 128, 14, 14] 94,336BatchNorm2d-214 [-1, 128, 14, 14] 256ReLU-215 [-1, 128, 14, 14] 0Conv2d-216 [-1, 32, 14, 14] 36,896BatchNorm2d-217 [-1, 768, 14, 14] 1,536ReLU-218 [-1, 768, 14, 14] 0Conv2d-219 [-1, 128, 14, 14] 98,432BatchNorm2d-220 [-1, 128, 14, 14] 256ReLU-221 [-1, 128, 14, 14] 0Conv2d-222 [-1, 32, 14, 14] 36,896BatchNorm2d-223 [-1, 800, 14, 14] 1,600ReLU-224 [-1, 800, 14, 14] 0Conv2d-225 [-1, 128, 14, 14] 102,528BatchNorm2d-226 [-1, 128, 14, 14] 256ReLU-227 [-1, 128, 14, 14] 0Conv2d-228 [-1, 32, 14, 14] 36,896BatchNorm2d-229 [-1, 832, 14, 14] 1,664ReLU-230 [-1, 832, 14, 14] 0Conv2d-231 [-1, 128, 14, 14] 106,624BatchNorm2d-232 [-1, 128, 14, 14] 256ReLU-233 [-1, 128, 14, 14] 0Conv2d-234 [-1, 32, 14, 14] 36,896BatchNorm2d-235 [-1, 864, 14, 14] 1,728ReLU-236 [-1, 864, 14, 14] 0Conv2d-237 [-1, 128, 14, 14] 110,720BatchNorm2d-238 [-1, 128, 14, 14] 256ReLU-239 [-1, 128, 14, 14] 0Conv2d-240 [-1, 32, 14, 14] 36,896BatchNorm2d-241 [-1, 896, 14, 14] 1,792ReLU-242 [-1, 896, 14, 14] 0Conv2d-243 [-1, 128, 14, 14] 114,816BatchNorm2d-244 [-1, 128, 14, 14] 256ReLU-245 [-1, 128, 14, 14] 0Conv2d-246 [-1, 32, 14, 14] 36,896BatchNorm2d-247 [-1, 928, 14, 14] 1,856ReLU-248 [-1, 928, 14, 14] 0Conv2d-249 [-1, 128, 14, 14] 118,912BatchNorm2d-250 [-1, 128, 14, 14] 256ReLU-251 [-1, 128, 14, 14] 0Conv2d-252 [-1, 32, 14, 14] 36,896BatchNorm2d-253 [-1, 960, 14, 14] 1,920ReLU-254 [-1, 960, 14, 14] 0Conv2d-255 [-1, 128, 14, 14] 123,008BatchNorm2d-256 [-1, 128, 14, 14] 256ReLU-257 [-1, 128, 14, 14] 0Conv2d-258 [-1, 32, 14, 14] 36,896BatchNorm2d-259 [-1, 992, 14, 14] 1,984ReLU-260 [-1, 992, 14, 14] 0Conv2d-261 [-1, 128, 14, 14] 127,104BatchNorm2d-262 [-1, 128, 14, 14] 256ReLU-263 [-1, 128, 14, 14] 0Conv2d-264 [-1, 32, 14, 14] 36,896BatchNorm2d-265 [-1, 1024, 14, 14] 2,048ReLU-266 [-1, 1024, 14, 14] 0Conv2d-267 [-1, 512, 14, 14] 524,800AvgPool2d-268 [-1, 512, 7, 7] 0BatchNorm2d-269 [-1, 512, 7, 7] 1,024ReLU-270 [-1, 512, 7, 7] 0Conv2d-271 [-1, 128, 7, 7] 65,664BatchNorm2d-272 [-1, 128, 7, 7] 256ReLU-273 [-1, 128, 7, 7] 0Conv2d-274 [-1, 32, 7, 7] 36,896BatchNorm2d-275 [-1, 544, 7, 7] 1,088ReLU-276 [-1, 544, 7, 7] 0Conv2d-277 [-1, 128, 7, 7] 69,760BatchNorm2d-278 [-1, 128, 7, 7] 256ReLU-279 [-1, 128, 7, 7] 0Conv2d-280 [-1, 32, 7, 7] 36,896BatchNorm2d-281 [-1, 576, 7, 7] 1,152ReLU-282 [-1, 576, 7, 7] 0Conv2d-283 [-1, 128, 7, 7] 73,856BatchNorm2d-284 [-1, 128, 7, 7] 256ReLU-285 [-1, 128, 7, 7] 0Conv2d-286 [-1, 32, 7, 7] 36,896BatchNorm2d-287 [-1, 608, 7, 7] 1,216ReLU-288 [-1, 608, 7, 7] 0Conv2d-289 [-1, 128, 7, 7] 77,952BatchNorm2d-290 [-1, 128, 7, 7] 256ReLU-291 [-1, 128, 7, 7] 0Conv2d-292 [-1, 32, 7, 7] 36,896BatchNorm2d-293 [-1, 640, 7, 7] 1,280ReLU-294 [-1, 640, 7, 7] 0Conv2d-295 [-1, 128, 7, 7] 82,048BatchNorm2d-296 [-1, 128, 7, 7] 256ReLU-297 [-1, 128, 7, 7] 0Conv2d-298 [-1, 32, 7, 7] 36,896BatchNorm2d-299 [-1, 672, 7, 7] 1,344ReLU-300 [-1, 672, 7, 7] 0Conv2d-301 [-1, 128, 7, 7] 86,144BatchNorm2d-302 [-1, 128, 7, 7] 256ReLU-303 [-1, 128, 7, 7] 0Conv2d-304 [-1, 32, 7, 7] 36,896BatchNorm2d-305 [-1, 704, 7, 7] 1,408ReLU-306 [-1, 704, 7, 7] 0Conv2d-307 [-1, 128, 7, 7] 90,240BatchNorm2d-308 [-1, 128, 7, 7] 256ReLU-309 [-1, 128, 7, 7] 0Conv2d-310 [-1, 32, 7, 7] 36,896BatchNorm2d-311 [-1, 736, 7, 7] 1,472ReLU-312 [-1, 736, 7, 7] 0Conv2d-313 [-1, 128, 7, 7] 94,336BatchNorm2d-314 [-1, 128, 7, 7] 256ReLU-315 [-1, 128, 7, 7] 0Conv2d-316 [-1, 32, 7, 7] 36,896BatchNorm2d-317 [-1, 768, 7, 7] 1,536ReLU-318 [-1, 768, 7, 7] 0Conv2d-319 [-1, 128, 7, 7] 98,432BatchNorm2d-320 [-1, 128, 7, 7] 256ReLU-321 [-1, 128, 7, 7] 0Conv2d-322 [-1, 32, 7, 7] 36,896BatchNorm2d-323 [-1, 800, 7, 7] 1,600ReLU-324 [-1, 800, 7, 7] 0Conv2d-325 [-1, 128, 7, 7] 102,528BatchNorm2d-326 [-1, 128, 7, 7] 256ReLU-327 [-1, 128, 7, 7] 0Conv2d-328 [-1, 32, 7, 7] 36,896BatchNorm2d-329 [-1, 832, 7, 7] 1,664ReLU-330 [-1, 832, 7, 7] 0Conv2d-331 [-1, 128, 7, 7] 106,624BatchNorm2d-332 [-1, 128, 7, 7] 256ReLU-333 [-1, 128, 7, 7] 0Conv2d-334 [-1, 32, 7, 7] 36,896BatchNorm2d-335 [-1, 864, 7, 7] 1,728ReLU-336 [-1, 864, 7, 7] 0Conv2d-337 [-1, 128, 7, 7] 110,720BatchNorm2d-338 [-1, 128, 7, 7] 256ReLU-339 [-1, 128, 7, 7] 0Conv2d-340 [-1, 32, 7, 7] 36,896BatchNorm2d-341 [-1, 896, 7, 7] 1,792ReLU-342 [-1, 896, 7, 7] 0Conv2d-343 [-1, 128, 7, 7] 114,816BatchNorm2d-344 [-1, 128, 7, 7] 256ReLU-345 [-1, 128, 7, 7] 0Conv2d-346 [-1, 32, 7, 7] 36,896BatchNorm2d-347 [-1, 928, 7, 7] 1,856ReLU-348 [-1, 928, 7, 7] 0Conv2d-349 [-1, 128, 7, 7] 118,912BatchNorm2d-350 [-1, 128, 7, 7] 256ReLU-351 [-1, 128, 7, 7] 0Conv2d-352 [-1, 32, 7, 7] 36,896BatchNorm2d-353 [-1, 960, 7, 7] 1,920ReLU-354 [-1, 960, 7, 7] 0Conv2d-355 [-1, 128, 7, 7] 123,008BatchNorm2d-356 [-1, 128, 7, 7] 256ReLU-357 [-1, 128, 7, 7] 0Conv2d-358 [-1, 32, 7, 7] 36,896BatchNorm2d-359 [-1, 992, 7, 7] 1,984ReLU-360 [-1, 992, 7, 7] 0Conv2d-361 [-1, 128, 7, 7] 127,104BatchNorm2d-362 [-1, 128, 7, 7] 256ReLU-363 [-1, 128, 7, 7] 0Conv2d-364 [-1, 32, 7, 7] 36,896BatchNorm2d-365 [-1, 1024, 7, 7] 2,048ReLU-366 [-1, 1024, 7, 7] 0Linear-367 [-1, 2] 2,050

================================================================

Total params: 6,966,146

Trainable params: 6,966,146

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.57

Forward/backward pass size (MB): 294.57

Params size (MB): 26.57

Estimated Total Size (MB): 321.72

----------------------------------------------------------------三、 训练模型

1. 编写训练函数

def train(dataloader,model,optimizer,loss_fn):size = len(dataloader.dataset)num_batches = len(dataloader)train_acc,train_loss = 0,0for X,y in dataloader:X,y = X.to(device),y.to(device)pred = model(X)loss = loss_fn(pred,y)optimizer.zero_grad()loss.backward()optimizer.step()train_loss += loss.item()train_acc += (pred.argmax(1) == y).type(torch.float).sum().item()train_loss /= num_batchestrain_acc /= sizereturn train_acc,train_loss2. 编写测试函数

测试函数和训练函数大致相同,但是由于不进行梯度下降对网络权重进行更新,所以不需要传入优化器

def test(dataloader, model, loss_fn):size = len(dataloader.dataset) # 测试集的大小num_batches = len(dataloader) # 批次数目, (size/batch_size,向上取整)test_loss, test_acc = 0, 0# 当不进行训练时,停止梯度更新,节省计算内存消耗with torch.no_grad():for imgs, target in dataloader:imgs, target = imgs.to(device), target.to(device)# 计算losstarget_pred = model(imgs)loss = loss_fn(target_pred, target)test_loss += loss.item()test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()test_acc /= sizetest_loss /= num_batchesreturn test_acc, test_loss3.正式训练

import copyoptimizer = torch.optim.Adam(model.parameters(), lr=1e-4)

loss_fn = nn.CrossEntropyLoss() # 创建损失函数epochs = 10train_loss=[]

train_acc=[]

test_loss=[]

test_acc=[]

best_acc = 0for epoch in range(epochs):model.train()epoch_train_acc,epoch_train_loss = train(train_dl,model,opt,loss_fn)model.eval()epoch_test_acc,epoch_test_loss = test(test_dl,model,loss_fn)if epoch_test_acc > best_acc:best_acc = epoch_test_accbest_model = copy.deepcopy(model)train_acc.append(epoch_train_acc)train_loss.append(epoch_train_loss)test_acc.append(epoch_test_acc)test_loss.append(epoch_test_loss)lr = opt.state_dict()['param_groups'][0]['lr']template = ('Epoch:{:2d}, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%, Test_loss:{:.3f}, Lr:{:.2E}')print(template.format(epoch+1, epoch_train_acc*100, epoch_train_loss,epoch_test_acc*100, epoch_test_loss, lr))# 保存最佳模型到文件中

PATH = '/best_model.pth' # 保存的参数文件名

torch.save(best_model.state_dict(), PATH)print('Done')

得到如下输出:

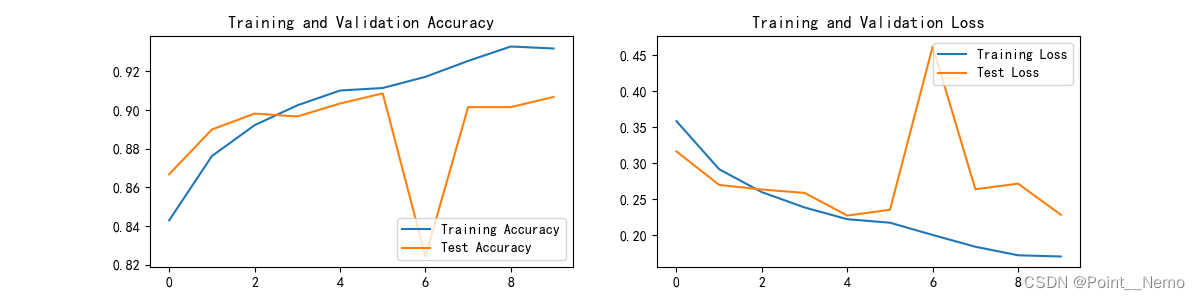

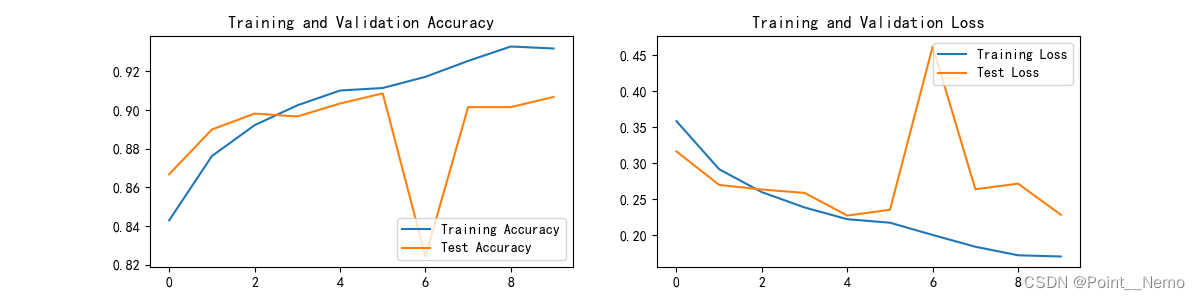

Epoch: 1, Train_acc:84.3%, Train_loss:0.359, Test_acc:86.7%, Test_loss:0.317, Lr:1.00E-04

Epoch: 2, Train_acc:87.6%, Train_loss:0.292, Test_acc:89.0%, Test_loss:0.270, Lr:1.00E-04

Epoch: 3, Train_acc:89.2%, Train_loss:0.260, Test_acc:89.8%, Test_loss:0.264, Lr:1.00E-04

Epoch: 4, Train_acc:90.2%, Train_loss:0.239, Test_acc:89.7%, Test_loss:0.259, Lr:1.00E-04

Epoch: 5, Train_acc:91.0%, Train_loss:0.222, Test_acc:90.3%, Test_loss:0.228, Lr:1.00E-04

Epoch: 6, Train_acc:91.1%, Train_loss:0.218, Test_acc:90.9%, Test_loss:0.236, Lr:1.00E-04

Epoch: 7, Train_acc:91.7%, Train_loss:0.201, Test_acc:82.4%, Test_loss:0.462, Lr:1.00E-04

Epoch: 8, Train_acc:92.5%, Train_loss:0.184, Test_acc:90.2%, Test_loss:0.264, Lr:1.00E-04

Epoch: 9, Train_acc:93.3%, Train_loss:0.172, Test_acc:90.2%, Test_loss:0.272, Lr:1.00E-04

Epoch:10, Train_acc:93.2%, Train_loss:0.171, Test_acc:90.7%, Test_loss:0.229, Lr:1.00E-04

DoneProcess finished with exit code 0

四、 结果可视化

1. Loss&Accuracy

import matplotlib.pyplot as plt

#隐藏警告

import warnings

warnings.filterwarnings("ignore") #忽略警告信息

plt.rcParams['font.sans-serif'] = ['SimHei'] # 用来正常显示中文标签

plt.rcParams['axes.unicode_minus'] = False # 用来正常显示负号

plt.rcParams['figure.dpi'] = 100 #分辨率epochs_range = range(epochs)plt.figure(figsize=(12, 3))

plt.subplot(1, 2, 1)plt.plot(epochs_range, train_acc, label='Training Accuracy')

plt.plot(epochs_range, test_acc, label='Test Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')plt.subplot(1, 2, 2)

plt.plot(epochs_range, train_loss, label='Training Loss')

plt.plot(epochs_range, test_loss, label='Test Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.show()

得到的可视化结果:

五、个人理解

本文为实战帖,具体代码细节及网络理解在之前的文章中已有涉及,这里不再做细节阐述。

相关文章:

深度学习Day-20:DenseNet算法实战 乳腺癌识别

🍨 本文为:[🔗365天深度学习训练营] 中的学习记录博客 🍖 原作者:[K同学啊 | 接辅导、项目定制] 一、 基础配置 语言环境:Python3.8编译器选择:Pycharm深度学习环境: torch1.12.1c…...

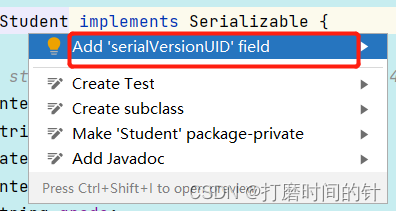

给类设置serialVersionUID

第一步打开idea设置窗口(setting窗口默认快捷键CtrlAltS) 第二步搜索找到Inspections 第三步勾选主窗口中Java->Serializations issues->下的Serializable class without serialVersionUID’项 ,并点击“OK”确认 第四步鼠标选中要加…...

Android之实现两段颜色样式不同的文字拼接进行富文本方式的显示

一、使用SpannableString进行拼接 1、显示例子 前面文字显示红色,后面显示白色,显示在一个TextView中,可以自动换行 发送人姓名: 发送信息内容2、TextView <TextViewandroid:id"id/tv_msg"android:layout_width"wrap_c…...

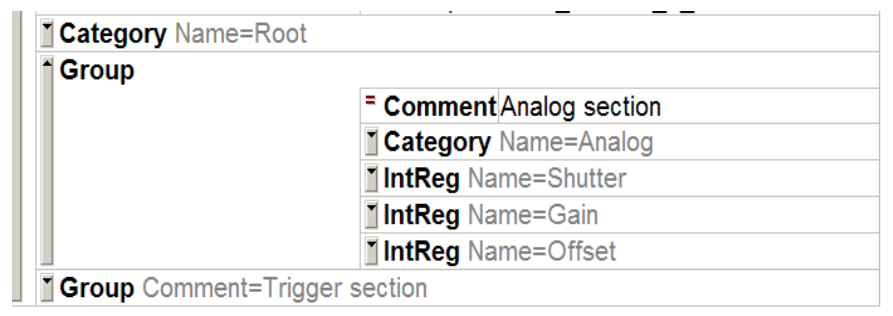

GenICam标准(五)

系列文章目录 GenICam标准(一) GenICam标准(二) GenICam标准(三) GenICam标准(四) GenICam标准(五) GenICam标准(六) 文章目录 系列文…...

《人生海海》读后感

麦家是写谍战的高手,《暗算》《风声》等等作品被搬上荧屏后,掀起了一阵一阵的收视狂潮。麦家声名远扬我自然是知道的,然而我对谍战似乎总是提不起兴趣,因此从来没有拜读过他的作品。这几天无聊时在网上找找看看,发现了…...

SpringBoot自定义Starter及原理分析

目录 1.前言2.环境3.准备Starter项目4.准备AutoConfigure项目4.1 准备类HelloProperties4.2 准备类HelloService4.3 准备类HelloServiceAutoConfiguration4.4 创建spring.factories文件并引用配置类HelloServiceAutoConfiguration4.5 安装到maven仓库 5.在其他项目中引入自定义…...

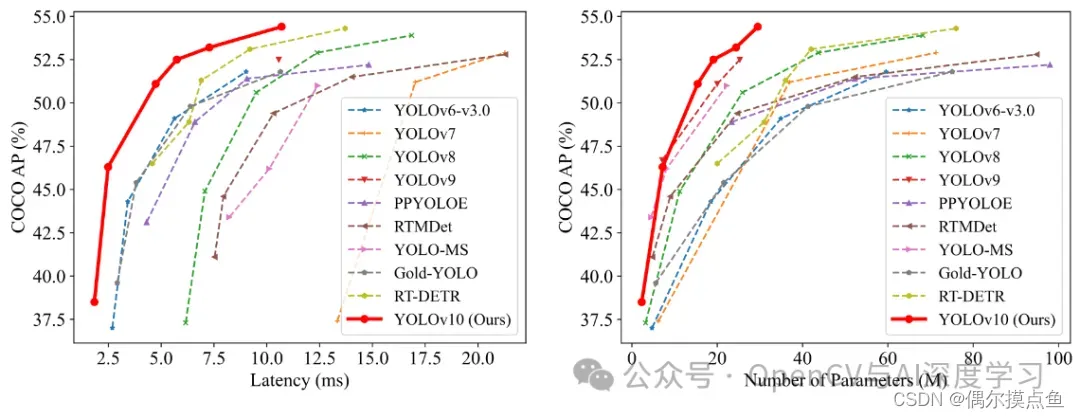

YOLOv10网络架构及特点

YOLOv10简介 YOLOv10是清华大学的研究人员在Ultralytics Python包的基础上,引入了一种新的实时目标检测方法,解决了YOLO 以前版本在后处理和模型架构方面的不足。通过消除非最大抑制(NMS)和优化各种模型组件,YOLOv…...

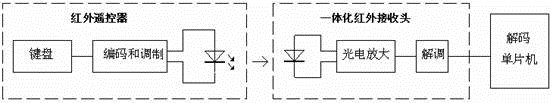

基于单片机的多功能智能小车设计

第一章 绪论 1.1 课题背景和意义 随着计算机、微电子、信息技术的快速发展,智能化技术的发展速度越来越快,智能化与人们生活的联系也越来越紧密,智能化是未来社会发展的必然趋势。智能小车实际上就是一个可以自由移动的智能机器人,比较适合在人们无法工作的地方工作,也可…...

Python时间序列分析库

Sktime Welcome to sktime — sktime documentation 用于ML/AI和时间序列的统一API,用于模型构建、拟合、应用和验证支持各种学习任务,包括预测、时间序列分类、回归、聚类。复合模型构建,包括具有转换、集成、调整和精简功能的管道scikit学习式界面约定的交互式用户体验Pro…...

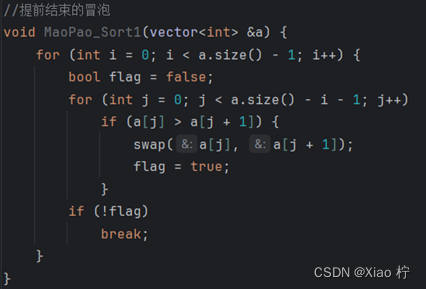

算法设计与分析 实验1 算法性能分析

目录 一、实验目的 二、实验概述 三、实验内容 四、问题描述 1.实验基本要求 2.实验亮点 3.实验说明 五、算法原理和实现 问题1-4算法 1. 选择排序 算法实验原理 核心伪代码 算法性能分析 数据测试 选择排序算法优化 2. 冒泡排序 算法实验原理 核心伪代码 算…...

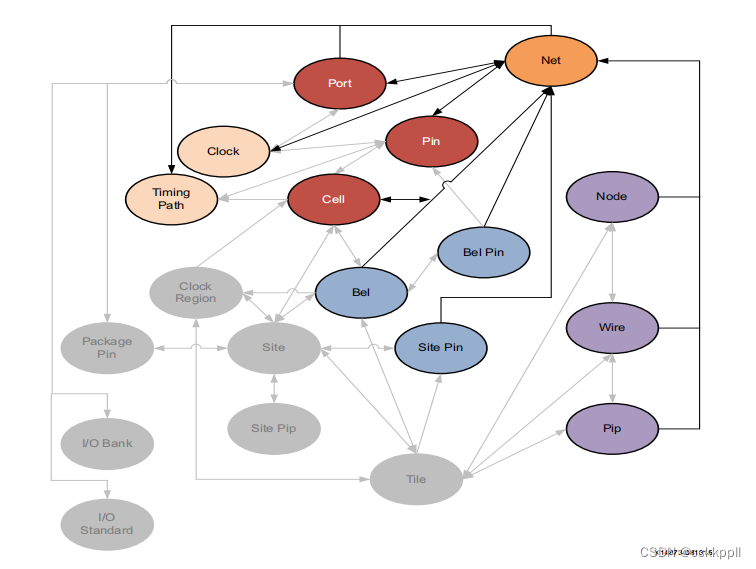

FPGA NET

描述 网络是一组相互连接的引脚、端口和导线。每条电线都有一个网名 识别它。两条或多条导线可以具有相同的网络名称。所有电线共享一个公用网络 名称是单个NET的一部分,并且连接到这些导线的所有引脚或端口都是电气的 有联系的。 当net对象在 将RTL源文件细化或编译…...

把服务器上的镜像传到到公司内部私有harbor上,提高下载速度

一、登录 docker login https://harbor.cqxyy.net/ -u 账号 -p 密码 二、转移镜像 minio 2024.05版 # 指定tag docker tag minio/minio:RELEASE.2024-05-10T01-41-38Z harbor.cqxyy.net/customer-software/minio:RELEASE.2024-05-10T01-41-38Z# 推送镜像 docker push harbo…...

1055 集体照(测试点3, 4, 5)

solution 从后排开始输出,可以先把所有的学生进行排序(身高降序,名字升序),再按照每排的人数找到中间位置依次左右各一个进行排列测试点3, 4, 5:k是小于10的正整数,则每…...

AI 定位!GeoSpyAI上传一张图片分析具体位置 不可思议! ! !

🏡作者主页:点击! 🤖常见AI大模型部署:点击! 🤖Ollama部署LLM专栏:点击! ⏰️创作时间:2024年6月16日12点23分 🀄️文章质量:94分…...

中国最著名的起名大师颜廷利:父亲节与之相关的真实含义

今天是2024年6月16日,这一天被广泛庆祝为“父亲节”。在汉语中,“父亲”这一角色常以“爸爸”、“大大”(da-da)或“爹爹”等词汇表达。有趣的是,“爸爸”在汉语拼音中表示为“ba-ba”,而当我们稍微改变“b…...

【每日刷题】Day66

【每日刷题】Day66 🥕个人主页:开敲🍉 🔥所属专栏:每日刷题🍍 🌼文章目录🌼 1. 小乐乐改数字_牛客题霸_牛客网 (nowcoder.com) 2. 牛牛的递增之旅_牛客题霸_牛客网 (nowcoder.com)…...

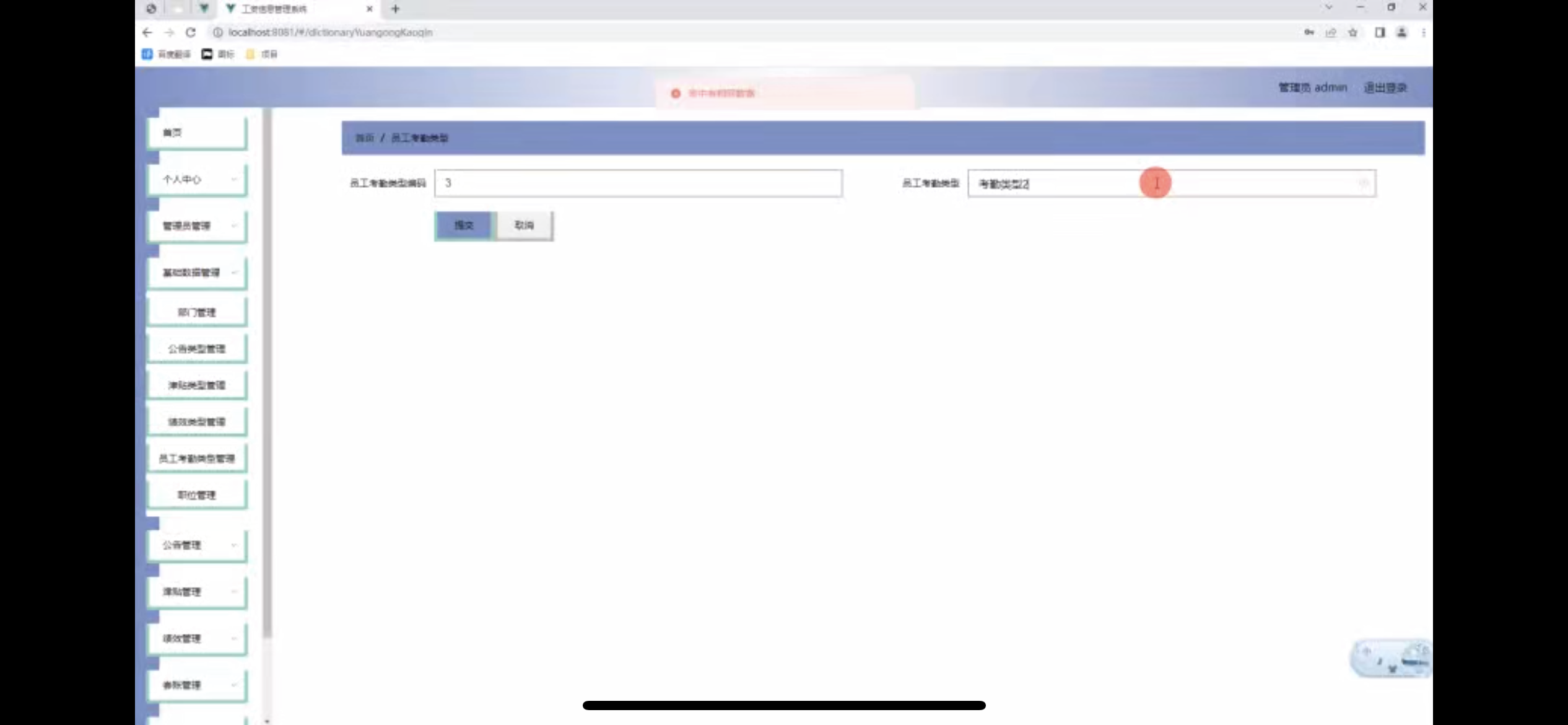

工资信息管理系统的设计

管理员账户功能包括:系统首页,个人中心,基础数据管理,公告管理,津贴管理,管理员管理,绩效管理 用户账户功能包括:系统首页,个人中心,公告管理,津…...

Docker 镜像****后,如何给Ubuntu手动安装 docker 服务

Docker 镜像****后,如何给Ubuntu手动安装 docker 服务 下载地址下载自己需要的安装包使用下面的命令进行安装启动服务 最近由于某些未知原因,国内的docker镜像全部被停。刚好需要重新安装自己的笔记本为双系统,在原来的Windows下,…...

数组模拟单链表和双链表

目录 单链表 初始化 头插 删除 插入 双链表 初始化 插入右和插入左 删除 单链表 单链表主要有三个接口:头插,删除,插入(由于单链表的性质,插入接口是在结点后面插入) 初始化 int e[N], ne[N]; …...

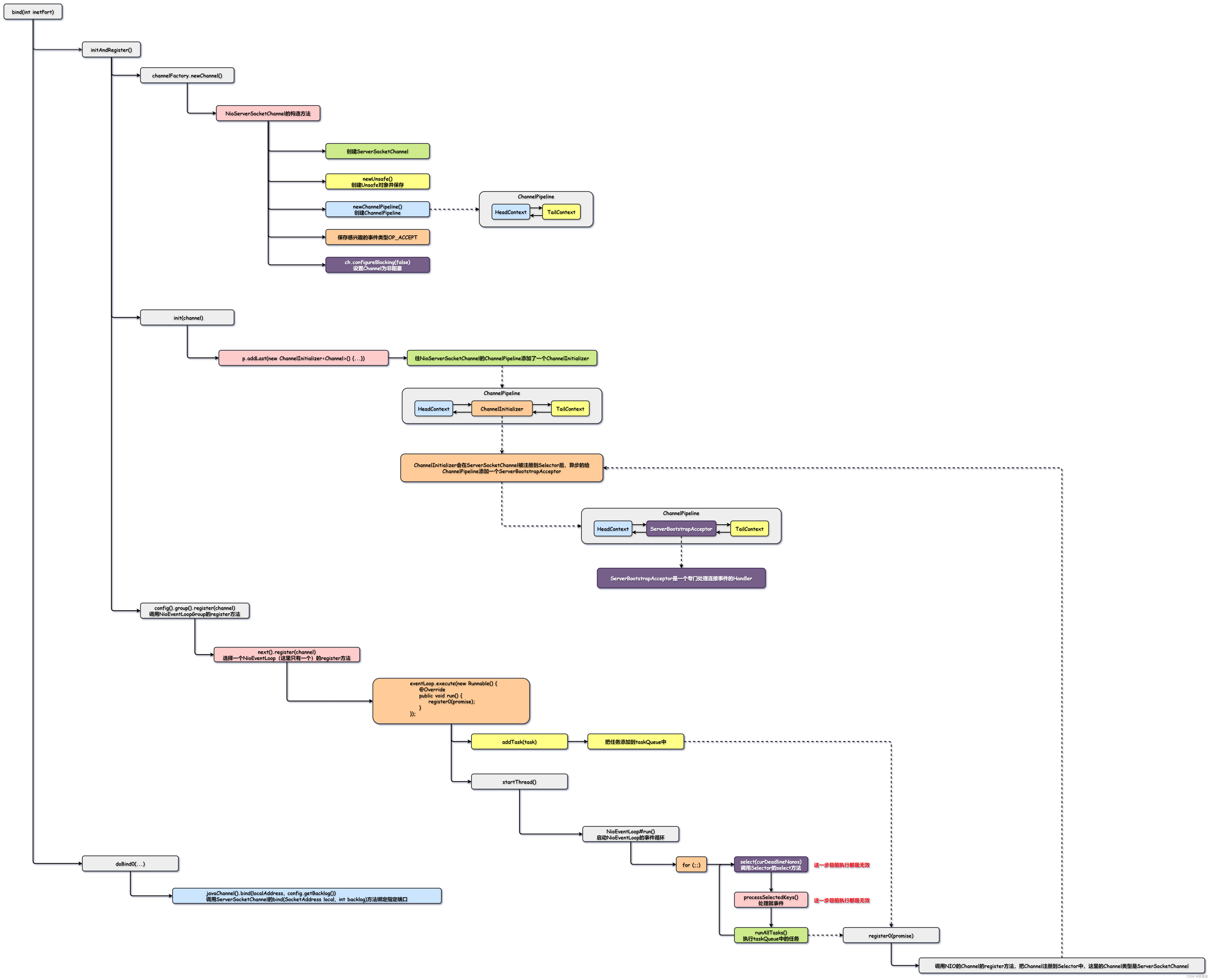

【图解IO与Netty系列】Netty源码解析——服务端启动

Netty源码解析——服务端启动 Netty案例复习Netty原理复习Netty服务端启动源码解析bind(int)initAndRegister()channelFactory.newChannel()init(channel)config().group().register(channel)startThread()run()register0(ChannelPromise promise)doBind0(...) 今天我们一起来学…...

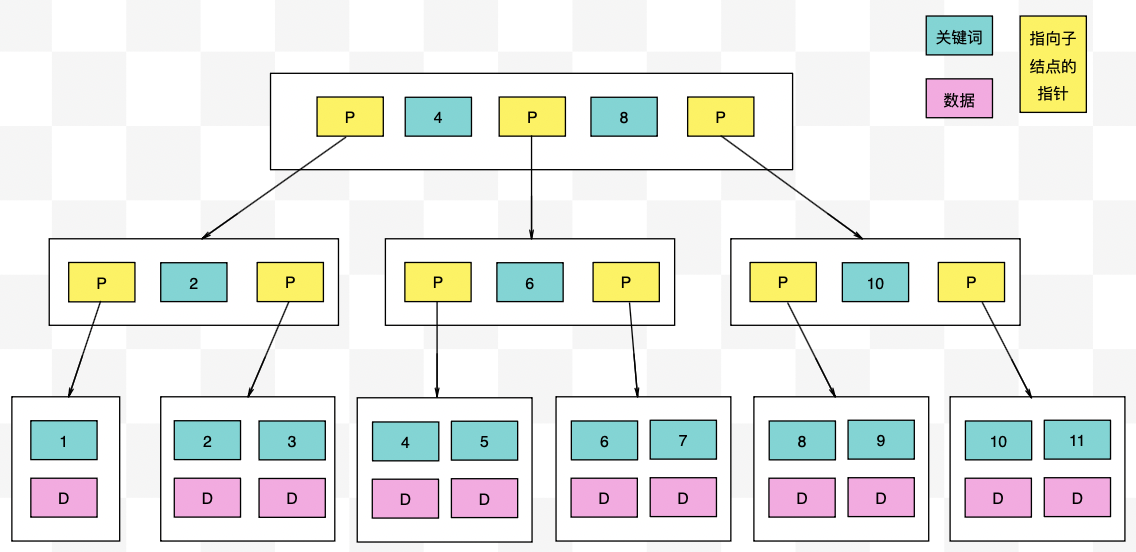

【力扣数据库知识手册笔记】索引

索引 索引的优缺点 优点1. 通过创建唯一性索引,可以保证数据库表中每一行数据的唯一性。2. 可以加快数据的检索速度(创建索引的主要原因)。3. 可以加速表和表之间的连接,实现数据的参考完整性。4. 可以在查询过程中,…...

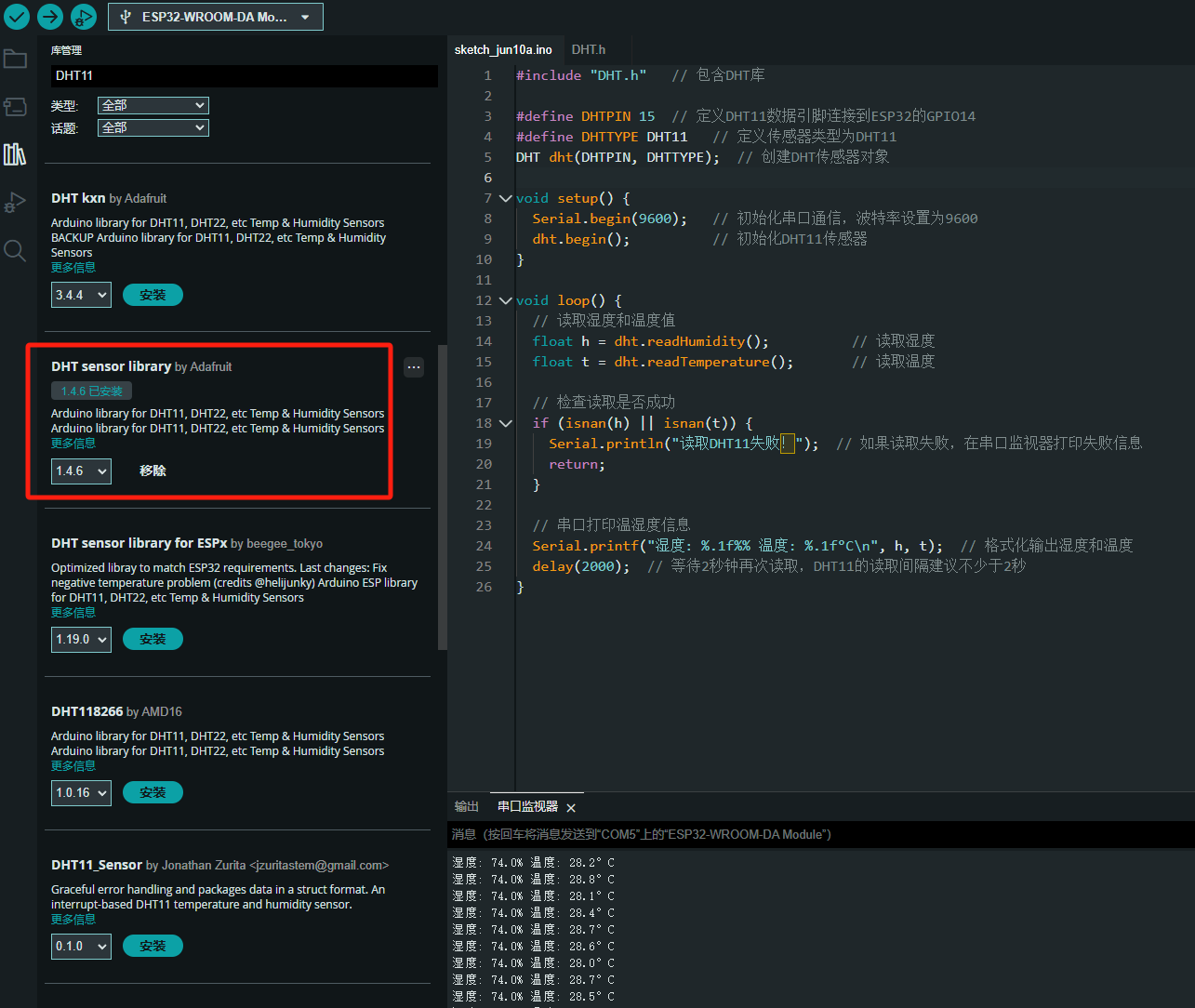

ESP32读取DHT11温湿度数据

芯片:ESP32 环境:Arduino 一、安装DHT11传感器库 红框的库,别安装错了 二、代码 注意,DATA口要连接在D15上 #include "DHT.h" // 包含DHT库#define DHTPIN 15 // 定义DHT11数据引脚连接到ESP32的GPIO15 #define D…...

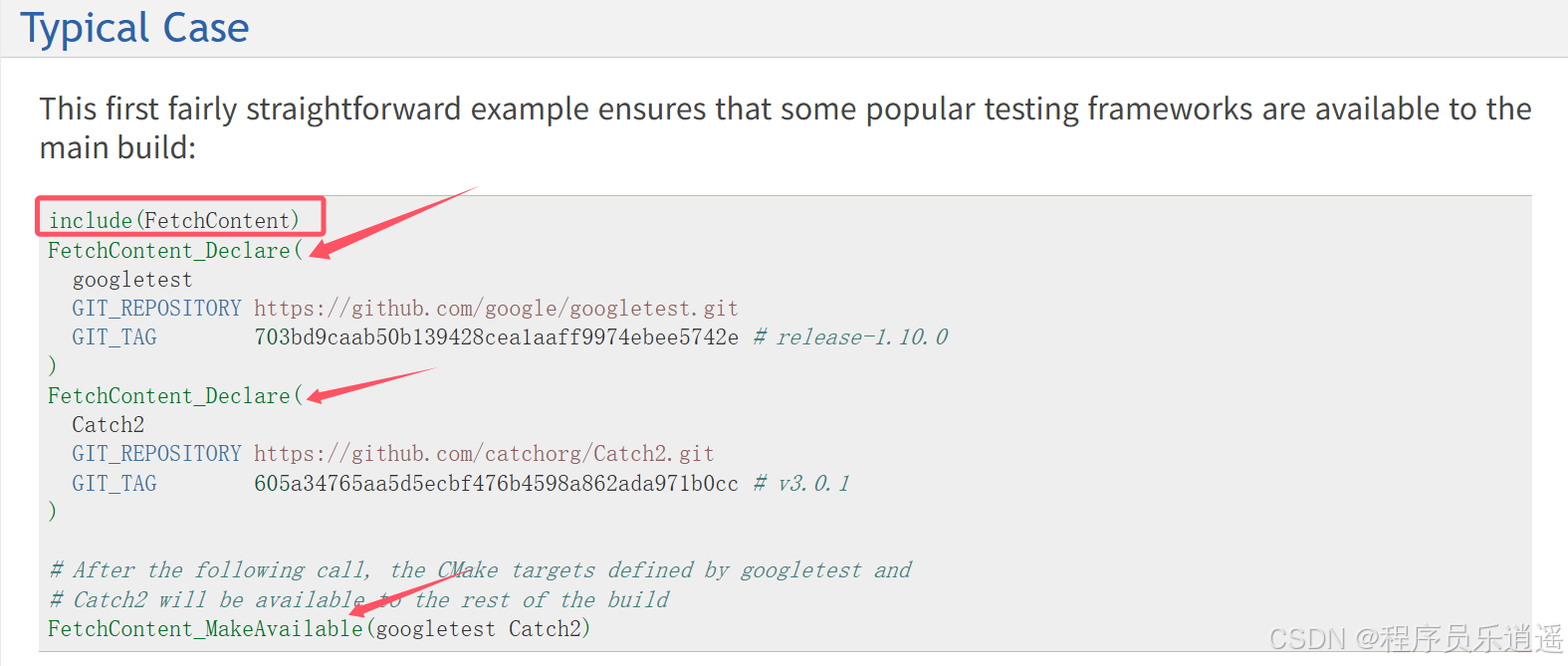

CMake 从 GitHub 下载第三方库并使用

有时我们希望直接使用 GitHub 上的开源库,而不想手动下载、编译和安装。 可以利用 CMake 提供的 FetchContent 模块来实现自动下载、构建和链接第三方库。 FetchContent 命令官方文档✅ 示例代码 我们将以 fmt 这个流行的格式化库为例,演示如何: 使用 FetchContent 从 GitH…...

【 java 虚拟机知识 第一篇 】

目录 1.内存模型 1.1.JVM内存模型的介绍 1.2.堆和栈的区别 1.3.栈的存储细节 1.4.堆的部分 1.5.程序计数器的作用 1.6.方法区的内容 1.7.字符串池 1.8.引用类型 1.9.内存泄漏与内存溢出 1.10.会出现内存溢出的结构 1.内存模型 1.1.JVM内存模型的介绍 内存模型主要分…...

Caliper 配置文件解析:fisco-bcos.json

config.yaml 文件 config.yaml 是 Caliper 的主配置文件,通常包含以下内容: test:name: fisco-bcos-test # 测试名称description: Performance test of FISCO-BCOS # 测试描述workers:type: local # 工作进程类型number: 5 # 工作进程数量monitor:type: - docker- pro…...

根目录0xa0属性对应的Ntfs!_SCB中的FileObject是什么时候被建立的----NTFS源代码分析--重要

根目录0xa0属性对应的Ntfs!_SCB中的FileObject是什么时候被建立的 第一部分: 0: kd> g Breakpoint 9 hit Ntfs!ReadIndexBuffer: f7173886 55 push ebp 0: kd> kc # 00 Ntfs!ReadIndexBuffer 01 Ntfs!FindFirstIndexEntry 02 Ntfs!NtfsUpda…...

c# 局部函数 定义、功能与示例

C# 局部函数:定义、功能与示例 1. 定义与功能 局部函数(Local Function)是嵌套在另一个方法内部的私有方法,仅在包含它的方法内可见。 • 作用:封装仅用于当前方法的逻辑,避免污染类作用域,提升…...

算术操作符与类型转换:从基础到精通

目录 前言:从基础到实践——探索运算符与类型转换的奥秘 算术操作符超级详解 算术操作符:、-、*、/、% 赋值操作符:和复合赋值 单⽬操作符:、--、、- 前言:从基础到实践——探索运算符与类型转换的奥秘 在先前的文…...

大数据治理的常见方式

大数据治理的常见方式 大数据治理是确保数据质量、安全性和可用性的系统性方法,以下是几种常见的治理方式: 1. 数据质量管理 核心方法: 数据校验:建立数据校验规则(格式、范围、一致性等)数据清洗&…...

2.2.2 ASPICE的需求分析

ASPICE的需求分析是汽车软件开发过程中至关重要的一环,它涉及到对需求进行详细分析、验证和确认,以确保软件产品能够满足客户和用户的需求。在ASPICE中,需求分析的关键步骤包括: 需求细化:将从需求收集阶段获得的高层需…...