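DirectShow Filter Development: A Video File Reader Filter (Rewritten)

Download this filter DLL

This filter reads a video file and outputs a video stream and an audio stream. The stream types are determined by the file. File formats known to be readable: AVI, ASF, MOV, MP4, MPG, WMV.

Filter information

Filter name: 读视频文件 ("Read Video File")

Filter GUID: {29001AD7-37A5-45E0-A750-E76453B36E33}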
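The GUID string above is the same value that DLL.h later passes to `DEFINE_GUID` as a list of numeric fields. As a minimal, portable sketch of that mapping (not part of the filter), the following parses the registry-style string into its four parts. `GuidFields` and `ParseGuid` are hypothetical names introduced here for illustration; they are not Windows SDK APIs.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <string>

// Hypothetical stand-in for the layout of the Windows GUID structure.
struct GuidFields {
    uint32_t data1;
    uint16_t data2;
    uint16_t data3;
    uint8_t  data4[8];
};

// Parses "{XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXXX}" into its fields.
// Returns false when the text does not follow that layout.
bool ParseGuid(const std::string& text, GuidFields& out) {
    unsigned int d1 = 0, d2 = 0, d3 = 0;
    unsigned int b[8] = {0};
    int consumed = 0;
    int n = std::sscanf(text.c_str(),
        "{%8x-%4x-%4x-%2x%2x-%2x%2x%2x%2x%2x%2x}%n",
        &d1, &d2, &d3,
        &b[0], &b[1], &b[2], &b[3], &b[4], &b[5], &b[6], &b[7],
        &consumed);
    // 11 converted fields, and the closing brace must end the string.
    if (n != 11 || consumed != static_cast<int>(text.size())) return false;
    out.data1 = d1;
    out.data2 = static_cast<uint16_t>(d2);
    out.data3 = static_cast<uint16_t>(d3);
    for (int i = 0; i < 8; ++i) out.data4[i] = static_cast<uint8_t>(b[i]);
    return true;
}
```

Parsing the filter's GUID with this helper yields 0x29001ad7, 0x37a5, 0x45e0 followed by the bytes a7 50 e7 64 53 b3 6e 33, which is the argument order used in the `DEFINE_GUID(CLSID_Reader, ...)` line of DLL.h.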

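DLL registration function: DllRegisterServer

DLL unregistration function: DllUnregisterServer

The filter has two output pins.

Output pin 1 ID: Video

Output pin 1 media type:

Major type: MEDIATYPE_Video

Subtype: MEDIASUBTYPE_NULL

Output pin 2 ID: Audio

Output pin 2 media type:

Major type: MEDIATYPE_Audio

Subtype: MEDIASUBTYPE_NULL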
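Both pins register MEDIASUBTYPE_NULL, so the registration names no specific subtype and the concrete type comes from the file. The sketch below is a simplified model of wildcard-style matching in the spirit of the base-class `CMediaType::MatchesPartial`, which the pins' `CheckMediaType` methods call in the listing further down. It assumes that an empty ID plays the role of GUID_NULL; `TypeId`, `SimpleMediaType`, and `MatchesPartialSketch` are hypothetical names, and the real method also compares format blocks, which this sketch omits.

```cpp
#include <cassert>
#include <string>

// Hypothetical stand-in for a GUID; the empty string plays the role of GUID_NULL.
using TypeId = std::string;
const TypeId kNullType = "";

// Simplified media type: major type and subtype only (no format block).
struct SimpleMediaType {
    TypeId major;
    TypeId sub;
};

// Returns true when every non-null field of `partial` equals the
// corresponding field of `full`; null fields act as wildcards.
bool MatchesPartialSketch(const SimpleMediaType& full, const SimpleMediaType& partial) {
    if (partial.major != kNullType && full.major != partial.major) return false;
    if (partial.sub != kNullType && full.sub != partial.sub) return false;
    return true;
}
```

Under this model, a partial type of Video with a null subtype accepts any video subtype and rejects any audio type, while a partial type with a concrete subtype accepts only that subtype.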

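Filter development notes

The filter implements the IFileSourceFilter interface, which is used to specify the video file to read. The interface's Load method determines the media types of the file's video and audio streams, assigns them to the video and audio output pins, and obtains the file's duration.

When the filter runs, it creates a media source thread. That thread creates the media source and then retrieves media source events. After the media source is started (by calling its Start method), it raises a "new stream" event (MENewStream) whose value is the video or audio stream interface. Once a stream interface has been obtained, a video or audio worker thread (stream thread) is created, and that thread retrieves stream events. After a sample is requested, a "media sample" event (MEMediaSample) is raised whose value is a sample interface. This is a Media Foundation sample; it is converted to a pin sample, the pin delivers it downstream, and the next sample is requested.

When the filter stops, it calls the media source's Stop method, which raises a "stream stopped" event (MEStreamStopped). On receiving this event, the video and audio worker threads exit, but the media source thread keeps running. When the filter runs again, the media source is started again and this time raises an "updated stream" event (MEUpdatedStream) whose value is the updated stream interface. Once the stream interface has been obtained, the video and audio worker threads are created again.

When the filter changes the playback position (a seek, performed by calling the media source's Start method with the new start position in its parameters), the media source also raises an "updated stream" event. In this case only the stream interface is updated; no new worker threads are created, because the threads already exist. The media source automatically locates a key frame and starts from it.

The media source thread exits when the filter is destroyed or when a new file to read is specified. In both cases this is done by signaling the "exit media source thread" event.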
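The rule for when a worker thread is created can be modeled without any Windows APIs. The following is a minimal sketch of that decision only, assuming one worker per stream; `SourceEvent`, `WorkerModel`, and `SimulateRunSeekStopRun` are hypothetical names for illustration and do not appear in the filter's code.

```cpp
#include <cassert>

// The two media source events that hand out a stream interface.
enum class SourceEvent { NewStream, UpdatedStream };

// Models the lifetime of one worker thread (video or audio).
struct WorkerModel {
    bool running = false;  // true while the worker thread is alive
    int  created = 0;      // how many times a worker has been created

    // Called when the media source reports a new or updated stream.
    void OnSourceEvent(SourceEvent ev) {
        // A new stream always gets a worker; an updated stream gets one
        // only if the previous worker has already exited (restart after
        // a stop). During a seek the worker is still running.
        bool create = (ev == SourceEvent::NewStream) || !running;
        if (create) {
            running = true;
            ++created;
        }
    }

    // Called when the stream raises "stream stopped": the worker exits.
    void OnStreamStopped() { running = false; }
};

// Walks through run, seek, stop, run and returns the number of workers created.
int SimulateRunSeekStopRun() {
    WorkerModel video;
    video.OnSourceEvent(SourceEvent::NewStream);      // first run
    video.OnSourceEvent(SourceEvent::UpdatedStream);  // seek while running
    video.OnStreamStopped();                          // filter stopped
    video.OnSourceEvent(SourceEvent::UpdatedStream);  // filter run again
    return video.created;
}
```

In this model a seek never adds a worker, while a stop followed by a run does, so the run, seek, stop, run sequence creates two workers in total.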

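The IMediaSeeking interface is used to adjust the current playback position (seeking). It can be implemented on the filter or on any of its pins. If it is implemented on a pin, the audio pin is the best choice.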
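One part of IMediaSeeking is reporting which seeking capabilities are supported. The audio pin in the listing below does this with a bit test of the form `(~supported & requested)`. Here is a minimal, portable sketch of that test. The `SeekCaps` flag values are illustrative stand-ins chosen for this example rather than values copied from the SDK headers, and `SupportsAll` is a hypothetical helper name.

```cpp
#include <cassert>
#include <cstdint>

// Illustrative capability flags (stand-ins for the AM_SEEKING_Can* flags).
enum SeekCaps : uint32_t {
    CanSeekAbsolute  = 0x01,
    CanSeekForwards  = 0x02,
    CanSeekBackwards = 0x04,
    CanGetCurrentPos = 0x08,
    CanGetStopPos    = 0x10,
    CanGetDuration   = 0x20,
    CanPlayBackwards = 0x40
};

// The same set of capabilities that the audio pin declares in DLL.h.
const uint32_t kPinCaps = CanSeekForwards | CanSeekBackwards |
                          CanSeekAbsolute | CanGetStopPos | CanGetDuration;

// Returns true when every requested bit is present in `supported`.
// Any requested bit missing from `supported` survives the mask and
// makes the result non-zero.
bool SupportsAll(uint32_t supported, uint32_t requested) {
    return (~supported & requested) == 0;
}
```

With these flags, a request for absolute seeking plus duration is fully supported, while a request that includes backward playback or the current position is not.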

Complete code of the filter DLL

DLL.h

#ifndef DLL_FILE
#define DLL_FILE

#include "strmbase10.h" // filter base class definitions

#if _DEBUG
#pragma comment(lib, "strmbasd10.lib") // filter base class implementation, debug build
#else
#pragma comment(lib, "strmbase10.lib") // filter base class implementation, release build
#endif

// {29001AD7-37A5-45E0-A750-E76453B36E33}
DEFINE_GUID(CLSID_Reader, // filter GUID
    0x29001ad7, 0x37a5, 0x45e0, 0xa7, 0x50, 0xe7, 0x64, 0x53, 0xb3, 0x6e, 0x33);

#include "mfapi.h"
#include "mfidl.h"
#include "mferror.h"
#include "evr.h"
#pragma comment(lib, "mfplat.lib")
#pragma comment(lib, "mf.lib")

class CPin2;
class CFilter;

class CPin1 : public CBaseOutputPin // video output pin
{
    friend class CPin2;
    friend class CFilter;
public:
    CPin1(CFilter *pFilter, HRESULT *phr, LPCWSTR pPinName);
    ~CPin1();
    DECLARE_IUNKNOWN
    STDMETHODIMP NonDelegatingQueryInterface(REFIID riid, void **ppvoid);
    BOOL HasSet = FALSE; // TRUE once GetMediaType has supplied the media type
    HRESULT CheckMediaType(const CMediaType *pmt);
    HRESULT GetMediaType(int iPosition, CMediaType *pMediaType);
    HRESULT SetMediaType(const CMediaType *pmt);
    HRESULT BreakConnect();
    HRESULT DecideBufferSize(IMemAllocator *pMemAllocator, ALLOCATOR_PROPERTIES * ppropInputRequest);
    CFilter *pCFilter = NULL; // owning filter
    STDMETHODIMP Notify(IBaseFilter *pSelf, Quality q)
    {
        return E_FAIL;
    }
};

class CPin2 : public CBaseOutputPin, public IMediaSeeking // audio output pin
{
    friend class CPin1;
    friend class CFilter;
public:
    CPin2(CFilter *pFilter, HRESULT *phr, LPCWSTR pPinName);
    ~CPin2();
    DECLARE_IUNKNOWN
    STDMETHODIMP NonDelegatingQueryInterface(REFIID riid, void **ppvoid);
    BOOL HasSet = FALSE; // TRUE once GetMediaType has supplied the media type
    HRESULT CheckMediaType(const CMediaType *pmt);
    HRESULT GetMediaType(int iPosition, CMediaType *pMediaType);
    HRESULT SetMediaType(const CMediaType *pmt);
    HRESULT BreakConnect();
    HRESULT DecideBufferSize(IMemAllocator *pMemAllocator, ALLOCATOR_PROPERTIES * ppropInputRequest);
    CFilter *pCFilter = NULL; // owning filter
    DWORD m_dwSeekingCaps = AM_SEEKING_CanSeekForwards | AM_SEEKING_CanSeekBackwards | AM_SEEKING_CanSeekAbsolute | AM_SEEKING_CanGetStopPos | AM_SEEKING_CanGetDuration;
    HRESULT STDMETHODCALLTYPE CheckCapabilities(DWORD *pCapabilities);
    HRESULT STDMETHODCALLTYPE ConvertTimeFormat(LONGLONG *pTarget, const GUID *pTargetFormat, LONGLONG Source, const GUID *pSourceFormat);
    HRESULT STDMETHODCALLTYPE GetAvailable(LONGLONG *pEarliest, LONGLONG *pLatest);
    HRESULT STDMETHODCALLTYPE GetCapabilities(DWORD *pCapabilities);
    HRESULT STDMETHODCALLTYPE GetCurrentPosition(LONGLONG *pCurrent);
    HRESULT STDMETHODCALLTYPE GetDuration(LONGLONG *pDuration);
    HRESULT STDMETHODCALLTYPE GetPositions(LONGLONG *pCurrent, LONGLONG *pStop);
    HRESULT STDMETHODCALLTYPE GetPreroll(LONGLONG *pllPreroll);
    HRESULT STDMETHODCALLTYPE GetRate(double *pdRate);
    HRESULT STDMETHODCALLTYPE GetStopPosition(LONGLONG *pStop);
    HRESULT STDMETHODCALLTYPE GetTimeFormat(GUID *pFormat);
    HRESULT STDMETHODCALLTYPE IsFormatSupported(const GUID *pFormat);
    HRESULT STDMETHODCALLTYPE IsUsingTimeFormat(const GUID *pFormat);
    HRESULT STDMETHODCALLTYPE QueryPreferredFormat(GUID *pFormat);
    HRESULT STDMETHODCALLTYPE SetPositions(LONGLONG *pCurrent, DWORD dwCurrentFlags, LONGLONG *pStop, DWORD dwStopFlags);
    HRESULT STDMETHODCALLTYPE SetRate(double dRate);
    HRESULT STDMETHODCALLTYPE SetTimeFormat(const GUID *pFormat);
    STDMETHODIMP Notify(IBaseFilter *pSelf, Quality q)
    {
        return E_FAIL;
    }
};

class CFilter : public CCritSec, public CBaseFilter, public IFileSourceFilter
{
    friend class CPin1;
    friend class CPin2;
public:
    CFilter(TCHAR* pName, LPUNKNOWN pUnk, HRESULT* hr);
    ~CFilter();
    CBasePin* GetPin(int n);
    int GetPinCount();
    static CUnknown * WINAPI CreateInstance(LPUNKNOWN pUnk, HRESULT *phr);
    DECLARE_IUNKNOWN
    STDMETHODIMP NonDelegatingQueryInterface(REFIID iid, void ** ppv);
    STDMETHODIMP Load(LPCOLESTR lpwszFileName, const AM_MEDIA_TYPE *pmt);
    STDMETHODIMP GetCurFile(LPOLESTR * ppszFileName, AM_MEDIA_TYPE *pmt);
    HRESULT GetMediaType(); // get the media types of the video and audio streams
    REFERENCE_TIME mStart = 0; // start position of the current segment, in 100 ns units
    HANDLE hSourceThread = NULL; // media source thread handle
    HANDLE hVideoThread = NULL; // video stream thread handle
    HANDLE hAudioThread = NULL; // audio stream thread handle
    HANDLE hExit = NULL; // "exit media source thread" event handle
    HANDLE hInit = NULL; // "media source created" event handle
    STDMETHODIMP Pause();
    STDMETHODIMP Stop();
    CPin1* pCPin1 = NULL; // video pin
    CPin2* pCPin2 = NULL; // audio pin
    WCHAR* m_pFileName = NULL; // path of the video file to read
    LONGLONG DUR = 0; // duration, in 100 ns units
    LONGLONG CUR = 0; // current position, in 100 ns units
    IMFMediaType* pVideoType = NULL; // video media type
    IMFMediaType* pAudioType = NULL; // audio media type
    IMFMediaSource *pIMFMediaSource = NULL; // media source interface
    IMFPresentationDescriptor* pISourceD = NULL; // presentation descriptor
    IMFMediaStream* pVideoStream = NULL; // video stream interface
    IMFMediaStream* pAudioStream = NULL; // audio stream interface
};

template <class T> void SafeRelease(T** ppT)
{
    if (*ppT)
    {
        (*ppT)->Release();
        *ppT = NULL;
    }
}

#endif //DLL_FILE

DLL.cpp

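#include "DLL.h"

const AMOVIESETUP_MEDIATYPE Pin1Type = // media type of pin 1
{
    &MEDIATYPE_Video, // major type
    &MEDIASUBTYPE_NULL // subtype
};

const AMOVIESETUP_MEDIATYPE Pin2Type = // media type of pin 2
{
    &MEDIATYPE_Audio, // major type
    &MEDIASUBTYPE_NULL // subtype
};

const AMOVIESETUP_PIN sudPins[] = // pin information
{
    {
        L"Video", // pin name
        FALSE, // rendered pin
        TRUE, // output pin
        FALSE, // the filter can have zero instances of this pin
        FALSE, // more than one instance of this pin can be created
        &CLSID_NULL, // class ID of the filter this pin connects to
        NULL, // name of the pin this pin connects to
        1, // number of media types the pin supports
        &Pin1Type // media type information
    },
    {
        L"Audio", // pin name
        FALSE, // rendered pin
        TRUE, // output pin
        FALSE, // the filter can have zero instances of this pin
        FALSE, // more than one instance of this pin can be created
        &CLSID_NULL, // class ID of the filter this pin connects to
        NULL, // name of the pin this pin connects to
        1, // number of media types the pin supports
        &Pin2Type // media type information
    }
};

const AMOVIESETUP_FILTER Reader = // filter registration information
{
    &CLSID_Reader, // class ID of the filter
    L"读视频文件", // filter name
    MERIT_DO_NOT_USE, // filter merit
    2, // number of pins
    sudPins // pin information
};

CFactoryTemplate g_Templates[] = // class factory template array
{
    {
        L"读视频文件", // name of the object (here, the filter)
        &CLSID_Reader, // pointer to the object's CLSID
        CFilter::CreateInstance, // pointer to the function that creates an instance of the object
        NULL, // pointer to a function called from the DLL entry point
        &Reader // pointer to the AMOVIESETUP_FILTER structure
    }
};

int g_cTemplates = 1; // size of the template array

STDAPI DllRegisterServer() // register the DLL
{
    return AMovieDllRegisterServer2(TRUE);
}

STDAPI DllUnregisterServer() // unregister the DLL
{
    return AMovieDllRegisterServer2(FALSE);
}

extern "C" BOOL WINAPI DllEntryPoint(HINSTANCE, ULONG, LPVOID);

BOOL APIENTRY DllMain(HANDLE hModule, DWORD dwReason, LPVOID lpReserved)
{
    return DllEntryPoint((HINSTANCE)(hModule), dwReason, lpReserved);
}

CFilter.cpp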

#include "DLL.h"
#include "strsafe.h"

DWORD WINAPI MediaSourceThread(LPVOID pParam);
DWORD WINAPI VideoThread(LPVOID pParam);
DWORD WINAPI AudioThread(LPVOID pParam);

CFilter::CFilter(TCHAR *pName, LPUNKNOWN pUnk, HRESULT *phr) : CBaseFilter(NAME("读视频文件"), pUnk, this, CLSID_Reader)
{
    HRESULT hr = MFStartup(MF_VERSION); // initialize Media Foundation
    if (hr != S_OK)
    {
        MessageBox(NULL, L"初始化媒体基础失败", L"读视频文件", MB_OK); // message text: "Failed to initialize Media Foundation"
        return;
    }
    pCPin1 = new CPin1(this, phr, L"Video"); // create the video output pin
    pCPin2 = new CPin2(this, phr, L"Audio"); // create the audio output pin
    hExit = CreateEvent(NULL, FALSE, FALSE, NULL); // auto-reset, initially non-signaled
    hInit = CreateEvent(NULL, FALSE, FALSE, NULL); // auto-reset, initially non-signaled
}

CFilter::~CFilter()

{
    SetEvent(hExit); // signal "exit media source thread"
    SafeRelease(&pVideoType); SafeRelease(&pAudioType); // release the media types
    if (m_pFileName) delete[] m_pFileName;
    MFShutdown(); // shut down Media Foundation
    // Thread handles stay NULL until the filter has run, so close only valid handles
    if (hSourceThread) CloseHandle(hSourceThread);
    if (hVideoThread) CloseHandle(hVideoThread);
    if (hAudioThread) CloseHandle(hAudioThread);
    if (hExit) CloseHandle(hExit);
    if (hInit) CloseHandle(hInit);
}

CBasePin *CFilter::GetPin(int n)
{
    if (n == 0) return pCPin1;
    if (n == 1) return pCPin2;
    return NULL;
}

int CFilter::GetPinCount()
{
    return 2;
}

CUnknown * WINAPI CFilter::CreateInstance(LPUNKNOWN pUnk, HRESULT *phr)
{
    return new CFilter(NAME("读视频文件"), pUnk, phr);
}

STDMETHODIMP CFilter::NonDelegatingQueryInterface(REFIID iid, void ** ppv)
{
    if (iid == IID_IFileSourceFilter)
    {
        return GetInterface(static_cast<IFileSourceFilter*>(this), ppv);
    }
    else
        return CBaseFilter::NonDelegatingQueryInterface(iid, ppv);
}

STDMETHODIMP CFilter::Load(LPCOLESTR lpwszFileName, const AM_MEDIA_TYPE *pmt)

{
    CheckPointer(lpwszFileName, E_POINTER);
    if (wcslen(lpwszFileName) > MAX_PATH || wcslen(lpwszFileName) < 4)
        return HRESULT_FROM_WIN32(ERROR_FILENAME_EXCED_RANGE); // convert the Win32 error code to an HRESULT
    size_t len = 1 + lstrlenW(lpwszFileName);
    if (m_pFileName != NULL) delete[] m_pFileName;
    m_pFileName = new WCHAR[len];
    if (m_pFileName == NULL) return E_OUTOFMEMORY;
    HRESULT hr = StringCchCopyW(m_pFileName, len, lpwszFileName);
    hr = GetMediaType(); // get the media types of the video and audio streams
    if (hr != S_OK) // getting the media types failed
    {
        delete[] m_pFileName; m_pFileName = NULL;
        return VFW_E_INVALID_FILE_FORMAT; // setting the file name failed
    }
    return S_OK;
}

STDMETHODIMP CFilter::GetCurFile(LPOLESTR * ppszFileName, AM_MEDIA_TYPE *pmt)
{
    CheckPointer(ppszFileName, E_POINTER);
    *ppszFileName = NULL;
    if (m_pFileName != NULL)
    {
        DWORD n = sizeof(WCHAR)*(1 + lstrlenW(m_pFileName));
        *ppszFileName = (LPOLESTR)CoTaskMemAlloc(n);
        if (*ppszFileName != NULL)
        {
            CopyMemory(*ppszFileName, m_pFileName, n);
            return S_OK;
        }
    }
    return S_FALSE;
}

HRESULT CFilter::GetMediaType() // get the media types of the video and audio streams

{
    DWORD dw = WaitForSingleObject(hSourceThread, 0);
    if (dw == WAIT_TIMEOUT) // the media source thread is running
    {
        SetEvent(hExit); // signal "exit media source thread"
        WaitForSingleObject(hSourceThread, INFINITE); // wait for the media source thread to exit
    }
    SafeRelease(&pVideoType); SafeRelease(&pAudioType); // release the media types
    IMFPresentationDescriptor* pSourceD = NULL; // presentation descriptor
    IMFMediaSource *pMFMediaSource = NULL; // media source interface
    IMFStreamDescriptor* pStreamD1 = NULL; // stream 1 descriptor
    IMFStreamDescriptor* pStreamD2 = NULL; // stream 2 descriptor
    IMFMediaTypeHandler* pHandle1 = NULL; // stream 1 media type handler
    IMFMediaTypeHandler* pHandle2 = NULL; // stream 2 media type handler
    IMFSourceResolver* pSourceResolver = NULL; // source resolver
    HRESULT hr = MFCreateSourceResolver(&pSourceResolver); // create the source resolver
    MF_OBJECT_TYPE ObjectType = MF_OBJECT_INVALID;
    IUnknown* pSource = NULL;
    if (SUCCEEDED(hr))
    {
        hr = pSourceResolver->CreateObjectFromURL( // create the media source from a URL
            m_pFileName, // URL of the source
            MF_RESOLUTION_MEDIASOURCE, // create a source object
            NULL, // optional property store
            &ObjectType, // receives the type of the created object
            &pSource // receives a pointer to the media source
        );
    }
    SafeRelease(&pSourceResolver); // release the source resolver
    if (SUCCEEDED(hr))
    {
        hr = pSource->QueryInterface(IID_PPV_ARGS(&pMFMediaSource)); // get the media source interface
    }
    SafeRelease(&pSource); // release the IUnknown interface
    if (SUCCEEDED(hr))
    {
        hr = pMFMediaSource->CreatePresentationDescriptor(&pSourceD); // get the presentation descriptor
    }
    if (SUCCEEDED(hr))
    {
        hr = pSourceD->GetUINT64(MF_PD_DURATION, (UINT64*)&DUR); // get the duration of the file, in 100 ns units
    }
    BOOL Selected;
    if (SUCCEEDED(hr))
    {
        hr = pSourceD->GetStreamDescriptorByIndex(0, &Selected, &pStreamD1); // get the stream 1 descriptor
    }
    if (SUCCEEDED(hr))
    {
        hr = pStreamD1->GetMediaTypeHandler(&pHandle1); // get the stream 1 media type handler
    }
    SafeRelease(&pStreamD1); // release the stream 1 descriptor
    GUID guid;
    if (SUCCEEDED(hr))
    {
        hr = pHandle1->GetMajorType(&guid); // get the major type of stream 1
    }
    if (SUCCEEDED(hr))
    {
        if (guid == MEDIATYPE_Video) // video
        {
            hr = pHandle1->GetCurrentMediaType(&pVideoType); // get the media type of the video stream
        }
        else if (guid == MEDIATYPE_Audio) // audio
        {
            hr = pHandle1->GetCurrentMediaType(&pAudioType); // get the media type of the audio stream
        }
    }
    SafeRelease(&pHandle1); // release the stream 1 media type handler
    if (SUCCEEDED(hr))
    {
        hr = pSourceD->GetStreamDescriptorByIndex(1, &Selected, &pStreamD2); // get the stream 2 descriptor
    }
    if (SUCCEEDED(hr))
    {
        hr = pStreamD2->GetMediaTypeHandler(&pHandle2); // get the stream 2 media type handler
    }
    SafeRelease(&pStreamD2); // release the stream 2 descriptor
    if (SUCCEEDED(hr))
    {
        hr = pHandle2->GetMajorType(&guid); // get the major type of stream 2
    }
    if (SUCCEEDED(hr))
    {
        if (guid == MEDIATYPE_Video) // video
        {
            hr = pHandle2->GetCurrentMediaType(&pVideoType); // get the media type of the video stream
        }
        else if (guid == MEDIATYPE_Audio) // audio
        {
            hr = pHandle2->GetCurrentMediaType(&pAudioType); // get the media type of the audio stream
        }
    }
    SafeRelease(&pHandle2); // release the stream 2 media type handler
    SafeRelease(&pSourceD); // release the presentation descriptor
    SafeRelease(&pMFMediaSource); // release the media source interface
    return hr;
}

STDMETHODIMP CFilter::Pause()

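{
    if (m_State == State_Stopped)
    {
        DWORD dw = WaitForSingleObject(hSourceThread, 0);
        if (dw == WAIT_FAILED || dw == WAIT_OBJECT_0) // the media source thread has not been created or has exited
        {
            hSourceThread = CreateThread(NULL, 0, MediaSourceThread, this, 0, NULL); // create the media source thread
            // Wait until the media source has been created, or until the thread
            // exits because creation failed (in which case hInit is never signaled)
            HANDLE hWait[2] = { hInit, hSourceThread };
            WaitForMultipleObjects(2, hWait, FALSE, INFINITE);
        }
        if (pIMFMediaSource == NULL) return E_FAIL; // the media source could not be created
        mStart = 0;
        PROPVARIANT var;
        PropVariantInit(&var);
        var.vt = VT_I8;
        var.hVal.QuadPart = mStart;
        HRESULT hr = pIMFMediaSource->Start(pISourceD, NULL, &var); // start the media source
        PropVariantClear(&var);
    }
    return CBaseFilter::Pause();
}

STDMETHODIMP CFilter::Stop()
{
    if (pIMFMediaSource != NULL) // the media source exists only after the filter has run
    {
        HRESULT hr = pIMFMediaSource->Stop();
    }
    return CBaseFilter::Stop();
}

DWORD WINAPI MediaSourceThread(LPVOID pParam) // media source thread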

{
    CFilter* pCFilter = (CFilter*)pParam;
    HRESULT hr, hr1, hr2;
    IMFMediaStream* pIMFMediaStream = NULL;
    IMFStreamDescriptor* pIMFStreamDescriptor = NULL;
    IMFMediaTypeHandler* pHandler = NULL;
    pCFilter->pVideoStream = NULL; // video stream interface
    pCFilter->pAudioStream = NULL; // audio stream interface
    IMFSourceResolver* pSourceResolver = NULL; // source resolver
    hr = MFCreateSourceResolver(&pSourceResolver); // create the source resolver
    MF_OBJECT_TYPE ObjectType = MF_OBJECT_INVALID;
    IUnknown* pSource = NULL;
    if (SUCCEEDED(hr))
    {
        hr = pSourceResolver->CreateObjectFromURL( // create the media source from a URL
            pCFilter->m_pFileName, // URL of the source
            MF_RESOLUTION_MEDIASOURCE, // create a source object
            NULL, // optional property store
            &ObjectType, // receives the type of the created object
            &pSource // receives a pointer to the media source
        );
    }
    SafeRelease(&pSourceResolver); // release the source resolver
    if (SUCCEEDED(hr))
    {
        hr = pSource->QueryInterface(IID_PPV_ARGS(&pCFilter->pIMFMediaSource)); // get the media source interface
    }
    SafeRelease(&pSource); // release the IUnknown interface
    if (SUCCEEDED(hr))
    {
        hr = pCFilter->pIMFMediaSource->CreatePresentationDescriptor(&pCFilter->pISourceD); // get the presentation descriptor
    }
    if (hr != S_OK)
    {
        SafeRelease(&pCFilter->pIMFMediaSource); // release the media source interface
        return hr;
    }
    SetEvent(pCFilter->hInit); // signal "media source created"
Agan:
    GUID guid;
    IMFMediaEvent* pSourceEvent = NULL;
    hr1 = pCFilter->pIMFMediaSource->GetEvent(MF_EVENT_FLAG_NO_WAIT, &pSourceEvent); // get a media source event without waiting
    DWORD dw = WaitForSingleObject(pCFilter->hExit, 0); // check for the "exit media source thread" signal
    if (dw == WAIT_OBJECT_0) // the "exit media source thread" signal is set
    {
        hr = pCFilter->pIMFMediaSource->Stop();
        pCFilter->pIMFMediaSource->Shutdown();
        SafeRelease(&pCFilter->pISourceD); // release the presentation descriptor
        SafeRelease(&pCFilter->pIMFMediaSource); // release the media source
        return 1; // exit the thread
    }
    if (SUCCEEDED(hr1)) // a media source event was retrieved
    {
        MediaEventType MET;
        hr2 = pSourceEvent->GetType(&MET); // get the type of the media source event
        if (SUCCEEDED(hr2))
        {
            PROPVARIANT vr;
            PropVariantInit(&vr);
            hr = pSourceEvent->GetValue(&vr); // get the event value
            switch (MET)
            {
            case MENewStream: // "new stream" event
                hr = vr.punkVal->QueryInterface(&pIMFMediaStream); // get the stream interface
                vr.punkVal->Release(); // release the IUnknown interface
                hr = pIMFMediaStream->GetStreamDescriptor(&pIMFStreamDescriptor); // get the stream descriptor
                hr = pIMFStreamDescriptor->GetMediaTypeHandler(&pHandler); // get the media type handler
                SafeRelease(&pIMFStreamDescriptor); // release the stream descriptor
                hr = pHandler->GetMajorType(&guid); // get the major type
                SafeRelease(&pHandler); // release the media type handler
                if (guid == MEDIATYPE_Video) // video stream
                {
                    pCFilter->pVideoStream = pIMFMediaStream; // store the video stream
                    hr = pCFilter->pVideoStream->RequestSample(NULL); // request the first video sample
                    pCFilter->hVideoThread = CreateThread(NULL, 0, VideoThread, pCFilter, 0, NULL); // create the video worker thread
                }
                if (guid == MEDIATYPE_Audio) // audio stream
                {
                    pCFilter->pAudioStream = pIMFMediaStream; // store the audio stream
                    hr = pCFilter->pAudioStream->RequestSample(NULL); // request the first audio sample
                    pCFilter->hAudioThread = CreateThread(NULL, 0, AudioThread, pCFilter, 0, NULL); // create the audio worker thread
                }
                break;
            case MEUpdatedStream: // "updated stream" event (sent on seek or restart)
                hr = vr.punkVal->QueryInterface(&pIMFMediaStream); // get the stream interface
                vr.punkVal->Release(); // release the IUnknown interface
                hr = pIMFMediaStream->GetStreamDescriptor(&pIMFStreamDescriptor); // get the stream descriptor
                hr = pIMFStreamDescriptor->GetMediaTypeHandler(&pHandler); // get the media type handler
                SafeRelease(&pIMFStreamDescriptor); // release the stream descriptor
                hr = pHandler->GetMajorType(&guid); // get the major type
                SafeRelease(&pHandler); // release the media type handler
                if (guid == MEDIATYPE_Video) // video stream
                {
                    pCFilter->pVideoStream = pIMFMediaStream; // store the video stream
                    hr = pCFilter->pVideoStream->RequestSample(NULL); // request the first video sample
                    DWORD dw = WaitForSingleObject(pCFilter->hVideoThread, 0);
                    if (dw == WAIT_OBJECT_0) // the video worker thread has exited; this check avoids creating a new thread on a seek
                    {
                        pCFilter->hVideoThread = CreateThread(NULL, 0, VideoThread, pCFilter, 0, NULL); // create the video worker thread
                    }
                }
                if (guid == MEDIATYPE_Audio) // audio stream
                {
                    pCFilter->pAudioStream = pIMFMediaStream; // store the audio stream
                    hr = pCFilter->pAudioStream->RequestSample(NULL); // request the first audio sample
                    DWORD dw = WaitForSingleObject(pCFilter->hAudioThread, 0);
                    if (dw == WAIT_OBJECT_0) // the audio worker thread has exited; this check avoids creating a new thread on a seek
                    {
                        pCFilter->hAudioThread = CreateThread(NULL, 0, AudioThread, pCFilter, 0, NULL); // create the audio worker thread
                    }
                }
                break;
            }
            PropVariantClear(&vr);
        }
        SafeRelease(&pSourceEvent); // release the media source event
    }
    goto Agan;
}

DWORD WINAPI VideoThread(LPVOID pParam) // video worker thread

{
    CFilter* pCFilter = (CFilter*)pParam;
    HRESULT hr;
    hr = pCFilter->pCPin1->DeliverBeginFlush();
    Sleep(200);
    hr = pCFilter->pCPin1->DeliverEndFlush();
    hr = pCFilter->pCPin1->DeliverNewSegment(0, pCFilter->DUR - pCFilter->mStart, 1.0);
Agan:
    HRESULT hr1, hr2;
    IMFSample* pIMFSample = NULL;
    IMFMediaEvent* pStreamEvent = NULL;
    hr1 = pCFilter->pVideoStream->GetEvent(0, &pStreamEvent); // get a media stream event, waiting indefinitely
    if (hr1 == S_OK)
    {
        MediaEventType meType = MEUnknown;
        hr2 = pStreamEvent->GetType(&meType); // get the event type
        if (hr2 == S_OK)
        {
            PROPVARIANT var;
            PropVariantInit(&var);
            hr = pStreamEvent->GetValue(&var); // get the event value
            switch (meType)
            {
            case MEMediaSample: // "media sample" event
                hr = var.punkVal->QueryInterface(&pIMFSample); // get the sample interface
                if (hr == S_OK)
                {
                    HRESULT hrA, hrB;
                    UINT32 CleanPoint;
                    hrA = pIMFSample->GetUINT32(MFSampleExtension_CleanPoint, &CleanPoint); // is this a key frame
                    UINT32 Discontinuity;
                    hrB = pIMFSample->GetUINT32(MFSampleExtension_Discontinuity, &Discontinuity); // does it carry the discontinuity flag
                    LONGLONG star, dur;
                    hr = pIMFSample->GetSampleTime(&star);
                    hr = pIMFSample->GetSampleDuration(&dur);
                    DWORD len;
                    hr = pIMFSample->GetTotalLength(&len);
                    DWORD Count;
                    hr = pIMFSample->GetBufferCount(&Count);
                    IMFMediaBuffer* pBuffer = NULL;
                    if (Count == 1)
                        hr = pIMFSample->GetBufferByIndex(0, &pBuffer);
                    else
                        hr = pIMFSample->ConvertToContiguousBuffer(&pBuffer); // convert a sample with several buffers into one with a single buffer
                    BYTE* pData = NULL; DWORD MLen, CLen;
                    hr = pBuffer->Lock(&pData, &MLen, &CLen);
                    IMediaSample *pOutSample = NULL;
                    hr = pCFilter->pCPin1->GetDeliveryBuffer(&pOutSample, NULL, NULL, 0); // get an empty output pin sample
                    if (hr == S_OK)
                    {
                        BYTE* pOutBuffer = NULL;
                        hr = pOutSample->GetPointer(&pOutBuffer); // get the buffer pointer of the output pin sample
                        if (pOutBuffer && pData && len <= 10000000) CopyMemory(pOutBuffer, pData, len); // copy only if the data fits the 10 MB pin buffer
                        LONGLONG STAR = star - pCFilter->mStart, END = STAR + dur;
                        hr = pOutSample->SetTime(&STAR, &END); // set the time stamps of the output pin sample
                        hr = pOutSample->SetActualDataLength(len); // set the valid data length of the output pin sample
                        if (hrA == S_OK)
                        {
                            if (CleanPoint) // key frame
                                hr = pOutSample->SetSyncPoint(TRUE); // set the sync point flag
                            else
                                hr = pOutSample->SetSyncPoint(FALSE);
                        }
                        if (hrB == S_OK)
                        {
                            if (Discontinuity) // discontinuity flag present
                            {
                                hr = pOutSample->SetDiscontinuity(TRUE); // set the discontinuity flag
                            }
                            else
                                hr = pOutSample->SetDiscontinuity(FALSE);
                        }
                        hr = pCFilter->pCPin1->Deliver(pOutSample); // the output pin delivers the sample downstream
                        pOutSample->Release(); // release the output pin sample
                    }
                    pBuffer->Unlock(); SafeRelease(&pBuffer);
                    SafeRelease(&pIMFSample);
                    hr = pCFilter->pVideoStream->RequestSample(NULL); // request the next sample
                }
                break;
            case MEEndOfStream: // "end of stream" event
                hr = pCFilter->pCPin1->DeliverEndOfStream();
                break;
            case MEStreamStopped: // "stream stopped" event
                PropVariantClear(&var);
                SafeRelease(&pStreamEvent);
                return 1; // terminate the video worker thread
            }
            PropVariantClear(&var);
        }
        SafeRelease(&pStreamEvent);
    }
    goto Agan;
}

DWORD WINAPI AudioThread(LPVOID pParam) // audio worker thread

{
    CFilter* pCFilter = (CFilter*)pParam;
    HRESULT hr, hrA, hrB;
    hr = pCFilter->pCPin2->DeliverBeginFlush();
    Sleep(100);
    hr = pCFilter->pCPin2->DeliverEndFlush();
    hr = pCFilter->pCPin2->DeliverNewSegment(0, pCFilter->DUR - pCFilter->mStart, 1.0);
Agan:
    HRESULT hr1, hr2;
    IMFSample* pIMFSample = NULL;
    IMFMediaEvent* pStreamEvent = NULL;
    hr1 = pCFilter->pAudioStream->GetEvent(0, &pStreamEvent); // get a media stream event, waiting indefinitely
    if (hr1 == S_OK)
    {
        MediaEventType meType = MEUnknown;
        hr2 = pStreamEvent->GetType(&meType); // get the event type
        if (hr2 == S_OK)
        {
            PROPVARIANT var;
            PropVariantInit(&var);
            hr = pStreamEvent->GetValue(&var); // get the event value
            switch (meType)
            {
            case MEMediaSample: // "media sample" event
                hr = var.punkVal->QueryInterface(&pIMFSample); // get the sample interface
                if (hr == S_OK)
                {
                    UINT32 CleanPoint;
                    hrA = pIMFSample->GetUINT32(MFSampleExtension_CleanPoint, &CleanPoint); // is this a key frame
                    UINT32 Discontinuity;
                    hrB = pIMFSample->GetUINT32(MFSampleExtension_Discontinuity, &Discontinuity); // does it carry the discontinuity flag
                    LONGLONG star, dur;
                    hr = pIMFSample->GetSampleTime(&star);
                    pCFilter->CUR = star; // current audio position
                    hr = pIMFSample->GetSampleDuration(&dur);
                    DWORD Count;
                    hr = pIMFSample->GetBufferCount(&Count);
                    IMFMediaBuffer* pBuffer = NULL;
                    if (Count == 1)
                        hr = pIMFSample->GetBufferByIndex(0, &pBuffer);
                    else
                        hr = pIMFSample->ConvertToContiguousBuffer(&pBuffer); // convert a sample with several buffers into one with a single buffer
                    BYTE* pData = NULL;
                    hr = pBuffer->Lock(&pData, NULL, NULL);
                    DWORD len;
                    hr = pIMFSample->GetTotalLength(&len);
                    IMediaSample *pOutSample = NULL;
                    hr = pCFilter->pCPin2->GetDeliveryBuffer(&pOutSample, NULL, NULL, 0); // get an empty output pin sample
                    if (hr == S_OK)
                    {
                        BYTE* pOutBuffer = NULL;
                        hr = pOutSample->GetPointer(&pOutBuffer); // get the buffer pointer of the output pin sample
                        if (pOutBuffer && pData && len <= 1000000) CopyMemory(pOutBuffer, pData, len); // copy only if the data fits the 1 MB pin buffer
                        LONGLONG STAR = star - pCFilter->mStart, END = STAR + dur;
                        hr = pOutSample->SetTime(&STAR, &END); // set the time stamps of the output pin sample
                        hr = pOutSample->SetActualDataLength(len); // set the valid data length of the output pin sample
                        if (hrA == S_OK)
                        {
                            if (CleanPoint) // key frame
                                hr = pOutSample->SetSyncPoint(TRUE); // set the sync point flag
                            else
                                hr = pOutSample->SetSyncPoint(FALSE);
                        }
                        if (hrB == S_OK)
                        {
                            if (Discontinuity) // discontinuity flag present
                            {
                                hr = pOutSample->SetDiscontinuity(TRUE); // set the discontinuity flag
                            }
                            else
                                hr = pOutSample->SetDiscontinuity(FALSE);
                        }
                        hr = pCFilter->pCPin2->Deliver(pOutSample); // the output pin delivers the sample downstream
                        pOutSample->Release(); // release the output pin sample
                    }
                    pBuffer->Unlock(); SafeRelease(&pBuffer);
                    SafeRelease(&pIMFSample);
                    hr = pCFilter->pAudioStream->RequestSample(NULL); // request the next sample
                }
                break;
            case MEEndOfStream: // "end of stream" event
                hr = pCFilter->pCPin2->DeliverEndOfStream();
                break;
            case MEStreamStopped: // "stream stopped" event
                PropVariantClear(&var);
                SafeRelease(&pStreamEvent);
                return 1; // terminate the audio worker thread
            }
            PropVariantClear(&var);
        }
        SafeRelease(&pStreamEvent);
    }
    goto Agan;
}

CPin1.cpp

#include "DLL.h"

CPin1::CPin1(CFilter *pFilter, HRESULT *phr, LPCWSTR pPinName) : CBaseOutputPin(NAME("Video"), pFilter, pFilter, phr, pPinName)
{
    pCFilter = pFilter;
}

CPin1::~CPin1()
{
}

STDMETHODIMP CPin1::NonDelegatingQueryInterface(REFIID riid, void **ppv)
{
    if (riid == IID_IQualityControl)
    {
        return CBasePin::NonDelegatingQueryInterface(riid, ppv);
    }
    else
        return CBasePin::NonDelegatingQueryInterface(riid, ppv);
}

HRESULT CPin1::CheckMediaType(const CMediaType *pmt)
{
    AM_MEDIA_TYPE* pMt = NULL;
    pCFilter->pVideoType->GetRepresentation(AM_MEDIA_TYPE_REPRESENTATION, (void**)&pMt); // convert the media type held as IMFMediaType into an AM_MEDIA_TYPE structure
    CMediaType MT(*pMt);
    if (pmt->MatchesPartial(&MT))
    {
        pCFilter->pVideoType->FreeRepresentation(AM_MEDIA_TYPE_REPRESENTATION, pMt); // free the memory allocated by GetRepresentation
        return S_OK;
    }
    pCFilter->pVideoType->FreeRepresentation(AM_MEDIA_TYPE_REPRESENTATION, pMt); // free the memory allocated by GetRepresentation
    return S_FALSE;
}

HRESULT CPin1::GetMediaType(int iPosition, CMediaType *pmt)
{
    if (pCFilter->m_pFileName == NULL) return S_FALSE;
    if (iPosition == 0)
    {
        AM_MEDIA_TYPE* pMt = NULL;
        pCFilter->pVideoType->GetRepresentation(AM_MEDIA_TYPE_REPRESENTATION, (void**)&pMt); // convert the media type held as IMFMediaType into an AM_MEDIA_TYPE structure
        pmt->Set(*pMt);
        pCFilter->pVideoType->FreeRepresentation(AM_MEDIA_TYPE_REPRESENTATION, pMt); // free the memory allocated by GetRepresentation
        HasSet = TRUE;
        return S_OK;
    }
    return S_FALSE;
}

HRESULT CPin1::SetMediaType(const CMediaType *pmt)
{
    if (HasSet == FALSE) // GetMediaType has not been called
    {
        GetMediaType(0, &m_mt); // set the pin's media type
        return S_OK;
    }
    return CBasePin::SetMediaType(pmt);
}

HRESULT CPin1::BreakConnect()
{
    HasSet = FALSE;
    return CBasePin::BreakConnect();
}

HRESULT CPin1::DecideBufferSize(IMemAllocator *pMemAllocator, ALLOCATOR_PROPERTIES * ppropInputRequest) // decide the buffer size of output pin samples
{
    HRESULT hr = S_OK;
    ppropInputRequest->cBuffers = 1; // one buffer
    ppropInputRequest->cbBuffer = 10000000; // buffer size: 10 MB
    ALLOCATOR_PROPERTIES Actual;
    hr = pMemAllocator->SetProperties(ppropInputRequest, &Actual);
    if (FAILED(hr)) return hr;
    if (Actual.cbBuffer < ppropInputRequest->cbBuffer) // is this allocator unsuitable
    {
        return E_FAIL;
    }
    ASSERT(Actual.cBuffers == 1); // make sure we have exactly one buffer
    return S_OK;
}

CPin2.cpp

#include "DLL.h"

CPin2::CPin2(CFilter *pFilter, HRESULT *phr, LPCWSTR pPinName) : CBaseOutputPin(NAME("Audio"), pFilter, pFilter, phr, pPinName)
{
    pCFilter = pFilter;
}

CPin2::~CPin2()
{
}

STDMETHODIMP CPin2::NonDelegatingQueryInterface(REFIID riid, void **ppv)
{
    if (riid == IID_IMediaSeeking)
    {
        return GetInterface(static_cast<IMediaSeeking*>(this), ppv);
    }
    else
        return CBaseOutputPin::NonDelegatingQueryInterface(riid, ppv);
}

HRESULT CPin2::CheckMediaType(const CMediaType *pmt)
{
    AM_MEDIA_TYPE* pMt = NULL;
    pCFilter->pAudioType->GetRepresentation(AM_MEDIA_TYPE_REPRESENTATION, (void**)&pMt); // convert the media type held as IMFMediaType into an AM_MEDIA_TYPE structure
    CMediaType MT(*pMt);
    if (pmt->MatchesPartial(&MT))
    {
        pCFilter->pAudioType->FreeRepresentation(AM_MEDIA_TYPE_REPRESENTATION, pMt); // free the memory allocated by GetRepresentation
        return S_OK;
    }
    pCFilter->pAudioType->FreeRepresentation(AM_MEDIA_TYPE_REPRESENTATION, pMt); // free the memory allocated by GetRepresentation
    return S_FALSE;
}

HRESULT CPin2::GetMediaType(int iPosition, CMediaType *pmt)
{
    if (pCFilter->m_pFileName == NULL) return S_FALSE;
    if (iPosition == 0)
    {
        AM_MEDIA_TYPE* pMt = NULL;
        pCFilter->pAudioType->GetRepresentation(AM_MEDIA_TYPE_REPRESENTATION, (void**)&pMt); // convert the media type held as IMFMediaType into an AM_MEDIA_TYPE structure
        pmt->Set(*pMt);
        pCFilter->pAudioType->FreeRepresentation(AM_MEDIA_TYPE_REPRESENTATION, pMt); // free the memory allocated by GetRepresentation
        HasSet = TRUE;
        return S_OK;
    }
    return S_FALSE;
}

HRESULT CPin2::SetMediaType(const CMediaType *pmt)
{
    if (HasSet == FALSE) // GetMediaType has not been called
    {
        GetMediaType(0, &m_mt); // set the pin's media type
        return S_OK;
    }
    return CBasePin::SetMediaType(pmt);
}

HRESULT CPin2::BreakConnect()
{
    HasSet = FALSE;
    return CBasePin::BreakConnect();
}

HRESULT CPin2::DecideBufferSize(IMemAllocator *pMemAllocator, ALLOCATOR_PROPERTIES * ppropInputRequest) // decide the buffer size of output pin samples
{
    HRESULT hr = S_OK;
    ppropInputRequest->cBuffers = 1; // one buffer
    ppropInputRequest->cbBuffer = 1000000; // buffer size: 1 MB
    ALLOCATOR_PROPERTIES Actual;
    hr = pMemAllocator->SetProperties(ppropInputRequest, &Actual);
    if (FAILED(hr)) return hr;
    if (Actual.cbBuffer < ppropInputRequest->cbBuffer) // is this allocator unsuitable
    {
        return E_FAIL;
    }
    ASSERT(Actual.cBuffers == 1); // make sure we have exactly one buffer
    return S_OK;
}

HRESULT STDMETHODCALLTYPE CPin2::CheckCapabilities(DWORD *pCapabilities) // query whether the stream has the specified seeking capabilities

{
    CheckPointer(pCapabilities, E_POINTER);
    return (~m_dwSeekingCaps & *pCapabilities) ? S_FALSE : S_OK;
}

HRESULT STDMETHODCALLTYPE CPin2::ConvertTimeFormat(LONGLONG *pTarget, const GUID *pTargetFormat, LONGLONG Source, const GUID *pSourceFormat) // convert from one time format to another
{
    CheckPointer(pTarget, E_POINTER);
    if (pTargetFormat == 0 || *pTargetFormat == TIME_FORMAT_MEDIA_TIME)
    {
        if (pSourceFormat == 0 || *pSourceFormat == TIME_FORMAT_MEDIA_TIME)
        {
            *pTarget = Source;
            return S_OK;
        }
    }
    return E_INVALIDARG;
}

HRESULT STDMETHODCALLTYPE CPin2::GetAvailable(LONGLONG *pEarliest, LONGLONG *pLatest) // get the time range in which seeking is valid
{
    if (pEarliest)
    {
        *pEarliest = 0;
    }
    if (pLatest)
    {
        CAutoLock lock(m_pLock);
        *pLatest = pCFilter->DUR;
    }
    return S_OK;
}

HRESULT STDMETHODCALLTYPE CPin2::GetCapabilities(DWORD *pCapabilities) // retrieve all seeking capabilities of the stream
{
    CheckPointer(pCapabilities, E_POINTER);
    *pCapabilities = m_dwSeekingCaps;
    return S_OK;
}

HRESULT STDMETHODCALLTYPE CPin2::GetCurrentPosition(LONGLONG *pCurrent) // get the current position relative to the total duration of the stream
{
    *pCurrent = pCFilter->CUR;
    return S_OK;
}

HRESULT STDMETHODCALLTYPE CPin2::GetDuration(LONGLONG *pDuration) // get the duration of the stream
{
    CheckPointer(pDuration, E_POINTER);
    CAutoLock lock(m_pLock);
    *pDuration = pCFilter->DUR;
    return S_OK;
}

HRESULT STDMETHODCALLTYPE CPin2::GetPositions(LONGLONG *pCurrent, LONGLONG *pStop) // get the current and stop positions relative to the total duration of the stream
{
    CheckPointer(pCurrent, E_POINTER); CheckPointer(pStop, E_POINTER);
    *pCurrent = pCFilter->CUR; *pStop = pCFilter->DUR;
    return S_OK;
}

HRESULT STDMETHODCALLTYPE CPin2::GetPreroll(LONGLONG *pllPreroll) // get the amount of data that will be queued before the start position
{
    CheckPointer(pllPreroll, E_POINTER);
    *pllPreroll = 0;
    return S_OK;
}

HRESULT STDMETHODCALLTYPE CPin2::GetRate(double *pdRate) // get the playback rate
{
    CheckPointer(pdRate, E_POINTER);
    CAutoLock lock(m_pLock);
    *pdRate = 1.0;
    return S_OK;
}

HRESULT STDMETHODCALLTYPE CPin2::GetStopPosition(LONGLONG *pStop) // get the stop time relative to the duration of the stream
{
    CheckPointer(pStop, E_POINTER);
    CAutoLock lock(m_pLock);
    *pStop = pCFilter->DUR;
    return S_OK;
}

HRESULT STDMETHODCALLTYPE CPin2::GetTimeFormat(GUID *pFormat) // get the time format currently used for seek operations
{
    CheckPointer(pFormat, E_POINTER);
    *pFormat = TIME_FORMAT_MEDIA_TIME;
    return S_OK;
}

HRESULT STDMETHODCALLTYPE CPin2::IsFormatSupported(const GUID *pFormat) // determine whether seek operations support the specified time format
{
    CheckPointer(pFormat, E_POINTER);
    return *pFormat == TIME_FORMAT_MEDIA_TIME ? S_OK : S_FALSE;
}

HRESULT STDMETHODCALLTYPE CPin2::IsUsingTimeFormat(const GUID *pFormat) // determine whether seek operations currently use the specified time format
{
    CheckPointer(pFormat, E_POINTER);
    return *pFormat == TIME_FORMAT_MEDIA_TIME ? S_OK : S_FALSE;
}

HRESULT STDMETHODCALLTYPE CPin2::QueryPreferredFormat(GUID *pFormat) // get the preferred time format for seeking
{
    CheckPointer(pFormat, E_POINTER);
    *pFormat = TIME_FORMAT_MEDIA_TIME;
    return S_OK;
}

HRESULT STDMETHODCALLTYPE CPin2::SetPositions(LONGLONG *pCurrent, DWORD dwCurrentFlags, LONGLONG *pStop, DWORD dwStopFlags) // set the current and stop positions

{
    CheckPointer(pCurrent, E_POINTER);
    DWORD dwCurrentPos = dwCurrentFlags & AM_SEEKING_PositioningBitsMask;
    if (dwCurrentPos == AM_SEEKING_AbsolutePositioning && *pCurrent >= 0 && *pCurrent <= pCFilter->DUR)
    {
        HRESULT hr;
        FILTER_STATE fs;
        hr = pCFilter->GetState(0, &fs);
        if (fs != State_Stopped && *pCurrent && *pCurrent < pCFilter->DUR - 10000000)
        {
            hr = pCFilter->pIMFMediaSource->Pause(); // pause the media source
            hr = pCFilter->pCPin1->DeliverBeginFlush();
            hr = pCFilter->pCPin2->DeliverBeginFlush();
            Sleep(200); // make sure the downstream decoders discard all samples; if any sample remains in a decoder, the renderer waits for its presentation time (time stamp) and the video stalls here
            hr = pCFilter->pCPin1->DeliverEndFlush();
            hr = pCFilter->pCPin2->DeliverEndFlush();
            hr = pCFilter->pCPin1->DeliverNewSegment(0, pCFilter->DUR - pCFilter->mStart, 1.0);
            hr = pCFilter->pCPin2->DeliverNewSegment(0, pCFilter->DUR - pCFilter->mStart, 1.0);
            pCFilter->mStart = *pCurrent;
            PROPVARIANT var;
            PropVariantInit(&var);
            var.vt = VT_I8;
            var.hVal.QuadPart = pCFilter->mStart;
            hr = pCFilter->pIMFMediaSource->Start(pCFilter->pISourceD, NULL, &var); // start the media source
            PropVariantClear(&var);
            return S_OK;
        }
    }
    return E_INVALIDARG;
}

HRESULT STDMETHODCALLTYPE CPin2::SetRate(double dRate) // set the playback rate
{
    if (dRate == 1.0) return S_OK;
    else return S_FALSE;
}

HRESULT STDMETHODCALLTYPE CPin2::SetTimeFormat(const GUID *pFormat) // set the time format for subsequent seek operations
{
    CheckPointer(pFormat, E_POINTER);
    return *pFormat == TIME_FORMAT_MEDIA_TIME ? S_OK : E_INVALIDARG;
}
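Two small pieces of arithmetic in the listing are easy to miss: the worker threads rebase each Media Foundation sample time against the segment start (`STAR = star - mStart`, `END = STAR + dur`), and `CPin2::SetPositions` accepts a seek only while the filter is not stopped and the target is non-zero and more than one second before the end. The following portable sketch restates both rules outside of DirectShow. `SampleTimes`, `RebaseSampleTime`, and `IsSeekTargetAccepted` are hypothetical names for illustration, and all times are in 100 ns units as in the filter.

```cpp
#include <cassert>
#include <cstdint>

const int64_t kOneSecond = 10000000;  // one second in 100 ns units

// Start and end time stamps of a pin sample.
struct SampleTimes {
    int64_t start;
    int64_t end;
};

// Rebases a source sample time so that the segment start maps to zero,
// mirroring "STAR = star - mStart; END = STAR + dur" in the worker threads.
SampleTimes RebaseSampleTime(int64_t sampleTime, int64_t sampleDuration,
                             int64_t segmentStart) {
    SampleTimes t;
    t.start = sampleTime - segmentStart;
    t.end = t.start + sampleDuration;
    return t;
}

// Mirrors the range checks in CPin2::SetPositions: the target must lie
// inside the file, the filter must not be stopped, and the target must be
// non-zero and more than one second before the end of the file.
bool IsSeekTargetAccepted(int64_t target, int64_t fileDuration, bool stopped) {
    if (target < 0 || target > fileDuration) return false;
    return !stopped && target != 0 && target < fileDuration - kOneSecond;
}
```

For example, after a seek to the 50 second mark, a sample that the source stamps at 50.04 s with a 0.04 s duration is delivered downstream with time stamps 0.04 s to 0.08 s.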

相关文章:

)

DirectShow过滤器开发-读视频文件过滤器(再写)

下载本过滤器DLL 本过滤器读取视频文件输出视频流和音频流。流类型由文件决定。已知可读取的文件格式有:AVI,ASF,MOV,MP4,MPG,WMV。 过滤器信息 过滤器名称:读视频文件 过滤器GUID:…...

代码练习2.3

终端输入10个学生成绩,使用冒泡排序对学生成绩从低到高排序 #include <stdio.h>void bubbleSort(int arr[], int n) {for (int i 0; i < n-1; i) {for (int j 0; j < n-i-1; j) {if (arr[j] > arr[j1]) {// 交换 arr[j] 和 arr[j1]int temp arr[…...

基于 Redis GEO 实现条件分页查询用户附近的场馆列表

🎯 本文档详细介绍了如何使用Redis GEO模块实现场馆位置的存储与查询,以支持“附近场馆”搜索功能。首先,通过微信小程序获取用户当前位置,并将该位置信息与场馆的经纬度数据一同存储至Redis中。利用Redis GEO高效的地理空间索引能…...

)

【大数据技术】案例01:词频统计样例(hadoop+mapreduce+yarn)

词频统计(hadoop+mapreduce+yarn) 搭建完全分布式高可用大数据集群(VMware+CentOS+FinalShell) 搭建完全分布式高可用大数据集群(Hadoop+MapReduce+Yarn) 在阅读本文前,请确保已经阅读过以上两篇文章,成功搭建了Hadoop+MapReduce+Yarn的大数据集群环境。 写在前面 Wo…...

Selenium 使用指南:从入门到精通

Selenium 使用指南:从入门到精通 Selenium 是一个用于自动化 Web 浏览器操作的强大工具,广泛应用于自动化测试和 Web 数据爬取中。本文将带你从入门到精通地掌握 Selenium,涵盖其基本操作、常用用法以及一个完整的图片爬取示例。 1. 环境配…...

笔试-排列组合

应用 一个长度为[1, 50]、元素都是字符串的非空数组,每个字符串的长度为[1, 30],代表非负整数,元素可以以“0”开头。例如:[“13”, “045”,“09”,“56”]。 将所有字符串排列组合,拼起来组成…...

Java序列化详解

1 什么是序列化、反序列化 在Java编程实践中,当我们需要持久化Java对象,比如把Java对象保存到文件里,或是在网络中传输Java对象时,序列化机制就发挥着关键作用。 序列化:指的是把数据结构或对象转变为可存储、可传输的…...

ChatGPT与GPT的区别与联系

ChatGPT 和 GPT 都是基于 Transformer 架构的语言模型,但它们有不同的侧重点和应用。下面我们来探讨一下它们的区别与联系。 1. GPT(Generative Pre-trained Transformer) GPT 是一类由 OpenAI 开发的语言模型,基于 Transformer…...

MySQL入门 – CRUD基本操作

MySQL入门 – CRUD基本操作 Essential CRUD Manipulation to MySQL Database By JacksonML 本文简要介绍操作MySQL数据库的基本操作,即创建(Create), 读取(Read), 更新(Update)和删除(Delete)。 基于数据表的关系型…...

Redis背景介绍

⭐️前言⭐️ 本文主要做Redis相关背景介绍,包括核心能力、重要特性和使用场景。 🍉欢迎点赞 👍 收藏 ⭐留言评论 🍉博主将持续更新学习记录收获,友友们有任何问题可以在评论区留言 🍉博客中涉及源码及博主…...

PPT演示设置:插入音频同步切换播放时长计算

PPT中插入音频&同步切换&放时长计算 一、 插入音频及音频设置二、设置页面切换和音频同步三、播放时长计算 一、 插入音频及音频设置 1.插入音频:点击菜单栏插入-音频-选择PC上的音频(已存在的音频)或者录制音频(现场录制…...

DIFY源码解析

偶然发现Github上某位大佬开源的DIFY源码注释和解析,目前还处于陆续不断更新地更新过程中,为大佬的专业和开源贡献精神点赞。先收藏链接,后续慢慢学习。 相关链接如下: DIFY源码解析...

[权限提升] Wdinwos 提权 维持 — 系统错误配置提权 - Trusted Service Paths 提权

关注这个专栏的其他相关笔记:[内网安全] 内网渗透 - 学习手册-CSDN博客 0x01:Trusted Service Paths 提权原理 Windows 的服务通常都是以 System 权限运行的,所以系统在解析服务的可执行文件路径中的空格的时候也会以 System 权限进行解析&a…...

【算法】回溯算法专题② ——组合型回溯 + 剪枝 python

目录 前置知识进入正题小试牛刀实战演练总结 前置知识 【算法】回溯算法专题① ——子集型回溯 python 进入正题 组合https://leetcode.cn/problems/combinations/submissions/596357179/ 给定两个整数 n 和 k,返回范围 [1, n] 中所有可能的 k 个数的组合。 你可以…...

LeetCode:121.买卖股票的最佳时机1

跟着carl学算法,本系列博客仅做个人记录,建议大家都去看carl本人的博客,写的真的很好的! 代码随想录 LeetCode:121.买卖股票的最佳时机1 给定一个数组 prices ,它的第 i 个元素 prices[i] 表示一支给定股票…...

pytorch生成对抗网络

人工智能例子汇总:AI常见的算法和例子-CSDN博客 生成对抗网络(GAN,Generative Adversarial Network)是一种深度学习模型,由两个神经网络组成:生成器(Generator)和判别器࿰…...

Visual Studio Code应用本地部署的deepseek

1.打开Visual Studio Code,在插件中搜索continue,安装插件。 2.添加新的大语言模型,我们选择ollama. 3.直接点connect,会链接本地下载好的deepseek模型。 参看上篇文章:deepseek本地部署-CSDN博客 4.输入需求生成可用…...

用 HTML、CSS 和 JavaScript 实现抽奖转盘效果

顺序抽奖 前言 这段代码实现了一个简单的抽奖转盘效果。页面上有一个九宫格布局的抽奖区域,周围八个格子分别放置了不同的奖品名称,中间是一个 “开始抽奖” 的按钮。点击按钮后,抽奖区域的格子会快速滚动,颜色不断变化…...

Skewer v0.2.2安装与使用-生信工具43

01 Skewer 介绍 Skewer(来自于 SourceForge)实现了一种基于位掩码的 k-差异匹配算法,专门用于接头修剪,特别设计用于处理下一代测序(NGS)双端序列。 fastp安装及使用-fastp v0.23.4(bioinfoma…...

C语言:链表排序与插入的实现

好的!以下是一篇关于这段代码的博客文章: 从零开始:链表排序与插入的实现 在数据结构的学习中,链表是一种非常基础且重要的数据结构。今天,我们将通过一个简单的 C 语言程序,来探讨如何实现一个从小到大排序的链表,并在其中插入一个新的节点。这个过程不仅涉及链表的基…...

Go 语言接口详解

Go 语言接口详解 核心概念 接口定义 在 Go 语言中,接口是一种抽象类型,它定义了一组方法的集合: // 定义接口 type Shape interface {Area() float64Perimeter() float64 } 接口实现 Go 接口的实现是隐式的: // 矩形结构体…...

2024年赣州旅游投资集团社会招聘笔试真

2024年赣州旅游投资集团社会招聘笔试真 题 ( 满 分 1 0 0 分 时 间 1 2 0 分 钟 ) 一、单选题(每题只有一个正确答案,答错、不答或多答均不得分) 1.纪要的特点不包括()。 A.概括重点 B.指导传达 C. 客观纪实 D.有言必录 【答案】: D 2.1864年,()预言了电磁波的存在,并指出…...

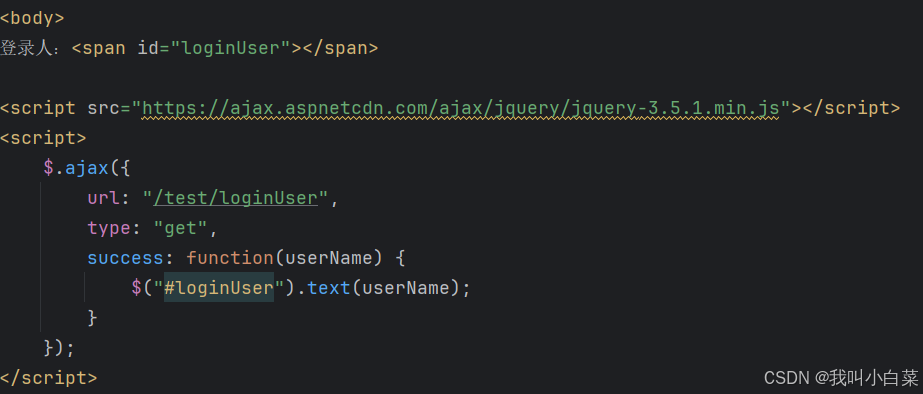

【Java_EE】Spring MVC

目录 Spring Web MVC 编辑注解 RestController RequestMapping RequestParam RequestParam RequestBody PathVariable RequestPart 参数传递 注意事项 编辑参数重命名 RequestParam 编辑编辑传递集合 RequestParam 传递JSON数据 编辑RequestBody …...

ios苹果系统,js 滑动屏幕、锚定无效

现象:window.addEventListener监听touch无效,划不动屏幕,但是代码逻辑都有执行到。 scrollIntoView也无效。 原因:这是因为 iOS 的触摸事件处理机制和 touch-action: none 的设置有关。ios有太多得交互动作,从而会影响…...

图表类系列各种样式PPT模版分享

图标图表系列PPT模版,柱状图PPT模版,线状图PPT模版,折线图PPT模版,饼状图PPT模版,雷达图PPT模版,树状图PPT模版 图表类系列各种样式PPT模版分享:图表系列PPT模板https://pan.quark.cn/s/20d40aa…...

:邮件营销与用户参与度的关键指标优化指南)

精益数据分析(97/126):邮件营销与用户参与度的关键指标优化指南

精益数据分析(97/126):邮件营销与用户参与度的关键指标优化指南 在数字化营销时代,邮件列表效度、用户参与度和网站性能等指标往往决定着创业公司的增长成败。今天,我们将深入解析邮件打开率、网站可用性、页面参与时…...

企业如何增强终端安全?

在数字化转型加速的今天,企业的业务运行越来越依赖于终端设备。从员工的笔记本电脑、智能手机,到工厂里的物联网设备、智能传感器,这些终端构成了企业与外部世界连接的 “神经末梢”。然而,随着远程办公的常态化和设备接入的爆炸式…...

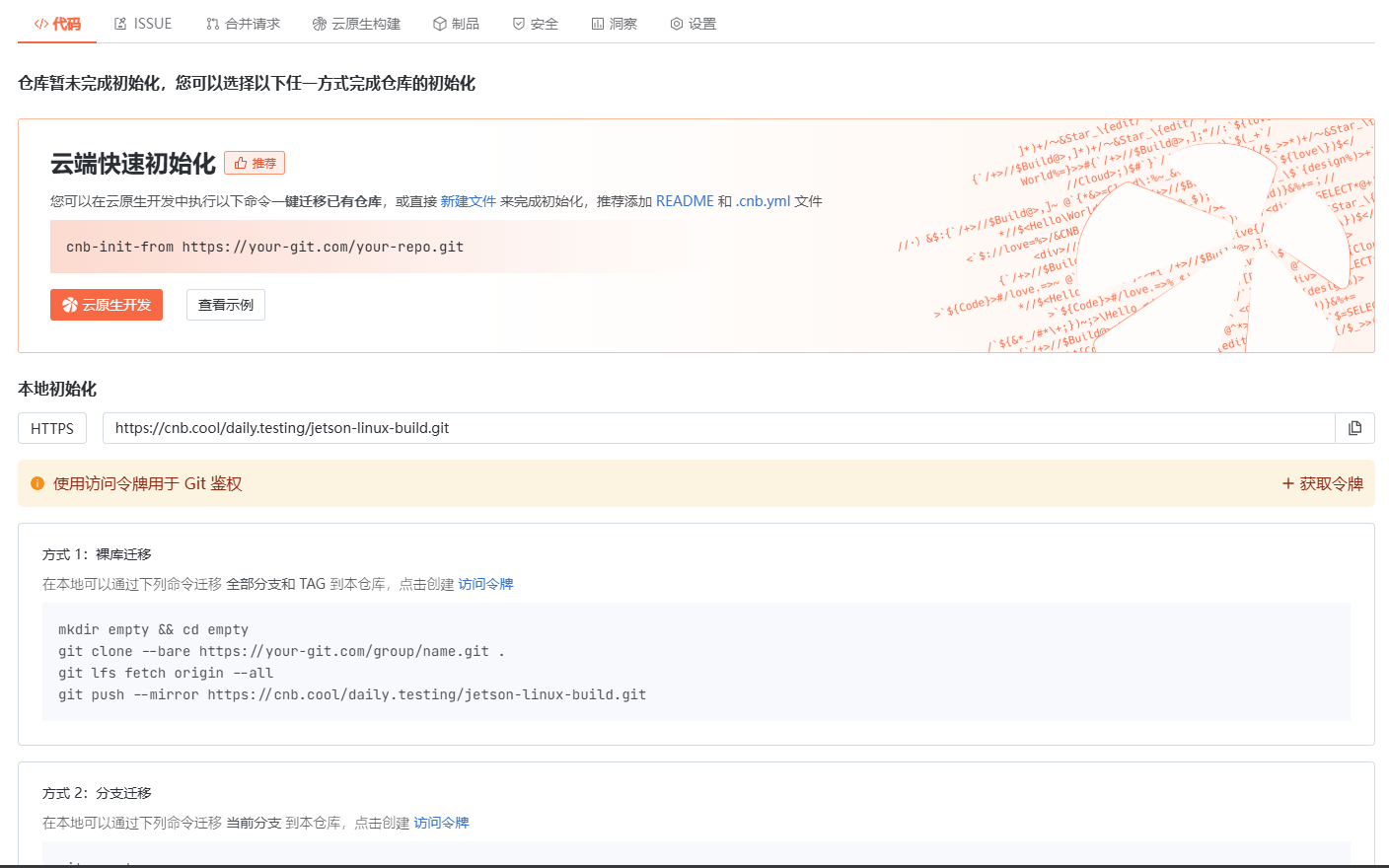

云原生玩法三问:构建自定义开发环境

云原生玩法三问:构建自定义开发环境 引言 临时运维一个古董项目,无文档,无环境,无交接人,俗称三无。 运行设备的环境老,本地环境版本高,ssh不过去。正好最近对 腾讯出品的云原生 cnb 感兴趣&…...

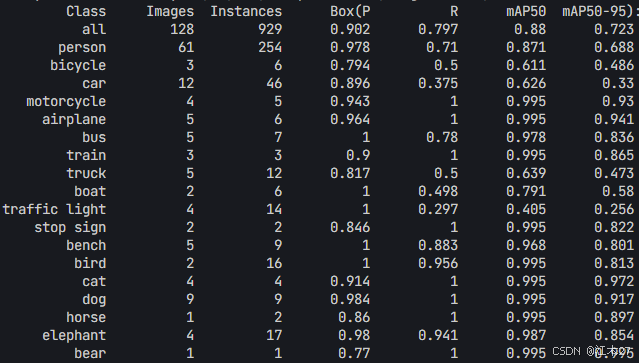

Yolov8 目标检测蒸馏学习记录

yolov8系列模型蒸馏基本流程,代码下载:这里本人提交了一个demo:djdll/Yolov8_Distillation: Yolov8轻量化_蒸馏代码实现 在轻量化模型设计中,**知识蒸馏(Knowledge Distillation)**被广泛应用,作为提升模型…...

CSS | transition 和 transform的用处和区别

省流总结: transform用于变换/变形,transition是动画控制器 transform 用来对元素进行变形,常见的操作如下,它是立即生效的样式变形属性。 旋转 rotate(角度deg)、平移 translateX(像素px)、缩放 scale(倍数)、倾斜 skewX(角度…...