llama.cpp GGUF Model Format

- 1. Specification

- 1.1. GGUF Naming Convention

- 1.1.1. Validating Above Naming Convention

- 1.2. File Structure

- 2. Standardized key-value pairs

- 2.1. General

- 2.1.1. Required

- 2.1.2. General metadata

- 2.1.3. Source metadata

- 2.2. LLM

- 2.2.1. Attention

- 2.2.2. RoPE

- 2.2.2.1. Scaling

- 2.2.3. SSM

- 2.2.4. Models

- 2.2.4.1. LLaMA

- 2.2.4.1.1. Optional

- 2.2.4.2. MPT

- 2.2.4.3. GPT-NeoX

- 2.2.4.3.1. Optional

- 2.2.4.4. GPT-J

- 2.2.4.4.1. Optional

- 2.2.4.5. GPT-2

- 2.2.4.6. BLOOM

- 2.2.4.7. Falcon

- 2.2.4.7.1. Optional

- 2.2.4.8. Mamba

- 2.2.4.9. RWKV

- 2.2.4.10. Whisper

- 2.2.5. Prompting

- 2.3. LoRA

- 2.4. Tokenizer

- 2.4.1. GGML

- 2.4.1.1. Special tokens

- 2.4.2. Hugging Face

- 2.4.3. Other

- 2.5. Computation graph

- 3. Standardized tensor names

- 3.1. Base layers

- 3.2. Attention and feed-forward layer blocks

- 4. Version History

- 4.1. v3

- 4.2. v2

- 4.3. v1

- 5. Historical State of Affairs

- 5.1. Overview

- 5.2. Drawbacks

- 5.3. Why not other formats?

- References

ggml/docs/gguf.md

https://github.com/ggerganov/ggml/blob/master/docs/gguf.md

GGUF is a file format for storing models for inference with GGML and executors based on GGML. GGUF is a binary format that is designed for fast loading and saving of models, and for ease of reading. Models are traditionally developed using PyTorch or another framework, and then converted to GGUF for use in GGML.

It is a successor file format to GGML, GGMF and GGJT, and is designed to be unambiguous by containing all the information needed to load a model. It is also designed to be extensible, so that new information can be added to models without breaking compatibility.

For more information about the motivation behind GGUF, see Historical State of Affairs.

1. Specification

GGUF is a format based on the existing GGJT, but makes a few changes to the format to make it more extensible and easier to use.

The following features are desired:

- Single-file deployment: they can be easily distributed and loaded, and do not require any external files for additional information.
- Extensible: new features can be added to GGML-based executors/new information can be added to GGUF models without breaking compatibility with existing models.
- mmap compatibility: models can be loaded using mmap for fast loading and saving.
- Easy to use: models can be easily loaded and saved using a small amount of code, with no need for external libraries, regardless of the language used.
- Full information: all information needed to load a model is contained in the model file, and no additional information needs to be provided by the user.

The key difference between GGJT and GGUF is the use of a key-value structure for the hyperparameters (now referred to as metadata), rather than a list of untyped values. This allows for new metadata to be added without breaking compatibility with existing models, and to annotate the model with additional information that may be useful for inference or for identifying the model.

1.1. GGUF Naming Convention

GGUF files follow the naming convention <BaseName><SizeLabel><FineTune><Version><Encoding><Type><Shard>.gguf, where each component, if present, is delimited by a -. The goal is to let humans see the most important details of a model at a glance. Because existing gguf filenames are so diverse, the convention is not intended to be perfectly parsable in the field.

The components are:

- BaseName: A descriptive name for the model base type or architecture.
  - This can be derived from gguf metadata general.basename, with spaces replaced by dashes.
- SizeLabel: Parameter weight class (useful for leader boards), represented as <expertCount>x<count><scale-prefix>.
  - This can be derived from gguf metadata general.size_label if available, or calculated if missing.
  - A rounded decimal count is supported, with a single-letter scale prefix standing in for the floating-point exponent:
    - Q: Quadrillion parameters.
    - T: Trillion parameters.
    - B: Billion parameters.
    - M: Million parameters.
    - K: Thousand parameters.
  - Additional -<attributes><count><scale-prefix> can be appended as needed to indicate other attributes of interest.
- FineTune: A descriptive name for the model fine-tuning goal (e.g. Chat, Instruct, etc.).
  - This can be derived from gguf metadata general.finetune, with spaces replaced by dashes.
- Version: (Optional) Denotes the model version number, formatted as v<Major>.<Minor>.
  - If the model is missing a version number, assume v1.0 (First Public Release).
  - This can be derived from gguf metadata general.version.
- Encoding: Indicates the weights encoding scheme that was applied to the model. Content, type mixture and arrangement, however, are determined by user code and can vary depending on project needs.
- Type: Indicates the kind of gguf file and its intended purpose.
  - If missing, the file is by default a typical gguf tensor model file.
  - LoRA: GGUF file is a LoRA adapter.
  - vocab: GGUF file with only vocab data and metadata.
- Shard: (Optional) Indicates that the model has been split into multiple shards, formatted as <ShardNum>-of-<ShardTotal>.
  - ShardNum: Shard position in this model. Must be 5 digits padded by zeros.
    - Shard numbers always start from 00001 (e.g. the first shard is always 00001-of-XXXXX rather than 00000-of-XXXXX).
  - ShardTotal: Total number of shards in this model. Must be 5 digits padded by zeros.
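To illustrate how the components combine, here is a minimal Python sketch that assembles a filename from its parts. The helper names size_label and gguf_filename are hypothetical and not part of any GGUF tooling. Rounding the count to one decimal place is also an assumption, since the convention only says that a rounded decimal count is supported.

```python
SCALES = [(10**15, "Q"), (10**12, "T"), (10**9, "B"), (10**6, "M"), (10**3, "K")]


def size_label(param_count, expert_count=0):
    """Format a parameter count as <expertCount>x<count><scale-prefix>."""
    for factor, prefix in SCALES:
        if param_count >= factor:
            count = f"{param_count / factor:.1f}"
            break
    else:
        # Fewer than a thousand parameters: no scale prefix applies.
        count, prefix = f"{param_count:.1f}", ""
    # Drop a trailing ".0" so 7e9 becomes "7B" while 3.8e9 stays "3.8B".
    if count.endswith(".0"):
        count = count[:-2]
    label = f"{count}{prefix}"
    return f"{expert_count}x{label}" if expert_count > 0 else label


def gguf_filename(base_name, size, version="v1.0", fine_tune=None,
                  encoding=None, file_type=None, shard=None):
    """Join the components with '-' in the order given by the convention."""
    parts = [base_name.replace(" ", "-"), size]
    if fine_tune:
        parts.append(fine_tune.replace(" ", "-"))
    parts.append(version)
    parts += [part for part in (encoding, file_type) if part]
    if shard:
        shard_num, shard_total = shard  # shard numbers start at 1
        parts.append(f"{shard_num:05d}-of-{shard_total:05d}")
    return "-".join(parts) + ".gguf"


print(gguf_filename("Mixtral", size_label(7_000_000_000, 8), "v0.1", encoding="KQ2"))
print(gguf_filename("Grok", size_label(100_000_000_000), encoding="Q4_0", shard=(3, 9)))
```

The version defaults to v1.0 here, mirroring the rule that a missing version number is assumed to be the first public release.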

1.1.1. Validating Above Naming Convention

At a minimum, every model file should include BaseName, SizeLabel and Version so that it can be easily validated as following the GGUF Naming Convention. Omitting a component causes ambiguity: for example, if Version is omitted, Encoding is easily mistaken for FineTune.

To validate you can use this regular expression ^(?<BaseName>[A-Za-z0-9\s]*(?:(?:-(?:(?:[A-Za-z\s][A-Za-z0-9\s]*)|(?:[0-9\s]*)))*))-(?:(?<SizeLabel>(?:\d+x)?(?:\d+\.)?\d+[A-Za-z](?:-[A-Za-z]+(\d+\.)?\d+[A-Za-z]+)?)(?:-(?<FineTune>[A-Za-z0-9\s-]+))?)?-(?:(?<Version>v\d+(?:\.\d+)*))(?:-(?<Encoding>(?!LoRA|vocab)[\w_]+))?(?:-(?<Type>LoRA|vocab))?(?:-(?<Shard>\d{5}-of-\d{5}))?\.gguf$ which will check that you got the minimum BaseName, SizeLabel and Version present in the correct order.

For example:

- Mixtral-8x7B-v0.1-KQ2.gguf:
  - Model Name: Mixtral
  - Expert Count: 8
  - Parameter Count: 7B
  - Version Number: v0.1
  - Weight Encoding Scheme: KQ2
- Hermes-2-Pro-Llama-3-8B-F16.gguf:
  - Model Name: Hermes 2 Pro Llama 3
  - Expert Count: 0
  - Parameter Count: 8B
  - Version Number: v1.0 (assumed, because the filename omits it)
  - Weight Encoding Scheme: F16
  - Shard: N/A
- Grok-100B-v1.0-Q4_0-00003-of-00009.gguf:
  - Model Name: Grok
  - Expert Count: 0
  - Parameter Count: 100B
  - Version Number: v1.0
  - Weight Encoding Scheme: Q4_0
  - Shard: 3 out of 9 total shards

#!/usr/bin/env node

const ggufRegex = /^(?<BaseName>[A-Za-z0-9\s]*(?:(?:-(?:(?:[A-Za-z\s][A-Za-z0-9\s]*)|(?:[0-9\s]*)))*))-(?:(?<SizeLabel>(?:\d+x)?(?:\d+\.)?\d+[A-Za-z](?:-[A-Za-z]+(\d+\.)?\d+[A-Za-z]+)?)(?:-(?<FineTune>[A-Za-z0-9\s-]+))?)?-(?:(?<Version>v\d+(?:\.\d+)*))(?:-(?<Encoding>(?!LoRA|vocab)[\w_]+))?(?:-(?<Type>LoRA|vocab))?(?:-(?<Shard>\d{5}-of-\d{5}))?\.gguf$/;

function parseGGUFFilename(filename) {
  const match = ggufRegex.exec(filename);
  if (!match)
    return null;
  const {BaseName = null, SizeLabel = null, FineTune = null, Version = "v1.0", Encoding = null, Type = null, Shard = null} = match.groups;
  return {BaseName: BaseName, SizeLabel: SizeLabel, FineTune: FineTune, Version: Version, Encoding: Encoding, Type: Type, Shard: Shard};
}

const testCases = [
  {filename: 'Mixtral-8x7B-v0.1-KQ2.gguf', expected: { BaseName: 'Mixtral', SizeLabel: '8x7B', FineTune: null, Version: 'v0.1', Encoding: 'KQ2', Type: null, Shard: null}},
  {filename: 'Grok-100B-v1.0-Q4_0-00003-of-00009.gguf', expected: { BaseName: 'Grok', SizeLabel: '100B', FineTune: null, Version: 'v1.0', Encoding: 'Q4_0', Type: null, Shard: "00003-of-00009"}},
  {filename: 'Hermes-2-Pro-Llama-3-8B-v1.0-F16.gguf', expected: { BaseName: 'Hermes-2-Pro-Llama-3', SizeLabel: '8B', FineTune: null, Version: 'v1.0', Encoding: 'F16', Type: null, Shard: null}},
  {filename: 'Phi-3-mini-3.8B-ContextLength4k-instruct-v1.0.gguf', expected: { BaseName: 'Phi-3-mini', SizeLabel: '3.8B-ContextLength4k', FineTune: 'instruct', Version: 'v1.0', Encoding: null, Type: null, Shard: null}},
  {filename: 'not-a-known-arrangement.gguf', expected: null},
];

testCases.forEach(({ filename, expected }) => {
  const result = parseGGUFFilename(filename);
  const passed = JSON.stringify(result) === JSON.stringify(expected);
  console.log(`${filename}: ${passed ? "PASS" : "FAIL"}`);
  if (!passed) {
    console.log(result);
    console.log(expected);
  }
});

1.2. File Structure

Figure: GGUF v3 file structure (diagram by https://github.com/mishig25).

GGUF files are structured as follows. They use a global alignment specified in the general.alignment metadata field, referred to as ALIGNMENT below. Where required, the file is padded with 0x00 bytes to the next multiple of general.alignment.
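The padding rule can be sketched in a few lines of Python. The function align_offset mirrors the helper of the same name in the structure definition of this section. The name padding_after and the constant DEFAULT_ALIGNMENT are hypothetical, and the value 32 is the fallback the specification gives for files that do not write general.alignment.

```python
DEFAULT_ALIGNMENT = 32  # fallback when general.alignment is not written


def align_offset(offset, alignment=DEFAULT_ALIGNMENT):
    """Round offset up to the next multiple of alignment."""
    return offset + (alignment - (offset % alignment)) % alignment


def padding_after(position, alignment=DEFAULT_ALIGNMENT):
    """Number of 0x00 bytes needed to reach the next aligned position."""
    return align_offset(position, alignment) - position


for position in (0, 1, 32, 100):
    print(position, align_offset(position), padding_after(position))
```

An offset that is already aligned is left unchanged, which is why the outer modulo is needed.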

Fields, including arrays, are written sequentially without alignment unless otherwise specified.

Models are little-endian by default. They can also come in big-endian for use with big-endian computers; in this case, all values (including metadata values and tensors) will also be big-endian. At the time of writing, there is no way to determine if a model is big-endian; this may be rectified in future versions. If no additional information is provided, assume the model is little-endian.
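Since the format carries no endianness flag, a reader that wants to accept both byte orders has to guess. The following Python sketch shows one possible heuristic. It is an assumption of this example, not part of the specification: format versions are small integers, so a version field whose low 16 bits are empty when read as little-endian was most likely written big-endian. The function name guess_byte_order is hypothetical.

```python
import struct


def guess_byte_order(header_bytes):
    """Return '<' or '>' for the first 8 bytes of a GGUF file (heuristic)."""
    if header_bytes[:4] != b"GGUF":
        raise ValueError("missing GGUF magic")
    (version_le,) = struct.unpack("<I", header_bytes[4:8])
    # Version 3 written big-endian reads back as 0x03000000 in little-endian.
    return ">" if (version_le & 0xFFFF) == 0 else "<"


print(guess_byte_order(b"GGUF" + struct.pack("<I", 3)))
print(guess_byte_order(b"GGUF" + struct.pack(">I", 3)))
```

The returned character can be used directly as the byte-order prefix of later struct format strings.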

enum ggml_type: uint32_t {
    GGML_TYPE_F32 = 0,
    GGML_TYPE_F16 = 1,
    GGML_TYPE_Q4_0 = 2,
    GGML_TYPE_Q4_1 = 3,
    // GGML_TYPE_Q4_2 = 4, support has been removed
    // GGML_TYPE_Q4_3 = 5, support has been removed
    GGML_TYPE_Q5_0 = 6,
    GGML_TYPE_Q5_1 = 7,
    GGML_TYPE_Q8_0 = 8,
    GGML_TYPE_Q8_1 = 9,
    GGML_TYPE_Q2_K = 10,
    GGML_TYPE_Q3_K = 11,
    GGML_TYPE_Q4_K = 12,
    GGML_TYPE_Q5_K = 13,
    GGML_TYPE_Q6_K = 14,
    GGML_TYPE_Q8_K = 15,
    GGML_TYPE_IQ2_XXS = 16,
    GGML_TYPE_IQ2_XS = 17,
    GGML_TYPE_IQ3_XXS = 18,
    GGML_TYPE_IQ1_S = 19,
    GGML_TYPE_IQ4_NL = 20,
    GGML_TYPE_IQ3_S = 21,
    GGML_TYPE_IQ2_S = 22,
    GGML_TYPE_IQ4_XS = 23,
    GGML_TYPE_I8 = 24,
    GGML_TYPE_I16 = 25,
    GGML_TYPE_I32 = 26,
    GGML_TYPE_I64 = 27,
    GGML_TYPE_F64 = 28,
    GGML_TYPE_IQ1_M = 29,
    GGML_TYPE_COUNT,
};

enum gguf_metadata_value_type: uint32_t {
    // The value is an 8-bit unsigned integer.
    GGUF_METADATA_VALUE_TYPE_UINT8 = 0,
    // The value is an 8-bit signed integer.
    GGUF_METADATA_VALUE_TYPE_INT8 = 1,
    // The value is a 16-bit unsigned little-endian integer.
    GGUF_METADATA_VALUE_TYPE_UINT16 = 2,
    // The value is a 16-bit signed little-endian integer.
    GGUF_METADATA_VALUE_TYPE_INT16 = 3,
    // The value is a 32-bit unsigned little-endian integer.
    GGUF_METADATA_VALUE_TYPE_UINT32 = 4,
    // The value is a 32-bit signed little-endian integer.
    GGUF_METADATA_VALUE_TYPE_INT32 = 5,
    // The value is a 32-bit IEEE754 floating point number.
    GGUF_METADATA_VALUE_TYPE_FLOAT32 = 6,
    // The value is a boolean.
    // 1-byte value where 0 is false and 1 is true.
    // Anything else is invalid, and should be treated as either the model being invalid or the reader being buggy.
    GGUF_METADATA_VALUE_TYPE_BOOL = 7,
    // The value is a UTF-8 non-null-terminated string, with length prepended.
    GGUF_METADATA_VALUE_TYPE_STRING = 8,
    // The value is an array of other values, with the length and type prepended.
    //
    // Arrays can be nested, and the length of the array is the number of elements in the array, not the number of bytes.
    GGUF_METADATA_VALUE_TYPE_ARRAY = 9,
    // The value is a 64-bit unsigned little-endian integer.
    GGUF_METADATA_VALUE_TYPE_UINT64 = 10,
    // The value is a 64-bit signed little-endian integer.
    GGUF_METADATA_VALUE_TYPE_INT64 = 11,
    // The value is a 64-bit IEEE754 floating point number.
    GGUF_METADATA_VALUE_TYPE_FLOAT64 = 12,
};

// A string in GGUF.
struct gguf_string_t {
    // The length of the string, in bytes.
    uint64_t len;
    // The string as a UTF-8 non-null-terminated string.
    char string[len];
};

union gguf_metadata_value_t {
    uint8_t uint8;
    int8_t int8;
    uint16_t uint16;
    int16_t int16;
    uint32_t uint32;
    int32_t int32;
    float float32;
    uint64_t uint64;
    int64_t int64;
    double float64;
    bool bool_;
    gguf_string_t string;
    struct {
        // Any value type is valid, including arrays.
        gguf_metadata_value_type type;
        // Number of elements, not bytes
        uint64_t len;
        // The array of values.
        gguf_metadata_value_t array[len];
    } array;
};

struct gguf_metadata_kv_t {
    // The key of the metadata. It is a standard GGUF string, with the following caveats:
    // - It must be a valid ASCII string.
    // - It must be a hierarchical key, where each segment is `lower_snake_case` and separated by a `.`.
    // - It must be at most 2^16-1/65535 bytes long.
    // Any keys that do not follow these rules are invalid.
    gguf_string_t key;

    // The type of the value.
    // Must be one of the `gguf_metadata_value_type` values.
    gguf_metadata_value_type value_type;
    // The value.
    gguf_metadata_value_t value;
};

struct gguf_header_t {
    // Magic number to announce that this is a GGUF file.
    // Must be `GGUF` at the byte level: `0x47` `0x47` `0x55` `0x46`.
    // Your executor might read the magic as a little-endian integer, so it might
    // instead check for 0x46554747 and let the endianness cancel out.
    // Consider being *very* explicit about the byte order here.
    uint32_t magic;
    // The version of the format implemented.
    // Must be `3` for version described in this spec, which introduces big-endian support.
    //
    // This version should only be increased for structural changes to the format.
    // Changes that do not affect the structure of the file should instead update the metadata
    // to signify the change.
    uint32_t version;
    // The number of tensors in the file.
    // This is explicit, instead of being included in the metadata, to ensure it is always present
    // for loading the tensors.
    uint64_t tensor_count;
    // The number of metadata key-value pairs.
    uint64_t metadata_kv_count;
    // The metadata key-value pairs.
    gguf_metadata_kv_t metadata_kv[metadata_kv_count];
};

uint64_t align_offset(uint64_t offset) {
    return offset + (ALIGNMENT - (offset % ALIGNMENT)) % ALIGNMENT;
}

struct gguf_tensor_info_t {
    // The name of the tensor. It is a standard GGUF string, with the caveat that
    // it must be at most 64 bytes long.
    gguf_string_t name;
    // The number of dimensions in the tensor.
    // Currently at most 4, but this may change in the future.
    uint32_t n_dimensions;
    // The dimensions of the tensor.
    uint64_t dimensions[n_dimensions];
    // The type of the tensor.
    ggml_type type;
    // The offset of the tensor's data in this file in bytes.
    //
    // This offset is relative to `tensor_data`, not to the start
    // of the file, to make it easier for writers to write the file.
    // Readers should consider exposing this offset relative to the
    // file to make it easier to read the data.
    //
    // Must be a multiple of `ALIGNMENT`. That is, `align_offset(offset) == offset`.
    uint64_t offset;
};

struct gguf_file_t {
    // The header of the file.
    gguf_header_t header;

    // Tensor infos, which can be used to locate the tensor data.
    gguf_tensor_info_t tensor_infos[header.tensor_count];

    // Padding to the nearest multiple of `ALIGNMENT`.
    //
    // That is, if `sizeof(header) + sizeof(tensor_infos)` is not a multiple of `ALIGNMENT`,
    // this padding is added to make it so.
    //
    // This can be calculated as `align_offset(position) - position`, where `position` is
    // the position of the end of `tensor_infos` (i.e. `sizeof(header) + sizeof(tensor_infos)`).
    uint8_t _padding[];

    // Tensor data.
    //
    // This is arbitrary binary data corresponding to the weights of the model. This data should be close
    // or identical to the data in the original model file, but may be different due to quantization or
    // other optimizations for inference. Any such deviations should be recorded in the metadata or as
    // part of the architecture definition.
    //
    // Each tensor's data must be stored within this array, and located through its `tensor_infos` entry.
    // The offset of each tensor's data must be a multiple of `ALIGNMENT`, and the space between tensors
    // should be padded to `ALIGNMENT` bytes.
    uint8_t tensor_data[];
};
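To show how little code the header layout requires, here is a minimal Python sketch of a reader for the header and its metadata key-value pairs. It assumes a little-endian file and uses only the standard struct module. The function names (read_scalar, read_string, read_value, read_header) are hypothetical, and the sketch does not validate keys or reject boolean bytes other than 0 and 1.

```python
import struct

# struct codes for the scalar gguf_metadata_value_type values (little-endian).
SCALAR_FORMATS = {
    0: "<B", 1: "<b", 2: "<H", 3: "<h", 4: "<I", 5: "<i",
    6: "<f", 7: "<?", 10: "<Q", 11: "<q", 12: "<d",
}
TYPE_STRING, TYPE_ARRAY = 8, 9


def read_scalar(stream, fmt):
    (value,) = struct.unpack(fmt, stream.read(struct.calcsize(fmt)))
    return value


def read_string(stream):
    length = read_scalar(stream, "<Q")  # uint64 byte length, no terminator
    return stream.read(length).decode("utf-8")


def read_value(stream, value_type):
    if value_type == TYPE_STRING:
        return read_string(stream)
    if value_type == TYPE_ARRAY:
        element_type = read_scalar(stream, "<I")
        count = read_scalar(stream, "<Q")  # number of elements, not bytes
        return [read_value(stream, element_type) for _ in range(count)]
    return read_scalar(stream, SCALAR_FORMATS[value_type])


def read_header(stream):
    """Return (version, tensor_count, metadata dict) from a binary stream."""
    if stream.read(4) != b"GGUF":
        raise ValueError("not a GGUF file")
    version = read_scalar(stream, "<I")
    tensor_count = read_scalar(stream, "<Q")
    kv_count = read_scalar(stream, "<Q")
    metadata = {}
    for _ in range(kv_count):
        key = read_string(stream)
        value_type = read_scalar(stream, "<I")
        metadata[key] = read_value(stream, value_type)
    return version, tensor_count, metadata
```

A caller would open the model with open(path, "rb") and pass the file object to read_header. The tensor infos start at the stream position where this function stops.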

2. Standardized key-value pairs

The following key-value pairs are standardized. This list may grow in the future as more use cases are discovered. Where possible, names are shared with the original model definitions to make it easier to map between the two.

Not all of these are required, but they are all recommended. Keys that are required are bolded. For omitted pairs, the reader should assume that the value is unknown and either default or error as appropriate.

The community can develop their own key-value pairs to carry additional data. However, these should be namespaced with the relevant community name to avoid collisions. For example, the rustformers community might use rustformers. as a prefix for all of their keys.

If a particular community key is widely used, it may be promoted to a standardized key.
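A writer can check community keys before emitting them. The Python sketch below applies the key rules from the file structure section (ASCII, dot-separated lower_snake_case segments, at most 65535 bytes). Allowing digits inside a segment is an assumption of this sketch, and the names is_valid_key, namespaced and the key sampler_seed are hypothetical.

```python
import re

# One or more lower_snake_case segments separated by dots.
KEY_PATTERN = re.compile(r"[a-z0-9_]+(\.[a-z0-9_]+)*")


def is_valid_key(key):
    """Check the length limit and the hierarchical lower_snake_case shape."""
    return len(key.encode("utf-8")) <= 65535 and KEY_PATTERN.fullmatch(key) is not None


def namespaced(community, name):
    """Prefix a community-specific key with the community name."""
    key = f"{community}.{name}"
    if not is_valid_key(key):
        raise ValueError(f"invalid GGUF metadata key: {key!r}")
    return key


print(namespaced("rustformers", "sampler_seed"))
```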

By convention, most counts/lengths/etc are uint64 unless otherwise specified. This is to allow for larger models to be supported in the future. Some models may use uint32 for their values; it is recommended that readers support both.

2.1. General

2.1.1. Required

- general.architecture: string: describes what architecture this model implements. All lowercase ASCII, with only [a-z0-9]+ characters allowed. Known values include:
  - llama
  - mpt
  - gptneox
  - gptj
  - gpt2
  - bloom
  - falcon
  - mamba
  - rwkv
- general.quantization_version: uint32: The version of the quantization format. Not required if the model is not quantized (i.e. no tensors are quantized). If any tensors are quantized, this must be present. This is separate to the quantization scheme of the tensors itself; the quantization version may change without changing the scheme's name (e.g. the quantization scheme is Q5_K, and the quantization version is 4).
- general.alignment: uint32: the global alignment to use, as described above. This can vary to allow for different alignment schemes, but it must be a multiple of 8. Some writers may not write the alignment. If the alignment is not specified, assume it is 32.

2.1.2. General metadata

- general.name: string: The name of the model. This should be a human-readable name that can be used to identify the model. It should be unique within the community that the model is defined in.
- general.author: string: The author of the model.
- general.version: string: The version of the model.
- general.organization: string: The organization of the model.
- general.basename: string: The base model name / architecture of the model.
- general.finetune: string: What the base model has been optimized toward.
- general.description: string: Free-form description of the model, including anything that isn't covered by the other fields.
- general.quantized_by: string: The name of the individual who quantized the model.
- general.size_label: string: Size class of the model, such as number of weights and experts (useful for leader boards).
- general.license: string: License of the model, expressed as an SPDX license expression (e.g. "MIT OR Apache-2.0"). Do not include any other information, such as the license text or the URL to the license.
- general.license.name: string: Human-friendly license name.
- general.license.link: string: URL to the license.
- general.url: string: URL to the model's homepage. This can be a GitHub repo, a paper, etc.
- general.doi: string: Digital Object Identifier (DOI), see https://www.doi.org/
- general.uuid: string: Universally unique identifier.
- general.repo_url: string: URL to the model's repository, such as a GitHub repo or HuggingFace repo.
- general.tags: string[]: List of tags that can be used as search terms for a search engine or social media.
- general.languages: string[]: Which languages the model can speak, encoded as ISO 639 two-letter codes.
- general.datasets: string[]: Links or references to datasets that the model was trained on.
- general.file_type: uint32: An enumerated value describing the type of the majority of the tensors in the file. Optional; can be inferred from the tensor types.
  - ALL_F32 = 0
  - MOSTLY_F16 = 1
  - MOSTLY_Q4_0 = 2
  - MOSTLY_Q4_1 = 3
  - MOSTLY_Q4_1_SOME_F16 = 4
  - MOSTLY_Q4_2 = 5 (support removed)
  - MOSTLY_Q4_3 = 6 (support removed)
  - MOSTLY_Q8_0 = 7
  - MOSTLY_Q5_0 = 8
  - MOSTLY_Q5_1 = 9
  - MOSTLY_Q2_K = 10
  - MOSTLY_Q3_K_S = 11
  - MOSTLY_Q3_K_M = 12
  - MOSTLY_Q3_K_L = 13
  - MOSTLY_Q4_K_S = 14
  - MOSTLY_Q4_K_M = 15
  - MOSTLY_Q5_K_S = 16
  - MOSTLY_Q5_K_M = 17
  - MOSTLY_Q6_K = 18

2.1.3. Source metadata

Information about where this model came from. This is useful for tracking the provenance of the model, and for finding the original source if the model is modified. For a model that was converted from GGML, for example, these keys would point to the model that was converted from.

- general.source.url: string: URL to the source of the model's homepage. This can be a GitHub repo, a paper, etc.
- general.source.doi: string: Source Digital Object Identifier (DOI), see https://www.doi.org/
- general.source.uuid: string: Source universally unique identifier.
- general.source.repo_url: string: URL to the source of the model's repository, such as a GitHub repo or HuggingFace repo.
- general.base_model.count: uint32: Number of parent models.
- general.base_model.{id}.name: string: The name of the parent model.
- general.base_model.{id}.author: string: The author of the parent model.
- general.base_model.{id}.version: string: The version of the parent model.
- general.base_model.{id}.organization: string: The organization of the parent model.
- general.base_model.{id}.url: string: URL to the source of the parent model's homepage. This can be a GitHub repo, a paper, etc.
- general.base_model.{id}.doi: string: Parent Digital Object Identifier (DOI), see https://www.doi.org/
- general.base_model.{id}.uuid: string: Parent universally unique identifier.
- general.base_model.{id}.repo_url: string: URL to the source of the parent model's repository, such as a GitHub repo or HuggingFace repo.

2.2. LLM

In the following, [llm] is used to fill in for the name of a specific LLM architecture. For example, llama for LLaMA, mpt for MPT, etc. If mentioned in an architecture’s section, it is required for that architecture, but not all keys are required for all architectures. Consult the relevant section for more information.

- [llm].context_length: uint64: Also known as n_ctx. Length of the context (in tokens) that the model was trained on. For most architectures, this is the hard limit on the length of the input. Architectures, like RWKV, that are not reliant on transformer-style attention may be able to handle larger inputs, but this is not guaranteed.
- [llm].embedding_length: uint64: Also known as n_embd. Embedding layer size.
- [llm].block_count: uint64: The number of blocks of attention+feed-forward layers (i.e. the bulk of the LLM). Does not include the input or embedding layers.
- [llm].feed_forward_length: uint64: Also known as n_ff. The length of the feed-forward layer.
- [llm].use_parallel_residual: bool: Whether or not the parallel residual logic should be used.
- [llm].tensor_data_layout: string: When a model is converted to GGUF, tensors may be rearranged to improve performance. This key describes the layout of the tensor data. This is not required; if not present, it is assumed to be reference.
  - reference: tensors are laid out in the same order as the original model
  - further options can be found for each architecture in their respective sections
- [llm].expert_count: uint32: Number of experts in MoE models (optional for non-MoE arches).
- [llm].expert_used_count: uint32: Number of experts used during each token evaluation (optional for non-MoE arches).

2.2.1. Attention

- [llm].attention.head_count: uint64: Also known as n_head. Number of attention heads.
- [llm].attention.head_count_kv: uint64: The number of heads per group used in Grouped-Query-Attention. If not present or if present and equal to [llm].attention.head_count, the model does not use GQA.
- [llm].attention.max_alibi_bias: float32: The maximum bias to use for ALiBI.
- [llm].attention.clamp_kqv: float32: Value (C) to clamp the values of the Q, K, and V tensors between ([-C, C]).
- [llm].attention.layer_norm_epsilon: float32: Layer normalization epsilon.
- [llm].attention.layer_norm_rms_epsilon: float32: Layer RMS normalization epsilon.
- [llm].attention.key_length: uint32: The optional size of a key head, d_k. If not specified, it will be n_embd / n_head.
- [llm].attention.value_length: uint32: The optional size of a value head, d_v. If not specified, it will be n_embd / n_head.
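The defaulting rules for the attention keys can be sketched as follows. This is a minimal Python illustration that assumes the metadata has already been read into a plain dict. The function name attention_shape and the layout of the returned dict are hypothetical.

```python
def attention_shape(metadata, arch):
    """Derive head counts and head sizes, applying the documented defaults."""
    prefix = f"{arch}.attention"
    n_embd = metadata[f"{arch}.embedding_length"]
    n_head = metadata[f"{prefix}.head_count"]
    # A missing head_count_kv means the model does not use GQA.
    n_head_kv = metadata.get(f"{prefix}.head_count_kv", n_head)
    default_head_size = n_embd // n_head
    return {
        "n_head": n_head,
        "n_head_kv": n_head_kv,
        "uses_gqa": n_head_kv != n_head,
        "key_length": metadata.get(f"{prefix}.key_length", default_head_size),
        "value_length": metadata.get(f"{prefix}.value_length", default_head_size),
    }


example = {
    "llama.embedding_length": 4096,
    "llama.attention.head_count": 32,
    "llama.attention.head_count_kv": 8,
}
print(attention_shape(example, "llama"))
```

The example values are made up for illustration and do not describe a particular released model.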

2.2.2. RoPE

- [llm].rope.dimension_count: uint64: The number of rotary dimensions for RoPE.
- [llm].rope.freq_base: float32: The base frequency for RoPE.

2.2.2.1. Scaling

The following keys describe RoPE scaling parameters:

- [llm].rope.scaling.type: string: Can be none, linear, or yarn.
- [llm].rope.scaling.factor: float32: A scale factor for RoPE to adjust the context length.
- [llm].rope.scaling.original_context_length: uint32: The original context length of the base model.
- [llm].rope.scaling.finetuned: bool: True if model has been finetuned with RoPE scaling.

Note that older models may not have these keys, and may instead use the following key:

[llm].rope.scale_linear: float32: A linear scale factor for RoPE to adjust the context length.

It is recommended that models use the newer keys if possible, as they are more flexible and allow for more complex scaling schemes. Executors will need to support both indefinitely.
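An executor that supports both generations of keys might resolve them as in the Python sketch below. Two points are assumptions of this example rather than statements from the specification: the legacy scale_linear key is treated as linear scaling with that factor, and a missing factor defaults to 1.0. The function name rope_scaling is hypothetical.

```python
def rope_scaling(metadata, arch):
    """Resolve RoPE scaling settings, preferring the newer keys."""
    prefix = f"{arch}.rope"
    settings = {"type": "none", "factor": 1.0,
                "original_context_length": None, "finetuned": None}
    if f"{prefix}.scaling.type" in metadata:
        settings["type"] = metadata[f"{prefix}.scaling.type"]
        settings["factor"] = metadata.get(f"{prefix}.scaling.factor", 1.0)
        settings["original_context_length"] = metadata.get(
            f"{prefix}.scaling.original_context_length")
        settings["finetuned"] = metadata.get(f"{prefix}.scaling.finetuned")
    elif f"{prefix}.scale_linear" in metadata:
        # Assumption: the legacy key means linear scaling by this factor.
        settings["type"] = "linear"
        settings["factor"] = metadata[f"{prefix}.scale_linear"]
    return settings


print(rope_scaling({"llama.rope.scale_linear": 0.25}, "llama"))
```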

2.2.3. SSM

- [llm].ssm.conv_kernel: uint32: The size of the rolling/shift state.
- [llm].ssm.inner_size: uint32: The embedding size of the states.
- [llm].ssm.state_size: uint32: The size of the recurrent state.
- [llm].ssm.time_step_rank: uint32: The rank of time steps.

2.2.4. Models

The following sections describe the metadata for each model architecture. Each key specified _must_ be present.

2.2.4.1. LLaMA

- llama.context_length
- llama.embedding_length
- llama.block_count
- llama.feed_forward_length
- llama.rope.dimension_count
- llama.attention.head_count
- llama.attention.layer_norm_rms_epsilon
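Because every key listed for an architecture must be present, a loader can check for them before building the model. The Python sketch below does this for the LLaMA keys listed above. The table REQUIRED_KEYS and the function missing_keys are hypothetical names, and the table covers only this one architecture.

```python
REQUIRED_KEYS = {
    "llama": [
        "context_length",
        "embedding_length",
        "block_count",
        "feed_forward_length",
        "rope.dimension_count",
        "attention.head_count",
        "attention.layer_norm_rms_epsilon",
    ],
}


def missing_keys(metadata, arch):
    """Return the required keys for arch that are absent from metadata."""
    expected = (f"{arch}.{suffix}" for suffix in REQUIRED_KEYS[arch])
    return [key for key in expected if key not in metadata]


print(missing_keys({"llama.context_length": 4096}, "llama"))
```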

2.2.4.1.1. Optional

- llama.rope.scale
- llama.attention.head_count_kv
- llama.tensor_data_layout:
  - Meta AI original pth:

        from numpy.typing import NDArray

        def permute(weights: NDArray, n_head: int) -> NDArray:
            # Regroup the rows of each head as (pair index, half) instead of (half, pair index).
            return (weights.reshape(n_head, 2, weights.shape[0] // n_head // 2, *weights.shape[1:])
                        .swapaxes(1, 2)
                        .reshape(weights.shape))

- llama.expert_count
- llama.expert_used_count

2.2.4.2. MPT

- mpt.context_length
- mpt.embedding_length
- mpt.block_count
- mpt.attention.head_count
- mpt.attention.alibi_bias_max
- mpt.attention.clip_kqv
- mpt.attention.layer_norm_epsilon

2.2.4.3. GPT-NeoX

- gptneox.context_length
- gptneox.embedding_length
- gptneox.block_count
- gptneox.use_parallel_residual
- gptneox.rope.dimension_count
- gptneox.attention.head_count
- gptneox.attention.layer_norm_epsilon

2.2.4.3.1. Optional

gptneox.rope.scale

2.2.4.4. GPT-J

- gptj.context_length
- gptj.embedding_length
- gptj.block_count
- gptj.rope.dimension_count
- gptj.attention.head_count
- gptj.attention.layer_norm_epsilon

2.2.4.4.1. Optional

gptj.rope.scale

2.2.4.5. GPT-2

- gpt2.context_length
- gpt2.embedding_length
- gpt2.block_count
- gpt2.attention.head_count
- gpt2.attention.layer_norm_epsilon

2.2.4.6. BLOOM

- bloom.context_length
- bloom.embedding_length
- bloom.block_count
- bloom.feed_forward_length
- bloom.attention.head_count
- bloom.attention.layer_norm_epsilon

2.2.4.7. Falcon

- falcon.context_length
- falcon.embedding_length
- falcon.block_count
- falcon.attention.head_count
- falcon.attention.head_count_kv
- falcon.attention.use_norm
- falcon.attention.layer_norm_epsilon

2.2.4.7.1. Optional

- falcon.tensor_data_layout:
  - jploski (author of the original GGML implementation of Falcon):

        # The original query_key_value tensor contains n_head_kv "kv groups",
        # each consisting of n_head/n_head_kv query weights followed by one key
        # and one value weight (shared by all query heads in the kv group).
        # This layout makes it a big pain to work with in GGML.
        # So we rearrange them here, so that we have n_head query weights
        # followed by n_head_kv key weights followed by n_head_kv value weights,
        # in contiguous fashion.

        if "query_key_value" in src:
            qkv = model[src].view(
                n_head_kv, n_head // n_head_kv + 2, head_dim, head_dim * n_head)

            q = qkv[:, :-2 ].reshape(n_head * head_dim, head_dim * n_head)
            k = qkv[:, [-2]].reshape(n_head_kv * head_dim, head_dim * n_head)
            v = qkv[:, [-1]].reshape(n_head_kv * head_dim, head_dim * n_head)

            model[src] = torch.cat((q,k,v)).reshape_as(model[src])

2.2.4.8. Mamba

- mamba.context_length
- mamba.embedding_length
- mamba.block_count
- mamba.ssm.conv_kernel
- mamba.ssm.inner_size
- mamba.ssm.state_size
- mamba.ssm.time_step_rank
- mamba.attention.layer_norm_rms_epsilon

2.2.4.9. RWKV

The vocabulary size is the same as the number of rows in the head matrix.

- rwkv.architecture_version: uint32: The only allowed value currently is 4. Version 5 is expected to appear some time in the future.
- rwkv.context_length: uint64: Length of the context used during training or fine-tuning. RWKV is able to handle larger context than this limit, but the output quality may suffer.
- rwkv.block_count: uint64
- rwkv.embedding_length: uint64
- rwkv.feed_forward_length: uint64

2.2.4.10. Whisper

Keys that do not have types defined should be assumed to share definitions with llm. keys.

(For example, whisper.context_length is equivalent to llm.context_length.)

This is because they are both transformer models.

- whisper.encoder.context_length
- whisper.encoder.embedding_length
- whisper.encoder.block_count
- whisper.encoder.mels_count: uint64
- whisper.encoder.attention.head_count
- whisper.decoder.context_length
- whisper.decoder.embedding_length
- whisper.decoder.block_count
- whisper.decoder.attention.head_count

2.2.5. Prompting

TODO: Include prompt format, and/or metadata about how it should be used (instruction, conversation, autocomplete, etc).

2.3. LoRA

TODO: Figure out what metadata is needed for LoRA. Probably desired features:

- match an existing model exactly, so that it can’t be misapplied

- be marked as a LoRA so executors won’t try to run it by itself

Should this be an architecture, or should it share the details of the original model with additional fields to mark it as a LoRA?

2.4. Tokenizer

The following keys are used to describe the tokenizer of the model. It is recommended that model authors support as many of these as possible, as it will allow for better tokenization quality with supported executors.

2.4.1. GGML

GGML supports an embedded vocabulary that enables inference of the model, but implementations of tokenization using this vocabulary (i.e. llama.cpp’s tokenizer) may have lower accuracy than the original tokenizer used for the model. When a more accurate tokenizer is available and supported, it should be used instead.

It is not guaranteed to be standardized across models, and may change in the future. It is recommended that model authors use a more standardized tokenizer if possible.

tokenizer.ggml.model: string: The name of the tokenizer model.llama: Llama style SentencePiece (tokens and scores extracted from HFtokenizer.model)replit: Replit style SentencePiece (tokens and scores extracted from HFspiece.model)gpt2: GPT-2 / GPT-NeoX style BPE (tokens extracted from HFtokenizer.json)rwkv: RWKV tokenizer

tokenizer.ggml.tokens: array[string]: A list of tokens indexed by the token ID used by the model.

tokenizer.ggml.scores: array[float32]: If present, the score/probability of each token. If not present, all tokens are assumed to have equal probability. If present, it must have the same length and index as tokens.

tokenizer.ggml.token_type: array[int32]: The token type (1=normal, 2=unknown, 3=control, 4=user defined, 5=unused, 6=byte). If present, it must have the same length and index as tokens.

tokenizer.ggml.merges: array[string]: If present, the merges of the tokenizer. If not present, the tokens are assumed to be atomic.

tokenizer.ggml.added_tokens: array[string]: If present, tokens that were added after training.
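The length and index constraints above can be checked mechanically. The following is a minimal Python sketch, assuming the key-value pairs have already been decoded into a plain dict; the names `TokenType` and `check_ggml_vocab` are hypothetical and not part of GGML or llama.cpp.

```python
from enum import IntEnum


class TokenType(IntEnum):
    """Token types as listed for tokenizer.ggml.token_type."""
    NORMAL = 1
    UNKNOWN = 2
    CONTROL = 3
    USER_DEFINED = 4
    UNUSED = 5
    BYTE = 6


def check_ggml_vocab(metadata: dict) -> list:
    """Return a list of problems found in the tokenizer.ggml.* arrays.

    `metadata` is a hypothetical dict of already-decoded GGUF key-value pairs.
    """
    tokens = metadata.get("tokenizer.ggml.tokens")
    if tokens is None:
        return ["tokenizer.ggml.tokens is missing"]

    problems = []
    # Optional per-token arrays must line up with `tokens` index-for-index.
    for key in ("tokenizer.ggml.scores", "tokenizer.ggml.token_type"):
        values = metadata.get(key)
        if values is not None and len(values) != len(tokens):
            problems.append(
                f"{key} has {len(values)} entries, expected {len(tokens)}"
            )

    # Every token type must be one of the six documented values.
    valid_types = {int(t) for t in TokenType}
    for i, t in enumerate(metadata.get("tokenizer.ggml.token_type", [])):
        if t not in valid_types:
            problems.append(f"token {i} has unknown type {t}")
    return problems
```

An empty result means the arrays are consistent with each other; it says nothing about whether the vocabulary matches the original tokenizer.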

2.4.1.1. Special tokens

- tokenizer.ggml.bos_token_id: uint32: Beginning of sequence marker

- tokenizer.ggml.eos_token_id: uint32: End of sequence marker

- tokenizer.ggml.unknown_token_id: uint32: Unknown token

- tokenizer.ggml.separator_token_id: uint32: Separator token

- tokenizer.ggml.padding_token_id: uint32: Padding token
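Since each of these keys holds a token ID, a reader can resolve them against tokenizer.ggml.tokens. The sketch below is illustrative only: it assumes the metadata is available as a plain dict, treats every special-token key as optional, and uses the hypothetical helper name `resolve_special_tokens`.

```python
SPECIAL_KEYS = {
    "bos": "tokenizer.ggml.bos_token_id",
    "eos": "tokenizer.ggml.eos_token_id",
    "unknown": "tokenizer.ggml.unknown_token_id",
    "separator": "tokenizer.ggml.separator_token_id",
    "padding": "tokenizer.ggml.padding_token_id",
}


def resolve_special_tokens(metadata: dict) -> dict:
    """Map each special token that is present to (token_id, token_text)."""
    tokens = metadata["tokenizer.ggml.tokens"]
    resolved = {}
    for name, key in SPECIAL_KEYS.items():
        token_id = metadata.get(key)
        if token_id is None:
            continue  # this model does not define the key
        if not 0 <= token_id < len(tokens):
            raise ValueError(f"{key}={token_id} is outside the vocabulary")
        resolved[name] = (token_id, tokens[token_id])
    return resolved
```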

2.4.2. Hugging Face

Hugging Face maintains their own tokenizers library that supports a wide variety of tokenizers. If your executor uses this library, it may be able to use the model’s tokenizer directly.

tokenizer.huggingface.json: string: the entirety of the HF tokenizer.json for a given model (e.g. https://huggingface.co/mosaicml/mpt-7b-instruct/blob/main/tokenizer.json). Included for compatibility with executors that support HF tokenizers directly.
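Because the value is the whole tokenizer.json as one string, an executor has to parse it before use. A minimal sketch using only the Python standard library follows; it assumes the metadata is a plain dict and that the JSON has a top-level "model" object with "type" and "vocab" entries, as current tokenizer.json files generally do. `describe_hf_tokenizer` is a hypothetical name.

```python
import json


def describe_hf_tokenizer(metadata: dict):
    """Summarize the embedded HF tokenizer, or return None if it is absent."""
    raw = metadata.get("tokenizer.huggingface.json")
    if raw is None:
        return None
    config = json.loads(raw)  # the value is the full tokenizer.json text
    model = config.get("model", {})
    return {
        "type": model.get("type"),
        # "vocab" is a mapping for BPE/WordPiece and a list for Unigram;
        # len() works for both.
        "vocab_size": len(model.get("vocab", {})),
    }
```

An executor that depends on the Hugging Face tokenizers library would more likely hand the same string to that library instead of inspecting it by hand.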

2.4.3. Other

Other tokenizers may be used, but are not necessarily standardized. They may be executor-specific. They will be documented here as they are discovered/further developed.

- tokenizer.rwkv.world: string: a RWKV World tokenizer, like https://github.com/BlinkDL/ChatRWKV/blob/main/tokenizer/rwkv_vocab_v20230424.txt. This text file should be included verbatim.

- tokenizer.chat_template: string: a Jinja template that specifies the input format expected by the model. For more details see: https://huggingface.co/docs/transformers/main/en/chat_templating

2.5. Computation graph

This is a future extension and still needs to be discussed, and may necessitate a new GGUF version. At the time of writing, the primary blocker is the stabilization of the computation graph format.

A sample computation graph of GGML nodes could be included in the model itself, allowing an executor to run the model without providing its own implementation of the architecture. This would allow for a more consistent experience across executors, and would allow for more complex architectures to be supported without requiring the executor to implement them.

3. Standardized tensor names

To minimize complexity and maximize compatibility, it is recommended that models using the transformer architecture use the following naming convention for their tensors:

3.1. Base layers

AA.weight AA.bias

where AA can be:

- token_embd: Token embedding layer

- pos_embd: Position embedding layer

- output_norm: Output normalization layer

- output: Output layer

3.2. Attention and feed-forward layer blocks

blk.N.BB.weight blk.N.BB.bias

where N signifies the block number a layer belongs to, and where BB could be:

- attn_norm: Attention normalization layer

- attn_norm_2: Attention normalization layer

- attn_qkv: Attention query-key-value layer

- attn_q: Attention query layer

- attn_k: Attention key layer

- attn_v: Attention value layer

- attn_output: Attention output layer

- ffn_norm: Feed-forward network normalization layer

- ffn_up: Feed-forward network “up” layer

- ffn_gate: Feed-forward network “gate” layer

- ffn_down: Feed-forward network “down” layer

- ffn_gate_inp: Expert-routing layer for the feed-forward network in MoE models

- ffn_gate_exp: Feed-forward network “gate” layer per expert in MoE models

- ffn_down_exp: Feed-forward network “down” layer per expert in MoE models

- ffn_up_exp: Feed-forward network “up” layer per expert in MoE models

- ssm_in: State space model input projections layer

- ssm_conv1d: State space model rolling/shift layer

- ssm_x: State space model selective parametrization layer

- ssm_a: State space model state compression layer

- ssm_d: State space model skip connection layer

- ssm_dt: State space model time step layer

- ssm_out: State space model output projection layer
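The two naming patterns in this section (AA.weight / AA.bias and blk.N.BB.weight / blk.N.BB.bias) are regular enough to parse with a pair of regular expressions. The sketch below is one possible way to do it in Python; `parse_tensor_name` is a hypothetical helper, and the layer sets simply mirror the lists above, so real models may contain names it does not recognize.

```python
import re

BASE_LAYERS = {"token_embd", "pos_embd", "output_norm", "output"}
BLOCK_LAYERS = {
    "attn_norm", "attn_norm_2", "attn_qkv", "attn_q", "attn_k", "attn_v",
    "attn_output", "ffn_norm", "ffn_up", "ffn_gate", "ffn_down",
    "ffn_gate_inp", "ffn_gate_exp", "ffn_down_exp", "ffn_up_exp",
    "ssm_in", "ssm_conv1d", "ssm_x", "ssm_a", "ssm_d", "ssm_dt", "ssm_out",
}

_BLOCK_RE = re.compile(r"^blk\.(\d+)\.([a-z0-9_]+)\.(weight|bias)$")
_BASE_RE = re.compile(r"^([a-z_]+)\.(weight|bias)$")


def parse_tensor_name(name: str):
    """Return (block_index, layer, kind) for a standardized tensor name.

    block_index is None for base layers. The whole result is None when the
    name does not follow the convention described in this section.
    """
    m = _BLOCK_RE.match(name)
    if m and m.group(2) in BLOCK_LAYERS:
        return int(m.group(1)), m.group(2), m.group(3)
    m = _BASE_RE.match(name)
    if m and m.group(1) in BASE_LAYERS:
        return None, m.group(1), m.group(2)
    return None
```

For example, `parse_tensor_name("blk.3.attn_q.weight")` yields block 3, layer `attn_q`, kind `weight`, while an unlisted layer name yields `None` so that the caller can decide whether to warn or fail.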

4. Version History

This document is actively updated to describe the current state of the metadata, and these changes are not tracked outside of the commits.

However, the format itself has changed. The following sections describe the changes to the format itself.

4.1. v3

Adds big-endian support.

4.2. v2

Most countable values (lengths, etc) were changed from uint32 to uint64 to allow for larger models to be supported in the future.

4.3. v1

Initial version.
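A reader that wants to accept all three versions has to account for both changes: the wider counts in v2 and the possible big-endian byte order in v3. The following Python sketch shows one way this might look. It is not the reference loader: it assumes the header layout from the File Structure section (magic, version, tensor count, metadata key-value count), assumes that v1 stored both counts as uint32, and detects big-endian files with a heuristic that is an assumption rather than part of this specification. `read_header` is a hypothetical name.

```python
import struct

GGUF_MAGIC = b"GGUF"


def read_header(buf: bytes) -> dict:
    """Parse magic, version, tensor count and metadata KV count from `buf`."""
    if buf[:4] != GGUF_MAGIC:
        raise ValueError("not a GGUF file")

    order = "<"
    (version,) = struct.unpack_from("<I", buf, 4)
    # Heuristic (assumption): a big-endian version read as little-endian has
    # its low 16 bits set to zero, e.g. 3 is seen as 0x03000000.
    if version & 0xFFFF == 0:
        order = ">"
        (version,) = struct.unpack_from(">I", buf, 4)

    if version == 1:
        count_fmt = "II"  # assumed v1 layout: two uint32 counts
    elif version in (2, 3):
        count_fmt = "QQ"  # v2 and later: two uint64 counts
    else:
        raise ValueError(f"unsupported GGUF version {version}")
    if order == ">" and version < 3:
        raise ValueError("big-endian files require GGUF v3")

    tensor_count, kv_count = struct.unpack_from(order + count_fmt, buf, 8)
    return {
        "version": version,
        "byte_order": "big" if order == ">" else "little",
        "tensor_count": tensor_count,
        "metadata_kv_count": kv_count,
    }
```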

5. Historical State of Affairs

The following information is provided for context, but is not necessary to understand the rest of this document.

5.1. Overview

At present, there are three GGML file formats floating around for LLMs:

- GGML (unversioned): baseline format, with no versioning or alignment.

- GGMF (versioned): the same as GGML, but with versioning. Only one version exists.

- GGJT: Aligns the tensors to allow for use with mmap, which requires alignment. v1, v2 and v3 are identical in structure, but the later versions use a different quantization scheme that is incompatible with previous versions.

GGML is primarily used by the examples in ggml, while GGJT is used by llama.cpp models. Other executors may use any of the three formats, but this is not ‘officially’ supported.

These formats share the same fundamental structure:

- a magic number with an optional version number

- model-specific hyperparameters, including

  - metadata about the model, such as the number of layers, the number of heads, etc.

  - a ftype that describes the type of the majority of the tensors,

    - for GGML files, the quantization version is encoded in the ftype divided by 1000

- an embedded vocabulary, which is a list of strings with length prepended. The GGMF/GGJT formats embed a float32 score next to the strings.

- finally, a list of tensors with their length-prepended name, type, and (aligned, in the case of GGJT) tensor data

Notably, this structure does not identify what model architecture the model belongs to, nor does it offer any flexibility for changing the structure of the hyperparameters. This means that the only way to add new hyperparameters is to add them to the end of the list, which is a breaking change for existing models.
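The ftype packing mentioned in the list above is a small example of the kind of trick these formats relied on. A minimal Python sketch of the decoding step follows; the factor of 1000 comes from the text, while treating the remainder as the plain ftype is an assumption, and `split_ftype` is a hypothetical name.

```python
QNT_VERSION_FACTOR = 1000  # "the ftype divided by 1000"


def split_ftype(packed_ftype: int):
    """Split a legacy packed ftype into (quantization_version, ftype)."""
    # Integer division recovers the quantization version; the remainder is
    # assumed to be the original ftype value.
    quantization_version, ftype = divmod(packed_ftype, QNT_VERSION_FACTOR)
    return quantization_version, ftype
```

A reader that does not know about this convention would interpret a packed value such as 2007 as an unknown ftype, which is exactly the failure mode that explicit key-value metadata avoids.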

5.2. Drawbacks

Unfortunately, over the last few months, a few issues with the existing models have become apparent:

- There’s no way to identify which model architecture a given model is for, because that information isn’t present

  - Similarly, existing programs cannot intelligently fail upon encountering new architectures

- Adding or removing any new hyperparameters is a breaking change, which is impossible for a reader to detect without using heuristics

- Each model architecture requires its own conversion script to their architecture’s variant of GGML

- Maintaining backwards compatibility without breaking the structure of the format requires clever tricks, like packing the quantization version into the ftype, which are not guaranteed to be picked up by readers/writers, and are not consistent between the two formats

5.3. Why not other formats?

There are a few other formats that could be used, but issues include:

- requiring additional dependencies to load or save the model, which is complicated in a C environment

- limited or no support for 4-bit quantization

- existing cultural expectations (e.g. whether or not the model is a directory or a file)

- lack of support for embedded vocabularies

- lack of control over direction of future development

Ultimately, it is likely that GGUF will remain necessary for the foreseeable future, and it is better to have a single format that is well-documented and supported by all executors than to contort an existing format to fit the needs of GGML.

References

[1] Yongqiang Cheng, https://yongqiang.blog.csdn.net/

[2] ggml/docs/gguf.md, https://github.com/ggerganov/ggml/blob/master/docs/gguf.md

相关文章:

llama.cpp GGUF 模型格式

llama.cpp GGUF 模型格式 1. Specification1.1. GGUF Naming Convention (命名规则)1.1.1. Validating Above Naming Convention 1.2. File Structure 2. Standardized key-value pairs2.1. General2.1.1. Required2.1.2. General metadata2.1.3. Source metadata 2.2. LLM2.2.…...

嵌入式硬件篇---HAL库内外部时钟主频锁相环分频器

文章目录 前言第一部分:STM32-HAL库HAL库编程优势1.抽象层2.易于上手3.代码可读性4.跨平台性5.维护和升级6.中间件支持 劣势1.性能2.灵活性3.代码大小4.复杂性 直接寄存器操作编程优势1.性能2.灵活性3.代码大小4.学习深度 劣势1.复杂性2.可读性3.可维护性4.跨平台性…...

【IoCDI】_@Bean的参数传递

目录 1. 不创建参数类型的Bean 2. 创建一个与参数同类型同名的Bean 3. 创建多个与参数同类型,其中一个与参数同名的Bean 4. 创建一个与参数同类型不同名的Bean 5. 创建多个与参数同类型但不同名的Bean 对于Bean修饰的方法,也可能需要从外部传参&…...

[特殊字符] ChatGPT-4与4o大比拼

🔍 ChatGPT-4与ChatGPT-4o之间有何不同?让我们一探究竟! 🚀 性能与速度方面,GPT-4-turbo以其优化设计,提供了更快的响应速度和处理性能,非常适合需要即时反馈的应用场景。相比之下,G…...

【模型】Bi-LSTM模型详解

1. 模型架构与计算过程 Bi-LSTM 由两个LSTM层组成,一个是正向LSTM(从前到后处理序列),另一个是反向LSTM(从后到前处理序列)。每个LSTM单元都可以通过门控机制对序列的长期依赖进行建模。 1. 遗忘门 遗忘…...

directx12 3d开发过程中出现的报错 一

报错:“&”要求左值 “& 要求左值” 这个错误通常是因为你在尝试获取一个临时对象或者右值的地址,而 & 运算符只能用于左值(即可以放在赋值语句左边的表达式,代表一个可以被引用的内存位置)。 可能出现错…...

Ubuntu 24.04 安装 Poetry:Python 依赖管理的终极指南

Ubuntu 24.04 安装 Poetry:Python 依赖管理的终极指南 1. 更新系统包列表2. 安装 Poetry方法 1:使用官方安装脚本方法 2:使用 Pipx 安装 3. 配置环境变量4. 验证安装5. 配置 Poetry(可选)设置虚拟环境位置配置镜像源 6…...

读写锁: ReentrantReadWriteLock

在多线程编程场景中,对共享资源的访问控制极为关键。传统的锁机制在同一时刻只允许一个线程访问共享资源,这在读写操作频繁的场景下,会因为读操作相互不影响数据一致性,而造成不必要的性能损耗。ReentrantReadWriteLock࿰…...

上海路网道路 水系铁路绿色住宅地工业用地面图层shp格式arcgis无偏移坐标2023年

标题和描述中提到的资源是关于2023年上海市地理信息数据的集合,主要包含道路、水系、铁路、绿色住宅区以及工业用地的图层数据,这些数据以Shapefile(shp)格式存储,并且是适用于ArcGIS软件的无偏移坐标系统。这个压缩包…...

爬虫学习笔记之Robots协议相关整理

定义 Robots协议也称作爬虫协议、机器人协议,全名为网络爬虫排除标准,用来告诉爬虫和搜索引擎哪些页面可以爬取、哪些不可以。它通常是一个叫做robots.txt的文本文件,一般放在网站的根目录下。 robots.txt文件的样例 对有所爬虫均生效&#…...

Python小游戏29乒乓球

import pygame import sys # 初始化pygame pygame.init() # 屏幕大小 screen_width 800 screen_height 600 screen pygame.display.set_mode((screen_width, screen_height)) pygame.display.set_caption("打乒乓球") # 颜色定义 WHITE (255, 255, 255) BLACK (…...

220.存在重复元素③

目录 一、题目二、思路三、解法四、收获 一、题目 给你一个整数数组 nums 和两个整数 indexDiff 和 valueDiff 。 找出满足下述条件的下标对 (i, j): i ! j, abs(i - j) < indexDiff abs(nums[i] - nums[j]) < valueDiff 如果存在,返回 true &a…...

使用 Go 语言调用 DeepSeek API:完整指南

引言 DeepSeek 是一个强大的 AI 模型服务平台,本文将详细介绍如何使用 Go 语言调用 DeepSeek API,实现流式输出和对话功能。 Deepseek的api因为被功击已不能用,本文以 DeepSeek:https://cloud.siliconflow.cn/i/vnCCfVaQ 为例子进…...

AJAX笔记原理篇

黑马程序员视频地址: AJAX-Day03-01.XMLHttpRequest_基本使用https://www.bilibili.com/video/BV1MN411y7pw?vd_source0a2d366696f87e241adc64419bf12cab&spm_id_from333.788.videopod.episodes&p33https://www.bilibili.com/video/BV1MN411y7pw?vd_sour…...

ubuntu直接运行arm环境qemu-arm-static

qemu-arm-static 嵌入式开发有时会在ARM设备上使用ubuntu文件系统。开发者常常会面临这样一个问题,想预先交叉编译并安装一些应用程序,但是交叉编译的环境配置以及依赖包的安装十分繁琐,并且容易出错。想直接在目标板上进行编译和安装&#x…...

尝试把clang-tidy集成到AWTK项目

前言 项目经过一段时间的耕耘终于进入了团队开发阶段,期间出现了很多问题,其中一个就是开会讨论团队的代码风格规范,目前项目代码风格比较混乱,有的模块是驼峰,有的模块是匈牙利,后面经过讨论,…...

一文了解性能优化的方法

背景 在应用上线后,用户感知较明显的,除了功能满足需求之外,再者就是程序的性能了。因此,在日常开发中,我们除了满足基本的功能之外,还应该考虑性能因素。关注并可以优化程序性能,也是体现开发能…...

【怎么用系列】短视频戒断——对推荐算法进行干扰

如今推荐算法已经渗透到人们生活的方方面面,尤其是抖音等短视频核心就是推荐算法。 【短视频的危害】 1> 会让人变笨,慢慢让人丧失注意力与专注力 2> 让人丧失阅读长文的能力 3> 让人沉浸在一个又一个快感与嗨点当中。当我们刷短视频时&#x…...

)

C#中的委托(Delegate)

什么是委托? 首先,我们要知道C#是一种强类型的编程语言,强类型的编程语言的特性,是所有的东西都是特定的类型 委托是一种存储函数的引用类型,就像我们定义的一个 string str 一样,这个 str 变量就是 string 类型. 因为C#中没有函数类型,但是可以定义一个委托类型,把这个函数…...

PostCss

什么是 PostCss 如果把 CSS 单独拎出来看,光是样式本身,就有很多事情要处理。 既然有这么多事情要处理,何不把这些事情集中到一起统一处理呢? PostCss 就是基于这样的理念出现的。 PostCss 类似于一个编译器,可以将…...

Docker 离线安装指南

参考文章 1、确认操作系统类型及内核版本 Docker依赖于Linux内核的一些特性,不同版本的Docker对内核版本有不同要求。例如,Docker 17.06及之后的版本通常需要Linux内核3.10及以上版本,Docker17.09及更高版本对应Linux内核4.9.x及更高版本。…...

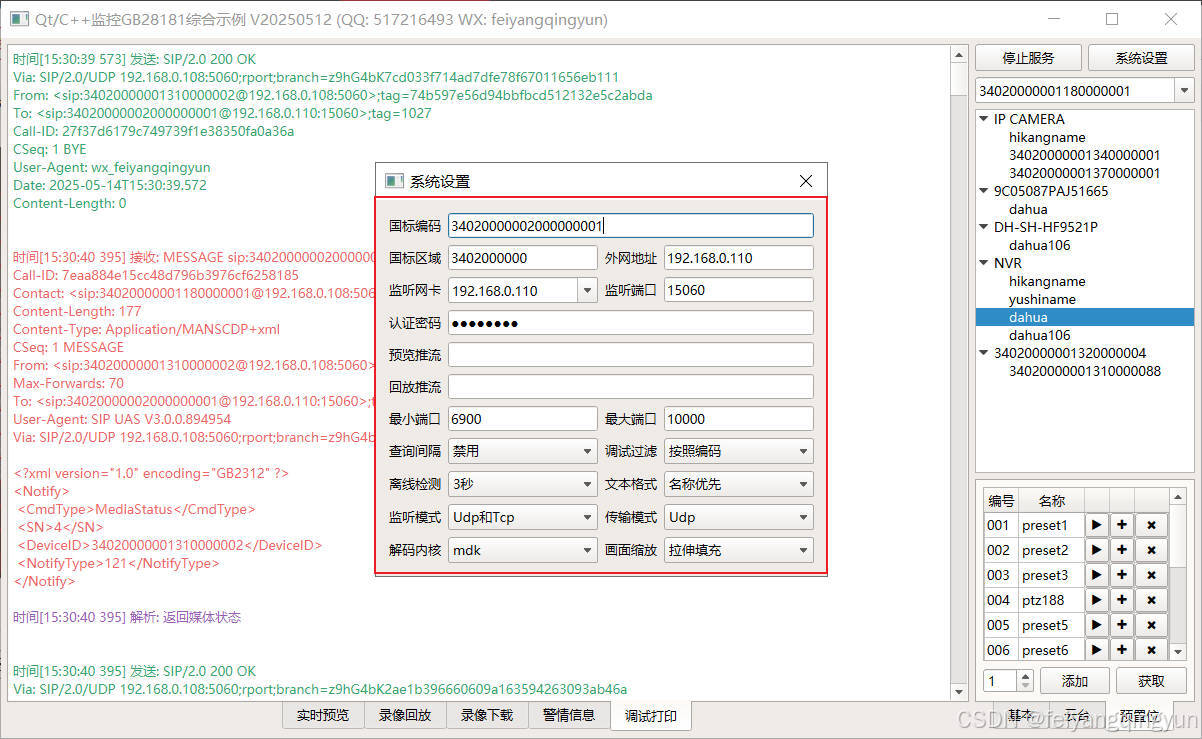

Qt/C++开发监控GB28181系统/取流协议/同时支持udp/tcp被动/tcp主动

一、前言说明 在2011版本的gb28181协议中,拉取视频流只要求udp方式,从2016开始要求新增支持tcp被动和tcp主动两种方式,udp理论上会丢包的,所以实际使用过程可能会出现画面花屏的情况,而tcp肯定不丢包,起码…...

)

React Native 开发环境搭建(全平台详解)

React Native 开发环境搭建(全平台详解) 在开始使用 React Native 开发移动应用之前,正确设置开发环境是至关重要的一步。本文将为你提供一份全面的指南,涵盖 macOS 和 Windows 平台的配置步骤,如何在 Android 和 iOS…...

从WWDC看苹果产品发展的规律

WWDC 是苹果公司一年一度面向全球开发者的盛会,其主题演讲展现了苹果在产品设计、技术路线、用户体验和生态系统构建上的核心理念与演进脉络。我们借助 ChatGPT Deep Research 工具,对过去十年 WWDC 主题演讲内容进行了系统化分析,形成了这份…...

江苏艾立泰跨国资源接力:废料变黄金的绿色供应链革命

在华东塑料包装行业面临限塑令深度调整的背景下,江苏艾立泰以一场跨国资源接力的创新实践,重新定义了绿色供应链的边界。 跨国回收网络:废料变黄金的全球棋局 艾立泰在欧洲、东南亚建立再生塑料回收点,将海外废弃包装箱通过标准…...

多种风格导航菜单 HTML 实现(附源码)

下面我将为您展示 6 种不同风格的导航菜单实现,每种都包含完整 HTML、CSS 和 JavaScript 代码。 1. 简约水平导航栏 <!DOCTYPE html> <html lang"zh-CN"> <head><meta charset"UTF-8"><meta name"viewport&qu…...

爬虫基础学习day2

# 爬虫设计领域 工商:企查查、天眼查短视频:抖音、快手、西瓜 ---> 飞瓜电商:京东、淘宝、聚美优品、亚马逊 ---> 分析店铺经营决策标题、排名航空:抓取所有航空公司价格 ---> 去哪儿自媒体:采集自媒体数据进…...

OPenCV CUDA模块图像处理-----对图像执行 均值漂移滤波(Mean Shift Filtering)函数meanShiftFiltering()

操作系统:ubuntu22.04 OpenCV版本:OpenCV4.9 IDE:Visual Studio Code 编程语言:C11 算法描述 在 GPU 上对图像执行 均值漂移滤波(Mean Shift Filtering),用于图像分割或平滑处理。 该函数将输入图像中的…...

Mac下Android Studio扫描根目录卡死问题记录

环境信息 操作系统: macOS 15.5 (Apple M2芯片)Android Studio版本: Meerkat Feature Drop | 2024.3.2 Patch 1 (Build #AI-243.26053.27.2432.13536105, 2025年5月22日构建) 问题现象 在项目开发过程中,提示一个依赖外部头文件的cpp源文件需要同步,点…...

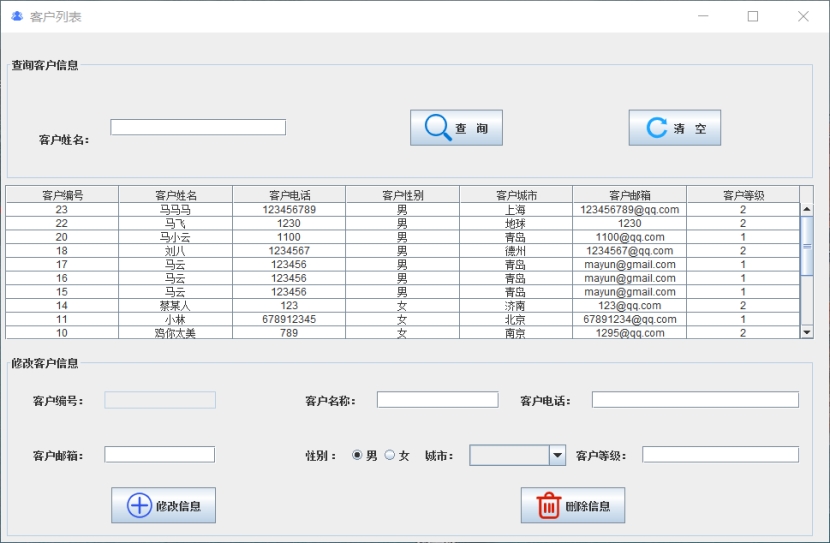

基于Java+MySQL实现(GUI)客户管理系统

客户资料管理系统的设计与实现 第一章 需求分析 1.1 需求总体介绍 本项目为了方便维护客户信息为了方便维护客户信息,对客户进行统一管理,可以把所有客户信息录入系统,进行维护和统计功能。可通过文件的方式保存相关录入数据,对…...