kaggle视频行为分析1st and Future - Player Contact Detection

这次比赛的目标是检测美式橄榄球NFL比赛中球员经历的外部接触。您将使用视频和球员追踪数据来识别发生接触的时刻,以帮助提高球员的安全。两种接触,一种是人与人的,另一种是人与地面,不包括脚底和地面的,跟我之前做的这个是同一个主办方举行的

kaggle视频追踪NFL Health & Safety - Helmet Assignment-CSDN博客

之前做的是视频追踪,用的deepsort,这一场比赛用的2.5DCNN。

EDA部分

eda可以参考这一个notebook,用的fasteda,挺方便的

NFL Player Contact Detection EDA 🏈 | Kaggle

视频数据在test和train文件夹里面,还提供了这一个train_baseline_helmets.csv,是由上一次比赛的冠军方案产生的,是我之前做的视频追踪,train_player_tracking.csv 的频率是10HZ,视频是59.94HZ,之后要进行转换,snap 事件也就是比赛开始发生在视频的第五秒

train_labels.csv

step: A number representing each each timestep for each play, starting at 0 at the moment of the play starting, and incrementing by 1 every 0.1 seconds.- 之前说的比赛第5秒开始,一个step是0.1秒

接触发生以10HZ记录

[train/test]_player_tracking.csv

datetime: timestamp at 10 Hz.

[train/test]_video_metadata.csv

be used to sync with player tracking data.和视频是同步的

训练部分

我自己租卡跑,20多个小时,10个epoch,我上传到kaggle,链接如下

track_weight | Kaggle

额外要用的一个数据集如下,我用的的4090显卡20核跑的,你要自己训练的话要自己修改一下

timm-0.6.9 | Kaggle

导入包

import os

import sys

import glob

import numpy as np

import pandas as pd

import random

import math

import gc

import cv2

from tqdm import tqdm

import time

from functools import lru_cache

import torch

from torch import nn

from torch.nn import functional as F

from torch.utils.data import Dataset, DataLoader

from torch.cuda.amp import autocast, GradScaler

import timm

import albumentations as A

from albumentations.pytorch import ToTensorV2

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from timm.scheduler import CosineLRScheduler

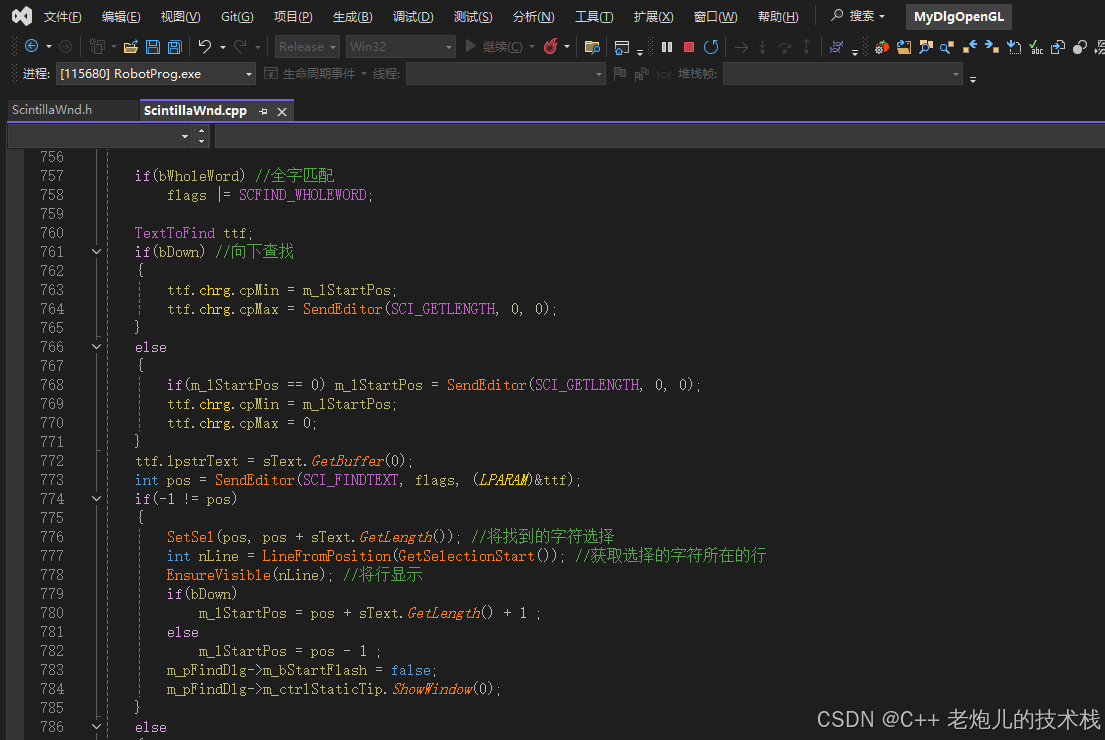

sys.path.append('../input/timm-0-6-9/pytorch-image-models-master')配置

CFG = {'seed': 42,'model': 'convnext_small.fb_in1k','img_size': 256,'epochs': 10,'train_bs': 48, 'valid_bs': 32,'lr': 1e-3, 'weight_decay': 1e-6,'num_workers': 20,'max_grad_norm' : 1000,'epochs_warmup' : 3.0

}

我用的convnext,这个网络是原本的cnn根据vit模型去反复修改的,有兴趣自己去找论文看,但论文也就是在那反复调

设置种子和device

def seed_everything(seed):random.seed(seed)os.environ['PYTHONHASHSEED'] = str(seed)np.random.seed(seed)torch.manual_seed(seed)torch.cuda.manual_seed(seed)torch.cuda.manual_seed_all(seed)torch.backends.cudnn.deterministic = Truetorch.backends.cudnn.benchmark = Falseseed_everything(CFG['seed'])

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')添加一些额外的列和读取数据

def expand_contact_id(df):"""Splits out contact_id into seperate columns."""df["game_play"] = df["contact_id"].str[:12]df["step"] = df["contact_id"].str.split("_").str[-3].astype("int")df["nfl_player_id_1"] = df["contact_id"].str.split("_").str[-2]df["nfl_player_id_2"] = df["contact_id"].str.split("_").str[-1]return df

labels = expand_contact_id(pd.read_csv("../input/nfl-player-contact-detection/train_labels.csv"))

train_tracking = pd.read_csv("../input/nfl-player-contact-detection/train_player_tracking.csv")

train_helmets = pd.read_csv("../input/nfl-player-contact-detection/train_baseline_helmets.csv")

train_video_metadata = pd.read_csv("../input/nfl-player-contact-detection/train_video_metadata.csv")将视频数据转化为图像数据

import subprocess

from tqdm import tqdm# 假设 train_helmets 是一个包含视频文件名的 DataFrame

for video in tqdm(train_helmets.video.unique()):if 'Endzone2' not in video:# 输入视频路径input_path = f'/openbayes/home/train/{video}'# 输出帧路径output_path = f'/openbayes/train/frames/{video}_%04d.jpg'# 构建 ffmpeg 命令command = ['ffmpeg','-i', input_path, # 输入视频文件'-q:v', '5', # 设置输出图像质量'-f', 'image2', # 输出为图像序列output_path, # 输出图像路径'-hide_banner', # 隐藏 ffmpeg 的 banner 信息'-loglevel', 'error' # 只显示错误日志]# 执行命令subprocess.run(command, check=True)可以自己修改那里的质量,在kaggle上不能训练,要你自己租卡才跑的动

创建一些特征

def create_features(df, tr_tracking, merge_col="step", use_cols=["x_position", "y_position"]):output_cols = []df_combo = (df.astype({"nfl_player_id_1": "str"}).merge(tr_tracking.astype({"nfl_player_id": "str"})[["game_play", merge_col, "nfl_player_id",] + use_cols],left_on=["game_play", merge_col, "nfl_player_id_1"],right_on=["game_play", merge_col, "nfl_player_id"],how="left",).rename(columns={c: c+"_1" for c in use_cols}).drop("nfl_player_id", axis=1).merge(tr_tracking.astype({"nfl_player_id": "str"})[["game_play", merge_col, "nfl_player_id"] + use_cols],left_on=["game_play", merge_col, "nfl_player_id_2"],right_on=["game_play", merge_col, "nfl_player_id"],how="left",).drop("nfl_player_id", axis=1).rename(columns={c: c+"_2" for c in use_cols}).sort_values(["game_play", merge_col, "nfl_player_id_1", "nfl_player_id_2"]).reset_index(drop=True))output_cols += [c+"_1" for c in use_cols]output_cols += [c+"_2" for c in use_cols]if ("x_position" in use_cols) & ("y_position" in use_cols):index = df_combo['x_position_2'].notnull()distance_arr = np.full(len(index), np.nan)tmp_distance_arr = np.sqrt(np.square(df_combo.loc[index, "x_position_1"] - df_combo.loc[index, "x_position_2"])+ np.square(df_combo.loc[index, "y_position_1"]- df_combo.loc[index, "y_position_2"]))distance_arr[index] = tmp_distance_arrdf_combo['distance'] = distance_arroutput_cols += ["distance"]df_combo['G_flug'] = (df_combo['nfl_player_id_2']=="G")output_cols += ["G_flug"]return df_combo, output_colsuse_cols = ['x_position', 'y_position', 'speed', 'distance','direction', 'orientation', 'acceleration', 'sa'

]train, feature_cols = create_features(labels, train_tracking, use_cols=use_cols)label和train_tracking进行合并,这里的feature_cols后面训练要用到

和视频的频率进行同步,过滤一部分数据

train_filtered = train.query('not distance>2').reset_index(drop=True)

train_filtered['frame'] = (train_filtered['step']/10*59.94+5*59.94).astype('int')+1

train_filtered.head()视频频率是59.94,而数据集是10,这里将距离过大的pair去除

数据增强

train_aug = A.Compose([A.HorizontalFlip(p=0.5),A.ShiftScaleRotate(p=0.5),A.RandomBrightnessContrast(brightness_limit=(-0.1, 0.1), contrast_limit=(-0.1, 0.1), p=0.5),A.Normalize(mean=[0.], std=[1.]),ToTensorV2()

])valid_aug = A.Compose([A.Normalize(mean=[0.], std=[1.]),ToTensorV2()

])创建字典

video2helmets = {}

train_helmets_new = train_helmets.set_index('video')

for video in tqdm(train_helmets.video.unique()):video2helmets[video] = train_helmets_new.loc[video].reset_index(drop=True)

video2frames = {}for game_play in tqdm(train_video_metadata.game_play.unique()):for view in ['Endzone', 'Sideline']:video = game_play + f'_{view}.mp4'video2frames[video] = max(list(map(lambda x:int(x.split('_')[-1].split('.')[0]), \glob.glob(f'../train/frames/{video}*'))))取出视频对应的检测数据和每个视频的最大帧数,检测数据后面用来截取图像用的,最大帧数确保抽取的帧不超过这个范围

数据集

class MyDataset(Dataset):def __init__(self, df, aug=train_aug, mode='train'):self.df = dfself.frame = df.frame.valuesself.feature = df[feature_cols].fillna(-1).valuesself.players = df[['nfl_player_id_1','nfl_player_id_2']].valuesself.game_play = df.game_play.valuesself.aug = augself.mode = modedef __len__(self):return len(self.df)# @lru_cache(1024)# def read_img(self, path):# return cv2.imread(path, 0)def __getitem__(self, idx): window = 24frame = self.frame[idx]if self.mode == 'train':frame = frame + random.randint(-6, 6)players = []for p in self.players[idx]:if p == 'G':players.append(p)else:players.append(int(p))imgs = []for view in ['Endzone', 'Sideline']:video = self.game_play[idx] + f'_{view}.mp4'tmp = video2helmets[video]

# tmp = tmp.query('@frame-@window<=frame<=@frame+@window')tmp[tmp['frame'].between(frame-window, frame+window)]tmp = tmp[tmp.nfl_player_id.isin(players)]#.sort_values(['nfl_player_id', 'frame'])tmp_frames = tmp.frame.valuestmp = tmp.groupby('frame')[['left','width','top','height']].mean()

#0.002sbboxes = []for f in range(frame-window, frame+window+1, 1):if f in tmp_frames:x, w, y, h = tmp.loc[f][['left','width','top','height']]bboxes.append([x, w, y, h])else:bboxes.append([np.nan, np.nan, np.nan, np.nan])bboxes = pd.DataFrame(bboxes).interpolate(limit_direction='both').valuesbboxes = bboxes[::4]if bboxes.sum() > 0:flag = 1else:flag = 0

#0.03sfor i, f in enumerate(range(frame-window, frame+window+1, 4)):img_new = np.zeros((256, 256), dtype=np.float32)if flag == 1 and f <= video2frames[video]:img = cv2.imread(f'../train/frames/{video}_{f:04d}.jpg', 0)x, w, y, h = bboxes[i]img = img[int(y+h/2)-128:int(y+h/2)+128,int(x+w/2)-128:int(x+w/2)+128].copy()img_new[:img.shape[0], :img.shape[1]] = imgimgs.append(img_new)

#0.06sfeature = np.float32(self.feature[idx])img = np.array(imgs).transpose(1, 2, 0) img = self.aug(image=img)["image"]label = np.float32(self.df.contact.values[idx])return img, feature, label模型

class Model(nn.Module):def __init__(self):super(Model, self).__init__()self.backbone = timm.create_model(CFG['model'], pretrained=True, num_classes=500, in_chans=13)self.mlp = nn.Sequential(nn.Linear(18, 64),nn.LayerNorm(64),nn.ReLU(),nn.Dropout(0.2),)self.fc = nn.Linear(64+500*2, 1)def forward(self, img, feature):b, c, h, w = img.shapeimg = img.reshape(b*2, c//2, h, w)img = self.backbone(img).reshape(b, -1)feature = self.mlp(feature)y = self.fc(torch.cat([img, feature], dim=1))return y这里len(feature_cols)是18,所以mlp输入是18,在上面

for i, f in enumerate(range(frame-window, frame+window+1, 4)):img_new = np.zeros((256, 256), dtype=np.float32)if flag == 1 and f <= video2frames[video]:img = cv2.imread(f'/openbayes/train/frames/{video}_{f:04d}.jpg', 0)x, w, y, h = bboxes[i]img = img[int(y+h/2)-128:int(y+h/2)+128,int(x+w/2)-128:int(x+w/2)+128].copy()img_new[:img.shape[0], :img.shape[1]] = imgimgs.append(img_new)进行了抽帧,每个视角抽了13帧,两个视角,总计26帧,所以输入通道26,跟之前的比赛一样,也是提供两个视角

for view in ['Endzone', 'Sideline']:

损失函数

model = Model()

model.to(device)

model.train()

import torch.nn as nn

criterion = nn.BCEWithLogitsLoss()这里用的交叉熵

评估指标

def evaluate(model, loader_val, *, compute_score=True, pbar=None):"""Predict and compute loss and score"""tb = time.time()in_training = model.trainingmodel.eval()loss_sum = 0.0n_sum = 0y_all = []y_pred_all = []if pbar is not None:pbar = tqdm(desc='Predict', nrows=78, total=pbar)total= len(loader_val)for ibatch,(img, feature, label) in tqdm(enumerate(loader_val),total = total):# img, feature, label = [x.to(device) for x in batch]img = img.to(device)feature = feature.to(device)n = label.size(0)label = label.to(device)with torch.no_grad():y_pred = model(img, feature)loss = criterion(y_pred.view(-1), label)n_sum += nloss_sum += n * loss.item()if pbar is not None:pbar.update(len(img))del loss, img, labelgc.collect()loss_val = loss_sum / n_sumret = {'loss': loss_val,'time': time.time() - tb}model.train(in_training) gc.collect()return ret

载入数据,设置学习率计划和优化器

train_set,valid_set = train_test_split(train_filtered,test_size=0.05, random_state=42,stratify = train_filtered['contact'])

train_set = MyDataset(train_set, train_aug, 'train')

train_loader = DataLoader(train_set, batch_size=CFG['train_bs'], shuffle=True, num_workers=12, pin_memory=True,drop_last=True)

valid_set = MyDataset(valid_set, valid_aug, 'test')

valid_loader = DataLoader(valid_set, batch_size=CFG['valid_bs'], shuffle=False, num_workers=12, pin_memory=True)

optimizer = torch.optim.AdamW(model.parameters(), lr=CFG['lr'], weight_decay=CFG['weight_decay'])

nbatch = len(train_loader)

warmup = CFG['epochs_warmup'] * nbatch

nsteps = CFG['epochs'] * nbatch

scheduler = CosineLRScheduler(optimizer,warmup_t=warmup, warmup_lr_init=0.0, warmup_prefix=True,t_initial=(nsteps - warmup), lr_min=1e-6) 开始训练,这里保存整个模型

for iepoch in range(CFG['epochs']):print('Epoch:', iepoch+1)loss_sum = 0.0n_sum = 0total = len(train_loader)# Trainfor ibatch,(img, feature, label) in tqdm(enumerate(train_loader),total = total):img = img.to(device)feature = feature.to(device)n = label.size(0)label = label.to(device)optimizer.zero_grad()y_pred = model(img, feature).squeeze(-1)loss = criterion(y_pred, label)loss_train = loss.item()loss_sum += n * loss_trainn_sum += nloss.backward()grad_norm = torch.nn.utils.clip_grad_norm_(model.parameters(),CFG['max_grad_norm'])optimizer.step()scheduler.step(iepoch * nbatch + ibatch + 1)val = evaluate(model, valid_loader)time_val += val['time']loss_train = loss_sum / n_sumdt = (time.time() - tb) / 60print('Epoch: %d Train Loss: %.4f Test Loss: %.4f Time: %.2f min' %(iepoch + 1, loss_train, val['loss'],dt))if val['loss'] < best_loss:best_loss = val['loss']# Save modelofilename = '/openbayes/home/best_model.pt'torch.save(model, ofilename)print(ofilename, 'written')del valgc.collect()dt = time.time() - tb

print(' %.2f min total, %.2f min val' % (dt / 60, time_val / 60))

gc.collect()只保留权重可能会出现一些bug,保留整个模型比较稳妥

推理部分

这里我用TTA的版本

导入包

import os

import sys

sys.path.append('/kaggle/input/timm-0-6-9/pytorch-image-models-master')

import glob

import numpy as np

import pandas as pd

import random

import math

import gc

import cv2

from tqdm import tqdm

import time

from functools import lru_cache

import torch

from torch import nn

from torch.nn import functional as F

from torch.utils.data import Dataset, DataLoader

from torch.cuda.amp import autocast, GradScaler

import timm

import albumentations as A

from albumentations.pytorch import ToTensorV2

import matplotlib.pyplot as plt

from sklearn.metrics import matthews_corrcoef数据处理

这里基本和前面一样,我全部放一起了

CFG = {'seed': 42,'model': 'convnext_small.fb_in1k','img_size': 256,'epochs': 10,'train_bs': 100, 'valid_bs': 64,'lr': 1e-3, 'weight_decay': 1e-6,'num_workers': 4

}

def seed_everything(seed):random.seed(seed)os.environ['PYTHONHASHSEED'] = str(seed)np.random.seed(seed)torch.manual_seed(seed)torch.cuda.manual_seed(seed)torch.cuda.manual_seed_all(seed)torch.backends.cudnn.deterministic = Truetorch.backends.cudnn.benchmark = Falseseed_everything(CFG['seed'])

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

def expand_contact_id(df):"""Splits out contact_id into seperate columns."""df["game_play"] = df["contact_id"].str[:12]df["step"] = df["contact_id"].str.split("_").str[-3].astype("int")df["nfl_player_id_1"] = df["contact_id"].str.split("_").str[-2]df["nfl_player_id_2"] = df["contact_id"].str.split("_").str[-1]return dflabels = expand_contact_id(pd.read_csv("/kaggle/input/nfl-player-contact-detection/sample_submission.csv"))test_tracking = pd.read_csv("/kaggle/input/nfl-player-contact-detection/test_player_tracking.csv")test_helmets = pd.read_csv("/kaggle/input/nfl-player-contact-detection/test_baseline_helmets.csv")test_video_metadata = pd.read_csv("/kaggle/input/nfl-player-contact-detection/test_video_metadata.csv")

!mkdir -p ../work/framesfor video in tqdm(test_helmets.video.unique()):if 'Endzone2' not in video:!ffmpeg -i /kaggle/input/nfl-player-contact-detection/test/{video} -q:v 2 -f image2 /kaggle/work/frames/{video}_%04d.jpg -hide_banner -loglevel error

def create_features(df, tr_tracking, merge_col="step", use_cols=["x_position", "y_position"]):output_cols = []df_combo = (df.astype({"nfl_player_id_1": "str"}).merge(tr_tracking.astype({"nfl_player_id": "str"})[["game_play", merge_col, "nfl_player_id",] + use_cols],left_on=["game_play", merge_col, "nfl_player_id_1"],right_on=["game_play", merge_col, "nfl_player_id"],how="left",).rename(columns={c: c+"_1" for c in use_cols}).drop("nfl_player_id", axis=1).merge(tr_tracking.astype({"nfl_player_id": "str"})[["game_play", merge_col, "nfl_player_id"] + use_cols],left_on=["game_play", merge_col, "nfl_player_id_2"],right_on=["game_play", merge_col, "nfl_player_id"],how="left",).drop("nfl_player_id", axis=1).rename(columns={c: c+"_2" for c in use_cols}).sort_values(["game_play", merge_col, "nfl_player_id_1", "nfl_player_id_2"]).reset_index(drop=True))output_cols += [c+"_1" for c in use_cols]output_cols += [c+"_2" for c in use_cols]if ("x_position" in use_cols) & ("y_position" in use_cols):index = df_combo['x_position_2'].notnull()distance_arr = np.full(len(index), np.nan)tmp_distance_arr = np.sqrt(np.square(df_combo.loc[index, "x_position_1"] - df_combo.loc[index, "x_position_2"])+ np.square(df_combo.loc[index, "y_position_1"]- df_combo.loc[index, "y_position_2"]))distance_arr[index] = tmp_distance_arrdf_combo['distance'] = distance_arroutput_cols += ["distance"]df_combo['G_flug'] = (df_combo['nfl_player_id_2']=="G")output_cols += ["G_flug"]return df_combo, output_colsuse_cols = ['x_position', 'y_position', 'speed', 'distance','direction', 'orientation', 'acceleration', 'sa'

]test, feature_cols = create_features(labels, test_tracking, use_cols=use_cols)

test

test_filtered = test.query('not distance>2').reset_index(drop=True)

test_filtered['frame'] = (test_filtered['step']/10*59.94+5*59.94).astype('int')+1

test_filtered

del test, labels, test_tracking

gc.collect()

train_aug = A.Compose([A.HorizontalFlip(p=0.5),A.ShiftScaleRotate(p=0.5),A.RandomBrightnessContrast(brightness_limit=(-0.1, 0.1), contrast_limit=(-0.1, 0.1), p=0.5),A.Normalize(mean=[0.], std=[1.]),ToTensorV2()

])valid_aug = A.Compose([A.Normalize(mean=[0.], std=[1.]),ToTensorV2()

])

video2helmets = {}

test_helmets_new = test_helmets.set_index('video')

for video in tqdm(test_helmets.video.unique()):video2helmets[video] = test_helmets_new.loc[video].reset_index(drop=True)del test_helmets, test_helmets_new

gc.collect()

video2frames = {}for game_play in tqdm(test_video_metadata.game_play.unique()):for view in ['Endzone', 'Sideline']:video = game_play + f'_{view}.mp4'video2frames[video] = max(list(map(lambda x:int(x.split('_')[-1].split('.')[0]), \glob.glob(f'/kaggle/work/frames/{video}*'))))

class MyDataset(Dataset):def __init__(self, df, aug=valid_aug, mode='train'):self.df = dfself.frame = df.frame.valuesself.feature = df[feature_cols].fillna(-1).valuesself.players = df[['nfl_player_id_1','nfl_player_id_2']].valuesself.game_play = df.game_play.valuesself.aug = augself.mode = modedef __len__(self):return len(self.df)# @lru_cache(1024)# def read_img(self, path):# return cv2.imread(path, 0)def __getitem__(self, idx): window = 24frame = self.frame[idx]if self.mode == 'train':frame = frame + random.randint(-6, 6)players = []for p in self.players[idx]:if p == 'G':players.append(p)else:players.append(int(p))imgs = []for view in ['Endzone', 'Sideline']:video = self.game_play[idx] + f'_{view}.mp4'tmp = video2helmets[video]

# tmp = tmp.query('@frame-@window<=frame<=@frame+@window')tmp[tmp['frame'].between(frame-window, frame+window)]tmp = tmp[tmp.nfl_player_id.isin(players)]#.sort_values(['nfl_player_id', 'frame'])tmp_frames = tmp.frame.valuestmp = tmp.groupby('frame')[['left','width','top','height']].mean()

#0.002sbboxes = []for f in range(frame-window, frame+window+1, 1):if f in tmp_frames:x, w, y, h = tmp.loc[f][['left','width','top','height']]bboxes.append([x, w, y, h])else:bboxes.append([np.nan, np.nan, np.nan, np.nan])bboxes = pd.DataFrame(bboxes).interpolate(limit_direction='both').valuesbboxes = bboxes[::4]if bboxes.sum() > 0:flag = 1else:flag = 0

#0.03sfor i, f in enumerate(range(frame-window, frame+window+1, 4)):img_new = np.zeros((256, 256), dtype=np.float32)if flag == 1 and f <= video2frames[video]:img = cv2.imread(f'/kaggle/work/frames/{video}_{f:04d}.jpg', 0)x, w, y, h = bboxes[i]img = img[int(y+h/2)-128:int(y+h/2)+128,int(x+w/2)-128:int(x+w/2)+128].copy()img_new[:img.shape[0], :img.shape[1]] = imgimgs.append(img_new)

#0.06sfeature = np.float32(self.feature[idx])img = np.array(imgs).transpose(1, 2, 0) img = self.aug(image=img)["image"]label = np.float32(self.df.contact.values[idx])return img, feature, label查看截取出来的图片

img, feature, label = MyDataset(test_filtered, valid_aug, 'test')[0]

plt.imshow(img.permute(1,2,0)[:,:,7])

plt.show()

img.shape, feature, label

进行推理

class Model(nn.Module):def __init__(self):super(Model, self).__init__()self.backbone = timm.create_model(CFG['model'], pretrained=False, num_classes=500, in_chans=13)self.mlp = nn.Sequential(nn.Linear(18, 64),nn.LayerNorm(64),nn.ReLU(),nn.Dropout(0.2),# nn.Linear(64, 64),# nn.LayerNorm(64),# nn.ReLU(),# nn.Dropout(0.2))self.fc = nn.Linear(64+500*2, 1)def forward(self, img, feature):b, c, h, w = img.shapeimg = img.reshape(b*2, c//2, h, w)img = self.backbone(img).reshape(b, -1)feature = self.mlp(feature)y = self.fc(torch.cat([img, feature], dim=1))return y

test_set = MyDataset(test_filtered, valid_aug, 'test')

test_loader = DataLoader(test_set, batch_size=CFG['valid_bs'], shuffle=False, num_workers=CFG['num_workers'], pin_memory=True)model = Model().to(device)

model = torch.load('/kaggle/input/track-weight/best_model.pt')model.eval()y_pred = []

with torch.no_grad():tk = tqdm(test_loader, total=len(test_loader))for step, batch in enumerate(tk):if(step % 4 != 3):img, feature, label = [x.to(device) for x in batch]output1 = model(img, feature).squeeze(-1)output2 = model(img.flip(-1), feature).squeeze(-1)y_pred.extend(0.2*(output1.sigmoid().cpu().numpy()) + 0.8*(output2.sigmoid().cpu().numpy()))else:img, feature, label = [x.to(device) for x in batch]output = model(img.flip(-1), feature).squeeze(-1)y_pred.extend(output.sigmoid().cpu().numpy()) y_pred = np.array(y_pred)这里用了翻转,tta算是一种隐式模型集成

提交

th = 0.29test_filtered['contact'] = (y_pred >= th).astype('int')sub = pd.read_csv('/kaggle/input/nfl-player-contact-detection/sample_submission.csv')sub = sub.drop("contact", axis=1).merge(test_filtered[['contact_id', 'contact']], how='left', on='contact_id')

sub['contact'] = sub['contact'].fillna(0).astype('int')sub[["contact_id", "contact"]].to_csv("submission.csv", index=False)sub.head()推理代码链接和成绩

infer_code | Kaggle

修改版本

之前的,效果不是很好,我还是换成resnet50进行训练,结果如下,链接和权重如下

infer_code | Kaggle

best_weight | Kaggle

相关文章:

kaggle视频行为分析1st and Future - Player Contact Detection

这次比赛的目标是检测美式橄榄球NFL比赛中球员经历的外部接触。您将使用视频和球员追踪数据来识别发生接触的时刻,以帮助提高球员的安全。两种接触,一种是人与人的,另一种是人与地面,不包括脚底和地面的,跟我之前做的这…...

1. junit5介绍

JUnit 5 是 Java 生态中最流行的单元测试框架,由 JUnit Platform、JUnit Jupiter 和 JUnit Vintage 三个子项目组成。以下是 JUnit 5 的全面使用指南及示例: 一、环境配置 1. Maven 依赖 <dependency><groupId>org.junit.jupiter</grou…...

(脚本学习)BUU18 [CISCN2019 华北赛区 Day2 Web1]Hack World1

自用 题目 考虑是不是布尔盲注,如何测试:用"1^1^11 1^0^10,就像是真真真等于真,真假真等于假"这个测试 SQL布尔盲注脚本1 import requestsurl "http://8e4a9bf2-c055-4680-91fd-5b969ebc209e.node5.buuoj.cn…...

Caxa 二次开发 ObjectCRX-1 踩坑:环境配置以及 Helloworld

绝了,坑是真 nm 的多,官方给的文档里到处都是坑。 用的环境 ObjectCRX,以下简称 objcrx。 #1 安装环境 & 参考文档的大坑 #1.1 Caxa 提供的文档和环境安装包 首先一定要跟 Caxa 对应版本的帮助里提供的 ObjectCRX 安装器 (wizard) 匹配…...

【自然语言处理(NLP)】生成词向量:GloVe(Global Vectors for Word Representation)原理及应用

文章目录 介绍GloVe 介绍核心思想共现矩阵1. 共现矩阵的定义2. 共现概率矩阵的定义3. 共现概率矩阵的意义4. 共现概率矩阵的构建步骤5. 共现概率矩阵的应用6. 示例7. 优缺点优点缺点 **总结** 目标函数训练过程使用预训练的GloVe词向量 优点应用总结 个人主页:道友老…...

bable-预设

babel 有多种预设,最常见的预设是 babel/preset-env,它可以让你使用最新的 JS 语法,而无需针对每种语法转换设置具体的插件。 babel/preset-env 预设 安装 npm i -D babel/preset-env配置 .babelrc 文件 在根目录下新建 .babelrc 文件&a…...

回顾生化之父三上真司的游戏思想

1. 放养式野蛮成长路线,开创生存恐怖类型 三上进入capcom后,没有培训,没有师傅手把手的指导,而是每天摸索写策划书,老员工给出不行的评语后,扔掉旧的重写新的。 然后突然就成为游戏总监,进入开…...

无公网IP 外网访问青龙面板

青龙面板是一款基于 Docker 的自动化管理平台,用户可以通过简便的 Web 界面,轻松的添加、管理和监控各种自动化任务。而且这款面板还支持多用户、多任务、任务依赖和日志监控,个人和团队都比较适合使用。 本文将详细的介绍如何用 Docker 在本…...

中国证券基本知识汇总

中国证券市场是一个多层次、多领域的市场,涉及到各种金融工具、交易方式、市场参与者等内容。以下是中国证券基本知识的汇总: 1. 证券市场概述 证券市场:是指买卖证券(如股票、债券、基金等)的市场。证券市场可以分为…...

C基础寒假练习(2)

一、输出3-100以内的完美数,(完美数:因子和(因子不包含自身)数本身 #include <stdio.h>// 函数声明 int isPerfectNumber(int num);int main() {printf("3-100以内的完美数有:\n");for (int i 3; i < 100; i){if (isPerfectNumber…...

Baklib如何提升内容中台智能化推荐系统的精准服务与用户体验

内容概要 在数字化转型的浪潮中,内容中台的智能化推荐系统成为提升用户体验的重要工具。Baklib作为行业领先者,在这一领域积极探索,推出了具有前瞻性的解决方案,旨在提高内容的匹配度和推荐的精准性。本文将深入探讨Baklib如何通…...

【Java】位图 布隆过滤器

位图 初识位图 位图, 实际上就是将二进制位作为哈希表的一个个哈希桶的数据结构, 由于二进制位只能表示 0 和 1, 因此通常用于表示数据是否存在. 如下图所示, 这个位图就用于标识 0 ~ 14 中有什么数字存在 可以看到, 我们这里相当于是把下标作为了 key-value 的一员. 但是这…...

【专业标题】数字时代的影像保卫战:照片误删拯救全指南

在智能手机普及率达98%的今天,每个人的数字相册都承载着价值连城的记忆资产。照片误删事件却如同数字时代的隐形杀手,全球每分钟有超过5000张珍贵影像因此消失。当我们发现重要照片不翼而飞时,那种心脏骤停般的恐慌感,正是数据时代…...

深度剖析八大排序算法

欢迎并且感谢大家指出我的问题,由于本人水平有限,有些内容写的不是很全面,只是把比较实用的东西给写下来,如果有写的不对的地方,还希望各路大牛多多指教!谢谢大家!🥰 在计算机科学领…...

JVM_程序计数器的作用、特点、线程私有、本地方法的概述

①. 程序计数器 ①. 作用 (是用来存储指向下一条指令的地址,也即将要执行的指令代码。由执行引擎读取下一条指令) ②. 特点(是线程私有的 、不会存在内存溢出) ③. 注意:在物理上实现程序计数器是在寄存器实现的,整个cpu中最快的一个执行单元 ④. 它是唯一一个在java虚拟机规…...

【Numpy核心编程攻略:Python数据处理、分析详解与科学计算】2.20 傅里叶变换:从时域到频域的算法实现

2.20 傅里叶变换:从时域到频域的算法实现 目录 #mermaid-svg-zrRqIme9IEqP6JJE {font-family:"trebuchet ms",verdana,arial,sans-serif;font-size:16px;fill:#333;}#mermaid-svg-zrRqIme9IEqP6JJE .error-icon{fill:#552222;}#mermaid-svg-zrRqIme9IEqP…...

PAT甲级1052、Linked LIst Sorting

题目 A linked list consists of a series of structures, which are not necessarily adjacent in memory. We assume that each structure contains an integer key and a Next pointer to the next structure. Now given a linked list, you are supposed to sort the stru…...

git error: invalid path

git clone GitHub - guanpengchn/awesome-books: :books: 开发者推荐阅读的书籍 在windows上想把这个仓库拉取下来,发现本地git仓库创建 但只有一个.git隐藏文件夹,其他文件都处于删除状态。 问题: Cloning into awesome-books... remote:…...

优选算法合集————双指针(专题二)

好久都没给大家带来算法专题啦,今天给大家带来滑动窗口专题的训练 题目一:长度最小的子数组 题目描述: 给定一个含有 n 个正整数的数组和一个正整数 target 。 找出该数组中满足其和 ≥ target 的长度最小的 连续子数组 [numsl, numsl1, …...

)

Ubuntu下Tkinter绑定数字小键盘上的回车键(PySide6类似)

设计了一个tkinter程序,在Win下绑定回车键,直接绑定"<Return>"就可以使用主键盘和小键盘的回车键直接“提交”,到了ubuntu下就不行了。经过搜索,发现ubuntu下主键盘和数字小键盘的回车键,名称不一样。…...

第19节 Node.js Express 框架

Express 是一个为Node.js设计的web开发框架,它基于nodejs平台。 Express 简介 Express是一个简洁而灵活的node.js Web应用框架, 提供了一系列强大特性帮助你创建各种Web应用,和丰富的HTTP工具。 使用Express可以快速地搭建一个完整功能的网站。 Expre…...

【网络】每天掌握一个Linux命令 - iftop

在Linux系统中,iftop是网络管理的得力助手,能实时监控网络流量、连接情况等,帮助排查网络异常。接下来从多方面详细介绍它。 目录 【网络】每天掌握一个Linux命令 - iftop工具概述安装方式核心功能基础用法进阶操作实战案例面试题场景生产场景…...

visual studio 2022更改主题为深色

visual studio 2022更改主题为深色 点击visual studio 上方的 工具-> 选项 在选项窗口中,选择 环境 -> 常规 ,将其中的颜色主题改成深色 点击确定,更改完成...

1688商品列表API与其他数据源的对接思路

将1688商品列表API与其他数据源对接时,需结合业务场景设计数据流转链路,重点关注数据格式兼容性、接口调用频率控制及数据一致性维护。以下是具体对接思路及关键技术点: 一、核心对接场景与目标 商品数据同步 场景:将1688商品信息…...

Leetcode 3577. Count the Number of Computer Unlocking Permutations

Leetcode 3577. Count the Number of Computer Unlocking Permutations 1. 解题思路2. 代码实现 题目链接:3577. Count the Number of Computer Unlocking Permutations 1. 解题思路 这一题其实就是一个脑筋急转弯,要想要能够将所有的电脑解锁&#x…...

HTML前端开发:JavaScript 常用事件详解

作为前端开发的核心,JavaScript 事件是用户与网页交互的基础。以下是常见事件的详细说明和用法示例: 1. onclick - 点击事件 当元素被单击时触发(左键点击) button.onclick function() {alert("按钮被点击了!&…...

k8s业务程序联调工具-KtConnect

概述 原理 工具作用是建立了一个从本地到集群的单向VPN,根据VPN原理,打通两个内网必然需要借助一个公共中继节点,ktconnect工具巧妙的利用k8s原生的portforward能力,简化了建立连接的过程,apiserver间接起到了中继节…...

今日学习:Spring线程池|并发修改异常|链路丢失|登录续期|VIP过期策略|数值类缓存

文章目录 优雅版线程池ThreadPoolTaskExecutor和ThreadPoolTaskExecutor的装饰器并发修改异常并发修改异常简介实现机制设计原因及意义 使用线程池造成的链路丢失问题线程池导致的链路丢失问题发生原因 常见解决方法更好的解决方法设计精妙之处 登录续期登录续期常见实现方式特…...

uniapp 实现腾讯云IM群文件上传下载功能

UniApp 集成腾讯云IM实现群文件上传下载功能全攻略 一、功能背景与技术选型 在团队协作场景中,群文件共享是核心需求之一。本文将介绍如何基于腾讯云IMCOS,在uniapp中实现: 群内文件上传/下载文件元数据管理下载进度追踪跨平台文件预览 二…...

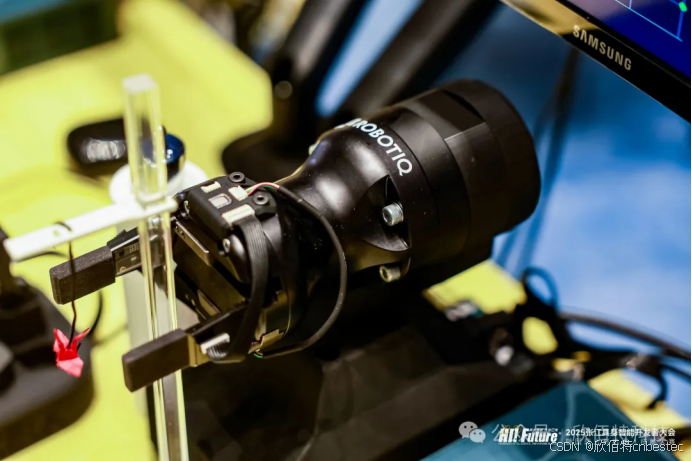

Xela矩阵三轴触觉传感器的工作原理解析与应用场景

Xela矩阵三轴触觉传感器通过先进技术模拟人类触觉感知,帮助设备实现精确的力测量与位移监测。其核心功能基于磁性三维力测量与空间位移测量,能够捕捉多维触觉信息。该传感器的设计不仅提升了触觉感知的精度,还为机器人、医疗设备和制造业的智…...