Front end: resumable and chunked upload of large files with vue-simple-uploader

Contents

- 1. Preface

- 2. Back end

- Create a new Maven project

- Back end

- pom.xml

- Configuration file application.yml

- HttpStatus.java

- AjaxResult.java

- CommonConstant.java

- WebConfig.java

- CheckChunkVO.java

- BackChunk.java

- BackFileList.java

- BackChunkMapper.java

- BackFileListMapper.java

- BackFileListMapper.xml

- BackChunkMapper.xml

- IBackFileService.java

- IBackChunkService.java

- BackFileServiceImpl.java

- BackChunkServiceImpl.java

- FileController.java

- Startup class PointUploadApplication.java: add Mapper scanning

- 3. Front end

- Initialize the project

- Install dependencies

- Create Upload.vue

- Modify App.vue

- Modify the router, router/index.js

- Update main.js to load the vue-simple-uploader component (global registration)

- Access test

- 4. Database

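1. Preface

I recently ran into a requirement on a project: uploading large video files. To make this practical, I needed both chunked upload and resumable upload. I spent a long time searching online, found many articles, and tried implementing each of them. In the end I found this blogger's article: the approach is clear, and it includes both the front-end and back-end code. I am reproducing it here so that I have it the next time I need it. Thanks to the original author!

Reference article: 基于 vue-simple-uploader 实现大文件断点续传和分片上传 (Resumable and chunked upload of large files based on vue-simple-uploader)

2. Back end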

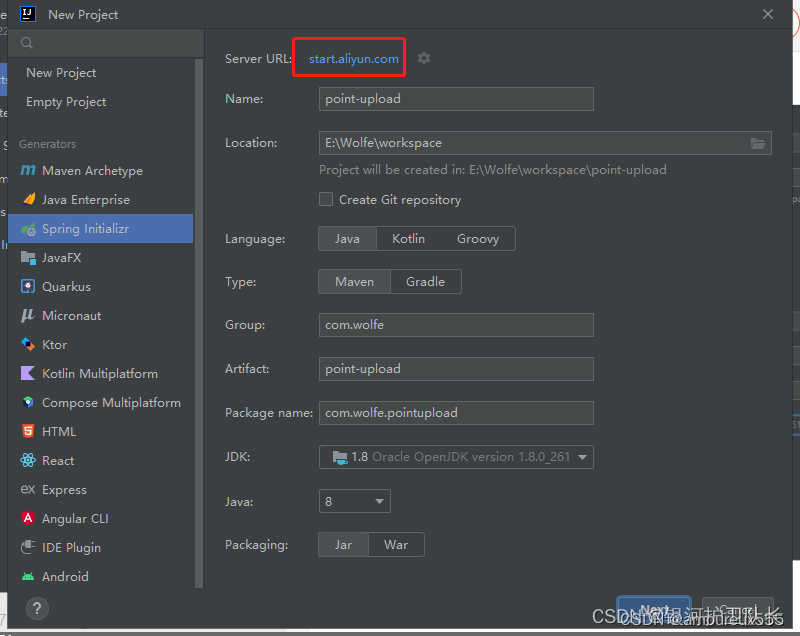

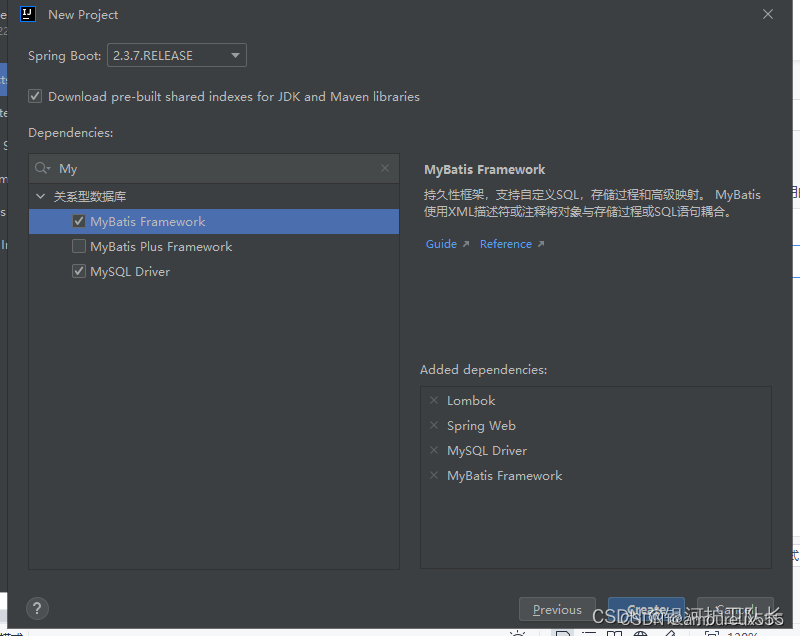

Create a new Maven project

Back end

pom.xml

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <groupId>com.wolfe</groupId>
    <artifactId>point-upload</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>point-upload</name>
    <description>point-upload</description>

    <properties>
        <java.version>1.8</java.version>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
        <spring-boot.version>2.3.7.RELEASE</spring-boot.version>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.mybatis.spring.boot</groupId>
            <artifactId>mybatis-spring-boot-starter</artifactId>
            <version>2.1.4</version>
        </dependency>
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <scope>runtime</scope>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <optional>true</optional>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
            <exclusions>
                <exclusion>
                    <groupId>org.junit.vintage</groupId>
                    <artifactId>junit-vintage-engine</artifactId>
                </exclusion>
            </exclusions>
        </dependency>
    </dependencies>

    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-dependencies</artifactId>
                <version>${spring-boot.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <version>3.8.1</version>
                <configuration>
                    <source>1.8</source>
                    <target>1.8</target>
                    <encoding>UTF-8</encoding>
                </configuration>
            </plugin>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
                <version>2.3.7.RELEASE</version>
                <configuration>
                    <mainClass>com.wolfe.pointupload.PointUploadApplication</mainClass>
                </configuration>
                <executions>
                    <execution>
                        <id>repackage</id>
                        <goals>
                            <goal>repackage</goal>
                        </goals>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>
</project>

Configuration file application.yml

server:
  port: 8081

spring:
  application:
    name: point-upload
  datasource:
    driver-class-name: com.mysql.cj.jdbc.Driver
    name: defaultDataSource
    url: jdbc:mysql://localhost:3306/pointupload?serverTimezone=UTC
    username: root
    password: root
  servlet:
    multipart:
      max-file-size: 100MB
      max-request-size: 200MB

mybatis:
  mapper-locations: classpath:mapper/*xml
  # Must point at the package that holds BackChunk and BackFileList,
  # because the mapper XML files refer to them by short alias.
  type-aliases-package: com.wolfe.pointupload.domain

config:
  upload-path: E:/Wolfe/uploadPath

HttpStatus.java

package com.wolfe.pointupload.common;

public interface HttpStatus {
    /**
     * Operation succeeded
     */
    int SUCCESS = 200;

    /**
     * Internal server error
     */
    int ERROR = 500;
}

AjaxResult.java

package com.wolfe.pointupload.common;

import java.util.HashMap;

public class AjaxResult extends HashMap<String, Object> {
    private static final long serialVersionUID = 1L;

    /** Status code */
    public static final String CODE_TAG = "code";

    /** Response message */
    public static final String MSG_TAG = "msg";

    /** Data object */
    public static final String DATA_TAG = "data";

    /**
     * Creates an empty AjaxResult that represents an empty message.
     */
    public AjaxResult() {
    }

    /**
     * Creates an AjaxResult.
     *
     * @param code status code
     * @param msg  response message
     */
    public AjaxResult(int code, String msg) {
        super.put(CODE_TAG, code);
        super.put(MSG_TAG, msg);
        super.put("result", true);
        super.put("needMerge", true);
    }

    /**
     * Creates an AjaxResult.
     *
     * @param code status code
     * @param msg  response message
     * @param data data object
     */
    public AjaxResult(int code, String msg, Object data) {
        super.put(CODE_TAG, code);
        super.put(MSG_TAG, msg);
        super.put("result", true);
        super.put("needMerge", true);
        if (data != null) {
            super.put(DATA_TAG, data);
        }
    }

    /** Returns a success message. */
    public static AjaxResult success() {
        return AjaxResult.success("Operation succeeded");
    }

    /** Returns a success message carrying data. */
    public static AjaxResult success(Object data) {
        return AjaxResult.success("Operation succeeded", data);
    }

    /**
     * Returns a success message.
     *
     * @param msg response message
     */
    public static AjaxResult success(String msg) {
        return AjaxResult.success(msg, null);
    }

    /**
     * Returns a success message.
     *
     * @param msg  response message
     * @param data data object
     */
    public static AjaxResult success(String msg, Object data) {
        return new AjaxResult(HttpStatus.SUCCESS, msg, data);
    }

    /** Returns an error message. */
    public static AjaxResult error() {
        return AjaxResult.error("Operation failed");
    }

    /**
     * Returns an error message.
     *
     * @param msg response message
     */
    public static AjaxResult error(String msg) {
        return AjaxResult.error(msg, null);
    }

    /**
     * Returns an error message.
     *
     * @param msg  response message
     * @param data data object
     */
    public static AjaxResult error(String msg, Object data) {
        return new AjaxResult(HttpStatus.ERROR, msg, data);
    }

    /**
     * Returns an error message.
     *
     * @param code status code
     * @param msg  response message
     */
    public static AjaxResult error(int code, String msg) {
        return new AjaxResult(code, msg, null);
    }
}

CommonConstant.java

package com.wolfe.pointupload.common;

public interface CommonConstant {
    /**
     * Result of an insert or update: 0 means failure, 1 means success.
     * When the record to be added already exists, -1 is returned.
     */
    Integer UPDATE_FAIL = 0;
    Integer UPDATE_EXISTS = -1;
}

WebConfig.java

package com.wolfe.pointupload.config;

import org.springframework.context.annotation.Configuration;
import org.springframework.web.servlet.config.annotation.CorsRegistry;
import org.springframework.web.servlet.config.annotation.WebMvcConfigurer;

/**
 * Configuration class that allows cross-origin requests.
 */
@Configuration
public class WebConfig implements WebMvcConfigurer {

    @Override
    public void addCorsMappings(CorsRegistry registry) {
        registry.addMapping("/**")
                .allowedOrigins("*")
                .allowedMethods("GET", "HEAD", "POST", "PUT", "DELETE", "OPTIONS")
                .allowCredentials(true)
                .maxAge(3600)
                .allowedHeaders("*");
    }
}

CheckChunkVO.java

package com.wolfe.pointupload.domain;

import lombok.Data;

import java.io.Serializable;
import java.util.ArrayList;
import java.util.List;

@Data
public class CheckChunkVO implements Serializable {
    private boolean skipUpload = false;
    private String url;
    private List<Integer> uploaded = new ArrayList<>();
    private boolean needMerge = true;
    private boolean result = true;
}
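To make the role of `skipUpload` and `uploaded` concrete, here is a minimal sketch of how a client can decide whether a given chunk still needs to be sent. It mirrors the check used in Upload.vue later in this article; the function name `isChunkUploaded` is hypothetical and only used for illustration.

```javascript
// Hypothetical helper (not part of the project): decides from the JSON text of a
// CheckChunkVO response whether the chunk at a zero-based offset can be skipped.
function isChunkUploaded(checkResponseText, chunkOffset) {
  const vo = JSON.parse(checkResponseText);
  // skipUpload = true means the whole file is already on the server.
  if (vo.skipUpload) {
    return true;
  }
  // uploaded holds 1-based chunk numbers, so offset 0 corresponds to chunk 1.
  return (vo.uploaded || []).indexOf(chunkOffset + 1) >= 0;
}

// Example: chunks 1 and 2 are stored, so offset 1 is skipped and offset 2 is sent.
const sample = JSON.stringify({ skipUpload: false, uploaded: [1, 2] });
console.log(isChunkUploaded(sample, 1), isChunkUploaded(sample, 2));
```

Returning `true` tells the uploader to treat the chunk as done, which is what makes both instant upload and resuming possible.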

BackChunk.java

package com.wolfe.pointupload.domain;

import lombok.Data;
import org.springframework.web.multipart.MultipartFile;

import java.io.Serializable;

@Data
public class BackChunk implements Serializable {
    /** Primary key ID */
    private Long id;

    /** Number of the current chunk, starting from 1 */
    private Integer chunkNumber;

    /** Configured chunk size */
    private Long chunkSize;

    /** Actual size of the current chunk */
    private Long currentChunkSize;

    /** Total file size */
    private Long totalSize;

    /** File identifier */
    private String identifier;

    /** File name */
    private String filename;

    /** Relative path */
    private String relativePath;

    /** Total number of chunks */
    private Integer totalChunks;

    /** Binary content of the chunk */
    private MultipartFile file;
}
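The relationship between `chunkSize`, `currentChunkSize`, `totalChunks` and `totalSize` is easier to see with numbers. The sketch below is an illustration only: it assumes the uploader rounds the chunk count down and lets the last chunk absorb the remainder, which is how I understand the library's default behavior. `chunkLayout` is a hypothetical helper, not something the project or the library provides.

```javascript
// Hypothetical helper: lists the BackChunk-style fields for each chunk of a file.
// Assumption: chunk count = floor(totalSize / chunkSize), at least 1, and the
// last chunk carries whatever bytes remain, so it may be larger than chunkSize.
function chunkLayout(totalSize, chunkSize) {
  const totalChunks = Math.max(Math.floor(totalSize / chunkSize), 1);
  const chunks = [];
  for (let i = 0; i < totalChunks; i++) {
    const start = i * chunkSize;
    const end = i === totalChunks - 1 ? totalSize : start + chunkSize;
    chunks.push({
      chunkNumber: i + 1, // 1-based, as in BackChunk
      chunkSize: chunkSize,
      currentChunkSize: end - start,
      totalSize: totalSize,
      totalChunks: totalChunks
    });
  }
  return chunks;
}

// A 25-byte file with 10-byte chunks: two chunks of 10 and 15 bytes.
console.log(chunkLayout(25, 10).map(c => c.currentChunkSize));
```

Under this assumption the sizes of all chunks always add up to `totalSize`, which is a cheap sanity check the server could apply before merging.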

BackFileList.java

package com.wolfe.pointupload.domain;

import lombok.Data;

@Data
public class BackFileList {
    private static final long serialVersionUID = 1L;

    /** Primary key ID */
    private Long id;

    /** File name */
    private String filename;

    /** Unique identifier (MD5) */
    private String identifier;

    /** URL */
    private String url;

    /** Local path */
    private String location;

    /** Total file size */
    private Long totalSize;
}

BackChunkMapper.java

package com.wolfe.pointupload.mapper;

import com.wolfe.pointupload.domain.BackChunk;

import java.util.List;

/**
 * Mapper interface for file chunk records.
 */
public interface BackChunkMapper {
    /**
     * Finds a chunk record by ID.
     *
     * @param id chunk record ID
     * @return the chunk record
     */
    public BackChunk selectBackChunkById(Long id);

    /**
     * Lists chunk records matching the given criteria.
     *
     * @param backChunk query criteria
     * @return matching chunk records
     */
    public List<BackChunk> selectBackChunkList(BackChunk backChunk);

    /**
     * Inserts a chunk record.
     *
     * @param backChunk chunk record
     * @return number of affected rows
     */
    public int insertBackChunk(BackChunk backChunk);

    /**
     * Updates a chunk record.
     *
     * @param backChunk chunk record
     * @return number of affected rows
     */
    public int updateBackChunk(BackChunk backChunk);

    /**
     * Deletes a chunk record by ID.
     *
     * @param id chunk record ID
     * @return number of affected rows
     */
    public int deleteBackChunkById(Long id);

    /**
     * Deletes chunk records by file name and MD5 value.
     *
     * @author xjd
     * @date 2020/7/31 23:43
     */
    int deleteBackChunkByIdentifier(BackChunk backChunk);

    /**
     * Deletes chunk records in batch.
     *
     * @param ids IDs of the records to delete
     * @return number of affected rows
     */
    public int deleteBackChunkByIds(Long[] ids);
}

BackFileListMapper.java

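package com.wolfe.pointupload.mapper;

import com.wolfe.pointupload.domain.BackFileList;

import java.util.List;

/**
 * Mapper interface for the list of uploaded files.
 */
public interface BackFileListMapper {
    /**
     * Finds an uploaded-file record by ID.
     *
     * @param id uploaded-file record ID
     * @return the uploaded-file record
     */
    public BackFileList selectBackFileListById(Long id);

    /**
     * Counts uploaded-file records matching the given file name and identifier.
     *
     * @param BackFileList query criteria
     */
    Integer selectSingleBackFileList(BackFileList BackFileList);

    /**
     * Lists uploaded-file records matching the given criteria.
     *
     * @param BackFileList query criteria
     * @return matching uploaded-file records
     */
    public List<BackFileList> selectBackFileListList(BackFileList BackFileList);

    /**
     * Inserts an uploaded-file record.
     *
     * @param BackFileList uploaded-file record
     * @return number of affected rows
     */
    public int insertBackFileList(BackFileList BackFileList);

    /**
     * Updates an uploaded-file record.
     *
     * @param BackFileList uploaded-file record
     * @return number of affected rows
     */
    public int updateBackFileList(BackFileList BackFileList);

    /**
     * Deletes an uploaded-file record by ID.
     *
     * @param id uploaded-file record ID
     * @return number of affected rows
     */
    public int deleteBackFileListById(Long id);

    /**
     * Deletes uploaded-file records in batch.
     *
     * @param ids IDs of the records to delete
     * @return number of affected rows
     */
    public int deleteBackFileListByIds(Long[] ids);
}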

BackFileListMapper.xml

<?xml version="1.0" encoding="UTF-8" ?>
<!DOCTYPE mapper
        PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN"
        "http://mybatis.org/dtd/mybatis-3-mapper.dtd">
<mapper namespace="com.wolfe.pointupload.mapper.BackFileListMapper">

    <resultMap type="BackFileList" id="BackFileListResult">
        <result property="id" column="id" />
        <result property="filename" column="filename" />
        <result property="identifier" column="identifier" />
        <result property="url" column="url" />
        <result property="location" column="location" />
        <result property="totalSize" column="total_size" />
    </resultMap>

    <sql id="selectBackFileListVo">
        select id, filename, identifier, url, location, total_size from t_file_list
    </sql>

    <select id="selectBackFileListList" parameterType="BackFileList" resultMap="BackFileListResult">
        <include refid="selectBackFileListVo"/>
        <where>
            <if test="filename != null and filename != ''"> and filename = #{filename}</if>
            <if test="identifier != null and identifier != ''"> and identifier = #{identifier}</if>
            <if test="url != null and url != ''"> and url = #{url}</if>
            <if test="location != null and location != ''"> and location = #{location}</if>
            <if test="totalSize != null "> and total_size = #{totalSize}</if>
        </where>
    </select>

    <select id="selectBackFileListById" parameterType="Long" resultMap="BackFileListResult">
        <include refid="selectBackFileListVo"/>
        where id = #{id}
    </select>

    <select id="selectSingleBackFileList" parameterType="BackFileList" resultType="int">
        select count(1) from t_file_list
        <where>
            <if test="filename != null and filename != ''"> and filename = #{filename}</if>
            <if test="identifier != null and identifier != ''"> and identifier = #{identifier}</if>
        </where>
    </select>

    <insert id="insertBackFileList" parameterType="BackFileList" useGeneratedKeys="true" keyProperty="id">
        insert into t_file_list
        <trim prefix="(" suffix=")" suffixOverrides=",">
            <if test="filename != null and filename != ''">filename,</if>
            <if test="identifier != null and identifier != ''">identifier,</if>
            <if test="url != null and url != ''">url,</if>
            <if test="location != null and location != ''">location,</if>
            <if test="totalSize != null ">total_size,</if>
        </trim>
        <trim prefix="values (" suffix=")" suffixOverrides=",">
            <if test="filename != null and filename != ''">#{filename},</if>
            <if test="identifier != null and identifier != ''">#{identifier},</if>
            <if test="url != null and url != ''">#{url},</if>
            <if test="location != null and location != ''">#{location},</if>
            <if test="totalSize != null ">#{totalSize},</if>
        </trim>
    </insert>

    <update id="updateBackFileList" parameterType="BackFileList">
        update t_file_list
        <trim prefix="SET" suffixOverrides=",">
            <if test="filename != null and filename != ''">filename = #{filename},</if>
            <if test="identifier != null and identifier != ''">identifier = #{identifier},</if>
            <if test="url != null and url != ''">url = #{url},</if>
            <if test="location != null and location != ''">location = #{location},</if>
            <if test="totalSize != null ">total_size = #{totalSize},</if>
        </trim>
        where id = #{id}
    </update>

    <delete id="deleteBackFileListById" parameterType="Long">
        delete from t_file_list where id = #{id}
    </delete>

    <delete id="deleteBackFileListByIds" parameterType="String">
        delete from t_file_list where id in
        <foreach item="id" collection="array" open="(" separator="," close=")">
            #{id}
        </foreach>
    </delete>
</mapper>

BackChunkMapper.xml

<?xml version="1.0" encoding="UTF-8" ?>
<!DOCTYPE mapper
        PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN"
        "http://mybatis.org/dtd/mybatis-3-mapper.dtd">
<mapper namespace="com.wolfe.pointupload.mapper.BackChunkMapper">

    <resultMap type="BackChunk" id="BackChunkResult">
        <result property="id" column="id" />
        <result property="chunkNumber" column="chunk_number" />
        <result property="chunkSize" column="chunk_size" />
        <result property="currentChunkSize" column="current_chunk_size" />
        <result property="filename" column="filename" />
        <result property="identifier" column="identifier" />
        <result property="relativePath" column="relative_path" />
        <result property="totalChunks" column="total_chunks" />
        <result property="totalSize" column="total_size" />
    </resultMap>

    <sql id="selectBackChunkVo">
        select id, chunk_number, chunk_size, current_chunk_size, filename, identifier, relative_path, total_chunks, total_size from t_chunk_info
    </sql>

    <select id="selectBackChunkList" parameterType="BackChunk" resultMap="BackChunkResult">
        <include refid="selectBackChunkVo"/>
        <where>
            <if test="chunkNumber != null "> and chunk_number = #{chunkNumber}</if>
            <if test="chunkSize != null "> and chunk_size = #{chunkSize}</if>
            <if test="currentChunkSize != null "> and current_chunk_size = #{currentChunkSize}</if>
            <if test="filename != null and filename != ''"> and filename = #{filename}</if>
            <if test="identifier != null and identifier != ''"> and identifier = #{identifier}</if>
            <if test="relativePath != null and relativePath != ''"> and relative_path = #{relativePath}</if>
            <if test="totalChunks != null "> and total_chunks = #{totalChunks}</if>
            <if test="totalSize != null "> and total_size = #{totalSize}</if>
        </where>
        order by chunk_number desc
    </select>

    <select id="selectBackChunkById" parameterType="Long" resultMap="BackChunkResult">
        <include refid="selectBackChunkVo"/>
        where id = #{id}
    </select>

    <insert id="insertBackChunk" parameterType="BackChunk" useGeneratedKeys="true" keyProperty="id">
        insert into t_chunk_info
        <trim prefix="(" suffix=")" suffixOverrides=",">
            <if test="chunkNumber != null ">chunk_number,</if>
            <if test="chunkSize != null ">chunk_size,</if>
            <if test="currentChunkSize != null ">current_chunk_size,</if>
            <if test="filename != null and filename != ''">filename,</if>
            <if test="identifier != null and identifier != ''">identifier,</if>
            <if test="relativePath != null and relativePath != ''">relative_path,</if>
            <if test="totalChunks != null ">total_chunks,</if>
            <if test="totalSize != null ">total_size,</if>
        </trim>
        <trim prefix="values (" suffix=")" suffixOverrides=",">
            <if test="chunkNumber != null ">#{chunkNumber},</if>
            <if test="chunkSize != null ">#{chunkSize},</if>
            <if test="currentChunkSize != null ">#{currentChunkSize},</if>
            <if test="filename != null and filename != ''">#{filename},</if>
            <if test="identifier != null and identifier != ''">#{identifier},</if>
            <if test="relativePath != null and relativePath != ''">#{relativePath},</if>
            <if test="totalChunks != null ">#{totalChunks},</if>
            <if test="totalSize != null ">#{totalSize},</if>
        </trim>
    </insert>

    <update id="updateBackChunk" parameterType="BackChunk">
        update t_chunk_info
        <trim prefix="SET" suffixOverrides=",">
            <if test="chunkNumber != null ">chunk_number = #{chunkNumber},</if>
            <if test="chunkSize != null ">chunk_size = #{chunkSize},</if>
            <if test="currentChunkSize != null ">current_chunk_size = #{currentChunkSize},</if>
            <if test="filename != null and filename != ''">filename = #{filename},</if>
            <if test="identifier != null and identifier != ''">identifier = #{identifier},</if>
            <if test="relativePath != null and relativePath != ''">relative_path = #{relativePath},</if>
            <if test="totalChunks != null ">total_chunks = #{totalChunks},</if>
            <if test="totalSize != null ">total_size = #{totalSize},</if>
        </trim>
        where id = #{id}
    </update>

    <delete id="deleteBackChunkById" parameterType="Long">
        delete from t_chunk_info where id = #{id}
    </delete>

    <delete id="deleteBackChunkByIdentifier" parameterType="BackChunk">
        delete from t_chunk_info where identifier = #{identifier} and filename = #{filename}
    </delete>

    <delete id="deleteBackChunkByIds" parameterType="String">
        delete from t_chunk_info where id in
        <foreach item="id" collection="array" open="(" separator="," close=")">
            #{id}
        </foreach>
    </delete>
</mapper>

IBackFileService.java

package com.wolfe.pointupload.service;

import com.wolfe.pointupload.domain.BackChunk;
import com.wolfe.pointupload.domain.BackFileList;
import com.wolfe.pointupload.domain.CheckChunkVO;

import javax.servlet.http.HttpServletResponse;

public interface IBackFileService {

    int postFileUpload(BackChunk chunk, HttpServletResponse response);

    CheckChunkVO getFileUpload(BackChunk chunk, HttpServletResponse response);

    int deleteBackFileByIds(Long id);

    int mergeFile(BackFileList fileInfo);
}

IBackChunkService.java

package com.wolfe.pointupload.service;

import com.wolfe.pointupload.domain.BackChunk;

import java.util.List;

/**
 * Service interface for file chunk records.
 */
public interface IBackChunkService {
    /**
     * Finds a chunk record by ID.
     *
     * @param id chunk record ID
     * @return the chunk record
     */
    public BackChunk selectBackChunkById(Long id);

    /**
     * Lists chunk records matching the given criteria.
     *
     * @param backChunk query criteria
     * @return matching chunk records
     */
    public List<BackChunk> selectBackChunkList(BackChunk backChunk);

    /**
     * Inserts a chunk record.
     *
     * @param backChunk chunk record
     * @return number of affected rows
     */
    public int insertBackChunk(BackChunk backChunk);

    /**
     * Updates a chunk record.
     *
     * @param backChunk chunk record
     * @return number of affected rows
     */
    public int updateBackChunk(BackChunk backChunk);

    /**
     * Deletes chunk records in batch.
     *
     * @param ids IDs of the chunk records to delete
     * @return number of affected rows
     */
    public int deleteBackChunkByIds(Long[] ids);

    /**
     * Deletes a chunk record by ID.
     *
     * @param id chunk record ID
     * @return number of affected rows
     */
    public int deleteBackChunkById(Long id);
}

BackFileServiceImpl.java

package com.wolfe.pointupload.service.impl;

import com.wolfe.pointupload.common.CommonConstant;
import com.wolfe.pointupload.domain.BackChunk;
import com.wolfe.pointupload.domain.BackFileList;
import com.wolfe.pointupload.domain.CheckChunkVO;
import com.wolfe.pointupload.mapper.BackChunkMapper;
import com.wolfe.pointupload.mapper.BackFileListMapper;
import com.wolfe.pointupload.service.IBackFileService;
import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.stereotype.Service;
import org.springframework.transaction.annotation.Transactional;
import org.springframework.web.multipart.MultipartFile;

import javax.servlet.http.HttpServletResponse;
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardOpenOption;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

@Service
@Slf4j
public class BackFileServiceImpl implements IBackFileService {

    @Value("${config.upload-path}")
    private String uploadPath;

    private final static String folderPath = "/file";

    @Autowired
    private BackChunkMapper backChunkMapper;

    @Autowired
    private BackFileListMapper backFileListMapper;

    /**
     * Every uploaded chunk carries the following information:
     * chunkNumber: position of the current chunk; the first chunk is 1, not 0.
     * totalChunks: total number of chunks the file is split into.
     * chunkSize: configured chunk size; together with totalSize it gives the number of chunks.
     *            Note that the last chunk may be larger than this value.
     * currentChunkSize: actual size of the current chunk.
     * totalSize: total file size.
     * identifier: unique identifier of the file (its MD5).
     * filename: file name.
     * relativePath: relative path of the file when a folder is uploaded.
     * A chunk may be uploaded more than once. This is not the normal case, but it can happen
     * during real uploads, and re-sending chunks is one of the features of the library.
     * <p>
     * Success or failure is determined by the response code:
     * 200 file upload complete
     * 201 chunk uploaded successfully
     * 500 upload of the first chunk failed; cancel the whole file upload
     * 507 server error; automatically retry uploading this chunk
     */
    @Override
    @Transactional(rollbackFor = Exception.class)
    public int postFileUpload(BackChunk chunk, HttpServletResponse response) {
        int result = CommonConstant.UPDATE_FAIL;
        MultipartFile file = chunk.getFile();
        log.debug("file originName: {}, chunkNumber: {}", file.getOriginalFilename(), chunk.getChunkNumber());
        Path path = Paths.get(generatePath(uploadPath + folderPath, chunk));
        try {
            Files.write(path, chunk.getFile().getBytes());
            log.debug("File {} written successfully, md5: {}", chunk.getFilename(), chunk.getIdentifier());
            // Record the chunk in the database
            result = backChunkMapper.insertBackChunk(chunk);
        } catch (IOException e) {
            log.error(e.getMessage(), e);
            // 507 asks the client to retry this chunk
            response.setStatus(507);
            return CommonConstant.UPDATE_FAIL;
        }
        return result;
    }

    @Override
    public CheckChunkVO getFileUpload(BackChunk chunk, HttpServletResponse response) {
        CheckChunkVO vo = new CheckChunkVO();
        // If the file already exists in the file list, return skipUpload = true (instant upload)
        BackFileList backFileList = new BackFileList();
        backFileList.setIdentifier(chunk.getIdentifier());
        List<BackFileList> BackFileLists = backFileListMapper.selectBackFileListList(backFileList);
        if (BackFileLists != null && !BackFileLists.isEmpty()) {
            response.setStatus(HttpServletResponse.SC_CREATED);
            vo.setSkipUpload(true);
            return vo;
        }
        BackChunk resultChunk = new BackChunk();
        resultChunk.setIdentifier(chunk.getIdentifier());
        List<BackChunk> backChunks = backChunkMapper.selectBackChunkList(resultChunk);
        // Return the chunk numbers that already exist; the front end skips those chunks
        if (backChunks != null && !backChunks.isEmpty()) {
            List<Integer> collect = backChunks.stream().map(BackChunk::getChunkNumber).collect(Collectors.toList());
            vo.setUploaded(collect);
        }
        return vo;
    }

    @Override
    public int deleteBackFileByIds(Long id) {
        return 0;
    }

    @Override
    @Transactional(rollbackFor = Exception.class)
    public int mergeFile(BackFileList fileInfo) {
        String filename = fileInfo.getFilename();
        String file = uploadPath + folderPath + "/" + fileInfo.getIdentifier() + "/" + filename;
        String folder = uploadPath + folderPath + "/" + fileInfo.getIdentifier();
        String url = folderPath + "/" + fileInfo.getIdentifier() + "/" + filename;
        merge(file, folder, filename);
        // If the file is already recorded in the database, return the "exists" marker
        if (backFileListMapper.selectSingleBackFileList(fileInfo) > 0) {
            return CommonConstant.UPDATE_EXISTS;
        }
        fileInfo.setLocation(file);
        fileInfo.setUrl(url);
        int i = backFileListMapper.insertBackFileList(fileInfo);
        if (i > 0) {
            // Once the file record is inserted, delete the matching chunk records to free space
            BackChunk backChunk = new BackChunk();
            backChunk.setIdentifier(fileInfo.getIdentifier());
            backChunk.setFilename(fileInfo.getFilename());
            backChunkMapper.deleteBackChunkByIdentifier(backChunk);
        }
        return i;
    }

    /**
     * Builds the path where a chunk file is stored.
     */
    private String generatePath(String uploadFolder, BackChunk chunk) {
        StringBuilder sb = new StringBuilder();
        // uploadFolder/md5
        sb.append(uploadFolder).append("/").append(chunk.getIdentifier());
        // Create uploadFolder/identifier if it does not exist yet
        if (!Files.isWritable(Paths.get(sb.toString()))) {
            log.info("path not exist,create path: {}", sb.toString());
            try {
                Files.createDirectories(Paths.get(sb.toString()));
            } catch (IOException e) {
                log.error(e.getMessage(), e);
            }
        }
        // uploadFolder/md5/filename-1
        return sb.append("/").append(chunk.getFilename()).append("-").append(chunk.getChunkNumber()).toString();
    }

    /**
     * Merges the chunk files into the target file.
     *
     * @param targetFile path of the file to create
     * @param folder     folder that contains the chunk files
     * @param filename   name of the merged file
     */
    public static void merge(String targetFile, String folder, String filename) {
        try {
            Files.createFile(Paths.get(targetFile));
            // Files.list holds an open directory handle, so close the stream when done
            try (Stream<Path> chunks = Files.list(Paths.get(folder))) {
                chunks.filter(path -> !path.getFileName().toString().equals(filename))
                        // Sort ascending by the chunk number that follows the last "-"
                        .sorted((o1, o2) -> {
                            String p1 = o1.getFileName().toString();
                            String p2 = o2.getFileName().toString();
                            int n1 = Integer.parseInt(p1.substring(p1.lastIndexOf("-") + 1));
                            int n2 = Integer.parseInt(p2.substring(p2.lastIndexOf("-") + 1));
                            return Integer.compare(n1, n2);
                        })
                        .forEach(path -> {
                            try {
                                // Append the chunk to the target file
                                Files.write(Paths.get(targetFile), Files.readAllBytes(path), StandardOpenOption.APPEND);
                                // Delete the chunk after it has been merged
                                Files.delete(path);
                            } catch (IOException e) {
                                log.error(e.getMessage(), e);
                            }
                        });
            }
        } catch (IOException e) {
            log.error(e.getMessage(), e);
        }
    }
}
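The merge step depends on reading chunk files in numeric order of the suffix after the last hyphen. The sketch below shows the same ordering idea in JavaScript, purely as an illustration of why a plain string sort is not enough; `chunkNumberOf` and `sortChunkNames` are hypothetical names, and the file names are made up.

```javascript
// Hypothetical helpers illustrating the ordering used when merging chunks.
// Chunk files are named "<filename>-<chunkNumber>", as built by generatePath.
function chunkNumberOf(name) {
  // Take everything after the LAST hyphen, so hyphens in the file name are fine.
  return parseInt(name.slice(name.lastIndexOf("-") + 1), 10);
}

function sortChunkNames(names) {
  // Copy first so the caller's array is left untouched, then sort numerically.
  return names.slice().sort((a, b) => chunkNumberOf(a) - chunkNumberOf(b));
}

const names = ["my-video.mp4-10", "my-video.mp4-2", "my-video.mp4-1"];
console.log(sortChunkNames(names)); // numeric order: 1, 2, 10
console.log(names.slice().sort());  // string order would put 10 before 2
```

With ten or more chunks, a lexicographic sort would append chunk 10 before chunk 2 and corrupt the merged file, which is why the comparator parses the number.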

BackChunkServiceImpl.java

package com.wolfe.pointupload.service.impl;

import com.wolfe.pointupload.domain.BackChunk;
import com.wolfe.pointupload.mapper.BackChunkMapper;
import com.wolfe.pointupload.service.IBackChunkService;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

import java.util.List;

/**
 * Service implementation for file chunk records.
 */
@Service
public class BackChunkServiceImpl implements IBackChunkService {

    @Autowired
    private BackChunkMapper backChunkMapper;

    /**
     * Finds a chunk record by ID.
     *
     * @param id chunk record ID
     * @return the chunk record
     */
    @Override
    public BackChunk selectBackChunkById(Long id) {
        return backChunkMapper.selectBackChunkById(id);
    }

    /**
     * Lists chunk records matching the given criteria.
     *
     * @param backChunk query criteria
     * @return matching chunk records
     */
    @Override
    public List<BackChunk> selectBackChunkList(BackChunk backChunk) {
        return backChunkMapper.selectBackChunkList(backChunk);
    }

    /**
     * Inserts a chunk record.
     *
     * @param backChunk chunk record
     * @return number of affected rows
     */
    @Override
    public int insertBackChunk(BackChunk backChunk) {
        return backChunkMapper.insertBackChunk(backChunk);
    }

    /**
     * Updates a chunk record.
     *
     * @param backChunk chunk record
     * @return number of affected rows
     */
    @Override
    public int updateBackChunk(BackChunk backChunk) {
        return backChunkMapper.updateBackChunk(backChunk);
    }

    /**
     * Deletes chunk records in batch.
     *
     * @param ids IDs of the chunk records to delete
     * @return number of affected rows
     */
    @Override
    public int deleteBackChunkByIds(Long[] ids) {
        return backChunkMapper.deleteBackChunkByIds(ids);
    }

    /**
     * Deletes a chunk record by ID.
     *
     * @param id chunk record ID
     * @return number of affected rows
     */
    @Override
    public int deleteBackChunkById(Long id) {
        return backChunkMapper.deleteBackChunkById(id);
    }
}

FileController.java

package com.wolfe.pointupload.controller;

import com.wolfe.pointupload.common.AjaxResult;
import com.wolfe.pointupload.common.CommonConstant;
import com.wolfe.pointupload.domain.BackChunk;
import com.wolfe.pointupload.domain.BackFileList;
import com.wolfe.pointupload.domain.CheckChunkVO;
import com.wolfe.pointupload.service.IBackFileService;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.*;

import javax.servlet.http.HttpServletResponse;

@RestController
@RequestMapping("/file")
public class FileController {

    @Autowired
    private IBackFileService backFileService;

    @GetMapping("/test")
    public String test() {
        return "Hello Wolfe.";
    }

    /**
     * Uploads a chunk.
     */
    @PostMapping("/upload")
    public AjaxResult postFileUpload(@ModelAttribute BackChunk chunk, HttpServletResponse response) {
        int i = backFileService.postFileUpload(chunk, response);
        return AjaxResult.success(i);
    }

    /**
     * Checks the upload status of a file.
     */
    @GetMapping("/upload")
    public CheckChunkVO getFileUpload(@ModelAttribute BackChunk chunk, HttpServletResponse response) {
        // Look up by MD5 whether the file (or some of its chunks) already exists
        CheckChunkVO fileUpload = backFileService.getFileUpload(chunk, response);
        return fileUpload;
    }

    /**
     * Deletes a file.
     */
    @DeleteMapping("/{id}")
    public AjaxResult remove(@PathVariable("id") Long id) {
        return AjaxResult.success(backFileService.deleteBackFileByIds(id));
    }

    /**
     * Merges the uploaded chunks into the final file.
     */
    @PostMapping("/merge")
    public AjaxResult merge(@RequestBody BackFileList backFileList) {
        int i = backFileService.mergeFile(backFileList);
        if (i == CommonConstant.UPDATE_EXISTS.intValue()) {
            // Handles the case where the connection dropped during a merge
            // and the client cannot request the merge again
            return new AjaxResult(200, "Already merged, no need to submit again");
        }
        return AjaxResult.success(i);
    }
}
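The upload endpoint reports its outcome through the HTTP status code (the service sets 507 when a chunk cannot be written), and the client reacts differently to each class of status. The following sketch shows one way to express that decision. The default status lists are an assumption based on my recollection of the uploader's documented defaults, and `classifyUploadStatus` is a hypothetical function, so check the library documentation before relying on the exact values.

```javascript
// Hypothetical helper: maps an HTTP status from POST /file/upload to the action
// the client takes. The default lists below are assumptions, not verified values.
function classifyUploadStatus(
  status,
  successStatuses = [200, 201, 202],      // assumed: chunk accepted
  permanentErrors = [404, 415, 500, 501]  // assumed: give up on the whole file
) {
  if (successStatuses.indexOf(status) >= 0) {
    return "success";
  }
  if (permanentErrors.indexOf(status) >= 0) {
    return "abort";
  }
  // Anything else, such as the 507 set by the service, is retried
  // (up to maxChunkRetries times in the front-end configuration).
  return "retry";
}

console.log(classifyUploadStatus(201), classifyUploadStatus(500), classifyUploadStatus(507));
```

Choosing 507 for write failures on the server therefore gives the client a chance to resend just the failed chunk instead of cancelling the upload.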

Startup class PointUploadApplication.java: add Mapper scanning

@MapperScan("com.wolfe.**.mapper")

package com.wolfe.pointupload;

import org.mybatis.spring.annotation.MapperScan;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

@MapperScan("com.wolfe.**.mapper")
@SpringBootApplication
public class PointUploadApplication {

    public static void main(String[] args) {
        SpringApplication.run(PointUploadApplication.class, args);
    }
}

3. Front end

Initialize the project

npm i -g @vue/cli

npm i -g @vue/cli-init

vue init webpack point-upload-f

Install dependencies

cd point-upload-f

npm install vue-simple-uploader --save

npm i spark-md5 --save

npm i jquery --save

npm i axios --save

Create Upload.vue

<template>
  <div>
    Status:
    <div id="status"></div>
    <uploader
      ref="uploader"
      :options="options"
      :autoStart="true"
      @file-added="onFileAdded"
      @file-success="onFileSuccess"
      @file-progress="onFileProgress"
      @file-error="onFileError">
    </uploader>
  </div>
</template>

<script>
import SparkMD5 from 'spark-md5';
import axios from 'axios';
import $ from 'jquery'

export default {
  name: 'Upload',
  data() {
    return {
      options: {
        target: 'http://127.0.0.1:8081/file/upload',
        chunkSize: 5 * 1024 * 1000,
        fileParameterName: 'file',
        maxChunkRetries: 2,
        testChunks: true, // enable the server-side chunk check
        checkChunkUploadedByResponse: function (chunk, message) {
          // Server-side chunk check; the basis for instant upload and resumable upload
          let objMessage = JSON.parse(message);
          if (objMessage.skipUpload) {
            return true;
          }
          return (objMessage.uploaded || []).indexOf(chunk.offset + 1) >= 0
        },
        headers: {
          Authorization: ''
        },
        query() {}
      }
    }
  },
  computed: {
    // Uploader instance
    uploader() {
      return this.$refs.uploader.uploader;
    }
  },
  methods: {
    onFileAdded(file) {
      console.log("... onFileAdded")
      this.computeMD5(file);
    },
    onFileProgress(rootFile, file, chunk) {
      console.log("... onFileProgress")
    },
    onFileSuccess(rootFile, file, response, chunk) {
      let res = JSON.parse(response);
      // If the server says a merge is needed
      if (res.needMerge) {
        // Set the file status to "merging"
        this.statusSet(file.id, 'merging');
        let param = {
          'filename': rootFile.name,
          'identifier': rootFile.uniqueIdentifier,
          'totalSize': rootFile.size
        }
        axios({
          method: 'post',
          url: "http://127.0.0.1:8081/file/merge",
          data: param
        }).then(res => {
          this.statusRemove(file.id);
        }).catch(e => {
          console.log("Merge failed, sending the request again; file name:", file.name)
          file.retry();
        });
      }
    },
    onFileError(rootFile, file, response, chunk) {
      console.log("... onFileError")
    },
    computeMD5(file) {
      let fileReader = new FileReader();
      let time = new Date().getTime();
      let blobSlice = File.prototype.slice || File.prototype.mozSlice || File.prototype.webkitSlice;
      let currentChunk = 0;
      const chunkSize = 10 * 1024 * 1000;
      let chunks = Math.ceil(file.size / chunkSize);
      let spark = new SparkMD5.ArrayBuffer();
      // Set the file status to "computing MD5"
      this.statusSet(file.id, 'md5');
      file.pause();

      // Register the handlers before the first read is started
      fileReader.onload = (e => {
        spark.append(e.target.result);
        // Count the slice that was just read, then either read the next one or finish
        currentChunk++;
        if (currentChunk < chunks) {
          loadNext();
          // Show the MD5 progress as it is computed
          this.$nextTick(() => {
            $(`.myStatus_${file.id}`).text('Checking MD5 ' + ((currentChunk / chunks) * 100).toFixed(0) + '%')
          })
        } else {
          let md5 = spark.end();
          this.computeMD5Success(md5, file);
          console.log(`MD5 computed: ${file.name} \nMD5: ${md5} \nSlices: ${chunks} Size: ${file.size} Time: ${new Date().getTime() - time} ms`);
        }
      });
      fileReader.onerror = () => {
        console.error(`Failed to read file ${file.name}, please check the file`)
        file.cancel();
      };
      loadNext();

      function loadNext() {
        let start = currentChunk * chunkSize;
        let end = ((start + chunkSize) >= file.size) ? file.size : start + chunkSize;
        fileReader.readAsArrayBuffer(blobSlice.call(file.file, start, end));
      }
    },
    statusSet(id, status) {
      let statusMap = {
        md5: {
          text: 'Checking MD5',
          bgc: '#fff'
        },
        merging: {
          text: 'Merging',
          bgc: '#e2eeff'
        },
        transcoding: {
          text: 'Transcoding',
          bgc: '#e2eeff'
        },
        failed: {
          text: 'Upload failed',
          bgc: '#e2eeff'
        }
      }
      console.log(".....", status, "...:", statusMap[status].text)
      this.$nextTick(() => {
        // $(`<p class="myStatus_${id}"></p>`).appendTo(`.file_${id} .uploader-file-status`).css({
        $(`<p class="myStatus_${id}"></p>`).appendTo(`#status`).css({
          'position': 'absolute',
          'top': '0',
          'left': '0',
          'right': '0',
          'bottom': '0',
          'zIndex': '1',
          'line-height': 'initial',
          'backgroundColor': statusMap[status].bgc
        }).text(statusMap[status].text);
      })
    },
    computeMD5Success(md5, file) {
      Object.assign(this.uploader.opts, {
        query: {
          ...this.params,
        }
      })
      // Use the MD5 as the file's unique identifier, then start the upload
      file.uniqueIdentifier = md5;
      file.resume();
      this.statusRemove(file.id);
    },
    statusRemove(id) {
      this.$nextTick(() => {
        $(`.myStatus_${id}`).remove();
      })
    },
  }
}
</script>

<style scoped></style>
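The MD5 in computeMD5 is built incrementally by reading the file one slice at a time, so the whole file never has to sit in memory at once. As a small illustration of the slicing arithmetic only (no file reading or hashing), the sketch below lists the byte ranges such a loop would read; `sliceRanges` is a hypothetical helper, not part of the component.

```javascript
// Hypothetical helper: returns the [start, end) byte ranges that an incremental
// reader would pass to Blob.slice for a file of fileSize bytes.
function sliceRanges(fileSize, sliceSize) {
  const count = Math.ceil(fileSize / sliceSize);
  const ranges = [];
  for (let i = 0; i < count; i++) {
    const start = i * sliceSize;
    // The last slice is clamped to the end of the file.
    const end = Math.min(start + sliceSize, fileSize);
    ranges.push([start, end]);
  }
  return ranges;
}

// A 25-byte file read 10 bytes at a time needs three reads.
console.log(sliceRanges(25, 10));
```

Note that these slices are independent of the upload chunks: the component hashes with a 10 * 1024 * 1000 byte slice while uploading with a 5 * 1024 * 1000 byte chunk, and the resulting MD5 is the same either way because the bytes are appended in order.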

Modify App.vue

<template>
  <div id="app">
    <router-view/>
  </div>
</template>

<script>
export default {
  name: 'App'
}
</script>

<style>
body {
  margin: 0;
  padding: 0;
}

#app {
  font-family: 'Avenir', Helvetica, Arial, sans-serif;
  -webkit-font-smoothing: antialiased;
  -moz-osx-font-smoothing: grayscale;
  text-align: center;
  color: #2c3e50;
  margin: 0;
  padding: 0;
}
</style>

Modify the routes in router/index.js

import Vue from 'vue'
import Router from 'vue-router'
import HelloWorld from '@/components/HelloWorld'
import Upload from '@/view/Upload'

Vue.use(Router)

export default new Router({
  routes: [
    {
      path: '/',
      name: 'HelloWorld',
      component: HelloWorld
    },
    {
      path: '/upload',
      // Route names must be unique; the original reused 'HelloWorld' here
      name: 'Upload',
      component: Upload
    }
  ]
})

main.js also needs a change: load the vue-simple-uploader plugin so that its components are registered globally

// The Vue build version to load with the `import` command
// (runtime-only or standalone) has been set in webpack.base.conf with an alias.
import Vue from 'vue'
import App from './App'
import router from './router'
import uploader from 'vue-simple-uploader'

Vue.config.productionTip = false

// Register the uploader components globally
Vue.use(uploader)

/* eslint-disable no-new */
new Vue({
  el: '#app',
  router,
  components: { App },
  template: '<App/>'
})

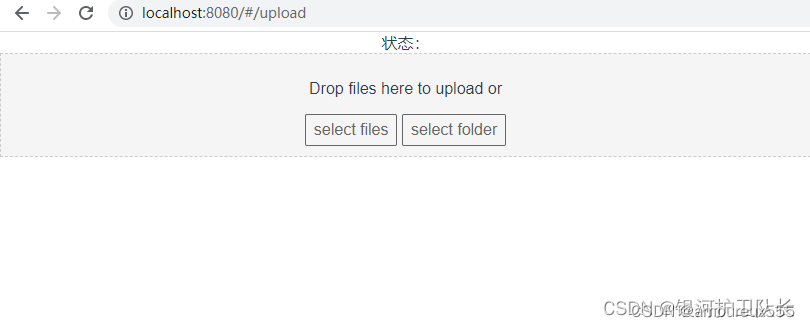

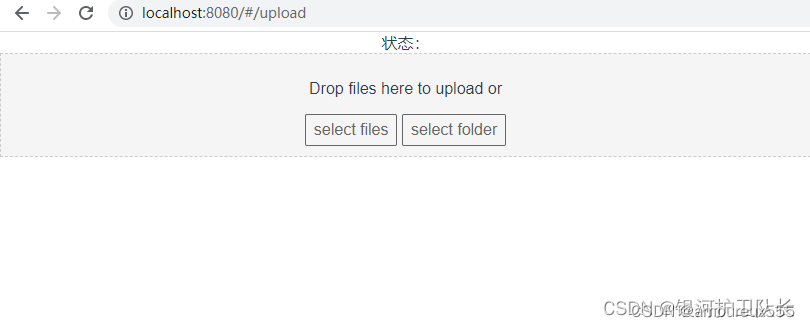

Access test

4. Database

Prepare the database: log in to MySQL (enter the root password when prompted), then create the database and the two tables.

mysql -u root -p

CREATE DATABASE IF NOT EXISTS pointupload
  DEFAULT CHARACTER SET utf8
  DEFAULT COLLATE utf8_general_ci;

use pointupload;

-- File information table
CREATE TABLE `t_file_list` (
  `id` bigint NOT NULL AUTO_INCREMENT COMMENT 'primary key ID',
  `filename` varchar(64) COMMENT 'file name',
  `identifier` varchar(64) COMMENT 'unique identifier, MD5',
  `url` varchar(128) COMMENT 'link',
  `location` varchar(128) COMMENT 'local path',
  `total_size` bigint COMMENT 'total file size',
  PRIMARY KEY (`id`) USING BTREE,
  UNIQUE KEY `FILE_UNIQUE_KEY` (`filename`,`identifier`) USING BTREE
) ENGINE=InnoDB;

-- Uploaded chunk information table
CREATE TABLE `t_chunk_info` (
  `id` bigint NOT NULL AUTO_INCREMENT COMMENT 'primary key ID',
  `chunk_number` int COMMENT 'chunk number',
  `chunk_size` bigint COMMENT 'chunk size',
  `current_chunk_size` bigint COMMENT 'size of the current chunk',
  `filename` varchar(255) COMMENT 'file name',
  `identifier` varchar(255) COMMENT 'file identifier, MD5',
  `relative_path` varchar(255) COMMENT 'relative path',
  `total_chunks` int COMMENT 'total number of chunks',
  `total_size` bigint COMMENT 'total size',
  PRIMARY KEY (`id`) USING BTREE
) ENGINE=InnoDB;
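To see how `t_chunk_info` supports resuming an upload, here is a small sketch of the bookkeeping it makes possible. Everything here is illustrative: `missingChunks` is a hypothetical helper, the `rows` array only mimics records read from the table, and chunk numbers are assumed to start at 1. Given the rows stored for one `identifier`, it works out which chunks still have to be sent.

```javascript
// Hypothetical helper: `rows` mimics records read from t_chunk_info.
// Assumption: chunk_number values start at 1 and run up to total_chunks.
function missingChunks(rows, identifier) {
  const mine = rows.filter((row) => row.identifier === identifier);
  if (mine.length === 0) {
    return null; // nothing stored yet, so the total is unknown
  }
  const total = mine[0].total_chunks;
  const done = new Set(mine.map((row) => row.chunk_number));
  const missing = [];
  for (let n = 1; n <= total; n++) {
    if (!done.has(n)) {
      missing.push(n);
    }
  }
  return missing;
}

// Example: chunks 1 and 3 of 4 were stored before the upload was interrupted.
const rows = [
  { identifier: "abc", chunk_number: 1, total_chunks: 4 },
  { identifier: "abc", chunk_number: 3, total_chunks: 4 },
  { identifier: "other", chunk_number: 1, total_chunks: 1 },
];
console.log(missingChunks(rows, "abc")); // [ 2, 4 ]
```

An empty result means every chunk has arrived, at which point the file can be merged and recorded in `t_file_list`.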

That is all of the code.

Time to clock off~

相关文章:

前端 基于 vue-simple-uploader 实现大文件断点续传和分片上传

文章目录一、前言二、后端部分新建Maven 项目后端pom.xml配置文件 application.ymlHttpStatus.javaAjaxResult.javaCommonConstant.javaWebConfig.javaCheckChunkVO.javaBackChunk.javaBackFileList.javaBackChunkMapper.javaBackFileListMapper.javaBackFileListMapper.xmlBac…...

解决报错: ERR! code 128npm ERR! An unknown git error occurred

在github下载的项目运行时,进行npm install安装依赖时,出现如下错误:npm ERR! code 128npm ERR! An unknown git error occurrednpm ERR! command git --no-replace-objects ls-remote ssh://gitgithub.com/nhn/raphael.gitnpm ERR! gitgithu…...

聊城高新技术企业认定7项需要注意的问题 山东同邦科技分享

聊城高新技术企业认定7项需要注意的问题 山东同邦科技分享 山东省高新技术企业认定办公室发布《关于开展2021年度本市高新技术企业认定管理工作的通知》,高企认定中有哪些问题需要注意呢?下面我们一起来看一下。 一、知识产权 知识产权数量和质量双达…...

菊乐食品更新IPO招股书:收入依赖单一地区,规模不及认养一头牛

近日,四川菊乐食品股份有限公司(下称“菊乐食品”)预披露更新招股书,准备在深圳证券交易所主板上市,保荐机构为中信建投证券。据贝多财经了解,这已经是菊乐食品第四次冲刺A股上市,此前三次均未能…...

Elasticsearch安装IK分词器、配置自定义分词词库

一、分词简介 在Elasticsearch中,假设搜索条件是“华为手机平板电脑”,要求是只要满足了其中任意一个词语组合的数据都要查询出来。借助 Elasticseach 的文本分析功能可以轻松将搜索条件进行分词处理,再结合倒排索引实现快速检索。Elasticse…...

Linux嵌入式开发——shell脚本

文章目录Linux嵌入式开发——shell脚本一、shell脚本基本原则二、shell脚本语法2.1、编写shell脚本2.2、交互式shell脚本2.3、shell脚本的数值计算2.4、test命令&&运算符||运算符2.5、中括号[]判断符2.6、默认变量三、shell脚本条件判断if thenif then elsecase四、she…...

CV【5】:Layer normalization

系列文章目录 Normalization 系列方法(一):CV【4】:Batch normalization Normalization 系列方法(二):CV【5】:Layer normalization 文章目录系列文章目录前言2. Layer normalizati…...

跳跃游戏 II 解析

题目描述给定一个长度为 n 的 0 索引整数数组 nums。初始位置为 nums[0]。每个元素 nums[i] 表示从索引 i 向前跳转的最大长度。换句话说,如果你在 nums[i] 处,你可以跳转到任意 nums[i j] 处:0 < j < nums[i] i j < n返回到达 nums[n - 1] 的…...

易基因|猪肠道组织的表观基因组功能注释增强对复杂性状和人类疾病的生物学解释:Nature子刊

大家好,这里是专注表观组学十余年,领跑多组学科研服务的易基因。2021年10月6日,《Nat Commun》杂志发表了题为“Pig genome functional annotation enhances the biological interpretation of complex traits and human disease”的研究论文…...

)

01- NumPy 数据库 (机器学习)

numpy 数据库重点: numpy的主要数据格式: ndarray 列表转化为ndarray格式: np.array() np.save(x_arr, x) # 使用save可以存一个 ndarray np.savetxt(arr.csv, arr, delimiter ,) # 存储为 txt 文件 np.array([1, 2, 5, 8, 19], dtype float32) # 转换…...

RapperBot僵尸网络最新进化:删除恶意软件后仍能访问主机

自 2022 年 6 月中旬以来,研究人员一直在跟踪一个快速发展的 IoT 僵尸网络 RapperBot。该僵尸网络大量借鉴了 Mirai 的源代码,新的样本增加了持久化的功能,保证即使在设备重新启动或者删除恶意软件后,攻击者仍然可以通过 SSH 继续…...

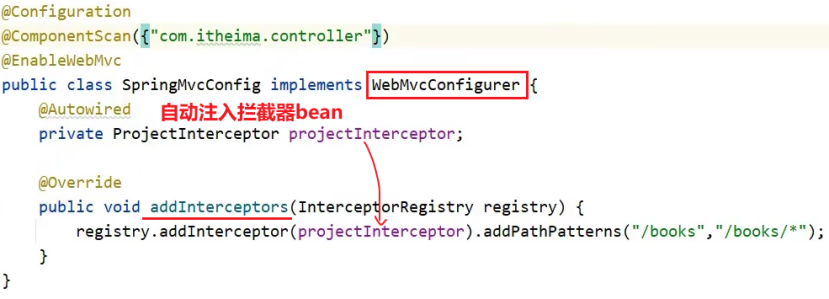

拦截器interceptor总结

拦截器一. 概念拦截器和AOP的区别:拦截器和过滤器的区别:二. 入门案例2.1 定义拦截器bean2.2 定义配置类2.3 执行流程2.4 简化配置类到SpringMvcConfig中一. 概念 引入: 消息从浏览器发送到后端,请求会先到达Tocmat服务器&#x…...

轻松实现微信小程序上传多文件/图片到腾讯云对象存储COS(免费额度)

概述 对象存储(Cloud Object Storage,COS)是腾讯云提供的一种存储海量文件的分布式存储服务,用户可通过网络随时存储和查看数据。个人账户首次开通COS可以免费领取50GB 标准存储容量包6个月(180天)的额度。…...

)

Golang中defer和return的执行顺序 + 相关测试题(面试常考)

参考文章: 【Golang】defer陷阱和执行原理 GO语言defer和return 的执行顺序 深入理解Golang defer机制,直通面试 面试富途的时候,遇到了1.2的这个进阶问题,没回答出来。这种题简直是 噩梦\color{purple}{噩梦}噩梦,…...

谁说菜鸟不会数据分析,不用Python,不用代码也轻松搞定

作为一个菜鸟,你可能觉得数据分析就是做表格的,或者觉得搞个报表很简单。实际上,当前有规模的公司任何一个岗位如果没有数据分析的思维和能力,都会被淘汰,数据驱动分析是解决日常问题的重点方式。很多时候,…...

php mysql保健品购物商城系统

目 录 1 绪论 1 1.1 开发背景 1 1.2 研究的目的和意义 1 1.3 研究现状 2 2 开发技术介绍 2 2.1 B/S体系结构 2 2.2 PHP技术 3 2.3 MYSQL数据库 4 2.4 Apache 服务器 5 2.5 WAMP 5 2.6 系统对软硬件要求 6 …...

Vue3电商项目实战-首页模块6【22-首页主体-补充-vue动画、23-首页主体-面板骨架效果、4-首页主体-组件数据懒加载、25-首页主体-热门品牌】

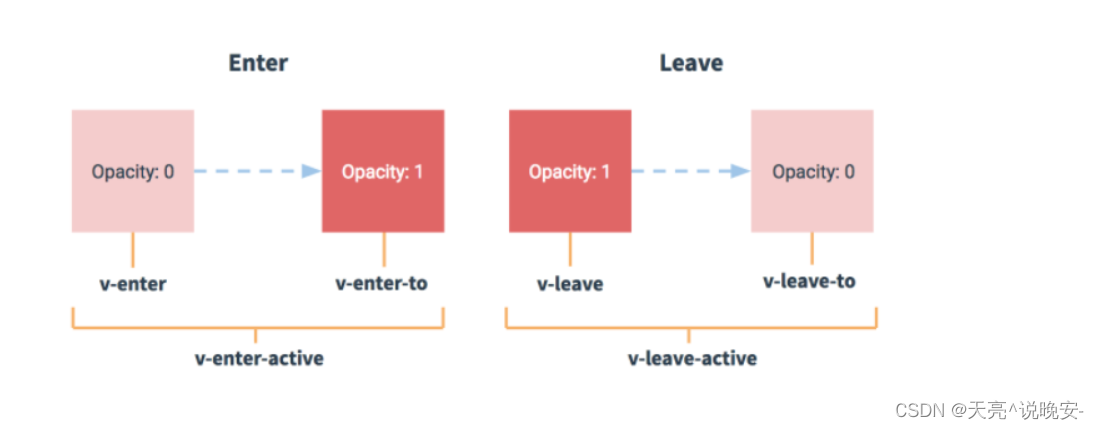

文章目录22-首页主体-补充-vue动画23-首页主体-面板骨架效果24-首页主体-组件数据懒加载25-首页主体-热门品牌22-首页主体-补充-vue动画 目标: 知道vue中如何使用动画,知道Transition组件使用。 当vue中,显示隐藏,创建移除&#x…...

linux 使用

一、操作系统命令 1、版本命令:lsb_release -a 2、内核命令:cat /proc/version 二、debian与CentOS区别 debian德班和CentOS是Linux里两个著名的版本。两者的包管理方式不同。 debian安装软件是用apt(apt-get install),而CentOS是用yum de…...

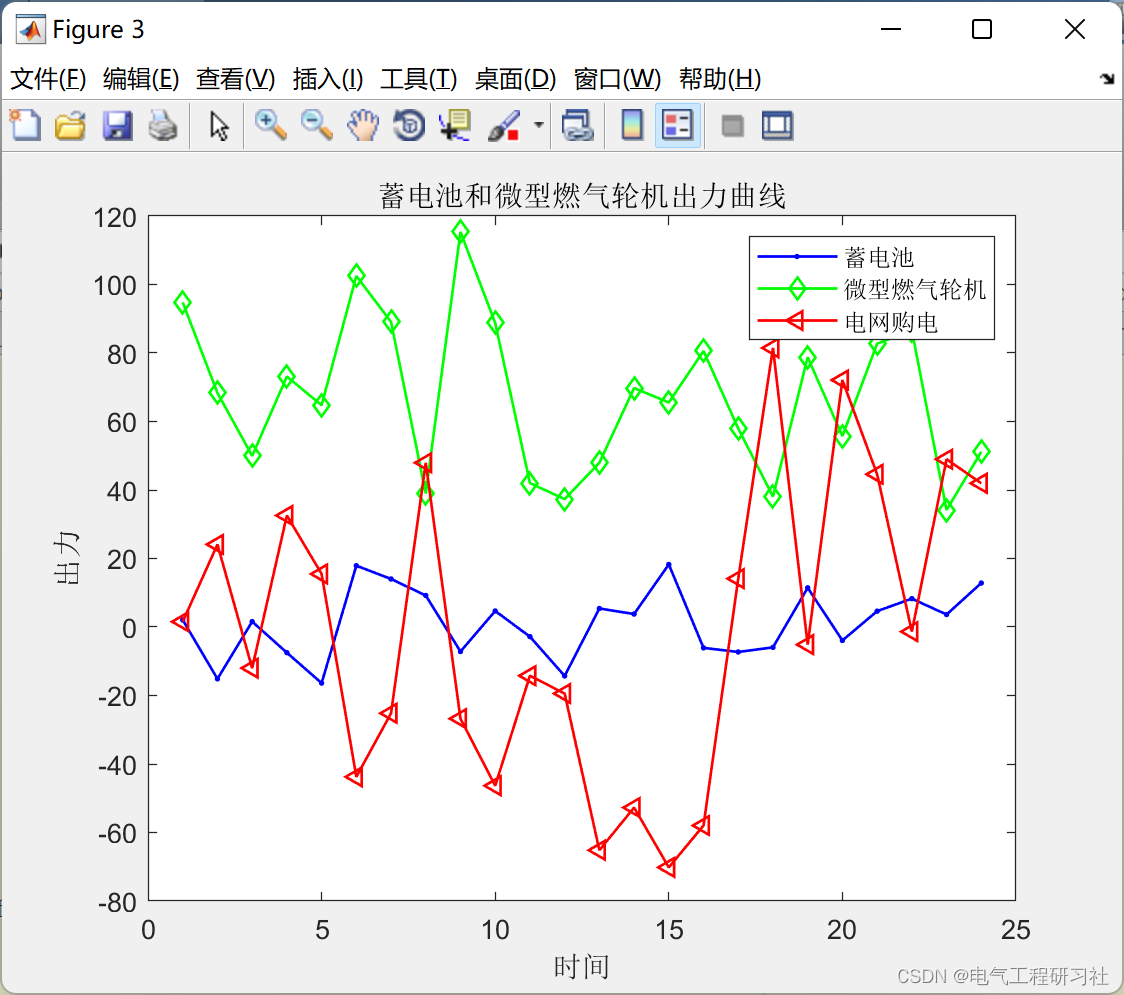

基于遗传算法的微电网调度(风、光、蓄电池、微型燃气轮机)(Matlab代码实现)

💥💥💥💞💞💞欢迎来到本博客❤️❤️❤️💥💥💥🏆博主优势:🌞🌞🌞博客内容尽量做到思维缜密,逻辑清…...

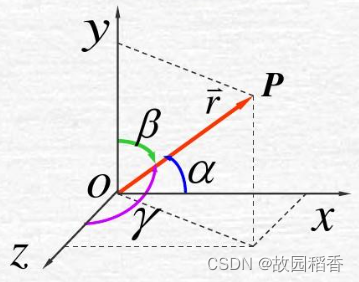

方向导数与梯度下降

文章目录方向角与方向余弦方向角方向余弦方向导数定义性质梯度下降梯度下降法(Gradient descent)是一个一阶最优化算法,通常也称为最速下降法。 要使用梯度下降法找到一个函数的局部极小值,必须向函数上当前点对应梯度(…...

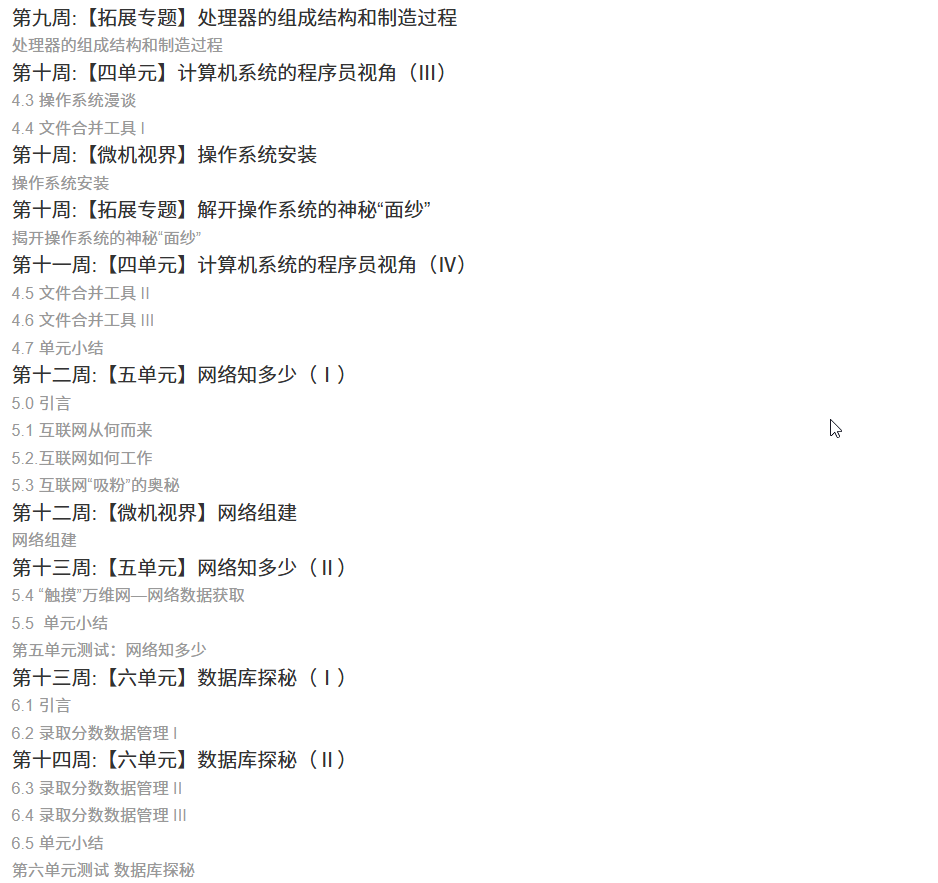

国防科技大学计算机基础课程笔记02信息编码

1.机内码和国标码 国标码就是我们非常熟悉的这个GB2312,但是因为都是16进制,因此这个了16进制的数据既可以翻译成为这个机器码,也可以翻译成为这个国标码,所以这个时候很容易会出现这个歧义的情况; 因此,我们的这个国…...

mongodb源码分析session执行handleRequest命令find过程

mongo/transport/service_state_machine.cpp已经分析startSession创建ASIOSession过程,并且验证connection是否超过限制ASIOSession和connection是循环接受客户端命令,把数据流转换成Message,状态转变流程是:State::Created 》 St…...

Docker 运行 Kafka 带 SASL 认证教程

Docker 运行 Kafka 带 SASL 认证教程 Docker 运行 Kafka 带 SASL 认证教程一、说明二、环境准备三、编写 Docker Compose 和 jaas文件docker-compose.yml代码说明:server_jaas.conf 四、启动服务五、验证服务六、连接kafka服务七、总结 Docker 运行 Kafka 带 SASL 认…...

连锁超市冷库节能解决方案:如何实现超市降本增效

在连锁超市冷库运营中,高能耗、设备损耗快、人工管理低效等问题长期困扰企业。御控冷库节能解决方案通过智能控制化霜、按需化霜、实时监控、故障诊断、自动预警、远程控制开关六大核心技术,实现年省电费15%-60%,且不改动原有装备、安装快捷、…...

使用van-uploader 的UI组件,结合vue2如何实现图片上传组件的封装

以下是基于 vant-ui(适配 Vue2 版本 )实现截图中照片上传预览、删除功能,并封装成可复用组件的完整代码,包含样式和逻辑实现,可直接在 Vue2 项目中使用: 1. 封装的图片上传组件 ImageUploader.vue <te…...

Linux云原生安全:零信任架构与机密计算

Linux云原生安全:零信任架构与机密计算 构建坚不可摧的云原生防御体系 引言:云原生安全的范式革命 随着云原生技术的普及,安全边界正在从传统的网络边界向工作负载内部转移。Gartner预测,到2025年,零信任架构将成为超…...

【决胜公务员考试】求职OMG——见面课测验1

2025最新版!!!6.8截至答题,大家注意呀! 博主码字不易点个关注吧,祝期末顺利~~ 1.单选题(2分) 下列说法错误的是:( B ) A.选调生属于公务员系统 B.公务员属于事业编 C.选调生有基层锻炼的要求 D…...

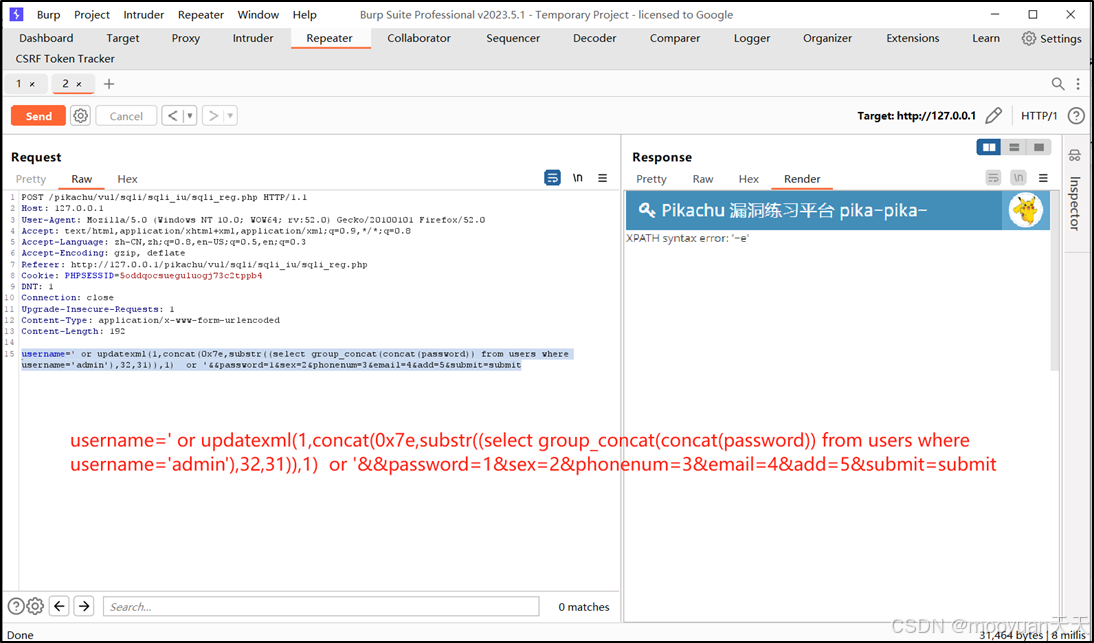

pikachu靶场通关笔记22-1 SQL注入05-1-insert注入(报错法)

目录 一、SQL注入 二、insert注入 三、报错型注入 四、updatexml函数 五、源码审计 六、insert渗透实战 1、渗透准备 2、获取数据库名database 3、获取表名table 4、获取列名column 5、获取字段 本系列为通过《pikachu靶场通关笔记》的SQL注入关卡(共10关࿰…...

【Oracle】分区表

个人主页:Guiat 归属专栏:Oracle 文章目录 1. 分区表基础概述1.1 分区表的概念与优势1.2 分区类型概览1.3 分区表的工作原理 2. 范围分区 (RANGE Partitioning)2.1 基础范围分区2.1.1 按日期范围分区2.1.2 按数值范围分区 2.2 间隔分区 (INTERVAL Partit…...

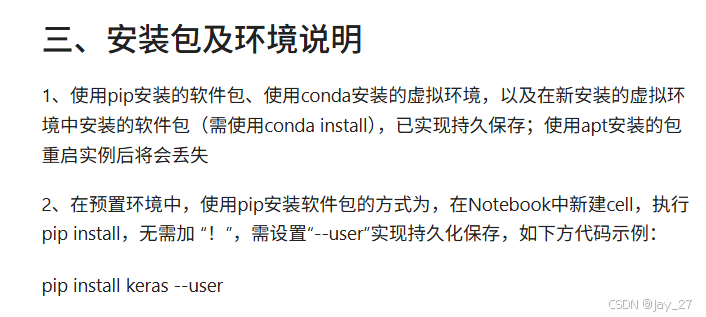

九天毕昇深度学习平台 | 如何安装库?

pip install 库名 -i https://pypi.tuna.tsinghua.edu.cn/simple --user 举个例子: 报错 ModuleNotFoundError: No module named torch 那么我需要安装 torch pip install torch -i https://pypi.tuna.tsinghua.edu.cn/simple --user pip install 库名&#x…...