Automating Kubernetes Deployment with Ansible

Deploying a Kubernetes Cluster with kubeasz

Server list:

| IP | Hostname | Role |
|---|---|---|
| 192.168.100.142 | kube-master1, kube-master1.suosuoli.cn | K8s master node 1 |
| 192.168.100.144 | kube-master2, kube-master2.suosuoli.cn | K8s master node 2 |
| 192.168.100.146 | kube-master3, kube-master3.suosuoli.cn | K8s master node 3 |
| 192.168.100.160 | node1, node1.suosuoli.cn | K8s worker node 1 |
| 192.168.100.162 | node2, node2.suosuoli.cn | K8s worker node 2 |
| 192.168.100.164 | node3, node3.suosuoli.cn | K8s worker node 3 |
| 192.168.100.166 | etcd-node1, etcd-node1.suosuoli.cn | etcd (cluster state store) |
| 192.168.100.150 | ha1, ha1.suosuoli.cn | K8s master access entry 1 (high availability and load balancing) |
| 192.168.100.152 | ha2, ha2.suosuoli.cn | K8s master access entry 2 (high availability and load balancing) |
| 192.168.100.154 | harbor, harbor.suosuoli.cn | Container image registry |
| 192.168.100.200 | none | VIP |

I. Environment preparation on every host

~# apt update

~# apt install python2.7

~# ln -sv /usr/bin/python2.7 /usr/bin/python

II. Install Ansible on the management node

2.1 Installation

root@kube-master1:~# apt install ansible

2.2 Key-based authentication between the Ansible server and the managed hosts

root@kube-master1:~# cat batch-copyid.sh
#!/bin/bash
#
# Simple script to batch-deliver the public key to a list of hosts.
# Requires sshpass (apt install sshpass) and an existing key pair (ssh-keygen).
#
IP_LIST="
192.168.100.142
192.168.100.144
192.168.100.146
192.168.100.160
192.168.100.162
192.168.100.164
192.168.100.166
"

for host in ${IP_LIST}; do
    sshpass -p stevenux ssh-copy-id -o StrictHostKeyChecking=no ${host}
    if [ $? -eq 0 ]; then
        echo "copy pubkey to ${host} done."
    else
        echo "copy pubkey to ${host} failed."
    fi
done

III. Download the files required to deploy the Kubernetes cluster

Download the binaries, Ansible playbooks and roles required to deploy the cluster. See the kubeasz documentation section on preparation before cluster deployment.

root@kube-master1:/etc/ansible# curl -C- -fLO --retry 3 https://github.com/easzlab/kubeasz/releases/download/2.2.0/easzup
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100   597  100   597    0     0    447      0  0:00:01  0:00:01 --:--:--   447
100 12965  100 12965    0     0   4553      0  0:00:02  0:00:02 --:--:-- 30942

root@kube-master1:/etc/ansible# ls

ansible.cfg  easzup  hosts
root@kube-master1:/etc/ansible# chmod +x easzup
# start the download

root@kube-master1:/etc/ansible# ./easzup -D

[INFO] Action begin : download_all

[INFO] downloading docker binaries 19.03.5
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed

100 60.3M 100 60.3M 0 0 1160k 0 0:00:53 0:00:53 --:--:-- 1111k

[INFO] generate docker service file

[INFO] generate docker config file

[INFO] prepare register mirror for CN

[INFO] enable and start docker

Synchronizing state of docker.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable docker

Failed to enable unit: Unit file /etc/systemd/system/docker.service is masked.

Failed to restart docker.service: Unit docker.service is masked.   # error: docker.service is masked

[ERROR] Action failed : download_all   # the download failed
# try to unmask it; this removes the masking symlink, so no docker.service file is left afterwards

root@kube-master1:/etc/ansible# systemctl unmask docker.service

Removed /etc/systemd/system/docker.service.
# open easzup, copy the section that generates docker.service, and write the unit file by hand

root@kube-master1:/etc/ansible# vim easzup

...
echo "[INFO] generate docker service file"
cat > /etc/systemd/system/docker.service << EOF

[Unit]

Description=Docker Application Container Engine

Documentation=http://docs.docker.io

[Service]

Environment="PATH=/opt/kube/bin:/bin:/sbin:/usr/bin:/usr/sbin"

ExecStart=/opt/kube/bin/dockerd

ExecStartPost=/sbin/iptables -I FORWARD -s 0.0.0.0/0 -j ACCEPT

ExecReload=/bin/kill -s HUP \$MAINPID

Restart=on-failure

RestartSec=5

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

...
# write the docker.service unit file

root@kube-master1:/etc/ansible# vim /etc/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=http://docs.docker.io

[Service]

Environment="PATH=/opt/kube/bin:/bin:/sbin:/usr/bin:/usr/sbin"

ExecStart=/opt/kube/bin/dockerd

ExecStartPost=/sbin/iptables -I FORWARD -s 0.0.0.0/0 -j ACCEPT

# no backslash here: "\$" is only needed inside the heredoc in easzup
ExecReload=/bin/kill -s HUP $MAINPID

Restart=on-failure

RestartSec=5

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target
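Why did `systemctl unmask` print `Removed /etc/systemd/system/docker.service`? `systemctl mask` works by placing a symlink to /dev/null at that path, and unmasking deletes the symlink, so afterwards no unit file is left there at all. The sketch below checks for exactly that condition, so you can tell whether the path holds a real unit or only a mask; `is_masked` is a hypothetical helper, not a systemd tool. `systemctl is-enabled docker` reporting `masked` is the built-in way to see the same state.

```shell
#!/bin/bash
# Sketch: succeed if the given unit file path is a mask, i.e. a symlink
# pointing at /dev/null (what `systemctl mask` creates).
is_masked() {
    [ -L "$1" ] && [ "$(readlink "$1")" = "/dev/null" ]
}

# Example on a real host:
#   is_masked /etc/systemd/system/docker.service && echo "docker.service is masked"
```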

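After saving the file, reload systemd and start Docker by hand (systemctl daemon-reload, then systemctl enable --now docker). On the second run easzup only warns that docker is installed and skips its own Docker setup, but it still needs a running daemon to pull images. Then download again: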

root@kube-master1:/etc/ansible# ./easzup -D

[INFO] Action begin : download_all

/bin/docker

[WARN] docker is installed.

[INFO] downloading kubeasz 2.2.0

[INFO] run a temporary container

Unable to find image 'easzlab/kubeasz:2.2.0' locally

2.2.0: Pulling from easzlab/kubeasz

9123ac7c32f7: Pull complete

837e3bfc1a1b: Pull complete

Digest: sha256:a1fc4a75fde5aee811ff230e88ffa80d8bfb66e9c1abc907092abdbff073735e

Status: Downloaded newer image for easzlab/kubeasz:2.2.0

9ae404660a8e66a59ee4029d748aff96666121e847aa3667109630560e749bf8

[INFO] cp kubeasz code from the temporary container

[INFO] stop&remove temporary container

temp_easz

[INFO] downloading kubernetes v1.17.2 binaries

v1.17.2: Pulling from easzlab/kubeasz-k8s-bin

9123ac7c32f7: Already exists

fa197cdd54ac: Pull complete

Digest: sha256:d9fdc65a79a2208f48d5bf9a7e51cf4a4719c978742ef59b507bc8aaca2564f5

Status: Downloaded newer image for easzlab/kubeasz-k8s-bin:v1.17.2

docker.io/easzlab/kubeasz-k8s-bin:v1.17.2

[INFO] run a temporary container

16c0d1675f705417e662fd78871c2721061026869e5c90102c9d47c07bb63476

[INFO] cp k8s binaries

[INFO] stop&remove temporary container

temp_k8s_bin

[INFO] downloading extral binaries kubeasz-ext-bin:0.4.0

0.4.0: Pulling from easzlab/kubeasz-ext-bin

9123ac7c32f7: Already exists

96aeb45eaf70: Pull complete

Digest: sha256:cb7c51e9005a48113086002ae53b805528f4ac31e7f4c4634e22c98a8230a5bb

Status: Downloaded newer image for easzlab/kubeasz-ext-bin:0.4.0

docker.io/easzlab/kubeasz-ext-bin:0.4.0

[INFO] run a temporary container

062d302b19c21b80f02bc7d849f365c02eda002a1cd9a8e262512954f0dce019

[INFO] cp extral binaries

[INFO] stop&remove temporary container

temp_ext_bin

[INFO] downloading system packages kubeasz-sys-pkg:0.3.3

0.3.3: Pulling from easzlab/kubeasz-sys-pkg

e7c96db7181b: Pull complete

291d9a0e6c41: Pull complete

5f5b83293598: Pull complete

376121b0ab94: Pull complete

1c7cd77764e9: Pull complete

d8d58def0f00: Pull complete

Digest: sha256:342471d786ba6d9bb95c15c573fd7d24a6fd30de51049c2c0b543d09d28b5d9f

Status: Downloaded newer image for easzlab/kubeasz-sys-pkg:0.3.3

docker.io/easzlab/kubeasz-sys-pkg:0.3.3

[INFO] run a temporary container

13f40674a29b44357a551499224436eea1bae386b68138957ed93dd96bfc0f92

[INFO] cp system packages

[INFO] stop&remove temporary container

temp_sys_pkg

[INFO] downloading offline images

v3.4.4: Pulling from calico/cni

c87736221ed0: Pull complete

5c9ca5efd0e4: Pull complete

208ecfdac035: Pull complete

4112fed29204: Pull complete

Digest: sha256:bede24ded913fb9f273c8392cafc19ac37d905017e13255608133ceeabed72a1

Status: Downloaded newer image for calico/cni:v3.4.4

docker.io/calico/cni:v3.4.4

v3.4.4: Pulling from calico/kube-controllers

c87736221ed0: Already exists

e90e29149864: Pull complete

5d1329dbb1d1: Pull complete

Digest: sha256:b2370a898db0ceafaa4f0b8ddd912102632b856cc010bb350701828a8df27775

Status: Downloaded newer image for calico/kube-controllers:v3.4.4

docker.io/calico/kube-controllers:v3.4.4

v3.4.4: Pulling from calico/node

c87736221ed0: Already exists

07330e865cef: Pull complete

d4d8bb3c8ac5: Pull complete

870dc1a5d2d5: Pull complete

af40827f5487: Pull complete

76fa1069853f: Pull complete

Digest: sha256:1582527b4923ffe8297d12957670bc64bb4f324517f57e4fece3f6289d0eb6a1

Status: Downloaded newer image for calico/node:v3.4.4

docker.io/calico/node:v3.4.4

1.6.6: Pulling from coredns/coredns

c6568d217a00: Already exists

967f21e47164: Pull complete

Digest: sha256:41bee6992c2ed0f4628fcef75751048927bcd6b1cee89c79f6acb63ca5474d5a

Status: Downloaded newer image for coredns/coredns:1.6.6

docker.io/coredns/coredns:1.6.6

v2.0.0-rc3: Pulling from kubernetesui/dashboard

d8fcb18be2fe: Pull complete

Digest: sha256:c5d991d02937ac0f49cb62074ee0bd1240839e5814d6d7b51019f08bffd871a6

Status: Downloaded newer image for kubernetesui/dashboard:v2.0.0-rc3

docker.io/kubernetesui/dashboard:v2.0.0-rc3

v0.11.0-amd64: Pulling from easzlab/flannel

cd784148e348: Pull complete

04ac94e9255c: Pull complete

e10b013543eb: Pull complete

005e31e443b1: Pull complete

74f794f05817: Pull complete

Digest: sha256:bd76b84c74ad70368a2341c2402841b75950df881388e43fc2aca000c546653a

Status: Downloaded newer image for easzlab/flannel:v0.11.0-amd64

docker.io/easzlab/flannel:v0.11.0-amd64

v1.0.3: Pulling from kubernetesui/metrics-scraper

75d12d4b9104: Pull complete

fcd66fda0b81: Pull complete

53ff3f804bbd: Pull complete

Digest: sha256:40f1d5785ea66609b1454b87ee92673671a11e64ba3bf1991644b45a818082ff

Status: Downloaded newer image for kubernetesui/metrics-scraper:v1.0.3

docker.io/kubernetesui/metrics-scraper:v1.0.3

v0.3.6: Pulling from mirrorgooglecontainers/metrics-server-amd64

e8d8785a314f: Pull complete

b2f4b24bed0d: Pull complete

Digest: sha256:c9c4e95068b51d6b33a9dccc61875df07dc650abbf4ac1a19d58b4628f89288b

Status: Downloaded newer image for mirrorgooglecontainers/metrics-server-amd64:v0.3.6

docker.io/mirrorgooglecontainers/metrics-server-amd64:v0.3.6

3.1: Pulling from mirrorgooglecontainers/pause-amd64

Digest: sha256:59eec8837a4d942cc19a52b8c09ea75121acc38114a2c68b98983ce9356b8610

Status: Downloaded newer image for mirrorgooglecontainers/pause-amd64:3.1

docker.io/mirrorgooglecontainers/pause-amd64:3.1

v1.7.20: Pulling from library/traefik

42e7d26ec378: Pull complete

8a753f02eeff: Pull complete

ab927d94d255: Pull complete

Digest: sha256:5ec34caf19d114f8f0ed76f9bc3dad6ba8cf6d13a1575c4294b59b77709def39

Status: Downloaded newer image for traefik:v1.7.20

docker.io/library/traefik:v1.7.20

2.2.0: Pulling from easzlab/kubeasz

Digest: sha256:a1fc4a75fde5aee811ff230e88ffa80d8bfb66e9c1abc907092abdbff073735e

Status: Image is up to date for easzlab/kubeasz:2.2.0

docker.io/easzlab/kubeasz:2.2.0

[INFO] Action successed : download_all   # all images downloaded

Directories and files after a successful download:

root@kube-master1:/etc/ansible# ll

total 132

drwxrwxr-x 11 root root 4096 Feb 1 10:55 ./

drwxr-xr-x 107 root root 4096 Apr 4 22:39 ../

-rw-rw-r-- 1 root root 395 Feb 1 10:35 01.prepare.yml

-rw-rw-r-- 1 root root 58 Feb 1 10:35 02.etcd.yml

-rw-rw-r-- 1 root root 149 Feb 1 10:35 03.containerd.yml

-rw-rw-r-- 1 root root 137 Feb 1 10:35 03.docker.yml

-rw-rw-r-- 1 root root 470 Feb 1 10:35 04.kube-master.yml

-rw-rw-r-- 1 root root 140 Feb 1 10:35 05.kube-node.yml

-rw-rw-r-- 1 root root 408 Feb 1 10:35 06.network.yml

-rw-rw-r-- 1 root root 77 Feb 1 10:35 07.cluster-addon.yml

-rw-rw-r-- 1 root root 3686 Feb 1 10:35 11.harbor.yml

-rw-rw-r-- 1 root root 431 Feb 1 10:35 22.upgrade.yml

-rw-rw-r-- 1 root root 1975 Feb 1 10:35 23.backup.yml

-rw-rw-r-- 1 root root 113 Feb 1 10:35 24.restore.yml

-rw-rw-r-- 1 root root 1752 Feb 1 10:35 90.setup.yml

-rw-rw-r-- 1 root root 1127 Feb 1 10:35 91.start.yml

-rw-rw-r-- 1 root root 1120 Feb 1 10:35 92.stop.yml

-rw-rw-r-- 1 root root 337 Feb 1 10:35 99.clean.yml

-rw-rw-r-- 1 root root 10283 Feb 1 10:35 ansible.cfg

drwxrwxr-x 3 root root 4096 Apr 4 22:43 bin/

drwxrwxr-x 2 root root 4096 Feb 1 10:55 dockerfiles/

drwxrwxr-x 8 root root 4096 Feb 1 10:55 docs/

drwxrwxr-x 3 root root 4096 Apr 4 22:49 down/

drwxrwxr-x 2 root root 4096 Feb 1 10:55 example/

-rw-rw-r-- 1 root root 414 Feb 1 10:35 .gitignore

drwxrwxr-x 14 root root 4096 Feb 1 10:55 manifests/

drwxrwxr-x 2 root root 4096 Feb 1 10:55 pics/

-rw-rw-r-- 1 root root 5607 Feb 1 10:35 README.md

drwxrwxr-x 23 root root 4096 Feb 1 10:55 roles/

drwxrwxr-x 2 root root 4096 Feb 1 10:55 tools/
root@kube-master1:/etc/ansible# ll down/

total 792416

drwxrwxr-x 3 root root 4096 Apr 4 22:49 ./

drwxrwxr-x 11 root root 4096 Feb 1 10:55 ../

-rw------- 1 root root 203646464 Apr 4 22:46 calico_v3.4.4.tar

-rw------- 1 root root 40974848 Apr 4 22:46 coredns_1.6.6.tar

-rw------- 1 root root 126891520 Apr 4 22:47 dashboard_v2.0.0-rc3.tar

-rw-r--r-- 1 root root 63252595 Apr 4 22:32 docker-19.03.5.tgz

-rw-rw-r-- 1 root root 1737 Feb 1 10:35 download.sh

-rw------- 1 root root 55390720 Apr 4 22:47 flannel_v0.11.0-amd64.tar

-rw------- 1 root root 152580096 Apr 4 22:49 kubeasz_2.2.0.tar

-rw------- 1 root root 40129536 Apr 4 22:48 metrics-scraper_v1.0.3.tar

-rw------- 1 root root 41199616 Apr 4 22:48 metrics-server_v0.3.6.tar

drwxr-xr-x 2 root root 4096 Oct 19 22:56 packages/

-rw------- 1 root root 754176 Apr 4 22:49 pause_3.1.tar

-rw------- 1 root root 86573568 Apr 4 22:49 traefik_v1.7.20.tar

IV. Deployment

4.1 Write the hosts file

Use /etc/ansible/example/hosts.multi-node as the template for the inventory file:

root@kube-master1:/etc/ansible# cp example/hosts.multi-node ./hosts

root@kube-master1:/etc/ansible# cat hosts

[etcd]

192.168.100.166 NODE_NAME=etcd1

# master node(s)

[kube-master]

192.168.100.142

192.168.100.144

192.168.100.146

# work node(s)

[kube-node]

192.168.100.160

192.168.100.162

192.168.100.164

[harbor]

[ex-lb]

[chrony]

[all:vars]

CONTAINER_RUNTIME="docker"

CLUSTER_NETWORK="flannel"

PROXY_MODE="ipvs"

SERVICE_CIDR="172.20.0.0/16"

CLUSTER_CIDR="10.20.0.0/16"

NODE_PORT_RANGE="20000-40000"

CLUSTER_DNS_DOMAIN="cluster.local."

bin_dir="/usr/bin"

ca_dir="/etc/kubernetes/ssl"

base_dir="/etc/ansible"
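SERVICE_CIDR and CLUSTER_CIDR must not overlap each other or the network the hosts sit on. The sketch below is a hypothetical pre-flight check, not something kubeasz ships: it compares the two ranges from [all:vars] with 192.168.100.0/24, which is assumed from the server list, using the fact that two CIDR blocks overlap exactly when their network parts agree under the shorter prefix.

```shell
#!/bin/bash
# Sketch: check that the CIDRs planned in the hosts file do not overlap.
# Assumption: the hosts live on 192.168.100.0/24 (inferred from the server list).

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip2int() {
    local old_ifs=$IFS
    IFS=.
    set -- $1                 # split "a.b.c.d" into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed if CIDR blocks $1 and $2 share any address: two blocks overlap
# exactly when their network parts agree under the shorter prefix.
cidr_overlap() {
    local len1=${1#*/} len2=${2#*/} len mask
    len=$len1
    [ "$len2" -lt "$len" ] && len=$len2
    mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    [ $(( $(ip2int "${1%/*}") & mask )) -eq $(( $(ip2int "${2%/*}") & mask )) ]
}

# SERVICE_CIDR, CLUSTER_CIDR and the host network, compared pairwise.
for pair in "172.20.0.0/16 10.20.0.0/16" \
            "172.20.0.0/16 192.168.100.0/24" \
            "10.20.0.0/16 192.168.100.0/24"; do
    set -- $pair
    if cidr_overlap "$1" "$2"; then echo "OVERLAP: $1 $2"; else echo "ok: $1 $2"; fi
done
```

Edit the pairs in the loop if you pick different ranges; every line should start with "ok".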

4.2 Check that all managed hosts are reachable

root@kube-master1:/etc/ansible# ansible all -m ping

192.168.100.166 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.100.160 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.100.162 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.100.164 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.100.144 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.100.146 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.100.142 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
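With -o (--one-line) Ansible prints one line per host, which makes failing hosts easy to filter out once the inventory grows. A minimal sketch, assuming lines of the form `HOST | STATUS ...` in that mode; `failed_ping_hosts` is a hypothetical name.

```shell
#!/bin/bash
# Sketch: print hosts whose `ansible all -m ping -o` line is not SUCCESS.
# Assumes the one-line format "HOST | STATUS ..." (fields: $1 host, $2 "|", $3 status).
failed_ping_hosts() {
    awk '$2 == "|" && $3 != "SUCCESS" { print $1 }'
}
```

Usage: `ansible all -m ping -o | failed_ping_hosts` prints nothing when every host answered.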

4.3 Step-by-step deployment

Verify that each step succeeded before moving on to the next one.

4.3.1 Pre-deployment preparation

roles/deploy:

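roles/deploy/
├── defaults
│   └── main.yml                # settings: certificate validity period, kubeconfig options
├── files
│   └── read-group-rbac.yaml    # RBAC permissions for the read-only user
├── tasks
│   └── main.yml                # main task file
└── templates
    ├── admin-csr.json.j2       # CSR template for the admin certificate used by kubectl
    ├── ca-config.json.j2       # CA configuration template
    ├── ca-csr.json.j2          # CA certificate signing request template
    ├── kube-proxy-csr.json.j2  # CSR template for the kube-proxy certificate
    └── read-csr.json.j2        # CSR template for the read-only kubectl certificate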

root@kube-master1:/etc/ansible# ansible-playbook 01.prepare.yml

...

4.3.2 Deploy the etcd cluster

roles/etcd/:

root@kube-master1:/etc/ansible# tree roles/etcd/

roles/etcd/
├── clean-etcd.yml
├── defaults
│   └── main.yml
├── tasks
│   └── main.yml
└── templates
    ├── etcd-csr.json.j2   # edit the certificate fields here before deploying if needed
    └── etcd.service.j2

root@kube-master1:/etc/ansible# ansible-playbook 02.etcd.yml

...

On any etcd node, check that etcd is running:

root@etcd-node1:~# systemctl status etcd

root@etcd-node1:~# journalctl -u etcd
# alternatively, check the health of each endpoint with the following commands

root@etcd-node1:~# apt install etcd-client

root@etcd-node1:~# export NODE_IPS="192.168.100.166"   # space-separated list of etcd member IPs
root@etcd-node1:~# for ip in ${NODE_IPS}; do ETCDCTL_API=3 etcdctl --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/etcd/ssl/etcd.pem --key=/etc/etcd/ssl/etcd-key.pem endpoint health; done

https://192.168.100.166:2379 is healthy: successfully committed proposal: took = 1.032861ms
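With more than one etcd member it helps to reduce the loop's output to a single verdict. A sketch, assuming etcdctl prints `... is healthy: ...` for a good endpoint, as above, and something else (such as `is unhealthy`) otherwise; `etcd_health_summary` is a hypothetical helper.

```shell
#!/bin/bash
# Sketch: summarise `etcdctl endpoint health` lines read on stdin.
# Prints "healthy/total" and exits non-zero unless every endpoint is healthy.
etcd_health_summary() {
    awk '
        NF { total++ }                 # count non-empty lines as endpoints
        / is healthy: / { ok++ }       # lines reported healthy
        END {
            printf "%d/%d\n", ok, total
            exit !(total > 0 && ok == total)
        }'
}
```

To use it, end the loop with `done 2>&1 | etcd_health_summary` so that error messages written to stderr are counted as well.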

4.3.3 Deploy Docker

roles/docker/:

root@kube-master1:/etc/ansible# tree roles/docker/

roles/docker/
├── defaults
│   └── main.yml           # variables
├── files
│   ├── docker             # bash completion
│   └── docker-tag         # small tool for querying image tags
├── tasks
│   └── main.yml           # main task file
└── templates
    ├── daemon.json.j2     # docker daemon configuration
    └── docker.service.j2  # systemd service template

root@kube-master1:/etc/ansible# ansible-playbook 03.docker.yml

...

After deployment, verify:

~# systemctl status docker   # service status
~# journalctl -u docker      # runtime logs

~# docker version

~# docker info

4.3.4 Deploy the master nodes

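Deploying a master node mainly means installing three components, apiserver, scheduler and controller-manager:

- apiserver provides the REST API for managing the cluster, including authentication and authorization, data validation and cluster state changes
  - only the API server talks to etcd directly
  - all other components query or modify data through the API server
  - it is the hub for data exchange and communication between the other components
- scheduler assigns Pods to the nodes in the cluster
  - it watches kube-apiserver for Pods that have not been assigned a node yet
  - it picks a node for each of them according to the scheduling policy
- controller-manager consists of a set of controllers; it watches the state of the whole cluster through the apiserver and makes sure the cluster stays in the desired state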
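The scheduler's input described above, Pods that have no node yet, is visible from kubectl: in `kubectl get pods -A -o wide` such Pods show `<none>` in the NODE column. A sketch of a filter, assuming the kubectl 1.17 column layout used later in this article (NODE is the 8th column with -A -o wide); `unscheduled_pods` is a hypothetical name.

```shell
#!/bin/bash
# Sketch: list pods not yet bound to a node (NODE column is <none>).
# Reads `kubectl get pods -A -o wide --no-headers` on stdin
# (columns: NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE ...).
unscheduled_pods() {
    awk '$8 == "<none>" { print $1 "/" $2 }'
}
```

Usage: `kubectl get pods -A -o wide --no-headers | unscheduled_pods`. On a healthy cluster it prints nothing, or only Pods briefly waiting for the scheduler.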

roles/kube-master/:

root@kube-master1:/etc/ansible# tree roles/kube-master/

roles/kube-master/
├── defaults
│   └── main.yml
├── tasks
│   └── main.yml
└── templates
    ├── aggregator-proxy-csr.json.j2
    ├── basic-auth.csv.j2
    ├── basic-auth-rbac.yaml.j2
    ├── kube-apiserver.service.j2
    ├── kube-controller-manager.service.j2
    ├── kubernetes-csr.json.j2
    └── kube-scheduler.service.j2

Deploy:

root@kube-master1:/etc/ansible# ansible-playbook 04.kube-master.yml

...

After the playbook finishes, check the master nodes:

root@kube-master1:/etc/ansible# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

192.168.100.142 Ready,SchedulingDisabled master 4m17s v1.17.2 192.168.100.142 <none> Ubuntu 18.04.3 LTS 4.15.0-55-generic docker://19.3.5

192.168.100.144 Ready,SchedulingDisabled master 4m18s v1.17.2 192.168.100.144 <none> Ubuntu 18.04.3 LTS 4.15.0-55-generic docker://19.3.5

192.168.100.146 Ready,SchedulingDisabled master 4m17s v1.17.2 192.168.100.146 <none> Ubuntu 18.04.3 LTS 4.15.0-55-generic docker://19.3.5

4.3.5 Deploy the worker nodes

roles/kube-node/:

root@kube-master1:/etc/ansible# tree roles/kube-node/

roles/kube-node/
├── defaults
│   └── main.yml
├── tasks
│   ├── create-kubelet-kubeconfig.yml
│   ├── main.yml
│   ├── node_lb.yml
│   └── offline.yml
└── templates
    ├── cni-default.conf.j2
    ├── haproxy.cfg.j2
    ├── haproxy.service.j2
    ├── kubelet-config.yaml.j2
    ├── kubelet-csr.json.j2
    ├── kubelet.service.j2
    └── kube-proxy.service.j2

Deploy:

root@kube-master1:/etc/ansible# ansible-playbook 05.kube-node.yml

...

Check all nodes:

root@kube-master1:/etc/ansible# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

192.168.100.142 Ready,SchedulingDisabled master 6m32s v1.17.2 192.168.100.142 <none> Ubuntu 18.04.3 LTS 4.15.0-55-generic docker://19.3.5

192.168.100.144 Ready,SchedulingDisabled master 6m33s v1.17.2 192.168.100.144 <none> Ubuntu 18.04.3 LTS 4.15.0-55-generic docker://19.3.5

192.168.100.146 Ready,SchedulingDisabled master 6m32s v1.17.2 192.168.100.146 <none> Ubuntu 18.04.3 LTS 4.15.0-55-generic docker://19.3.5

192.168.100.160 Ready node 31s v1.17.2 192.168.100.160 <none> Ubuntu 18.04.3 LTS 4.15.0-55-generic docker://19.3.5

192.168.100.162 Ready node 31s v1.17.2 192.168.100.162 <none> Ubuntu 18.04.3 LTS 4.15.0-55-generic docker://19.3.5

192.168.100.164 Ready node 31s v1.17.2 192.168.100.164 <none> Ubuntu 18.04.3 LTS 4.15.0-55-generic docker://19.3.5
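The STATUS column is what matters here: the masters show Ready,SchedulingDisabled because they are marked unschedulable, which still counts as Ready. A sketch that lists any node that is not Ready, assuming `kubectl get nodes --no-headers` output with STATUS as the second column; `not_ready_nodes` is a hypothetical name.

```shell
#!/bin/bash
# Sketch: print names of nodes that are not Ready.
# Reads `kubectl get nodes --no-headers` on stdin; column 2 is STATUS.
# "Ready,SchedulingDisabled" (unschedulable masters) still counts as Ready.
not_ready_nodes() {
    awk '$2 != "Ready" && $2 !~ /^Ready,/ { print $1 }'
}
```

Usage: `kubectl get nodes --no-headers | not_ready_nodes` should print nothing once every node has joined.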

4.3.6 Deploy the flannel network

roles/flannel/:

root@kube-master1:/etc/ansible# tree roles/flannel/

roles/flannel/
├── defaults
│   └── main.yml
├── tasks
│   └── main.yml
└── templates
    └── kube-flannel.yaml.j2

# make sure CLUSTER_NETWORK is set to flannel

root@kube-master1:/etc/ansible# grep "CLUSTER_NETWORK" hosts

CLUSTER_NETWORK="flannel"

Deploy:

root@kube-master1:/etc/ansible# ansible-playbook 06.network.yml

...

After deployment, check that flannel is running on every node:

root@kube-master1:/etc/ansible# kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system kube-flannel-ds-amd64-8k8jz 1/1 Running 0 29s 192.168.100.160 192.168.100.160 <none> <none>

kube-system kube-flannel-ds-amd64-f76vp 1/1 Running 0 29s 192.168.100.164 192.168.100.164 <none> <none>

kube-system kube-flannel-ds-amd64-hpgn8 1/1 Running 0 29s 192.168.100.142 192.168.100.142 <none> <none>

kube-system kube-flannel-ds-amd64-j2k8x 1/1 Running 0 29s 192.168.100.144 192.168.100.144 <none> <none>

kube-system kube-flannel-ds-amd64-spqg8 1/1 Running 0 29s 192.168.100.162 192.168.100.162 <none> <none>

kube-system kube-flannel-ds-amd64-strfx 1/1 Running 0 29s 192.168.100.146 192.168.100.146 <none> <none>
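flannel runs as a DaemonSet, so there should be exactly one kube-flannel-ds pod per node (six here), each Running with all containers ready. A sketch of both checks, assuming the `kubectl get pods -A -o wide` column layout shown above; both function names are hypothetical.

```shell
#!/bin/bash
# Sketch: print kube-flannel pods that are not Running with all containers
# ready. Reads `kubectl get pods -A -o wide --no-headers` on stdin
# (columns: NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE ...).
unhealthy_flannel_pods() {
    awk '$2 ~ /^kube-flannel-ds/ {
        split($3, r, "/")                      # READY is "ready/total"
        if ($4 != "Running" || r[1] != r[2]) print $2, $4, $8
    }'
}

# Sketch: count flannel pods; compare with the number of nodes.
flannel_pod_count() {
    awk '$2 ~ /^kube-flannel-ds/ { n++ } END { print n + 0 }'
}
```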

4.3.7 Install cluster add-ons

The cluster add-on role covers the installation of DNS (CoreDNS or kube-dns), metrics-server, the dashboard and ingress.

roles/cluster-addon/:

root@kube-master1:/etc/ansible# tree roles/cluster-addon/

roles/cluster-addon/
├── defaults
│   └── main.yml
├── tasks
│   ├── ingress.yml
│   └── main.yml
└── templates
    ├── coredns.yaml.j2
    ├── kubedns.yaml.j2
    └── metallb
        ├── bgp.yaml.j2
        ├── layer2.yaml.j2
        └── metallb.yaml.j2

Deploy:

root@kube-master1:/etc/ansible# ansible-playbook 07.cluster-addon.yml

...

After deployment, check the related Services:

root@kube-master1:/etc/ansible# kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 172.20.0.1 <none> 443/TCP 159m

kube-system dashboard-metrics-scraper ClusterIP 172.20.36.20 <none> 8000/TCP 108m

kube-system kube-dns ClusterIP 172.20.0.2 <none> 53/UDP,53/TCP,9153/TCP 108m

kube-system kubernetes-dashboard NodePort 172.20.230.96 <none> 443:35964/TCP 108m

kube-system metrics-server ClusterIP 172.20.146.16 <none> 443/TCP 108m

kube-system traefik-ingress-service NodePort 172.20.69.230 <none> 80:23456/TCP,8080:23823/TCP 108m
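The NodePorts in this list (35964 for the dashboard, 23456 and 23823 for traefik) fall inside NODE_PORT_RANGE="20000-40000" from the hosts file, as they must. A sketch that extracts NodePorts from this output and flags any outside a given range, assuming the column layout above; `check_nodeports` is a hypothetical name.

```shell
#!/bin/bash
# Sketch: list NodePorts from `kubectl get svc -A --no-headers` (stdin) and
# flag any outside the allowed range (default 20000-40000, the hosts file's
# NODE_PORT_RANGE). Columns: NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE.
check_nodeports() {
    local range=${1:-20000-40000}
    awk -v lo="${range%-*}" -v hi="${range#*-}" '$3 == "NodePort" {
        n = split($6, ports, ",")
        for (i = 1; i <= n; i++) {
            # each entry looks like port:nodePort/protocol, e.g. 443:35964/TCP
            if (split(ports[i], pp, "[:/]") == 3) {
                ok = (pp[2] + 0 >= lo + 0 && pp[2] + 0 <= hi + 0)
                print $2, pp[2], (ok ? "ok" : "OUT-OF-RANGE")
            }
        }
    }'
}
```

Usage: `kubectl get svc -A --no-headers | check_nodeports` for the range used here, or pass another range such as `30000-32767` as the first argument.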

4.4 Create a Pod to test the network

Test Pod manifest:

root@kube-master1:/etc/ansible# cat /opt/k8s-data/pod-ex.yaml

apiVersion: v1

kind: Pod

metadata:
  name: nginx-pod
  labels:
    app: nginx
spec:
  containers:
  - name: nginx-pod
    image: nginx:1.16.1

Create the Pod:

root@kube-master1:/etc/ansible# kubectl apply -f /opt/k8s-data/pod-ex.yaml

pod/nginx-pod created

root@kube-master1:/etc/ansible# kubectl get -f /opt/k8s-data/pod-ex.yaml -w

NAME READY STATUS RESTARTS AGE

nginx-pod 1/1 Running 0 8s

Verify network connectivity:

root@kube-master1:/etc/ansible# kubectl exec -it nginx-pod -- bash

root@nginx-pod:/# apt update

...

root@nginx-pod:/# apt install iputils-ping

...
root@nginx-pod:/# ping 172.20.0.1

PING 172.20.0.1 (172.20.0.1) 56(84) bytes of data.

64 bytes from 172.20.0.1: icmp_seq=1 ttl=64 time=0.041 ms

64 bytes from 172.20.0.1: icmp_seq=2 ttl=64 time=0.044 ms

^C

--- 172.20.0.1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 21ms

rtt min/avg/max/mdev = 0.041/0.042/0.044/0.006 ms
root@nginx-pod:/# ping 192.168.100.142

PING 192.168.100.142 (192.168.100.142) 56(84) bytes of data.

64 bytes from 192.168.100.142: icmp_seq=1 ttl=63 time=0.296 ms

64 bytes from 192.168.100.142: icmp_seq=2 ttl=63 time=0.543 ms

^C

--- 192.168.100.142 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 8ms

rtt min/avg/max/mdev = 0.296/0.419/0.543/0.125 ms
root@nginx-pod:/# ping www.baidu.com

PING www.a.shifen.com (104.193.88.123) 56(84) bytes of data.

64 bytes from 104.193.88.123: icmp_seq=4 ttl=127 time=211 ms

64 bytes from 104.193.88.123: icmp_seq=5 ttl=127 time=199 ms

^C

--- www.a.shifen.com ping statistics ---

6 packets transmitted, 2 received, 66.6667% packet loss, time 301ms

rtt min/avg/max/mdev = 199.135/205.145/211.156/6.027 ms
root@nginx-pod:/# traceroute www.baidu.com

traceroute to www.baidu.com (104.193.88.123), 30 hops max, 60 byte packets
 1  10.20.4.1 (10.20.4.1)  0.036 ms  0.008 ms  0.007 ms
 2  192.168.100.2 (192.168.100.2)  0.083 ms  0.163 ms  0.191 ms   # gateway of the VM network
 3  * * *
 4  * * *
 5  * * *
 6  *^C
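The three pings test, in order, a Service IP (172.20.0.1, the kubernetes Service), a node IP and an external name; the last one lost 4 of 6 packets. To script such checks, the ping summary can be reduced to its loss percentage. A sketch, assuming iputils-style summaries containing `N% packet loss`; `ping_loss` is a hypothetical helper.

```shell
#!/bin/bash
# Sketch: print the packet-loss percentage from ping output on stdin,
# e.g. "6 packets transmitted, 2 received, 66.6667% packet loss" -> 66.6667.
ping_loss() {
    awk 'match($0, /[0-9.]+% packet loss/) {
        s = substr($0, RSTART, RLENGTH)
        sub(/%.*/, "", s)                  # keep only the number
        print s
    }'
}
```

Usage: `kubectl exec nginx-pod -- ping -c 4 192.168.100.142 | ping_loss` (ping has to be installed in the Pod first, as above).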

4.5 Test the Dashboard

Get the login token:

root@kube-master1:/etc/ansible# kubectl get secret -A | grep dashboard-token

kube-system kubernetes-dashboard-token-qcb5j kubernetes.io/service-account-token 3 105m

root@kube-master1:/etc/ansible# kubectl describe secret kubernetes-dashboard-token-qcb5j -n kube-system

Name: kubernetes-dashboard-token-qcb5j

Namespace: kube-system

Labels: <none>

Annotations:  kubernetes.io/service-account.name: kubernetes-dashboard
              kubernetes.io/service-account.uid: 00ba4b90-b914-4e5f-87dd-a808542acc83

Type:  kubernetes.io/service-account-token

Data

====

ca.crt: 1346 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkRWWU45SmJEWTJZRmFZWHlEdFhhOGhKRzRWYkFEZ2M3LTU1TmZHQVlSRW8ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1xY2I1aiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjAwYmE0YjkwLWI5MTQtNGU1Zi04N2RkLWE4MDg1NDJhY2M4MyIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.AR41PMi6jk_tzPX0Z88hdI6eEvldqa0wOnzJFciMa-hwnG3214dyROGwXoKtLvmshk8hjE2W11W9VBPQihF2kWqAalSQLF4WsX5LTL0X_FPO6EbYmPxUysFU9nWWRiw1UZD9WkBPl_JfLoz5LUVvrJm2fJJ80zIcrVEQ75c_Zxsgfl1k8BDMCFqcNDUiMCiJCuu-02aqdvMvXf7EYciOzqp36_wZTi9RNLEW7fZrnRu6LCIfn6XFn43nLnJuCupUZhqcSMVS0t8Qj5AY-XJRpyDamSseaF4gRwm7CixUq03Fdk8_5Esz16w2VNlZ0GINyGJTYmg8WOtQGMX9eoWHaA
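The token above is what the Dashboard login page asks for. A sketch of two hypothetical helpers: one extracts the token from the describe output, the other builds the Dashboard URL from the kubernetes-dashboard NodePort in the Service list of 4.3.7 (443:35964/TCP there, so https://<node IP>:35964).

```shell
#!/bin/bash
# Sketch: print the bearer token from `kubectl describe secret ...` output (stdin).
token_from_describe() {
    awk '$1 == "token:" { print $2; exit }'
}

# Sketch: print the Dashboard URL for node IP $1, reading
# `kubectl get svc -A --no-headers` on stdin
# (columns: NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE).
dashboard_url() {
    awk -v ip="$1" '$2 == "kubernetes-dashboard" {
        split($6, pp, "[:/]")          # "443:35964/TCP" -> 443, 35964, TCP
        printf "https://%s:%s\n", ip, pp[2]
    }'
}
```

For example: `kubectl describe secret kubernetes-dashboard-token-qcb5j -n kube-system | token_from_describe` and `kubectl get svc -A --no-headers | dashboard_url 192.168.100.160`.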

相关文章:

使用ansible自动化部署Kubernetes

使用 kubeasz 部署 Kubernetes 集群 服务器列表: IP主机名角色192.168.100.142kube-master1,kube-master1.suosuoli.cnK8s 集群主节点 1192.168.100.144kube-master2,kube-master2.suosuoli.cnK8s 集群主节点 2192.168.100.146kube-master3,kube-master3.suosuoli…...

k8s v1.27.4 部署metrics-serverv:0.6.4,kube-prometheus

只有一个问题,原来的httpGet存活、就绪检测一直不通过,于是改为tcpSocket后pod正常。 wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml修改后的yaml文件,镜像修改为阿里云 apiVersion: …...

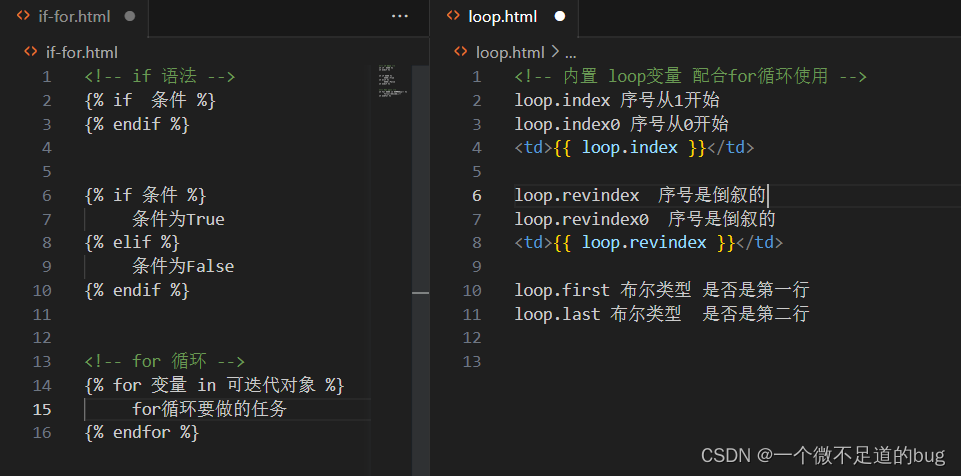

6-模板初步使用

官网: 中文版: 介绍-Jinja2中文文档 英文版: Template Designer Documentation — Jinja Documentation (2.11.x) 模板语法 1. 模板渲染 (1) app.py 准备数据 import jsonfrom flask import Flask,render_templateimport settingsapp Flask(__name__) app.config.from_obj…...

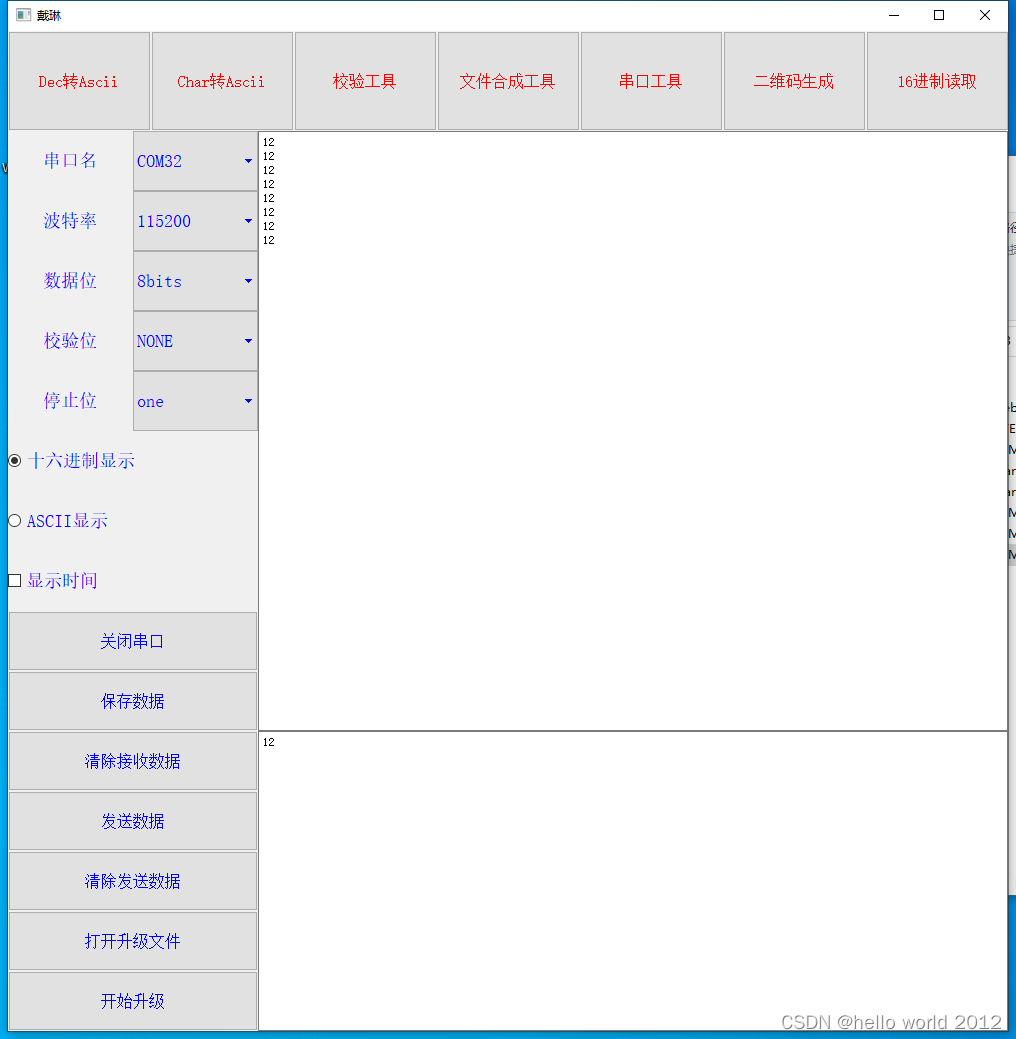

STM32CubeMX配置STM32F103 USB Virtual Port Com(HAL库开发)

1.配置外部高速晶振 2.勾选USB功能 3.将USB模式配置Virtual Port Com 4.将系统主频配置为72M,USB频率配置为48M. 5.配置好项目名称,开发环境,最后获取代码。 6.在CDC_Receive_FS函数中写入USB发送函数。这样USB接收到的数据就好原样发送。 7.将串口助手打…...

)

RocketMQ与Kafka对比(18项差异)

淘宝内部的交易系统使用了淘宝自主研发的Notify消息中间件,使用MySQL作为消息存储媒介,可完全水平扩容,为了进一步降低成本,我们认为存储部分可以进一步优化,2011年初,Linkin开源了Kafka这个优秀的消息中间件,淘宝中间件团队在对Kafka做过充分Review之后,Kafka无限消息…...

英文翻译照片怎么做?掌握这个方法轻松翻译

在现代社会中,英文已经成为了一种全球性的语言,因此,我们在阅读文章或者查看图片时,经常会遇到英文的内容。为了更好地理解这些英文内容,我们需要将其翻译成中文。在本文中,我将探讨图片中英文内容翻译的方…...

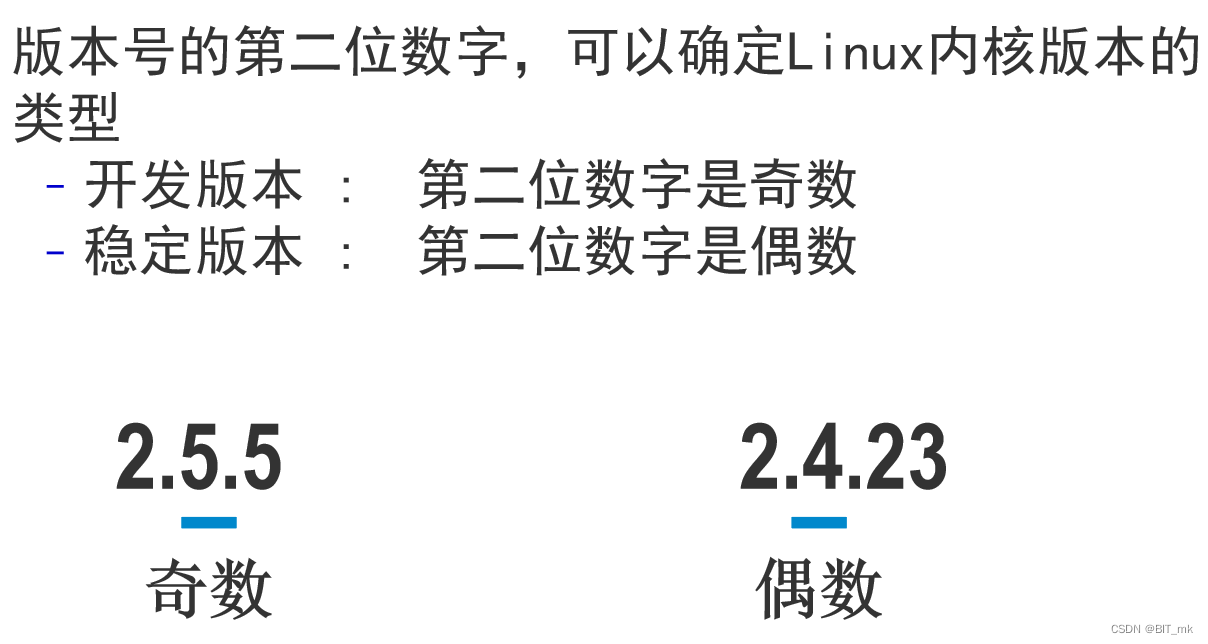

Linux介绍

目录 unix linux的版本号 linux对unix的继承 linux特性:安全性高 unix Unix是一个先进的、多用户、多任务的操作系统,被广泛用于服务器、工作站和移动设备。以下是Unix的一些关键特点和组件: 多用户系统:允许多个用户同时访…...

计算机竞赛 卷积神经网络手写字符识别 - 深度学习

文章目录 0 前言1 简介2 LeNet-5 模型的介绍2.1 结构解析2.2 C1层2.3 S2层S2层和C3层连接 2.4 F6与C5层 3 写数字识别算法模型的构建3.1 输入层设计3.2 激活函数的选取3.3 卷积层设计3.4 降采样层3.5 输出层设计 4 网络模型的总体结构5 部分实现代码6 在线手写识别7 最后 0 前言…...

[Go版]算法通关村第十三关白银——数组实现加法和幂运算

目录 数组实现加法专题题目:数组实现整数加法思路分析:复杂度:Go代码 题目:字符串加法思路分析:复杂度:Go代码 题目:二进制加法思路分析:复杂度:Go代码 幂运算专题题目&a…...

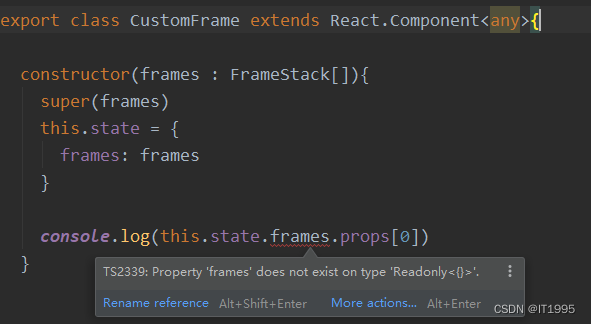

React笔记[tsx]-解决Property ‘frames‘ does not exist on type ‘Readonly<{}>‘

浏览器报错如下: 编辑器是这样的: 原因是React.Component<any>少了后面的any,改成这样即可: export class CustomFrame extends React.Component<any, any>{............ }...

ThinkPHP6.0+ 使用Redis 原始用法

composer 安装 predis/predis 依赖,或者安装php_redis.dll的扩展。 我这里选择的是predis/predis 依赖。 composer require predis/predis 进入config/cache.php 配置添加redis缓存支持 示例: <?php// -----------------------------------------…...

SRM系统询价竞价管理:优化采购流程的全面解析

SRM系统的询价竞价管理模块是现代企业采购管理中的重要工具。通过该模块,企业可以实现供应商的询价、竞价和合同管理等关键环节的自动化和优化。 一、概述 SRM系统是一种用于管理和优化供应商关系的软件系统。它通过集成各个环节,包括供应商信息管理、询…...

c++选择题笔记

局部变量能否和全局变量重名?可以,局部变量会屏蔽全局变量。在使用全局变量时需要使用 ":: "。拷贝构造函数:参数为同类型的对象的常量引用的构造函数函数指针:int (*f)(int,int) & max; 虚函数:在基类…...

Android2:构建交互式应用

一。创建项目 项目名Beer Adviser 二。更新布局 activity_main.xml <?xml version"1.0" encoding"utf-8"?><LinearLayout xmlns:android"http://schemas.android.com/apk/res/android"android:layout_width"match_parent"…...

ChatGLM-6B微调记录

目录 GLM-130B和ChatGLM-6BChatGLM-6B直接部署基于PEFT的LoRA微调ChatGLM-6B GLM-130B和ChatGLM-6B 对于三类主要预训练框架: autoregressive(无条件生成),GPT的训练目标是从左到右的文本生成。autoencoding(语言理解…...

Linux Kernel 4.12 或将新增优化分析工具

到 7 月初,Linux Kernel 4.12 预计将为修复所有安全漏洞而奠定基础,另外新增的是一个分析工具,对于开发者优化启动时间时会有所帮助。 新的「个别任务统一模型」(Per-Task Consistency Model)为主要核心实时修补&#…...

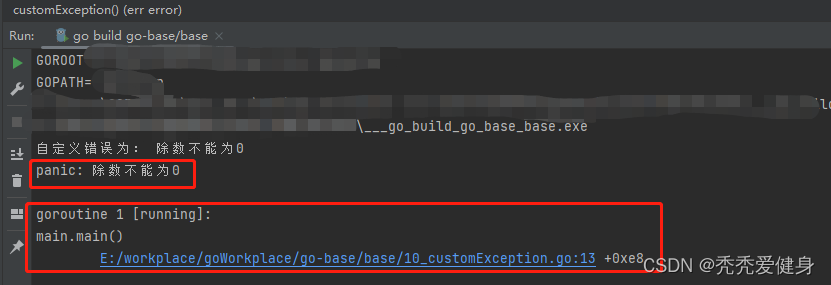

【30天熟悉Go语言】10 Go异常处理机制

作者:秃秃爱健身,多平台博客专家,某大厂后端开发,个人IP起于源码分析文章 😋。 源码系列专栏:Spring MVC源码系列、Spring Boot源码系列、SpringCloud源码系列(含:Ribbon、Feign&…...

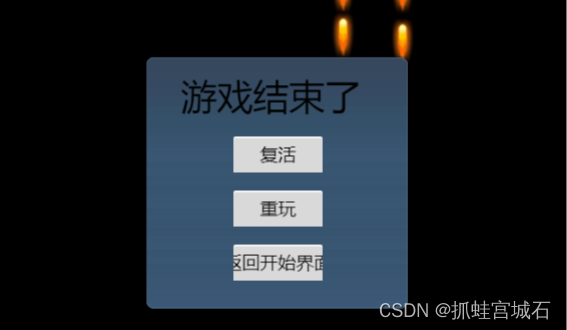

飞机打方块(四)游戏结束

一、游戏结束显示 1.新建节点 1.新建gameover节点 2.绑定canvas 3.新建gameover容器 4.新建文本节点 2.游戏结束逻辑 Barrier.ts update(dt: number) {//将自身生命值取整let num Math.floor(this.num);//在Label上显示this.num_lb.string num.toString();//获取GameCo…...

保研之旅1:西北工业大学电子信息学院夏令营

💥💥💞💞欢迎来到本博客❤️❤️💥💥 本人持续分享更多关于电子通信专业内容以及嵌入式和单片机的知识,如果大家喜欢,别忘点个赞加个关注哦,让我们一起共同进步~ &#x…...

[WMCTF 2023] crypto

似乎退步不了,这个比赛基本不会了,就作了两个简单题。 SIGNIN 第1个是签到题 from Crypto.Util.number import * from random import randrange from secret import flagdef pr(msg):print(msg)pr(br"""........ …...

docker详细操作--未完待续

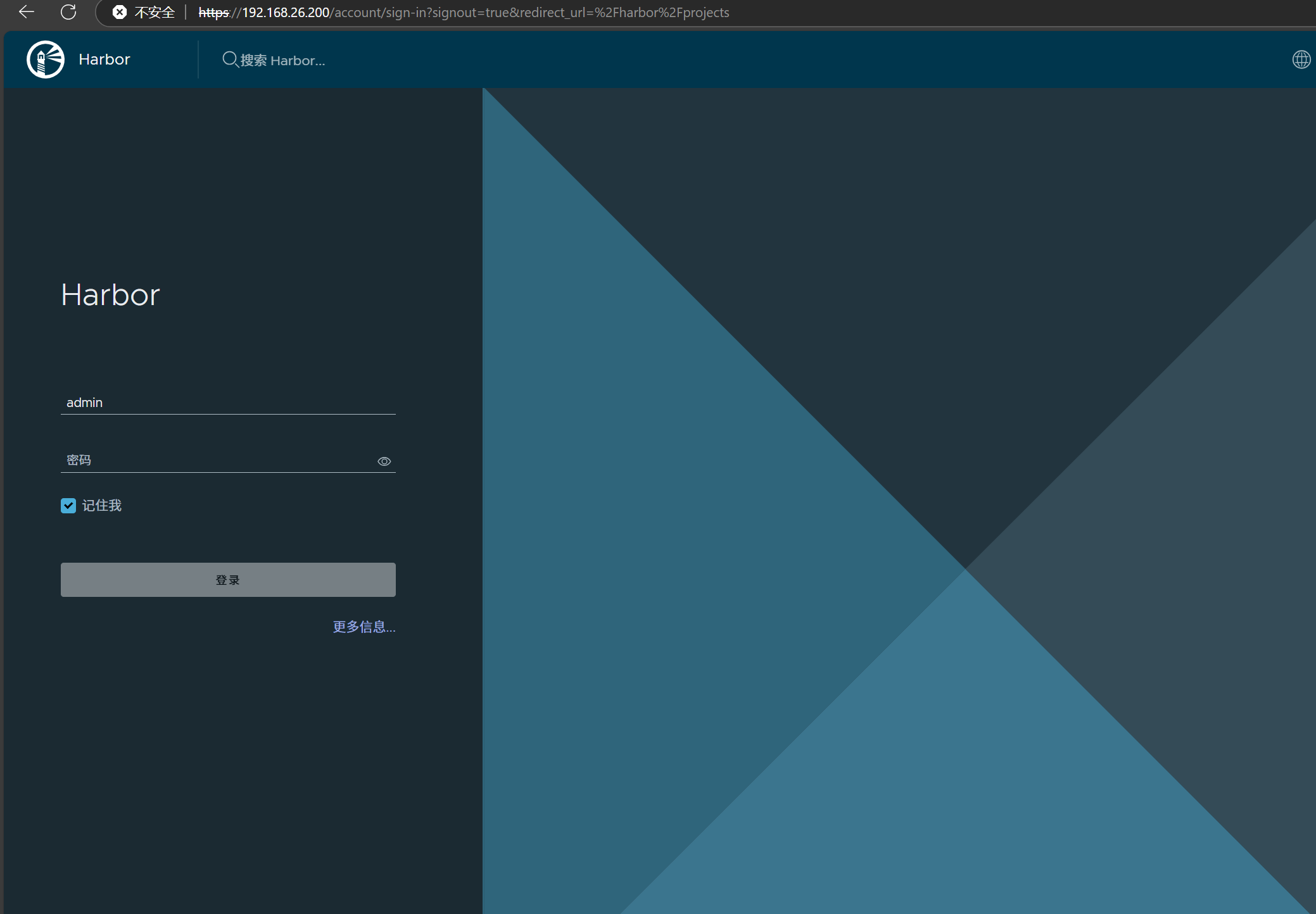

docker介绍 docker官网: Docker:加速容器应用程序开发 harbor官网:Harbor - Harbor 中文 使用docker加速器: Docker镜像极速下载服务 - 毫秒镜像 是什么 Docker 是一种开源的容器化平台,用于将应用程序及其依赖项(如库、运行时环…...

)

React Native 导航系统实战(React Navigation)

导航系统实战(React Navigation) React Navigation 是 React Native 应用中最常用的导航库之一,它提供了多种导航模式,如堆栈导航(Stack Navigator)、标签导航(Tab Navigator)和抽屉…...

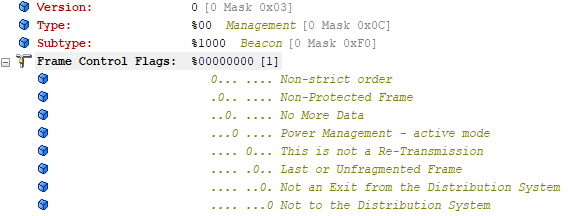

【WiFi帧结构】

文章目录 帧结构MAC头部管理帧 帧结构 Wi-Fi的帧分为三部分组成:MAC头部frame bodyFCS,其中MAC是固定格式的,frame body是可变长度。 MAC头部有frame control,duration,address1,address2,addre…...

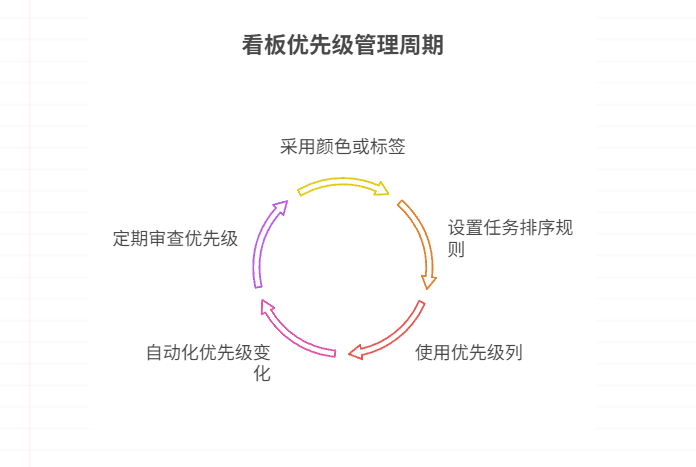

如何在看板中体现优先级变化

在看板中有效体现优先级变化的关键措施包括:采用颜色或标签标识优先级、设置任务排序规则、使用独立的优先级列或泳道、结合自动化规则同步优先级变化、建立定期的优先级审查流程。其中,设置任务排序规则尤其重要,因为它让看板视觉上直观地体…...

ssc377d修改flash分区大小

1、flash的分区默认分配16M、 / # df -h Filesystem Size Used Available Use% Mounted on /dev/root 1.9M 1.9M 0 100% / /dev/mtdblock4 3.0M...

解锁数据库简洁之道:FastAPI与SQLModel实战指南

在构建现代Web应用程序时,与数据库的交互无疑是核心环节。虽然传统的数据库操作方式(如直接编写SQL语句与psycopg2交互)赋予了我们精细的控制权,但在面对日益复杂的业务逻辑和快速迭代的需求时,这种方式的开发效率和可…...

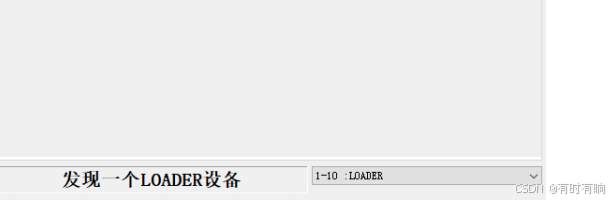

linux arm系统烧录

1、打开瑞芯微程序 2、按住linux arm 的 recover按键 插入电源 3、当瑞芯微检测到有设备 4、松开recover按键 5、选择升级固件 6、点击固件选择本地刷机的linux arm 镜像 7、点击升级 (忘了有没有这步了 估计有) 刷机程序 和 镜像 就不提供了。要刷的时…...

页面渲染流程与性能优化

页面渲染流程与性能优化详解(完整版) 一、现代浏览器渲染流程(详细说明) 1. 构建DOM树 浏览器接收到HTML文档后,会逐步解析并构建DOM(Document Object Model)树。具体过程如下: (…...

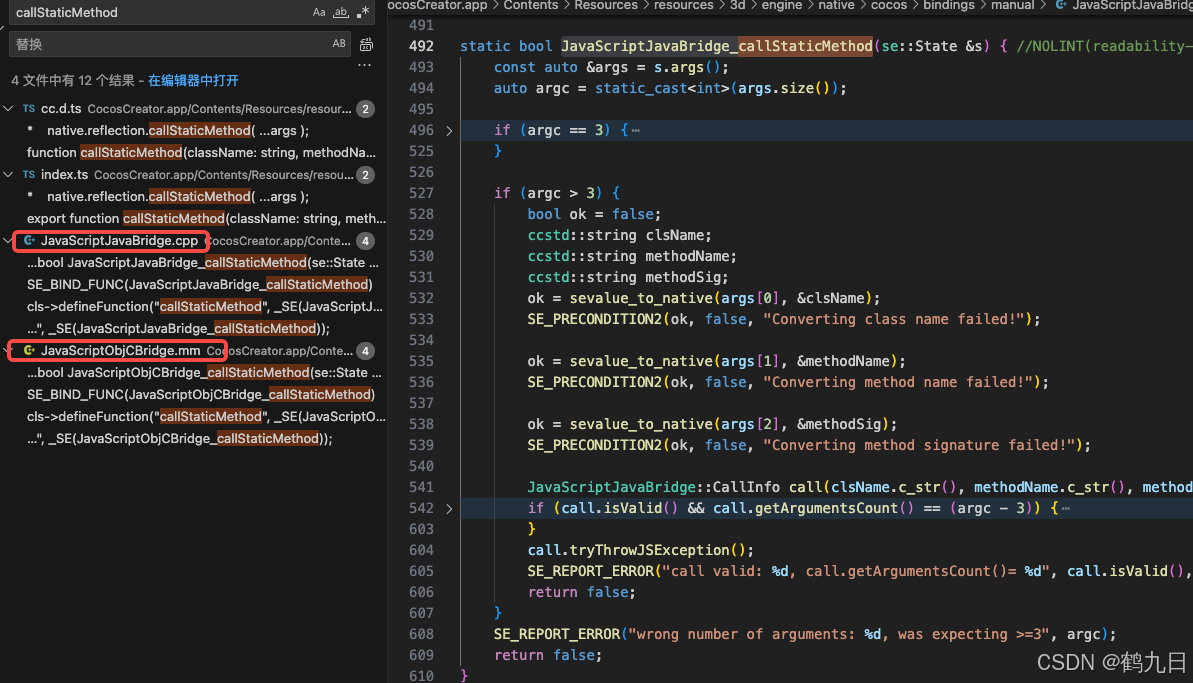

CocosCreator 之 JavaScript/TypeScript和Java的相互交互

引擎版本: 3.8.1 语言: JavaScript/TypeScript、C、Java 环境:Window 参考:Java原生反射机制 您好,我是鹤九日! 回顾 在上篇文章中:CocosCreator Android项目接入UnityAds 广告SDK。 我们简单讲…...

Robots.txt 文件

什么是robots.txt? robots.txt 是一个位于网站根目录下的文本文件(如:https://example.com/robots.txt),它用于指导网络爬虫(如搜索引擎的蜘蛛程序)如何抓取该网站的内容。这个文件遵循 Robots…...