【AR】使用深度API实现虚实遮挡

遮挡效果

本段描述摘自 https://developers.google.cn/ar/develop/depth

遮挡是深度API的应用之一。

遮挡(即准确渲染虚拟物体在现实物体后面)对于沉浸式 AR 体验至关重要。

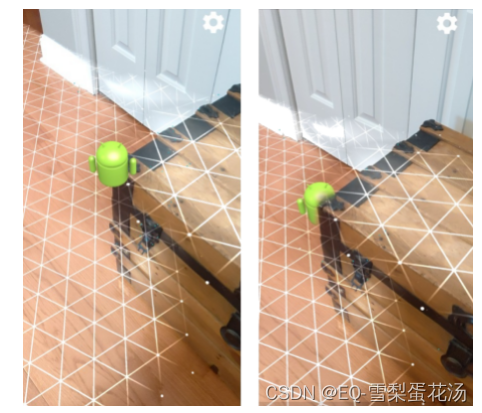

参考下图,假设场景中有一个Andy,用户可能需要放置在包含门边有后备箱的场景中。渲染时没有遮挡,Andy 会不切实际地与树干边缘重叠。如果您使用场景的深度来了解虚拟 Andy 相对于木箱等周围环境的距离,就可以准确地渲染 Andy 的遮挡效果,使其在周围环境中看起来更逼真。

图片源自 https://developers.google.cn/ar/develop/depth

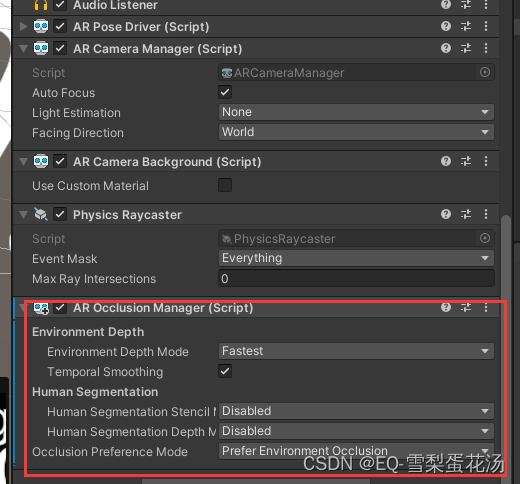

使用ARFoundation

ARFoundation中提供了AROcclusionManager脚本,在AR Session Origin的AR Camera对象上挂载该脚本即可。在场景启动后,会自动启用虚实遮挡(当然Depth Mode 不能选择的是disable)。

AROcclusionManager脚本内容如下:

using System;

using System.Collections.Generic;

using Unity.Collections;

using UnityEngine.Serialization;

using UnityEngine.XR.ARSubsystems;

using UnityEngine.Rendering;namespace UnityEngine.XR.ARFoundation

{/// <summary>/// The manager for the occlusion subsystem./// </summary>[DisallowMultipleComponent][DefaultExecutionOrder(ARUpdateOrder.k_OcclusionManager)][HelpURL(HelpUrls.ApiWithNamespace + nameof(AROcclusionManager) + ".html")]public sealed class AROcclusionManager :SubsystemLifecycleManager<XROcclusionSubsystem, XROcclusionSubsystemDescriptor, XROcclusionSubsystem.Provider>{/// <summary>/// The list of occlusion texture infos./// </summary>/// <value>/// The list of occlusion texture infos./// </value>readonly List<ARTextureInfo> m_TextureInfos = new List<ARTextureInfo>();/// <summary>/// The list of occlusion textures./// </summary>/// <value>/// The list of occlusion textures./// </value>readonly List<Texture2D> m_Textures = new List<Texture2D>();/// <summary>/// The list of occlusion texture property IDs./// </summary>/// <value>/// The list of occlusion texture property IDs./// </value>readonly List<int> m_TexturePropertyIds = new List<int>();/// <summary>/// The human stencil texture info./// </summary>/// <value>/// The human stencil texture info./// </value>ARTextureInfo m_HumanStencilTextureInfo;/// <summary>/// The human depth texture info./// </summary>/// <value>/// The human depth texture info./// </value>ARTextureInfo m_HumanDepthTextureInfo;/// <summary>/// The environment depth texture info./// </summary>/// <value>/// The environment depth texture info./// </value>ARTextureInfo m_EnvironmentDepthTextureInfo;/// <summary>/// The environment depth confidence texture info./// </summary>/// <value>/// The environment depth confidence texture info./// </value>ARTextureInfo m_EnvironmentDepthConfidenceTextureInfo;/// <summary>/// An event which fires each time an occlusion camera frame is received./// </summary>public event Action<AROcclusionFrameEventArgs> frameReceived;/// <summary>/// The mode for generating the human segmentation stencil texture./// This method is obsolete./// Use <see cref="requestedHumanStencilMode"/>/// or <see cref="currentHumanStencilMode"/> instead./// </summary>[Obsolete("Use requestedSegmentationStencilMode or currentSegmentationStencilMode instead. (2020-01-14)")]public HumanSegmentationStencilMode humanSegmentationStencilMode{get => m_HumanSegmentationStencilMode;set => requestedHumanStencilMode = value;}/// <summary>/// The requested mode for generating the human segmentation stencil texture./// </summary>public HumanSegmentationStencilMode requestedHumanStencilMode{get => subsystem?.requestedHumanStencilMode ?? m_HumanSegmentationStencilMode;set{m_HumanSegmentationStencilMode = value;if (enabled && descriptor?.humanSegmentationStencilImageSupported == Supported.Supported){subsystem.requestedHumanStencilMode = value;}}}/// <summary>/// Get the current mode in use for generating the human segmentation stencil mode./// </summary>public HumanSegmentationStencilMode currentHumanStencilMode => subsystem?.currentHumanStencilMode ?? HumanSegmentationStencilMode.Disabled;[SerializeField][Tooltip("The mode for generating human segmentation stencil texture.\n\n"+ "Disabled -- No human stencil texture produced.\n"+ "Fastest -- Minimal rendering quality. Minimal frame computation.\n"+ "Medium -- Medium rendering quality. Medium frame computation.\n"+ "Best -- Best rendering quality. Increased frame computation.")]HumanSegmentationStencilMode m_HumanSegmentationStencilMode = HumanSegmentationStencilMode.Disabled;/// <summary>/// The mode for generating the human segmentation depth texture./// This method is obsolete./// Use <see cref="requestedHumanDepthMode"/>/// or <see cref="currentHumanDepthMode"/> instead./// </summary>[Obsolete("Use requestedSegmentationDepthMode or currentSegmentationDepthMode instead. (2020-01-15)")]public HumanSegmentationDepthMode humanSegmentationDepthMode{get => m_HumanSegmentationDepthMode;set => requestedHumanDepthMode = value;}/// <summary>/// Get or set the requested human segmentation depth mode./// </summary>public HumanSegmentationDepthMode requestedHumanDepthMode{get => subsystem?.requestedHumanDepthMode ?? m_HumanSegmentationDepthMode;set{m_HumanSegmentationDepthMode = value;if (enabled && descriptor?.humanSegmentationDepthImageSupported == Supported.Supported){subsystem.requestedHumanDepthMode = value;}}}/// <summary>/// Get the current human segmentation depth mode in use by the subsystem./// </summary>public HumanSegmentationDepthMode currentHumanDepthMode => subsystem?.currentHumanDepthMode ?? HumanSegmentationDepthMode.Disabled;[SerializeField][Tooltip("The mode for generating human segmentation depth texture.\n\n"+ "Disabled -- No human depth texture produced.\n"+ "Fastest -- Minimal rendering quality. Minimal frame computation.\n"+ "Best -- Best rendering quality. Increased frame computation.")]HumanSegmentationDepthMode m_HumanSegmentationDepthMode = HumanSegmentationDepthMode.Disabled;/// <summary>/// Get or set the requested environment depth mode./// </summary>public EnvironmentDepthMode requestedEnvironmentDepthMode{get => subsystem?.requestedEnvironmentDepthMode ?? m_EnvironmentDepthMode;set{m_EnvironmentDepthMode = value;if (enabled && descriptor?.environmentDepthImageSupported == Supported.Supported){subsystem.requestedEnvironmentDepthMode = value;}}}/// <summary>/// Get the current environment depth mode in use by the subsystem./// </summary>public EnvironmentDepthMode currentEnvironmentDepthMode => subsystem?.currentEnvironmentDepthMode ?? EnvironmentDepthMode.Disabled;[SerializeField][Tooltip("The mode for generating the environment depth texture.\n\n"+ "Disabled -- No environment depth texture produced.\n"+ "Fastest -- Minimal rendering quality. Minimal frame computation.\n"+ "Medium -- Medium rendering quality. Medium frame computation.\n"+ "Best -- Best rendering quality. Increased frame computation.")]EnvironmentDepthMode m_EnvironmentDepthMode = EnvironmentDepthMode.Fastest;[SerializeField]bool m_EnvironmentDepthTemporalSmoothing = true;/// <summary>/// Whether temporal smoothing should be applied to the environment depth image. Query for support with/// [environmentDepthTemporalSmoothingSupported](xref:UnityEngine.XR.ARSubsystems.XROcclusionSubsystemDescriptor.environmentDepthTemporalSmoothingSupported)./// </summary>/// <value>When `true`, temporal smoothing is applied to the environment depth image. Otherwise, no temporal smoothing is applied.</value>public bool environmentDepthTemporalSmoothingRequested{get => subsystem?.environmentDepthTemporalSmoothingRequested ?? m_EnvironmentDepthTemporalSmoothing;set{m_EnvironmentDepthTemporalSmoothing = value;if (enabled && descriptor?.environmentDepthTemporalSmoothingSupported == Supported.Supported){subsystem.environmentDepthTemporalSmoothingRequested = value;}}}/// <summary>/// Whether temporal smoothing is applied to the environment depth image. Query for support with/// [environmentDepthTemporalSmoothingSupported](xref:UnityEngine.XR.ARSubsystems.XROcclusionSubsystemDescriptor.environmentDepthTemporalSmoothingSupported)./// </summary>/// <value>Read Only.</value>public bool environmentDepthTemporalSmoothingEnabled => subsystem?.environmentDepthTemporalSmoothingEnabled ?? false;/// <summary>/// Get or set the requested occlusion preference mode./// </summary>public OcclusionPreferenceMode requestedOcclusionPreferenceMode{get => subsystem?.requestedOcclusionPreferenceMode ?? m_OcclusionPreferenceMode;set{m_OcclusionPreferenceMode = value;if (enabled && subsystem != null){subsystem.requestedOcclusionPreferenceMode = value;}}}/// <summary>/// Get the current occlusion preference mode in use by the subsystem./// </summary>public OcclusionPreferenceMode currentOcclusionPreferenceMode => subsystem?.currentOcclusionPreferenceMode ?? OcclusionPreferenceMode.PreferEnvironmentOcclusion;[SerializeField][Tooltip("If both environment texture and human stencil & depth textures are available, this mode specifies which should be used for occlusion.")]OcclusionPreferenceMode m_OcclusionPreferenceMode = OcclusionPreferenceMode.PreferEnvironmentOcclusion;/// <summary>/// The human segmentation stencil texture./// </summary>/// <value>/// The human segmentation stencil texture, if any. Otherwise, <c>null</c>./// </value>public Texture2D humanStencilTexture{get{if (descriptor?.humanSegmentationStencilImageSupported == Supported.Supported &&subsystem.TryGetHumanStencil(out var humanStencilDescriptor)){m_HumanStencilTextureInfo = ARTextureInfo.GetUpdatedTextureInfo(m_HumanStencilTextureInfo,humanStencilDescriptor);DebugAssert.That(((m_HumanStencilTextureInfo.descriptor.dimension == TextureDimension.Tex2D)|| (m_HumanStencilTextureInfo.descriptor.dimension == TextureDimension.None)))?.WithMessage("Human Stencil Texture needs to be a Texture 2D, but instead is "+ $"{m_HumanStencilTextureInfo.descriptor.dimension.ToString()}.");return m_HumanStencilTextureInfo.texture as Texture2D;}return null;}}/// <summary>/// Attempt to get the latest human stencil CPU image. This provides directly access to the raw pixel data./// </summary>/// <remarks>/// The `XRCpuImage` must be disposed to avoid resource leaks./// </remarks>/// <param name="cpuImage">If this method returns `true`, an acquired `XRCpuImage`.</param>/// <returns>Returns `true` if the CPU image was acquired. Returns `false` otherwise.</returns>public bool TryAcquireHumanStencilCpuImage(out XRCpuImage cpuImage){if (descriptor?.humanSegmentationStencilImageSupported == Supported.Supported){return subsystem.TryAcquireHumanStencilCpuImage(out cpuImage);}cpuImage = default;return false;}/// <summary>/// The human segmentation depth texture./// </summary>/// <value>/// The human segmentation depth texture, if any. Otherwise, <c>null</c>./// </value>public Texture2D humanDepthTexture{get{if (descriptor?.humanSegmentationDepthImageSupported == Supported.Supported &&subsystem.TryGetHumanDepth(out var humanDepthDescriptor)){m_HumanDepthTextureInfo = ARTextureInfo.GetUpdatedTextureInfo(m_HumanDepthTextureInfo,humanDepthDescriptor);DebugAssert.That(m_HumanDepthTextureInfo.descriptor.dimension == TextureDimension.Tex2D|| m_HumanDepthTextureInfo.descriptor.dimension == TextureDimension.None)?.WithMessage("Human Depth Texture needs to be a Texture 2D, but instead is "+ $"{m_HumanDepthTextureInfo.descriptor.dimension.ToString()}.");return m_HumanDepthTextureInfo.texture as Texture2D;}return null;}}/// <summary>/// Attempt to get the latest environment depth confidence CPU image. This provides direct access to the/// raw pixel data./// </summary>/// <remarks>/// The `XRCpuImage` must be disposed to avoid resource leaks./// </remarks>/// <param name="cpuImage">If this method returns `true`, an acquired `XRCpuImage`.</param>/// <returns>Returns `true` if the CPU image was acquired. Returns `false` otherwise.</returns>public bool TryAcquireEnvironmentDepthConfidenceCpuImage(out XRCpuImage cpuImage){if (descriptor?.environmentDepthConfidenceImageSupported == Supported.Supported){return subsystem.TryAcquireEnvironmentDepthConfidenceCpuImage(out cpuImage);}cpuImage = default;return false;}/// <summary>/// The environment depth confidence texture./// </summary>/// <value>/// The environment depth confidence texture, if any. Otherwise, <c>null</c>./// </value>public Texture2D environmentDepthConfidenceTexture{get{if (descriptor?.environmentDepthConfidenceImageSupported == Supported.Supported&& subsystem.TryGetEnvironmentDepthConfidence(out var environmentDepthConfidenceDescriptor)){m_EnvironmentDepthConfidenceTextureInfo = ARTextureInfo.GetUpdatedTextureInfo(m_EnvironmentDepthConfidenceTextureInfo,environmentDepthConfidenceDescriptor);DebugAssert.That(m_EnvironmentDepthConfidenceTextureInfo.descriptor.dimension == TextureDimension.Tex2D|| m_EnvironmentDepthConfidenceTextureInfo.descriptor.dimension == TextureDimension.None)?.WithMessage("Environment depth confidence texture needs to be a Texture 2D, but instead is "+ $"{m_EnvironmentDepthConfidenceTextureInfo.descriptor.dimension.ToString()}.");return m_EnvironmentDepthConfidenceTextureInfo.texture as Texture2D;}return null;}}/// <summary>/// Attempt to get the latest human depth CPU image. This provides direct access to the raw pixel data./// </summary>/// <remarks>/// The `XRCpuImage` must be disposed to avoid resource leaks./// </remarks>/// <param name="cpuImage">If this method returns `true`, an acquired `XRCpuImage`.</param>/// <returns>Returns `true` if the CPU image was acquired. Returns `false` otherwise.</returns>public bool TryAcquireHumanDepthCpuImage(out XRCpuImage cpuImage){if (descriptor?.humanSegmentationDepthImageSupported == Supported.Supported){return subsystem.TryAcquireHumanDepthCpuImage(out cpuImage);}cpuImage = default;return false;}/// <summary>/// The environment depth texture./// </summary>/// <value>/// The environment depth texture, if any. Otherwise, <c>null</c>./// </value>public Texture2D environmentDepthTexture{get{if (descriptor?.environmentDepthImageSupported == Supported.Supported&& subsystem.TryGetEnvironmentDepth(out var environmentDepthDescriptor)){m_EnvironmentDepthTextureInfo = ARTextureInfo.GetUpdatedTextureInfo(m_EnvironmentDepthTextureInfo,environmentDepthDescriptor);DebugAssert.That(m_EnvironmentDepthTextureInfo.descriptor.dimension == TextureDimension.Tex2D|| m_EnvironmentDepthTextureInfo.descriptor.dimension == TextureDimension.None)?.WithMessage("Environment depth texture needs to be a Texture 2D, but instead is "+ $"{m_EnvironmentDepthTextureInfo.descriptor.dimension.ToString()}.");return m_EnvironmentDepthTextureInfo.texture as Texture2D;}return null;}}/// <summary>/// Attempt to get the latest environment depth CPU image. This provides direct access to the raw pixel data./// </summary>/// <remarks>/// The `XRCpuImage` must be disposed to avoid resource leaks./// </remarks>/// <param name="cpuImage">If this method returns `true`, an acquired `XRCpuImage`.</param>/// <returns>Returns `true` if the CPU image was acquired. Returns `false` otherwise.</returns>public bool TryAcquireEnvironmentDepthCpuImage(out XRCpuImage cpuImage){if (descriptor?.environmentDepthImageSupported == Supported.Supported){return subsystem.TryAcquireEnvironmentDepthCpuImage(out cpuImage);}cpuImage = default;return false;}/// <summary>/// Attempt to get the latest raw environment depth CPU image. This provides direct access to the raw pixel data./// </summary>/// <remarks>/// > [!NOTE]/// > The `XRCpuImage` must be disposed to avoid resource leaks./// This differs from <see cref="TryAcquireEnvironmentDepthCpuImage"/> in that it always tries to acquire the/// raw environment depth image, whereas <see cref="TryAcquireEnvironmentDepthCpuImage"/> depends on the value/// of <see cref="environmentDepthTemporalSmoothingEnabled"/>./// </remarks>/// <param name="cpuImage">If this method returns `true`, an acquired `XRCpuImage`.</param>/// <returns>Returns `true` if the CPU image was acquired. Returns `false` otherwise.</returns>public bool TryAcquireRawEnvironmentDepthCpuImage(out XRCpuImage cpuImage){if (subsystem == null){cpuImage = default;return false;}return subsystem.TryAcquireRawEnvironmentDepthCpuImage(out cpuImage);}/// <summary>/// Attempt to get the latest smoothed environment depth CPU image. This provides direct access to/// the raw pixel data./// </summary>/// <remarks>/// > [!NOTE]/// > The `XRCpuImage` must be disposed to avoid resource leaks./// This differs from <see cref="TryAcquireEnvironmentDepthCpuImage"/> in that it always tries to acquire the/// smoothed environment depth image, whereas <see cref="TryAcquireEnvironmentDepthCpuImage"/>/// depends on the value of <see cref="environmentDepthTemporalSmoothingEnabled"/>./// </remarks>/// <param name="cpuImage">If this method returns `true`, an acquired `XRCpuImage`.</param>/// <returns>Returns `true` if the CPU image was acquired. Returns `false` otherwise.</returns>public bool TryAcquireSmoothedEnvironmentDepthCpuImage(out XRCpuImage cpuImage){if (subsystem == null){cpuImage = default;return false;}return subsystem.TryAcquireSmoothedEnvironmentDepthCpuImage(out cpuImage);}/// <summary>/// Callback before the subsystem is started (but after it is created)./// </summary>protected override void OnBeforeStart(){requestedHumanStencilMode = m_HumanSegmentationStencilMode;requestedHumanDepthMode = m_HumanSegmentationDepthMode;requestedEnvironmentDepthMode = m_EnvironmentDepthMode;requestedOcclusionPreferenceMode = m_OcclusionPreferenceMode;environmentDepthTemporalSmoothingRequested = m_EnvironmentDepthTemporalSmoothing;ResetTextureInfos();}/// <summary>/// Callback when the manager is being disabled./// </summary>protected override void OnDisable(){base.OnDisable();ResetTextureInfos();InvokeFrameReceived();}/// <summary>/// Callback as the manager is being updated./// </summary>public void Update(){if (subsystem != null){UpdateTexturesInfos();InvokeFrameReceived();requestedEnvironmentDepthMode = m_EnvironmentDepthMode;requestedHumanDepthMode = m_HumanSegmentationDepthMode;requestedHumanStencilMode = m_HumanSegmentationStencilMode;requestedOcclusionPreferenceMode = m_OcclusionPreferenceMode;environmentDepthTemporalSmoothingRequested = m_EnvironmentDepthTemporalSmoothing;}}void ResetTextureInfos(){m_HumanStencilTextureInfo.Reset();m_HumanDepthTextureInfo.Reset();m_EnvironmentDepthTextureInfo.Reset();m_EnvironmentDepthConfidenceTextureInfo.Reset();}/// <summary>/// Pull the texture descriptors from the occlusion subsystem, and update the texture information maintained by/// this component./// </summary>void UpdateTexturesInfos(){var textureDescriptors = subsystem.GetTextureDescriptors(Allocator.Temp);try{int numUpdated = Math.Min(m_TextureInfos.Count, textureDescriptors.Length);// Update the existing textures that are in common between the two arrays.for (int i = 0; i < numUpdated; ++i){m_TextureInfos[i] = ARTextureInfo.GetUpdatedTextureInfo(m_TextureInfos[i], textureDescriptors[i]);}// If there are fewer textures in the current frame than we had previously, destroy any remaining unneeded// textures.if (numUpdated < m_TextureInfos.Count){for (int i = numUpdated; i < m_TextureInfos.Count; ++i){m_TextureInfos[i].Reset();}m_TextureInfos.RemoveRange(numUpdated, (m_TextureInfos.Count - numUpdated));}// Else, if there are more textures in the current frame than we have previously, add new textures for any// additional descriptors.else if (textureDescriptors.Length > m_TextureInfos.Count){for (int i = numUpdated; i < textureDescriptors.Length; ++i){m_TextureInfos.Add(new ARTextureInfo(textureDescriptors[i]));}}}finally{if (textureDescriptors.IsCreated){textureDescriptors.Dispose();}}}/// <summary>/// Invoke the occlusion frame received event with the updated textures and texture property IDs./// </summary>void InvokeFrameReceived(){if (frameReceived != null){int numTextureInfos = m_TextureInfos.Count;m_Textures.Clear();m_TexturePropertyIds.Clear();m_Textures.Capacity = numTextureInfos;m_TexturePropertyIds.Capacity = numTextureInfos;for (int i = 0; i < numTextureInfos; ++i){DebugAssert.That(m_TextureInfos[i].descriptor.dimension == TextureDimension.Tex2D)?.WithMessage($"Texture needs to be a Texture 2D, but instead is {m_TextureInfos[i].descriptor.dimension.ToString()}.");m_Textures.Add((Texture2D)m_TextureInfos[i].texture);m_TexturePropertyIds.Add(m_TextureInfos[i].descriptor.propertyNameId);}subsystem.GetMaterialKeywords(out List<string> enabledMaterialKeywords, out List<string>disabledMaterialKeywords);AROcclusionFrameEventArgs args = new AROcclusionFrameEventArgs();args.textures = m_Textures;args.propertyNameIds = m_TexturePropertyIds;args.enabledMaterialKeywords = enabledMaterialKeywords;args.disabledMaterialKeywords = disabledMaterialKeywords;frameReceived(args);}}}

}使用EQ-R实现

EQ-R

简介

EQ-Renderer是EQ基于sceneform(filament)扩展的一个用于安卓端的三维AR渲染器。

主要功能

它包含sceneform_v1.16.0中九成接口(剔除了如sfb资源加载等已弃用的内容),扩展了视频背景视图、解决了sceneform模型加载的内存泄漏问题、集成了AREngine和ORB-SLAM3、添加了场景坐标与地理坐标系(CGCS-2000)的转换方法。

注:由于精力有限,文档和示例都不完善。sceneform相关请直接参考谷歌官方文档,扩展部分接口说明请移步git联系。

相关链接

Git仓库

- EQ-Renderer的示例工程

码云

- EQ-Renderer的示例工程

EQ-R相关文档

- 文档目录

使用示例

需要在安卓清单中添加其值设为“com.google.ar.core.depth>

接口调用

在使用ARSceneLayout 创建AR布局控件时,在适当的地方修改深度遮挡模式即可,示例如下。

ARSceneLayout layout = new ARSceneLayout(this);//使用普通3d视图

layout.getSceneView.getCameraStream()setDepthOcclusionMode(DepthOcclusionMode.DEPTH_OCCLUSION_ENABLED);

实现方式

获取深度图和相机帧,在着色器中根据深度数据处理。

EQ-R基于filament

filament材质如下:

material {name : depth,shadingModel : unlit,blending : opaque,vertexDomain : device,parameters : [{type : samplerExternal,name : cameraTexture},{type : sampler2d,name : depthTexture},{type : float4x4,name : uvTransform}],requires : [uv0]

}fragment {void material(inout MaterialInputs material) {prepareMaterial(material);material.baseColor.rgb = inverseTonemapSRGB(texture(materialParams_cameraTexture, getUV0()).rgb);vec2 packed_depth = texture(materialParams_depthTexture, getUV0()).xy;float depth_mm = dot(packed_depth, vec2(255.f, 256.f * 255.f));vec4 view = mulMat4x4Float3(getClipFromViewMatrix(), vec3(0.f, 0.f, -depth_mm / 1000.f));float ndc_depth = view.z / view.w;gl_FragDepth = 1.f - ((ndc_depth + 1.f) / 2.f);}

}vertex {void materialVertex(inout MaterialVertexInputs material) {material.uv0 = mulMat4x4Float3(materialParams.uvTransform, vec3(material.uv0.x, material.uv0.y, 0.f)).xy;}

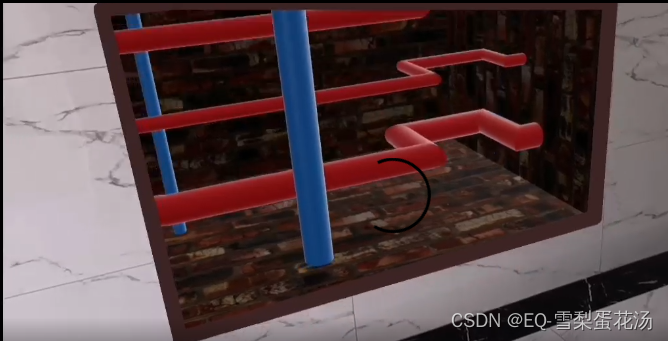

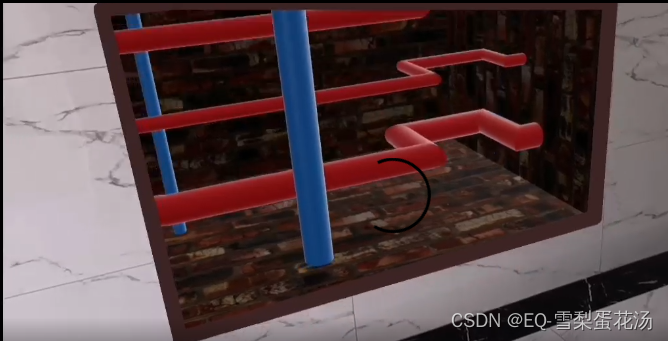

} 示例应用

之前基于Android(Java)做过的示例应用。

管线巡检示例 :开挖显示、卷帘效果…

相关文章:

【AR】使用深度API实现虚实遮挡

遮挡效果 本段描述摘自 https://developers.google.cn/ar/develop/depth 遮挡是深度API的应用之一。 遮挡(即准确渲染虚拟物体在现实物体后面)对于沉浸式 AR 体验至关重要。 参考下图,假设场景中有一个Andy,用户可能需要放置在包含…...

python-pytorch实现skip-gram 0.5.001

python-pytorch实现skip-gram 0.5.000 数据加载、切词准备训练数据准备模型和参数训练保存模型加载模型简单预测获取词向量画一个词向量的分布图使用词向量计算相似度参考数据加载、切词 按照链接https://blog.csdn.net/m0_60688978/article/details/137538274操作后,可以获得…...

C语言:约瑟夫环问题详解

前言 哈喽,宝子们!本期为大家带来一道C语言循环链表的经典算法题(约瑟夫环)。 目录 1.什么是约瑟夫环2.解决方案思路3.创建链表头结点4.创建循环链表5.删除链表6.完整代码实现 1.什么是约瑟夫环 据说著名历史学家Josephus有过以下…...

【刷题篇】回溯算法(二)

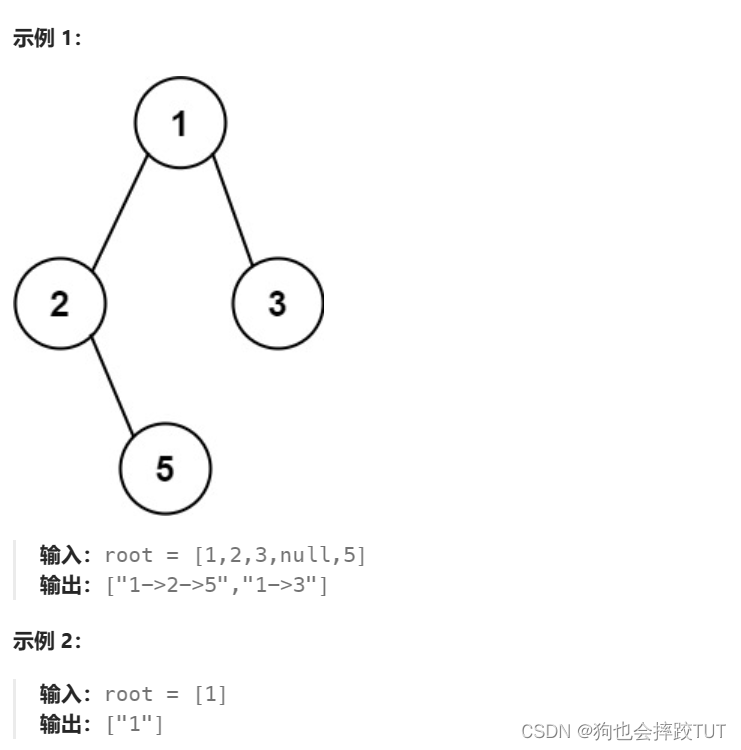

文章目录 1、求根节点到叶节点数字之和2、二叉树剪枝3、验证二叉搜索树4、二叉搜索树中第K小的元素5、二叉树的所有路径 1、求根节点到叶节点数字之和 给你一个二叉树的根节点 root ,树中每个节点都存放有一个 0 到 9 之间的数字。 每条从根节点到叶节点的路径都代表…...

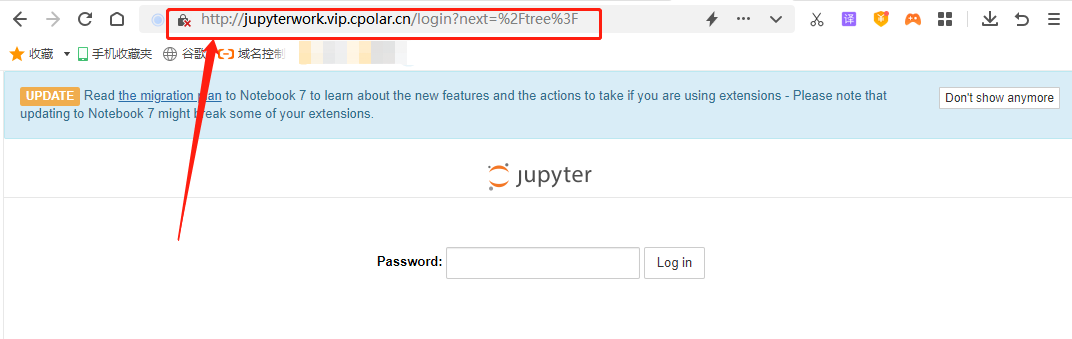

Windows系统本地部署Jupyter Notebook并实现公网访问编辑笔记

文章目录 1.前言2.Jupyter Notebook的安装2.1 Jupyter Notebook下载安装2.2 Jupyter Notebook的配置2.3 Cpolar下载安装 3.Cpolar端口设置3.1 Cpolar云端设置3.2.Cpolar本地设置 4.公网访问测试5.结语 1.前言 在数据分析工作中,使用最多的无疑就是各种函数、图表、…...

Ansible 实战Shell 插件和模块工具)

自动化运维(二十七)Ansible 实战Shell 插件和模块工具

Ansible 支持多种类型的插件,这些插件可以帮助你扩展和定制 Ansible 的功能。每种插件类型都有其特定的用途和应用场景。今天我们一起学习Shell 插件和模块工具。 一、 Shell 插件 Ansible shell 插件决定了 Ansible 如何在远程系统上执行命令。这些插件非常关键&a…...

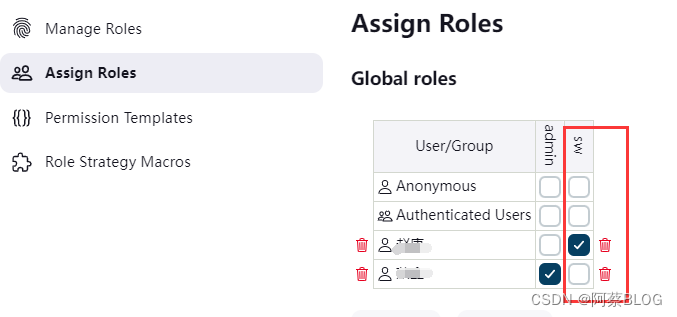

Jenkins使用-绑定域控与用户授权

一、Jenkins安装完成后,企业中使用,首先需要绑定域控以方便管理。 操作方法: 1、备份配置文件,防止域控绑定错误或授权策略选择不对,造成没办法登录,或登录后没有权限操作。 [roottest jenkins]# mkdir ba…...

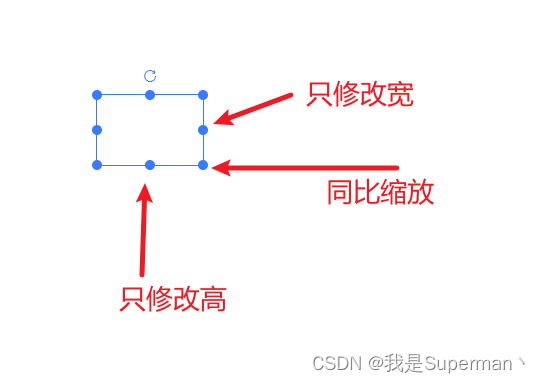

【前端】es-drager 图片同比缩放 缩放比 只修改宽 只修改高

【前端】es-drager 图片同比缩放 缩放比 ES Drager 拖拽组件 (vangleer.github.io) 核心代码 //初始宽 let width ref(108)//初始高 let height ref(72)//以下两个变量 用来区分是单独的修改宽 还是高 或者是同比 //缩放开始时的宽 let oldWidth 0 //缩放开始时的高 let o…...

蓝桥杯第十四届蓝桥杯大赛软件赛省赛C/C++ 大学 A 组题解

1.幸运数 题目链接:0幸运数 - 蓝桥云课 (lanqiao.cn) #include<bits/stdc.h> using namespace std; bool deng(string& num){int n num.size();int qian 0,hou 0;for(int i0;i<n/2;i) qian (num[i]-0);for(int in/2;i<n;i) hou (num[i]-0);r…...

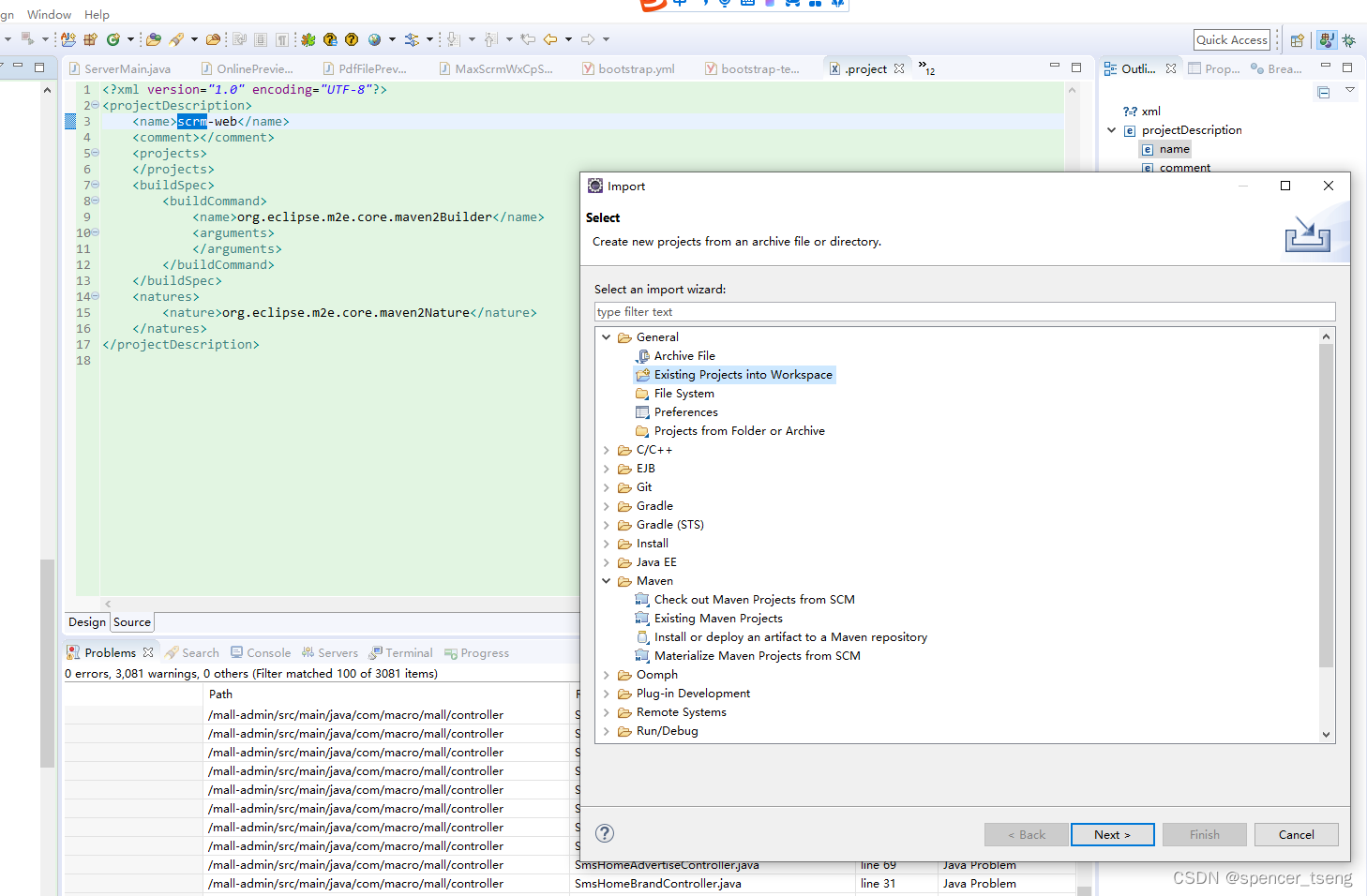

eclipse .project

.project <?xml version"1.0" encoding"UTF-8"?> <projectDescription> <name>scrm-web</name> <comment></comment> <projects> </projects> <buildSpec> <buil…...

react的闭包陷阱

React 的闭包陷阱是指在使用 React Hooks 时,由于闭包特性导致在某些函数或异步操作中无法正确访问到更新后状态或 prop 的值,而仍旧使用了旧值。下面通过几个代码示例来具体说明闭包陷阱的几种常见情形: 示例 1: useState 闭包陷阱 import…...

神经网络解决回归问题(更新ing)

神经网络应用于回归问题 优势是什么???生成数据集:通用神经网络拟合函数调整不同参数对比结果初始代码结果调整神经网络结构调整激活函数调整迭代次数增加早停法变量归一化处理正则化系数调整学习率调整 总结ingfnn.py进行计算&am…...

【小红书校招场景题】12306抢票系统

1 坐过高铁吧,有抢过票吗。你说说抢票系统对于后端开发人员而言会有哪些情况? 对于后端开发人员来说,开发和维护一个高铁抢票系统(如中国的12306)会面临一系列的挑战和情况。这些挑战主要涉及系统的性能、稳定性、数据…...

)

Spring(三)

1. Spring单例Bean是不是线程安全的? Spring单例Bean默认并不是线程安全的。由于多个线程可能访问同一份Bean实例,当Bean的内部包含了可变状态(mutable state)即有可修改的成员变量时,就可能出现线程安全问题。Spring容器不会自动…...

使用element-plus中的表单验证

标签页代码如下: // 注意:el-form中的数据绑定不可以用v-model,要使用:model <el-form ref"ruleFormRef" :rules"rules" :model"userTemp" label-width"80px"><el-row :gutter"20&qu…...

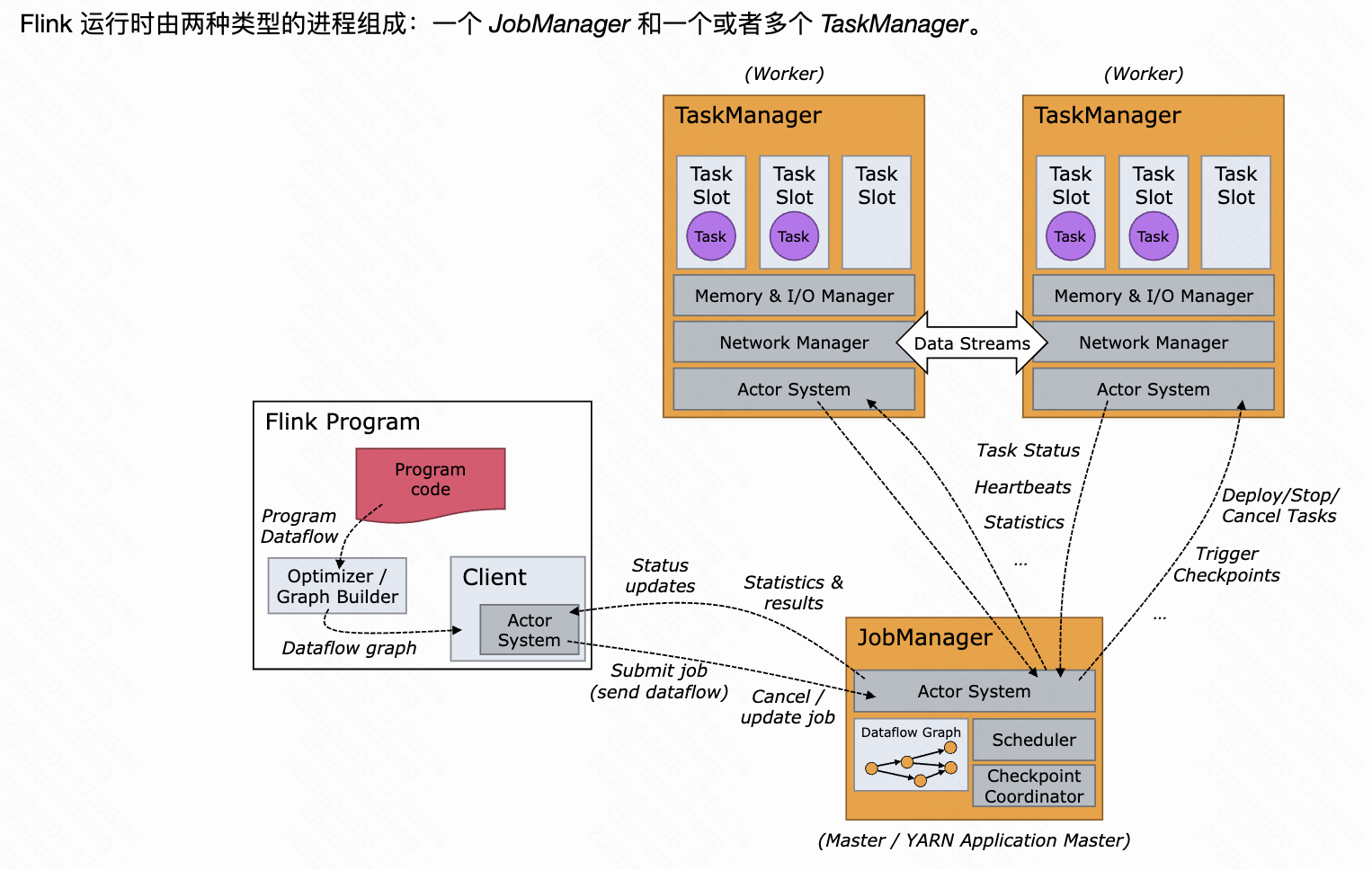

flinksql

Flink SQL 是 Apache Flink 项目中的一个重要组成部分,它允许开发者使用标准的 SQL 语言来处理流数据和批处理数据。Flink SQL 提供了一种声明式的编程范式,使得用户能够以一种简洁、高效且易于理解的方式来表达复杂的数据处理逻辑。 ### 背景 Flink SQL 的设计初衷是为了简…...

Dockerfile中 CMD和ENTRYPOINT的区别

在 Dockerfile 中,CMD 和 ENTRYPOINT 都用于指定容器启动时要执行的命令。它们之间的主要区别是: - CMD 用于定义容器启动时要执行的命令和参数,它设置的值可以被 Dockerfile 中的后续指令覆盖,包括在运行容器时传递的参数。如果…...

【TC3xx芯片】TC3xx芯片的总线内存保护

前言 广义上的内存保护,包括<<【TC3xx芯片】TC3xx芯片MPU介绍>>一文介绍的MPU(常规狭义上的内存保护),<<【TC3xx芯片】TC3xx芯片的Endinit功能详解>>一文中介绍的寄存器的EndInit保护,<<【TC3xx芯片】TC3xx芯片ACCEN寄存器保护详解>>一…...

抖音小店选品必经五个阶段,看你到哪一步了,直接决定店铺爆单率

大家好,我是电商笨笨熊 新手选品必经的阶段就是迷茫期,不知道怎么选品,在哪里选品,选择什么样的品; 而有些玩家也会在进入店铺后疯狂选品,但是上架的商品没有销量; 而这些都是每个玩家都要经…...

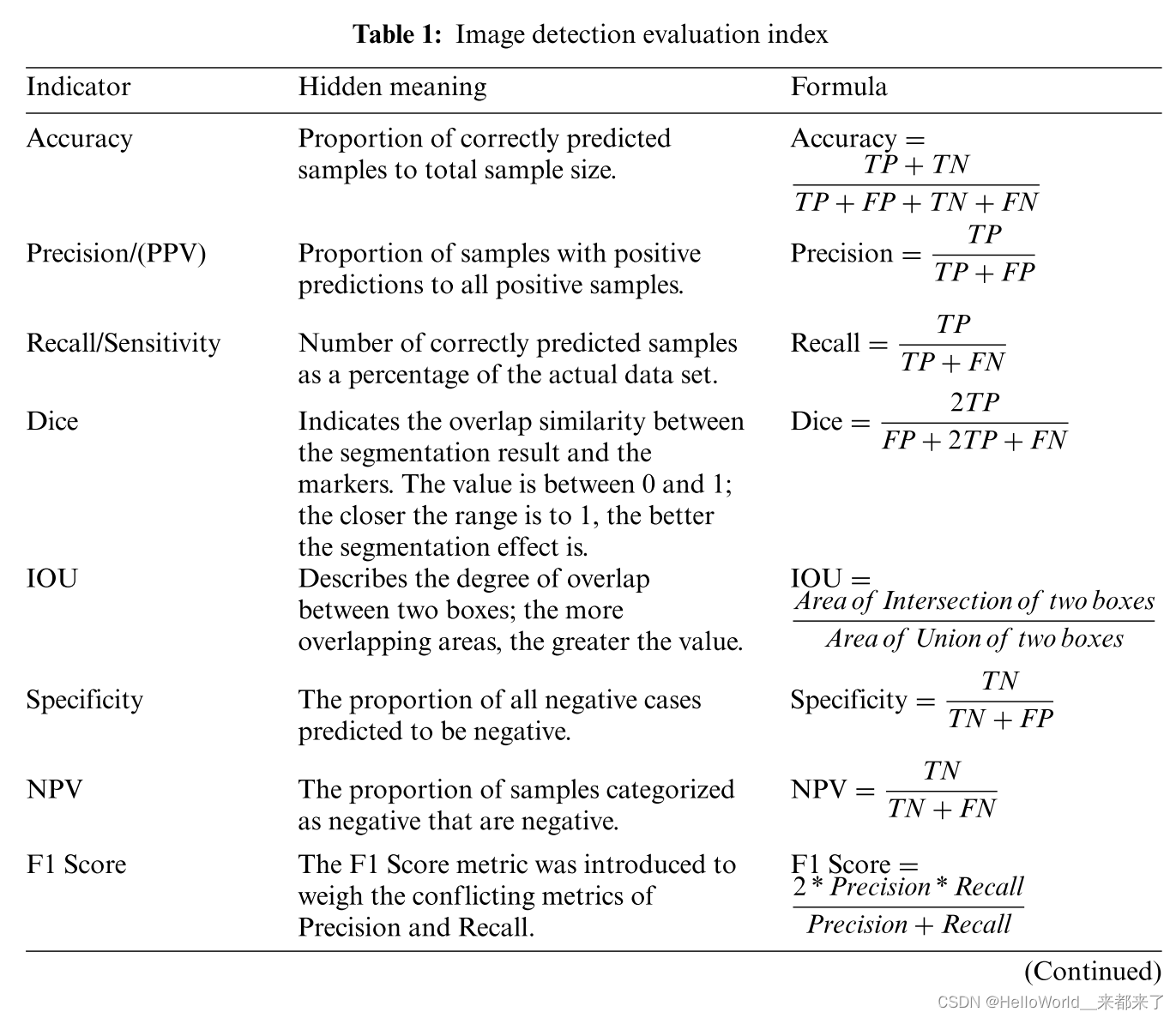

ML在骨科手术术前、书中、术后方法应用综述【含数据集】

达芬奇V手术机器人 近年来,人工智能(AI)彻底改变了人们的生活。人工智能早就在外科领域取得了突破性进展。然而,人工智能在骨科中的应用研究尚处于探索阶段。 本文综述了近年来深度学习和机器学习应用于骨科图像检测的最新成果,描述了其贡献、优势和不足。以及未来每项研究…...

《基于Apache Flink的流处理》笔记

思维导图 1-3 章 4-7章 8-11 章 参考资料 源码: https://github.com/streaming-with-flink 博客 https://flink.apache.org/bloghttps://www.ververica.com/blog 聚会及会议 https://flink-forward.orghttps://www.meetup.com/topics/apache-flink https://n…...

docker 部署发现spring.profiles.active 问题

报错: org.springframework.boot.context.config.InvalidConfigDataPropertyException: Property spring.profiles.active imported from location class path resource [application-test.yml] is invalid in a profile specific resource [origin: class path re…...

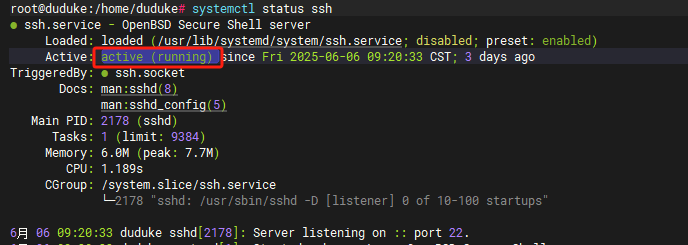

VM虚拟机网络配置(ubuntu24桥接模式):配置静态IP

编辑-虚拟网络编辑器-更改设置 选择桥接模式,然后找到相应的网卡(可以查看自己本机的网络连接) windows连接的网络点击查看属性 编辑虚拟机设置更改网络配置,选择刚才配置的桥接模式 静态ip设置: 我用的ubuntu24桌…...

IP如何挑?2025年海外专线IP如何购买?

你花了时间和预算买了IP,结果IP质量不佳,项目效率低下不说,还可能带来莫名的网络问题,是不是太闹心了?尤其是在面对海外专线IP时,到底怎么才能买到适合自己的呢?所以,挑IP绝对是个技…...

无人机侦测与反制技术的进展与应用

国家电网无人机侦测与反制技术的进展与应用 引言 随着无人机(无人驾驶飞行器,UAV)技术的快速发展,其在商业、娱乐和军事领域的广泛应用带来了新的安全挑战。特别是对于关键基础设施如电力系统,无人机的“黑飞”&…...

【Android】Android 开发 ADB 常用指令

查看当前连接的设备 adb devices 连接设备 adb connect 设备IP 断开已连接的设备 adb disconnect 设备IP 安装应用 adb install 安装包的路径 卸载应用 adb uninstall 应用包名 查看已安装的应用包名 adb shell pm list packages 查看已安装的第三方应用包名 adb shell pm list…...

Scrapy-Redis分布式爬虫架构的可扩展性与容错性增强:基于微服务与容器化的解决方案

在大数据时代,海量数据的采集与处理成为企业和研究机构获取信息的关键环节。Scrapy-Redis作为一种经典的分布式爬虫架构,在处理大规模数据抓取任务时展现出强大的能力。然而,随着业务规模的不断扩大和数据抓取需求的日益复杂,传统…...

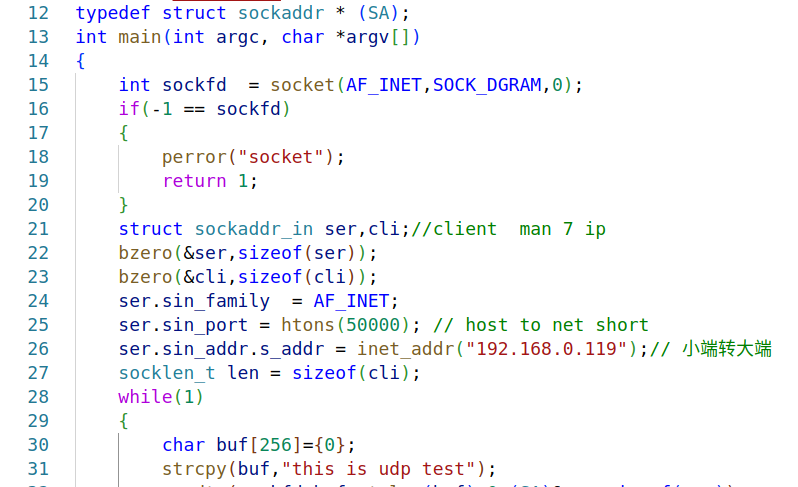

嵌入式学习之系统编程(九)OSI模型、TCP/IP模型、UDP协议网络相关编程(6.3)

目录 一、网络编程--OSI模型 二、网络编程--TCP/IP模型 三、网络接口 四、UDP网络相关编程及主要函数 编辑编辑 UDP的特征 socke函数 bind函数 recvfrom函数(接收函数) sendto函数(发送函数) 五、网络编程之 UDP 用…...

第八部分:阶段项目 6:构建 React 前端应用

现在,是时候将你学到的 React 基础知识付诸实践,构建一个简单的前端应用来模拟与后端 API 的交互了。在这个阶段,你可以先使用模拟数据,或者如果你的后端 API(阶段项目 5)已经搭建好,可以直接连…...

Unity VR/MR开发-VR开发与传统3D开发的差异

视频讲解链接:【XR马斯维】VR/MR开发与传统3D开发的差异【UnityVR/MR开发教程--入门】_哔哩哔哩_bilibili...