Deep Learning Day 27: Sneaker Recognition with PyTorch

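Contents

- Deep Learning Day 27: Sneaker Recognition with PyTorch

- I. Check the Colab machine configuration

- II. Preparation

- 1. Import dependencies and set up the GPU

- 2. Load the data

- III. Build the CNN

- IV. Train the model

- 1. Write the training function

- 2. Write the test function

- 3. Set up a dynamic learning rate

- 4. Run the training

- V. Visualize the results

- VI. Predict a specified image

- VII. Save the model

- VIII. Revisions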

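🍨 This post is a study log from the 🔗365天深度学习训练营 (365-Day Deep Learning Training Camp)

🍦 Reference article: Pytorch实战 | 第P5周:运动鞋识别 (PyTorch in Practice | Week P5: Sneaker Recognition)

🍖 Original author: K同学啊 (tutoring and custom projects available)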

I. Check the Colab machine configuration

print("============ GPU info ================")
# Show GPU info (Colab shell command)
!/opt/bin/nvidia-smi
print("============== PyTorch version ==============")
# Show the PyTorch version
import torch
print(torch.__version__)
print("============ VM disk capacity ================")
# Show the virtual machine's disk capacity
!df -lh
print("============ CPU configuration ================")
# Show the CPU model
!cat /proc/cpuinfo | grep model\ name
print("============= Memory capacity ===============")
# Show the total memory
!cat /proc/meminfo | grep MemTotal

============ GPU info ================
Fri Mar 17 11:27:06 2023
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 525.85.12 Driver Version: 525.85.12 CUDA Version: 12.0 |
|-------------------------------+----------------------+----------------------+
| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |
| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |
| | | MIG M. |
|===============================+======================+======================|
| 0 Tesla T4 Off | 00000000:00:04.0 Off | 0 |
| N/A 69C P0 31W / 70W | 1241MiB / 15360MiB | 0% Default |
| | | N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes: |
| GPU GI CI PID Type Process name GPU Memory |
| ID ID Usage |
|=============================================================================|
+-----------------------------------------------------------------------------+
============== PyTorch version ==============
1.13.1+cu116
============ VM disk capacity ================
Filesystem Size Used Avail Use% Mounted on
overlay 79G 26G 53G 33% /
tmpfs 64M 0 64M 0% /dev
shm 5.7G 19M 5.7G 1% /dev/shm
/dev/root 2.0G 1.1G 841M 58% /usr/sbin/docker-init
/dev/sda1 78G 46G 33G 59% /opt/bin/.nvidia
tmpfs 6.4G 36K 6.4G 1% /var/colab
tmpfs 6.4G 0 6.4G 0% /proc/acpi
tmpfs 6.4G 0 6.4G 0% /proc/scsi
tmpfs 6.4G 0 6.4G 0% /sys/firmware
drive 15G 3.9G 12G 26% /content/drive
============ CPU configuration ================
model name : Intel(R) Xeon(R) CPU @ 2.20GHz
model name : Intel(R) Xeon(R) CPU @ 2.20GHz
============= Memory capacity ===============
MemTotal: 13297200 kB

II. Preparation

1. Import dependencies and set up the GPU

Use the GPU if one is available; otherwise fall back to the CPU.

import torch
import torch.nn as nn
import torchvision.transforms as transforms
import torchvision
from torchvision import transforms, datasets
import os, PIL, pathlib

# Use the GPU when CUDA is available, otherwise the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
device

device(type='cuda')

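The code above imports the required PyTorch libraries and selects the device.

It first imports PyTorch and several commonly used modules:

- torch: the main PyTorch library, used to define and run neural network models.

- torch.nn: PyTorch's neural network module, which provides layers, activation functions, and other building blocks.

- torchvision.transforms: the image transformation module, used to preprocess and augment images.

- torchvision: PyTorch's computer vision library, which includes datasets and models.

Next, it defines the device variable, which specifies where the model will run: the GPU if one is available, otherwise the CPU.

The final line only displays device. Defining it does not move anything by itself; the model and each batch of data still have to be moved explicitly with .to(device), as is done for the model below.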

2. Load the data

import os, PIL, random, pathlib

data_dir = '/content/drive/Othercomputers/我的笔记本电脑/深度学习/data/Day14'
data_dir = pathlib.Path(data_dir)

data_paths = list(data_dir.glob('*'))
# path.name is the last path component; at this directory depth it equals str(path).split("/")[8]
classeNames = [path.name for path in data_paths]
classeNames

['train', 'test']

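The code above inspects the dataset directory.

It first imports some commonly used libraries:

- os: a Python standard library module for working with files and directories.

- PIL: the Python Imaging Library (provided by the Pillow package), used for image processing.

- random: the Python standard library module for generating random numbers.

- pathlib: the Python standard library module for handling file paths.

Next, it defines data_dir, the path to the dataset folder, and converts it to a Path object with pathlib.Path. It then uses glob to list every entry directly under data_dir and stores the paths in data_paths. Finally, a list comprehension extracts the last component of each path into classeNames. At this directory level the entries are the train and test split folders, so classeNames is ['train', 'test'] rather than the shoe classes; the actual class names (adidas and nike) come from ImageFolder below.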
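As a small illustration of the name-extraction step, the sketch below pulls the last component out of each path in two ways. The paths are hypothetical stand-ins shaped like the ones in this post, and PurePosixPath is used so that nothing has to exist on disk.

```python
from pathlib import PurePosixPath

# Hypothetical directory layout, shortened from the real Drive path.
root = PurePosixPath("/content/data/Day14")
data_paths = [root / "train", root / "test"]

# Option 1: Path.name returns the final path component at any depth.
names = [p.name for p in data_paths]

# Option 2: splitting on "/" and taking the last piece gives the same result.
# A fixed index (such as [8]) only works for one specific directory depth.
names_by_split = [str(p).split("/")[-1] for p in data_paths]

print(names)
print(names_by_split)
```

Both approaches agree here; Path.name keeps working if the dataset is moved to a folder at a different depth.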

train_datadir = '/content/drive/Othercomputers/我的笔记本电脑/深度学习/data/Day14/train/'
test_datadir = '/content/drive/Othercomputers/我的笔记本电脑/深度学习/data/Day14/test/'

train_transforms = transforms.Compose([
    transforms.Resize([224, 224]),  # resize every input image to the same size
    transforms.ToTensor(),          # convert a PIL Image or numpy.ndarray to a tensor scaled to [0, 1]
    transforms.Normalize(           # per-channel standardization, (x - mean) / std, which helps the model converge
        mean=[0.485, 0.456, 0.406],
        std=[0.229, 0.224, 0.225])  # these values match the widely used ImageNet channel statistics
])

test_transforms = transforms.Compose([
    transforms.Resize([224, 224]),  # resize every input image to the same size
    transforms.ToTensor(),          # convert a PIL Image or numpy.ndarray to a tensor scaled to [0, 1]
    transforms.Normalize(           # per-channel standardization, (x - mean) / std, which helps the model converge
        mean=[0.485, 0.456, 0.406],
        std=[0.229, 0.224, 0.225])  # these values match the widely used ImageNet channel statistics
])

train_dataset = datasets.ImageFolder(train_datadir, transform=train_transforms)
test_dataset = datasets.ImageFolder(test_datadir, transform=test_transforms)

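The code above preprocesses the data and loads the dataset.

It first defines train_datadir and test_datadir, the folder paths of the training set and the test set.

It then uses transforms.Compose to define two preprocessing pipelines, train_transforms and test_transforms. Each pipeline consists of three operations:

- transforms.Resize([224, 224]): resizes each input image to a uniform size, setting both the height and the width to 224 pixels.

- transforms.ToTensor(): converts a PIL Image or numpy.ndarray to a tensor and scales the pixel values to [0, 1].

- transforms.Normalize(): standardizes each channel by subtracting mean and dividing by std, which helps the model converge. This shifts and rescales the values but does not turn them into a Gaussian distribution. The mean and std used here match the widely used ImageNet channel statistics.

Finally, the code loads the data with datasets.ImageFolder, passing the preprocessing pipeline as the transform argument. ImageFolder treats each subfolder name as a class and labels every image by the folder it sits in. The resulting ImageFolder object has a classes attribute and a class_to_idx attribute, which hold the class names and their corresponding indices.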
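To make the ToTensor and Normalize arithmetic concrete, here is a minimal plain-Python sketch for a single RGB pixel. The helper names to_unit_range and normalize are hypothetical and only mimic the per-channel math; they are not torchvision functions.

```python
# Channel statistics used in the transforms in this post.
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]

def to_unit_range(rgb):
    """Mimic the scaling step of ToTensor: map 0..255 integers to 0.0..1.0."""
    return [v / 255.0 for v in rgb]

def normalize(rgb01, mean=MEAN, std=STD):
    """Mimic Normalize: subtract the channel mean, divide by the channel std."""
    return [(v - m) / s for v, m, s in zip(rgb01, mean, std)]

white = normalize(to_unit_range([255, 255, 255]))
black = normalize(to_unit_range([0, 0, 0]))

print([round(v, 4) for v in white])
print([round(v, 4) for v in black])
```

A white pixel ends up above 2 in every channel and a black pixel below -1.8, so the normalized values are no longer confined to [0, 1]. The operation only shifts and rescales each channel.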

train_dataset

Dataset ImageFolder
    Number of datapoints: 502
    Root location: /content/drive/Othercomputers/我的笔记本电脑/深度学习/data/Day14/train/
    StandardTransform
Transform: Compose(
               Resize(size=[224, 224], interpolation=bilinear, max_size=None, antialias=None)
               ToTensor()
               Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
           )

test_dataset

Dataset ImageFolder
    Number of datapoints: 76
    Root location: /content/drive/Othercomputers/我的笔记本电脑/深度学习/data/Day14/test/
    StandardTransform
Transform: Compose(
               Resize(size=[224, 224], interpolation=bilinear, max_size=None, antialias=None)
               ToTensor()
               Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
           )

train_dataset.class_to_idx

{'adidas': 0, 'nike': 1}

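train_dataset.class_to_idx returns a dictionary whose keys are class names and whose values are class indices. This dataset has two classes: adidas maps to 0 and nike maps to 1.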
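ImageFolder builds this mapping by sorting the class folder names and numbering them in order. The sketch below reproduces that rule in plain Python; build_class_to_idx is a hypothetical helper written for illustration, not a torchvision function.

```python
def build_class_to_idx(folder_names):
    """Number class folders in sorted order, the way ImageFolder does."""
    classes = sorted(folder_names)
    return {name: idx for idx, name in enumerate(classes)}

class_to_idx = build_class_to_idx(["nike", "adidas"])

# Invert the mapping to turn a predicted index back into a class name.
idx_to_class = {idx: name for name, idx in class_to_idx.items()}

print(class_to_idx)
print(idx_to_class[1])
```

The inverted dictionary is handy at prediction time, when the model returns an index and a readable class name is wanted.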

batch_size = 32

train_dl = torch.utils.data.DataLoader(train_dataset,
                                       batch_size=batch_size,
                                       shuffle=True,
                                       num_workers=1)
test_dl = torch.utils.data.DataLoader(test_dataset,
                                      batch_size=batch_size,
                                      shuffle=True,
                                      num_workers=1)

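This code uses PyTorch's DataLoader class to split the dataset into mini-batches. A DataLoader is an iterable that offers a convenient way to loop over the dataset. The batch_size argument sets the size of each mini-batch; shuffle=True reshuffles the data at the start of every epoch so that the batches differ from epoch to epoch; num_workers sets the number of subprocesses used to load data.

Here, train_dl and test_dl are the DataLoader objects for the training set and the test set. Iterating over either one yields all of its mini-batches.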
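As a rough check on what these loaders produce, the sketch below computes the batch sizes for one pass over each split, using the dataset sizes reported above (502 training images, 76 test images). It assumes the DataLoader default drop_last=False; batch_sizes is a hypothetical helper, not a PyTorch function.

```python
def batch_sizes(num_samples, batch_size, drop_last=False):
    """Return the size of every batch produced in one pass over the data."""
    full, remainder = divmod(num_samples, batch_size)
    sizes = [batch_size] * full
    if remainder and not drop_last:
        sizes.append(remainder)  # the final, smaller batch
    return sizes

train_batches = batch_sizes(502, 32)
test_batches = batch_sizes(76, 32)

print(len(train_batches), train_batches[-1])
print(len(test_batches), test_batches[-1])
```

Under that assumption the training loader yields 16 batches per epoch with a final batch of 22 images, and the test loader yields 3 batches with a final batch of 12. Code that averages per-batch metrics should take the smaller last batch into account.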

for X, y in test_dl:
    print(X.shape, y.shape)
    break

torch.Size([32, 3, 224, 224]) torch.Size([32])

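This code checks the shape of one mini-batch from test_dl. It starts iterating over test_dl and prints the shapes of X and y for the first mini-batch only. X is a tensor holding the batch of images, and y is a tensor holding the batch of labels.

The break statement exits the loop. Since only the first mini-batch is of interest, breaking after it avoids iterating over the whole test set.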

III. Build the CNN

import torch.nn.functional as F

class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 12, kernel_size=5, padding=0),   # 12*220*220
            nn.BatchNorm2d(12),
            nn.ReLU())
        self.conv2 = nn.Sequential(
            nn.Conv2d(12, 12, kernel_size=5, padding=0),  # 12*216*216
            nn.BatchNorm2d(12),
            nn.ReLU())
        self.pool3 = nn.Sequential(
            nn.MaxPool2d(2))                              # 12*108*108
        self.conv4 = nn.Sequential(
            nn.Conv2d(12, 24, kernel_size=5, padding=0),  # 24*104*104
            nn.BatchNorm2d(24),
            nn.ReLU())
        self.conv5 = nn.Sequential(
            nn.Conv2d(24, 24, kernel_size=5, padding=0),  # 24*100*100
            nn.BatchNorm2d(24),
            nn.ReLU())
        self.pool6 = nn.Sequential(
            nn.MaxPool2d(2))                              # 24*50*50
        self.dropout = nn.Sequential(
            nn.Dropout(0.2))
        # classeNames is ['train', 'test'] here, so its length (2) only happens to equal
        # the number of shoe classes; len(train_dataset.classes) would be more robust
        self.fc = nn.Sequential(
            nn.Linear(24*50*50, len(classeNames)))

    def forward(self, x):
        batch_size = x.size(0)
        x = self.conv1(x)  # conv-BN-ReLU
        x = self.conv2(x)  # conv-BN-ReLU
        x = self.pool3(x)  # pooling
        x = self.conv4(x)  # conv-BN-ReLU
        x = self.conv5(x)  # conv-BN-ReLU
        x = self.pool6(x)  # pooling
        x = self.dropout(x)
        x = x.view(batch_size, -1)  # flatten for the fully connected layer: (batch, 24, 50, 50) ==> (batch, -1); -1 is inferred as 24*50*50
        x = self.fc(x)
        return x

device = "cuda" if torch.cuda.is_available() else "cpu"
print("Using {} device".format(device))

model = Model().to(device)
model

Using cuda device

Model(
  (conv1): Sequential(
    (0): Conv2d(3, 12, kernel_size=(5, 5), stride=(1, 1))
    (1): BatchNorm2d(12, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
  )
  (conv2): Sequential(
    (0): Conv2d(12, 12, kernel_size=(5, 5), stride=(1, 1))
    (1): BatchNorm2d(12, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
  )
  (pool3): Sequential(
    (0): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (conv4): Sequential(
    (0): Conv2d(12, 24, kernel_size=(5, 5), stride=(1, 1))
    (1): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
  )
  (conv5): Sequential(
    (0): Conv2d(24, 24, kernel_size=(5, 5), stride=(1, 1))
    (1): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU()
  )
  (pool6): Sequential(
    (0): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (dropout): Sequential(
    (0): Dropout(p=0.2, inplace=False)
  )
  (fc): Sequential(
    (0): Linear(in_features=60000, out_features=2, bias=True)
  )
)

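This defines a class named Model that inherits from nn.Module. It is a convolutional neural network for image classification.

The network consists of convolutional, batch normalization, pooling, and fully connected layers. nn.Sequential() wraps the convolution, batch normalization, and pooling layers, which shortens the code and makes the layer hierarchy clearer.

The front of the network extracts image features with two blocks, each made of two convolutional layers followed by one max-pooling layer (four convolutional layers and two pooling layers in total). Each convolutional layer is followed by a batch normalization layer that normalizes the features and a ReLU activation that adds nonlinearity.

At the end of the network, a dropout layer helps prevent overfitting, and a fully connected layer classifies the features.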
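The fully connected layer's input size of 24*50*50 follows from how each layer shrinks the feature map. The sketch below traces the spatial size through the network with the standard output-size formula, floor((n + 2p - k) / s) + 1. conv_out is a hypothetical helper name, and the kernel and stride values are read off the model definition above.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length of a convolution or pooling window along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1

size = 224
trace = [size]
# (kernel, stride) for conv1, conv2, pool3, conv4, conv5, pool6
for kernel, stride in [(5, 1), (5, 1), (2, 2), (5, 1), (5, 1), (2, 2)]:
    size = conv_out(size, kernel, stride)
    trace.append(size)

flattened = 24 * size * size  # 24 channels come out of conv5

print(trace)
print(flattened)
```

The trace runs 224, 220, 216, 108, 104, 100, 50, which matches the shape comments in the model code, and the flattened size of 60000 matches the in_features value in the printed model. Changing the input resolution or any kernel size means recomputing this number for nn.Linear.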

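The input is an RGB image of shape (3, 224, 224), and the output is a vector whose length equals the number of classes. Each element is a raw, unnormalized score (logit) for the corresponding class rather than a probability, because the network has no softmax layer. The model is moved to the GPU with .to(device) when one is available.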
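To read the model's raw output scores as probabilities, a softmax can be applied afterwards. The sketch below does this in plain Python for one hypothetical pair of logits; the numbers are invented for illustration and are not output from this model.

```python
import math

def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    shift = max(logits)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for one image, ordered as [adidas, nike].
logits = [1.2, -0.3]
probs = softmax(logits)
predicted = probs.index(max(probs))

print([round(p, 4) for p in probs])
print(predicted)
```

Softmax preserves the ordering of the scores, so the predicted class can be taken from the logits directly. If the training loss is nn.CrossEntropyLoss, it expects these raw logits, since it applies log-softmax internally.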

for parameters in model.parameters():
    print(parameters)

Parameter containing:

tensor([[[[ 9.6060e-02, 9.2401e-02, 6.7425e-02, 1.2056e-02, -9.9758e-02],[-1.0824e-02, 9.7335e-02, 9.3475e-02, -2.5571e-02, -5.7923e-02],[ 4.9415e-02, 9.8333e-02, 5.7355e-02, 4.8626e-02, 1.1481e-01],[ 1.0505e-01, 1.1402e-01, 5.8047e-02, -6.0545e-02, 2.7217e-02],[ 1.0115e-02, -1.0047e-01, 1.0272e-01, -1.0180e-01, 5.2264e-02]],[[-4.5628e-03, 6.7565e-02, -5.0048e-02, 8.5315e-03, -2.5174e-02],[ 4.9135e-02, -2.7706e-02, -4.8262e-02, -9.0985e-02, 8.1255e-02],[-1.0129e-03, 1.8318e-02, -1.0743e-01, 1.1761e-02, -4.2666e-02],[-8.2110e-02, 2.0379e-02, 9.3840e-02, -7.1574e-02, 1.0615e-02],[-1.9736e-02, 7.6325e-03, -7.0950e-02, 1.9778e-02, 4.2545e-02]],[[ 5.8976e-02, 9.7360e-02, 1.8907e-02, -9.0375e-02, -6.2898e-02],[ 9.0713e-02, 3.2614e-03, 8.0968e-02, -2.7650e-02, 7.2571e-02],[ 7.1617e-02, -1.6072e-02, 1.1266e-01, -6.7463e-02, -6.4749e-02],[ 1.0922e-01, 9.7300e-02, -4.7397e-02, -3.5485e-02, 1.0837e-02],[-2.2401e-02, -1.5296e-02, 7.4977e-02, 7.9816e-02, -1.1275e-01]]],[[[-2.1675e-02, -7.1416e-02, 2.4015e-02, 1.1010e-01, -1.0515e-01],[-8.8525e-02, 3.6220e-02, -1.0883e-01, 2.2257e-02, 1.0374e-01],[ 9.4717e-02, 9.5264e-03, 7.8802e-02, -3.5534e-02, 9.8278e-02],[ 9.1698e-02, 9.0658e-02, 1.1031e-01, -1.7678e-02, 9.4840e-02],[ 9.7416e-02, -5.7902e-02, -8.4080e-03, 7.9692e-02, -6.8554e-02]],[[-1.0324e-01, -2.3537e-02, -8.2583e-02, 1.6221e-02, 8.7895e-02],[ 6.4176e-02, 5.3374e-02, 1.0656e-01, -9.4584e-02, -2.9927e-02],[-4.6313e-03, 4.2185e-02, -8.9109e-02, -7.9002e-02, -3.0450e-02],[-8.1337e-02, -5.6258e-02, -2.6750e-02, -6.4592e-02, 7.8501e-03],[ 1.9053e-02, -8.6203e-02, 5.3780e-02, -4.0041e-02, 1.0404e-01]],[[ 1.8114e-02, 3.8020e-02, 3.6531e-02, -9.7727e-02, -4.5570e-03],[ 6.5286e-03, 1.4791e-02, 3.4773e-02, 3.9594e-02, 7.2633e-02],[ 1.0241e-01, 2.2616e-02, 1.0698e-01, 9.0856e-02, -6.3831e-02],[-9.1372e-02, 2.4406e-03, -6.2849e-02, 7.0582e-02, -9.5347e-02],[-5.5474e-02, -4.8788e-02, -1.5687e-02, -9.8820e-02, 2.8222e-02]]],[[[-5.7229e-02, -5.1886e-02, 8.7926e-02, 1.5402e-02, 
1.0498e-01],[ 1.1613e-02, 5.1340e-02, 5.7629e-02, -5.7509e-03, 7.0367e-02],[ 1.1131e-01, -3.8583e-02, 7.3952e-02, 5.4477e-02, 1.6835e-02],[-6.3244e-02, -7.0780e-04, -3.7792e-02, 8.5897e-02, 8.5086e-02],[ 2.9140e-02, -5.9367e-02, 1.0532e-01, 8.4249e-02, -1.8344e-02]],[[-9.8224e-02, -7.3789e-02, 1.1015e-01, -4.1248e-02, -9.0569e-02],[-9.0312e-03, 2.8969e-02, -2.5401e-02, -2.0637e-02, -1.0546e-01],[-7.1113e-02, 7.4204e-02, -2.6185e-02, -1.0684e-01, -1.0170e-02],[ 1.7048e-02, -3.3188e-02, -4.4334e-02, 7.1460e-02, 4.9882e-02],[-8.2371e-02, 1.0903e-01, -7.8248e-02, -2.7929e-02, -4.8683e-02]],[[-1.4578e-02, -1.0031e-01, -1.0676e-01, 8.7522e-02, -9.5921e-02],[ 1.1057e-02, 4.4337e-02, 1.0647e-01, 3.4220e-02, 1.1171e-01],[-5.8056e-02, -9.9917e-02, -2.9454e-02, 4.8303e-02, -4.0440e-02],[ 4.6228e-02, -2.9272e-02, -4.8776e-03, 9.3157e-02, -8.3275e-02],[ 3.5485e-02, 7.9741e-02, 4.6202e-02, -3.2463e-02, -9.0073e-02]]],[[[ 9.3934e-02, -1.0687e-01, 7.0664e-02, 1.1293e-01, -8.9853e-02],[ 9.1418e-02, 6.9751e-02, 1.0671e-01, 1.0613e-01, 5.6401e-02],[ 3.1894e-04, 8.7670e-02, -8.6517e-02, 7.1982e-02, -9.4428e-02],[ 9.1296e-02, 2.8231e-02, -2.9064e-02, -1.0517e-02, -8.1529e-02],[ 8.2028e-02, 3.1867e-02, 9.2387e-02, -7.9620e-02, -9.6493e-02]],[[-7.2534e-02, -6.2164e-02, -3.4466e-02, 5.1867e-02, -6.1500e-02],[-2.8505e-02, -5.0705e-02, 4.6551e-02, 9.2039e-02, 3.6352e-03],[-2.4083e-03, -7.4435e-02, -6.0531e-02, 8.3948e-02, -6.2877e-02],[ 8.3239e-03, -6.2506e-03, -5.1556e-02, 2.0557e-02, 2.1349e-02],[ 9.3386e-03, -6.4527e-02, -7.7853e-02, -5.5826e-02, -5.5201e-02]],[[-3.9760e-02, 9.6665e-02, 2.9193e-02, 5.6278e-02, -1.1203e-01],[-6.8661e-02, 4.7977e-02, 6.2226e-02, 6.4397e-02, 4.9699e-02],[ 6.5160e-02, 6.6359e-02, -4.8101e-03, 1.1534e-01, -1.1319e-01],[-3.5586e-02, -1.0979e-01, -5.9885e-02, -4.1243e-02, -2.8018e-02],[ 6.8514e-02, -1.4599e-03, 8.3349e-02, -9.6240e-02, 8.1126e-02]]],[[[-8.7761e-03, -4.2422e-02, -7.0019e-02, 5.5346e-02, 5.8148e-02],[-2.9922e-02, 8.0398e-02, -5.9926e-02, 
6.5433e-02, 6.7134e-02],[ 1.1085e-01, 9.8875e-02, -9.2395e-02, 1.7918e-02, -2.6618e-02],[ 8.6992e-02, 9.0826e-02, 4.3505e-02, 1.0802e-01, 9.9428e-02],[ 2.9911e-02, 4.2577e-02, 3.4400e-02, -8.1177e-02, -6.9407e-03]],[[ 1.3570e-02, -3.2604e-02, 9.9270e-02, -9.3832e-02, 4.0635e-02],[-3.8474e-02, 1.3652e-02, 7.6710e-02, 1.0913e-01, -3.0779e-05],[ 3.3729e-02, 8.0929e-02, -3.4831e-02, -8.9535e-02, 2.9060e-02],[-7.2350e-02, -1.7538e-02, 6.4653e-02, -6.3036e-02, 2.7376e-02],[-8.6012e-02, -7.2183e-02, -2.9265e-02, -2.3666e-03, 3.1678e-02]],[[-5.5753e-02, -6.5099e-02, 5.0300e-02, -8.8747e-02, 7.1041e-02],[ 5.2973e-02, 3.1178e-02, 9.8396e-02, -1.6825e-02, -1.0855e-02],[ 1.7781e-02, 9.6180e-03, 2.2585e-03, -9.3196e-02, 6.6472e-02],[-1.1627e-02, -8.5261e-05, -6.9102e-04, -7.4616e-02, 4.9522e-02],[-1.1995e-03, 8.8120e-02, 3.7533e-02, -8.3185e-03, -3.7896e-02]]],[[[-9.2244e-02, -3.0946e-03, 5.4144e-02, -2.6193e-02, 4.8180e-03],[ 3.0559e-02, 4.2104e-02, 7.5642e-03, 2.3811e-02, -8.6459e-02],[ 3.8885e-02, 1.9563e-02, 1.1027e-01, -5.7230e-02, -1.5344e-02],[-3.3341e-02, 6.0089e-02, 8.3564e-02, 4.5455e-02, 8.2937e-02],[ 7.6337e-04, -4.2774e-02, 1.0761e-01, -1.0706e-01, 2.5963e-02]],[[-8.9589e-02, -7.7814e-02, -3.9726e-02, -9.1750e-02, 2.3158e-02],[-6.7212e-02, -5.7985e-02, -6.0730e-03, 2.8567e-02, 1.1057e-01],[ 7.0586e-02, 8.5273e-02, -2.2557e-02, -9.5090e-02, 8.9353e-02],[ 3.8719e-02, -6.0806e-03, 5.5194e-02, -2.3713e-02, -2.2048e-02],[ 1.7679e-02, 1.1429e-01, 3.5072e-02, -6.2151e-02, -1.0629e-01]],[[ 5.6943e-02, 9.8247e-03, -9.6066e-02, 7.1436e-03, 1.0088e-01],[ 1.5573e-02, 8.2000e-02, -1.0410e-02, 6.2976e-02, 2.1665e-02],[-5.0174e-03, -1.3698e-02, 2.7878e-02, 1.5698e-02, -1.0285e-01],[ 2.7775e-02, -2.4474e-02, -1.0204e-01, 4.5711e-03, -7.9750e-02],[ 2.3286e-02, 1.0110e-01, -6.4626e-03, 5.3503e-02, -6.2176e-02]]],[[[-7.5216e-02, -9.5773e-03, -1.1272e-01, -1.0989e-01, 2.6348e-02],[-1.1428e-02, 9.2886e-02, 3.6506e-02, -1.0349e-02, 3.0780e-02],[ 1.4283e-02, -6.7632e-02, -4.1362e-02, 
2.1772e-02, -8.6690e-02],[-7.7260e-02, 3.5551e-02, 9.1182e-02, 6.3060e-03, -1.0574e-01],[-2.9827e-02, -9.8185e-02, 1.0389e-01, -8.4487e-02, -7.6125e-02]],[[ 7.7379e-02, 1.0301e-01, 5.9035e-02, 2.4980e-02, -1.1178e-01],[-5.5266e-02, 1.2489e-02, -5.2666e-02, -1.3631e-02, 3.2286e-02],[-4.8603e-02, 1.4178e-02, 1.1213e-01, 7.2701e-02, 1.1099e-02],[ 6.4582e-02, 3.8573e-02, 1.1261e-01, -6.9392e-03, -7.4693e-02],[ 1.3693e-02, -1.1937e-02, 9.9110e-02, 8.8679e-03, -6.7556e-02]],[[-5.9277e-02, -1.0004e-01, 4.1195e-02, -7.8211e-03, 1.0107e-01],[ 1.6598e-02, 4.2764e-02, -2.2293e-02, 7.9749e-02, 3.7418e-02],[ 2.8809e-02, -5.6906e-02, -1.0899e-01, -7.8994e-02, -5.4737e-03],[-1.8195e-03, -2.6369e-02, -3.8481e-02, 6.1187e-03, 8.0916e-02],[-2.5823e-02, -5.3791e-02, -3.4665e-02, 5.7799e-02, 5.9005e-03]]],[[[-5.4567e-02, 1.0023e-01, -4.9600e-02, 1.5484e-02, -4.0421e-02],[-2.8957e-02, -2.2951e-02, 6.4814e-02, 9.3404e-02, -4.3484e-02],[-7.9605e-02, 1.1459e-01, 8.9916e-02, 1.1108e-01, -2.4005e-02],[ 6.9501e-02, -9.6456e-02, -4.0463e-02, -1.0111e-02, 9.7287e-02],[ 1.4227e-03, -3.1944e-02, -1.1036e-01, -5.2793e-02, -8.1813e-02]],[[-8.5544e-02, 8.5575e-02, 7.1630e-02, -6.2161e-02, 9.7048e-02],[ 6.1794e-02, 3.2620e-02, -9.5251e-02, -1.0472e-01, 1.1528e-02],[-9.4783e-02, 5.1553e-02, -8.4402e-02, -4.9575e-02, 1.3916e-02],[ 9.3911e-02, -7.3401e-02, -1.0693e-01, 5.9657e-02, 1.0102e-02],[-1.1245e-01, 1.3848e-02, 1.0733e-01, -5.4834e-02, 1.3857e-02]],[[-7.8346e-02, 1.1503e-01, 5.0171e-02, 1.0722e-01, -3.8009e-02],[ 3.5175e-02, -3.1137e-02, 1.0037e-01, -4.7540e-02, 1.0512e-01],[ 9.0498e-02, 9.7212e-02, -3.0792e-02, -6.7146e-02, -8.7555e-02],[ 1.1393e-01, 1.1241e-01, -7.7737e-02, -4.3894e-02, 7.5398e-02],[-9.8697e-02, 1.1200e-02, -8.2761e-02, -9.8116e-02, 4.0538e-03]]],[[[-8.9392e-02, -7.4820e-02, 1.9807e-02, -4.1498e-02, 3.3555e-02],[ 4.8399e-02, -7.9680e-02, -7.4584e-02, -4.7271e-02, 5.3030e-02],[-6.8662e-02, 5.6404e-02, -7.6703e-02, -7.1734e-02, 1.1141e-02],[-3.2438e-02, -8.4010e-02, -8.5007e-02, 
-4.4276e-02, -7.8046e-02],[-7.6149e-03, 1.1397e-01, 7.0345e-02, 8.9544e-02, -1.1147e-01]],[[-2.4516e-02, 3.6588e-02, 1.5961e-02, 6.4551e-02, 9.0823e-02],[-3.8173e-02, -6.5932e-02, 4.6742e-02, -5.9848e-02, -9.1452e-02],[ 8.6022e-02, 9.8313e-02, 1.0603e-01, -4.8421e-02, 9.5133e-02],[ 1.0049e-01, 9.7302e-02, 8.3417e-02, 7.3290e-03, -4.0101e-02],[ 5.6456e-02, 3.1406e-02, -3.0831e-02, 7.7410e-02, 7.5253e-02]],[[ 8.8699e-02, -1.9792e-02, 8.7227e-02, -3.0146e-02, -3.1689e-02],[ 8.6143e-04, 5.6476e-02, 1.5957e-02, 9.9424e-02, 3.0322e-02],[ 6.8769e-02, 1.0833e-01, 2.5446e-02, 3.0413e-02, -4.9491e-02],[ 4.6998e-02, -2.7495e-03, -1.3401e-02, -1.0524e-01, 3.9163e-02],[ 5.3617e-03, -7.3668e-02, -1.0164e-01, -5.6592e-02, -6.4099e-02]]],[[[ 1.0758e-01, 3.0167e-02, -7.1444e-03, 1.0705e-02, 2.7975e-02],[-2.7629e-02, 9.8985e-02, -6.3463e-02, -9.9678e-02, -1.1469e-01],[-3.3050e-02, -5.1732e-02, -6.9894e-02, 2.0082e-02, -8.4052e-02],[-6.3114e-02, -1.1535e-01, -7.7200e-03, 8.8712e-02, -9.1910e-02],[ 9.9866e-02, 2.4586e-02, -1.9217e-02, 8.2818e-02, -4.7184e-02]],[[ 1.0822e-01, 9.6348e-02, -6.9164e-02, -5.5828e-02, -2.7494e-02],[-6.4830e-03, 5.1304e-02, -6.8741e-02, 9.3416e-02, 6.4191e-02],[-1.0118e-01, 5.7488e-02, -8.6759e-02, -1.0145e-02, -5.5605e-02],[-6.8587e-02, 8.0847e-02, -6.3793e-02, 1.3385e-02, -7.2546e-02],[ 3.5824e-02, 9.1437e-02, -1.1000e-01, 4.6491e-02, -7.1487e-02]],[[-5.3918e-02, -2.3215e-02, -3.5318e-02, -7.6040e-02, 7.4726e-02],[-1.4138e-02, 8.9504e-02, -5.3532e-02, -4.1313e-02, 3.3654e-02],[-7.3972e-02, 6.1106e-02, -1.0673e-01, -1.0847e-02, 6.0724e-02],[ 3.8895e-02, 1.2127e-02, 8.7929e-02, 3.8534e-02, 2.5439e-02],[-6.2754e-02, 3.6111e-02, 1.3968e-03, 9.9498e-02, 1.0361e-01]]],[[[ 9.0320e-02, -2.3697e-03, -4.5131e-02, -6.0436e-02, 9.1889e-02],[ 7.2517e-02, 5.5456e-02, -3.7808e-02, 2.6349e-02, 3.4338e-02],[ 1.0560e-01, 7.0728e-02, 7.9940e-02, 3.9555e-03, -5.1746e-02],[ 8.3772e-02, -5.6538e-02, -9.2199e-02, 8.6792e-02, 1.9055e-02],[-7.1809e-02, 2.9500e-02, 2.4513e-02, 
-9.6991e-02, 6.0078e-03]],[[ 6.0276e-02, 7.5685e-02, 1.4368e-02, 9.5592e-02, 8.3292e-02],[ 6.6281e-02, 5.7695e-02, 3.9793e-02, -9.9542e-02, -1.9526e-02],[-7.6453e-02, 1.1038e-01, 3.7571e-02, 6.1729e-02, 1.1157e-01],[ 4.5504e-02, -6.4318e-02, 1.2533e-02, -5.1845e-02, 8.5061e-02],[-8.9845e-02, -1.9525e-02, -3.7496e-02, 6.5087e-02, -7.8588e-02]],[[-4.4191e-02, 2.8607e-02, 8.7059e-02, -7.9101e-02, 2.3150e-02],[ 1.0367e-01, -9.4750e-02, -1.1040e-01, 1.9056e-02, 7.3760e-04],[-9.6915e-02, -9.8338e-02, -2.4449e-03, 9.9325e-02, 6.8151e-02],[-7.7237e-02, 5.7915e-02, 6.4576e-02, -1.8054e-03, 9.6132e-02],[ 2.3439e-02, 7.7535e-02, -2.2759e-02, -8.2194e-02, 5.8361e-02]]],[[[-4.5006e-02, -3.2527e-02, -1.0566e-01, -2.4934e-02, 7.0937e-02],[-1.8897e-02, 1.1395e-01, 9.9876e-02, 7.9380e-02, 9.2306e-02],[-1.1297e-02, -3.9163e-02, 5.4249e-02, -3.5688e-02, 7.9004e-02],[-6.7469e-02, -9.1536e-02, 2.4838e-02, -6.3080e-02, 5.1484e-02],[-3.3917e-02, 2.4580e-02, 9.0144e-02, -9.5288e-02, 1.2053e-02]],[[ 1.4092e-02, 1.5081e-02, 7.0240e-02, -8.8546e-03, -2.0334e-03],[ 9.2695e-02, 8.9636e-02, -7.3226e-02, -6.9203e-02, 3.2286e-02],[ 6.7067e-02, -6.2239e-03, -5.3309e-02, 5.0094e-02, -3.8358e-02],[ 7.2186e-02, 7.8682e-02, 2.3221e-02, -1.3907e-02, -8.1693e-02],[ 1.5625e-04, -9.5823e-02, 6.0817e-02, -8.1015e-02, 1.0263e-01]],[[-8.2497e-02, -5.1205e-02, -1.0026e-01, -8.7982e-02, -8.1581e-03],[ 7.0671e-02, -1.0617e-01, -6.1971e-02, -6.0562e-02, 3.9689e-02],[-1.9072e-02, -7.5798e-02, 3.3781e-02, -4.3096e-03, -5.5296e-02],[ 7.4562e-03, 6.2763e-03, -9.7845e-02, 7.0208e-02, -3.7844e-03],[ 3.8143e-02, 3.1558e-02, 3.5509e-02, -6.9402e-02, 5.3305e-02]]]],device='cuda:0', requires_grad=True)

Parameter containing:

tensor([-0.0289, 0.0871, -0.0278, -0.0651, 0.0272, -0.1133, 0.0690, 0.0005,0.0738, 0.0638, 0.0198, -0.0669], device='cuda:0',requires_grad=True)

Parameter containing:

tensor([1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1.], device='cuda:0',requires_grad=True)

Parameter containing:

tensor([0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.], device='cuda:0',requires_grad=True)

Parameter containing:

tensor([[[[ 1.6837e-02, 3.9049e-02, 3.8538e-03, 5.0147e-02, -1.7157e-02],[-4.8375e-03, 1.4341e-02, -3.9234e-02, 5.6335e-03, -2.2569e-02],[ 3.7758e-02, 3.0718e-02, 3.7152e-02, 3.1611e-03, -2.6436e-02],[ 4.4972e-03, 7.2603e-03, -3.5039e-02, -6.7876e-03, 1.2252e-02],[-4.8348e-02, 3.3239e-02, -4.6248e-02, 5.2280e-02, -4.9335e-02]],[[ 3.1791e-02, -2.0130e-02, 5.7484e-02, 5.2510e-02, 4.7151e-02],[-2.5711e-02, -5.2972e-02, -6.9194e-04, 6.5906e-03, -1.1975e-02],[ 3.1075e-02, -4.4184e-02, -3.0248e-02, -1.2002e-02, -8.0988e-03],[ 1.5171e-02, -4.3509e-02, -4.9740e-02, 3.7396e-02, 5.8021e-04],[-2.9594e-02, 5.7574e-02, 4.4145e-02, -4.2496e-03, 5.5825e-02]],[[-1.5965e-02, 1.9293e-02, -2.5857e-03, 2.7914e-02, 3.3629e-02],[-1.9290e-02, -4.8874e-02, -1.0183e-02, 4.2846e-02, -4.9118e-02],[ 3.7214e-03, -2.6626e-02, -4.8601e-02, -4.0442e-02, 1.0293e-02],[-1.6670e-02, 2.0373e-02, 5.4499e-02, -3.3739e-02, -4.3264e-04],[ 2.6394e-02, -2.9657e-02, -1.9047e-02, 3.5709e-02, -2.4829e-02]],...,[[-4.4016e-02, 4.1989e-02, 3.7908e-02, -5.1644e-02, 2.5056e-02],[-4.6698e-02, -1.5862e-02, -1.5832e-02, -2.3884e-02, 5.8090e-03],[ 1.3806e-02, 1.8406e-02, 4.9794e-02, -1.2504e-02, -1.5612e-02],[-4.7121e-02, -1.9058e-02, -3.3351e-02, 4.7181e-02, -2.5423e-02],[ 5.3276e-02, -2.3578e-02, -3.4645e-02, -5.7240e-02, -5.2432e-02]],[[ 2.7797e-02, 5.7381e-02, -2.9419e-02, 3.2252e-02, 1.8201e-02],[-2.9319e-03, -5.6008e-02, -3.7410e-02, 5.4881e-02, 1.0412e-02],[ 3.7508e-03, -1.5327e-02, 4.4945e-02, -2.4078e-02, 1.5766e-02],[ 2.2802e-02, -3.0392e-02, 2.8732e-02, 4.8300e-02, -2.9580e-02],[-1.6143e-02, -3.8459e-02, 4.2293e-02, 2.5387e-02, -3.4190e-02]],[[-4.8952e-02, -2.4501e-03, 3.4236e-02, -4.0241e-02, 5.6469e-02],[-1.6898e-02, 4.5197e-02, -1.9063e-02, 1.4187e-03, 5.7505e-02],[-3.5753e-02, -2.0879e-02, -4.4697e-02, -2.8234e-02, -2.6301e-02],[ 4.9708e-02, -1.8859e-02, -2.0904e-02, -3.3134e-02, 5.4800e-02],[-1.5992e-02, -4.7318e-02, 3.2858e-02, -2.9649e-02, 1.9607e-02]]],[[[-2.0137e-03, -5.4530e-02, 2.6117e-02, 
-5.3168e-02, -2.7477e-02],[ 5.4355e-02, 4.3513e-02, 3.8112e-02, -3.6133e-02, -2.9217e-02],[-4.3318e-04, 3.2799e-02, -2.5068e-02, 4.4374e-02, -8.8430e-03],[-5.2209e-02, 9.8843e-03, 1.6805e-02, -5.3476e-02, -3.5717e-02],[-4.9799e-02, -5.2044e-02, 4.8324e-02, 1.1956e-02, -3.9116e-02]],[[-1.5295e-02, 3.7768e-03, -3.6491e-02, -1.0080e-02, 2.1223e-02],[ 4.7294e-02, -2.8116e-02, -8.7573e-03, -3.6418e-02, -5.6063e-02],[ 2.3503e-02, -5.2238e-02, 4.3977e-02, -5.5652e-02, -5.6270e-02],[-2.0098e-02, 2.5324e-02, -1.4206e-02, -4.5407e-02, -2.8871e-02],[ 3.6818e-02, 3.1809e-02, 2.0549e-02, 1.3602e-03, -5.2057e-02]],[[-3.3096e-02, 3.2750e-02, 3.8008e-02, -3.1349e-02, -1.9195e-02],[ 3.1343e-02, 2.8444e-02, -3.4247e-02, 3.6718e-02, -2.5978e-02],[-3.7977e-02, 4.5427e-02, 4.1251e-02, 3.0914e-02, -1.2328e-02],[ 2.7434e-02, 5.4683e-02, 1.1949e-02, 3.6398e-02, 3.9781e-03],[-3.0962e-02, 3.7158e-02, -4.1151e-02, 1.7380e-02, -6.5435e-03]],...,[[-3.9804e-03, 6.8954e-03, 4.3355e-02, 5.3333e-02, -1.4471e-02],[ 2.7264e-02, -3.4434e-02, -1.2110e-02, -4.3257e-02, 5.1210e-02],[-3.6936e-02, -4.5285e-03, 2.6792e-03, 4.6887e-02, -4.7335e-03],[-3.0631e-02, 6.2793e-03, -2.4597e-02, 3.7827e-02, 1.6709e-02],[-2.2233e-03, 2.9004e-02, 2.9358e-03, 9.7834e-04, 5.6435e-02]],[[ 1.6769e-02, 4.3425e-03, 4.3504e-02, -4.0514e-02, -1.9398e-02],[-4.6759e-02, -2.7899e-02, -2.5840e-02, 4.0167e-02, -2.8096e-02],[-1.1065e-03, -6.5070e-03, -3.8825e-02, -8.9152e-03, -1.4197e-02],[ 1.0993e-02, -2.7987e-02, -9.0260e-03, -4.7638e-02, 5.9191e-04],[ 2.9704e-02, -3.6466e-02, -2.9013e-02, 4.3721e-03, -3.1700e-03]],[[ 4.2815e-02, 1.0447e-02, -1.4398e-02, -1.5149e-02, -8.6284e-03],[ 4.8887e-02, 3.9469e-02, 4.9770e-02, 4.8319e-02, -1.2185e-02],[ 1.3188e-02, 4.5354e-02, 1.2099e-02, -4.4321e-02, 5.4454e-02],[ 5.6542e-02, 3.1920e-02, -1.6821e-02, -7.6910e-03, 1.5424e-02],[ 4.2625e-02, -4.2033e-02, -1.1215e-02, -4.9485e-02, 5.4394e-02]]],[[[-3.6911e-02, -2.7153e-02, 5.6090e-02, 2.2732e-02, -4.6239e-02],[-2.6042e-02, -2.8152e-02, 
2.7300e-02, 4.4891e-02, 1.1954e-02],[-1.7286e-02, 3.4384e-02, -1.9196e-03, -8.9332e-03, 5.2784e-02],[-5.8096e-04, -3.7728e-02, -2.0794e-02, -5.3936e-03, -4.0608e-02],[ 3.0157e-02, -4.3950e-02, -4.5071e-02, -1.0975e-02, -5.3778e-02]],[[-4.5037e-02, 5.0279e-02, 1.1896e-02, -7.4762e-04, 1.7788e-02],[ 2.7935e-02, 4.1864e-02, -5.2875e-03, 5.6237e-02, -3.6180e-02],[-1.7563e-02, 5.3053e-02, -3.4898e-02, -2.4415e-02, -3.2482e-02],[-3.6327e-02, 2.2283e-02, -1.3111e-02, -1.7090e-02, 1.1905e-02],[ 4.6541e-02, 5.1194e-02, 3.2681e-02, 1.9357e-02, 5.1249e-02]],[[-3.4045e-02, 2.7756e-02, -2.1690e-02, 5.4612e-02, -5.0788e-03],[ 9.7555e-03, 3.3462e-02, 2.3060e-02, 4.8267e-02, 5.4044e-02],[-6.3785e-03, -1.9752e-02, 4.7190e-02, -7.6234e-03, -2.4653e-02],[ 4.2609e-02, 7.6555e-03, -2.8521e-02, 4.1444e-02, -5.5703e-02],[-1.2559e-02, 5.3782e-02, 3.7557e-02, -3.9117e-02, -5.5476e-02]],...,[[-4.0647e-02, -2.3165e-02, 1.7792e-03, -2.8859e-02, -5.3714e-02],[ 3.0270e-02, 3.4608e-02, 4.1038e-02, 1.1513e-02, -8.0424e-03],[-3.7297e-02, 4.8651e-02, 4.7339e-02, 5.2963e-02, -4.8877e-02],[-5.2380e-02, -5.4120e-02, 1.3045e-02, -5.3007e-02, 5.9851e-03],[ 5.3149e-02, 4.6873e-02, 1.7081e-02, 4.3599e-02, -2.3412e-02]],[[-2.6898e-02, 4.4158e-02, 3.8821e-02, 8.0971e-03, -4.9367e-02],[-1.4927e-02, 2.7672e-02, -2.8663e-02, 2.6029e-03, 6.2249e-03],[-1.7705e-02, 1.9328e-02, 7.2222e-03, 1.7481e-03, -3.6296e-02],[ 1.4783e-02, 1.8448e-02, 2.7759e-02, -1.2760e-02, 2.5677e-02],[-2.7009e-02, 1.7319e-02, -3.0475e-02, -1.2150e-02, 3.8746e-02]],[[ 4.0195e-02, 2.9100e-02, -2.4754e-02, 3.8138e-02, 1.0771e-05],[ 5.4917e-02, 1.3178e-02, 2.1164e-05, 6.0068e-03, -2.7630e-02],[ 5.6187e-02, -5.7141e-02, 5.2759e-02, -4.1367e-02, 4.7616e-02],[ 2.8173e-02, -6.5878e-03, -5.3829e-02, -2.0749e-02, -2.8361e-02],[ 6.9831e-03, -1.9126e-04, -3.9858e-02, -5.4732e-02, -4.2842e-02]]],...,[[[-1.9013e-02, -5.3600e-03, 7.7614e-03, -2.0984e-03, 5.2262e-02],[-3.7892e-02, -3.6788e-02, -2.7654e-02, 3.6484e-02, 2.4321e-02],[ 2.7991e-02, 
-1.5580e-02, 6.6545e-03, 1.7822e-02, -1.2255e-02],[-3.7130e-02, 4.2035e-02, -3.5030e-02, -2.2396e-02, 2.7825e-02],[ 2.3606e-02, 4.8080e-02, -2.7872e-02, 4.6367e-02, -5.1613e-02]],[[ 1.9042e-02, 2.0172e-02, 3.1939e-02, 1.3274e-02, -5.4647e-02],[-5.1662e-02, -1.1911e-02, -1.9303e-02, -3.3922e-02, -1.4973e-02],[ 4.3827e-02, 9.5744e-03, -3.6370e-02, -1.4381e-02, 5.5386e-02],[-3.7355e-02, 1.6575e-02, 4.4935e-02, -2.2097e-02, -5.2480e-02],[-4.7451e-04, -2.2699e-02, -4.0683e-02, 3.1616e-02, 3.9061e-02]],[[ 5.2837e-02, -2.3200e-02, -3.7717e-02, 5.6925e-02, 3.4622e-02],[-4.4049e-02, 3.5307e-03, 6.7730e-03, -5.3809e-02, -7.8179e-03],[-6.9895e-03, -2.3828e-02, 4.3069e-02, 2.7141e-03, -4.2468e-02],[ 3.1411e-02, 9.6051e-03, -3.6271e-02, 3.0945e-02, 1.6273e-02],[-1.8224e-02, 1.8784e-03, -5.1884e-02, 4.4344e-02, -5.5485e-02]],...,[[-4.6217e-02, 3.7508e-02, 1.0990e-02, -4.0652e-02, -5.2942e-02],[-5.5321e-02, -4.8382e-02, 3.9043e-03, -4.5398e-02, 1.7088e-03],[-1.5275e-02, -5.4231e-02, 1.6449e-02, 8.0291e-03, 3.2663e-02],[ 5.0690e-02, 5.6142e-02, -3.3160e-02, 1.1630e-02, -4.6448e-02],[-3.4687e-02, -3.2918e-02, 4.3938e-03, -2.7993e-02, 1.0658e-02]],[[ 5.2382e-04, -2.8193e-02, 5.0076e-02, -4.6969e-02, -2.1574e-02],[ 4.0314e-03, 7.9599e-03, -3.9672e-02, 5.1095e-02, 5.5867e-03],[-3.1506e-02, 4.8461e-02, 5.7732e-02, -4.6152e-02, -1.1203e-02],[-3.4199e-03, 2.6994e-04, 4.7722e-02, -1.2197e-02, 1.9124e-02],[ 3.7981e-02, 1.7590e-02, 1.5927e-02, 1.7073e-02, 3.0175e-02]],[[ 3.4849e-02, 1.0712e-02, -2.3473e-02, 4.9545e-02, -4.9472e-02],[ 2.9144e-02, 2.4576e-02, -2.8464e-03, 1.5436e-02, 8.1556e-03],[-2.1450e-02, -4.3744e-02, 5.2049e-02, -2.1676e-02, -1.1865e-02],[-5.4224e-02, -4.2250e-02, -3.6545e-02, -4.3368e-02, 4.9460e-02],[ 1.2432e-02, -5.6373e-02, -2.0039e-02, 9.9015e-03, 4.2299e-02]]],[[[-3.5325e-02, 1.0264e-02, -4.1823e-02, 3.4763e-02, -8.3013e-03],[-2.8009e-02, -2.0148e-02, -2.3959e-02, -3.0306e-02, 1.2322e-02],[-4.9296e-02, -1.1369e-02, 5.4807e-02, 8.9153e-03, 6.7126e-03],[-4.3327e-03, 
3.5289e-02, -1.6447e-02, 2.3374e-03, -2.6909e-02],[ 1.1520e-02, 1.4999e-02, -1.2072e-02, 4.0865e-02, -3.3325e-02]],[[ 4.9722e-02, 5.5850e-03, -5.1488e-02, 1.0781e-02, -2.8687e-02],[-5.7196e-02, -1.1550e-02, -3.9236e-02, 3.5052e-02, 5.2142e-02],[-2.7659e-02, -2.0552e-02, -5.4830e-02, -4.5723e-02, -4.7586e-03],[ 4.6742e-02, -1.0508e-02, -3.3030e-02, 3.6813e-02, 1.5391e-02],[-1.3507e-02, 2.8583e-02, -4.2759e-02, -3.2951e-02, -5.6734e-02]],[[ 2.5600e-02, 9.7809e-03, -4.5101e-02, -1.1172e-02, -8.7737e-03],[ 2.3152e-02, -4.8875e-02, -4.5229e-02, -5.1900e-02, -2.9288e-02],[ 3.1043e-02, -5.3002e-02, -2.6439e-02, -3.3528e-02, 5.3968e-02],[-1.0642e-02, -4.7040e-02, -4.4339e-02, -3.9437e-02, -4.4519e-02],[-3.5207e-02, -5.1676e-02, -1.8766e-02, -4.0103e-02, 4.8529e-02]],...,[[-2.9073e-02, -2.5656e-02, -4.1748e-02, 4.8099e-02, -3.9880e-02],[-1.1213e-02, -4.6002e-02, -4.4866e-02, 1.9938e-03, 5.5519e-02],[-4.7684e-02, 7.0067e-03, -4.8152e-02, 5.3141e-02, 4.1690e-02],[-2.8877e-02, 2.6818e-02, 4.1975e-02, -4.2013e-02, -4.5729e-02],[-1.0308e-03, 2.4134e-02, -4.6047e-02, -3.2336e-02, 3.0536e-02]],[[ 3.6514e-03, -4.1822e-02, -5.4447e-02, -1.8092e-03, -2.8325e-02],[ 5.6619e-02, 4.9003e-02, 3.5209e-02, 2.1405e-02, -1.5977e-02],[-2.2021e-02, -1.4353e-02, 2.2963e-02, 3.5575e-02, 2.6225e-02],[-2.3421e-02, -3.8486e-02, 4.3817e-02, -4.5809e-02, -5.7451e-02],[-2.3403e-02, -3.5608e-02, -5.5202e-02, 3.1219e-03, 5.2714e-02]],[[-2.4017e-02, 2.7344e-02, -3.8430e-02, -4.2425e-02, 1.8982e-02],[-3.2787e-02, -7.2447e-03, 3.5838e-02, 7.2880e-03, -1.8575e-02],[-4.2010e-02, -2.8528e-02, -1.4606e-02, -2.0051e-02, 2.5324e-02],[-3.4352e-02, 2.1891e-02, -2.2975e-02, -1.7091e-02, -1.9999e-02],[ 4.9602e-02, -2.0096e-03, 5.6375e-02, 9.1986e-03, -3.1043e-02]]],[[[ 2.9504e-02, -3.3338e-02, -3.9572e-02, 4.8774e-02, -1.9312e-02],[-1.8269e-02, -4.9871e-02, -1.9919e-02, -2.4939e-02, -4.7786e-02],[-3.8914e-02, 1.0305e-03, -1.2295e-03, 6.5598e-03, 7.5420e-04],[ 3.9938e-03, 1.5085e-02, 1.7318e-02, -3.5563e-02, 
4.9810e-02],[ 2.2569e-02, -2.0196e-02, -1.4355e-02, 1.2871e-02, 4.0089e-03]],[[-2.8787e-02, 3.4114e-03, -3.6673e-03, -4.0377e-02, 1.7047e-03],[ 2.8056e-02, -9.6168e-03, -2.6062e-02, -4.4891e-02, -5.6187e-02],[ 7.6519e-03, -2.5823e-02, 4.0850e-02, 8.9825e-03, -4.3226e-02],[-4.7101e-02, 3.4003e-02, 1.2406e-02, 3.2654e-02, -1.5588e-02],[ 2.4781e-02, -1.3819e-02, -1.0470e-02, -3.8978e-02, 2.8552e-02]],[[-1.2191e-02, 2.4574e-02, -1.2759e-02, 1.0204e-02, -3.8920e-02],[-4.7290e-02, -8.2822e-03, -1.4608e-02, -3.2785e-02, -2.0993e-02],[ 2.1857e-02, 1.6716e-02, -5.4426e-02, -1.9320e-02, -1.4398e-02],[-2.0823e-02, 5.0982e-02, -3.7109e-02, 2.2250e-03, 4.0010e-02],[ 3.0249e-02, 3.9417e-02, -2.4593e-02, 4.8670e-02, 5.4934e-02]],...,[[-2.6042e-02, -4.8701e-02, 1.9671e-02, 1.6025e-02, 1.5332e-02],[-2.7460e-02, -2.9994e-02, -4.4949e-02, -1.4278e-02, -2.1574e-02],[-5.6778e-02, 4.0920e-02, -5.2823e-02, -2.5261e-02, -1.5468e-02],[ 5.0426e-02, 3.2968e-02, -4.9911e-02, 1.7937e-02, 1.8698e-02],[ 5.2937e-02, -4.9497e-02, -4.6185e-02, -5.4948e-02, -5.4088e-02]],[[ 3.1639e-03, 1.1487e-02, 2.9878e-03, 3.6307e-02, 2.7507e-02],[-6.7106e-03, -2.4675e-02, 8.0342e-03, -1.3175e-02, 3.3116e-02],[-1.7064e-02, -7.0472e-03, -3.5217e-02, 5.1772e-02, 1.4480e-03],[ 2.6057e-02, -1.0618e-02, -2.5451e-02, -4.4518e-02, -3.7743e-02],[-1.6037e-02, -2.1040e-03, -5.2004e-02, -3.0045e-02, -5.2870e-02]],[[-3.8264e-02, 4.5870e-02, -3.5810e-02, -1.9642e-02, 3.8383e-02],[ 2.3727e-02, 2.5647e-03, -3.9806e-02, 3.9353e-02, 5.2143e-02],[ 4.3153e-03, -2.6572e-03, 4.4094e-02, -1.6438e-02, 3.9486e-02],[-4.1081e-03, -3.8600e-03, 5.2646e-02, 1.7077e-02, -3.9488e-02],[ 3.8189e-02, 1.3658e-02, -3.9377e-02, -5.7080e-02, 5.0436e-04]]]],device='cuda:0', requires_grad=True)

Parameter containing:

tensor([-0.0526, 0.0124, -0.0394, 0.0130, 0.0484, -0.0356, -0.0515, 0.0451,0.0352, -0.0290, -0.0181, 0.0570], device='cuda:0',requires_grad=True)

Parameter containing:

tensor([1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1.], device='cuda:0',requires_grad=True)

Parameter containing:

tensor([0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.], device='cuda:0',requires_grad=True)

Parameter containing:

tensor([[[[-4.3393e-02, -1.9702e-02, 1.5823e-02, -5.2596e-02, 8.8547e-03],[ 1.6535e-02, -3.0951e-02, 2.7444e-02, 2.8257e-02, -4.3796e-02],[-4.6715e-03, -1.3344e-02, 5.5102e-02, -4.3840e-02, 1.3523e-02],[-3.3789e-02, -1.4194e-02, -1.4318e-02, 2.7611e-02, -4.8364e-02],[ 3.6455e-02, 5.0694e-02, 9.6924e-04, -5.1052e-02, -2.2502e-02]],[[-3.3091e-02, 1.0991e-02, -2.6153e-02, -1.5658e-02, -3.6574e-02],[ 4.3908e-02, -1.7977e-02, 4.8424e-02, -4.6695e-02, 2.3936e-02],[ 5.7117e-03, 4.7569e-02, -2.4338e-02, -1.1156e-02, -3.7163e-02],[-6.7889e-03, 2.5740e-03, -5.6094e-02, 6.4805e-03, -7.2748e-03],[-3.4819e-02, -5.4112e-02, 1.8623e-02, 4.4873e-02, 2.4577e-02]],[[ 2.1554e-02, -5.5628e-02, 7.6746e-03, 2.2270e-02, 3.9728e-02],[ 1.6932e-02, -5.0935e-02, -1.9438e-02, -3.7333e-02, -4.0664e-02],[ 5.0430e-02, -1.1277e-02, 4.0554e-02, -3.0072e-02, -3.3959e-02],[ 4.3340e-02, -4.1159e-02, 4.1016e-02, 2.8226e-02, -3.1860e-02],[ 4.8755e-02, -3.9886e-02, -4.8266e-02, -4.8674e-02, -2.2487e-02]],...,[[-3.6580e-02, -1.7776e-02, 5.7069e-02, -5.0038e-02, 4.6597e-02],[ 3.3335e-02, 3.0059e-02, -1.8499e-02, -5.4038e-02, 4.6671e-02],[ 1.1269e-02, -7.1936e-04, -1.9285e-02, -5.2828e-02, 1.0113e-02],[-1.8447e-02, -3.9199e-02, -2.3072e-02, -5.2358e-02, 4.8257e-02],[-1.7670e-02, -2.9976e-02, -5.3904e-02, -2.7855e-02, 5.2615e-02]],[[-4.0428e-02, 2.3450e-02, 2.4196e-02, 2.8038e-02, 2.7047e-02],[ 2.3967e-02, -4.6150e-02, 5.1170e-02, -4.1910e-03, 1.5826e-02],[-1.2196e-02, 1.7125e-02, -2.9103e-02, -4.7419e-02, 5.5972e-02],[-5.3242e-02, -4.1744e-02, 2.5940e-03, -2.1499e-02, 1.8111e-02],[-3.9439e-02, -5.3816e-02, -5.3358e-02, 1.6220e-02, 9.0951e-03]],[[-2.5779e-02, 1.8832e-03, -8.6694e-03, -7.8553e-03, -3.5193e-02],[ 4.3611e-02, -2.5024e-02, -1.8608e-03, 2.9665e-02, -2.3949e-02],[-2.4898e-03, 5.3940e-02, 5.4607e-03, 5.1272e-02, -1.3228e-03],[ 4.6906e-02, -5.8347e-03, -3.4845e-03, -2.3169e-02, -1.7511e-02],[-4.2166e-02, 2.8267e-02, -4.0925e-02, 1.7048e-02, 4.5771e-02]]],[[[ 3.1948e-02, -1.2083e-02, 9.9202e-03, 
-3.1588e-02, -2.1082e-02],[-2.6696e-02, -1.2512e-02, 3.2264e-02, 5.0794e-02, 2.4878e-02],[ 2.1373e-02, 4.5792e-02, -5.3931e-02, 3.0916e-03, 3.5746e-02],[-4.3344e-02, 1.7856e-02, -3.4874e-02, 5.2352e-02, 3.3698e-02],[ 4.7357e-02, -1.3911e-02, -4.4321e-02, -6.9109e-03, -9.4972e-05]],[[-3.8852e-02, -1.8029e-02, -5.2169e-02, 3.2907e-02, -3.0267e-02],[ 3.2567e-02, 2.1711e-02, -1.2914e-02, -4.5172e-02, -5.6557e-02],[ 3.4985e-02, 1.0991e-02, 1.9835e-02, -3.9212e-02, -4.2300e-02],[-4.4954e-02, -5.6341e-02, 2.2882e-02, 1.9402e-02, -5.5204e-02],[-5.2020e-03, -3.2613e-02, 1.6321e-02, -2.0038e-02, 7.4434e-03]],[[-5.0909e-02, 2.1867e-02, 3.1042e-02, 2.8418e-02, 2.7121e-02],[-2.7746e-03, 1.6255e-03, -9.6929e-03, 5.5831e-02, 3.7760e-02],[-4.8461e-02, -5.7336e-02, -4.7220e-02, -3.9434e-02, -2.8993e-02],[ 3.0191e-02, -2.6163e-02, 2.3583e-02, 2.4678e-02, 3.7768e-02],[ 3.6002e-02, -5.2207e-02, 1.1358e-02, -3.1582e-02, 3.8125e-03]],...,[[-4.0346e-02, -7.9137e-03, 1.4050e-02, 8.7913e-03, 1.2644e-02],[ 5.5082e-02, 5.3481e-02, -3.1151e-02, -2.0433e-02, -3.0660e-02],[ 2.6514e-02, 1.0212e-02, -2.5300e-02, 1.0645e-02, -3.8355e-02],[-4.0115e-02, -2.7833e-02, -3.6738e-02, -1.7261e-02, 3.8978e-02],[ 4.5288e-02, 1.1125e-02, -3.4610e-02, 1.6689e-02, -4.9436e-02]],[[ 2.2909e-02, 8.6307e-03, 1.7003e-02, 3.3835e-02, -3.1730e-02],[ 1.5669e-02, -4.6926e-02, -3.0182e-02, -3.4038e-02, -1.9528e-02],[-2.5644e-02, 2.4236e-02, -4.1166e-02, -5.2237e-02, -1.2767e-02],[-2.8088e-02, 5.4521e-02, 3.1146e-02, -3.8993e-02, 5.0811e-02],[-3.4987e-02, -1.8760e-02, -1.5899e-02, 2.7304e-02, -4.4466e-02]],[[-4.1364e-02, -1.1947e-02, -5.3464e-02, -2.1780e-02, 5.2713e-02],[-2.3782e-03, 4.5209e-02, -1.7166e-02, -2.4829e-02, 3.7713e-03],[ 1.4040e-02, 6.5331e-03, 1.1878e-02, 3.6791e-02, 5.6504e-02],[-5.4320e-02, 3.8940e-03, 4.0814e-03, -4.5454e-02, -9.0520e-04],[ 4.7516e-02, -4.5387e-02, 2.7438e-02, 3.4538e-03, -4.0870e-03]]],[[[-2.8538e-02, -2.9724e-02, -6.3884e-03, 1.7631e-02, -2.2743e-02],[-2.5766e-02, 7.0066e-04, 
-1.2442e-02, 2.5966e-02, 4.9824e-02],[-1.5107e-02, 4.9925e-02, -5.6049e-02, -3.8182e-02, 1.4881e-02],[ 3.2231e-02, -4.8313e-02, -2.8049e-02, -3.9517e-02, -2.0198e-02],[-1.7834e-02, 5.3966e-02, 1.8842e-02, 2.9159e-02, -2.5236e-02]],[[-3.6249e-02, -1.4029e-02, -5.1196e-02, 7.1040e-03, -1.8686e-02],[ 3.3060e-02, 2.7813e-03, -2.5725e-02, 1.6811e-02, -4.4237e-02],[-3.3256e-02, 4.7695e-02, -8.2575e-03, 9.3455e-03, 4.2799e-02],[ 2.0925e-03, 2.3671e-02, 1.2230e-02, -4.6037e-02, 3.3533e-03],[ 2.0114e-03, 9.4641e-03, 3.5257e-02, -2.5262e-02, -6.8958e-03]],[[-4.2327e-02, -5.1569e-02, -1.7445e-02, 2.8846e-02, -3.3348e-02],[-3.5933e-02, -4.9062e-02, -1.9634e-02, 4.9813e-02, 2.5616e-02],[ 5.6835e-02, -4.6371e-03, 5.5716e-02, 2.6323e-02, -5.0564e-02],[-2.3622e-02, 4.2030e-02, 2.7993e-02, 1.4487e-02, 1.3411e-03],[-5.3233e-02, 3.8830e-02, 1.0610e-02, 5.0090e-02, -7.2898e-03]],...,[[-3.7513e-02, 3.6601e-02, 9.8219e-03, 4.9729e-02, 1.2196e-02],[-2.5048e-02, -3.7832e-02, -1.8812e-02, -3.0897e-02, 1.7486e-03],[-3.4712e-02, -3.0691e-02, 4.1162e-02, 2.8908e-02, 3.9809e-02],[ 4.3811e-02, -3.2406e-02, 5.4954e-02, -5.4319e-02, 2.7378e-02],[-4.6015e-02, 2.0500e-02, -3.6620e-02, 1.0391e-02, -1.4360e-02]],[[-5.4064e-02, -4.9923e-02, 9.0568e-03, 2.7361e-02, 3.0152e-02],[ 1.5140e-03, -3.2261e-02, -5.7437e-02, 4.6700e-02, -3.2702e-02],[-1.3607e-02, 4.4696e-02, -9.0395e-03, 1.6407e-03, -3.5551e-02],[ 1.4253e-02, -5.1538e-02, 1.4135e-02, -4.9532e-03, -5.1421e-02],[-1.2397e-02, -5.1868e-02, -5.0479e-02, -5.1543e-02, 1.6274e-02]],[[-3.6875e-02, -2.9565e-02, 2.4207e-02, -2.5219e-02, 3.3831e-03],[-4.4595e-02, 2.7371e-03, 3.5197e-02, 4.6792e-02, 6.9583e-03],[ 2.1292e-02, -3.7290e-02, -1.1254e-02, 2.5695e-02, -1.3295e-02],[ 4.6468e-02, -2.4094e-02, -5.5154e-02, 1.1623e-02, 4.5315e-02],[-1.6372e-02, -1.1510e-02, -2.5126e-02, -3.3675e-02, 7.5242e-03]]],...,[[[ 9.6421e-03, 2.5606e-02, -1.1575e-03, -6.3610e-03, -2.3608e-02],[-5.3660e-02, -1.7265e-02, -7.9241e-03, -3.6515e-02, -1.3875e-02],[ 1.7385e-05, 
-1.0175e-02, -2.6411e-02, -2.9259e-02, -2.3777e-02],[-5.3561e-02, 4.1268e-02, 1.5934e-03, 3.6634e-02, -5.7525e-02],[-2.5928e-02, 5.2736e-02, 4.8662e-02, -8.1288e-03, 4.6342e-02]],[[-2.3142e-03, -1.5459e-03, 5.2505e-02, -2.1955e-02, 5.6238e-02],[-4.4134e-03, -5.2630e-02, -2.0574e-02, -1.6546e-02, -2.2536e-02],[ 3.2089e-02, -4.3899e-02, -2.8285e-02, 4.4318e-02, -7.8456e-03],[-6.8220e-03, -3.1780e-02, 9.0042e-03, -5.3753e-02, 5.2375e-02],[-3.6622e-02, -4.9866e-02, 1.7392e-02, -3.5998e-02, -1.8328e-02]],[[ 3.5683e-02, 3.0768e-02, -5.6631e-03, -3.9362e-02, -2.7071e-02],[ 2.3681e-02, -5.5181e-02, -2.7547e-02, -3.6996e-02, -2.2275e-02],[-5.4568e-02, -9.3689e-03, 8.1780e-03, -3.8608e-02, 9.4536e-03],[-6.7571e-03, 2.6980e-02, 4.8332e-02, 5.3948e-02, -2.6705e-02],[ 2.7594e-03, 1.2697e-02, -3.7670e-02, -3.1037e-02, 4.8120e-02]],...,[[ 4.7340e-04, -2.1782e-02, 3.7973e-02, 2.3803e-02, -3.7041e-02],[-2.1907e-02, 1.9787e-02, 4.9948e-02, -2.7256e-02, 5.1226e-02],[ 2.4355e-02, 3.6013e-02, -3.5562e-02, -3.2971e-02, 4.4055e-02],[-4.3542e-02, -4.5033e-04, 3.2259e-02, 5.5906e-02, -4.2678e-02],[-5.0952e-02, -2.5642e-02, 3.4505e-02, -5.0838e-02, 6.3299e-03]],[[-1.8066e-02, 9.2364e-03, 2.1775e-02, 3.5480e-02, 4.5085e-02],[ 1.5985e-02, 3.6757e-02, 2.7058e-02, -4.6332e-02, -5.2632e-02],[ 5.3516e-02, 1.7730e-02, -2.5545e-02, -3.5675e-04, 3.7615e-04],[ 3.6890e-02, -1.6223e-02, 9.7240e-03, 3.6815e-02, -3.3791e-02],[-3.1941e-02, 2.7248e-02, 5.2518e-02, -2.7165e-02, -2.4478e-02]],[[-4.1402e-02, 5.6141e-02, -3.0138e-02, 3.3564e-02, 3.5593e-02],[ 5.5081e-02, -5.2176e-02, 5.7258e-02, 1.3976e-02, -1.5439e-02],[-4.4326e-02, 3.1095e-03, 1.9244e-02, -4.4056e-02, 4.0178e-02],[-1.9885e-03, 7.4720e-03, -1.8580e-02, 4.8064e-02, 1.0835e-02],[-1.4230e-02, -3.6621e-02, 1.4199e-02, -2.3409e-03, 2.2698e-02]]],[[[-9.6354e-03, -9.9076e-03, -5.1627e-02, 3.8357e-02, -4.3520e-02],[-5.4843e-02, 3.8106e-02, -1.3494e-02, 4.9996e-02, 3.5638e-03],[-2.7890e-02, 6.2526e-03, -1.6921e-02, -3.6320e-02, 
-5.1004e-02],[-1.7164e-03, 7.8063e-04, 1.0567e-02, -2.5437e-02, -1.5472e-02],[ 2.9616e-02, 2.2005e-02, 3.3341e-02, 5.5215e-02, -3.8884e-02]],[[ 3.4728e-02, -2.2543e-02, 3.1360e-02, 6.9470e-03, -1.1227e-02],[ 3.0435e-02, -4.6206e-02, -7.2949e-03, -1.6219e-02, -3.4318e-02],[-5.6189e-02, -4.4805e-02, -1.7007e-02, 4.0465e-02, -1.1705e-02],[-2.2523e-02, -3.2318e-02, 3.8327e-02, -3.3378e-02, 2.0614e-02],[-9.4022e-03, 4.6063e-02, 2.6816e-02, 4.3897e-02, -2.2937e-02]],[[ 2.0164e-02, -1.3753e-02, -4.4331e-02, 4.8648e-03, -3.4258e-02],[-5.9295e-03, -4.2128e-02, -1.0395e-02, -2.8470e-02, -3.8904e-02],[ 4.2056e-02, -1.5321e-02, -5.4812e-03, 3.9591e-02, -8.0334e-03],[-5.0221e-02, 5.8469e-03, 5.2948e-02, -4.5542e-02, -4.8381e-02],[ 1.9196e-02, 3.4126e-02, -2.5747e-02, -3.2973e-02, 1.2743e-02]],...,[[ 5.2216e-02, 3.4517e-02, -1.9344e-02, 2.1945e-02, -8.7788e-03],[-4.8170e-02, 2.6933e-02, 5.6317e-02, -2.3254e-02, 2.2648e-03],[-9.0354e-03, -4.1885e-02, -1.9850e-02, 2.8009e-02, -8.2476e-03],[-4.6430e-02, -1.0547e-02, 4.0647e-02, 2.2058e-02, 5.3941e-02],[-4.8411e-02, 4.4979e-02, 3.1898e-02, -3.4932e-03, -4.6966e-02]],[[-4.1183e-02, 4.2140e-02, -3.1094e-02, 2.2745e-03, -1.6978e-02],[-4.7396e-02, 4.7654e-02, 2.2162e-06, -4.9743e-02, 1.9207e-03],[ 3.8321e-02, -1.2374e-02, -2.8978e-02, 3.3631e-02, -1.4325e-03],[-4.5332e-02, -2.8657e-03, -4.2569e-02, 3.2186e-03, 4.0338e-02],[ 5.4117e-02, -6.1246e-03, 3.1750e-02, 4.4451e-03, 8.0913e-03]],[[ 1.5026e-02, -1.7792e-02, -5.4613e-02, 2.3343e-03, -3.5947e-02],[ 9.7298e-03, -3.6770e-02, 3.4731e-02, -3.8064e-02, -3.6569e-02],[ 1.7383e-02, 2.4368e-02, -2.9315e-02, -6.9810e-03, -4.4046e-02],[ 1.8019e-02, 8.9441e-03, 7.6759e-03, -5.5034e-02, 1.3000e-02],[-6.1246e-03, 2.7351e-02, -5.0639e-02, -3.0510e-02, -1.5391e-02]]],[[[ 3.2440e-02, -5.4653e-02, -4.0238e-02, 2.6531e-02, -6.4406e-03],[ 6.8616e-03, 1.1521e-02, -5.2743e-02, -4.5631e-02, -1.9761e-03],[-2.3601e-02, 5.7238e-02, 1.6757e-02, -5.2229e-02, 4.5697e-02],[-1.7275e-02, -4.9420e-02, -5.4931e-02, 
-1.2029e-02, 4.5080e-02],[-3.9085e-02, -4.7324e-02, -9.1896e-03, -8.0668e-03, 4.1337e-02]],[[-8.2878e-03, 2.9522e-02, 6.7958e-03, 5.4831e-02, -4.8300e-02],[-3.6666e-02, 5.5407e-03, 4.8755e-02, 1.1368e-02, 1.9199e-02],[-1.6297e-02, 1.9881e-02, -2.5075e-02, -1.3650e-02, 8.8226e-03],[-1.8700e-02, -2.3155e-02, 5.7246e-02, -8.5036e-03, -2.5621e-02],[ 1.6033e-02, -5.2570e-02, -2.4244e-02, 4.4380e-02, 8.1392e-03]],[[-2.3694e-04, 2.0962e-02, 6.8800e-03, 3.7337e-02, -1.0049e-02],[-3.5632e-02, 3.0171e-02, 4.0477e-02, 5.1821e-02, 5.7561e-02],[-2.7109e-02, 5.4052e-02, -4.0591e-03, -2.0345e-02, 4.5038e-02],[ 4.4379e-02, -1.6092e-02, 1.4859e-02, -3.7222e-03, 4.8547e-02],[ 2.8474e-02, 4.1188e-02, 2.2538e-02, -3.6703e-02, 3.4549e-02]],...,[[-3.7689e-02, -1.0651e-02, 2.5426e-02, -2.9747e-02, 4.3810e-02],[-5.7667e-03, -1.5752e-02, 1.5073e-02, 4.4594e-02, -1.9026e-02],[ 3.2722e-02, -4.9479e-02, -5.3110e-02, -2.3039e-02, -5.5471e-02],[ 5.9091e-03, 3.4835e-02, 2.0828e-02, -2.2268e-03, 3.1578e-03],[ 1.8602e-02, 4.6325e-02, -2.3408e-02, -3.3139e-02, 1.5890e-02]],[[-2.2580e-02, -3.5659e-02, 2.0554e-02, 3.4855e-02, 3.8585e-02],[-4.7058e-02, 1.8050e-02, -1.2235e-02, 1.7946e-02, 5.5639e-02],[-1.3726e-02, -1.7434e-03, 4.0122e-02, 7.8085e-03, -3.5843e-02],[ 3.7617e-02, -5.6894e-02, -1.0999e-02, -2.7811e-02, 1.1625e-02],[ 2.5916e-02, 5.1480e-02, 4.5092e-03, 1.9102e-02, -1.1060e-02]],[[-5.2774e-03, 4.8809e-02, -2.8911e-03, -4.6811e-03, 1.3546e-02],[ 5.2580e-02, 4.3174e-02, -1.8207e-02, -4.2579e-02, 1.7383e-03],[ 5.2123e-02, 4.9834e-02, -4.7467e-02, -3.2865e-02, 1.2208e-02],[ 1.9134e-02, -4.3586e-03, -5.7675e-02, 1.3249e-02, 6.0633e-03],[ 5.4718e-02, 3.3295e-02, -3.9032e-02, -2.5888e-02, -5.0122e-02]]]],device='cuda:0', requires_grad=True)

Parameter containing:

tensor([-0.0343, -0.0105, 0.0554, -0.0327, -0.0157, -0.0483, -0.0158, -0.0054,0.0225, -0.0075, 0.0459, -0.0377, -0.0098, 0.0114, -0.0332, 0.0041,-0.0243, 0.0254, 0.0243, 0.0441, -0.0314, 0.0164, -0.0312, -0.0081],device='cuda:0', requires_grad=True)

Parameter containing:

tensor([1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1.,1., 1., 1., 1., 1., 1.], device='cuda:0', requires_grad=True)

Parameter containing:

tensor([0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.],device='cuda:0', requires_grad=True)

Parameter containing:

tensor([[[[-2.4765e-02, -1.7735e-02, -2.2314e-02, 4.1485e-03, 1.2194e-02],[-2.5217e-02, 3.0319e-02, 3.9987e-02, 2.7033e-02, 3.5071e-03],[-1.5179e-02, -3.7771e-02, 2.6642e-02, -5.0335e-03, 1.6665e-02],[-9.2902e-03, 1.8668e-02, 1.4462e-02, 3.1703e-02, 1.2906e-02],[-1.6104e-03, 3.0867e-02, 1.8716e-02, 3.8913e-02, -3.0275e-02]],[[-1.4637e-02, 2.5277e-02, -1.4589e-03, -8.8839e-03, -1.3487e-02],[-1.4275e-03, 3.3354e-02, -8.6240e-03, -8.6344e-03, 1.7886e-02],[ 1.5236e-02, -3.6411e-02, 3.6606e-02, 2.8865e-02, 7.2129e-03],[ 2.6995e-02, 2.2488e-03, 3.3801e-02, 3.3280e-02, 3.4757e-02],[ 2.4791e-02, 1.9653e-02, -2.2077e-02, -3.3391e-02, -1.6241e-02]],[[-3.6686e-02, -2.8631e-02, 3.0150e-02, 3.8176e-02, -2.7273e-02],[ 2.5665e-02, -2.7832e-02, -2.8505e-02, 1.6879e-02, -1.9434e-02],[ 3.1808e-02, 1.2339e-02, 3.7241e-02, -2.5811e-02, 3.6366e-02],[ 1.2803e-02, -2.5175e-02, -2.7616e-02, -1.6782e-02, 2.8047e-02],[-2.2803e-02, -1.5379e-02, -5.2964e-03, -5.4955e-03, -6.4809e-03]],...,[[-4.6762e-03, 8.5698e-03, -1.4288e-02, 2.8600e-02, -8.7856e-03],[ 2.2729e-02, -3.0590e-02, 3.9707e-02, -5.3177e-03, -3.1247e-02],[ 2.9181e-02, -2.0759e-02, -2.1634e-02, 1.3287e-02, 2.4968e-02],[ 3.4698e-03, -2.9572e-02, 1.5503e-02, 1.7875e-02, 2.9701e-02],[ 3.9612e-02, 3.8441e-02, -7.2631e-03, -3.3924e-02, 1.2610e-02]],[[ 3.6460e-02, -6.1630e-03, -1.4716e-02, 1.9261e-02, -2.9819e-02],[ 4.0327e-02, 5.1599e-03, 3.5214e-02, -4.2533e-03, -1.8357e-03],[ 2.9551e-02, 2.9900e-02, 9.3425e-04, -2.8236e-02, 1.8200e-02],[-6.2033e-03, -1.8919e-03, 2.1001e-03, 2.8031e-02, -1.0653e-02],[-2.3770e-02, -1.9679e-02, 3.4040e-02, -1.7531e-02, 2.8961e-02]],[[-3.7468e-02, 1.0085e-02, -1.4514e-02, -9.3508e-03, -2.1063e-02],[ 3.8456e-02, -2.3931e-02, 3.9429e-02, -3.1255e-02, 3.9015e-02],[-1.2115e-03, -3.4697e-02, -2.5641e-02, -2.1814e-03, 1.8698e-02],[-3.3705e-02, -3.9880e-02, 3.8480e-02, 3.7270e-02, 1.5184e-02],[ 2.8033e-03, -2.7198e-02, -1.6893e-02, -2.3830e-02, -1.6191e-03]]],[[[ 1.7724e-02, -1.8517e-02, 4.0073e-02, 2.3778e-02, 
-3.6122e-02],[ 2.0455e-02, 8.5373e-03, 2.4218e-02, 2.4424e-02, -6.7260e-03],[-2.5273e-02, -2.6570e-03, 1.1071e-02, -2.2359e-02, 7.1240e-03],[ 2.0457e-02, 1.5521e-02, -1.6968e-02, 2.2347e-02, -3.0078e-02],[ 3.1897e-02, -5.7144e-04, 3.5381e-02, -3.6819e-02, 3.9591e-02]],[[ 2.2192e-02, -4.0246e-02, 2.2996e-02, 3.2615e-02, -2.7935e-02],[ 2.2529e-02, 3.6758e-02, -2.2256e-02, -4.0569e-02, -3.9135e-02],[-3.2102e-02, -1.7423e-02, -3.5665e-02, 3.9809e-02, 3.7980e-02],[-3.2966e-02, -3.1640e-02, 2.7921e-02, 2.6152e-03, -1.8984e-02],[ 3.1796e-02, 2.1449e-02, 6.5183e-03, -1.9734e-02, 2.4427e-02]],[[ 1.9604e-02, 1.9464e-02, 1.5228e-02, 3.0998e-02, 9.7725e-03],[-3.8247e-02, 5.2945e-03, -9.8893e-03, 2.2337e-02, 2.4320e-03],[-2.9608e-02, -2.7739e-02, -1.6549e-02, -1.5780e-02, 8.6404e-03],[ 5.8460e-03, 3.1634e-02, -2.2685e-02, -2.7120e-02, -2.1898e-02],[ 3.8658e-02, 3.7018e-02, 4.0656e-02, -3.6532e-02, 2.7710e-02]],...,[[-1.6787e-02, 2.8266e-02, -1.3804e-02, -4.0432e-03, -1.9968e-02],[-3.8386e-02, -1.8282e-02, 4.2764e-03, -1.5567e-02, -1.6459e-02],[ 3.2305e-02, -2.8748e-02, 2.8317e-02, -5.9219e-04, 2.9662e-02],[-1.4855e-02, -3.0055e-02, -3.3090e-02, -2.8315e-02, -2.2627e-02],[-2.2141e-02, 6.7039e-03, 7.8689e-03, 4.0769e-02, 2.8226e-02]],[[-2.9580e-02, -8.3730e-03, 1.9745e-02, 3.5386e-02, 4.0687e-02],[-2.9807e-02, -4.0623e-02, 3.8496e-02, 3.8080e-02, -2.0054e-02],[ 3.6833e-02, -3.9444e-02, -9.3106e-03, 3.7075e-02, -9.2252e-03],[-1.9581e-02, -3.0419e-02, -3.2400e-02, -3.2106e-02, 2.0089e-02],[ 2.2214e-02, -3.8889e-02, -2.4273e-02, 2.8646e-02, -2.0902e-02]],[[ 2.6044e-02, 1.3506e-02, -3.5586e-02, 3.3742e-02, 3.7720e-03],[ 2.7820e-02, 2.6476e-02, 2.9901e-04, -2.0872e-02, -1.3234e-02],[-2.7877e-02, -3.0645e-02, -1.3512e-02, 4.7893e-03, 3.7626e-02],[-5.5791e-03, -3.4532e-02, 3.0803e-02, -7.9363e-04, 2.8644e-02],[ 1.1443e-02, -5.3269e-03, 3.8751e-02, -3.1376e-02, 1.9648e-02]]],[[[ 2.3520e-02, 3.9405e-02, 2.7236e-02, -1.4398e-03, 3.0713e-02],[ 1.3709e-03, 2.2644e-02, 3.4241e-02, 
-1.4733e-02, 3.9979e-02],[ 2.5306e-02, -1.3574e-02, 1.7837e-02, -3.2824e-02, 6.2002e-03],[-4.7620e-04, -5.5864e-03, -3.2258e-02, 1.9227e-02, 7.1254e-04],[ 1.6651e-02, -3.1621e-02, -8.5946e-04, -3.7131e-02, 2.8180e-02]],[[-3.9176e-02, -2.0964e-02, 2.9344e-03, -2.3874e-02, -2.2375e-02],[-3.1348e-02, -1.1454e-02, -5.9225e-03, -1.9528e-02, -2.0506e-02],[-3.9908e-02, -3.5269e-02, 2.9826e-02, -1.0333e-02, -3.4846e-02],[-3.0744e-02, -4.0618e-02, 1.2259e-02, 5.8339e-03, -1.3615e-02],[-1.8398e-02, -4.0223e-03, -2.1993e-03, 3.4312e-02, -3.2382e-02]],[[ 1.2827e-02, -1.8611e-03, -2.5796e-02, -2.7791e-02, -1.3923e-02],[ 2.1942e-02, 1.2841e-02, 3.4694e-02, 3.1515e-02, 6.5245e-03],[ 2.4174e-02, 2.6253e-02, -3.7449e-03, 2.6624e-02, -1.5550e-02],[ 1.9385e-02, 2.5331e-02, -3.5731e-02, -1.6060e-02, 2.2018e-02],[-3.5428e-02, -3.3113e-02, -1.4647e-02, 7.9271e-03, -9.9434e-03]],...,[[-3.0034e-02, 5.5409e-03, -4.7236e-03, 1.0790e-02, -4.4663e-03],[-1.9605e-02, 3.3866e-02, 3.5620e-04, -4.0249e-02, 9.3119e-03],[-3.0403e-02, -9.8221e-03, -1.3170e-02, 7.7145e-03, -5.9557e-03],[ 2.5364e-02, -2.6397e-02, -2.7853e-02, 5.3411e-03, 6.1778e-03],[-2.7727e-02, -2.7513e-02, 6.8216e-03, -2.7446e-02, 7.6623e-03]],[[ 2.7238e-02, 2.5387e-02, 2.0489e-02, 2.5743e-04, 8.8515e-03],[-2.1749e-02, 1.6888e-02, -3.1753e-02, -3.6710e-02, -9.6594e-03],[-1.7069e-02, 3.8358e-02, -8.4443e-03, 2.1373e-02, 1.2767e-02],[-8.7264e-03, 2.7989e-02, 2.3082e-02, 1.1195e-02, 8.2422e-03],[-1.2187e-03, 4.0239e-02, 4.0421e-02, 1.1262e-02, 3.1662e-02]],[[-3.5505e-02, -1.9352e-02, -6.5112e-03, 5.9180e-04, 1.6644e-02],[ 2.4671e-02, -6.6554e-03, 5.1865e-03, 1.3817e-02, 2.9876e-02],[ 2.7839e-02, -1.1419e-02, -1.3900e-02, 8.0933e-03, -1.4796e-02],[-5.1000e-03, 2.2708e-03, 1.0319e-02, -1.6282e-02, 4.0429e-02],[-2.4016e-02, -5.1243e-03, -1.7296e-02, 1.3517e-02, -3.9768e-02]]],...,[[[ 2.8284e-02, 3.8412e-02, -2.0484e-02, -2.4660e-02, -5.4223e-03],[-3.9787e-02, 1.0600e-02, 1.0667e-02, 9.1971e-03, 1.9710e-02],[-1.0082e-02, 1.5203e-02, 
-4.8096e-03, 1.3306e-03, 2.1829e-02],[ 2.5151e-02, -1.4450e-04, -2.1206e-02, -8.7957e-03, 4.0249e-02],[ 3.7190e-02, -3.4275e-03, -2.3738e-02, 6.0999e-03, 5.7159e-03]],[[ 1.4283e-02, 3.6204e-02, 1.1918e-02, 3.7461e-02, -3.2172e-02],[-2.0945e-02, 1.9187e-02, 3.7013e-02, 1.0665e-02, 1.3875e-02],[ 1.3069e-02, -1.5626e-02, 1.4363e-02, -3.2514e-02, -4.0107e-02],[ 2.3930e-03, -1.3743e-02, -7.3757e-03, 1.7989e-02, -3.0615e-02],[-5.6852e-04, -3.4268e-02, 2.7766e-02, 3.9503e-02, 1.6245e-02]],[[-4.5333e-03, -1.3381e-02, 3.4192e-02, -7.8036e-03, -3.5599e-02],[-3.4851e-02, -8.3401e-03, 1.9849e-02, -3.1701e-02, 1.1752e-02],[-5.2992e-03, -2.1896e-03, 1.3599e-02, 2.3380e-02, -3.4891e-02],[ 1.1581e-02, -2.9756e-02, -1.1204e-02, 3.8311e-02, -1.0786e-02],[-2.3986e-03, -2.3622e-02, 3.8532e-02, -2.3107e-02, 7.3541e-03]],...,[[-1.6414e-02, -1.0646e-02, 8.3095e-04, -1.6034e-02, 3.2443e-02],[ 2.0135e-02, -9.2456e-03, -3.6807e-02, 1.3763e-02, -6.7230e-03],[-7.9442e-03, -1.8994e-02, 3.9740e-02, -9.1644e-04, -1.8118e-02],[ 3.9622e-02, -1.1209e-03, 2.7081e-02, -1.1274e-02, 2.2439e-02],[ 2.1780e-02, -1.3448e-02, -2.3663e-02, 3.0393e-02, -8.5657e-04]],[[-1.6307e-02, -2.1879e-02, -1.3104e-02, 3.4300e-02, -5.6016e-03],[-2.4497e-02, -3.4338e-02, -1.2164e-02, 1.9887e-02, 3.1091e-02],[ 7.8670e-04, 3.8434e-02, -3.2476e-02, -2.9295e-02, -3.7748e-02],[ 2.3975e-02, -1.3433e-03, -5.9956e-04, -2.8767e-02, 2.9004e-03],[-7.7005e-03, 2.2033e-03, -1.0736e-02, -3.5385e-02, -6.5584e-03]],[[ 3.1709e-03, -2.5768e-03, 5.9727e-03, 4.3966e-03, 6.0488e-03],[ 2.0709e-02, -2.1493e-02, 2.7367e-02, -2.2778e-02, -2.1788e-02],[-1.6171e-02, -2.9823e-02, 1.5866e-02, 1.9103e-02, -1.0853e-02],[ 1.4687e-03, -3.3195e-03, -2.8090e-02, 1.3785e-03, 2.1003e-02],[-1.0703e-03, 7.2539e-03, 1.2703e-02, -3.0166e-02, -3.7519e-03]]],[[[ 2.0147e-02, -7.8512e-03, 2.1201e-03, 2.7301e-02, 2.2117e-03],[-1.2692e-02, 2.5100e-02, -3.5172e-02, -3.4259e-02, -3.8073e-02],[ 1.1940e-02, -8.2582e-03, 1.8954e-02, 3.9249e-02, 1.0248e-02],[ 3.3904e-02, 
-3.6658e-02, 2.3872e-02, 2.5232e-02, 1.7597e-02],[-1.7348e-02, -1.8948e-02, -3.1278e-02, 2.6166e-02, 3.2563e-02]],[[-1.8429e-02, 3.5163e-02, 1.8164e-02, 7.7108e-03, 2.3776e-02],[-2.6492e-02, 3.2009e-02, 1.5956e-02, 2.9387e-03, 1.6997e-02],[-3.4833e-02, -2.1851e-02, -3.3467e-02, 4.8601e-03, 3.8871e-02],[ 3.4503e-02, -3.9968e-03, 2.4355e-02, -3.8838e-02, -2.4707e-02],[ 2.0123e-02, -2.6569e-03, -2.4942e-03, -3.3806e-02, 1.1710e-02]],[[-2.7447e-02, 3.3669e-02, -1.9655e-02, 1.0345e-02, 2.1952e-02],[-3.0007e-02, 1.0382e-02, -3.5980e-04, 3.8827e-04, 2.9315e-02],[ 3.0474e-03, -1.2380e-02, -3.7343e-03, -8.9831e-03, 3.6197e-02],[-2.4068e-02, -1.6151e-02, 5.1995e-03, -2.4225e-02, 9.3679e-03],[ 6.9929e-03, -3.7653e-03, 5.3342e-03, -3.1699e-02, 1.4815e-02]],...,[[ 2.9328e-02, 2.0352e-02, -2.1761e-02, -2.7371e-02, 1.9102e-02],[ 1.1877e-02, 2.3006e-02, 1.4865e-02, 3.2129e-02, -2.9597e-02],[-3.5431e-02, -8.3991e-03, -2.0841e-02, 1.8332e-02, 3.7251e-03],[ 1.2275e-02, -7.5657e-03, -1.0787e-02, -2.0918e-02, -1.3345e-02],[ 2.6193e-02, -1.7719e-02, -2.6880e-03, -2.8453e-02, 3.2366e-02]],[[-2.2178e-02, 2.2958e-02, 3.2606e-02, 2.3568e-02, 3.6797e-02],[-3.9332e-02, -2.3038e-02, 1.7174e-02, -1.3975e-02, -9.0988e-03],[ 5.0126e-03, 2.0888e-02, 2.2238e-02, 1.5904e-02, 2.3810e-03],[ 2.3291e-02, 6.6413e-03, -9.9701e-03, 2.0130e-02, -2.0031e-03],[-2.8262e-02, 6.6573e-03, -5.0547e-03, 2.8676e-02, 5.6036e-04]],[[ 2.5365e-02, -4.9310e-03, 2.5356e-02, -2.6112e-02, 3.6472e-02],[ 8.2630e-03, -1.4996e-02, -4.8876e-03, 3.1789e-02, -2.8300e-02],[-2.8581e-02, 3.1031e-03, 1.8537e-02, -2.3389e-02, 6.3368e-04],[ 2.1395e-02, -2.0442e-02, -1.7464e-02, 2.6370e-02, -3.4423e-03],[-8.7594e-03, 1.8723e-02, 2.5701e-03, 1.8597e-02, -3.8589e-02]]],[[[-5.6868e-03, -3.6660e-03, 1.0115e-02, 5.3396e-03, -2.3511e-03],[ 1.5847e-02, -1.8601e-02, 3.5551e-02, -1.2622e-02, 3.4081e-02],[-3.5609e-02, -1.3660e-02, 3.9343e-02, 2.9453e-02, 4.1732e-04],[ 2.9150e-02, -2.2917e-02, 2.0494e-02, -3.3299e-02, 1.4031e-04],[ 3.7475e-03, 
-3.4443e-02, 3.5568e-02, 2.6202e-02, -2.6924e-02]],[[ 2.0897e-02, -5.8324e-03, 5.0832e-03, 1.1690e-02, 1.2060e-02],[-2.4613e-02, 2.6944e-02, -6.2512e-03, 1.9881e-02, 2.1538e-02],[ 3.1339e-02, 2.8380e-02, 2.0433e-02, -1.3667e-02, 1.2539e-02],[-3.2840e-02, -2.1102e-02, -1.9208e-02, -3.3452e-02, 5.0336e-03],[ 2.9125e-02, 8.9002e-03, -2.4055e-02, 9.7758e-03, -5.8162e-03]],[[ 9.8884e-03, -2.1451e-02, 2.1183e-02, -2.0039e-02, 6.9009e-03],[-2.1800e-02, 3.4313e-02, 2.4045e-02, -1.5496e-02, 9.0193e-03],[-4.1909e-03, -2.9477e-02, 6.9498e-03, 1.3369e-02, 2.2160e-03],[ 2.6711e-02, 2.2580e-02, -2.5143e-02, 4.0218e-02, 2.0031e-02],[ 5.2612e-03, 3.2146e-02, -1.5808e-02, -5.3031e-04, 1.8030e-02]],...,[[-3.4652e-02, -3.3289e-02, 4.2032e-03, -2.7956e-02, -1.1632e-03],[ 3.0004e-02, -3.8272e-02, -4.1440e-05, 3.2091e-02, 3.5329e-02],[ 4.0688e-03, 1.4292e-02, 4.8408e-03, 2.4958e-03, -4.2248e-03],[-2.5160e-02, 2.6122e-02, 3.7325e-02, 1.6107e-02, 3.2206e-02],[-2.8236e-02, 3.4360e-02, 3.8573e-02, 3.9449e-02, -1.1734e-03]],[[ 4.9711e-03, 1.0630e-02, 2.5379e-02, -1.3348e-02, 3.7514e-02],[ 2.9509e-02, -2.5026e-02, -1.8784e-02, -2.0663e-02, -3.7027e-02],[-3.7471e-02, -1.6006e-03, 2.3505e-02, -4.6965e-03, 2.8748e-02],[-1.1987e-02, -1.0230e-02, -3.2931e-02, -3.2549e-02, -2.9455e-02],[ 6.2422e-03, 2.0774e-02, 1.2155e-02, 5.2143e-03, 4.0720e-02]],[[ 1.0049e-02, -2.6256e-02, -3.7294e-02, -3.9350e-02, -2.1656e-02],[-3.8875e-02, 1.5199e-02, 6.2172e-03, 1.4338e-02, 1.4441e-02],[-3.5924e-02, -3.9949e-02, -1.6941e-02, 2.4640e-02, -7.7310e-03],[ 1.1742e-02, -9.4309e-03, 1.9659e-02, 1.4060e-02, 4.3048e-03],[-3.6885e-03, -1.5537e-02, 1.6142e-02, 9.7565e-03, 3.7728e-02]]]],device='cuda:0', requires_grad=True)

Parameter containing:

tensor([ 0.0096, -0.0325, 0.0406, 0.0143, -0.0151, 0.0116, 0.0243, 0.0351,-0.0078, -0.0354, 0.0267, 0.0402, 0.0232, -0.0246, -0.0159, 0.0190,-0.0214, 0.0077, 0.0048, 0.0228, -0.0077, -0.0340, -0.0037, 0.0102],device='cuda:0', requires_grad=True)

Parameter containing:

tensor([1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1.,1., 1., 1., 1., 1., 1.], device='cuda:0', requires_grad=True)

Parameter containing:

tensor([0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.],device='cuda:0', requires_grad=True)

Parameter containing:

tensor([[-0.0012, -0.0019, 0.0004, ..., -0.0018, -0.0019, -0.0035],[ 0.0004, -0.0022, -0.0010, ..., -0.0031, 0.0027, -0.0037]],device='cuda:0', requires_grad=True)

Parameter containing:

tensor([-0.0030, 0.0039], device='cuda:0', requires_grad=True)

The code above prints every parameter in the model.

for name, parameters in model.named_parameters():
    print(name, ':', parameters.size())

conv1.0.weight : torch.Size([12, 3, 5, 5])

conv1.0.bias : torch.Size([12])

conv1.1.weight : torch.Size([12])

conv1.1.bias : torch.Size([12])

conv2.0.weight : torch.Size([12, 12, 5, 5])

conv2.0.bias : torch.Size([12])

conv2.1.weight : torch.Size([12])

conv2.1.bias : torch.Size([12])

conv4.0.weight : torch.Size([24, 12, 5, 5])

conv4.0.bias : torch.Size([24])

conv4.1.weight : torch.Size([24])

conv4.1.bias : torch.Size([24])

conv5.0.weight : torch.Size([24, 24, 5, 5])

conv5.0.bias : torch.Size([24])

conv5.1.weight : torch.Size([24])

conv5.1.bias : torch.Size([24])

fc.0.weight : torch.Size([2, 60000])

fc.0.bias : torch.Size([2])

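This loop prints the name and shape of every parameter in the model.

It relies on the named_parameters() method, which returns an iterator over the model's parameters together with their names.

For each element of the iterator we print the parameter's name and size, which makes it easier to see which layer each parameter belongs to and how large it is.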
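As a quick check on the shapes printed above, the number of scalars in a parameter is the product of its dimensions. The sketch below hard-codes the shapes from that output, so it runs without PyTorch, and sums them. The names shapes and count_params are illustrative and not part of the original code.

```python
from math import prod

# Shapes copied from the named_parameters() output above.
shapes = {
    "conv1.0.weight": (12, 3, 5, 5),
    "conv1.0.bias": (12,),
    "conv1.1.weight": (12,),
    "conv1.1.bias": (12,),
    "conv2.0.weight": (12, 12, 5, 5),
    "conv2.0.bias": (12,),
    "conv2.1.weight": (12,),
    "conv2.1.bias": (12,),
    "conv4.0.weight": (24, 12, 5, 5),
    "conv4.0.bias": (24,),
    "conv4.1.weight": (24,),
    "conv4.1.bias": (24,),
    "conv5.0.weight": (24, 24, 5, 5),
    "conv5.0.bias": (24,),
    "conv5.1.weight": (24,),
    "conv5.1.bias": (24,),
    "fc.0.weight": (2, 60000),
    "fc.0.bias": (2,),
}


def count_params(shape_table):
    """Return {name: element count} for a table of parameter shapes."""
    return {name: prod(shape) for name, shape in shape_table.items()}


counts = count_params(shapes)
total = sum(counts.values())

print(f"total parameters: {total}")
print(f"fc.0.weight share: {counts['fc.0.weight'] / total:.1%}")
```

With the live model, the same total should come from sum(p.numel() for p in model.parameters()). The breakdown also shows that the single fully connected layer holds most of the parameters.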

IV. Training the Model

1. Writing the Training Function

# Training loop
def train(dataloader, model, loss_fn, optimizer):
    size = len(dataloader.dataset)  # number of samples in the training set
    num_batches = len(dataloader)   # number of batches (size / batch_size, rounded up)

    train_loss, train_acc = 0, 0  # running loss and running count of correct predictions

    for X, y in dataloader:  # fetch a batch of images and their labels
        X, y = X.to(device), y.to(device)

        # Compute the prediction error
        pred = model(X)          # network output
        loss = loss_fn(pred, y)  # loss between the network output and the true labels y

        # Backpropagation
        optimizer.zero_grad()  # reset the gradients
        loss.backward()        # backpropagate
        optimizer.step()       # update the parameters

        # Record accuracy and loss
        train_acc += (pred.argmax(1) == y).type(torch.float).sum().item()
        train_loss += loss.item()

    train_acc /= size
    train_loss /= num_batches

    return train_acc, train_loss

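This function runs one pass of the training loop. It takes a data loader (dataloader), the model (model), a loss function (loss_fn), and an optimizer (optimizer). On each iteration it fetches one batch of images and labels (X, y) and runs a forward pass to get the predictions pred. It then computes the loss between pred and the true labels y, backpropagates the loss to obtain the gradients, and lets the optimizer update the model parameters.

The loop also accumulates the number of correct predictions in train_acc and the per-batch loss in train_loss. At the end, train_acc is divided by the number of samples and train_loss by the number of batches, and both are returned as the accuracy and average loss for the epoch.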
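The bookkeeping at the end of train() is easy to misread: accuracy is a count of correct predictions divided by the number of samples, while loss is a mean of per-batch losses. Here is a minimal pure-Python sketch of that arithmetic. The batch results are made-up numbers and summarize_epoch is a hypothetical helper, not part of the original code.

```python
def summarize_epoch(batches, dataset_size):
    """Mirror the accumulation in train().

    batches is a list of (num_correct, mean_batch_loss) tuples, one per batch.
    """
    total_correct = 0
    total_loss = 0.0
    for num_correct, batch_loss in batches:
        total_correct += num_correct  # plays the role of (pred.argmax(1) == y).sum()
        total_loss += batch_loss      # plays the role of loss.item()
    acc = total_correct / dataset_size  # divide by the number of samples
    loss = total_loss / len(batches)    # divide by the number of batches
    return acc, loss


# Hypothetical epoch: 10 samples split into batches of 4, 4 and 2.
batches = [(3, 0.60), (4, 0.40), (1, 0.80)]
acc, loss = summarize_epoch(batches, dataset_size=10)
print(f"acc={acc:.2f}, loss={loss:.2f}")
```

Because each batch contributes equally to the loss average, a smaller final batch weighs slightly more per sample than the others. For tracking the trend across epochs this is usually close enough.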

2. Writing the Test Function

def test(dataloader, model, loss_fn):
    size = len(dataloader.dataset)  # number of samples in the test set
    num_batches = len(dataloader)   # number of batches (size / batch_size, rounded up)
    test_loss, test_acc = 0, 0

    # No training happens here, so disable gradient tracking to save memory and compute
    with torch.no_grad():
        for imgs, target in dataloader:
            imgs, target = imgs.to(device), target.to(device)

            # Compute the loss
            target_pred = model(imgs)
            loss = loss_fn(target_pred, target)

            test_loss += loss.item()
            test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()

    test_acc /= size
    test_loss /= num_batches

    return test_acc, test_loss

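This function evaluates the model on the test set, that is, on data it has not seen during training. Each batch is fed through the model, the loss between the predictions and the true labels is computed, and the loss and the number of correct predictions are accumulated over the whole test set.

The function takes a data loader (dataloader), the model (model), and a loss function (loss_fn). The data loader supplies the test images and their true labels, the model produces the predictions, and the loss function measures the gap between the two.

Inside the function, size and num_batches hold the number of test samples and the number of batches. The loop runs under the torch.no_grad() context manager, which turns off gradient tracking and so saves memory and computation. Unlike train(), there is no backward pass and no optimizer step. Finally, the function returns the test accuracy and the average test loss.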

3. Setting a Dynamic Learning Rate

def adjust_learning_rate(optimizer, epoch, start_lr):
    # Multiply the learning rate by 0.92 every 2 epochs
    lr = start_lr * (0.92 ** (epoch // 2))
    for param_group in optimizer.param_groups:
        param_group['lr'] = lr

learn_rate = 1e-4  # initial learning rate
optimizer = torch.optim.SGD(model.parameters(), lr=learn_rate)

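This snippet defines a learning-rate schedule and creates the optimizer. adjust_learning_rate takes three arguments: the optimizer, the current epoch index epoch, and the initial learning rate start_lr. It is called at the start of every epoch and sets the learning rate to start_lr * 0.92 ** (epoch // 2), so the rate is multiplied by 0.92 once every two epochs. The optimizer is stochastic gradient descent (SGD) with an initial learning rate of 1e-4.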
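To see what this schedule produces, the formula can be evaluated on its own, without a model or optimizer. The sketch below reproduces start_lr * 0.92 ** (epoch // 2) in plain Python. The helper name step_decay_lr and its keyword parameters are illustrative and do not appear in the original code.

```python
def step_decay_lr(epoch, start_lr=1e-4, factor=0.92, every=2):
    """Learning rate set by adjust_learning_rate() for a 0-based epoch index."""
    return start_lr * (factor ** (epoch // every))


# Print the rate for a few epoch indices, in the same format as the training log.
for epoch in (0, 1, 2, 3, 4, 38, 39):
    print(f"epoch index {epoch:2d} -> lr {step_decay_lr(epoch):.2E}")
```

The training loop prints epoch + 1, so a log line labelled Epoch: 3 corresponds to epoch index 2, the first index at which the rate drops. If you prefer PyTorch's built-in interface, torch.optim.lr_scheduler.LambdaLR with lr_lambda=lambda epoch: 0.92 ** (epoch // 2), stepped once per epoch, should give the same multipliers.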

4. Running the Training

loss_fn = nn.CrossEntropyLoss()  # create the loss function
epochs = 40

train_loss = []
train_acc = []
test_loss = []
test_acc = []

for epoch in range(epochs):
    # Update the learning rate (used with the custom schedule)
    adjust_learning_rate(optimizer, epoch, learn_rate)

    model.train()
    epoch_train_acc, epoch_train_loss = train(train_dl, model, loss_fn, optimizer)
    # scheduler.step()  # update the learning rate (used with PyTorch's built-in schedulers)

    model.eval()
    epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)

    train_acc.append(epoch_train_acc)
    train_loss.append(epoch_train_loss)
    test_acc.append(epoch_test_acc)
    test_loss.append(epoch_test_loss)

    # Read back the current learning rate
    lr = optimizer.state_dict()['param_groups'][0]['lr']

    template = ('Epoch:{:2d}, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%, Test_loss:{:.3f}, Lr:{:.2E}')
    print(template.format(epoch+1, epoch_train_acc*100, epoch_train_loss, epoch_test_acc*100, epoch_test_loss, lr))

print('Done')

Epoch: 1, Train_acc:55.2%, Train_loss:0.717, Test_acc:52.6%, Test_loss:0.689, Lr:1.00E-04

Epoch: 2, Train_acc:64.1%, Train_loss:0.657, Test_acc:68.4%, Test_loss:0.601, Lr:1.00E-04

Epoch: 3, Train_acc:68.7%, Train_loss:0.601, Test_acc:68.4%, Test_loss:0.578, Lr:9.20E-05

Epoch: 4, Train_acc:75.1%, Train_loss:0.540, Test_acc:76.3%, Test_loss:0.536, Lr:9.20E-05

Epoch: 5, Train_acc:74.3%, Train_loss:0.521, Test_acc:75.0%, Test_loss:0.539, Lr:8.46E-05

Epoch: 6, Train_acc:78.9%, Train_loss:0.484, Test_acc:75.0%, Test_loss:0.573, Lr:8.46E-05

Epoch: 7, Train_acc:80.3%, Train_loss:0.456, Test_acc:77.6%, Test_loss:0.516, Lr:7.79E-05

Epoch: 8, Train_acc:82.7%, Train_loss:0.449, Test_acc:77.6%, Test_loss:0.532, Lr:7.79E-05

Epoch: 9, Train_acc:82.9%, Train_loss:0.435, Test_acc:80.3%, Test_loss:0.556, Lr:7.16E-05

Epoch:10, Train_acc:85.1%, Train_loss:0.418, Test_acc:80.3%, Test_loss:0.525, Lr:7.16E-05

Epoch:11, Train_acc:86.7%, Train_loss:0.394, Test_acc:82.9%, Test_loss:0.492, Lr:6.59E-05

Epoch:12, Train_acc:87.3%, Train_loss:0.390, Test_acc:78.9%, Test_loss:0.449, Lr:6.59E-05

Epoch:13, Train_acc:88.4%, Train_loss:0.375, Test_acc:82.9%, Test_loss:0.525, Lr:6.06E-05

Epoch:14, Train_acc:86.5%, Train_loss:0.375, Test_acc:80.3%, Test_loss:0.474, Lr:6.06E-05

Epoch:15, Train_acc:89.4%, Train_loss:0.344, Test_acc:80.3%, Test_loss:0.475, Lr:5.58E-05

Epoch:16, Train_acc:90.8%, Train_loss:0.330, Test_acc:82.9%, Test_loss:0.466, Lr:5.58E-05

Epoch:17, Train_acc:88.6%, Train_loss:0.352, Test_acc:80.3%, Test_loss:0.469, Lr:5.13E-05

Epoch:18, Train_acc:89.6%, Train_loss:0.337, Test_acc:77.6%, Test_loss:0.512, Lr:5.13E-05

Epoch:19, Train_acc:90.8%, Train_loss:0.328, Test_acc:80.3%, Test_loss:0.472, Lr:4.72E-05

Epoch:20, Train_acc:91.2%, Train_loss:0.326, Test_acc:82.9%, Test_loss:0.481, Lr:4.72E-05

Epoch:21, Train_acc:92.0%, Train_loss:0.314, Test_acc:80.3%, Test_loss:0.455, Lr:4.34E-05

Epoch:22, Train_acc:91.4%, Train_loss:0.315, Test_acc:80.3%, Test_loss:0.486, Lr:4.34E-05

Epoch:23, Train_acc:93.8%, Train_loss:0.301, Test_acc:82.9%, Test_loss:0.420, Lr:4.00E-05

Epoch:24, Train_acc:92.4%, Train_loss:0.293, Test_acc:80.3%, Test_loss:0.435, Lr:4.00E-05

Epoch:25, Train_acc:93.4%, Train_loss:0.292, Test_acc:81.6%, Test_loss:0.432, Lr:3.68E-05

Epoch:26, Train_acc:92.2%, Train_loss:0.294, Test_acc:81.6%, Test_loss:0.441, Lr:3.68E-05

Epoch:27, Train_acc:93.0%, Train_loss:0.296, Test_acc:80.3%, Test_loss:0.436, Lr:3.38E-05

Epoch:28, Train_acc:93.2%, Train_loss:0.283, Test_acc:80.3%, Test_loss:0.483, Lr:3.38E-05

Epoch:29, Train_acc:92.0%, Train_loss:0.281, Test_acc:80.3%, Test_loss:0.429, Lr:3.11E-05

Epoch:30, Train_acc:94.2%, Train_loss:0.271, Test_acc:80.3%, Test_loss:0.489, Lr:3.11E-05

Epoch:31, Train_acc:94.0%, Train_loss:0.276, Test_acc:80.3%, Test_loss:0.448, Lr:2.86E-05

Epoch:32, Train_acc:94.8%, Train_loss:0.267, Test_acc:84.2%, Test_loss:0.426, Lr:2.86E-05

Epoch:33, Train_acc:94.4%, Train_loss:0.267, Test_acc:80.3%, Test_loss:0.428, Lr:2.63E-05

Epoch:34, Train_acc:94.2%, Train_loss:0.266, Test_acc:81.6%, Test_loss:0.430, Lr:2.63E-05

Epoch:35, Train_acc:94.8%, Train_loss:0.265, Test_acc:82.9%, Test_loss:0.450, Lr:2.42E-05

Epoch:36, Train_acc:94.6%, Train_loss:0.269, Test_acc:80.3%, Test_loss:0.469, Lr:2.42E-05

Epoch:37, Train_acc:95.0%, Train_loss:0.256, Test_acc:82.9%, Test_loss:0.396, Lr:2.23E-05

Epoch:38, Train_acc:94.8%, Train_loss:0.252, Test_acc:81.6%, Test_loss:0.444, Lr:2.23E-05

Epoch:39, Train_acc:93.4%, Train_loss:0.266, Test_acc:81.6%, Test_loss:0.469, Lr:2.05E-05

Epoch:40, Train_acc:94.0%, Train_loss:0.265, Test_acc:81.6%, Test_loss:0.485, Lr:2.05E-05

Done

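This code is a complete training loop covering model training, evaluation on the test set, and learning-rate adjustment. It computes the loss and accuracy on both the training set and the test set and prints them at the end of every epoch.

The main flow of the training loop is:

- Define the loss function and the optimizer;

- Iterate over the training set, compute the error between the predictions and the ground-truth labels, then backpropagate and update the parameters;

- At the end of each epoch, evaluate the model on the test set and record the test loss and accuracy;

- Update the learning rate;

- Print the loss and accuracy for the epoch.

The train() and test() functions handle training on the training set and evaluation on the test set respectively, and adjust_learning_rate() lowers the learning rate as training progresses (in the log above, Lr drops once every two epochs). The outcome can be judged from the printed losses and accuracies: by epoch 40 the training accuracy is 94.0%, while the test accuracy in the later epochs fluctuates between roughly 78% and 84%.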
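The Lr column in the log is consistent with a simple step decay. The sketch below reproduces those values in plain Python. The starting rate of 1e-4, the factor 0.92, and the two-epoch step are all inferred from the logged numbers, and `step_decay_lr` is a hypothetical name, not the `adjust_learning_rate()` used during training.

```python
def step_decay_lr(epoch_index, start_lr=1e-4, factor=0.92, step=2):
    """Return the learning rate for a 0-based epoch index.

    Assumed schedule (inferred from the log, not taken from the training
    code): multiply the rate by `factor` once every `step` epochs.
    """
    return start_lr * factor ** (epoch_index // step)


# The log prints 1-based epoch numbers, so printed epoch N is index N - 1.
for printed_epoch in (9, 11, 13, 40):
    lr = step_decay_lr(printed_epoch - 1)
    print(f"Epoch:{printed_epoch:2d}, Lr:{lr:.2E}")
```

Under these assumptions the printed rates for epochs 9, 11, 13 and 40 are 7.16E-05, 6.59E-05, 6.06E-05 and 2.05E-05, the same values that appear in the log.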

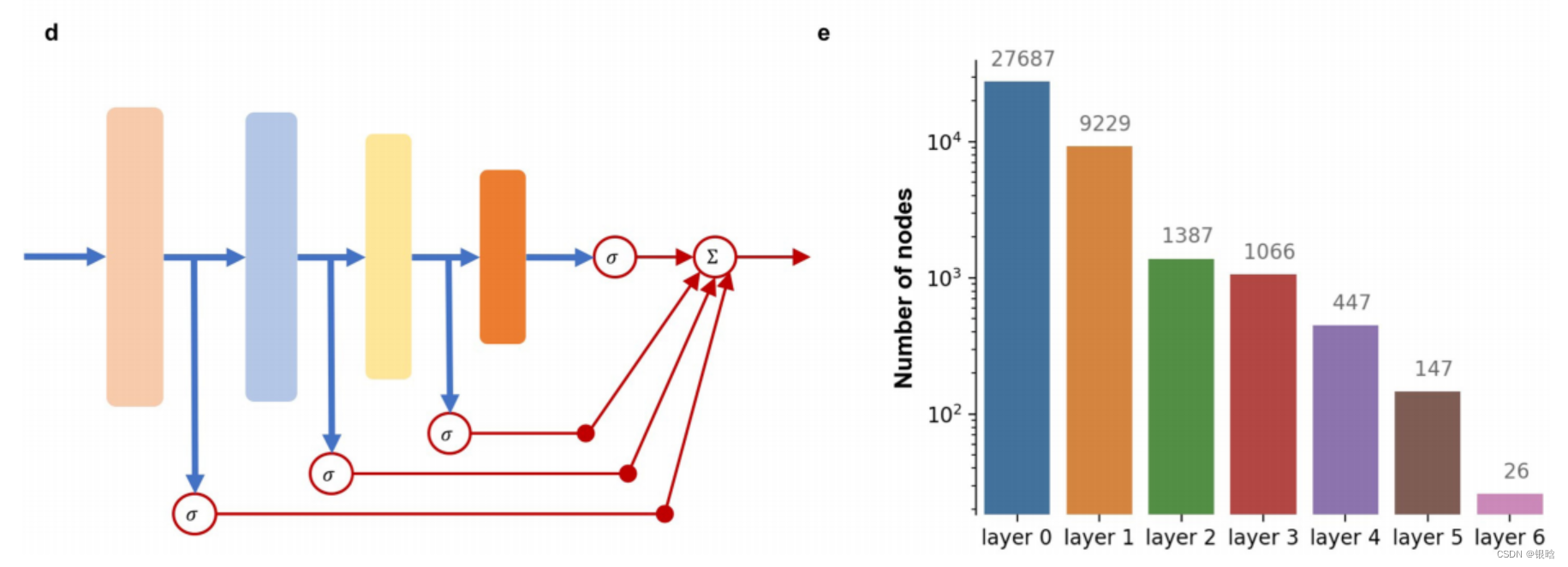

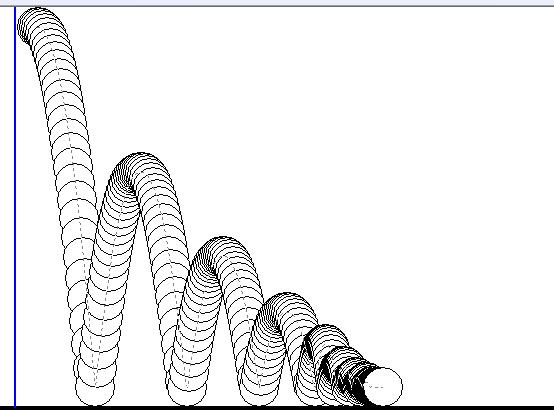

V. Visualizing the Results

import matplotlib.pyplot as plt

# Suppress warnings

import warnings

warnings.filterwarnings("ignore") # ignore warning messages

plt.rcParams['font.sans-serif'] = ['SimHei'] # font that can render Chinese labels

plt.rcParams['axes.unicode_minus'] = False # render the minus sign correctly

plt.rcParams['figure.dpi'] = 100 # figure resolution

epochs_range = range(epochs)

plt.figure(figsize=(12, 3))

# Left panel: accuracy curves

plt.subplot(1, 2, 1)

plt.plot(epochs_range, train_acc, label='Training Accuracy')

plt.plot(epochs_range, test_acc, label='Test Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Test Accuracy')

# Right panel: loss curves

plt.subplot(1, 2, 2)

plt.plot(epochs_range, train_loss, label='Training Loss')

plt.plot(epochs_range, test_loss, label='Test Loss')

plt.legend(loc='upper right')

plt.title('Training and Test Loss')

plt.show()

VI. Predicting a Specified Image

from PIL import Image

classes = list(train_dataset.class_to_idx)

def predict_one_image(image_path, model, transform, classes):
    """Classify a single image file and print the predicted class name."""
    test_img = Image.open(image_path).convert('RGB')
    # plt.imshow(test_img)  # display the image being classified
    test_img = transform(test_img)
    img = test_img.to(device).unsqueeze(0)  # add a batch dimension

    model.eval()
    with torch.no_grad():  # inference only, no gradients needed
        output = model(img)

    _, pred = torch.max(output, 1)
    pred_class = classes[pred.item()]
    print(f'Predicted class: {pred_class}')

# Predict one image from the test set

predict_one_image(image_path='/content/drive/Othercomputers/我的笔记本电脑/深度学习/data/Day14/test/nike/16.jpg', model=model, transform=train_transforms, classes=classes)

Predicted class: nike
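The lookup from the network output to a class name can be illustrated without a model. This is a minimal sketch in plain Python, assuming `train_dataset` is an ImageFolder-style dataset whose `class_to_idx` maps folder names to indices. The `adidas` entry and the score values are made up for illustration, and the `argmax` helper stands in for `torch.max(output, 1)`.

```python
# Hypothetical mapping of the kind an ImageFolder-style dataset exposes.
class_to_idx = {"adidas": 0, "nike": 1}

# list() over a dict yields its keys in insertion order; when the dict was
# built in index order, position i in `classes` names class index i.
classes = list(class_to_idx)


def argmax(values):
    """Index of the largest value (plain-Python stand-in for the index
    returned by torch.max(output, 1))."""
    return max(range(len(values)), key=lambda i: values[i])


logits = [-0.7, 1.3]   # made-up scores for a single image
pred = argmax(logits)  # index of the highest score
print(f"Predicted class: {classes[pred]}")
```

The network itself only returns an index. The class name comes entirely from the order of `classes`, so the same list has to be used at prediction time as during training.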

VII. Saving the Model

# Save the model

PATH = './model.pth' # file name for the saved parameters

torch.save(model.state_dict(), PATH)

# Load the parameters back into model

model.load_state_dict(torch.load(PATH, map_location=device))

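VIII. Modification

I replaced the optimizer with Adam, and accuracy improved: over the last five epochs the test accuracy is 86.8% to 88.2%, compared with 80.3% to 82.9% in the original run. Training accuracy reaches 100% in these epochs, so the remaining error is a gap between training and test performance.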

...

Epoch:36, Train_acc:100.0%, Train_loss:0.017, Test_acc:86.8%, Test_loss:0.291, Lr:2.42E-05

Epoch:37, Train_acc:100.0%, Train_loss:0.015, Test_acc:86.8%, Test_loss:0.379, Lr:2.23E-05

Epoch:38, Train_acc:100.0%, Train_loss:0.015, Test_acc:88.2%, Test_loss:0.366, Lr:2.23E-05

Epoch:39, Train_acc:100.0%, Train_loss:0.014, Test_acc:86.8%, Test_loss:0.300, Lr:2.05E-05

Epoch:40, Train_acc:100.0%, Train_loss:0.014, Test_acc:88.2%, Test_loss:0.365, Lr:2.05E-05

Done
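To compare the runs by number rather than by eye, the printed lines can be parsed back into values. This is a minimal sketch using only the standard library. `parse_log_line` is a hypothetical helper, and the regular expression assumes the exact line format printed in the logs of this post.

```python
import re

LOG_PATTERN = re.compile(
    r"Epoch:\s*(\d+), Train_acc:([\d.]+)%, Train_loss:([\d.]+), "
    r"Test_acc:([\d.]+)%, Test_loss:([\d.]+), Lr:([\d.E+-]+)"
)


def parse_log_line(line):
    """Turn one training-log line into a dict of numbers."""
    match = LOG_PATTERN.search(line)
    if match is None:
        raise ValueError(f"unrecognized log line: {line!r}")
    epoch, train_acc, train_loss, test_acc, test_loss, lr = match.groups()
    return {
        "epoch": int(epoch),
        "train_acc": float(train_acc),
        "train_loss": float(train_loss),
        "test_acc": float(test_acc),
        "test_loss": float(test_loss),
        "lr": float(lr),
    }


# Final-epoch lines copied from the two runs in this post.
original = parse_log_line(
    "Epoch:40, Train_acc:94.0%, Train_loss:0.265, "
    "Test_acc:81.6%, Test_loss:0.485, Lr:2.05E-05"
)
adam = parse_log_line(
    "Epoch:40, Train_acc:100.0%, Train_loss:0.014, "
    "Test_acc:88.2%, Test_loss:0.365, Lr:2.05E-05"
)

for name, run in (("original", original), ("Adam", adam)):
    gap = run["train_acc"] - run["test_acc"]
    print(f"{name}: test_acc={run['test_acc']}%, train-test gap={gap:.1f} points")
```

Parsing every line of a run in the same way would give the lists needed to re-plot or compare the curves without retraining.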

相关文章:

深度学习 Day27——利用Pytorch实现运动鞋识别

深度学习 Day27——利用Pytorch实现运动鞋识别 文章目录深度学习 Day27——利用Pytorch实现运动鞋识别一、查看colab机器配置二、前期准备1、导入依赖项并设置GPU2、导入数据三、构建CNN网络四、训练模型1、编写训练函数2、编写测试函数3、设置动态学习率4、正式训练五、结果可…...

Springboot 整合dom4j 解析xml 字符串 转JSONObject

前言 本文只介绍使用 dom4j 以及fastjson的 方式, 因为平日使用比较多。老的那个json也能转,而且还封装好了XML,但是本文不做介绍。 正文 ①加入 pom 依赖 <dependency><groupId>dom4j</groupId><artifactId>dom4j…...

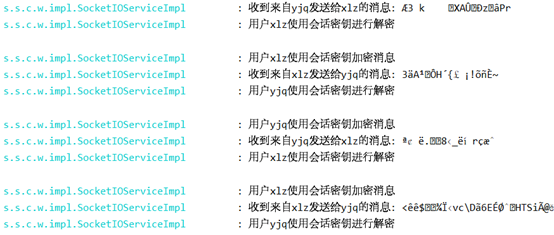

网络安全实验——安全通信软件safechat的设计

网络安全实验——安全通信软件safechat的设计 仅供参考,请勿直接抄袭,抄袭者后果自负。 仓库地址: 后端地址:https://github.com/yijunquan-afk/safechat-server 前端地址: https://github.com/yijunquan-afk/safec…...

【MySQL】MySQL的事务

目录 概念 什么是事务? 理解事务 事务操作 事务的特性 事务的隔离级别 事务的隔离级别-操作 概念 数据库存储引擎是数据库底层软件组织,数据库管理系统(DBMS)使用数据引擎进行创建、查 询、更新和删除数据。 不同的存储引擎提供…...

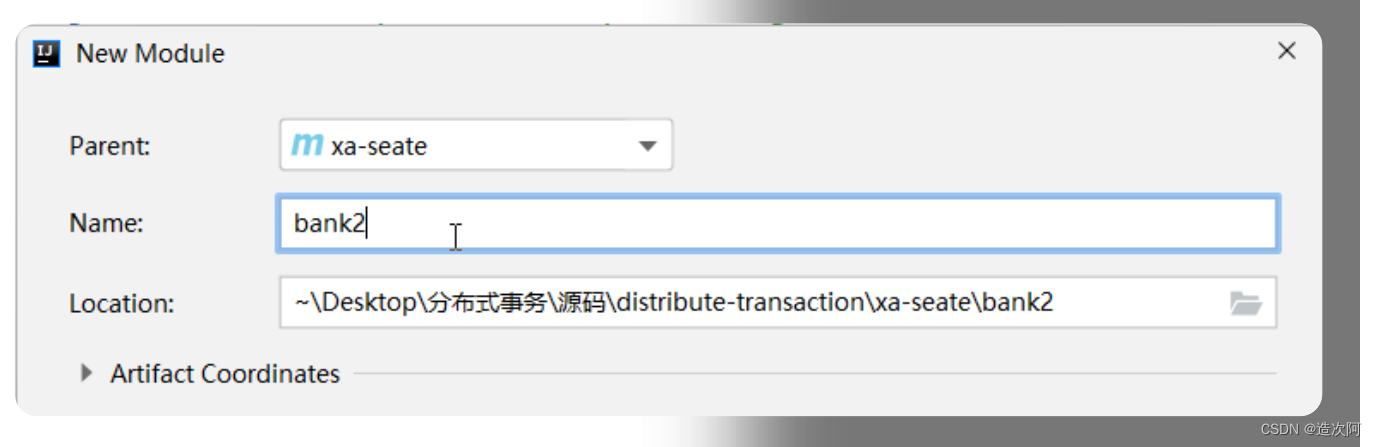

Java分布式事务(七)

文章目录🔥Seata提供XA模式实现分布式事务_业务说明🔥Seata提供XA模式实现分布式事务_下载启动Seata服务🔥Seata提供XA模式实现分布式事务_转账功能实现上🔥Seata提供XA模式实现分布式事务_转账功能实现下🔥Seata提供X…...

二十八、实战演练之定义用户类模型、迁移用户模型类

1. Django默认用户模型类 (1)Django认证系统中提供了用户模型类User保存用户的数据。 User对象是认证系统的核心。 (2)Django认证系统用户模型类位置 django.contrib.auth.models.User(3)父类AbstractUs…...

Java Virtual Machine的结构 3

1 Run-Time Data Areas 1.1 The pc Register 1.2 Java Virtual Machine Stacks 1.3 Heap 1.4 Method Area JVM方法区是在JVM所有线程中共享的内存区域,在编程语言中方法区是用于存储编译的代码、在操作系统进程中方法区是用于存储文本段,在JVM中方法…...

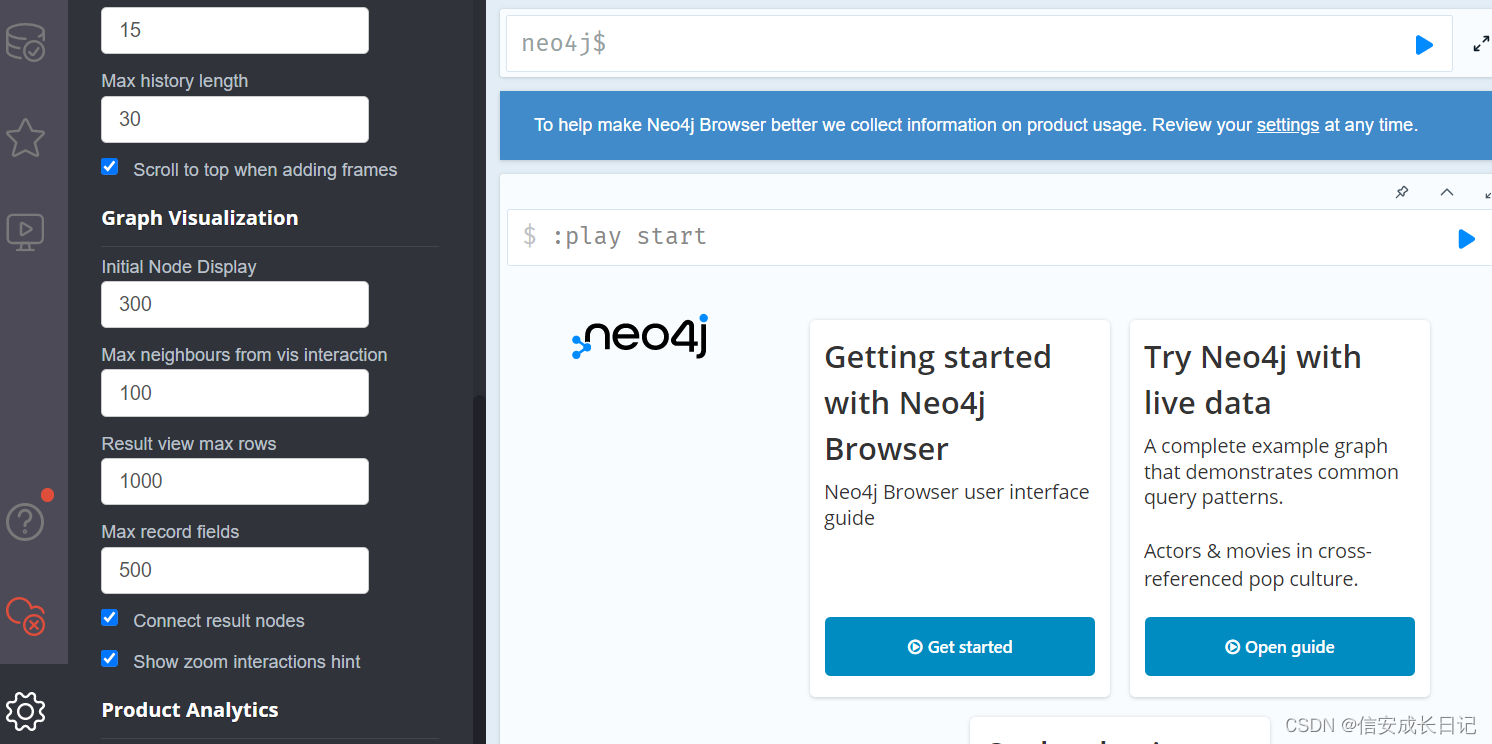

linux ubuntu22 安装neo4j

环境:neo4j 5 ubuntu22 openjdk-17 neo4j 5 对 jre 版本要求是 17 及以上,且最好是 openjdk,使用比较新的 ubuntu 系统安装比较好, centos7 因为没有维护,yum 找不到 openjdk-17了。 官方的 debian 系列安装教程&a…...

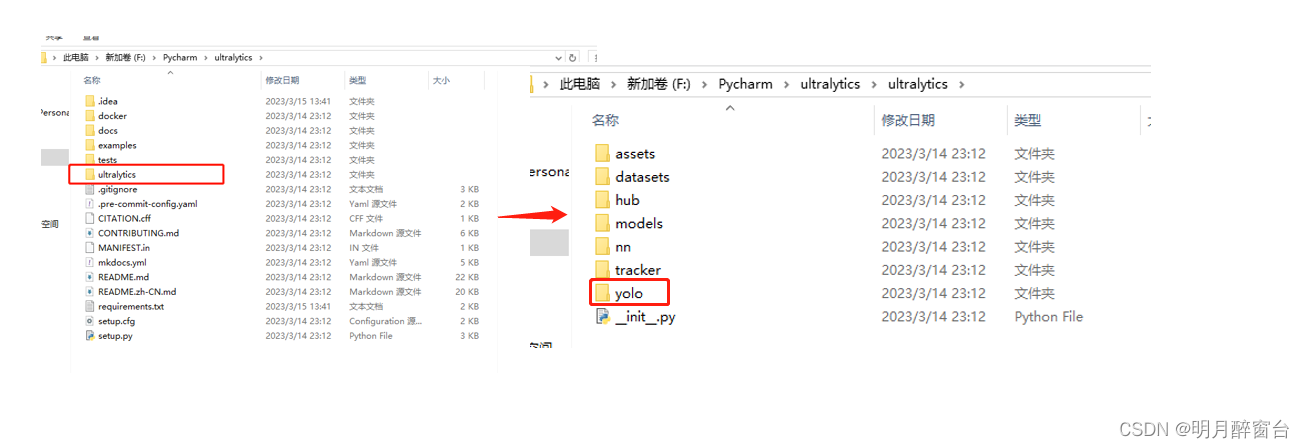

模型实战(7)之YOLOv8推理+训练自己的数据集详解

YOLOv8推理+训练自己的数据集详解 最近刚出的yolov8模型确实很赞啊,亲测同样的数据集用v5和v8两个模型训练+预测,结果显示v8在检测精度和准确度上明显强于v5。下边给出yolov8的效果对比图: 关于v8的结构原理在此不做赘述,随便搜一下到处都是。1.环境搭建 进入github进行git…...

火车进出栈问题 题解

来源 卡特兰数 个人评价(一句话描述对这个题的情感) …~%?..,# *☆&℃$︿★? 1 题面 一列火车n节车厢,依次编号为1,2,3,…,n。每节车厢有两种运动方式,进栈与出栈,问n节车厢出栈的可能排列方式有多少种。 输入…...

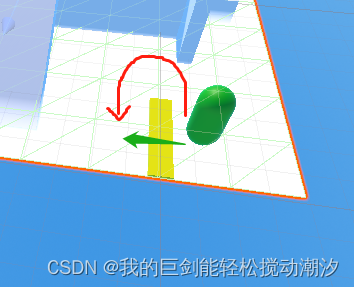

Unity学习日记12(导航走路相关、动作完成度返回参数)

目录 动作的曲线与函数 创建遮罩 导航走路 设置导航网格权重 动作的曲线与函数 执行动作,根据动作完成度返回参数。 函数,在代码内执行同名函数即可调用。在执行关键帧时调用。 创建遮罩 绿色为可效用位置 将其运用到Animator上的遮罩,可…...

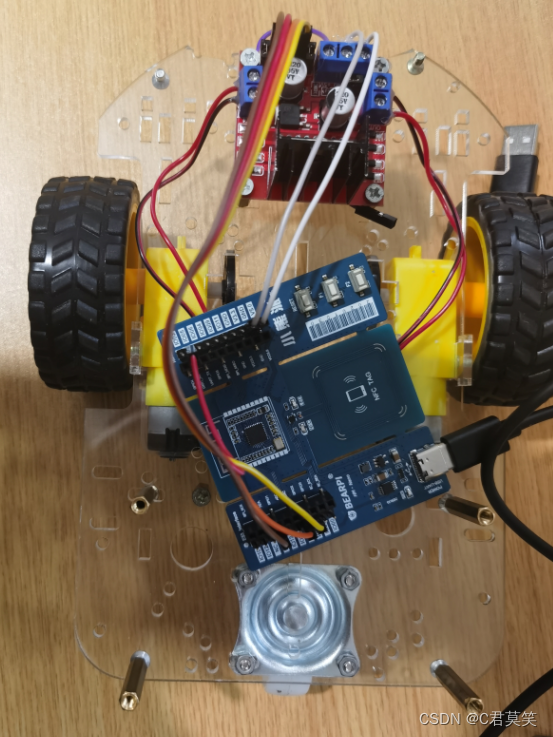

基于bearpi的智能小车--Qt上位机设计

基于bearpi的智能小车--Qt上位机设计 前言一、界面原型1.主界面2.网络配置子窗口模块二、设计步骤1.界面原型设计2.控件添加信号槽3.源码解析3.1.网络链接核心代码3.2.网络设置子界面3.3.小车控制核心代码总结前言 最近入手了两块小熊派开发板,借智能小车案例,进行鸿蒙设备学…...

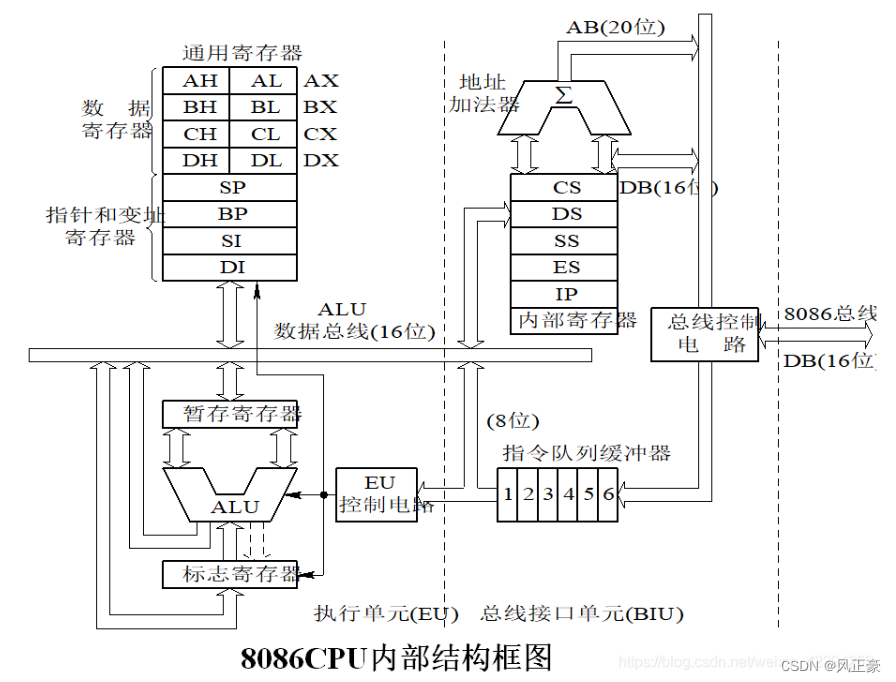

汇编语言与微机原理(1)基础知识

前言(1)本人使用的是王爽老师的汇编语言第四版和学校发的微机原理教材配合学习。(2)推荐视频教程通俗易懂的汇编语言(王爽老师的书);贺老师C站账号网址;(3)文…...

ASEMI代理瑞萨TW8825-LA1-CR汽车芯片

编辑-Z TW8825-LA1-CR在单个封装中集成了创建多用途车载LCD显示系统所需的许多功能。它集成了高质量的2D梳状NTSC/PAL/SECAM视频解码器、三重高速RGB ADC、高质量缩放器、多功能OSD和高性能MCU。TW8825-LA1-CR其图像视频处理能力包括任意缩放、全景缩放、图像镜像、图像调整和…...

什么是 .com 域名?含义和用途又是什么?

随着网络的发展,网络上出现了各种不同后缀的域名,这些域名的后缀各有不同的含义,也有不同的用途。今天,我们就一起来探讨一下 .com 后缀的域名知识。 .com 域名是一种最常见的顶级域名,它是由美国国家网络信息中心&…...

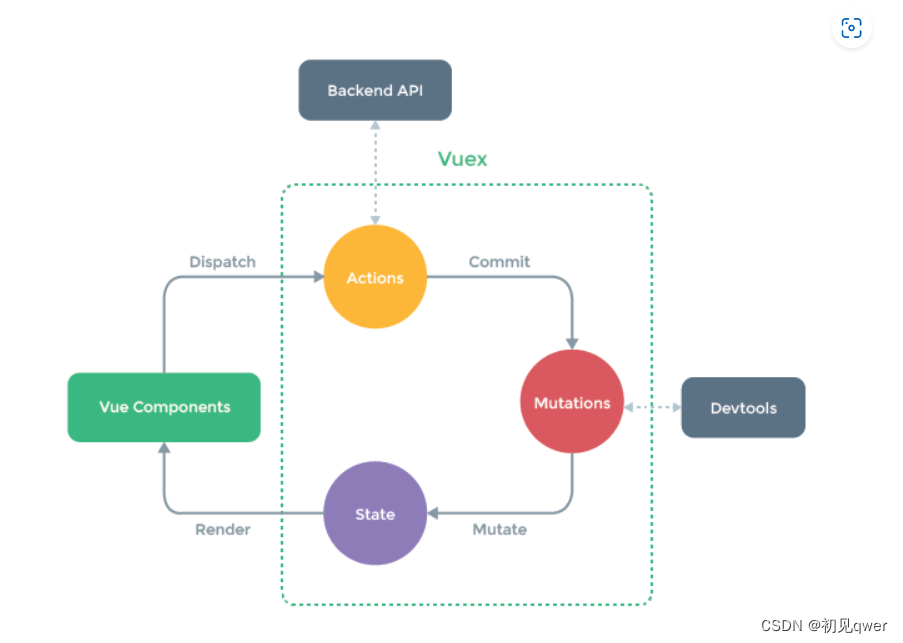

VueX快速入门(适合后端,无脑入门!!!)

文章目录前言State和Mutations基础简化gettersMutationsActions(异步)Module总结前言 作为一个没啥前端基础(就是那种跳过js直接学vue的那种。。。)的后端选手。按照自己的思路总结了一下对VueX的理解。大佬勿喷qAq。 首先我们需要…...

前列腺癌论文笔记

名词解释 MRF: 磁共振指纹打印技术( MR Fingerprinting)是近几年发展起来的最新磁共振技术,以一种全新的方法对数据进行采集、后处理和实现可视化。 MRF使用一种伪随机采集方法,取代了过去为获得个体感兴趣的参数特征而使用重复系列数据的采集方法&…...

Python+Yolov5道路障碍物识别

PythonYolov5道路障碍物识别如需安装运行环境或远程调试,见文章底部个人QQ名片,由专业技术人员远程协助!前言这篇博客针对<<PythonYolov5道路障碍物识别>>编写代码,代码整洁,规则,易读。 学习与…...

全新升级,EasyV 3D高德地图组件全新上线

当我们打开任意一个可视化搭建工具或者搜索数据可视化等关键词,我们会发现「地图」是可视化领域中非常重要的一种形式,对于许多可视化应用场景都具有非常重要的意义,那对于EasyV,地图又意味着什么呢?EasyV作为数字孪生…...

从管理到变革,优秀管理者的进阶之路

作为一位管理者,了解自身需求、企业需求和用户需求是非常重要的。然而,仅仅满足这些需求是不够的。我们还需要进行系统化的思考,以了解我们可以为他人提供什么价值,以及在企业中扮演什么样的角色。只有清晰的自我定位,…...

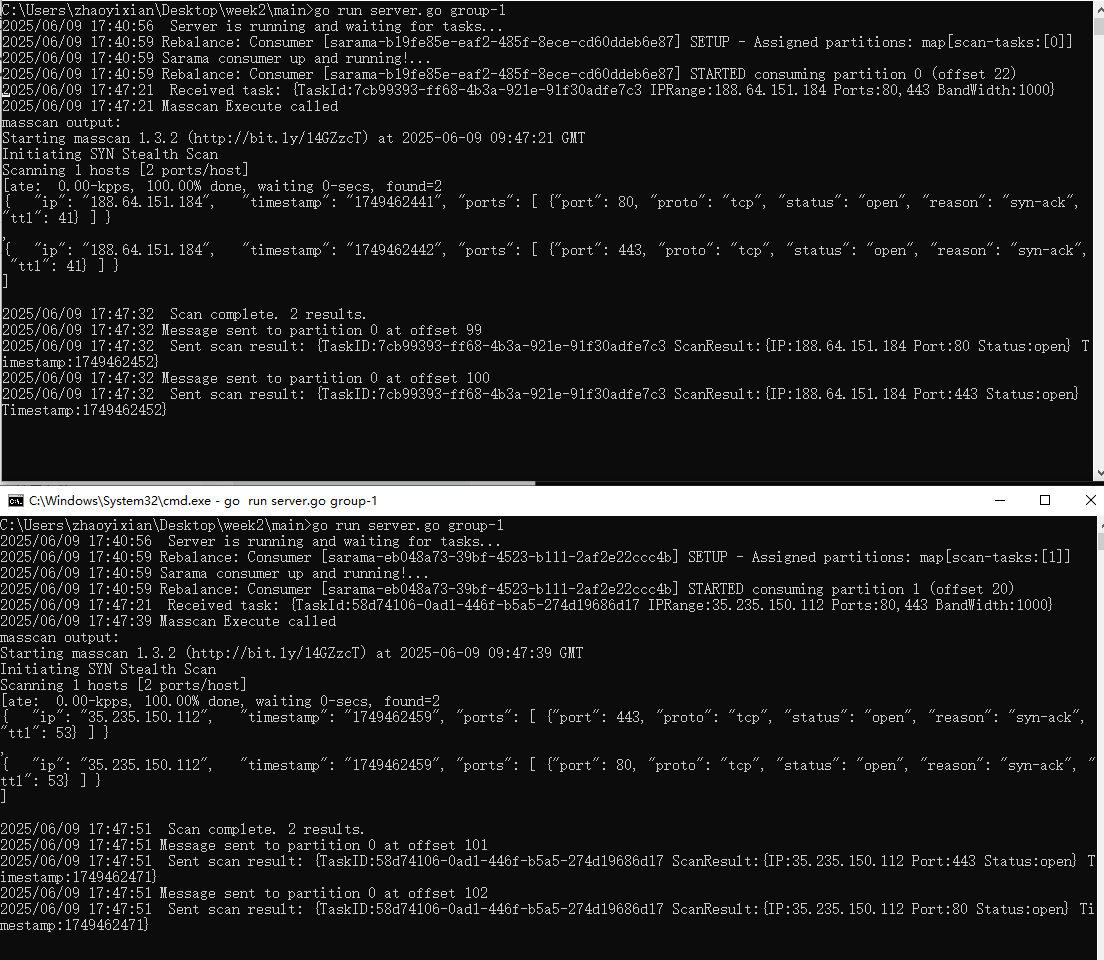

【kafka】Golang实现分布式Masscan任务调度系统

要求: 输出两个程序,一个命令行程序(命令行参数用flag)和一个服务端程序。 命令行程序支持通过命令行参数配置下发IP或IP段、端口、扫描带宽,然后将消息推送到kafka里面。 服务端程序: 从kafka消费者接收…...

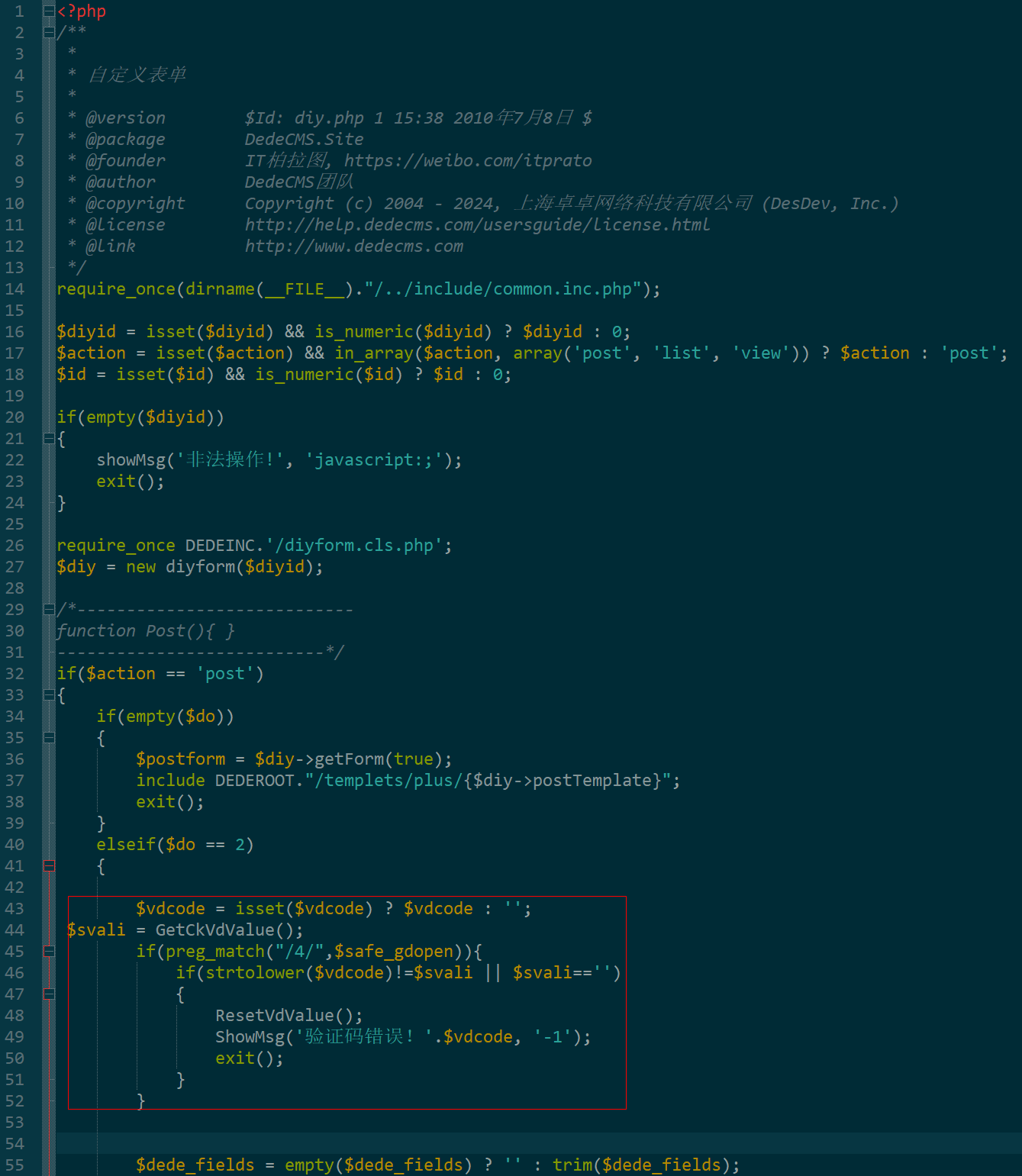

dedecms 织梦自定义表单留言增加ajax验证码功能

增加ajax功能模块,用户不点击提交按钮,只要输入框失去焦点,就会提前提示验证码是否正确。 一,模板上增加验证码 <input name"vdcode"id"vdcode" placeholder"请输入验证码" type"text&quo…...

OkHttp 中实现断点续传 demo

在 OkHttp 中实现断点续传主要通过以下步骤完成,核心是利用 HTTP 协议的 Range 请求头指定下载范围: 实现原理 Range 请求头:向服务器请求文件的特定字节范围(如 Range: bytes1024-) 本地文件记录:保存已…...

Mac软件卸载指南,简单易懂!

刚和Adobe分手,它却总在Library里给你写"回忆录"?卸载的Final Cut Pro像电子幽灵般阴魂不散?总是会有残留文件,别慌!这份Mac软件卸载指南,将用最硬核的方式教你"数字分手术"࿰…...

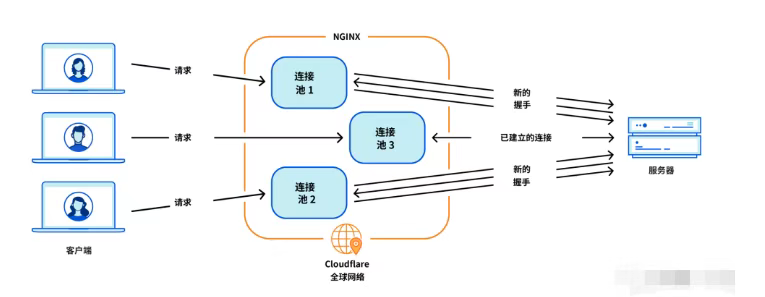

Cloudflare 从 Nginx 到 Pingora:性能、效率与安全的全面升级

在互联网的快速发展中,高性能、高效率和高安全性的网络服务成为了各大互联网基础设施提供商的核心追求。Cloudflare 作为全球领先的互联网安全和基础设施公司,近期做出了一个重大技术决策:弃用长期使用的 Nginx,转而采用其内部开发…...

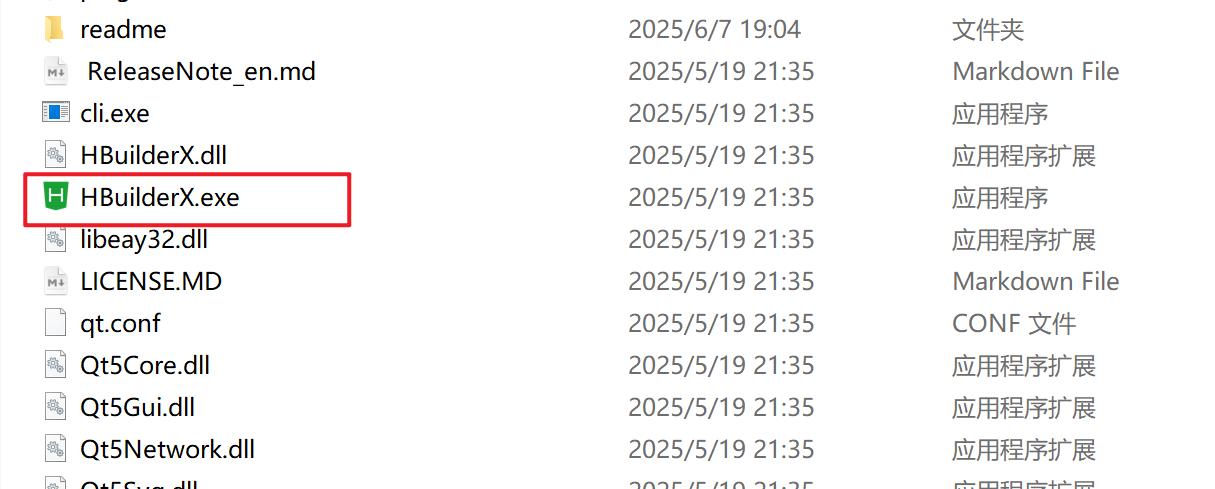

HBuilderX安装(uni-app和小程序开发)

下载HBuilderX 访问官方网站:https://www.dcloud.io/hbuilderx.html 根据您的操作系统选择合适版本: Windows版(推荐下载标准版) Windows系统安装步骤 运行安装程序: 双击下载的.exe安装文件 如果出现安全提示&…...

【开发技术】.Net使用FFmpeg视频特定帧上绘制内容

目录 一、目的 二、解决方案 2.1 什么是FFmpeg 2.2 FFmpeg主要功能 2.3 使用Xabe.FFmpeg调用FFmpeg功能 2.4 使用 FFmpeg 的 drawbox 滤镜来绘制 ROI 三、总结 一、目的 当前市场上有很多目标检测智能识别的相关算法,当前调用一个医疗行业的AI识别算法后返回…...

MFC 抛体运动模拟:常见问题解决与界面美化

在 MFC 中开发抛体运动模拟程序时,我们常遇到 轨迹残留、无效刷新、视觉单调、物理逻辑瑕疵 等问题。本文将针对这些痛点,详细解析原因并提供解决方案,同时兼顾界面美化,让模拟效果更专业、更高效。 问题一:历史轨迹与小球残影残留 现象 小球运动后,历史位置的 “残影”…...

[免费]微信小程序问卷调查系统(SpringBoot后端+Vue管理端)【论文+源码+SQL脚本】

大家好,我是java1234_小锋老师,看到一个不错的微信小程序问卷调查系统(SpringBoot后端Vue管理端)【论文源码SQL脚本】,分享下哈。 项目视频演示 【免费】微信小程序问卷调查系统(SpringBoot后端Vue管理端) Java毕业设计_哔哩哔哩_bilibili 项…...

在 Spring Boot 中使用 JSP

jsp? 好多年没用了。重新整一下 还费了点时间,记录一下。 项目结构: pom: <?xml version"1.0" encoding"UTF-8"?> <project xmlns"http://maven.apache.org/POM/4.0.0" xmlns:xsi"http://ww…...