Hive job on an ES-mapped table fails with no error log and only keeps reporting "Task Transitioned from NEW to SCHEDULED"

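I. Background

We need to run statistical analysis on the GPT-generated log table stored in ES (Elasticsearch). To do this, we create a Hive table mapped to the ES index and pull the ES data into Hive.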

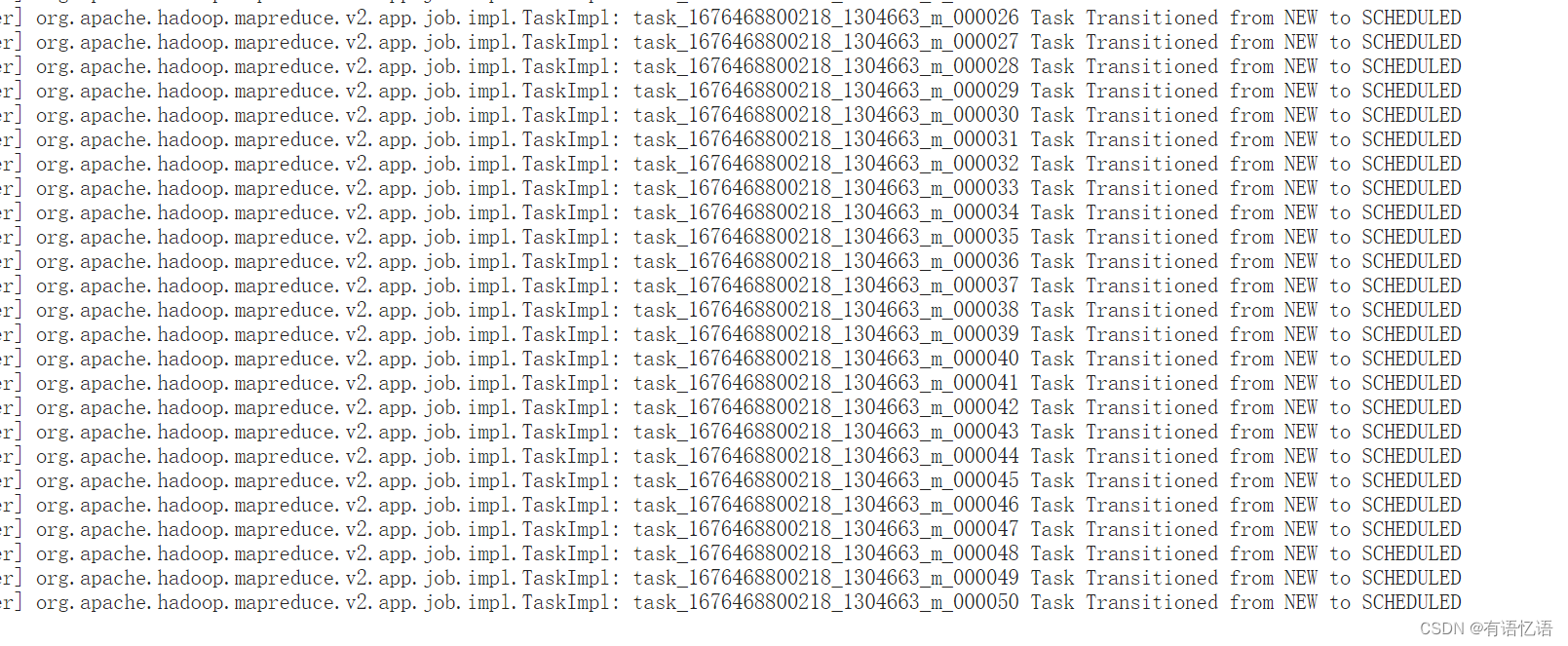

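Initially, a colleague wrote the job as a full pull. One day the job suddenly started failing, but there was no error log; it only kept reporting: Task Transitioned from NEW to SCHEDULED.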

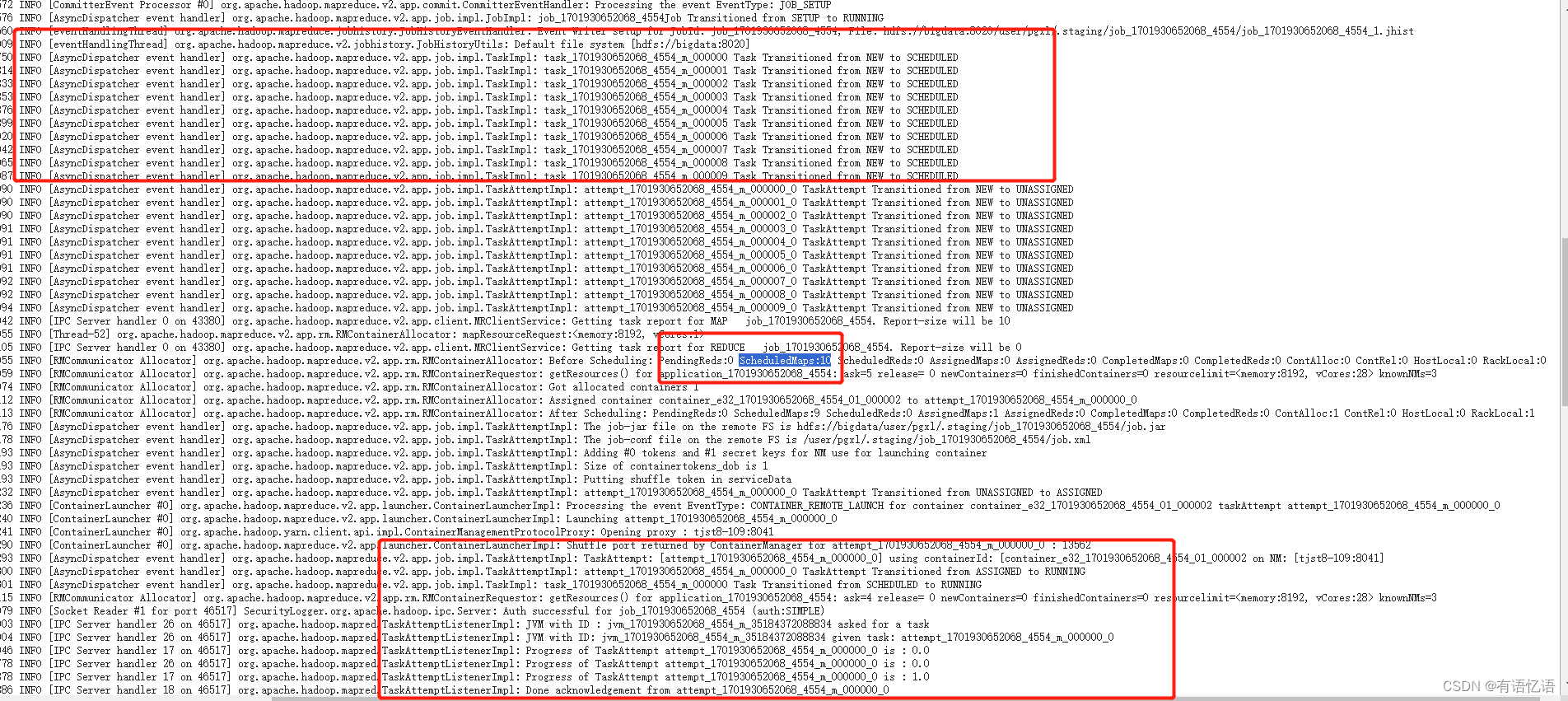

The symptoms were as follows:

Requests to the ApplicationMaster page were very slow and eventually failed with the following error:

HTTP ERROR 500

Problem accessing /proxy/application_1701930652068_0336/. Reason:Connection to http://tjst8-110:35686 refused

Caused by:

org.apache.http.conn.HttpHostConnectException: Connection to http://tjst8-110:35686 refused
    at org.apache.http.impl.conn.DefaultClientConnectionOperator.openConnection(DefaultClientConnectionOperator.java:190)
    at org.apache.http.impl.conn.ManagedClientConnectionImpl.open(ManagedClientConnectionImpl.java:294)
    at org.apache.http.impl.client.DefaultRequestDirector.tryExecute(DefaultRequestDirector.java:704)
    at org.apache.http.impl.client.DefaultRequestDirector.execute(DefaultRequestDirector.java:520)
    at org.apache.http.impl.client.AbstractHttpClient.execute(AbstractHttpClient.java:906)
    at org.apache.http.impl.client.AbstractHttpClient.execute(AbstractHttpClient.java:805)
    at org.apache.http.impl.client.AbstractHttpClient.execute(AbstractHttpClient.java:784)
    at org.apache.hadoop.yarn.server.webproxy.WebAppProxyServlet.proxyLink(WebAppProxyServlet.java:193)
    at org.apache.hadoop.yarn.server.webproxy.WebAppProxyServlet.doGet(WebAppProxyServlet.java:352)
    at javax.servlet.http.HttpServlet.service(HttpServlet.java:707)
    at javax.servlet.http.HttpServlet.service(HttpServlet.java:820)
    at org.mortbay.jetty.servlet.ServletHolder.handle(ServletHolder.java:511)
    at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1221)
    at com.google.inject.servlet.FilterChainInvocation.doFilter(FilterChainInvocation.java:66)
    at com.sun.jersey.spi.container.servlet.ServletContainer.doFilter(ServletContainer.java:900)
    at com.sun.jersey.spi.container.servlet.ServletContainer.doFilter(ServletContainer.java:834)
    at org.apache.hadoop.yarn.server.resourcemanager.webapp.RMWebAppFilter.doFilter(RMWebAppFilter.java:141)
    at com.sun.jersey.spi.container.servlet.ServletContainer.doFilter(ServletContainer.java:795)
    at com.google.inject.servlet.FilterDefinition.doFilter(FilterDefinition.java:163)
    at com.google.inject.servlet.FilterChainInvocation.doFilter(FilterChainInvocation.java:58)
    at com.google.inject.servlet.ManagedFilterPipeline.dispatch(ManagedFilterPipeline.java:118)
    at com.google.inject.servlet.GuiceFilter.doFilter(GuiceFilter.java:113)
    at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1212)
    at org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter.doFilter(StaticUserWebFilter.java:109)
    at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1212)
    at org.apache.hadoop.security.authentication.server.AuthenticationFilter.doFilter(AuthenticationFilter.java:622)
    at org.apache.hadoop.security.token.delegation.web.DelegationTokenAuthenticationFilter.doFilter(DelegationTokenAuthenticationFilter.java:291)
    at org.apache.hadoop.security.authentication.server.AuthenticationFilter.doFilter(AuthenticationFilter.java:574)
    at org.apache.hadoop.yarn.server.security.http.RMAuthenticationFilter.doFilter(RMAuthenticationFilter.java:84)
    at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1212)
    at org.apache.hadoop.http.HttpServer2$QuotingInputFilter.doFilter(HttpServer2.java:1296)
    at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1212)
    at org.apache.hadoop.http.NoCacheFilter.doFilter(NoCacheFilter.java:45)
    at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1212)
    at org.apache.hadoop.http.NoCacheFilter.doFilter(NoCacheFilter.java:45)
    at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1212)
    at org.mortbay.jetty.servlet.ServletHandler.handle(ServletHandler.java:399)
    at org.mortbay.jetty.security.SecurityHandler.handle(SecurityHandler.java:216)
    at org.mortbay.jetty.servlet.SessionHandler.handle(SessionHandler.java:182)
    at org.mortbay.jetty.handler.ContextHandler.handle(ContextHandler.java:767)
    at org.mortbay.jetty.webapp.WebAppContext.handle(WebAppContext.java:450)
    at org.mortbay.jetty.handler.ContextHandlerCollection.handle(ContextHandlerCollection.java:230)
    at org.mortbay.jetty.handler.HandlerWrapper.handle(HandlerWrapper.java:152)
    at org.mortbay.jetty.Server.handle(Server.java:326)
    at org.mortbay.jetty.HttpConnection.handleRequest(HttpConnection.java:542)
    at org.mortbay.jetty.HttpConnection$RequestHandler.headerComplete(HttpConnection.java:928)
    at org.mortbay.jetty.HttpParser.parseNext(HttpParser.java:549)
    at org.mortbay.jetty.HttpParser.parseAvailable(HttpParser.java:212)
    at org.mortbay.jetty.HttpConnection.handle(HttpConnection.java:404)
    at org.mortbay.io.nio.SelectChannelEndPoint.run(SelectChannelEndPoint.java:410)
    at org.mortbay.thread.QueuedThreadPool$PoolThread.run(QueuedThreadPool.java:582)
Caused by: java.net.ConnectException: Connection refused (Connection refused)
    at java.net.PlainSocketImpl.socketConnect(Native Method)
    at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)
    at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)
    at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)
    at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)
    at java.net.Socket.connect(Socket.java:589)
    at org.apache.http.conn.scheme.PlainSocketFactory.connectSocket(PlainSocketFactory.java:127)
    at org.apache.http.impl.conn.DefaultClientConnectionOperator.openConnection(DefaultClientConnectionOperator.java:180)
    ... 50 more

Caused by:

java.net.ConnectException: Connection refused (Connection refused)
    at java.net.PlainSocketImpl.socketConnect(Native Method)
    at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)
    at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)
    at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)
    at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)
    at java.net.Socket.connect(Socket.java:589)
    at org.apache.http.conn.scheme.PlainSocketFactory.connectSocket(PlainSocketFactory.java:127)
    at org.apache.http.impl.conn.DefaultClientConnectionOperator.openConnection(DefaultClientConnectionOperator.java:180)
    at org.apache.http.impl.conn.ManagedClientConnectionImpl.open(ManagedClientConnectionImpl.java:294)
    at org.apache.http.impl.client.DefaultRequestDirector.tryExecute(DefaultRequestDirector.java:704)
    at org.apache.http.impl.client.DefaultRequestDirector.execute(DefaultRequestDirector.java:520)
    at org.apache.http.impl.client.AbstractHttpClient.execute(AbstractHttpClient.java:906)
    at org.apache.http.impl.client.AbstractHttpClient.execute(AbstractHttpClient.java:805)
    at org.apache.http.impl.client.AbstractHttpClient.execute(AbstractHttpClient.java:784)
    at org.apache.hadoop.yarn.server.webproxy.WebAppProxyServlet.proxyLink(WebAppProxyServlet.java:193)
    at org.apache.hadoop.yarn.server.webproxy.WebAppProxyServlet.doGet(WebAppProxyServlet.java:352)
    at javax.servlet.http.HttpServlet.service(HttpServlet.java:707)
    at javax.servlet.http.HttpServlet.service(HttpServlet.java:820)
    at org.mortbay.jetty.servlet.ServletHolder.handle(ServletHolder.java:511)
    at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1221)
    at com.google.inject.servlet.FilterChainInvocation.doFilter(FilterChainInvocation.java:66)
    at com.sun.jersey.spi.container.servlet.ServletContainer.doFilter(ServletContainer.java:900)
    at com.sun.jersey.spi.container.servlet.ServletContainer.doFilter(ServletContainer.java:834)
    at org.apache.hadoop.yarn.server.resourcemanager.webapp.RMWebAppFilter.doFilter(RMWebAppFilter.java:141)
    at com.sun.jersey.spi.container.servlet.ServletContainer.doFilter(ServletContainer.java:795)
    at com.google.inject.servlet.FilterDefinition.doFilter(FilterDefinition.java:163)
    at com.google.inject.servlet.FilterChainInvocation.doFilter(FilterChainInvocation.java:58)
    at com.google.inject.servlet.ManagedFilterPipeline.dispatch(ManagedFilterPipeline.java:118)
    at com.google.inject.servlet.GuiceFilter.doFilter(GuiceFilter.java:113)
    at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1212)
    at org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter.doFilter(StaticUserWebFilter.java:109)
    at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1212)
    at org.apache.hadoop.security.authentication.server.AuthenticationFilter.doFilter(AuthenticationFilter.java:622)
    at org.apache.hadoop.security.token.delegation.web.DelegationTokenAuthenticationFilter.doFilter(DelegationTokenAuthenticationFilter.java:291)
    at org.apache.hadoop.security.authentication.server.AuthenticationFilter.doFilter(AuthenticationFilter.java:574)
    at org.apache.hadoop.yarn.server.security.http.RMAuthenticationFilter.doFilter(RMAuthenticationFilter.java:84)
    at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1212)
    at org.apache.hadoop.http.HttpServer2$QuotingInputFilter.doFilter(HttpServer2.java:1296)
    at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1212)
    at org.apache.hadoop.http.NoCacheFilter.doFilter(NoCacheFilter.java:45)
    at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1212)
    at org.apache.hadoop.http.NoCacheFilter.doFilter(NoCacheFilter.java:45)
    at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1212)
    at org.mortbay.jetty.servlet.ServletHandler.handle(ServletHandler.java:399)
    at org.mortbay.jetty.security.SecurityHandler.handle(SecurityHandler.java:216)
    at org.mortbay.jetty.servlet.SessionHandler.handle(SessionHandler.java:182)
    at org.mortbay.jetty.handler.ContextHandler.handle(ContextHandler.java:767)
    at org.mortbay.jetty.webapp.WebAppContext.handle(WebAppContext.java:450)
    at org.mortbay.jetty.handler.ContextHandlerCollection.handle(ContextHandlerCollection.java:230)
    at org.mortbay.jetty.handler.HandlerWrapper.handle(HandlerWrapper.java:152)
    at org.mortbay.jetty.Server.handle(Server.java:326)
    at org.mortbay.jetty.HttpConnection.handleRequest(HttpConnection.java:542)
    at org.mortbay.jetty.HttpConnection$RequestHandler.headerComplete(HttpConnection.java:928)
    at org.mortbay.jetty.HttpParser.parseNext(HttpParser.java:549)
    at org.mortbay.jetty.HttpParser.parseAvailable(HttpParser.java:212)
    at org.mortbay.jetty.HttpConnection.handle(HttpConnection.java:404)
    at org.mortbay.io.nio.SelectChannelEndPoint.run(SelectChannelEndPoint.java:410)
    at org.mortbay.thread.QueuedThreadPool$PoolThread.run(QueuedThreadPool.java:582)

Original code:

drop table wedw_ods.chdisease_gpt_opt_log_df;

CREATE external TABLE wedw_ods.chdisease_gpt_opt_log_df( id string comment "primary key"

,trace_id string comment "call-chain (trace) id"

,per_user_type int comment "operating user type"

,oper_user_id string comment "operating user id (not necessarily the company userId)"

,oper_user_name string comment "operating user name"

,oper_type string comment "operation type (create/delete/read/update)"

,oper_module string comment "operation module"

,data_owner string comment "id of the user who owns the data"

,oper_data_id string comment "unique identifier of the operated data"

,log_desc string comment "log description"

,gmt_created timestamp comment "creation time"

,oper_result string comment "operation result"

,is_deleted int comment "deleted flag"

) STORED BY 'org.elasticsearch.hadoop.hive.EsStorageHandler'

TBLPROPERTIES(

'es.resource'='chdisease_gpt_opt_log@1697709238/_doc',

'es.nodes'='10.60.8.103:9200,10.60.8.104:9200,10.60.8.105:9200',

'es.read.metadata' = 'true', -- whether to read ES metadata

'es.net.http.auth.user'='******',

'es.net.http.auth.pass'='******',

'es.index.read.missing.as.empty'='false',

'es.mapping.names' = 'id:id,trace_id:trace_id,per_user_type:per_user_type,oper_user_id:oper_user_id,oper_user_name:oper_user_name,oper_type:oper_type,oper_module:oper_module,data_owner:data_owner,oper_data_id:oper_data_id,log_desc:log_desc,gmt_created:gmt_created,oper_result:oper_result,is_deleted:is_deleted'

);

drop table if exists wedw_dw.chdisease_gpt_opt_log_df;

CREATE TABLE if not exists wedw_dw.chdisease_gpt_opt_log_df(id string comment "primary key"

,trace_id string comment "call-chain (trace) id"

,per_user_type int comment "operating user type"

,oper_user_id string comment "operating user id (not necessarily the company userId)"

,oper_user_name string comment "operating user name"

,oper_type string comment "operation type (create/delete/read/update)"

,oper_module string comment "operation module"

,data_owner string comment "id of the user who owns the data"

,oper_data_id string comment "unique identifier of the operated data"

,log_desc_es string comment "log description (raw value from ES)"

,log_desc string comment "log description"

,gmt_created timestamp comment "creation time"

,oper_result string comment "operation result"

,is_deleted int comment "deleted flag")

comment 'GPT message record business table'

row format delimited

fields terminated by '\t'

lines terminated by '\n'

stored as textfile

;

insert overwrite table wedw_dw.chdisease_gpt_opt_log_df

select id

,trace_id

,per_user_type

,oper_user_id

,oper_user_name

,oper_type

,oper_module

,data_owner

,oper_data_id

,log_desc as log_desc_es

,regexp_replace(regexp_replace(translate(translate(translate(translate(log_desc,'\n',''),'\r',' '),'\t',' '),'\\',''),'(\\"\\{)','\\{'),'(\\}\\")','\\}') as log_desc

,from_unixtime(unix_timestamp(gmt_created)-28800,'yyyy-MM-dd HH:mm:ss') as gmt_created

,regexp_replace(regexp_replace(translate(translate(translate(translate(oper_result,'\n',''),'\r',' '),'\t',' '),'\\',''),'(\\"\\{)','\\{'),'(\\}\\")','\\}') as oper_result

,is_deleted

from wedw_ods.chdisease_gpt_opt_log_df;

II. Troubleshooting

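1. I first suspected the network between Hive and ES. A test showed that the ES cluster was reachable, and the ES-mapped table could be queried directly (only with a plain select * from wedw_ods.chdisease_gpt_opt_log_df; anything that had to run as a MapReduce job did not work). So communication between ES and the Hive cluster was fine. Normally a network problem would also produce an obvious error log.

2. Next I suspected that data changing in ES was the cause. I rebuilt the ES-mapped table so that it only fetched today's data, which was still changing at the time, and the job succeeded. Data changes were therefore ruled out.

3. I then ran the job against the full data up to yesterday, and it went back to the original symptom: it kept reporting Task Transitioned from NEW to SCHEDULED, and the job eventually failed.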
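The restriction to today's data in step 2 is expressed through the `es.query` table property (shown in the modified DDL in section III). As a minimal sketch, the query string can be generated and checked programmatically so that the braces always balance; the helper name `build_today_query` is hypothetical and only used for illustration.

```python
import json


def build_today_query(field: str = "gmt_created") -> str:
    """Return an Elasticsearch range query limited to the current day.

    The function name and default field are illustrative assumptions;
    the field name matches the column used in this article.
    """
    query = {
        "query": {
            "range": {
                # "now/d" is ES date math: "now" rounded down to the start of the day.
                field: {"gte": "now/d", "lt": "now"}
            }
        }
    }
    # Compact separators keep the string short for use as a TBLPROPERTIES value.
    return json.dumps(query, separators=(",", ":"))


if __name__ == "__main__":
    # Paste the printed string as the value of 'es.query'.
    print(build_today_query())
```

Generating the string this way avoids hand-editing nested braces, which is easy to get wrong inside a quoted table property.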

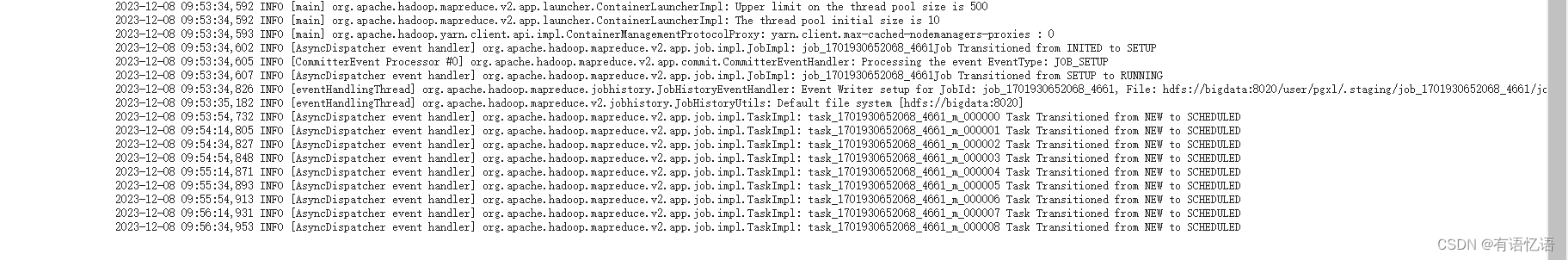

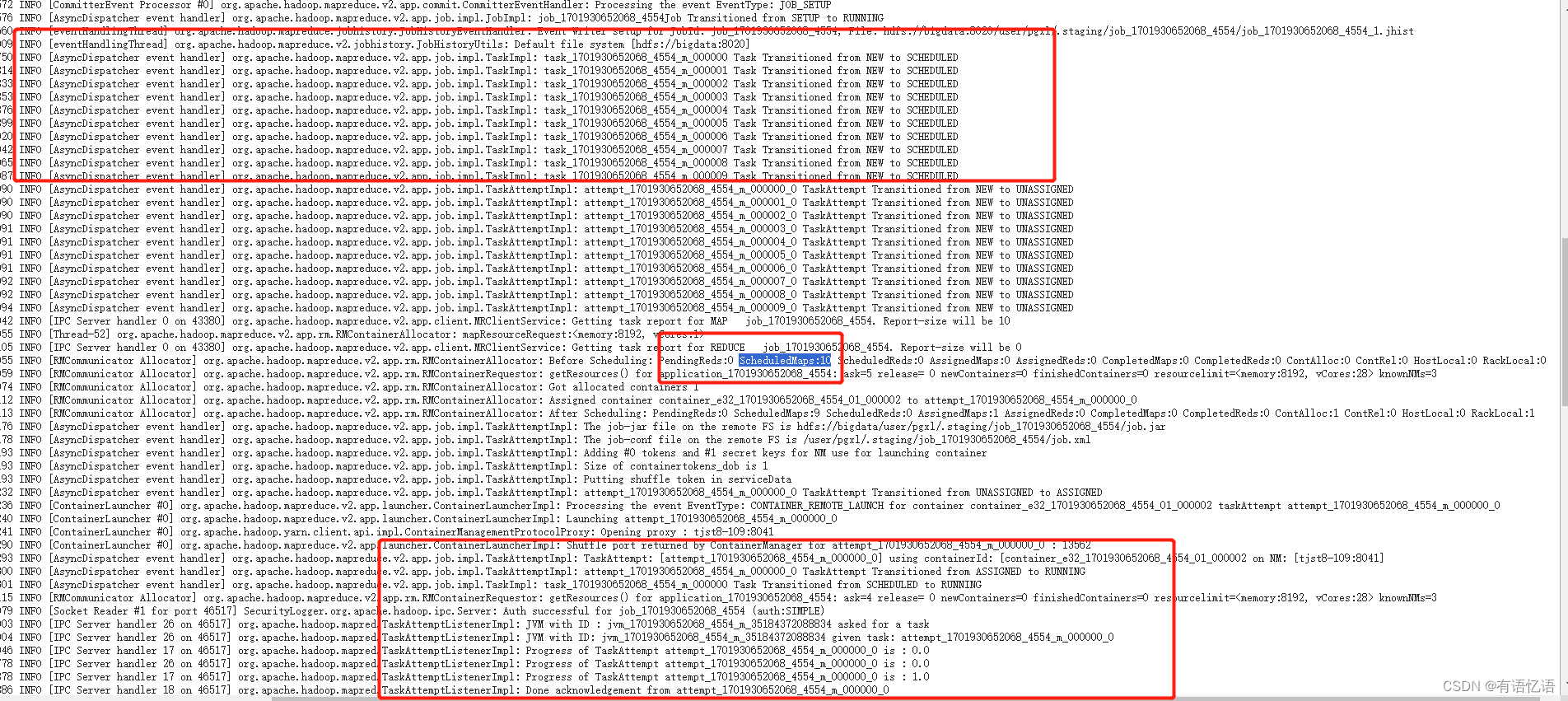

The conclusion: ES holds too much data and Hive has limited resources, so generating the map tasks timed out and the computation could not proceed.

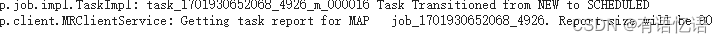

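According to the log, about 90 map tasks need to be generated, and generating one map task takes an estimated 20 s, about 90 × 20 s = 1800 s in total. The timeout can therefore be set to 30 minutes for now.

The MapReduce task timeout can be set as follows:

set mapreduce.task.timeout = 1800000; -- in milliseconds; the default is 600000 ms (10 minutes), here it is set to 30 minutes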
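The 30-minute value follows directly from the two estimates above. A minimal sketch of that arithmetic, assuming the map tasks are generated one after another and no safety margin is added (the function name `estimate_task_timeout_ms` is hypothetical):

```python
import math


def estimate_task_timeout_ms(map_tasks: int, seconds_per_task: float) -> int:
    """Estimate a timeout (in ms) that covers generating all map tasks sequentially."""
    total_seconds = map_tasks * seconds_per_task
    # mapreduce.task.timeout is expressed in milliseconds.
    return math.ceil(total_seconds * 1000)


if __name__ == "__main__":
    # 90 map tasks at roughly 20 s each, the figures quoted in this article.
    timeout_ms = estimate_task_timeout_ms(90, 20)
    print(f"set mapreduce.task.timeout={timeout_ms};")
```

If the number of map tasks grows with the ES data volume, the same calculation gives a quick way to re-derive the setting instead of guessing.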

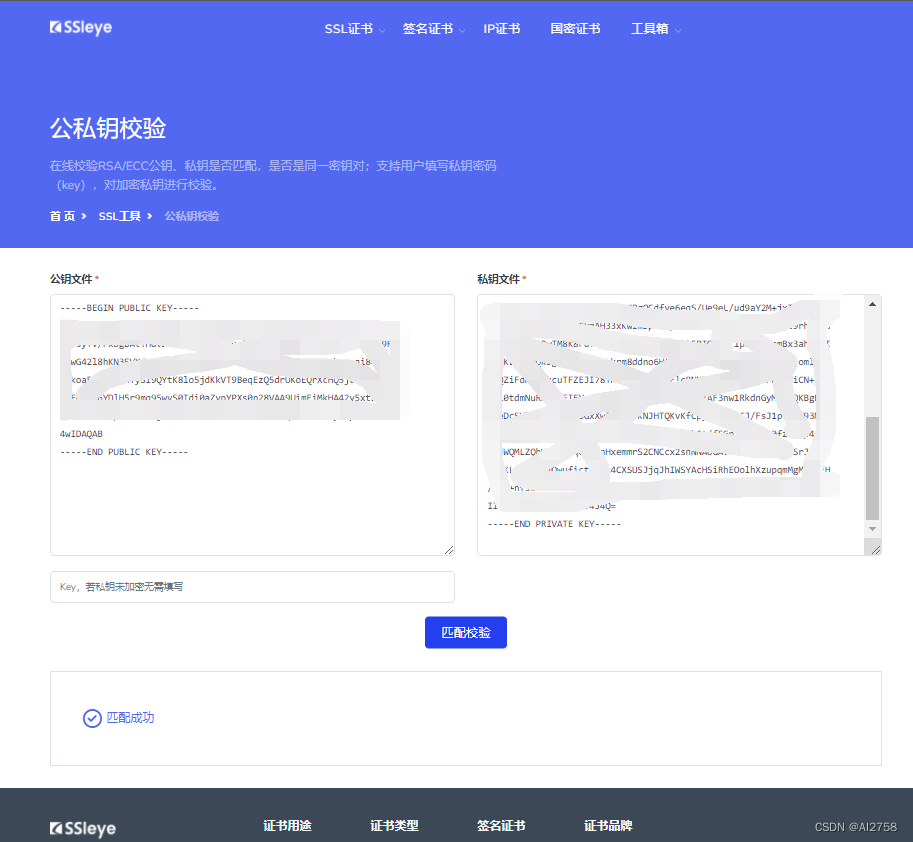

Log of the successful run:

III. Modified code and logs

drop table wedw_ods.chdisease_gpt_opt_log_df;

CREATE external TABLE wedw_ods.chdisease_gpt_opt_log_df( id string comment "primary key"

,trace_id string comment "call-chain (trace) id"

,per_user_type int comment "operating user type"

,oper_user_id string comment "operating user id (not necessarily the company userId)"

,oper_user_name string comment "operating user name"

,oper_type string comment "operation type (create/delete/read/update)"

,oper_module string comment "operation module"

,data_owner string comment "id of the user who owns the data"

,oper_data_id string comment "unique identifier of the operated data"

,log_desc string comment "log description"

,gmt_created timestamp comment "creation time"

,oper_result string comment "operation result"

,is_deleted int comment "deleted flag"

) STORED BY 'org.elasticsearch.hadoop.hive.EsStorageHandler'

TBLPROPERTIES(

'es.resource'='chdisease_gpt_opt_log@1697709238/_doc',

'es.nodes'='10.60.8.103:9200,10.60.8.104:9200,10.60.8.105:9200',

'es.read.metadata' = 'true', -- whether to read ES metadata

'es.net.http.auth.user'='******',

'es.net.http.auth.pass'='******',

'es.index.read.missing.as.empty'='false',

'es.query'='{"query": {"range": {"gmt_created": {"lt": "now","gte": "now/d"}}}}',

'es.mapping.names' = 'id:id,trace_id:trace_id,per_user_type:per_user_type,oper_user_id:oper_user_id,oper_user_name:oper_user_name,oper_type:oper_type,oper_module:oper_module,data_owner:data_owner,oper_data_id:oper_data_id,log_desc:log_desc,gmt_created:gmt_created,oper_result:oper_result,is_deleted:is_deleted'

);

drop table if exists wedw_dw.chdisease_gpt_opt_log_df;

CREATE TABLE if not exists wedw_dw.chdisease_gpt_opt_log_df(id string comment "primary key"

,trace_id string comment "call-chain (trace) id"

,per_user_type int comment "operating user type"

,oper_user_id string comment "operating user id (not necessarily the company userId)"

,oper_user_name string comment "operating user name"

,oper_type string comment "operation type (create/delete/read/update)"

,oper_module string comment "operation module"

,data_owner string comment "id of the user who owns the data"

,oper_data_id string comment "unique identifier of the operated data"

,log_desc_es string comment "log description (raw value from ES)"

,log_desc string comment "log description"

,gmt_created timestamp comment "creation time"

,oper_result string comment "operation result"

,is_deleted int comment "deleted flag")

comment 'GPT message record business table'

row format delimited

fields terminated by '\t'

lines terminated by '\n'

stored as textfile

;

insert overwrite table wedw_dw.chdisease_gpt_opt_log_df

select id

,trace_id

,per_user_type

,oper_user_id

,oper_user_name

,oper_type

,oper_module

,data_owner

,oper_data_id

,log_desc as log_desc_es

,regexp_replace(regexp_replace(translate(translate(translate(translate(log_desc,'\n',''),'\r',' '),'\t',' '),'\\',''),'(\\"\\{)','\\{'),'(\\}\\")','\\}') as log_desc

,from_unixtime(unix_timestamp(gmt_created)-28800,'yyyy-MM-dd HH:mm:ss') as gmt_created

,regexp_replace(regexp_replace(translate(translate(translate(translate(oper_result,'\n',''),'\r',' '),'\t',' '),'\\',''),'(\\"\\{)','\\{'),'(\\}\\")','\\}') as oper_result

,is_deleted

from wedw_ods.chdisease_gpt_opt_log_df;

Log Type: syslog

Log Upload Time: Thu Dec 07 16:51:57 +0800 2023

Log Length: 33040

2023-12-07 16:51:07,583 INFO [main] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: Created MRAppMaster for application appattempt_1701930652068_0327_000001

2023-12-07 16:51:07,789 INFO [main] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: Executing with tokens:

2023-12-07 16:51:07,789 INFO [main] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: Kind: YARN_AM_RM_TOKEN, Service: , Ident: (org.apache.hadoop.yarn.security.AMRMTokenIdentifier@2e1d27ba)

2023-12-07 16:51:08,293 WARN [main] org.apache.hadoop.util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2023-12-07 16:51:08,486 INFO [main] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: OutputCommitter set in config org.apache.hadoop.hive.ql.io.HiveFileFormatUtils$NullOutputCommitter

2023-12-07 16:51:08,488 INFO [main] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: OutputCommitter is org.apache.hadoop.hive.ql.io.HiveFileFormatUtils$NullOutputCommitter

2023-12-07 16:51:08,604 INFO [main] org.apache.hadoop.yarn.event.AsyncDispatcher: Registering class org.apache.hadoop.mapreduce.jobhistory.EventType for class org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler

2023-12-07 16:51:08,605 INFO [main] org.apache.hadoop.yarn.event.AsyncDispatcher: Registering class org.apache.hadoop.mapreduce.v2.app.job.event.JobEventType for class org.apache.hadoop.mapreduce.v2.app.MRAppMaster$JobEventDispatcher

2023-12-07 16:51:08,605 INFO [main] org.apache.hadoop.yarn.event.AsyncDispatcher: Registering class org.apache.hadoop.mapreduce.v2.app.job.event.TaskEventType for class org.apache.hadoop.mapreduce.v2.app.MRAppMaster$TaskEventDispatcher

2023-12-07 16:51:08,606 INFO [main] org.apache.hadoop.yarn.event.AsyncDispatcher: Registering class org.apache.hadoop.mapreduce.v2.app.job.event.TaskAttemptEventType for class org.apache.hadoop.mapreduce.v2.app.MRAppMaster$TaskAttemptEventDispatcher

2023-12-07 16:51:08,606 INFO [main] org.apache.hadoop.yarn.event.AsyncDispatcher: Registering class org.apache.hadoop.mapreduce.v2.app.commit.CommitterEventType for class org.apache.hadoop.mapreduce.v2.app.commit.CommitterEventHandler

2023-12-07 16:51:08,611 INFO [main] org.apache.hadoop.yarn.event.AsyncDispatcher: Registering class org.apache.hadoop.mapreduce.v2.app.speculate.Speculator$EventType for class org.apache.hadoop.mapreduce.v2.app.MRAppMaster$SpeculatorEventDispatcher

2023-12-07 16:51:08,611 INFO [main] org.apache.hadoop.yarn.event.AsyncDispatcher: Registering class org.apache.hadoop.mapreduce.v2.app.rm.ContainerAllocator$EventType for class org.apache.hadoop.mapreduce.v2.app.MRAppMaster$ContainerAllocatorRouter

2023-12-07 16:51:08,612 INFO [main] org.apache.hadoop.yarn.event.AsyncDispatcher: Registering class org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncher$EventType for class org.apache.hadoop.mapreduce.v2.app.MRAppMaster$ContainerLauncherRouter

2023-12-07 16:51:08,654 INFO [main] org.apache.hadoop.mapreduce.v2.jobhistory.JobHistoryUtils: Default file system [hdfs://bigdata:8020]

2023-12-07 16:51:08,678 INFO [main] org.apache.hadoop.mapreduce.v2.jobhistory.JobHistoryUtils: Default file system [hdfs://bigdata:8020]

2023-12-07 16:51:08,699 INFO [main] org.apache.hadoop.mapreduce.v2.jobhistory.JobHistoryUtils: Default file system [hdfs://bigdata:8020]

2023-12-07 16:51:08,712 INFO [main] org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler: Emitting job history data to the timeline server is not enabled

2023-12-07 16:51:08,754 INFO [main] org.apache.hadoop.yarn.event.AsyncDispatcher: Registering class org.apache.hadoop.mapreduce.v2.app.job.event.JobFinishEvent$Type for class org.apache.hadoop.mapreduce.v2.app.MRAppMaster$JobFinishEventHandler

2023-12-07 16:51:08,953 INFO [main] org.apache.hadoop.metrics2.impl.MetricsConfig: loaded properties from hadoop-metrics2.properties

2023-12-07 16:51:09,047 INFO [main] org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Scheduled snapshot period at 10 second(s).

2023-12-07 16:51:09,048 INFO [main] org.apache.hadoop.metrics2.impl.MetricsSystemImpl: MRAppMaster metrics system started

2023-12-07 16:51:09,063 INFO [main] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: Adding job token for job_1701930652068_0327 to jobTokenSecretManager

2023-12-07 16:51:09,181 INFO [main] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: Not uberizing job_1701930652068_0327 because: not enabled; too much input; too much RAM;

2023-12-07 16:51:09,202 INFO [main] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: Input size for job job_1701930652068_0327 = 614427268. Number of splits = 2

2023-12-07 16:51:09,202 INFO [main] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: Number of reduces for job job_1701930652068_0327 = 0

2023-12-07 16:51:09,202 INFO [main] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: job_1701930652068_0327Job Transitioned from NEW to INITED

2023-12-07 16:51:09,203 INFO [main] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: MRAppMaster launching normal, non-uberized, multi-container job job_1701930652068_0327.

2023-12-07 16:51:09,227 INFO [main] org.apache.hadoop.ipc.CallQueueManager: Using callQueue class java.util.concurrent.LinkedBlockingQueue

2023-12-07 16:51:09,236 INFO [Socket Reader #1 for port 42597] org.apache.hadoop.ipc.Server: Starting Socket Reader #1 for port 42597

2023-12-07 16:51:09,361 INFO [main] org.apache.hadoop.yarn.factories.impl.pb.RpcServerFactoryPBImpl: Adding protocol org.apache.hadoop.mapreduce.v2.api.MRClientProtocolPB to the server

2023-12-07 16:51:09,362 INFO [IPC Server listener on 42597] org.apache.hadoop.ipc.Server: IPC Server listener on 42597: starting

2023-12-07 16:51:09,362 INFO [IPC Server Responder] org.apache.hadoop.ipc.Server: IPC Server Responder: starting

2023-12-07 16:51:09,363 INFO [main] org.apache.hadoop.mapreduce.v2.app.client.MRClientService: Instantiated MRClientService at tjst8-110/10.60.8.110:42597

2023-12-07 16:51:09,420 INFO [main] org.mortbay.log: Logging to org.slf4j.impl.Log4jLoggerAdapter(org.mortbay.log) via org.mortbay.log.Slf4jLog

2023-12-07 16:51:09,425 INFO [main] org.apache.hadoop.security.authentication.server.AuthenticationFilter: Unable to initialize FileSignerSecretProvider, falling back to use random secrets.

2023-12-07 16:51:09,429 INFO [main] org.apache.hadoop.http.HttpRequestLog: Http request log for http.requests.mapreduce is not defined

2023-12-07 16:51:09,438 INFO [main] org.apache.hadoop.http.HttpServer2: Added global filter 'safety' (class=org.apache.hadoop.http.HttpServer2$QuotingInputFilter)

2023-12-07 16:51:09,469 INFO [main] org.apache.hadoop.http.HttpServer2: Added filter AM_PROXY_FILTER (class=org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter) to context mapreduce

2023-12-07 16:51:09,469 INFO [main] org.apache.hadoop.http.HttpServer2: Added filter AM_PROXY_FILTER (class=org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter) to context static

2023-12-07 16:51:09,472 INFO [main] org.apache.hadoop.http.HttpServer2: adding path spec: /mapreduce/*

2023-12-07 16:51:09,472 INFO [main] org.apache.hadoop.http.HttpServer2: adding path spec: /ws/*

2023-12-07 16:51:09,480 INFO [main] org.apache.hadoop.http.HttpServer2: Jetty bound to port 40643

2023-12-07 16:51:09,480 INFO [main] org.mortbay.log: jetty-6.1.26.cloudera.4

2023-12-07 16:51:09,530 INFO [main] org.mortbay.log: Extract jar:file:/opt/cloudera/parcels/CDH-5.8.2-1.cdh5.8.2.p0.3/jars/hadoop-yarn-common-2.6.0-cdh5.8.2.jar!/webapps/mapreduce to /tmp/Jetty_0_0_0_0_40643_mapreduce____eqlnk/webapp

2023-12-07 16:51:09,887 INFO [main] org.mortbay.log: Started HttpServer2$SelectChannelConnectorWithSafeStartup@0.0.0.0:40643

2023-12-07 16:51:09,887 INFO [main] org.apache.hadoop.yarn.webapp.WebApps: Web app /mapreduce started at 40643

2023-12-07 16:51:10,169 INFO [main] org.apache.hadoop.yarn.webapp.WebApps: Registered webapp guice modules

2023-12-07 16:51:10,174 INFO [main] org.apache.hadoop.ipc.CallQueueManager: Using callQueue class java.util.concurrent.LinkedBlockingQueue

2023-12-07 16:51:10,174 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.speculate.DefaultSpeculator: JOB_CREATE job_1701930652068_0327

2023-12-07 16:51:10,174 INFO [Socket Reader #1 for port 35256] org.apache.hadoop.ipc.Server: Starting Socket Reader #1 for port 35256

2023-12-07 16:51:10,178 INFO [IPC Server Responder] org.apache.hadoop.ipc.Server: IPC Server Responder: starting

2023-12-07 16:51:10,178 INFO [IPC Server listener on 35256] org.apache.hadoop.ipc.Server: IPC Server listener on 35256: starting

2023-12-07 16:51:10,210 INFO [main] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerRequestor: nodeBlacklistingEnabled:true

2023-12-07 16:51:10,210 INFO [main] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerRequestor: maxTaskFailuresPerNode is 3

2023-12-07 16:51:10,210 INFO [main] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerRequestor: blacklistDisablePercent is 33

2023-12-07 16:51:10,290 INFO [main] org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider: Failing over to rm65

2023-12-07 16:51:10,339 INFO [main] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: maxContainerCapability: <memory:14000, vCores:6>

2023-12-07 16:51:10,339 INFO [main] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: queue: root.wedw

2023-12-07 16:51:10,343 INFO [main] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Upper limit on the thread pool size is 500

2023-12-07 16:51:10,343 INFO [main] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: The thread pool initial size is 10

2023-12-07 16:51:10,345 INFO [main] org.apache.hadoop.yarn.client.api.impl.ContainerManagementProtocolProxy: yarn.client.max-cached-nodemanagers-proxies : 0

2023-12-07 16:51:10,356 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: job_1701930652068_0327Job Transitioned from INITED to SETUP

2023-12-07 16:51:10,359 INFO [CommitterEvent Processor #0] org.apache.hadoop.mapreduce.v2.app.commit.CommitterEventHandler: Processing the event EventType: JOB_SETUP

2023-12-07 16:51:10,362 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: job_1701930652068_0327Job Transitioned from SETUP to RUNNING

2023-12-07 16:51:10,426 INFO [eventHandlingThread] org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler: Event Writer setup for JobId: job_1701930652068_0327, File: hdfs://bigdata:8020/user/pgxl/.staging/job_1701930652068_0327/job_1701930652068_0327_1.jhist

2023-12-07 16:51:10,449 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: task_1701930652068_0327_m_000000 Task Transitioned from NEW to SCHEDULED

2023-12-07 16:51:10,495 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: task_1701930652068_0327_m_000001 Task Transitioned from NEW to SCHEDULED

2023-12-07 16:51:10,498 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1701930652068_0327_m_000000_0 TaskAttempt Transitioned from NEW to UNASSIGNED

2023-12-07 16:51:10,498 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1701930652068_0327_m_000001_0 TaskAttempt Transitioned from NEW to UNASSIGNED

2023-12-07 16:51:10,500 INFO [Thread-52] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: mapResourceRequest:<memory:8192, vCores:1>

2023-12-07 16:51:10,790 INFO [eventHandlingThread] org.apache.hadoop.mapreduce.v2.jobhistory.JobHistoryUtils: Default file system [hdfs://bigdata:8020]

2023-12-07 16:51:11,124 INFO [IPC Server handler 0 on 42597] org.apache.hadoop.mapreduce.v2.app.client.MRClientService: Getting task report for MAP job_1701930652068_0327. Report-size will be 2

2023-12-07 16:51:11,182 INFO [IPC Server handler 0 on 42597] org.apache.hadoop.mapreduce.v2.app.client.MRClientService: Getting task report for REDUCE job_1701930652068_0327. Report-size will be 0

2023-12-07 16:51:11,343 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Before Scheduling: PendingReds:0 ScheduledMaps:2 ScheduledReds:0 AssignedMaps:0 AssignedReds:0 CompletedMaps:0 CompletedReds:0 ContAlloc:0 ContRel:0 HostLocal:0 RackLocal:0

2023-12-07 16:51:11,386 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerRequestor: getResources() for application_1701930652068_0327: ask=4 release= 0 newContainers=0 finishedContainers=0 resourcelimit=<memory:270336, vCores:92> knownNMs=3

2023-12-07 16:51:12,404 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Got allocated containers 2

2023-12-07 16:51:12,407 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Assigned container container_e32_1701930652068_0327_01_000002 to attempt_1701930652068_0327_m_000001_0

2023-12-07 16:51:12,410 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Assigned container container_e32_1701930652068_0327_01_000003 to attempt_1701930652068_0327_m_000000_0

2023-12-07 16:51:12,410 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: After Scheduling: PendingReds:0 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:2 AssignedReds:0 CompletedMaps:0 CompletedReds:0 ContAlloc:2 ContRel:0 HostLocal:2 RackLocal:0

2023-12-07 16:51:12,457 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: The job-jar file on the remote FS is hdfs://bigdata/user/pgxl/.staging/job_1701930652068_0327/job.jar

2023-12-07 16:51:12,459 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: The job-conf file on the remote FS is /user/pgxl/.staging/job_1701930652068_0327/job.xml

2023-12-07 16:51:12,466 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Adding #0 tokens and #1 secret keys for NM use for launching container

2023-12-07 16:51:12,466 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Size of containertokens_dob is 1

2023-12-07 16:51:12,466 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Putting shuffle token in serviceData

2023-12-07 16:51:12,498 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1701930652068_0327_m_000001_0 TaskAttempt Transitioned from UNASSIGNED to ASSIGNED

2023-12-07 16:51:12,500 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1701930652068_0327_m_000000_0 TaskAttempt Transitioned from UNASSIGNED to ASSIGNED

2023-12-07 16:51:12,503 INFO [ContainerLauncher #0] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Processing the event EventType: CONTAINER_REMOTE_LAUNCH for container container_e32_1701930652068_0327_01_000002 taskAttempt attempt_1701930652068_0327_m_000001_0

2023-12-07 16:51:12,503 INFO [ContainerLauncher #1] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Processing the event EventType: CONTAINER_REMOTE_LAUNCH for container container_e32_1701930652068_0327_01_000003 taskAttempt attempt_1701930652068_0327_m_000000_0

2023-12-07 16:51:12,508 INFO [ContainerLauncher #0] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Launching attempt_1701930652068_0327_m_000001_0

2023-12-07 16:51:12,508 INFO [ContainerLauncher #1] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Launching attempt_1701930652068_0327_m_000000_0

2023-12-07 16:51:12,510 INFO [ContainerLauncher #0] org.apache.hadoop.yarn.client.api.impl.ContainerManagementProtocolProxy: Opening proxy : tjst8-109:8041

2023-12-07 16:51:12,538 INFO [ContainerLauncher #1] org.apache.hadoop.yarn.client.api.impl.ContainerManagementProtocolProxy: Opening proxy : tjst8-110:8041

2023-12-07 16:51:12,581 INFO [ContainerLauncher #0] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Shuffle port returned by ContainerManager for attempt_1701930652068_0327_m_000001_0 : 13562

2023-12-07 16:51:12,581 INFO [ContainerLauncher #1] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Shuffle port returned by ContainerManager for attempt_1701930652068_0327_m_000000_0 : 13562

2023-12-07 16:51:12,584 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: TaskAttempt: [attempt_1701930652068_0327_m_000001_0] using containerId: [container_e32_1701930652068_0327_01_000002 on NM: [tjst8-109:8041]

2023-12-07 16:51:12,590 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1701930652068_0327_m_000001_0 TaskAttempt Transitioned from ASSIGNED to RUNNING

2023-12-07 16:51:12,591 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: TaskAttempt: [attempt_1701930652068_0327_m_000000_0] using containerId: [container_e32_1701930652068_0327_01_000003 on NM: [tjst8-110:8041]

2023-12-07 16:51:12,591 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1701930652068_0327_m_000000_0 TaskAttempt Transitioned from ASSIGNED to RUNNING

2023-12-07 16:51:12,592 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: task_1701930652068_0327_m_000001 Task Transitioned from SCHEDULED to RUNNING

2023-12-07 16:51:12,592 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: task_1701930652068_0327_m_000000 Task Transitioned from SCHEDULED to RUNNING

2023-12-07 16:51:13,413 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerRequestor: getResources() for application_1701930652068_0327: ask=4 release= 0 newContainers=0 finishedContainers=0 resourcelimit=<memory:253952, vCores:88> knownNMs=3

2023-12-07 16:51:14,102 INFO [Socket Reader #1 for port 35256] SecurityLogger.org.apache.hadoop.ipc.Server: Auth successful for job_1701930652068_0327 (auth:SIMPLE)

2023-12-07 16:51:14,128 INFO [IPC Server handler 0 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: JVM with ID : jvm_1701930652068_0327_m_35184372088835 asked for a task

2023-12-07 16:51:14,129 INFO [IPC Server handler 0 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: JVM with ID: jvm_1701930652068_0327_m_35184372088835 given task: attempt_1701930652068_0327_m_000000_0

2023-12-07 16:51:14,672 INFO [Socket Reader #1 for port 35256] SecurityLogger.org.apache.hadoop.ipc.Server: Auth successful for job_1701930652068_0327 (auth:SIMPLE)

2023-12-07 16:51:14,683 INFO [IPC Server handler 0 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: JVM with ID : jvm_1701930652068_0327_m_35184372088834 asked for a task

2023-12-07 16:51:14,683 INFO [IPC Server handler 0 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: JVM with ID: jvm_1701930652068_0327_m_35184372088834 given task: attempt_1701930652068_0327_m_000001_0

2023-12-07 16:51:20,997 INFO [IPC Server handler 6 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000000_0 is : 0.0

2023-12-07 16:51:21,530 INFO [IPC Server handler 3 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000001_0 is : 0.0

2023-12-07 16:51:24,043 INFO [IPC Server handler 7 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000000_0 is : 0.2022089

2023-12-07 16:51:24,572 INFO [IPC Server handler 6 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000001_0 is : 0.2001992

2023-12-07 16:51:27,082 INFO [IPC Server handler 5 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000000_0 is : 0.2022089

2023-12-07 16:51:27,635 INFO [IPC Server handler 7 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000001_0 is : 0.2001992

2023-12-07 16:51:30,127 INFO [IPC Server handler 1 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000000_0 is : 0.40259877

2023-12-07 16:51:30,695 INFO [IPC Server handler 5 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000001_0 is : 0.39992914

2023-12-07 16:51:33,176 INFO [IPC Server handler 9 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000000_0 is : 0.40259877

2023-12-07 16:51:33,732 INFO [IPC Server handler 1 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000001_0 is : 0.39992914

2023-12-07 16:51:36,221 INFO [IPC Server handler 0 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000000_0 is : 0.60032296

2023-12-07 16:51:36,776 INFO [IPC Server handler 9 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000001_0 is : 0.59991705

2023-12-07 16:51:39,265 INFO [IPC Server handler 3 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000000_0 is : 0.60032296

2023-12-07 16:51:39,820 INFO [IPC Server handler 2 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000001_0 is : 0.7993565

2023-12-07 16:51:42,311 INFO [IPC Server handler 6 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000000_0 is : 0.60032296

2023-12-07 16:51:42,861 INFO [IPC Server handler 11 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000001_0 is : 0.7993565

2023-12-07 16:51:44,870 INFO [IPC Server handler 10 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000001_0 is : 0.7993565

2023-12-07 16:51:44,922 INFO [IPC Server handler 15 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000001_0 is : 1.0

2023-12-07 16:51:44,925 INFO [IPC Server handler 17 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Done acknowledgement from attempt_1701930652068_0327_m_000001_0

2023-12-07 16:51:44,930 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1701930652068_0327_m_000001_0 TaskAttempt Transitioned from RUNNING to SUCCESS_FINISHING_CONTAINER

2023-12-07 16:51:44,947 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: Task succeeded with attempt attempt_1701930652068_0327_m_000001_0

2023-12-07 16:51:44,949 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: task_1701930652068_0327_m_000001 Task Transitioned from RUNNING to SUCCEEDED

2023-12-07 16:51:44,952 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: Num completed Tasks: 1

2023-12-07 16:51:45,351 INFO [IPC Server handler 7 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000000_0 is : 0.801217

2023-12-07 16:51:45,481 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Before Scheduling: PendingReds:0 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:2 AssignedReds:0 CompletedMaps:1 CompletedReds:0 ContAlloc:2 ContRel:0 HostLocal:2 RackLocal:0

2023-12-07 16:51:46,495 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Received completed container container_e32_1701930652068_0327_01_000002

2023-12-07 16:51:46,496 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1701930652068_0327_m_000001_0 TaskAttempt Transitioned from SUCCESS_FINISHING_CONTAINER to SUCCEEDED

2023-12-07 16:51:46,497 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: After Scheduling: PendingReds:0 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:1 AssignedReds:0 CompletedMaps:1 CompletedReds:0 ContAlloc:2 ContRel:0 HostLocal:2 RackLocal:0

2023-12-07 16:51:46,497 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Diagnostics report from attempt_1701930652068_0327_m_000001_0:

2023-12-07 16:51:46,498 INFO [ContainerLauncher #2] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Processing the event EventType: CONTAINER_COMPLETED for container container_e32_1701930652068_0327_01_000002 taskAttempt attempt_1701930652068_0327_m_000001_0

2023-12-07 16:51:48,391 INFO [IPC Server handler 5 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000000_0 is : 0.801217

2023-12-07 16:51:50,143 INFO [IPC Server handler 8 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000000_0 is : 0.801217

2023-12-07 16:51:50,195 INFO [IPC Server handler 0 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1701930652068_0327_m_000000_0 is : 1.0

2023-12-07 16:51:50,198 INFO [IPC Server handler 3 on 35256] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Done acknowledgement from attempt_1701930652068_0327_m_000000_0

2023-12-07 16:51:50,200 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1701930652068_0327_m_000000_0 TaskAttempt Transitioned from RUNNING to SUCCESS_FINISHING_CONTAINER

2023-12-07 16:51:50,200 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: Task succeeded with attempt attempt_1701930652068_0327_m_000000_0

2023-12-07 16:51:50,200 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: task_1701930652068_0327_m_000000 Task Transitioned from RUNNING to SUCCEEDED

2023-12-07 16:51:50,202 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: Num completed Tasks: 2

2023-12-07 16:51:50,203 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: job_1701930652068_0327Job Transitioned from RUNNING to COMMITTING

2023-12-07 16:51:50,205 INFO [CommitterEvent Processor #1] org.apache.hadoop.mapreduce.v2.app.commit.CommitterEventHandler: Processing the event EventType: JOB_COMMIT

2023-12-07 16:51:50,223 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: Calling handler for JobFinishedEvent

2023-12-07 16:51:50,224 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: job_1701930652068_0327Job Transitioned from COMMITTING to SUCCEEDED

2023-12-07 16:51:50,226 INFO [Thread-73] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: We are finishing cleanly so this is the last retry

2023-12-07 16:51:50,226 INFO [Thread-73] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: Notify RMCommunicator isAMLastRetry: true

2023-12-07 16:51:50,226 INFO [Thread-73] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: RMCommunicator notified that shouldUnregistered is: true

2023-12-07 16:51:50,226 INFO [Thread-73] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: Notify JHEH isAMLastRetry: true

2023-12-07 16:51:50,226 INFO [Thread-73] org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler: JobHistoryEventHandler notified that forceJobCompletion is true

2023-12-07 16:51:50,226 INFO [Thread-73] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: Calling stop for all the services

2023-12-07 16:51:50,227 INFO [Thread-73] org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler: Stopping JobHistoryEventHandler. Size of the outstanding queue size is 0

2023-12-07 16:51:50,276 INFO [eventHandlingThread] org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler: Copying hdfs://bigdata:8020/user/pgxl/.staging/job_1701930652068_0327/job_1701930652068_0327_1.jhist to hdfs://bigdata:8020/user/history/done_intermediate/pgxl/job_1701930652068_0327-1701939065771-pgxl-Airflow+HiveOperator+task+for+airflow%2D10%2D68.Test_t-1701939110221-2-0-SUCCEEDED-root.wedw-1701939070347.jhist_tmp

2023-12-07 16:51:50,305 INFO [eventHandlingThread] org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler: Copied to done location: hdfs://bigdata:8020/user/history/done_intermediate/pgxl/job_1701930652068_0327-1701939065771-pgxl-Airflow+HiveOperator+task+for+airflow%2D10%2D68.Test_t-1701939110221-2-0-SUCCEEDED-root.wedw-1701939070347.jhist_tmp

2023-12-07 16:51:50,308 INFO [eventHandlingThread] org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler: Copying hdfs://bigdata:8020/user/pgxl/.staging/job_1701930652068_0327/job_1701930652068_0327_1_conf.xml to hdfs://bigdata:8020/user/history/done_intermediate/pgxl/job_1701930652068_0327_conf.xml_tmp

2023-12-07 16:51:50,335 INFO [eventHandlingThread] org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler: Copied to done location: hdfs://bigdata:8020/user/history/done_intermediate/pgxl/job_1701930652068_0327_conf.xml_tmp

2023-12-07 16:51:50,347 INFO [eventHandlingThread] org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler: Moved tmp to done: hdfs://bigdata:8020/user/history/done_intermediate/pgxl/job_1701930652068_0327.summary_tmp to hdfs://bigdata:8020/user/history/done_intermediate/pgxl/job_1701930652068_0327.summary

2023-12-07 16:51:50,350 INFO [eventHandlingThread] org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler: Moved tmp to done: hdfs://bigdata:8020/user/history/done_intermediate/pgxl/job_1701930652068_0327_conf.xml_tmp to hdfs://bigdata:8020/user/history/done_intermediate/pgxl/job_1701930652068_0327_conf.xml

2023-12-07 16:51:50,352 INFO [eventHandlingThread] org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler: Moved tmp to done: hdfs://bigdata:8020/user/history/done_intermediate/pgxl/job_1701930652068_0327-1701939065771-pgxl-Airflow+HiveOperator+task+for+airflow%2D10%2D68.Test_t-1701939110221-2-0-SUCCEEDED-root.wedw-1701939070347.jhist_tmp to hdfs://bigdata:8020/user/history/done_intermediate/pgxl/job_1701930652068_0327-1701939065771-pgxl-Airflow+HiveOperator+task+for+airflow%2D10%2D68.Test_t-1701939110221-2-0-SUCCEEDED-root.wedw-1701939070347.jhist

2023-12-07 16:51:50,353 INFO [Thread-73] org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler: Stopped JobHistoryEventHandler. super.stop()

2023-12-07 16:51:50,354 INFO [Thread-73] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: KILLING attempt_1701930652068_0327_m_000000_0

2023-12-07 16:51:50,355 INFO [Thread-73] org.apache.hadoop.yarn.client.api.impl.ContainerManagementProtocolProxy: Opening proxy : tjst8-110:8041

2023-12-07 16:51:50,381 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1701930652068_0327_m_000000_0 TaskAttempt Transitioned from SUCCESS_FINISHING_CONTAINER to SUCCEEDED

2023-12-07 16:51:50,384 INFO [Thread-73] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Setting job diagnostics to

2023-12-07 16:51:50,384 INFO [Thread-73] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: History url is http://tjst8-110:19888/jobhistory/job/job_1701930652068_0327

2023-12-07 16:51:50,391 INFO [Thread-73] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Waiting for application to be successfully unregistered.

2023-12-07 16:51:51,394 INFO [Thread-73] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Final Stats: PendingReds:0 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:1 AssignedReds:0 CompletedMaps:1 CompletedReds:0 ContAlloc:2 ContRel:0 HostLocal:2 RackLocal:0

2023-12-07 16:51:51,396 INFO [Thread-73] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: Deleting staging directory hdfs://bigdata /user/pgxl/.staging/job_1701930652068_0327

2023-12-07 16:51:51,411 INFO [Thread-73] org.apache.hadoop.ipc.Server: Stopping server on 35256

2023-12-07 16:51:51,412 INFO [IPC Server listener on 35256] org.apache.hadoop.ipc.Server: Stopping IPC Server listener on 35256

2023-12-07 16:51:51,413 INFO [TaskHeartbeatHandler PingChecker] org.apache.hadoop.mapreduce.v2.app.TaskHeartbeatHandler: TaskHeartbeatHandler thread interrupted

2023-12-07 16:51:51,413 INFO [IPC Server Responder] org.apache.hadoop.ipc.Server: Stopping IPC Server Responder

2023-12-07 16:51:51,415 INFO [Ping Checker] org.apache.hadoop.yarn.util.AbstractLivelinessMonitor: TaskAttemptFinishingMonitor thread interrupted
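
A long ApplicationMaster log like the one above is easier to read once it is reduced to the latest state of each task. The following is a minimal sketch, not part of the original troubleshooting: it assumes the `TaskImpl` line format seen in the log (`task_... Task Transitioned from X to Y`), and the helper names `last_task_states` and `stuck_tasks` are hypothetical.

```python
import re

# Matches TaskImpl lines such as:
#   ... TaskImpl: task_1701930652068_0327_m_000001 Task Transitioned from SCHEDULED to RUNNING
# TaskAttempt and Job transition lines do not match, because they lack the "task_<id> Task" prefix.
TRANSITION = re.compile(
    r"(task_\d+_\d+_[mr]_\d+) Task Transitioned from (\w+) to (\w+)"
)


def last_task_states(log_lines):
    """Return {task_id: most recent state} from an iterable of log lines."""
    states = {}
    for line in log_lines:
        match = TRANSITION.search(line)
        if match:
            task_id, _old_state, new_state = match.groups()
            states[task_id] = new_state  # later lines overwrite earlier ones
    return states


def stuck_tasks(log_lines, state="SCHEDULED"):
    """Return the sorted task ids whose latest transition ended in `state`."""
    return sorted(
        task_id
        for task_id, current in last_task_states(log_lines).items()
        if current == state
    )
```

Applied to the log above, both map tasks end in `SUCCEEDED`, so `stuck_tasks` would return an empty list. For a run that only ever reports `Task Transitioned from NEW to SCHEDULED`, the same call would be expected to list every task that never obtained a running container.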

4. In the end, the job needs to be changed from a full pull to an incremental one; the details are not covered here.
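
The article does not show the incremental version, so the following is only a hedged starting point rather than the author's implementation. It builds an Elasticsearch range query for a single day, which could then be supplied through the ES-Hadoop `es.query` setting on the Hive mapping table so that each run reads one day of documents instead of the whole index. The date field name `@timestamp` and the helper name `daily_es_query` are assumptions for illustration; the real index may use a different field.

```python
import json
from datetime import date, timedelta


def daily_es_query(day, field="@timestamp"):
    """Build a query DSL string selecting documents with day <= field < day + 1.

    `field` is a hypothetical date field name; replace it with the one
    actually present in the index mapping.
    """
    next_day = day + timedelta(days=1)
    query = {
        "query": {
            "range": {
                field: {
                    "gte": day.isoformat(),      # inclusive lower bound
                    "lt": next_day.isoformat(),  # exclusive upper bound
                }
            }
        }
    }
    return json.dumps(query)
```

A scheduler could call `daily_es_query` with the run date and write the result into the table's `es.query` property before the daily `INSERT`. Using a half-open interval keeps consecutive days from overlapping or leaving gaps.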

相关文章:

hive映射es表任务失败,无错误日志一直报Task Transitioned from NEW to SCHEDULED

一、背景 要利用gpt产生的存放在es种的日志表做统计分析,通过hive建es的映射表,将es的数据拉到hive里面。 在最初的时候同事写的是全量拉取,某一天突然任务报错,但是没有错误日志一直报:Task Transitioned from NEW t…...

手眼标定 - 最终精度和误差优化心得

手眼标定 - 标定误差优化项 一、TCP标定误差优化1、注意标定针摆放范围2、TCP标定时的点次态与工作姿态尽可能保持相近 二、深度相机对齐矩阵误差1、手动计算对齐矩阵 三、手眼标定拍照姿态1、TCP标定姿态优先2、水平放置棋盘格优先 为减少最终手眼标定的误差,可做或…...

pytorch一致数据增强

分割任务对 image 做(某些)transform 时,要对 label(segmentation mask)也做对应的 transform,如 Resize、RandomRotation 等。如果对 image、label 分别用 transform 处理一遍,则涉及随机操作的…...

MapReduce

1. MapReduce是什么?请简要说明它的工作原理。 MapReduce是一种编程模型,主要用于处理大规模数据集的并行运算,特别是非结构化数据。这个模型的核心思想是将大数据处理任务分解为两个主要步骤:Map和Reduce。用户只需实现map()和r…...

Spring Boot 快速入门

Spring Boot 快速入门 什么是Spring Boot Spring Boot是一个用于简化Spring应用开发的框架,它基于Spring框架,提供了自动配置、快速开发等特性,使得开发者可以更加便捷地构建独立的、生产级别的Spring应用。 开始使用Spring Boot 步骤一&a…...

什么是神经网络的非线性

大家好啊,我是董董灿。 最近在写《计算机视觉入门与调优》(右键,在新窗口中打开链接)的小册,其中一部分说到激活函数的时候,谈到了神经网络的非线性问题。 今天就一起来看看,为什么神经网络需…...

——浅谈用户体验测试的主要功能)

前端知识(十四)——浅谈用户体验测试的主要功能

用户体验(User Experience,简称UX)在现代软件和产品开发中变得愈发重要。为了确保产品能够满足用户期望,提高用户满意度,用户体验测试成为不可或缺的环节。本文将详细探讨用户体验测试的主要功能,以及它在产品开发过程中的重要性 …...

解决前端跨域问题,后端解决方法

Spring CloudVue前后端分离项目报错:Network Error;net::ERR_FAILED(请求跨越)-CSDN博客记录自用...

【网络奇缘系列】计算机网络|数据通信方式|数据传输方式

🌈个人主页: Aileen_0v0🔥系列专栏: 一见倾心,再见倾城 --- 计算机网络~💫个人格言:"没有罗马,那就自己创造罗马~" 这篇文章是关于计算机网络中数据通信的基础知识点, 从模型,术语再到数据通信方式&#…...

数组 注意事项

1.一维数组的初始化 int a[5]{1,2,3,4,5}; 合法 int a[5]{1,2,3}; 合法 int a[]{1,2,3,4,5}; 合法,后面决定前面的大小 int a[5]{1,2,3,4,5,6}; 不合法! 2.一维数组的定义 int a[5] 合法 int a[11] 合法 int a[1/24] 合法 int x5,a[x…...

day11 滑动窗口中的最大值

class MyQueue { //单调队列(从大到小)public:deque<int> que; // 使用deque来实现单调队列// 每次弹出的时候,比较当前要弹出的数值是否等于队列出口元素的数值,如果相等则弹出。// 同时pop之前判断队列当前是否为空。void…...

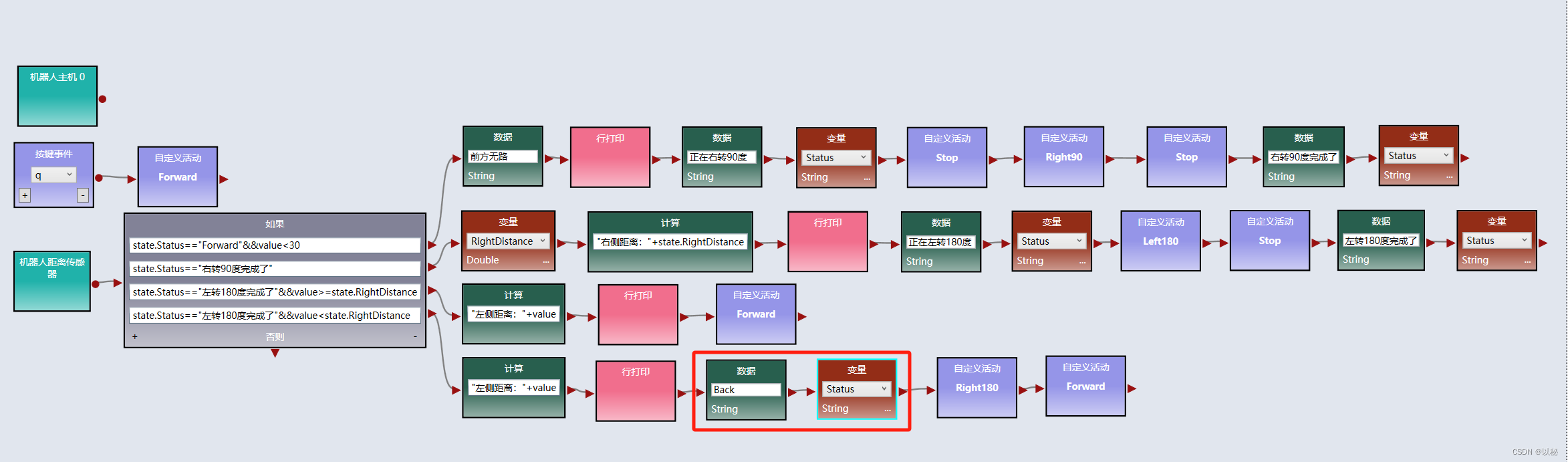

viple模拟器使用(五):Web 2D模拟器中实现两距离局部最优迷宫算法

关于两距离局部最优迷宫算法的原理本文不再赘述,详情请参考:viple模拟器使用(四),归纳总结为: 前方有路,则直行; 前方无路,则右转90度,标记右转完成ÿ…...

)

每日一道算法题 3(2023-12-11)

题目描述: VLAN是一种对局域网设备进行逻辑划分的技术,为了标识不同的VLAN,引入VLAN ID(1-4094之间的整数)的概念。 定义一个VLAN ID的资源池(下称VLAN资源池),资源池中连续的VLAN用开始VLAN-结束VLAN表示,不连续的用单…...

【Android】查看keystore的公钥和私钥

前言: 查看前准备好.keystore文件,安装并配置openssl、keytool。文件路径中不要有中文。 一、查看keystore的公钥: 1.从keystore中获取MD5证书 keytool -list -v -keystore gamekeyold.keystore 2.导出公钥文件 keytool -export -alias …...

ChatGPT的常识

什么是ChatGPT? ChatGPT是一个基于GPT模型的聊天机器人,GPT即“Generative Pre-training Transformer”,是一种预训练的语言模型。ChatGPT使用GPT-2和GPT-3两种模型来生成自然语言响应,从而与人类进行真实的对话。 ChatGPT的设计…...

Spring Boot中的事务是如何实现的?懂吗?

SpringBoot中的事务管理,用得好,能确保数据的一致性和完整性;用得不好,可能会给性能带来不小的影响哦。 基本使用 在SpringBoot中,事务的使用非常简洁。首先,得感谢Spring框架提供的Transactional注解&am…...

应用安全:JAVA反序列化漏洞之殇

应用安全:JAVA反序列化漏洞之殇 概述 序列化是让Java对象脱离Java运行环境的一种手段,可以有效的实现多平台之间的通信、对象持久化存储。Java 序列化是指把 Java 对象转换为字节序列的过程便于保存在内存、文件、数据库中,ObjectOutputStream类的 wri…...

)

基于以太坊的智能合约开发Solidity(函数继承篇)

参考教程:【实战篇】1、函数重载_哔哩哔哩_bilibili 1、函数重载: pragma solidity ^0.5.17;contract overLoadTest {//不带参数function test() public{}//带一个参数function test(address account) public{}//参数类型不同,虽然uint160可…...

【论文极速读】LVM,视觉大模型的GPT时刻?

【论文极速读】LVM,视觉大模型的GPT时刻? FesianXu 20231210 at Baidu Search Team 前言 这一周,LVM在arxiv上刚挂出不久,就被众多自媒体宣传为『视觉大模型的GPT时刻』,笔者抱着强烈的好奇心,在繁忙工作之…...

TS基础语法

前言: 因为在写前端的时候,发现很多UI组件的语法都已经开始使用TS语法,不学习TS根本看不到懂,所以简单的学一下TS语法。为了看UI组件的简单代码,不至于一脸懵。 一、安装node 对于windows来讲,node版本高…...

Opencv中的addweighted函数

一.addweighted函数作用 addweighted()是OpenCV库中用于图像处理的函数,主要功能是将两个输入图像(尺寸和类型相同)按照指定的权重进行加权叠加(图像融合),并添加一个标量值&#x…...

【机器视觉】单目测距——运动结构恢复

ps:图是随便找的,为了凑个封面 前言 在前面对光流法进行进一步改进,希望将2D光流推广至3D场景流时,发现2D转3D过程中存在尺度歧义问题,需要补全摄像头拍摄图像中缺失的深度信息,否则解空间不收敛…...

智能在线客服平台:数字化时代企业连接用户的 AI 中枢

随着互联网技术的飞速发展,消费者期望能够随时随地与企业进行交流。在线客服平台作为连接企业与客户的重要桥梁,不仅优化了客户体验,还提升了企业的服务效率和市场竞争力。本文将探讨在线客服平台的重要性、技术进展、实际应用,并…...

C++ 基础特性深度解析

目录 引言 一、命名空间(namespace) C 中的命名空间 与 C 语言的对比 二、缺省参数 C 中的缺省参数 与 C 语言的对比 三、引用(reference) C 中的引用 与 C 语言的对比 四、inline(内联函数…...

【Web 进阶篇】优雅的接口设计:统一响应、全局异常处理与参数校验

系列回顾: 在上一篇中,我们成功地为应用集成了数据库,并使用 Spring Data JPA 实现了基本的 CRUD API。我们的应用现在能“记忆”数据了!但是,如果你仔细审视那些 API,会发现它们还很“粗糙”:有…...

鸿蒙中用HarmonyOS SDK应用服务 HarmonyOS5开发一个生活电费的缴纳和查询小程序

一、项目初始化与配置 1. 创建项目 ohpm init harmony/utility-payment-app 2. 配置权限 // module.json5 {"requestPermissions": [{"name": "ohos.permission.INTERNET"},{"name": "ohos.permission.GET_NETWORK_INFO"…...

JDK 17 新特性

#JDK 17 新特性 /**************** 文本块 *****************/ python/scala中早就支持,不稀奇 String json “”" { “name”: “Java”, “version”: 17 } “”"; /**************** Switch 语句 -> 表达式 *****************/ 挺好的ÿ…...

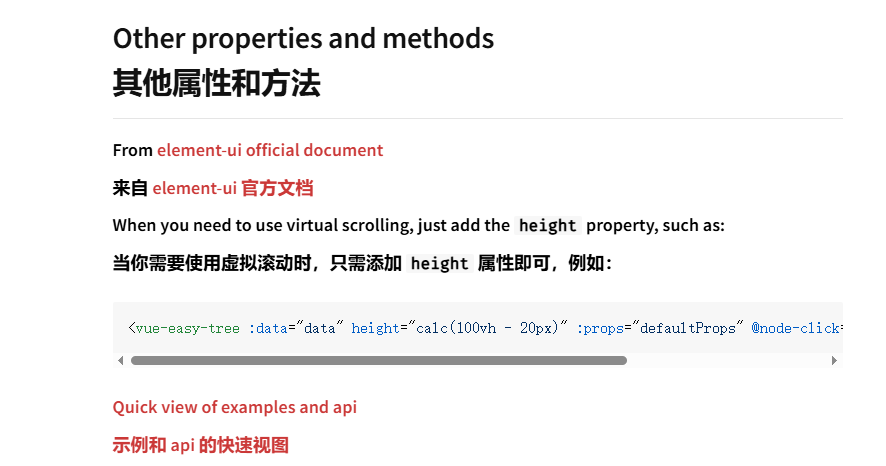

tree 树组件大数据卡顿问题优化

问题背景 项目中有用到树组件用来做文件目录,但是由于这个树组件的节点越来越多,导致页面在滚动这个树组件的时候浏览器就很容易卡死。这种问题基本上都是因为dom节点太多,导致的浏览器卡顿,这里很明显就需要用到虚拟列表的技术&…...

Java线上CPU飙高问题排查全指南

一、引言 在Java应用的线上运行环境中,CPU飙高是一个常见且棘手的性能问题。当系统出现CPU飙高时,通常会导致应用响应缓慢,甚至服务不可用,严重影响用户体验和业务运行。因此,掌握一套科学有效的CPU飙高问题排查方法&…...

return this;返回的是谁

一个审批系统的示例来演示责任链模式的实现。假设公司需要处理不同金额的采购申请,不同级别的经理有不同的审批权限: // 抽象处理者:审批者 abstract class Approver {protected Approver successor; // 下一个处理者// 设置下一个处理者pub…...