GAN生成对抗模型根据minist数据集生成手写数字图片

文章目录

- 1.项目介绍

- 2相关网站

- 3具体的代码及结果

- 导入工具包

- 设置超参数

- 定义优化器,以及损失函数

- 训练时的迭代过程

- 训练结果的展示

1.项目介绍

通过用minist数据集进行训练,得到一个GAN模型,可以生成与minist数据集类似的图片。

GAN是一种生成模型,它的目的是通过学习真实数据的分布来生成新的数据。GAN由两个网络组成,一个是生成器(Generator),一个是判别器(Discriminator)。生成器的任务是从随机噪声中生成类似于真实数据的样本,判别器的任务是判断给定的样本是真实的还是生成的。GAN的训练过程可以看作是一种对抗博弈,生成器和判别器互相竞争,不断提高自己的能力,最终达到生成器生成的样本和真实数据分布一致,判别器无法区分真假的状态。通过GAN我们可以生成足以以假乱真的图像,GAN被广泛的应用在图像生成,语音生成等场景中。例如经典的换脸应用DeepFakes背后的技术便是GAN.

生成对抗网络的组成:

生成器网络 、判别器网络

目标:

总体目标:

生成模型,根据已有的图片,生成与已有的图片类似的图片

训练目标:

判别器

能够正确的识别真的图片

能够正确的识别假的图片

生成器

能够生成的能被判别器判断为真的图片

本文是在google drive中部署的,部署过程参考博客:colab部署过程

2相关网站

参考的网站:github pytorch代码

所有的代码文件:提取码:f3vq

3具体的代码及结果

导入工具包

import os

import torch

import torchvision

import torch.nn as nn

from torchvision import transforms

from torchvision.utils import save_image

import os

import matplotlib.pyplot as plt

%matplotlib inline

import numpy as np

import torch

from torch import nn

import torch.optim as optim

import torchvision

#pip install torchvision

from torchvision import transforms, models, datasets

#https://pytorch.org/docs/stable/torchvision/index.html

import imageio

import time

import warnings

import random

import sys

import copy

import json

from PIL import Image设置超参数

# Device configuration

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')# Hyper-parameters

latent_size = 64

hidden_size = 256

image_size = 784

num_epochs = 200

batch_size = 100

# latent_size:这是潜在向量的大小,用作生成器网络的输入以生成假图像。

#latent_size 的大小会影响生成图像的多样性和质量。如果 latent_size 太小,则生成器可能无法捕获数据分布的所有变化,从而导致生成图像缺乏多样性。如果 latent_size 太大,则生成器可能会过拟合训练数据,从而导致生成图像质量下降。

# hidden_size:这是隐藏层的大小,用于定义生成器和鉴别器网络中隐藏层的大小。

# image_size:这是图像的大小,表示图像的像素数。在这种情况下,图像被重塑为一维张量,因此图像大小等于图像的长度。

# num_epochs:这是训练期间整个数据集通过网络的次数。

# batch_size:每批次中图像的数量。

sample_dir = 'samples'# Create a directory if not exists

#创建一个sample数据集用来存储数据,一个是minist中的真实的图像,以一个是我们的生成器生成的图像

if not os.path.exists(sample_dir):os.makedirs(sample_dir)

#transforms.ToTensor()是一个函数,它可以将PIL.Image或者numpy.ndarray格式的图像转换为torch.FloatTensor格式的张量,并且将像素值范围缩放到[0, 1]之间。

#transforms.Normalize(mean, std)是一个类,它可以对张量图像进行标准化,即减去给定的均值mean并除以给定的标准差std。这样做可以使得图像的分布接近标准正态分布,有利于模型的训练和收敛。

transform = transforms.Compose([transforms.ToTensor(),transforms.Normalize(mean=[0.5], # 1 for greyscale channelsstd=[0.5])])

#在 Colab 文件系统的 /content/drive/ 目录下挂载您的 Google Drive

from google.colab import drive

drive.mount('/content/drive/')

# 指定当前的工作文件夹

import os

# 此处为google drive中的文件路径,drive为之前指定的工作根目录,要加上

os.chdir("/content/drive/MyDrive/gan/")

# MNIST dataset

mnist = torchvision.datasets.MNIST(root='./data',train=True,transform=transform,download=True)# Data loader

data_loader = torch.utils.data.DataLoader(dataset=mnist,batch_size=batch_size,shuffle=True)

#定义判别器

# Discriminator

D = nn.Sequential(nn.Linear(image_size, hidden_size),#该函数相比于ReLU,保留了一些负轴的值,缓解了激活值过小而导致神经元参数无法更新的问题,其中α\alphaα默认0.01。nn.LeakyReLU(0.2),nn.Linear(hidden_size, hidden_size),nn.LeakyReLU(0.2),nn.Linear(hidden_size, 1),#将值映射到0~1nn.Sigmoid())

#定义生成器

# Generator

G = nn.Sequential(nn.Linear(latent_size, hidden_size),nn.ReLU(),nn.Linear(hidden_size, hidden_size),nn.ReLU(),nn.Linear(hidden_size, image_size),#将值映射到-1~1nn.Tanh())

# Device setting

D = D.to(device)

G = G.to(device)

定义优化器,以及损失函数

# Binary cross entropy loss and optimizer

#nn.BCELoss()函数是二分类交叉熵损失函数,用于计算二分类问题中的交叉熵损失

criterion = nn.BCELoss()

d_optimizer = torch.optim.Adam(D.parameters(), lr=0.0002)

g_optimizer = torch.optim.Adam(G.parameters(), lr=0.0002)

#定义函数将生成器生成的图像的像素值的范围转化为0~1

# denorm函数的作用是将输入张量的值从[-1,1]范围转换为[0,1]范围。

def denorm(x):out = (x + 1) / 2return out.clamp(0, 1)

# reset_grad函数的作用是将判别器和生成器的梯度清零,以便进行下一次反向传播

def reset_grad():d_optimizer.zero_grad()g_optimizer.zero_grad()# Start training

total_step = len(data_loader)

for epoch in range(num_epochs):for i, (images, _) in enumerate(data_loader):images = images.reshape(batch_size, -1).to(device)# Create the labels which are later used as input for the BCE lossreal_labels = torch.ones(batch_size, 1).to(device)fake_labels = torch.zeros(batch_size, 1).to(device)# ================================================================== ## Train the discriminator ## ================================================================== ##训练判别器,让判别器能够对真实的和虚假的图片都进行判断# Compute BCE_Loss using real images where BCE_Loss(x, y): - y * log(D(x)) - (1-y) * log(1 - D(x))# Second term of the loss is always zero since real_labels == 1outputs = D(images)#计算对于真实样本的损失#真实标签列表是作为BCELoss公式中的y参数,它和预测值x一起计算交叉熵。#具体来说,当y为1时,损失值为-log(x),#当y为0时,损失值为-log(1-x)。#这样可以保证当预测值x和真实标签y一致时,损失值最小,当预测值x和真实标签y相反时,损失值最大。d_loss_real = criterion(outputs, real_labels)real_score = outputs# Compute BCELoss using fake images# First term of the loss is always zero since fake_labels == 0z = torch.randn(batch_size, latent_size).to(device)fake_images = G(z)outputs = D(fake_images)#计算对于虚假样本的损失d_loss_fake = criterion(outputs, fake_labels)fake_score = outputs# Backprop and optimize#总损失为判断两个判断错误的损失,既我们希望找到一个对于正确错误的样本都能进行判断正确的判别器d_loss = d_loss_real + d_loss_fakereset_grad()d_loss.backward()d_optimizer.step()# ================================================================== ## Train the generator ## ================================================================== ## Compute loss with fake images#随机生成一个输入,也就是虚假的图片z = torch.randn(batch_size, latent_size).to(device)#根据虚假输入,生成一个图片fake_images = G(z)#给判别器判断outputs = D(fake_images)# We train G to maximize log(D(G(z)) instead of minimizing log(1-D(G(z)))# For the reason, see the last paragraph of section 3. https://arxiv.org/pdf/1406.2661.pdf#计算生成器的损失,我们希望,生成的图像能和真实的图像类似,所以这里y取1,只考虑-ylog(p(x)),也就是判断正确为真的损失g_loss = criterion(outputs, real_labels)# Backprop and optimizereset_grad()g_loss.backward()g_optimizer.step()if (i+1) % 200 == 0:print('Epoch [{}/{}], Step [{}/{}], d_loss: {:.4f}, g_loss: {:.4f}, D(x): {:.2f}, D(G(z)): {:.2f}'.format(epoch, num_epochs, i+1, total_step, d_loss.item(), g_loss.item(),real_score.mean().item(), fake_score.mean().item()))# Save real imagesif (epoch+1) == 1:images = images.reshape(images.size(0), 1, 28, 28)save_image(denorm(images), os.path.join(sample_dir, 'real_images.png'))# Save sampled images#每一次迭代,我们保存我们的生成器能生成的虚假的图像,看看我们的生成器生成的图片在逐步的真实话的过程fake_images = fake_images.reshape(fake_images.size(0), 1, 28, 28)save_image(denorm(fake_images), os.path.join(sample_dir, 'fake_images-{}.png'.format(epoch+1)))

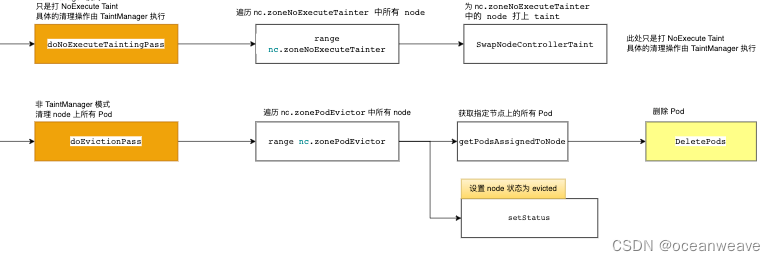

训练时的迭代过程

我们可以看到其中每次迭代的过程中

g_loss:生成器的损失在下降(说明生成器生成的图像越来越能被判别器识别为真实的图像)

D(G(z)): 判别器对于生成器生成的样本识别真样本的概率在上升(说明生成器的效果在不断提高,逐渐的可以生成真实的样本)

Epoch [0/200], Step [200/600], d_loss: 0.0252, g_loss: 5.2554, D(x): 1.00, D(G(z)): 0.02

Epoch [0/200], Step [400/600], d_loss: 0.1340, g_loss: 4.9320, D(x): 0.95, D(G(z)): 0.07

Epoch [0/200], Step [600/600], d_loss: 0.2178, g_loss: 4.7700, D(x): 0.93, D(G(z)): 0.07

Epoch [1/200], Step [200/600], d_loss: 0.3207, g_loss: 2.5174, D(x): 0.88, D(G(z)): 0.07

Epoch [1/200], Step [400/600], d_loss: 1.6857, g_loss: 3.8114, D(x): 0.64, D(G(z)): 0.30

Epoch [1/200], Step [600/600], d_loss: 1.2818, g_loss: 2.4387, D(x): 0.79, D(G(z)): 0.39

Epoch [2/200], Step [200/600], d_loss: 0.3939, g_loss: 3.4207, D(x): 0.91, D(G(z)): 0.19

Epoch [2/200], Step [400/600], d_loss: 0.2278, g_loss: 2.5563, D(x): 0.93, D(G(z)): 0.11

Epoch [2/200], Step [600/600], d_loss: 0.6092, g_loss: 4.0915, D(x): 0.83, D(G(z)): 0.19

Epoch [3/200], Step [200/600], d_loss: 0.2883, g_loss: 3.6595, D(x): 0.87, D(G(z)): 0.07

Epoch [3/200], Step [400/600], d_loss: 0.5897, g_loss: 2.6272, D(x): 0.81, D(G(z)): 0.15

Epoch [3/200], Step [600/600], d_loss: 0.8164, g_loss: 2.3492, D(x): 0.81, D(G(z)): 0.31

Epoch [4/200], Step [200/600], d_loss: 0.7031, g_loss: 2.1948, D(x): 0.84, D(G(z)): 0.25

Epoch [4/200], Step [400/600], d_loss: 0.1647, g_loss: 3.7823, D(x): 0.98, D(G(z)): 0.08

Epoch [4/200], Step [600/600], d_loss: 0.1939, g_loss: 3.5391, D(x): 0.91, D(G(z)): 0.05

Epoch [5/200], Step [200/600], d_loss: 0.1912, g_loss: 3.8443, D(x): 0.92, D(G(z)): 0.05

Epoch [5/200], Step [400/600], d_loss: 0.1662, g_loss: 3.8918, D(x): 0.97, D(G(z)): 0.11

Epoch [5/200], Step [600/600], d_loss: 0.3716, g_loss: 3.8777, D(x): 0.87, D(G(z)): 0.06

Epoch [6/200], Step [200/600], d_loss: 0.5468, g_loss: 3.8969, D(x): 0.85, D(G(z)): 0.10

Epoch [6/200], Step [400/600], d_loss: 0.1739, g_loss: 3.8602, D(x): 0.94, D(G(z)): 0.04

Epoch [6/200], Step [600/600], d_loss: 0.2472, g_loss: 4.8380, D(x): 0.91, D(G(z)): 0.04

Epoch [7/200], Step [200/600], d_loss: 0.2011, g_loss: 4.5990, D(x): 0.92, D(G(z)): 0.06

Epoch [7/200], Step [400/600], d_loss: 0.3489, g_loss: 5.6411, D(x): 0.90, D(G(z)): 0.06

Epoch [7/200], Step [600/600], d_loss: 0.3301, g_loss: 3.0097, D(x): 0.93, D(G(z)): 0.16

Epoch [8/200], Step [200/600], d_loss: 0.3328, g_loss: 3.4204, D(x): 0.92, D(G(z)): 0.13

Epoch [8/200], Step [400/600], d_loss: 0.1620, g_loss: 3.1926, D(x): 0.96, D(G(z)): 0.09

Epoch [8/200], Step [600/600], d_loss: 0.2524, g_loss: 3.4148, D(x): 0.98, D(G(z)): 0.18

Epoch [9/200], Step [200/600], d_loss: 0.1735, g_loss: 3.2235, D(x): 0.95, D(G(z)): 0.06

Epoch [9/200], Step [400/600], d_loss: 0.1905, g_loss: 4.0506, D(x): 0.94, D(G(z)): 0.07

Epoch [9/200], Step [600/600], d_loss: 0.1437, g_loss: 4.8081, D(x): 0.96, D(G(z)): 0.08

Epoch [10/200], Step [200/600], d_loss: 0.0497, g_loss: 5.0490, D(x): 0.98, D(G(z)): 0.02

Epoch [10/200], Step [400/600], d_loss: 0.0652, g_loss: 5.5561, D(x): 0.97, D(G(z)): 0.01

Epoch [10/200], Step [600/600], d_loss: 0.1745, g_loss: 4.9265, D(x): 0.93, D(G(z)): 0.03

Epoch [11/200], Step [200/600], d_loss: 0.1788, g_loss: 4.2305, D(x): 0.95, D(G(z)): 0.07

Epoch [11/200], Step [400/600], d_loss: 0.0884, g_loss: 4.9210, D(x): 0.97, D(G(z)): 0.03

Epoch [11/200], Step [600/600], d_loss: 0.4536, g_loss: 5.0761, D(x): 0.91, D(G(z)): 0.18

Epoch [12/200], Step [200/600], d_loss: 0.3983, g_loss: 5.9821, D(x): 0.93, D(G(z)): 0.13

Epoch [12/200], Step [400/600], d_loss: 0.2873, g_loss: 5.5326, D(x): 0.91, D(G(z)): 0.08

Epoch [12/200], Step [600/600], d_loss: 0.1984, g_loss: 4.5899, D(x): 0.97, D(G(z)): 0.12

Epoch [13/200], Step [200/600], d_loss: 0.1524, g_loss: 4.7841, D(x): 0.94, D(G(z)): 0.03

Epoch [13/200], Step [400/600], d_loss: 0.1075, g_loss: 4.5099, D(x): 0.98, D(G(z)): 0.07

Epoch [13/200], Step [600/600], d_loss: 0.5001, g_loss: 6.8877, D(x): 0.84, D(G(z)): 0.01

Epoch [14/200], Step [200/600], d_loss: 0.0878, g_loss: 4.6591, D(x): 0.98, D(G(z)): 0.06

Epoch [14/200], Step [400/600], d_loss: 0.2258, g_loss: 4.7830, D(x): 0.97, D(G(z)): 0.13

Epoch [14/200], Step [600/600], d_loss: 0.2287, g_loss: 4.9709, D(x): 0.93, D(G(z)): 0.07

Epoch [15/200], Step [200/600], d_loss: 0.2274, g_loss: 5.0505, D(x): 0.94, D(G(z)): 0.07

Epoch [15/200], Step [400/600], d_loss: 0.1501, g_loss: 5.0111, D(x): 0.94, D(G(z)): 0.04

Epoch [15/200], Step [600/600], d_loss: 0.0859, g_loss: 5.2517, D(x): 0.95, D(G(z)): 0.02

Epoch [16/200], Step [200/600], d_loss: 0.1900, g_loss: 4.7658, D(x): 0.95, D(G(z)): 0.04

Epoch [16/200], Step [400/600], d_loss: 0.1400, g_loss: 7.4018, D(x): 0.97, D(G(z)): 0.03

Epoch [16/200], Step [600/600], d_loss: 0.1485, g_loss: 5.5882, D(x): 0.99, D(G(z)): 0.10

Epoch [17/200], Step [200/600], d_loss: 0.2869, g_loss: 4.3017, D(x): 0.90, D(G(z)): 0.02

Epoch [17/200], Step [400/600], d_loss: 0.2603, g_loss: 5.7215, D(x): 0.98, D(G(z)): 0.17

Epoch [17/200], Step [600/600], d_loss: 0.1268, g_loss: 5.8928, D(x): 0.97, D(G(z)): 0.06

Epoch [18/200], Step [200/600], d_loss: 0.0614, g_loss: 6.3626, D(x): 0.97, D(G(z)): 0.02

Epoch [18/200], Step [400/600], d_loss: 0.3950, g_loss: 5.1696, D(x): 0.95, D(G(z)): 0.17

Epoch [18/200], Step [600/600], d_loss: 0.0887, g_loss: 4.8490, D(x): 0.97, D(G(z)): 0.03

Epoch [19/200], Step [200/600], d_loss: 0.1939, g_loss: 3.7379, D(x): 0.95, D(G(z)): 0.09

Epoch [19/200], Step [400/600], d_loss: 0.3316, g_loss: 5.7292, D(x): 0.88, D(G(z)): 0.01

Epoch [19/200], Step [600/600], d_loss: 0.1429, g_loss: 4.8458, D(x): 0.96, D(G(z)): 0.05

Epoch [20/200], Step [200/600], d_loss: 0.1976, g_loss: 7.0141, D(x): 0.93, D(G(z)): 0.04

Epoch [20/200], Step [400/600], d_loss: 0.1226, g_loss: 5.1962, D(x): 0.95, D(G(z)): 0.02

Epoch [20/200], Step [600/600], d_loss: 0.2435, g_loss: 7.1254, D(x): 0.92, D(G(z)): 0.01

Epoch [21/200], Step [200/600], d_loss: 0.3504, g_loss: 4.0122, D(x): 0.90, D(G(z)): 0.09

Epoch [21/200], Step [400/600], d_loss: 0.3238, g_loss: 5.0485, D(x): 0.94, D(G(z)): 0.14

Epoch [21/200], Step [600/600], d_loss: 0.2065, g_loss: 3.8730, D(x): 0.94, D(G(z)): 0.06

Epoch [22/200], Step [200/600], d_loss: 0.3684, g_loss: 4.4913, D(x): 0.87, D(G(z)): 0.02

Epoch [22/200], Step [400/600], d_loss: 0.1684, g_loss: 4.6138, D(x): 0.93, D(G(z)): 0.05

Epoch [22/200], Step [600/600], d_loss: 0.2458, g_loss: 5.2389, D(x): 0.93, D(G(z)): 0.08

Epoch [23/200], Step [200/600], d_loss: 0.3259, g_loss: 4.3412, D(x): 0.88, D(G(z)): 0.07

Epoch [23/200], Step [400/600], d_loss: 0.2862, g_loss: 4.0427, D(x): 0.94, D(G(z)): 0.10

Epoch [23/200], Step [600/600], d_loss: 0.4527, g_loss: 3.7884, D(x): 0.92, D(G(z)): 0.18

Epoch [24/200], Step [200/600], d_loss: 0.1750, g_loss: 4.4059, D(x): 0.93, D(G(z)): 0.04

Epoch [24/200], Step [400/600], d_loss: 0.1966, g_loss: 4.7848, D(x): 0.96, D(G(z)): 0.10

Epoch [24/200], Step [600/600], d_loss: 0.2126, g_loss: 3.6014, D(x): 0.96, D(G(z)): 0.11

Epoch [25/200], Step [200/600], d_loss: 0.4429, g_loss: 3.7987, D(x): 0.88, D(G(z)): 0.07

Epoch [25/200], Step [400/600], d_loss: 0.3222, g_loss: 4.3068, D(x): 0.94, D(G(z)): 0.16

Epoch [25/200], Step [600/600], d_loss: 0.2818, g_loss: 4.9270, D(x): 0.94, D(G(z)): 0.07

Epoch [26/200], Step [200/600], d_loss: 0.2719, g_loss: 5.2220, D(x): 0.95, D(G(z)): 0.14

Epoch [26/200], Step [400/600], d_loss: 0.4343, g_loss: 4.7186, D(x): 0.85, D(G(z)): 0.05

Epoch [26/200], Step [600/600], d_loss: 0.4143, g_loss: 4.4156, D(x): 0.86, D(G(z)): 0.03

Epoch [27/200], Step [200/600], d_loss: 0.3997, g_loss: 3.5575, D(x): 0.92, D(G(z)): 0.13

Epoch [27/200], Step [400/600], d_loss: 0.3701, g_loss: 4.4439, D(x): 0.87, D(G(z)): 0.02

Epoch [27/200], Step [600/600], d_loss: 0.0873, g_loss: 4.3754, D(x): 0.98, D(G(z)): 0.05

Epoch [28/200], Step [200/600], d_loss: 0.4607, g_loss: 3.8893, D(x): 0.95, D(G(z)): 0.19

Epoch [28/200], Step [400/600], d_loss: 0.3372, g_loss: 5.0975, D(x): 0.97, D(G(z)): 0.16

Epoch [28/200], Step [600/600], d_loss: 0.2284, g_loss: 5.4233, D(x): 0.92, D(G(z)): 0.06

Epoch [29/200], Step [200/600], d_loss: 0.3817, g_loss: 4.3107, D(x): 0.93, D(G(z)): 0.12

Epoch [29/200], Step [400/600], d_loss: 0.3336, g_loss: 3.7298, D(x): 0.97, D(G(z)): 0.20

Epoch [29/200], Step [600/600], d_loss: 0.2664, g_loss: 4.5941, D(x): 0.92, D(G(z)): 0.08

Epoch [30/200], Step [200/600], d_loss: 0.4520, g_loss: 3.2173, D(x): 0.87, D(G(z)): 0.16

Epoch [30/200], Step [400/600], d_loss: 0.4444, g_loss: 2.4830, D(x): 0.91, D(G(z)): 0.21

Epoch [30/200], Step [600/600], d_loss: 0.2679, g_loss: 4.0562, D(x): 0.92, D(G(z)): 0.08

Epoch [31/200], Step [200/600], d_loss: 0.4414, g_loss: 4.9419, D(x): 0.93, D(G(z)): 0.20

Epoch [31/200], Step [400/600], d_loss: 0.4257, g_loss: 4.2394, D(x): 0.90, D(G(z)): 0.16

Epoch [31/200], Step [600/600], d_loss: 0.1577, g_loss: 4.1475, D(x): 0.93, D(G(z)): 0.05

Epoch [32/200], Step [200/600], d_loss: 0.6468, g_loss: 3.1813, D(x): 0.79, D(G(z)): 0.09

Epoch [32/200], Step [400/600], d_loss: 0.7241, g_loss: 4.1790, D(x): 0.78, D(G(z)): 0.12

Epoch [32/200], Step [600/600], d_loss: 0.4787, g_loss: 3.5841, D(x): 0.87, D(G(z)): 0.14

Epoch [33/200], Step [200/600], d_loss: 0.2906, g_loss: 3.6536, D(x): 0.94, D(G(z)): 0.15

Epoch [33/200], Step [400/600], d_loss: 0.4640, g_loss: 3.3191, D(x): 0.93, D(G(z)): 0.18

Epoch [33/200], Step [600/600], d_loss: 0.6650, g_loss: 3.3779, D(x): 0.83, D(G(z)): 0.20

Epoch [34/200], Step [200/600], d_loss: 0.3749, g_loss: 3.5957, D(x): 0.90, D(G(z)): 0.14

Epoch [34/200], Step [400/600], d_loss: 0.3069, g_loss: 4.4897, D(x): 0.92, D(G(z)): 0.13

Epoch [34/200], Step [600/600], d_loss: 0.4221, g_loss: 3.1977, D(x): 0.86, D(G(z)): 0.11

Epoch [35/200], Step [200/600], d_loss: 0.5369, g_loss: 2.4619, D(x): 0.80, D(G(z)): 0.11

Epoch [35/200], Step [400/600], d_loss: 0.5826, g_loss: 2.3311, D(x): 0.94, D(G(z)): 0.29

Epoch [35/200], Step [600/600], d_loss: 0.4576, g_loss: 3.8068, D(x): 0.83, D(G(z)): 0.06

Epoch [36/200], Step [200/600], d_loss: 0.2154, g_loss: 3.4432, D(x): 0.93, D(G(z)): 0.08

Epoch [36/200], Step [400/600], d_loss: 0.4272, g_loss: 3.7969, D(x): 0.91, D(G(z)): 0.17

Epoch [36/200], Step [600/600], d_loss: 0.4357, g_loss: 4.6301, D(x): 0.86, D(G(z)): 0.06

Epoch [37/200], Step [200/600], d_loss: 0.3840, g_loss: 3.5209, D(x): 0.86, D(G(z)): 0.09

Epoch [37/200], Step [400/600], d_loss: 0.2520, g_loss: 4.5026, D(x): 0.93, D(G(z)): 0.09

Epoch [37/200], Step [600/600], d_loss: 0.2975, g_loss: 4.3481, D(x): 0.96, D(G(z)): 0.12

Epoch [38/200], Step [200/600], d_loss: 0.2116, g_loss: 4.2693, D(x): 0.96, D(G(z)): 0.12

Epoch [38/200], Step [400/600], d_loss: 0.2980, g_loss: 5.6267, D(x): 0.91, D(G(z)): 0.08

Epoch [38/200], Step [600/600], d_loss: 0.3867, g_loss: 3.2204, D(x): 0.85, D(G(z)): 0.09

Epoch [39/200], Step [200/600], d_loss: 0.5077, g_loss: 2.9204, D(x): 0.94, D(G(z)): 0.25

Epoch [39/200], Step [400/600], d_loss: 0.5755, g_loss: 3.8340, D(x): 0.82, D(G(z)): 0.07

Epoch [39/200], Step [600/600], d_loss: 0.3072, g_loss: 4.0534, D(x): 0.88, D(G(z)): 0.09

Epoch [40/200], Step [200/600], d_loss: 0.2913, g_loss: 3.2628, D(x): 0.94, D(G(z)): 0.11

Epoch [40/200], Step [400/600], d_loss: 0.5462, g_loss: 3.0916, D(x): 0.82, D(G(z)): 0.12

Epoch [40/200], Step [600/600], d_loss: 0.6271, g_loss: 3.8926, D(x): 0.80, D(G(z)): 0.12

Epoch [41/200], Step [200/600], d_loss: 0.4911, g_loss: 3.1461, D(x): 0.90, D(G(z)): 0.19

Epoch [41/200], Step [400/600], d_loss: 0.4260, g_loss: 3.4678, D(x): 0.87, D(G(z)): 0.11

Epoch [41/200], Step [600/600], d_loss: 0.6902, g_loss: 2.5126, D(x): 0.76, D(G(z)): 0.15

Epoch [42/200], Step [200/600], d_loss: 0.4464, g_loss: 3.1702, D(x): 0.83, D(G(z)): 0.12

Epoch [42/200], Step [400/600], d_loss: 0.3756, g_loss: 2.6393, D(x): 0.89, D(G(z)): 0.12

Epoch [42/200], Step [600/600], d_loss: 0.6360, g_loss: 3.0586, D(x): 0.79, D(G(z)): 0.18

Epoch [43/200], Step [200/600], d_loss: 0.4233, g_loss: 3.2700, D(x): 0.86, D(G(z)): 0.12

Epoch [43/200], Step [400/600], d_loss: 0.6836, g_loss: 3.4518, D(x): 0.80, D(G(z)): 0.21

Epoch [43/200], Step [600/600], d_loss: 0.7702, g_loss: 2.3113, D(x): 0.76, D(G(z)): 0.19

Epoch [44/200], Step [200/600], d_loss: 0.3849, g_loss: 2.8337, D(x): 0.87, D(G(z)): 0.11

Epoch [44/200], Step [400/600], d_loss: 0.5281, g_loss: 2.4929, D(x): 0.87, D(G(z)): 0.21

Epoch [44/200], Step [600/600], d_loss: 0.7222, g_loss: 2.9932, D(x): 0.85, D(G(z)): 0.27

Epoch [45/200], Step [200/600], d_loss: 0.5053, g_loss: 3.4436, D(x): 0.85, D(G(z)): 0.15

Epoch [45/200], Step [400/600], d_loss: 0.5699, g_loss: 3.0064, D(x): 0.80, D(G(z)): 0.15

Epoch [45/200], Step [600/600], d_loss: 0.5866, g_loss: 2.7113, D(x): 0.83, D(G(z)): 0.19

Epoch [46/200], Step [200/600], d_loss: 0.5891, g_loss: 2.5745, D(x): 0.81, D(G(z)): 0.14

Epoch [46/200], Step [400/600], d_loss: 0.4424, g_loss: 2.5302, D(x): 0.82, D(G(z)): 0.10

Epoch [46/200], Step [600/600], d_loss: 0.6589, g_loss: 2.2096, D(x): 0.76, D(G(z)): 0.16

Epoch [47/200], Step [200/600], d_loss: 0.5520, g_loss: 2.6966, D(x): 0.85, D(G(z)): 0.21

Epoch [47/200], Step [400/600], d_loss: 0.7059, g_loss: 2.7294, D(x): 0.81, D(G(z)): 0.22

Epoch [47/200], Step [600/600], d_loss: 0.2912, g_loss: 3.7787, D(x): 0.88, D(G(z)): 0.08

Epoch [48/200], Step [200/600], d_loss: 0.4149, g_loss: 2.3708, D(x): 0.90, D(G(z)): 0.19

Epoch [48/200], Step [400/600], d_loss: 0.4266, g_loss: 3.3905, D(x): 0.87, D(G(z)): 0.13

Epoch [48/200], Step [600/600], d_loss: 0.3298, g_loss: 3.2459, D(x): 0.90, D(G(z)): 0.11

Epoch [49/200], Step [200/600], d_loss: 0.3318, g_loss: 3.5093, D(x): 0.92, D(G(z)): 0.14

Epoch [49/200], Step [400/600], d_loss: 0.6507, g_loss: 3.4914, D(x): 0.78, D(G(z)): 0.11

Epoch [49/200], Step [600/600], d_loss: 0.6534, g_loss: 2.7849, D(x): 0.76, D(G(z)): 0.08

Epoch [50/200], Step [200/600], d_loss: 0.6406, g_loss: 3.6847, D(x): 0.82, D(G(z)): 0.20

Epoch [50/200], Step [400/600], d_loss: 0.6941, g_loss: 2.9424, D(x): 0.80, D(G(z)): 0.21

Epoch [50/200], Step [600/600], d_loss: 0.4733, g_loss: 3.2116, D(x): 0.86, D(G(z)): 0.15

Epoch [51/200], Step [200/600], d_loss: 0.3287, g_loss: 3.6701, D(x): 0.91, D(G(z)): 0.16

Epoch [51/200], Step [400/600], d_loss: 0.5537, g_loss: 1.9568, D(x): 0.79, D(G(z)): 0.11

Epoch [51/200], Step [600/600], d_loss: 0.6470, g_loss: 2.4228, D(x): 0.80, D(G(z)): 0.22

Epoch [52/200], Step [200/600], d_loss: 0.8183, g_loss: 3.1269, D(x): 0.73, D(G(z)): 0.13

Epoch [52/200], Step [400/600], d_loss: 0.4611, g_loss: 2.3535, D(x): 0.88, D(G(z)): 0.20

Epoch [52/200], Step [600/600], d_loss: 0.5158, g_loss: 2.3248, D(x): 0.85, D(G(z)): 0.20

Epoch [53/200], Step [200/600], d_loss: 0.5410, g_loss: 2.3491, D(x): 0.82, D(G(z)): 0.17

Epoch [53/200], Step [400/600], d_loss: 0.6135, g_loss: 2.0376, D(x): 0.79, D(G(z)): 0.19

Epoch [53/200], Step [600/600], d_loss: 0.6995, g_loss: 3.0768, D(x): 0.76, D(G(z)): 0.15

Epoch [54/200], Step [200/600], d_loss: 0.7788, g_loss: 2.3476, D(x): 0.84, D(G(z)): 0.32

Epoch [54/200], Step [400/600], d_loss: 0.6057, g_loss: 2.6538, D(x): 0.78, D(G(z)): 0.16

Epoch [54/200], Step [600/600], d_loss: 0.6524, g_loss: 1.9913, D(x): 0.83, D(G(z)): 0.26

Epoch [55/200], Step [200/600], d_loss: 0.4699, g_loss: 3.0903, D(x): 0.88, D(G(z)): 0.20

Epoch [55/200], Step [400/600], d_loss: 0.6438, g_loss: 2.4830, D(x): 0.77, D(G(z)): 0.18

Epoch [55/200], Step [600/600], d_loss: 0.8641, g_loss: 1.5713, D(x): 0.80, D(G(z)): 0.32

Epoch [56/200], Step [200/600], d_loss: 0.5685, g_loss: 1.8569, D(x): 0.78, D(G(z)): 0.17

Epoch [56/200], Step [400/600], d_loss: 0.4462, g_loss: 2.5452, D(x): 0.82, D(G(z)): 0.13

Epoch [56/200], Step [600/600], d_loss: 0.5907, g_loss: 2.4652, D(x): 0.75, D(G(z)): 0.12

Epoch [57/200], Step [200/600], d_loss: 0.4843, g_loss: 3.0264, D(x): 0.83, D(G(z)): 0.16

Epoch [57/200], Step [400/600], d_loss: 0.5594, g_loss: 2.5355, D(x): 0.82, D(G(z)): 0.20

Epoch [57/200], Step [600/600], d_loss: 0.5502, g_loss: 2.2562, D(x): 0.80, D(G(z)): 0.15

Epoch [58/200], Step [200/600], d_loss: 0.6652, g_loss: 2.0351, D(x): 0.82, D(G(z)): 0.24

Epoch [58/200], Step [400/600], d_loss: 0.4033, g_loss: 2.9153, D(x): 0.90, D(G(z)): 0.20

Epoch [58/200], Step [600/600], d_loss: 0.6348, g_loss: 2.1119, D(x): 0.88, D(G(z)): 0.30

Epoch [59/200], Step [200/600], d_loss: 0.6415, g_loss: 3.1282, D(x): 0.81, D(G(z)): 0.20

Epoch [59/200], Step [400/600], d_loss: 0.5136, g_loss: 3.3376, D(x): 0.82, D(G(z)): 0.17

Epoch [59/200], Step [600/600], d_loss: 0.6462, g_loss: 2.3404, D(x): 0.76, D(G(z)): 0.16

Epoch [60/200], Step [200/600], d_loss: 0.5160, g_loss: 2.9145, D(x): 0.80, D(G(z)): 0.14

Epoch [60/200], Step [400/600], d_loss: 0.7120, g_loss: 2.6986, D(x): 0.79, D(G(z)): 0.27

Epoch [60/200], Step [600/600], d_loss: 0.4580, g_loss: 2.8799, D(x): 0.88, D(G(z)): 0.17

Epoch [61/200], Step [200/600], d_loss: 0.5593, g_loss: 2.7334, D(x): 0.77, D(G(z)): 0.13

Epoch [61/200], Step [400/600], d_loss: 0.7277, g_loss: 2.6792, D(x): 0.90, D(G(z)): 0.30

Epoch [61/200], Step [600/600], d_loss: 0.7283, g_loss: 1.6875, D(x): 0.68, D(G(z)): 0.11

Epoch [62/200], Step [200/600], d_loss: 0.3957, g_loss: 3.4459, D(x): 0.89, D(G(z)): 0.18

Epoch [62/200], Step [400/600], d_loss: 0.5846, g_loss: 1.6489, D(x): 0.81, D(G(z)): 0.17

Epoch [62/200], Step [600/600], d_loss: 0.7358, g_loss: 2.3752, D(x): 0.82, D(G(z)): 0.28

Epoch [63/200], Step [200/600], d_loss: 0.5577, g_loss: 2.9186, D(x): 0.87, D(G(z)): 0.21

Epoch [63/200], Step [400/600], d_loss: 0.6466, g_loss: 2.4374, D(x): 0.85, D(G(z)): 0.27

Epoch [63/200], Step [600/600], d_loss: 0.6891, g_loss: 2.2632, D(x): 0.84, D(G(z)): 0.30

Epoch [64/200], Step [200/600], d_loss: 0.8519, g_loss: 2.3878, D(x): 0.65, D(G(z)): 0.12

Epoch [64/200], Step [400/600], d_loss: 0.6176, g_loss: 2.4059, D(x): 0.81, D(G(z)): 0.20

Epoch [64/200], Step [600/600], d_loss: 0.8032, g_loss: 3.1006, D(x): 0.68, D(G(z)): 0.12

Epoch [65/200], Step [200/600], d_loss: 0.5564, g_loss: 2.9973, D(x): 0.77, D(G(z)): 0.14

Epoch [65/200], Step [400/600], d_loss: 0.7254, g_loss: 2.0362, D(x): 0.81, D(G(z)): 0.24

Epoch [65/200], Step [600/600], d_loss: 0.5845, g_loss: 2.7048, D(x): 0.75, D(G(z)): 0.11

Epoch [66/200], Step [200/600], d_loss: 0.6310, g_loss: 2.9230, D(x): 0.79, D(G(z)): 0.20

Epoch [66/200], Step [400/600], d_loss: 0.5095, g_loss: 2.3467, D(x): 0.77, D(G(z)): 0.13

Epoch [66/200], Step [600/600], d_loss: 0.5869, g_loss: 2.3648, D(x): 0.74, D(G(z)): 0.13

Epoch [67/200], Step [200/600], d_loss: 0.7529, g_loss: 2.2525, D(x): 0.72, D(G(z)): 0.15

Epoch [67/200], Step [400/600], d_loss: 0.6669, g_loss: 2.1734, D(x): 0.76, D(G(z)): 0.19

Epoch [67/200], Step [600/600], d_loss: 0.6977, g_loss: 2.0799, D(x): 0.77, D(G(z)): 0.20

Epoch [68/200], Step [200/600], d_loss: 0.5344, g_loss: 2.2810, D(x): 0.85, D(G(z)): 0.21

Epoch [68/200], Step [400/600], d_loss: 0.7502, g_loss: 2.7689, D(x): 0.73, D(G(z)): 0.21

Epoch [68/200], Step [600/600], d_loss: 0.8459, g_loss: 2.0199, D(x): 0.84, D(G(z)): 0.36

Epoch [69/200], Step [200/600], d_loss: 0.5745, g_loss: 2.1853, D(x): 0.78, D(G(z)): 0.18

Epoch [69/200], Step [400/600], d_loss: 0.7515, g_loss: 2.3225, D(x): 0.77, D(G(z)): 0.25

Epoch [69/200], Step [600/600], d_loss: 0.7967, g_loss: 1.8125, D(x): 0.74, D(G(z)): 0.22

Epoch [70/200], Step [200/600], d_loss: 0.7456, g_loss: 2.2358, D(x): 0.81, D(G(z)): 0.27

Epoch [70/200], Step [400/600], d_loss: 0.6154, g_loss: 2.4855, D(x): 0.81, D(G(z)): 0.24

Epoch [70/200], Step [600/600], d_loss: 0.5187, g_loss: 2.1255, D(x): 0.85, D(G(z)): 0.22

Epoch [71/200], Step [200/600], d_loss: 0.5937, g_loss: 3.1329, D(x): 0.80, D(G(z)): 0.19

Epoch [71/200], Step [400/600], d_loss: 0.5692, g_loss: 2.5442, D(x): 0.79, D(G(z)): 0.19

Epoch [71/200], Step [600/600], d_loss: 0.4669, g_loss: 2.8289, D(x): 0.84, D(G(z)): 0.18

Epoch [72/200], Step [200/600], d_loss: 0.5994, g_loss: 2.6761, D(x): 0.82, D(G(z)): 0.20

Epoch [72/200], Step [400/600], d_loss: 0.4832, g_loss: 2.6731, D(x): 0.83, D(G(z)): 0.17

Epoch [72/200], Step [600/600], d_loss: 0.5769, g_loss: 2.7867, D(x): 0.78, D(G(z)): 0.17

Epoch [73/200], Step [200/600], d_loss: 0.6073, g_loss: 2.0403, D(x): 0.79, D(G(z)): 0.17

Epoch [73/200], Step [400/600], d_loss: 0.7357, g_loss: 2.4262, D(x): 0.74, D(G(z)): 0.20

Epoch [73/200], Step [600/600], d_loss: 0.5897, g_loss: 2.4739, D(x): 0.82, D(G(z)): 0.21

Epoch [74/200], Step [200/600], d_loss: 0.6338, g_loss: 2.2802, D(x): 0.80, D(G(z)): 0.23

Epoch [74/200], Step [400/600], d_loss: 0.5724, g_loss: 2.7530, D(x): 0.84, D(G(z)): 0.23

Epoch [74/200], Step [600/600], d_loss: 0.6505, g_loss: 2.5929, D(x): 0.78, D(G(z)): 0.18

Epoch [75/200], Step [200/600], d_loss: 0.6580, g_loss: 2.5763, D(x): 0.85, D(G(z)): 0.28

Epoch [75/200], Step [400/600], d_loss: 0.6089, g_loss: 2.0517, D(x): 0.81, D(G(z)): 0.22

Epoch [75/200], Step [600/600], d_loss: 0.6480, g_loss: 2.3134, D(x): 0.83, D(G(z)): 0.27

Epoch [76/200], Step [200/600], d_loss: 0.8040, g_loss: 2.1533, D(x): 0.77, D(G(z)): 0.28

Epoch [76/200], Step [400/600], d_loss: 0.7031, g_loss: 2.7626, D(x): 0.79, D(G(z)): 0.24

Epoch [76/200], Step [600/600], d_loss: 0.7798, g_loss: 1.9259, D(x): 0.77, D(G(z)): 0.26

Epoch [77/200], Step [200/600], d_loss: 0.6174, g_loss: 2.2541, D(x): 0.76, D(G(z)): 0.16

Epoch [77/200], Step [400/600], d_loss: 0.7185, g_loss: 1.5864, D(x): 0.78, D(G(z)): 0.22

Epoch [77/200], Step [600/600], d_loss: 0.6941, g_loss: 2.3483, D(x): 0.82, D(G(z)): 0.27

Epoch [78/200], Step [200/600], d_loss: 0.8584, g_loss: 2.3806, D(x): 0.72, D(G(z)): 0.22

Epoch [78/200], Step [400/600], d_loss: 0.6060, g_loss: 1.8562, D(x): 0.83, D(G(z)): 0.24

Epoch [78/200], Step [600/600], d_loss: 0.7914, g_loss: 2.5783, D(x): 0.82, D(G(z)): 0.32

Epoch [79/200], Step [200/600], d_loss: 0.7219, g_loss: 2.3257, D(x): 0.73, D(G(z)): 0.20

Epoch [79/200], Step [400/600], d_loss: 0.7538, g_loss: 2.2944, D(x): 0.78, D(G(z)): 0.27

Epoch [79/200], Step [600/600], d_loss: 0.6531, g_loss: 2.0533, D(x): 0.80, D(G(z)): 0.24

Epoch [80/200], Step [200/600], d_loss: 0.9207, g_loss: 1.8896, D(x): 0.64, D(G(z)): 0.16

Epoch [80/200], Step [400/600], d_loss: 0.7419, g_loss: 2.2362, D(x): 0.69, D(G(z)): 0.17

Epoch [80/200], Step [600/600], d_loss: 0.5812, g_loss: 2.3372, D(x): 0.76, D(G(z)): 0.14

Epoch [81/200], Step [200/600], d_loss: 0.5252, g_loss: 2.2365, D(x): 0.78, D(G(z)): 0.17

Epoch [81/200], Step [400/600], d_loss: 0.7609, g_loss: 2.1495, D(x): 0.75, D(G(z)): 0.26

Epoch [81/200], Step [600/600], d_loss: 0.7870, g_loss: 2.3520, D(x): 0.75, D(G(z)): 0.26

Epoch [82/200], Step [200/600], d_loss: 0.7311, g_loss: 2.2137, D(x): 0.78, D(G(z)): 0.27

Epoch [82/200], Step [400/600], d_loss: 0.6972, g_loss: 1.7540, D(x): 0.77, D(G(z)): 0.26

Epoch [82/200], Step [600/600], d_loss: 0.8349, g_loss: 1.7994, D(x): 0.84, D(G(z)): 0.34

Epoch [83/200], Step [200/600], d_loss: 0.8138, g_loss: 2.1877, D(x): 0.80, D(G(z)): 0.32

Epoch [83/200], Step [400/600], d_loss: 0.7913, g_loss: 2.0897, D(x): 0.72, D(G(z)): 0.23

Epoch [83/200], Step [600/600], d_loss: 0.9098, g_loss: 1.5447, D(x): 0.66, D(G(z)): 0.23

Epoch [84/200], Step [200/600], d_loss: 0.8892, g_loss: 1.7672, D(x): 0.73, D(G(z)): 0.30

Epoch [84/200], Step [400/600], d_loss: 0.5531, g_loss: 2.2540, D(x): 0.80, D(G(z)): 0.21

Epoch [84/200], Step [600/600], d_loss: 0.8780, g_loss: 2.0353, D(x): 0.79, D(G(z)): 0.35

Epoch [85/200], Step [200/600], d_loss: 0.6664, g_loss: 2.4569, D(x): 0.83, D(G(z)): 0.25

Epoch [85/200], Step [400/600], d_loss: 0.9369, g_loss: 1.9261, D(x): 0.72, D(G(z)): 0.30

Epoch [85/200], Step [600/600], d_loss: 0.8626, g_loss: 1.3774, D(x): 0.74, D(G(z)): 0.30

Epoch [86/200], Step [200/600], d_loss: 0.8138, g_loss: 2.2834, D(x): 0.72, D(G(z)): 0.24

Epoch [86/200], Step [400/600], d_loss: 0.9225, g_loss: 2.0189, D(x): 0.70, D(G(z)): 0.29

Epoch [86/200], Step [600/600], d_loss: 0.7091, g_loss: 2.5431, D(x): 0.75, D(G(z)): 0.22

Epoch [87/200], Step [200/600], d_loss: 0.7513, g_loss: 1.6684, D(x): 0.72, D(G(z)): 0.23

Epoch [87/200], Step [400/600], d_loss: 0.7172, g_loss: 1.7539, D(x): 0.82, D(G(z)): 0.30

Epoch [87/200], Step [600/600], d_loss: 1.0858, g_loss: 2.5704, D(x): 0.64, D(G(z)): 0.24

Epoch [88/200], Step [200/600], d_loss: 0.8175, g_loss: 2.5280, D(x): 0.74, D(G(z)): 0.25

Epoch [88/200], Step [400/600], d_loss: 0.7610, g_loss: 1.8840, D(x): 0.78, D(G(z)): 0.28

Epoch [88/200], Step [600/600], d_loss: 0.7132, g_loss: 2.2979, D(x): 0.72, D(G(z)): 0.18

Epoch [89/200], Step [200/600], d_loss: 0.8786, g_loss: 1.6000, D(x): 0.72, D(G(z)): 0.25

Epoch [89/200], Step [400/600], d_loss: 0.7933, g_loss: 1.9502, D(x): 0.71, D(G(z)): 0.23

Epoch [89/200], Step [600/600], d_loss: 0.5541, g_loss: 2.7170, D(x): 0.81, D(G(z)): 0.19

Epoch [90/200], Step [200/600], d_loss: 0.9670, g_loss: 1.5065, D(x): 0.64, D(G(z)): 0.23

Epoch [90/200], Step [400/600], d_loss: 0.8945, g_loss: 1.5057, D(x): 0.74, D(G(z)): 0.32

Epoch [90/200], Step [600/600], d_loss: 0.8275, g_loss: 1.9291, D(x): 0.66, D(G(z)): 0.20

Epoch [91/200], Step [200/600], d_loss: 0.7411, g_loss: 2.1024, D(x): 0.75, D(G(z)): 0.24

Epoch [91/200], Step [400/600], d_loss: 0.8613, g_loss: 1.7976, D(x): 0.72, D(G(z)): 0.27

Epoch [91/200], Step [600/600], d_loss: 0.9204, g_loss: 1.9358, D(x): 0.81, D(G(z)): 0.38

Epoch [92/200], Step [200/600], d_loss: 0.5769, g_loss: 1.8164, D(x): 0.85, D(G(z)): 0.26

Epoch [92/200], Step [400/600], d_loss: 0.8222, g_loss: 1.5064, D(x): 0.73, D(G(z)): 0.26

Epoch [92/200], Step [600/600], d_loss: 0.5844, g_loss: 2.5825, D(x): 0.78, D(G(z)): 0.20

Epoch [93/200], Step [200/600], d_loss: 0.6836, g_loss: 1.9087, D(x): 0.74, D(G(z)): 0.22

Epoch [93/200], Step [400/600], d_loss: 0.7328, g_loss: 1.7869, D(x): 0.72, D(G(z)): 0.22

Epoch [93/200], Step [600/600], d_loss: 0.7112, g_loss: 1.6405, D(x): 0.79, D(G(z)): 0.28

Epoch [94/200], Step [200/600], d_loss: 0.7915, g_loss: 1.7422, D(x): 0.71, D(G(z)): 0.24

Epoch [94/200], Step [400/600], d_loss: 0.7935, g_loss: 1.4080, D(x): 0.85, D(G(z)): 0.36

Epoch [94/200], Step [600/600], d_loss: 1.0396, g_loss: 1.4284, D(x): 0.76, D(G(z)): 0.35

Epoch [95/200], Step [200/600], d_loss: 0.7436, g_loss: 2.2755, D(x): 0.73, D(G(z)): 0.18

Epoch [95/200], Step [400/600], d_loss: 0.8688, g_loss: 1.8874, D(x): 0.76, D(G(z)): 0.30

Epoch [95/200], Step [600/600], d_loss: 0.8444, g_loss: 2.3308, D(x): 0.66, D(G(z)): 0.19

Epoch [96/200], Step [200/600], d_loss: 0.7442, g_loss: 2.3771, D(x): 0.73, D(G(z)): 0.22

Epoch [96/200], Step [400/600], d_loss: 0.7074, g_loss: 2.2321, D(x): 0.74, D(G(z)): 0.18

Epoch [96/200], Step [600/600], d_loss: 0.8868, g_loss: 1.4519, D(x): 0.67, D(G(z)): 0.21

Epoch [97/200], Step [200/600], d_loss: 0.8345, g_loss: 1.6682, D(x): 0.72, D(G(z)): 0.28

Epoch [97/200], Step [400/600], d_loss: 0.7934, g_loss: 2.1283, D(x): 0.68, D(G(z)): 0.17

Epoch [97/200], Step [600/600], d_loss: 0.7260, g_loss: 1.4867, D(x): 0.76, D(G(z)): 0.25

Epoch [98/200], Step [200/600], d_loss: 0.7596, g_loss: 2.1651, D(x): 0.76, D(G(z)): 0.24

Epoch [98/200], Step [400/600], d_loss: 0.8643, g_loss: 1.9000, D(x): 0.69, D(G(z)): 0.24

Epoch [98/200], Step [600/600], d_loss: 0.7319, g_loss: 1.6552, D(x): 0.75, D(G(z)): 0.25

Epoch [99/200], Step [200/600], d_loss: 0.7711, g_loss: 1.7455, D(x): 0.69, D(G(z)): 0.20

Epoch [99/200], Step [400/600], d_loss: 0.6971, g_loss: 2.1426, D(x): 0.80, D(G(z)): 0.25

Epoch [99/200], Step [600/600], d_loss: 0.8339, g_loss: 2.5612, D(x): 0.68, D(G(z)): 0.23

Epoch [100/200], Step [200/600], d_loss: 0.9574, g_loss: 1.6630, D(x): 0.65, D(G(z)): 0.26

Epoch [100/200], Step [400/600], d_loss: 0.9069, g_loss: 1.6404, D(x): 0.65, D(G(z)): 0.23

Epoch [100/200], Step [600/600], d_loss: 0.7358, g_loss: 1.6987, D(x): 0.74, D(G(z)): 0.24

Epoch [101/200], Step [200/600], d_loss: 0.8096, g_loss: 1.5011, D(x): 0.73, D(G(z)): 0.26

Epoch [101/200], Step [400/600], d_loss: 0.9509, g_loss: 1.5224, D(x): 0.75, D(G(z)): 0.32

Epoch [101/200], Step [600/600], d_loss: 1.0163, g_loss: 1.7723, D(x): 0.76, D(G(z)): 0.41

Epoch [102/200], Step [200/600], d_loss: 0.9929, g_loss: 1.3493, D(x): 0.73, D(G(z)): 0.37

Epoch [102/200], Step [400/600], d_loss: 0.8234, g_loss: 2.2110, D(x): 0.67, D(G(z)): 0.19

Epoch [102/200], Step [600/600], d_loss: 0.6964, g_loss: 2.1019, D(x): 0.74, D(G(z)): 0.21

对分别对生成器和判别器的模型进行保存

# Save the model checkpoints

torch.save(G.state_dict(), 'G.ckpt')

torch.save(D.state_dict(), 'D.ckpt')

训练结果的展示

一开提供的minist的真实数据的图片

通过迭代1次过后生成器生成的图片

通过迭代10次过后生成器生成的图片

通过迭代50次过后生成器生成的图片

通过迭代103次过后生成器生成的图片

我们可以看到,随着迭代次数的增加,我们的生产起生成的图像逐渐的与minist数据的提供的数据图像相类似。

相关文章:

GAN生成对抗模型根据minist数据集生成手写数字图片

文章目录 1.项目介绍2相关网站3具体的代码及结果导入工具包设置超参数定义优化器,以及损失函数训练时的迭代过程训练结果的展示 1.项目介绍 通过用minist数据集进行训练,得到一个GAN模型,可以生成与minist数据集类似的图片。 GAN是一种生成模…...

【K8S源码之Pod漂移】整体概况分析 controller-manager 中的 nodelifecycle controller(Pod的驱逐)

参考 k8s 污点驱逐详解-源码分析 - 掘金 k8s驱逐篇(5)-kube-controller-manager驱逐 - 良凯尔 - 博客园 k8s驱逐篇(6)-kube-controller-manager驱逐-NodeLifecycleController源码分析 - 良凯尔 - 博客园 k8s驱逐篇(7)-kube-controller-manager驱逐-taintManager源码分析 - 良…...

[保研/考研机试] KY212 二叉树遍历 华中科技大学复试上机题 C++实现

题目链接: 二叉树遍历_牛客题霸_牛客网二叉树的前序、中序、后序遍历的定义: 前序遍历:对任一子树,先访问根,然后遍历其左子树,最。题目来自【牛客题霸】https://www.nowcoder.com/share/jump/43719512169…...

CSS笔记

介绍 CSS导入方式 三种方法都将文字设置成了红色 CSS选择器 元素选择器 id选择器 图中div将颜色控制为红色,#name将颜色控制为蓝色,谁控制的范围最小,谁就生效,所以第二个div是蓝色的。id属性值要唯一,否则报错。 clas…...

链栈Link-Stack

0、节点结构体定义 typedef struct SNode{int data;struct SNode *next; } SNode, *LinkStack; 1、初始化 bool InitStack(LinkStack &S) //S为栈顶指针(存数据的头节点) {S NULL;return true; } 2、入栈 bool Push(LinkStack &S, int e) {…...

Ubuntu 20系统WIFI设置静态IP地址,以及断连问题

最近工作需要购置了一台GPU机器,然后搭建了深度学习的运行环境,在工作中将这台机器当做深度学习的服务器来使用,前期已经配置好多用户以及基础环境。但最近通过xshell连接总是不间断的出现断连现象。 补充一点,Ubuntu系统中与网…...

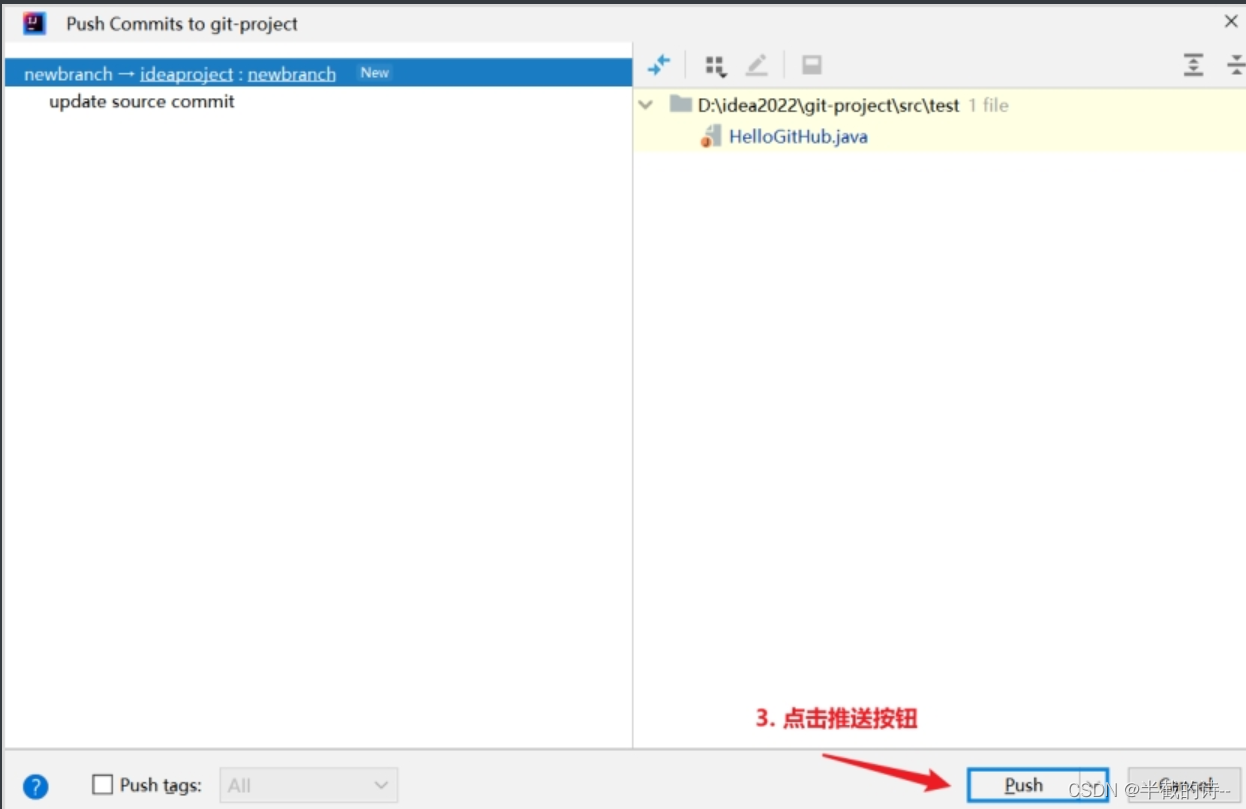

(一)idea连接GitHub的全部流程(注册GitHub、idea集成GitHub、增加合作伙伴、跨团队合作、分支操作)

(二)Git在公司中团队内合作和跨团队合作和分支操作的全部流程(一篇就够)https://blog.csdn.net/m0_65992672/article/details/132336481 4.1、简介 Git是一个免费的、开源的*分布式**版本控制**系统*,可以快速高效地…...

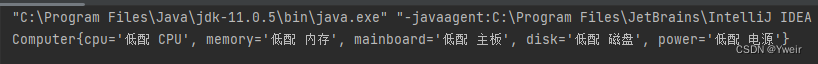

-bash: java: command not found笔记

文章目录 场景解决方案找java的方法find命令进行查找根据java进程找寻具体位置 场景 linux系统执行java命令时报错: -bash: java: command not found。 解决方案 可能是没有安装java(这种情况比较少)或者安装了java但是没有设置环境变量(一般是这种情况)。 找ja…...

C++ typename and .template

https://makecleanandmake.com/2015/07/20/leading-typename-dot-template-and-why-they-are-necessary/ typename Obj<T>::type var;v.template m<int>();...

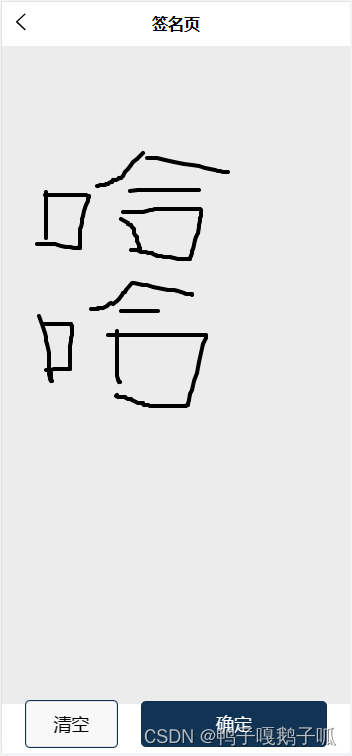

uniapp,使用canvas制作一个签名版

先看效果图 我把这个做成了页面,没有做成组件,因为之前我是配合uview-plus的popup弹出层使用的,这种组件好像是没有生命周期的,第一次打开弹出层可以正常写字,但是关闭之后再打开就不会显示绘制的线条了,还…...

【大数据】Flink 详解(五):核心篇 Ⅳ

Flink 详解(五):核心篇 Ⅳ 45、Flink 广播机制了解吗? 从图中可以理解 广播 就是一个公共的共享变量,广播变量存于 TaskManager 的内存中,所以广播变量不应该太大,将一个数据集广播后࿰…...

设计模式-建造者模式

核心思想 抽取共同的行为,允许使用者指定复杂对象的类型和内容,不需要了解内部的构建细节使用多个简单的行为构建一个复杂的对象,将对象的构建过程和它的表示分离,同样的构建过程可以创建不同的表示 优缺点 优点 使用者不需要知…...

flutter 设置app图标

使用插件 flutter_launcher_icons 在 pubspec.yaml 配置文件中 加入 dev_dependencies dev_dependencies: flutter_launcher_icons: "^0.13.1" 准备好app得 icon 图标 其中icon的名字为icon.png 创建assets文件夹 和子文件夹icon iamge 配置静态资源路径 完整配置…...

守护网络安全:深入了解DDOS攻击防护手段

ddos攻击防护手段有哪些?在数字化快速发展的时代,网络安全问题日益凸显,其中分布式拒绝服务(DDOS)攻击尤为引人关注。这种攻击通过向目标网站或服务器发送大量合法或非法的请求,旨在使目标资源无法正常处理其他用户的请求,从而达…...

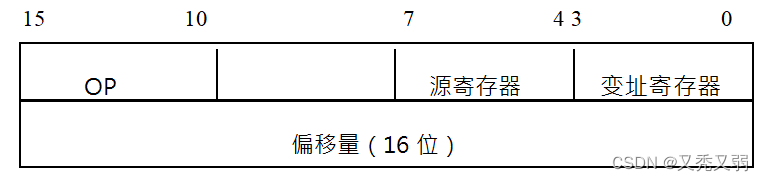

计组 | 寻址方式

目录 一、知识点 1.寻址方式什么? 2.根据操作数所在的位置,都有哪些寻址方式? 3.直接寻址 4.立即寻址 5.隐含寻址 6.相对寻址 7.寄存器 8.寄存器-寄存器型(RR)、寄存器-存储器型(RS)和…...

matlab工具箱Filter Designer设计butterworth带通滤波器

1、在matlab控制界面输入fdatool; 2、在显示的界面中选择合适的参数;本实验中采样频率是200,低通30hz,高通60hz,点击butterworth滤波器。 3、点击设计滤波器按钮后,在生成的界面点击红框按钮,可生成simulink模型到当前…...

)

Python学习笔记第六十天(Matplotlib Pyplot)

Python学习笔记第六十天 Matplotlib Pyplot后记 Matplotlib Pyplot Pyplot 是 Matplotlib 的子库,提供了和 MATLAB 类似的绘图 API。 Pyplot 是常用的绘图模块,能很方便让用户绘制 2D 图表。 Pyplot 包含一系列绘图函数的相关函数,每个函数…...

服务器自动备份、打包、传输脚本

备份脚本 #!/bin/bash #author cheng #备份服务器自动打包归档每天的备份文件 Path/backhistory Host$(hostname) Date$(date %F) Dest${Host}_${Date}#创建目录 mkdir -p ${Path}/${Dest}#打包文件到目录 cd / && \#结合autoback.sh脚本,它往那个地方备&a…...

Docker 的数据管理 网络通信

目录 1.管理容器数据的方式 数据卷 数据卷的容器 2.操作命令 3.Docker 镜像的创建 1.管理容器数据的方式 数据卷 可以独立于容器生命周期存储的机制 可提供持久化 数据共享 docker run -v /var/www:/data1 --name web1 -it centos:7 /bin/bash 数据卷的容器 用来提供持久化数…...

目标检测YOLO实战应用案例100讲-基于孤立森林算法的高光谱遥感图像异常目标检测

目录 前言 孤立森林算法的基本理论 2.1 引言 2.2 孤立森林算法的基本思想...

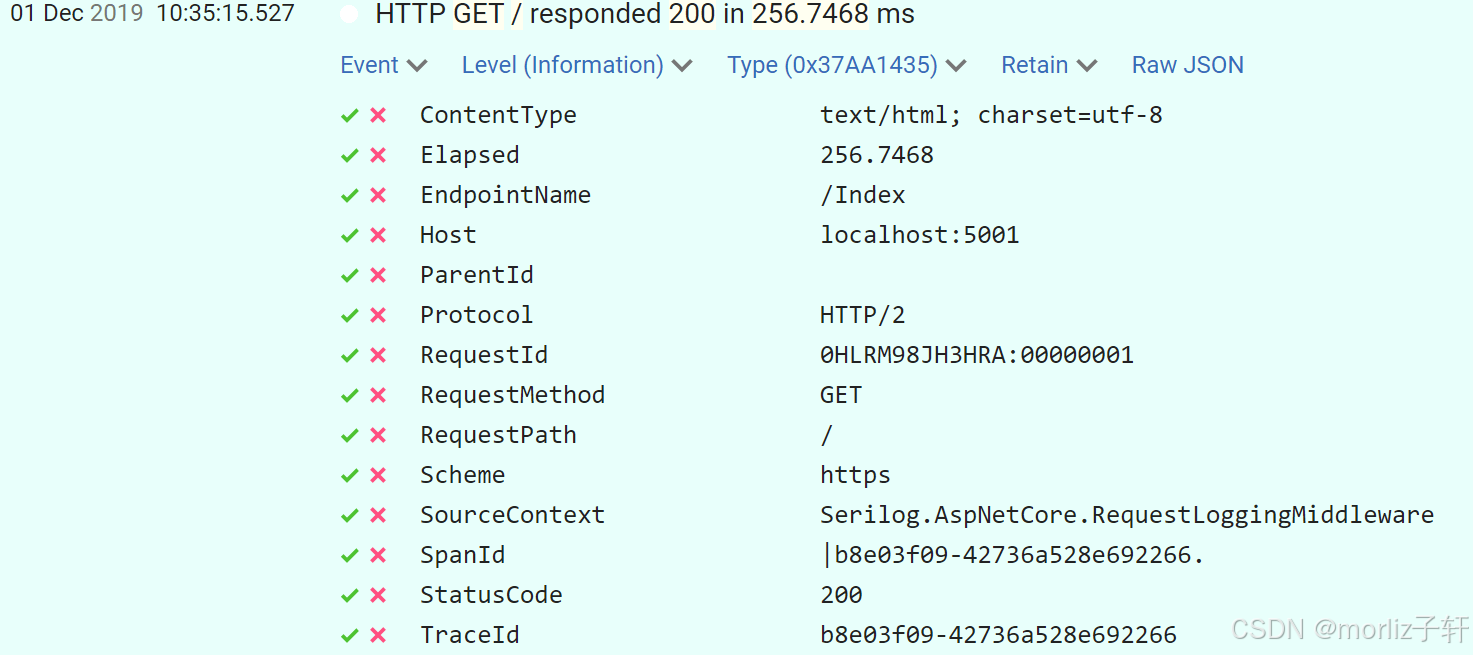

深入浅出Asp.Net Core MVC应用开发系列-AspNetCore中的日志记录

ASP.NET Core 是一个跨平台的开源框架,用于在 Windows、macOS 或 Linux 上生成基于云的新式 Web 应用。 ASP.NET Core 中的日志记录 .NET 通过 ILogger API 支持高性能结构化日志记录,以帮助监视应用程序行为和诊断问题。 可以通过配置不同的记录提供程…...

边缘计算医疗风险自查APP开发方案

核心目标:在便携设备(智能手表/家用检测仪)部署轻量化疾病预测模型,实现低延迟、隐私安全的实时健康风险评估。 一、技术架构设计 #mermaid-svg-iuNaeeLK2YoFKfao {font-family:"trebuchet ms",verdana,arial,sans-serif;font-size:16px;fill:#333;}#mermaid-svg…...

服务器硬防的应用场景都有哪些?

服务器硬防是指一种通过硬件设备层面的安全措施来防御服务器系统受到网络攻击的方式,避免服务器受到各种恶意攻击和网络威胁,那么,服务器硬防通常都会应用在哪些场景当中呢? 硬防服务器中一般会配备入侵检测系统和预防系统&#x…...

渲染学进阶内容——模型

最近在写模组的时候发现渲染器里面离不开模型的定义,在渲染的第二篇文章中简单的讲解了一下关于模型部分的内容,其实不管是方块还是方块实体,都离不开模型的内容 🧱 一、CubeListBuilder 功能解析 CubeListBuilder 是 Minecraft Java 版模型系统的核心构建器,用于动态创…...

MVC 数据库

MVC 数据库 引言 在软件开发领域,Model-View-Controller(MVC)是一种流行的软件架构模式,它将应用程序分为三个核心组件:模型(Model)、视图(View)和控制器(Controller)。这种模式有助于提高代码的可维护性和可扩展性。本文将深入探讨MVC架构与数据库之间的关系,以…...

)

OpenLayers 分屏对比(地图联动)

注:当前使用的是 ol 5.3.0 版本,天地图使用的key请到天地图官网申请,并替换为自己的key 地图分屏对比在WebGIS开发中是很常见的功能,和卷帘图层不一样的是,分屏对比是在各个地图中添加相同或者不同的图层进行对比查看。…...

Hive 存储格式深度解析:从 TextFile 到 ORC,如何选对数据存储方案?

在大数据处理领域,Hive 作为 Hadoop 生态中重要的数据仓库工具,其存储格式的选择直接影响数据存储成本、查询效率和计算资源消耗。面对 TextFile、SequenceFile、Parquet、RCFile、ORC 等多种存储格式,很多开发者常常陷入选择困境。本文将从底…...

解决:Android studio 编译后报错\app\src\main\cpp\CMakeLists.txt‘ to exist

现象: android studio报错: [CXX1409] D:\GitLab\xxxxx\app.cxx\Debug\3f3w4y1i\arm64-v8a\android_gradle_build.json : expected buildFiles file ‘D:\GitLab\xxxxx\app\src\main\cpp\CMakeLists.txt’ to exist 解决: 不要动CMakeLists.…...

Proxmox Mail Gateway安装指南:从零开始配置高效邮件过滤系统

💝💝💝欢迎莅临我的博客,很高兴能够在这里和您见面!希望您在这里可以感受到一份轻松愉快的氛围,不仅可以获得有趣的内容和知识,也可以畅所欲言、分享您的想法和见解。 推荐:「storms…...

WebRTC调研

WebRTC是什么,为什么,如何使用 WebRTC有什么优势 WebRTC Architecture Amazon KVS WebRTC 其它厂商WebRTC 海康门禁WebRTC 海康门禁其他界面整理 威视通WebRTC 局域网 Google浏览器 Microsoft Edge 公网 RTSP RTMP NVR ONVIF SIP SRT WebRTC协…...