Celery Distributed Asynchronous Task Queue (4.4.7)

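Table of contents

- Introduction to Celery

- Compatibility

- Basic usage

- Installation

- Usage

- Features

- Common examples

- Getting a task's return value

- Using logging in tasks

- Defining a task base class

- Task callbacks

- Task tracking and retry on failure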


Study notes for version 4.4.7

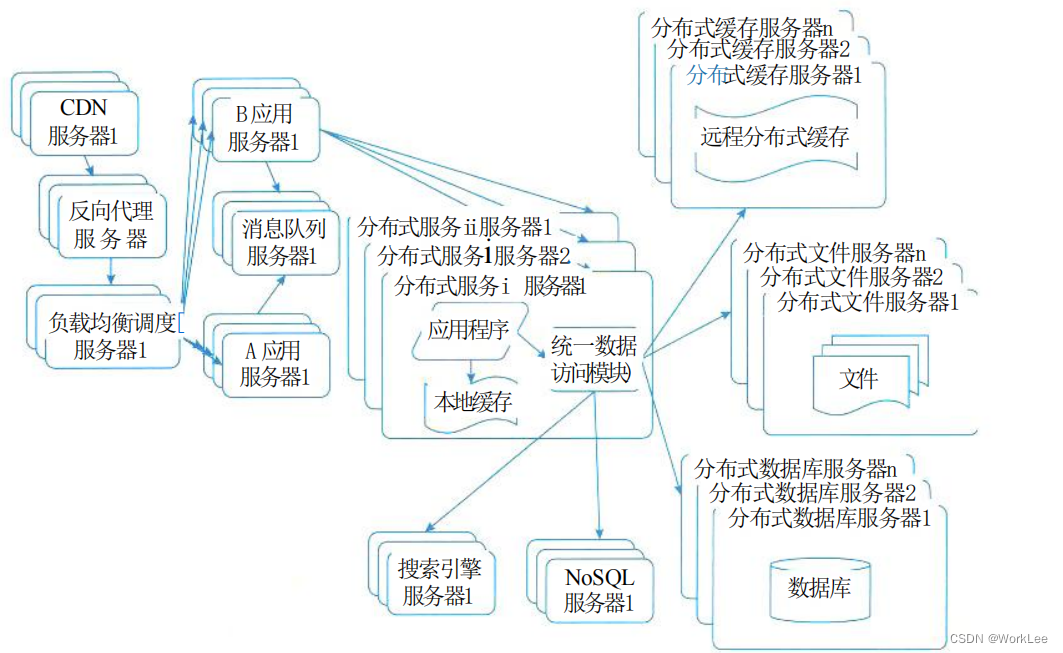

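Introduction to Celery

- An open-source distributed task queue implemented in Python and released under the BSD license.

- A simple, flexible and reliable distributed system that processes large volumes of messages, focused on real-time task processing and on scheduled execution.

- It is a mechanism for distributing work across threads and processes. Officially, only Linux is supported.

- It has five main parts:

  - task: a unit of work

  - beat: the scheduler for periodic tasks

  - broker: the intermediary that stores and forwards messages (for example RabbitMQ or Redis)

  - worker: the process that executes tasks

  - backend: the store for task results (for example Redis or MongoDB)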

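Compatibility

Celery version 4.0 runs on Python (2.7, 3.4, 3.5).

Celery 5.x drops Python 2. The 4.x documentation states that Python 3.5 or newer is required; the 5.0 release itself requires Python 3.6 or newer.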

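Basic usage

Installation

- Install with pip

# Officially only Linux is supported (for production); Windows also works (for testing)

pip3 install -U celery # -U upgrades celery to the latest version

- Install from source

Download the source archive, then:

$ tar -zxvf celery-0.0.0.tar.gz

$ cd celery-0.0.0

$ python setup.py build

$ python setup.py install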

Usage

See the django-celery usage notes.

Celery is a project with minimal funding, so we don’t support Microsoft Windows. Please don’t open any issues related to that platform.

Features

Brokers (message middleware)

RabbitMQ, Redis, Amazon SQS, and more…

Concurrency

prefork (multiprocessing),

eventlet, gevent (coroutine-based concurrency)

thread (multithreaded)

solo (single threaded)

Result Stores

AMQP, Redis

Memcached,

SQLAlchemy, Django ORM

Elasticsearch, Riak

MongoDB, CouchDB, Couchbase, ArangoDB

Amazon DynamoDB, Amazon S3

Serialization

pickle, json, yaml, msgpack.

zlib, bzip2 compression.

Monitoring

Monitor what the worker processes are doing.

Work-flows

including grouping, chaining, chunking, and more.

Time & Rate Limits

How many tasks may run within a given period (rate limit);

How long each task may run (time limit).

Scheduling

Specify when a task runs, including periodic execution on a schedule.

Resource Leak Protection

The --max-tasks-per-child option is used for user tasks leaking resources, like memory or file descriptors, that are simply out of your control.

User Components

Customize the worker and other components.
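Several of the features listed above are switched on through configuration settings. The following is a minimal sketch of a configuration module, assuming the lowercase setting names used since Celery 4. The module name `celeryconfig`, the task name `pkg.tasks.func1` and every numeric value are illustrative assumptions, not recommendations.

```python
# celeryconfig.py (hypothetical module name), loaded with
# app.config_from_object("celeryconfig")

# Serialization: send task messages and results as JSON.
task_serializer = "json"
result_serializer = "json"
accept_content = ["json"]

# Time limits, in seconds, applied to every task.
task_soft_time_limit = 50   # raises SoftTimeLimitExceeded inside the task
task_time_limit = 60        # hard limit: the process running the task is killed

# Rate limit for one task, addressed by its registered name (assumed name).
task_annotations = {"pkg.tasks.func1": {"rate_limit": "10/m"}}

# Resource leak protection: recycle each pool process after 100 tasks.
worker_max_tasks_per_child = 100
```

The soft limit is kept below the hard limit so that a task has a chance to clean up before it is terminated.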

常用案例

准备程序

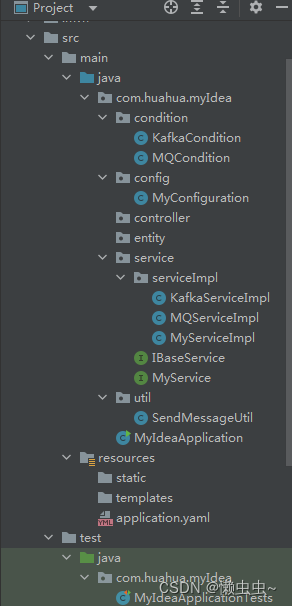

pkg/celery.py

from celery import Celery# 创建应用

app = Celery("app_name", broker="redis://@192.168.0.112:6379/0", backend="redis://@192.168.0.112:6379/1", include=["celery_pkg"])# 自动发现任务

app.autodiscover_tasks(packages=["celery_pkg"])

pkg/tasks.py

import time

from .celery import app# 定义任务

@app.task(bind=True)

def func1(task, a, b, c): # task的当前任务,一般写作self,不用自己传参n = 0while n < 3:time.sleep(3)print(n)n += 1try:raise ValueError("fail")except ValueError as e:task.retry(exc=e, throw=True, countdown=10, max_retries=3)return a + b + c@app.task(ignore_result=True) # 该任务不存储结果

def func2(name):time.sleep(2)return namefrom celery.exceptions import Ignore

@app.task(bind=True)

def func3(self, user):raise Ignore() # 让worker忽略当前任务#raise Retry() 告诉worker任务是重试

Getting a task's return value

- Submit a task with delay(p1, p2, …), which is a shortcut for apply_async((p1, p2, …), …);

- The call returns an AsyncResult instance;

- Task states are defined in celery.states:

  - PENDING: the task is waiting to run, or is unknown;

  - STARTED: the task has started (reported only when task_track_started is enabled);

  - SUCCESS: the task succeeded;

  - FAILURE: the task failed;

  - RETRY: the task is being retried;

  - REVOKED: the task was cancelled;

- Updating the state of a task

  - task.update_state(state=..., meta=...)

- Example

    @app.task(bind=True)  # bind=True passes the task instance to upload_files as self
    def upload_files(self, filenames):
        for i, file in enumerate(filenames):
            if not self.request.called_directly:
                # Update the state
                self.update_state(
                    state='PROGRESS',  # custom state
                    meta={'current': i, 'total': len(filenames)},
                )

    from pkg.tasks import func1, func2

    In [3]: async_result = func1.delay(1, 2, 3)  # run asynchronously

    In [4]: async_result
    Out[4]: <AsyncResult: f133eb52-45b9-4593-807d-7b6cab8f6e54>

    # Get the task id
    In [6]: async_result.id
    Out[6]: 'f133eb52-45b9-4593-807d-7b6cab8f6e54'

    # Execution state of the task
    In [7]: async_result.status
    Out[7]: 'FAILURE'

    In [10]: async_result.failed()
    Out[10]: True

    In [11]: async_result.successful()
    Out[11]: False

    In [12]: async_result.date_done
    Out[12]: datetime.datetime(2023, 9, 25, 14, 25, 17, 38713)

    # Get the return value of the task; this call blocks
    In [14]: async_result.get(timeout=1, propagate=False)  # do not re-raise the exception
    Out[14]: ValueError('fail')

Every AsyncResult instance must eventually have get() or forget() called on it, so that the resources used to store the result are released.
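When polling a task it helps to separate states that are final from states that may still change. The sketch below uses plain strings and does not import Celery; the set mirrors `celery.states.READY_STATES`, while the helper names `is_finished` and `describe` are hypothetical and only serve the illustration.

```python
# State names as defined in celery.states (they are plain strings).
READY_STATES = frozenset({"SUCCESS", "FAILURE", "REVOKED"})


def is_finished(state):
    """Return True when no further state change is expected."""
    return state in READY_STATES


def describe(state, meta=None):
    """Build a short, human-readable summary of a task state."""
    if state == "PROGRESS" and meta:  # custom state set via update_state()
        return "{current}/{total} files uploaded".format(**meta)
    if is_finished(state):
        return "finished with state " + state
    return "still waiting (" + state + ")"


print(describe("PENDING"))
print(describe("PROGRESS", {"current": 2, "total": 5}))
print(describe("FAILURE"))
```

A custom state such as PROGRESS is never treated as final, so a polling loop built on `is_finished` keeps waiting until the worker reports one of the three terminal states.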

Using logging in tasks

(Not covered yet.)

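Defining a task base class

    # tasks.py
    from celery import Task

    from .celery import app  # app.Task is also available


    # Define a task class
    class MyTask(Task):
        def run(self, a, b):
            print("Custom task.")
            return a + b


    # Use it as the base class
    @app.task(base=MyTask)
    def func(a, b):
        return a + b

    # Alternatively, set the base class for every task of the app:
    # app = Celery('tasks', task_cls='your.module.path:DatabaseTask')


    # Base class that caches a database connection
    class DatabaseTask(Task):
        _db = None

        @property
        def db(self):
            if self._db is None:
                # Database is a placeholder for your own client class
                self._db = Database.connect()
            return self._db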
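The DatabaseTask pattern works because a task is instantiated once per worker process rather than once per call, so an attribute cached on the instance is reused by later calls. The sketch below reproduces only the caching logic in plain Python; `FakeDatabase` and `DatabaseHolder` are hypothetical stand-ins for a real client and for the task class.

```python
class FakeDatabase:
    """Hypothetical stand-in for a real database client."""
    connections_opened = 0

    @classmethod
    def connect(cls):
        cls.connections_opened += 1
        return cls()


class DatabaseHolder:
    """Mimics the DatabaseTask pattern without importing Celery."""
    _db = None

    @property
    def db(self):
        if self._db is None:      # first access: open the connection
            self._db = FakeDatabase.connect()
        return self._db           # later accesses reuse it


holder = DatabaseHolder()
first = holder.db
second = holder.db
print(first is second, FakeDatabase.connections_opened)
```

Both accesses return the same object and only one connection is opened, which is the saving the base class is meant to provide.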

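Task callbacks

after_return(self, status, retval, task_id, args, kwargs, einfo): called when the task returns.

Parameters:

- status: the current task state

- retval: the return value

- task_id: the id of the task

- args: the positional arguments the task was called with

- kwargs: the keyword arguments the task was called with

- einfo: the ExceptionInfo instance describing the exception

on_failure(self, exc, task_id, args, kwargs, einfo): called when the task fails.

Parameters:

- exc: the exception raised by the task

on_retry(self, exc, task_id, args, kwargs, einfo): called when the task is retried.

on_success(self, retval, task_id, args, kwargs): called when the task succeeds.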

Requests and custom requests

Upon receiving a message to run a task, the worker creates a request to represent such demand.

Custom task classes may override which request class to use by changing the attribute celery.app.task.Task.Request. You may either assign the custom request class itself, or its fully qualified name.

The request has several responsibilities. Custom request classes should cover them all – they are responsible to actually run and trace the task. We strongly recommend to inherit from celery.worker.request.Request.

When using the pre-forking worker, the methods on_timeout() and on_failure() are executed in the main worker process. An application may leverage such facility to detect failures which are not detected using celery.app.task.Task.on_failure().

As an example, the following custom request detects and logs hard time limits, and other failures.

    import logging

    from celery import Task
    from celery.worker.request import Request

    logger = logging.getLogger('my.package')


    class MyRequest(Request):
        'A minimal custom request to log failures and hard time limits.'

        def on_timeout(self, soft, timeout):
            super(MyRequest, self).on_timeout(soft, timeout)
            if not soft:
                logger.warning(
                    'A hard timeout was enforced for task %s',
                    self.task.name
                )

        def on_failure(self, exc_info, send_failed_event=True, return_ok=False):
            # Run the default handling of Request first, then log
            super(MyRequest, self).on_failure(
                exc_info,
                send_failed_event=send_failed_event,
                return_ok=return_ok
            )
            logger.warning(
                'Failure detected for task %s',
                self.task.name
            )


    class MyTask(Task):
        Request = MyRequest  # you can use a FQN 'my.package:MyRequest'


    @app.task(base=MyTask)
    def some_longrunning_task():
        # use your imagination
        pass

How it works

Here come the technical details. This part isn’t something you need to know, but you may be interested.

All defined tasks are listed in a registry. The registry contains a list of task names and their task classes. You can investigate this registry yourself:

    >>> from proj.celery import app
    >>> app.tasks
    {'celery.chord_unlock':
        <@task: celery.chord_unlock>,
     'celery.backend_cleanup':
        <@task: celery.backend_cleanup>,
     'celery.chord':
        <@task: celery.chord>}

This is the list of tasks built into Celery. Note that tasks will only be registered when the module they’re defined in is imported.

The default loader imports any modules listed in the imports setting.

The app.task() decorator is responsible for registering your task in the applications task registry.

When tasks are sent, no actual function code is sent with it, just the name of the task to execute. When the worker then receives the message it can look up the name in its task registry to find the execution code.

This means that your workers should always be updated with the same software as the client. This is a drawback, but the alternative is a technical challenge that’s yet to be solved.
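The name-based lookup described above can be sketched with a toy registry. This is not Celery's implementation: `registry`, `task`, `send` and `receive` are hypothetical names, and JSON stands in for the real message format. The point is only that the message carries a name and arguments, never code.

```python
import json

registry = {}  # task name -> callable, a toy stand-in for app.tasks


def task(func):
    """Register func under 'module.function', as a decorator would."""
    registry[func.__module__ + "." + func.__name__] = func
    return func


@task
def add(x, y):
    return x + y


def send(name, *args):
    """Client side: serialize only the task name and its arguments."""
    return json.dumps({"task": name, "args": list(args)})


def receive(message):
    """Worker side: look the name up in the local registry and run it."""
    payload = json.loads(message)
    return registry[payload["task"]](*payload["args"])


message = send(add.__module__ + ".add", 2, 3)
print(message)
print(receive(message))
```

If the receiving side had never imported the module defining `add`, the lookup would fail with a KeyError in this toy version, which is why workers and clients need the same task code.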

Tips and Best Practices

Ignore results you don’t want

If you don’t care about the results of a task, be sure to set the ignore_result option, as storing results wastes time and resources.

    @app.task(ignore_result=True)
    def mytask():
        something()

Results can even be disabled globally using the task_ignore_result setting.

Results can be enabled/disabled on a per-execution basis, by passing the ignore_result boolean parameter, when calling apply_async or delay.

    @app.task
    def mytask(x, y):
        return x + y

    # No result will be stored
    result = mytask.apply_async((1, 2), ignore_result=True)
    print(result.get())  # -> None

    # Result will be stored
    result = mytask.apply_async((1, 2), ignore_result=False)
    print(result.get())  # -> 3

By default tasks will not ignore results (ignore_result=False) when a result backend is configured.

The option precedence order is the following:

1. Global task_ignore_result

2. The ignore_result option of the task

3. Task execution option ignore_result

More optimization tips

You find additional optimization tips in the Optimizing Guide.

Avoid launching synchronous subtasks

Having a task wait for the result of another task is really inefficient, and may even cause a deadlock if the worker pool is exhausted.

Make your design asynchronous instead, for example by using callbacks.

Bad:

    @app.task
    def update_page_info(url):
        page = fetch_page.delay(url).get()
        info = parse_page.delay(url, page).get()
        store_page_info.delay(url, info)

    @app.task
    def fetch_page(url):
        return myhttplib.get(url)

    @app.task
    def parse_page(url, page):
        return myparser.parse_document(page)

    @app.task
    def store_page_info(url, info):
        return PageInfo.objects.create(url=url, info=info)

Good:

    def update_page_info(url):
        # fetch_page -> parse_page -> store_page
        chain = fetch_page.s(url) | parse_page.s() | store_page_info.s(url)
        chain()

    @app.task()
    def fetch_page(url):
        return myhttplib.get(url)

    @app.task()
    def parse_page(page):
        return myparser.parse_document(page)

    @app.task(ignore_result=True)
    def store_page_info(info, url):
        PageInfo.objects.create(url=url, info=info)

Here I instead created a chain of tasks by linking together different signature()’s. You can read about chains and other powerful constructs at Canvas: Designing Work-flows.
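The argument order in the chained version (`store_page_info(info, url)`) follows from how a chain passes data: the result of each step is prepended to the arguments of the next one. The sketch below imitates that flow synchronously in plain Python. `run_chain` is a hypothetical helper, the three functions are stand-ins for the real tasks, and `functools.partial` only approximates a signature, so the extra argument is bound by keyword.

```python
from functools import partial


def run_chain(*steps):
    """Call each step in order, passing the previous result as first argument."""
    first, *rest = steps
    result = first()
    for step in rest:
        result = step(result)
    return result


def fetch_page(url):
    return "<html>" + url + "</html>"   # stand-in for an HTTP request


def parse_page(page):
    return len(page)                     # stand-in for real parsing


def store_page_info(info, url):
    return {"url": url, "info": info}    # stand-in for a database write


url = "http://example.com"
stored = run_chain(
    partial(fetch_page, url),
    parse_page,
    partial(store_page_info, url=url),   # `info` arrives from parse_page
)
print(stored)
```

Unlike this sketch, a real chain runs each step on a worker and never blocks the caller, which is the reason it is preferred over calling `.get()` inside a task.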

By default Celery will not allow you to run subtasks synchronously within a task, but in rare or extreme cases you might need to do so. WARNING: enabling subtasks to run synchronously is not recommended!

    @app.task
    def update_page_info(url):
        page = fetch_page.delay(url).get(disable_sync_subtasks=False)
        info = parse_page.delay(url, page).get(disable_sync_subtasks=False)
        store_page_info.delay(url, info)

    @app.task
    def fetch_page(url):
        return myhttplib.get(url)

    @app.task
    def parse_page(url, page):
        return myparser.parse_document(page)

    @app.task
    def store_page_info(url, info):
        return PageInfo.objects.create(url, info)


Task tracking and retry on failure

(Not covered yet.)


Installation

You can install Celery either via the Python Package Index (PyPI) or from source.

To install using pip:

$ pip install -U Celery

Bundles

Celery also defines a group of bundles that can be used to install Celery and the dependencies for a given feature.

You can specify these in your requirements or on the pip command-line by using brackets. Multiple bundles can be specified by separating them by commas.

$ pip install "celery[librabbitmq]"

$ pip install "celery[librabbitmq,redis,auth,msgpack]"

The following bundles are available:

Serializers

- celery[auth]: for using the auth security serializer.

- celery[msgpack]: for using the msgpack serializer.

- celery[yaml]: for using the yaml serializer.

Concurrency

- celery[eventlet]: for using the eventlet pool.

- celery[gevent]: for using the gevent pool.

Transports and Backends

- celery[librabbitmq]: for using the librabbitmq C library.

- celery[redis]: for using Redis as a message transport or as a result backend.

- celery[sqs]: for using Amazon SQS as a message transport (experimental).

- celery[tblib]: for using the task_remote_tracebacks feature.

- celery[memcache]: for using Memcached as a result backend (using pylibmc).

- celery[pymemcache]: for using Memcached as a result backend (pure-Python implementation).

- celery[cassandra]: for using Apache Cassandra as a result backend with DataStax driver.

- celery[couchbase]: for using Couchbase as a result backend.

- celery[arangodb]: for using ArangoDB as a result backend.

- celery[elasticsearch]: for using Elasticsearch as a result backend.

- celery[riak]: for using Riak as a result backend.

- celery[dynamodb]: for using AWS DynamoDB as a result backend.

- celery[zookeeper]: for using Zookeeper as a message transport.

- celery[sqlalchemy]: for using SQLAlchemy as a result backend (supported).

- celery[pyro]: for using the Pyro4 message transport (experimental).

- celery[slmq]: for using the SoftLayer Message Queue transport (experimental).

- celery[consul]: for using the Consul.io Key/Value store as a message transport or result backend (experimental).

- celery[django]: specifies the lowest version possible for Django support. You should probably not use this in your requirements, it’s here for informational purposes only.

Downloading and installing from source

Download the latest version of Celery from PyPI:

https://pypi.org/project/celery/

You can install it by doing the following:

$ tar xvfz celery-0.0.0.tar.gz

$ cd celery-0.0.0

$ python setup.py build

# python setup.py install

The last command must be executed as a privileged user if you aren’t currently using a virtualenv.

Using the development version

With pip

The Celery development version also requires the development versions of kombu, amqp, billiard, and vine.

You can install the latest snapshot of these using the following pip commands:

$ pip install https://github.com/celery/celery/zipball/master#egg=celery

$ pip install https://github.com/celery/billiard/zipball/master#egg=billiard

$ pip install https://github.com/celery/py-amqp/zipball/master#egg=amqp

$ pip install https://github.com/celery/kombu/zipball/master#egg=kombu

$ pip install https://github.com/celery/vine/zipball/master#egg=vine

With git

Please see the Contributing section.

Brokers

Release: 4.4

Date: Jul 31, 2020

Celery supports several message transport alternatives.

Broker Instructions

Using RabbitMQ


Installation & Configuration

RabbitMQ is the default broker so it doesn’t require any additional dependencies or initial configuration, other than the URL location of the broker instance you want to use:

broker_url = 'amqp://myuser:mypassword@localhost:5672/myvhost'

For a description of broker URLs and a full list of the various broker configuration options available to Celery, see Broker Settings, and see below for setting up the username, password and vhost.

Installing the RabbitMQ Server

See Installing RabbitMQ over at RabbitMQ’s website. For macOS see Installing RabbitMQ on macOS.

Note

If you’re getting nodedown errors after installing and using rabbitmqctl then this blog post can help you identify the source of the problem:

http://www.somic.org/2009/02/19/on-rabbitmqctl-and-badrpcnodedown/

Setting up RabbitMQ

To use Celery we need to create a RabbitMQ user, a virtual host and allow that user access to that virtual host:

$ sudo rabbitmqctl add_user myuser mypassword

$ sudo rabbitmqctl add_vhost myvhost

$ sudo rabbitmqctl set_user_tags myuser mytag

$ sudo rabbitmqctl set_permissions -p myvhost myuser ".*" ".*" ".*"

Substitute in appropriate values for myuser, mypassword and myvhost above.

See the RabbitMQ Admin Guide for more information about access control.

Installing RabbitMQ on macOS

The easiest way to install RabbitMQ on macOS is using Homebrew, the new and shiny package management system for macOS.

First, install Homebrew using the one-line command provided by the Homebrew documentation:

ruby -e "$(curl -fsSL https://raw.github.com/Homebrew/homebrew/go/install)"

Finally, we can install RabbitMQ using brew:

$ brew install rabbitmq

After you’ve installed RabbitMQ with brew you need to add the following to your path to be able to start and stop the broker: add it to the start-up file for your shell (e.g., .bash_profile or .profile).

PATH=$PATH:/usr/local/sbin

Configuring the system host name

If you’re using a DHCP server that’s giving you a random host name, you need to permanently configure the host name. This is because RabbitMQ uses the host name to communicate with nodes.

Use the scutil command to permanently set your host name:

$ sudo scutil --set HostName myhost.local

Then add that host name to /etc/hosts so it’s possible to resolve it back into an IP address:

127.0.0.1 localhost myhost myhost.local

If you start the rabbitmq-server, your rabbit node should now be rabbit@myhost, as verified by rabbitmqctl:

$ sudo rabbitmqctl status

    Status of node rabbit@myhost ...
    [{running_applications,[{rabbit,"RabbitMQ","1.7.1"},
                            {mnesia,"MNESIA CXC 138 12","4.4.12"},
                            {os_mon,"CPO CXC 138 46","2.2.4"},
                            {sasl,"SASL CXC 138 11","2.1.8"},
                            {stdlib,"ERTS CXC 138 10","1.16.4"},
                            {kernel,"ERTS CXC 138 10","2.13.4"}]},
     {nodes,[rabbit@myhost]},
     {running_nodes,[rabbit@myhost]}]
    ...done.

This is especially important if your DHCP server gives you a host name starting with an IP address, (e.g., 23.10.112.31.comcast.net). In this case RabbitMQ will try to use rabbit@23: an illegal host name.

Starting/Stopping the RabbitMQ server

To start the server:

$ sudo rabbitmq-server

you can also run it in the background by adding the -detached option (note: only one dash):

$ sudo rabbitmq-server -detached

Never use kill (kill(1)) to stop the RabbitMQ server, but rather use the rabbitmqctl command:

$ sudo rabbitmqctl stop

When the server is running, you can continue reading Setting up RabbitMQ.

Using Redis

Installation

For the Redis support you have to install additional dependencies. You can install both Celery and these dependencies in one go using the celery[redis] bundle:

$ pip install -U "celery[redis]"

Configuration

Configuration is easy, just configure the location of your Redis database:

app.conf.broker_url = 'redis://localhost:6379/0'

Where the URL is in the format of:

redis://:password@hostname:port/db_number

all fields after the scheme are optional, and will default to localhost on port 6379, using database 0.
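To make the URL format and its defaults concrete, here is a minimal sketch that splits a redis:// URL with the standard library. It illustrates the documented format only; Celery and kombu do their own parsing, and `parse_redis_url` is a hypothetical helper.

```python
from urllib.parse import urlparse


def parse_redis_url(url):
    """Split a redis:// URL and fill in the documented defaults."""
    parts = urlparse(url)
    db = parts.path.lstrip("/")
    return {
        "host": parts.hostname or "localhost",
        "port": parts.port or 6379,
        "db": int(db) if db else 0,
        "password": parts.password,
    }


print(parse_redis_url("redis://"))
print(parse_redis_url("redis://:secret@192.168.0.112:6380/1"))
```

The first call falls back to localhost, port 6379 and database 0; the second picks up every field from the URL.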

If a Unix socket connection should be used, the URL needs to be in the format:

redis+socket:///path/to/redis.sock

Specifying a different database number when using a Unix socket is possible by adding the virtual_host parameter to the URL:

redis+socket:///path/to/redis.sock?virtual_host=db_number

It is also easy to connect directly to a list of Redis Sentinels:

app.conf.broker_url = 'sentinel://localhost:26379;sentinel://localhost:26380;sentinel://localhost:26381'

app.conf.broker_transport_options = {'master_name': "cluster1"}

Visibility Timeout

The visibility timeout defines the number of seconds to wait for the worker to acknowledge the task before the message is redelivered to another worker. Be sure to see Caveats below.

This option is set via the broker_transport_options setting:

app.conf.broker_transport_options = {'visibility_timeout': 3600}  # 1 hour.

The default visibility timeout for Redis is 1 hour.

Results

If you also want to store the state and return values of tasks in Redis, you should configure these settings:

app.conf.result_backend = 'redis://localhost:6379/0'

For a complete list of options supported by the Redis result backend, see Redis backend settings.

If you are using Sentinel, you should specify the master_name using the result_backend_transport_options setting:

app.conf.result_backend_transport_options = {'master_name': "mymaster"}

Caveats

Visibility timeout

If a task isn’t acknowledged within the Visibility Timeout the task will be redelivered to another worker and executed.

This causes problems with ETA/countdown/retry tasks where the time to execute exceeds the visibility timeout; in fact if that happens it will be executed again, and again in a loop.

So you have to increase the visibility timeout to match the time of the longest ETA you’re planning to use.

Note that Celery will redeliver messages at worker shutdown, so having a long visibility timeout will only delay the redelivery of ‘lost’ tasks in the event of a power failure or forcefully terminated workers.

Periodic tasks won’t be affected by the visibility timeout, as this is a concept separate from ETA/countdown.

You can increase this timeout by configuring a transport option with the same name:

app.conf.broker_transport_options = {'visibility_timeout': 43200}

The value must be an int describing the number of seconds.
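One way to apply the rule above is to derive the timeout from the longest delay the application schedules. The sketch below is a rough illustration: `required_visibility_timeout` is a hypothetical helper, and the 600 second margin is an assumed safety buffer, not a Celery default.

```python
def required_visibility_timeout(longest_delay, margin=600):
    """Return a visibility timeout (seconds) covering the longest delay.

    longest_delay: longest ETA/countdown/retry delay in seconds.
    margin: extra seconds for the task to start and be acknowledged
            (an assumed safety buffer, not a Celery default).
    """
    return int(longest_delay + margin)


# Example: tasks are scheduled up to 6 hours ahead.
timeout = required_visibility_timeout(6 * 60 * 60)
broker_transport_options = {"visibility_timeout": timeout}
print(broker_transport_options)
```

The resulting dictionary can then be assigned to `app.conf.broker_transport_options` in the same way as the examples above.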

Key eviction

Redis may evict keys from the database in some situations.

If you experience an error like:

    InconsistencyError: Probably the key ('_kombu.binding.celery') has been
    removed from the Redis database.

then you may want to configure the redis-server to not evict keys by setting the timeout parameter to 0 in the redis configuration file.

Group result ordering

Versions of Celery up to and including 4.4.6 used an unsorted list to store result objects for groups in the Redis backend. This can cause those results to be returned in a different order from their associated tasks in the original group instantiation.

Celery 4.4.7 and up introduce an opt-in behaviour which fixes this issue and ensures that group results are returned in the same order the tasks were defined, matching the behaviour of other backends. This change is incompatible with workers running versions of Celery without this feature, so the feature must be turned on using the boolean result_chord_ordered option of the result_backend_transport_options setting, like so:

    app.conf.result_backend_transport_options = {
        'result_chord_ordered': True
    }

Using Amazon SQS

Installation

For the Amazon SQS support you have to install additional dependencies. You can install both Celery and these dependencies in one go using the celery[sqs] bundle:

$ pip install celery[sqs]

Configuration

You have to specify SQS in the broker URL:

broker_url = 'sqs://ABCDEFGHIJKLMNOPQRST:ZYXK7NiynGlTogH8Nj+P9nlE73sq3@'

where the URL format is:

sqs://aws_access_key_id:aws_secret_access_key@

Please note that you must remember to include the @ sign at the end and encode the password so it can always be parsed correctly. For example:

    from kombu.utils.url import safequote

    aws_access_key = safequote("ABCDEFGHIJKLMNOPQRST")
    aws_secret_key = safequote("ZYXK7NiynG/TogH8Nj+P9nlE73sq3")

    broker_url = "sqs://{aws_access_key}:{aws_secret_key}@".format(
        aws_access_key=aws_access_key, aws_secret_key=aws_secret_key,
    )

The login credentials can also be set using the environment variables AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY; in that case the broker URL may simply be sqs://.

If you are using IAM roles on instances, you can set the BROKER_URL to: sqs:// and kombu will attempt to retrieve access tokens from the instance metadata.
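The reason for encoding the credentials is that a secret key may contain characters such as "/" or "+" that would otherwise break URL parsing. The sketch below shows the effect with the standard library only, on the assumption that kombu's `safequote` behaves like `urllib.parse.quote` with `safe=""`; `build_sqs_url` is a hypothetical helper.

```python
from urllib.parse import quote


def build_sqs_url(access_key, secret_key):
    """Percent-encode both credentials and build an sqs:// broker URL."""
    return "sqs://{}:{}@".format(
        quote(access_key, safe=""),   # safe="" also encodes "/"
        quote(secret_key, safe=""),
    )


broker_url = build_sqs_url(
    "ABCDEFGHIJKLMNOPQRST", "ZYXK7NiynG/TogH8Nj+P9nlE73sq3"
)
print(broker_url)
```

In the printed URL the "/" becomes %2F and the "+" becomes %2B, so the only ":" and "@" left are the ones that separate the URL fields.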

Options

Region

The default region is us-east-1 but you can select another region by configuring the broker_transport_options setting:

broker_transport_options = {'region': 'eu-west-1'}

See also

An overview of Amazon Web Services regions can be found here:

http://aws.amazon.com/about-aws/globalinfrastructure/

Visibility Timeout

The visibility timeout defines the number of seconds to wait for the worker to acknowledge the task before the message is redelivered to another worker. Also see caveats below.

This option is set via the broker_transport_options setting:

broker_transport_options = {'visibility_timeout': 3600}  # 1 hour.

The default visibility timeout is 30 seconds.

Polling Interval

The polling interval decides the number of seconds to sleep between unsuccessful polls. This value can be either an int or a float. By default the value is one second: this means the worker will sleep for one second when there’s no more messages to read.

You must note that more frequent polling is also more expensive, so increasing the polling interval can save you money.

The polling interval can be set via the broker_transport_options setting:

broker_transport_options = {'polling_interval': 0.3}

Very frequent polling intervals can cause busy loops, resulting in the worker using a lot of CPU time. If you need sub-millisecond precision you should consider using another transport, like RabbitMQ or Redis.

Long Polling

SQS Long Polling is enabled by default and the WaitTimeSeconds parameter of ReceiveMessage operation is set to 10 seconds.

The value of WaitTimeSeconds parameter can be set via the broker_transport_options setting:

broker_transport_options = {'wait_time_seconds': 15}

Valid values are 0 to 20. Note that newly created queues themselves (also if created by Celery) will have the default value of 0 set for the “Receive Message Wait Time” queue property.

Queue Prefix

By default Celery won’t assign any prefix to the queue names. If you have other services using SQS you can configure it to do so using the broker_transport_options setting:

broker_transport_options = {'queue_name_prefix': 'celery-'}

Predefined Queues

If you want Celery to use a set of predefined queues in AWS, and to never attempt to list SQS queues, nor attempt to create or delete them, pass a map of queue names to URLs using the predefined_queues transport option:

    broker_transport_options = {
        'predefined_queues': {
            'my-q': {
                'url': 'https://ap-southeast-2.queue.amazonaws.com/123456/my-q',
                'access_key_id': 'xxx',
                'secret_access_key': 'xxx',
            }
        }
    }

Caveats

If a task isn’t acknowledged within the visibility_timeout, the task will be redelivered to another worker and executed.

This causes problems with ETA/countdown/retry tasks where the time to execute exceeds the visibility timeout; in fact if that happens it will be executed again, and again in a loop.

So you have to increase the visibility timeout to match the time of the longest ETA you’re planning to use.

Note that Celery will redeliver messages at worker shutdown, so having a long visibility timeout will only delay the redelivery of ‘lost’ tasks in the event of a power failure or forcefully terminated workers.

Periodic tasks won’t be affected by the visibility timeout, as it is a concept separate from ETA/countdown.

The maximum visibility timeout supported by AWS as of this writing is 12 hours (43200 seconds):

broker_transport_options = {'visibility_timeout': 43200}

SQS doesn’t yet support worker remote control commands.

SQS doesn’t yet support events, and so cannot be used with celery events, celerymon, or the Django Admin monitor.

Results

Multiple products in the Amazon Web Services family could be a good candidate to store or publish results with, but there’s no such result backend included at this point.

Warning

Don’t use the amqp result backend with SQS.

It will create one queue for every task, and the queues will not be collected. This could cost you money that would be better spent contributing an AWS result store backend back to Celery 😃

Broker Overview

This is a comparison table of the different transports supported; more information can be found in the documentation for each individual transport (see Broker Instructions).

| Name | Status | Monitoring | Remote Control |
| --- | --- | --- | --- |
| RabbitMQ | Stable | Yes | Yes |
| Redis | Stable | Yes | Yes |
| Amazon SQS | Stable | No | No |
| Zookeeper | Experimental | No | No |

Experimental brokers may be functional but they don’t have dedicated maintainers.

Missing monitor support means that the transport doesn’t implement events, and as such Flower, celery events, celerymon and other event-based monitoring tools won’t work.

Remote control means the ability to inspect and manage workers at runtime using the celery inspect and celery control commands (and other tools using the remote control API).

First Steps with Celery

Celery is a task queue with batteries included. It’s easy to use so that you can get started without learning the full complexities of the problem it solves. It’s designed around best practices so that your product can scale and integrate with other languages, and it comes with the tools and support you need to run such a system in production.

In this tutorial you’ll learn the absolute basics of using Celery.

Learn about:

- Choosing and installing a message transport (broker).

- Installing Celery and creating your first task.

- Starting the worker and calling tasks.

- Keeping track of tasks as they transition through different states, and inspecting return values.

Celery may seem daunting at first - but don’t worry - this tutorial will get you started in no time. It’s deliberately kept simple, so as to not confuse you with advanced features. After you have finished this tutorial, it’s a good idea to browse the rest of the documentation. For example the Next Steps tutorial will showcase Celery’s capabilities.


Choosing a Broker

Celery requires a solution to send and receive messages; usually this comes in the form of a separate service called a message broker.

There are several choices available, including:

RabbitMQ

RabbitMQ is feature-complete, stable, durable and easy to install. It’s an excellent choice for a production environment. Detailed information about using RabbitMQ with Celery:

Using RabbitMQ

If you’re using Ubuntu or Debian install RabbitMQ by executing this command:

$ sudo apt-get install rabbitmq-server

Or, if you want to run it on Docker execute this:

$ docker run -d -p 5672:5672 rabbitmq

When the command completes, the broker will already be running in the background, ready to move messages for you: Starting rabbitmq-server: SUCCESS.

Don’t worry if you’re not running Ubuntu or Debian, you can go to this website to find similarly simple installation instructions for other platforms, including Microsoft Windows:

http://www.rabbitmq.com/download.html

Redis

Redis is also feature-complete, but is more susceptible to data loss in the event of abrupt termination or power failures. Detailed information about using Redis:

Using Redis

If you want to run it on Docker execute this:

$ docker run -d -p 6379:6379 redis

Other brokers

In addition to the above, there are other experimental transport implementations to choose from, including Amazon SQS.

See Broker Overview for a full list.

Installing Celery

Celery is on the Python Package Index (PyPI), so it can be installed with standard Python tools like pip or easy_install:

$ pip install celery

Application

The first thing you need is a Celery instance. We call this the Celery application or just app for short. As this instance is used as the entry-point for everything you want to do in Celery, like creating tasks and managing workers, it must be possible for other modules to import it.

In this tutorial we keep everything contained in a single module, but for larger projects you want to create a dedicated module.

Let’s create the file tasks.py:

from celery import Celery

app = Celery('tasks', broker='pyamqp://guest@localhost//')

@app.task
def add(x, y):
    return x + y

The first argument to Celery is the name of the current module. This is only needed so that names can be automatically generated when the tasks are defined in the main module.

The second argument is the broker keyword argument, specifying the URL of the message broker you want to use. Here we use RabbitMQ (also the default option).

See Choosing a Broker above for more choices – for RabbitMQ you can use amqp://localhost, or for Redis you can use redis://localhost.
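To make the broker URLs above less opaque, here is a minimal sketch that splits them with the standard library only. The helper name describe_broker_url is hypothetical and this is not how Celery or its transport layer parses URLs; it only shows which part of the URL carries which piece of information.

```python
from urllib.parse import urlsplit


def describe_broker_url(url):
    """Split a broker URL into its visible parts (illustrative only)."""
    parts = urlsplit(url)
    return {
        "transport": parts.scheme,   # e.g. 'pyamqp', 'amqp', 'redis'
        "user": parts.username,      # None when the URL has no user part
        "host": parts.hostname,
        "port": parts.port,          # None means "use the transport's default"
        "path": parts.path,          # virtual host or database, transport-specific
    }


for url in ("pyamqp://guest@localhost//", "amqp://localhost", "redis://localhost"):
    print(url, "->", describe_broker_url(url))
```

How the path part is interpreted (virtual host, database number, and so on) depends on the transport, so treat the last field as raw input rather than a final value.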

You defined a single task, called add, returning the sum of two numbers.

Running the Celery worker server

You can now run the worker by executing our program with the worker argument:

$ celery -A tasks worker --loglevel=info

Note

See the Troubleshooting section if the worker doesn’t start.

In production you’ll want to run the worker in the background as a daemon. To do this you need to use the tools provided by your platform, or something like supervisord (see Daemonization for more information).

For a complete listing of the command-line options available, do:

$ celery worker --help

There are also several other commands available, and help is also available:

$ celery help

Calling the task

To call our task you can use the delay() method.

This is a handy shortcut to the apply_async() method that gives greater control of the task execution (see Calling Tasks):

from tasks import add

add.delay(4, 4)

The task has now been processed by the worker you started earlier. You can verify this by looking at the worker’s console output.

Calling a task returns an AsyncResult instance. This can be used to check the state of the task, wait for the task to finish, or get its return value (or if the task failed, to get the exception and traceback).

Results are not enabled by default. In order to do remote procedure calls or keep track of task results in a database, you will need to configure Celery to use a result backend. This is described in the next section.

Keeping Results

If you want to keep track of the tasks’ states, Celery needs to store or send the states somewhere. There are several built-in result backends to choose from: SQLAlchemy/Django ORM, MongoDB, Memcached, Redis, RPC (RabbitMQ/AMQP), and more; you can also define your own.

For this example we use the rpc result backend, which sends states back as transient messages. The backend is specified via the backend argument to Celery (or via the result_backend setting if you choose to use a configuration module):

app = Celery('tasks', backend='rpc://', broker='pyamqp://')

Or if you want to use Redis as the result backend, but still use RabbitMQ as the message broker (a popular combination):

app = Celery('tasks', backend='redis://localhost', broker='pyamqp://')

To read more about result backends please see Result Backends.

Now with the result backend configured, let’s call the task again. This time you’ll hold on to the AsyncResult instance returned when you call a task:

result = add.delay(4, 4)

The ready() method returns whether the task has finished processing or not:

>>> result.ready()
False

You can wait for the result to complete, but this is rarely used since it turns the asynchronous call into a synchronous one:

>>> result.get(timeout=1)
8

In case the task raised an exception, get() will re-raise the exception, but you can override this by specifying the propagate argument:

result.get(propagate=False)

If the task raised an exception, you can also gain access to the original traceback:

result.traceback

Warning

Backends use resources to store and transmit results. To ensure that resources are released, you must eventually call get() or forget() on EVERY AsyncResult instance returned after calling a task.

See celery.result for the complete result object reference.

Configuration

Celery, like a consumer appliance, doesn’t need much configuration to operate. It has an input and an output. The input must be connected to a broker, and the output can be optionally connected to a result backend. However, if you look closely at the back, there’s a lid revealing loads of sliders, dials, and buttons: this is the configuration.

The default configuration should be good enough for most use cases, but there are many options that can be configured to make Celery work exactly as needed. Reading about the options available is a good idea to familiarize yourself with what can be configured. You can read about the options in the Configuration and defaults reference.

The configuration can be set on the app directly or by using a dedicated configuration module. As an example you can configure the default serializer used for serializing task payloads by changing the task_serializer setting:

app.conf.task_serializer = 'json'

If you’re configuring many settings at once you can use update:

app.conf.update(
    task_serializer='json',
    accept_content=['json'],  # Ignore other content
    result_serializer='json',
    timezone='Europe/Oslo',
    enable_utc=True,
)

For larger projects, a dedicated configuration module is recommended. Hard coding periodic task intervals and task routing options is discouraged. It is much better to keep these in a centralized location. This is especially true for libraries, as it enables users to control how their tasks behave. A centralized configuration will also allow your SysAdmin to make simple changes in the event of system trouble.

You can tell your Celery instance to use a configuration module by calling the app.config_from_object() method:

app.config_from_object('celeryconfig')

This module is often called “celeryconfig”, but you can use any module name.

In the above case, a module named celeryconfig.py must be available to load from the current directory or on the Python path. It could look something like this:

celeryconfig.py:

broker_url = 'pyamqp://'
result_backend = 'rpc://'

task_serializer = 'json'
result_serializer = 'json'
accept_content = ['json']
timezone = 'Europe/Oslo'
enable_utc = True

To verify that your configuration file works properly and doesn’t contain any syntax errors, you can try to import it:

$ python -m celeryconfig
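If you would rather check a configuration file without executing it, the following sketch parses the source with the standard ast module. The function list_settings and the final sanity check are hypothetical helpers, not part of Celery, and the sketch only handles simple literal assignments like those in the example module.

```python
import ast

CONFIG_SOURCE = """
broker_url = 'pyamqp://'
result_backend = 'rpc://'
task_serializer = 'json'
result_serializer = 'json'
accept_content = ['json']
timezone = 'Europe/Oslo'
enable_utc = True
"""


def list_settings(source):
    """Return {name: value} for simple literal assignments in the source.

    Raises SyntaxError if the source is not valid Python.
    """
    settings = {}
    for node in ast.parse(source).body:
        if isinstance(node, ast.Assign) and len(node.targets) == 1:
            target = node.targets[0]
            if isinstance(target, ast.Name):
                settings[target.id] = ast.literal_eval(node.value)
    return settings


settings = list_settings(CONFIG_SOURCE)
# Hypothetical sanity check: the serializer in use should be an accepted content type.
assert settings["task_serializer"] in settings["accept_content"]
print(sorted(settings))
```

A syntax error in the source surfaces as a SyntaxError from ast.parse, which is the same class of problem the import check above is meant to catch.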

For a complete reference of configuration options, see Configuration and defaults.

To demonstrate the power of configuration files, this is how you’d route a misbehaving task to a dedicated queue:

celeryconfig.py:

task_routes = {
    'tasks.add': 'low-priority',
}

Or instead of routing it you could rate limit the task instead, so that only 10 tasks of this type can be processed in a minute (10/m):

celeryconfig.py:

task_annotations = {
    'tasks.add': {'rate_limit': '10/m'}
}
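To make the 10/m notation concrete, here is a toy parser that converts such a string into tasks per second. The function rate_per_second is a hypothetical illustration, not Celery's own parser, and it assumes only a bare number or the suffixes /s, /m and /h.

```python
def rate_per_second(rate_limit):
    """Convert a rate-limit value such as '10/m' into tasks per second.

    Toy parser: accepts a bare number (per second) or 'N/s', 'N/m', 'N/h'.
    """
    seconds_per_unit = {"s": 1, "m": 60, "h": 3600}
    text = str(rate_limit)
    if "/" not in text:
        return float(text)
    count, unit = text.split("/", 1)
    return float(count) / seconds_per_unit[unit]


rate = rate_per_second("10/m")
print("tasks per second:", rate)
print("average seconds between tasks:", 1 / rate)
```

With '10/m' this works out to one task every six seconds on average.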

If you’re using RabbitMQ or Redis as the broker then you can also direct the workers to set a new rate limit for the task at runtime:

$ celery -A tasks control rate_limit tasks.add 10/m

worker@example.com: OK
    new rate limit set successfully

See Routing Tasks to read more about task routing, and the task_annotations setting for more about annotations, or Monitoring and Management Guide for more about remote control commands and how to monitor what your workers are doing.

Where to go from here

If you want to learn more you should continue to the Next Steps tutorial, and after that you can read the User Guide.

Troubleshooting

There’s also a troubleshooting section in the Frequently Asked Questions.

Worker doesn’t start: Permission Error

If you’re using Debian, Ubuntu or other Debian-based distributions:

Debian recently renamed the /dev/shm special file to /run/shm.

A simple workaround is to create a symbolic link:

ln -s /run/shm /dev/shm

Others:

If you provide any of the --pidfile, --logfile or --statedb arguments, then you must make sure that they point to a file or directory that’s writable and readable by the user starting the worker.

Result backend doesn’t work or tasks are always in PENDING state

All tasks are PENDING by default, so the state would’ve been better named “unknown”. Celery doesn’t update the state when a task is sent, and any task with no history is assumed to be pending (you know the task id, after all).

Make sure that the task doesn’t have ignore_result enabled.

Enabling this option will force the worker to skip updating states.

Make sure the task_ignore_result setting isn’t enabled.

Make sure that you don’t have any old workers still running.

It’s easy to start multiple workers by accident, so make sure that the previous worker is properly shut down before you start a new one.

An old worker that isn’t configured with the expected result backend may be running and is hijacking the tasks.

The --pidfile argument can be set to an absolute path to make sure this doesn’t happen.

Make sure the client is configured with the right backend.

If, for some reason, the client is configured to use a different backend than the worker, you won’t be able to receive the result. Make sure the backend is configured correctly:

result = task.delay()

print(result.backend)

Next Steps

The First Steps with Celery guide is intentionally minimal. In this guide I’ll demonstrate what Celery offers in more detail, including how to add Celery support for your application and library.

This document doesn’t document all of Celery’s features and best practices, so it’s recommended that you also read the User Guide.

Using Celery in your Application

Calling Tasks

Canvas: Designing Work-flows

Routing

Remote Control

Timezone

Optimization

What to do now?

Using Celery in your Application

Our Project

Project layout:

proj/__init__.py
    /celery.py
    /tasks.py

proj/celery.py

from __future__ import absolute_import, unicode_literals

from celery import Celery

app = Celery('proj',
             broker='amqp://',
             backend='rpc://',
             include=['proj.tasks'])

# Optional configuration, see the application user guide.
app.conf.update(
    result_expires=3600,
)

if __name__ == '__main__':
    app.start()

In this module you created our Celery instance (sometimes referred to as the app). To use Celery within your project you simply import this instance.

The broker argument specifies the URL of the broker to use.

See Choosing a Broker for more information.

The backend argument specifies the result backend to use.

It’s used to keep track of task state and results. While results are disabled by default, I use the RPC result backend here because I demonstrate how retrieving results works later. You may want to use a different backend for your application. They all have different strengths and weaknesses. If you don’t need results, it’s better to disable them. Results can also be disabled for individual tasks by setting the @task(ignore_result=True) option.

See Keeping Results for more information.

The include argument is a list of modules to import when the worker starts. You need to add our tasks module here so that the worker is able to find our tasks.

proj/tasks.py

from __future__ import absolute_import, unicode_literals

from .celery import app


@app.task
def add(x, y):
    return x + y


@app.task
def mul(x, y):
    return x * y


@app.task
def xsum(numbers):
    return sum(numbers)

Starting the worker

The celery program can be used to start the worker (you need to run the worker in the directory above proj):

$ celery -A proj worker -l info

When the worker starts you should see a banner and some messages:

 -------------- celery@halcyon.local v4.0 (latentcall)
--- ***** -----
-- ******* ---- [Configuration]
- *** --- * --- . broker:      amqp://guest@localhost:5672//
- ** ---------- . app:         __main__:0x1012d8590
- ** ---------- . concurrency: 8 (processes)
- ** ---------- . events:      OFF (enable -E to monitor this worker)
- ** ----------
- *** --- * --- [Queues]
-- ******* ---- . celery:      exchange:celery(direct) binding:celery
--- ***** -----

[2012-06-08 16:23:51,078: WARNING/MainProcess] celery@halcyon.local has started.

– The broker is the URL you specified in the broker argument in our celery module. You can also specify a different broker on the command-line by using the -b option.

– Concurrency is the number of prefork worker processes used to process your tasks concurrently. When all of these are busy doing work, new tasks will have to wait for one of the tasks to finish before they can be processed.

The default concurrency number is the number of CPUs on that machine (including cores). You can specify a custom number using the celery worker -c option. There’s no recommended value, as the optimal number depends on a number of factors, but if your tasks are mostly I/O-bound then you can try to increase it. Experimentation has shown that adding more than twice the number of CPUs is rarely effective, and likely to degrade performance instead.

In addition to the default prefork pool, Celery also supports using Eventlet, Gevent, and running in a single thread (see Concurrency).
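As a rough illustration of the concurrency guidance above, the sketch below computes the default (one process per CPU) and the "twice the number of CPUs" ceiling mentioned in the text. The function name suggested_concurrency_range is hypothetical, and the result is only a starting point for experiments, not a recommendation from Celery.

```python
import os


def suggested_concurrency_range():
    """Return (default, upper_bound) worker process counts for this machine."""
    cpus = os.cpu_count() or 1  # cpu_count() may return None on some platforms
    return cpus, 2 * cpus


default, upper = suggested_concurrency_range()
print("default -c value:", default)
print("rarely useful beyond:", upper)
```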

– Events is an option that causes Celery to send monitoring messages (events) for actions occurring in the worker. These can be used by monitor programs like celery events, and Flower – the real-time Celery monitor, which you can read about in the Monitoring and Management guide.

– Queues is the list of queues that the worker will consume tasks from. The worker can be told to consume from several queues at once, and this is used to route messages to specific workers as a means for Quality of Service, separation of concerns, and prioritization, all described in the Routing Guide.

You can get a complete list of command-line arguments by passing in the --help flag:

$ celery worker --help

These options are described in more detail in the Workers Guide.

Stopping the worker

To stop the worker simply hit Control-c. A list of signals supported by the worker is detailed in the Workers Guide.

In the background

In production you’ll want to run the worker in the background, described in detail in the daemonization tutorial.

The daemonization scripts use the celery multi command to start one or more workers in the background:

$ celery multi start w1 -A proj -l info

celery multi v4.0.0 (latentcall)

Starting nodes…

w1.halcyon.local: OK

You can restart it too:

$ celery multi restart w1 -A proj -l info

celery multi v4.0.0 (latentcall)

Stopping nodes…

w1.halcyon.local: TERM -> 64024

Waiting for 1 node…

w1.halcyon.local: OK

Restarting node w1.halcyon.local: OK

celery multi v4.0.0 (latentcall)

Stopping nodes…

w1.halcyon.local: TERM -> 64052

or stop it:

$ celery multi stop w1 -A proj -l info

The stop command is asynchronous so it won’t wait for the worker to shutdown. You’ll probably want to use the stopwait command instead, which ensures that all currently executing tasks are completed before exiting:

$ celery multi stopwait w1 -A proj -l info

Note

celery multi doesn’t store information about workers so you need to use the same command-line arguments when restarting. Only the same pidfile and logfile arguments must be used when stopping.

By default it’ll create pid and log files in the current directory. To protect against multiple workers launching on top of each other you’re encouraged to put these in a dedicated directory:

$ mkdir -p /var/run/celery

$ mkdir -p /var/log/celery

$ celery multi start w1 -A proj -l info --pidfile=/var/run/celery/%n.pid \
                                        --logfile=/var/log/celery/%n%I.log
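The %n and %I placeholders in the file names above are expanded per node. The toy function below shows the idea: %n becomes the first part of the node name, and %I is assumed here to become a "-index" suffix for pool processes and an empty string for the main process. The function expand_node_format is hypothetical and the %I behaviour is an assumption for illustration; check the Workers Guide for the exact rules.

```python
def expand_node_format(template, nodename, process_index=None):
    """Toy expansion of %n and %I in a pidfile or logfile template."""
    name = nodename.split("@", 1)[0]  # 'w1@halcyon.local' -> 'w1'
    # Assumption: the main process gets no suffix, pool process N gets '-N'.
    suffix = "-{}".format(process_index) if process_index else ""
    return template.replace("%n", name).replace("%I", suffix)


print(expand_node_format("/var/run/celery/%n.pid", "w1@halcyon.local"))
print(expand_node_format("/var/log/celery/%n%I.log", "w1@halcyon.local"))
print(expand_node_format("/var/log/celery/%n%I.log", "w1@halcyon.local", 1))
```

Because each node gets its own file names, two workers started with the same template do not overwrite each other's pid and log files.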

With the multi command you can start multiple workers, and there’s a powerful command-line syntax to specify arguments for different workers too, for example:

$ celery multi start 10 -A proj -l info -Q:1-3 images,video -Q:4,5 data \
    -Q default -L:4,5 debug

For more examples see the multi module in the API reference.

About the --app argument

The --app argument specifies the Celery app instance to use, in the form of module.path:attribute.

But it also supports a shortcut form. If only a package name is specified, it’ll try to search for the app instance, in the following order:

With --app=proj:

an attribute named proj.app, or

an attribute named proj.celery, or

any attribute in the module proj where the value is a Celery application, or

If none of these are found it’ll try a submodule named proj.celery:

an attribute named proj.celery.app, or

an attribute named proj.celery.celery, or

Any attribute in the module proj.celery where the value is a Celery application.

This scheme mimics the practices used in the documentation – that is, proj:app for a single contained module, and proj.celery:app for larger projects.
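The search order described above can be modelled in a few lines. This is a toy sketch under stated assumptions: ToyApp stands in for a real Celery application, SimpleNamespace objects stand in for modules, and find_app is a hypothetical name, not the function Celery itself uses.

```python
from types import SimpleNamespace


class ToyApp:
    """Stand-in for a Celery application instance (not the real class)."""


def find_app_in(module):
    """Per-module order: .app, then .celery, then any attribute holding an app."""
    for attr in ("app", "celery"):
        candidate = getattr(module, attr, None)
        if isinstance(candidate, ToyApp):
            return candidate
    for value in vars(module).values():
        if isinstance(value, ToyApp):
            return value
    return None


def find_app(package):
    """Search the package itself first, then its 'celery' submodule."""
    found = find_app_in(package)
    if found is None:
        submodule = getattr(package, "celery", None)
        if submodule is not None:
            found = find_app_in(submodule)
    return found


the_app = ToyApp()
proj = SimpleNamespace(celery=SimpleNamespace(app=the_app))  # mimics proj/celery.py
print(find_app(proj) is the_app)
```

The layout used in this guide (an app attribute inside proj/celery.py) is found in the second stage, after the package itself yields nothing.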

Calling Tasks

You can call a task using the delay() method:

from proj.tasks import add

add.delay(2, 2)

This method is actually a star-argument shortcut to another method called apply_async():

add.apply_async((2, 2))

The latter enables you to specify execution options like the time to run (countdown), the queue it should be sent to, and so on:

add.apply_async((2, 2), queue='lopri', countdown=10)

In the above example the task will be sent to a queue named lopri and the task will execute, at the earliest, 10 seconds after the message was sent.
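The "at the earliest" wording is easier to see as arithmetic: a countdown is just an offset added to the time the message was sent. The helper earliest_run_time below is a hypothetical illustration using only the standard library; the fixed timestamp is made up for the example.

```python
from datetime import datetime, timedelta, timezone


def earliest_run_time(sent_at, countdown):
    """Return the earliest moment a task with this countdown may start."""
    return sent_at + timedelta(seconds=countdown)


sent_at = datetime(2020, 7, 31, 12, 0, 0, tzinfo=timezone.utc)  # example value
eta = earliest_run_time(sent_at, 10)
print(eta.isoformat())
```

The task may still start later than this if the worker is busy; the computed time is a lower bound, not a schedule.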

Applying the task directly will execute the task in the current process, so that no message is sent:

>>> add(2, 2)
4

These three methods, delay(), apply_async(), and applying (__call__), make up the Celery calling API, which is also used for signatures.

A more detailed overview of the Calling API can be found in the Calling User Guide.

Every task invocation will be given a unique identifier (a UUID) – this is the task id.

The delay and apply_async methods return an AsyncResult instance, which can be used to keep track of the task’s execution state. But for this you need to enable a result backend so that the state can be stored somewhere.

Results are disabled by default because there is no result backend that suits every application; to choose one you need to consider the drawbacks of each individual backend. For many tasks keeping the return value isn’t even very useful, so it’s a sensible default to have. Also note that result backends aren’t used for monitoring tasks and workers: for that Celery uses dedicated event messages (see Monitoring and Management Guide).

If you have a result backend configured you can retrieve the return value of a task:

>>> res = add.delay(2, 2)
>>> res.get(timeout=1)
4

You can find the task’s id by looking at the id attribute:

>>> res.id
d6b3aea2-fb9b-4ebc-8da4-848818db9114

You can also inspect the exception and traceback if the task raised an exception; in fact, result.get() will propagate any errors by default:

>>> res = add.delay(2, '2')
>>> res.get(timeout=1)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "celery/result.py", line 221, in get
    return self.backend.wait_for_pending(
  File "celery/backends/asynchronous.py", line 195, in wait_for_pending
    return result.maybe_throw(callback=callback, propagate=propagate)
  File "celery/result.py", line 333, in maybe_throw
    self.throw(value, self._to_remote_traceback(tb))
  File "celery/result.py", line 326, in throw
    self.on_ready.throw(*args, **kwargs)
  File "vine/promises.py", line 244, in throw
    reraise(type(exc), exc, tb)
  File "vine/five.py", line 195, in reraise
    raise value
TypeError: unsupported operand type(s) for +: 'int' and 'str'

If you don’t wish for the errors to propagate, you can disable that by passing propagate:

>>> res.get(propagate=False)
TypeError("unsupported operand type(s) for +: 'int' and 'str'")

In this case it’ll return the exception instance raised instead – so to check whether the task succeeded or failed, you’ll have to use the corresponding methods on the result instance:

>>> res.failed()
True

>>> res.successful()
False

So how does it know if the task has failed or not? It can find out by looking at the task’s state:

>>> res.state
'FAILURE'

A task can only be in a single state, but it can progress through several states. The stages of a typical task can be:

PENDING -> STARTED -> SUCCESS

The started state is a special state that’s only recorded if the task_track_started setting is enabled, or if the @task(track_started=True) option is set for the task.

The pending state is actually not a recorded state, but rather the default state for any task id that’s unknown: this you can see from this example:

>>> from proj.celery import app

>>> res = app.AsyncResult('this-id-does-not-exist')
>>> res.state
'PENDING'

If the task is retried the stages can become even more complex. To demonstrate, for a task that’s retried two times the stages would be:

PENDING -> STARTED -> RETRY -> STARTED -> RETRY -> STARTED -> SUCCESS
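The two points above (PENDING is a default rather than a recorded state, and a task moves through several states over time) can be modelled with a small in-memory store. ToyResultStore is a hypothetical teaching aid, not one of Celery's result backends.

```python
class ToyResultStore:
    """Minimal in-memory model of state lookup (not a Celery backend)."""

    def __init__(self):
        self._history = {}

    def record(self, task_id, state):
        """Append a state transition for a task id."""
        self._history.setdefault(task_id, []).append(state)

    def state(self, task_id):
        """Return the latest recorded state, or PENDING for unknown ids."""
        history = self._history.get(task_id)
        return history[-1] if history else "PENDING"


store = ToyResultStore()
# A task that is retried two times before succeeding.
for state in ("STARTED", "RETRY", "STARTED", "RETRY", "STARTED", "SUCCESS"):
    store.record("task-1", state)

print(store.state("task-1"))
print(store.state("this-id-does-not-exist"))
```

Note that the store never records PENDING at all; it is simply what you get back when nothing is known about an id, which is why a misconfigured backend makes every task look pending.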

To read more about task states you should see the States section in the tasks user guide.

Calling tasks is described in detail in the Calling Guide.

Canvas: Designing Work-flows

You just learned how to call a task using the task’s delay method, and this is often all you need. But sometimes you may want to pass the signature of a task invocation to another process or as an argument to another function, for which Celery uses something called signatures.

A signature wraps the arguments and execution options of a single task invocation in such a way that it can be passed to functions or even serialized and sent across the wire.

You can create a signature for the add task using the arguments (2, 2), and a countdown of 10 seconds like this:

>>> add.signature((2, 2), countdown=10)
tasks.add(2, 2)

There’s also a shortcut using star arguments:

>>> add.s(2, 2)
tasks.add(2, 2)

And there’s that calling API again…

Signature instances also support the calling API, meaning they have delay and apply_async methods.

But there’s a difference in that the signature may already have an argument signature specified. The add task takes two arguments, so a signature specifying two arguments would make a complete signature:

>>> s1 = add.s(2, 2)
>>> res = s1.delay()
>>> res.get()
4

But, you can also make incomplete signatures to create what we call partials:

# incomplete partial: add(?, 2)
>>> s2 = add.s(2)

s2 is now a partial signature that needs another argument to be complete, and this can be resolved when calling the signature:

# resolves the partial: add(8, 2)
>>> res = s2.delay(8)
>>> res.get()
10

Here you added the argument 8 that was prepended to the existing argument 2 forming a complete signature of add(8, 2).

Keyword arguments can also be added later; these are then merged with any existing keyword arguments, but with new arguments taking precedence:

s3 = add.s(2, 2, debug=True)

s3.delay(debug=False) # debug is now False.

As stated, signatures support the calling API: meaning that

sig.apply_async(args=(), kwargs={}, **options)

Calls the signature with optional partial arguments and partial keyword arguments. Also supports partial execution options.

sig.delay(*args, **kwargs)

Star argument version of apply_async. Any arguments will be prepended to the arguments in the signature, and keyword arguments are merged with any existing keys.
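The prepend-and-merge rules for partial signatures can be demonstrated without a broker. ToySignature below is a hypothetical stand-in that calls the function directly in the current process; it mirrors only the argument handling described above, not the real Signature class.

```python
class ToySignature:
    """Toy model of partial-argument handling (runs locally, sends no messages)."""

    def __init__(self, func, *args, **kwargs):
        self.func = func
        self.args = args
        self.kwargs = kwargs

    def __call__(self, *args, **kwargs):
        merged_kwargs = dict(self.kwargs, **kwargs)  # new keyword arguments win
        # New positional arguments are prepended to the stored ones.
        return self.func(*(args + self.args), **merged_kwargs)


def echo(x, y, debug=False):
    """Return the arguments so the final ordering is visible."""
    return (x, y, debug)


s2 = ToySignature(echo, 2)                 # incomplete partial: echo(?, 2)
print(s2(8))                               # resolves to echo(8, 2)

s3 = ToySignature(echo, 2, 2, debug=True)
print(s3(debug=False))                     # debug is overridden
```

Note the contrast with functools.partial from the standard library, which appends later positional arguments instead of prepending them.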

So this all seems very useful, but what can you actually do with these? To get to that I must introduce the canvas primitives…

The Primitives

group

chain

chord

map

starmap

chunks

These primitives are signature objects themselves, so they can be combined in any number of ways to compose complex work-flows.

Note

These examples retrieve results, so to try them out you need to configure a result backend. The example project above already does that (see the backend argument to Celery).

Let’s look at some examples:

Groups

A group calls a list of tasks in parallel, and it returns a special result instance that lets you inspect the results as a group, and retrieve the return values in order.

>>> from celery import group
>>> from proj.tasks import add

>>> group(add.s(i, i) for i in range(10))().get()
[0, 2, 4, 6, 8, 10, 12, 14, 16, 18]

Partial group

>>> g = group(add.s(i) for i in range(10))
>>> g(10).get()
[10, 11, 12, 13, 14, 15, 16, 17, 18, 19]

Chains

Tasks can be linked together so that after one task returns the other is called:

>>> from celery import chain
>>> from proj.tasks import add, mul

# (4 + 4) * 8
>>> chain(add.s(4, 4) | mul.s(8))().get()
64

or a partial chain:

# (? + 4) * 8
>>> g = chain(add.s(4) | mul.s(8))
>>> g(4).get()
64

Chains can also be written like this:

>>> (add.s(4, 4) | mul.s(8))().get()
64

Chords

A chord is a group with a callback:

>>> from celery import chord
>>> from proj.tasks import add, xsum

>>> chord((add.s(i, i) for i in range(10)), xsum.s())().get()
90

A group chained to another task will be automatically converted to a chord:

>>> (group(add.s(i, i) for i in range(10)) | xsum.s())().get()
90

Since these primitives are all of the signature type they can be combined almost however you want, for example:

upload_document.s(file) | group(apply_filter.s() for filter in filters)

Be sure to read more about work-flows in the Canvas user guide.
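To check the arithmetic behind the group, chain, and chord examples above, here is the same data flow in plain Python. This sketch runs sequentially in one process and uses ordinary functions in place of tasks; run_chain is a hypothetical helper, and nothing here involves Celery or parallel execution.

```python
from functools import reduce


def add(x, y):
    return x + y


def mul(x, y):
    return x * y


def xsum(numbers):
    return sum(numbers)


def run_chain(first_result, *steps):
    """Feed each result into the next step, as a chain does."""
    return reduce(lambda acc, step: step(acc), steps, first_result)


# group: independent calls, results collected in order
group_result = [add(i, i) for i in range(10)]

# chain: (4 + 4) * 8, the first result becomes the first argument of mul
chain_result = run_chain(add(4, 4), lambda r: mul(r, 8))

# chord: a group whose collected results are passed to a callback
chord_result = xsum(group_result)

print(group_result, chain_result, chord_result)
```

In a real deployment the group members would run on workers in parallel; only the shape of the data flow is the same.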

Routing

Celery supports all of the routing facilities provided by AMQP, but it also supports simple routing where messages are sent to named queues.

The task_routes setting enables you to route tasks by name and keep everything centralized in one location:

app.conf.update(
    task_routes = {
        'proj.tasks.add': {'queue': 'hipri'},
    },
)

You can also specify the queue at runtime with the queue argument to apply_async:

from proj.tasks import add

add.apply_async((2, 2), queue='hipri')

You can then make a worker consume from this queue by specifying the celery worker -Q option:

$ celery -A proj worker -Q hipri

You may specify multiple queues by using a comma-separated list. For example, you can make the worker consume from both the default queue and the hipri queue, where the default queue is named celery for historical reasons:

$ celery -A proj worker -Q hipri,celery

The order of the queues doesn’t matter as the worker will give equal weight to the queues.
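Name-based routing amounts to a dictionary lookup with a fallback to the default queue. The sketch below assumes exact task-name matching only and accepts the two value shapes shown in this document (a plain queue name, or a dict with a queue key); queue_for is a hypothetical helper, and Celery's real router supports more than this.

```python
def queue_for(task_name, task_routes, default_queue="celery"):
    """Return the queue a task name would be routed to (toy lookup)."""
    route = task_routes.get(task_name)
    if route is None:
        return default_queue          # unrouted tasks use the default queue
    if isinstance(route, str):
        return route                  # e.g. 'tasks.add': 'low-priority'
    return route.get("queue", default_queue)


routes = {
    "proj.tasks.add": {"queue": "hipri"},
    "tasks.add": "low-priority",
}
for name in ("proj.tasks.add", "tasks.add", "proj.tasks.mul"):
    print(name, "->", queue_for(name, routes))
```

A worker started with -Q hipri,celery would therefore receive both the routed add task and any task that falls through to the default queue.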

To learn more about routing, including making use of the full power of AMQP routing, see the Routing Guide.

Remote Control

If you’re using RabbitMQ (AMQP), Redis, or Qpid as the broker then you can control and inspect the worker at runtime.

For example you can see what tasks the worker is currently working on:

$ celery -A proj inspect active

This is implemented by using broadcast messaging, so all remote control commands are received by every worker in the cluster.

You can also specify one or more workers to act on the request using the --destination option. This is a comma-separated list of worker host names:

$ celery -A proj inspect active --destination=celery@example.com

If a destination isn’t provided then every worker will act and reply to the request.

The celery inspect command contains commands that don’t change anything in the worker; it only returns information and statistics about what’s going on inside the worker. For a list of inspect commands you can execute:

$ celery -A proj inspect --help

Then there’s the celery control command, which contains commands that actually change things in the worker at runtime:

$ celery -A proj control --help

For example you can force workers to enable event messages (used for monitoring tasks and workers):

$ celery -A proj control enable_events

When events are enabled you can then start the event dumper to see what the workers are doing:

$ celery -A proj events --dump

or you can start the curses interface:

$ celery -A proj events

When you’re finished monitoring you can disable events again:

$ celery -A proj control disable_events

The celery status command also uses remote control commands and shows a list of online workers in the cluster:

$ celery -A proj status

You can read more about the celery command and monitoring in the Monitoring Guide.

Timezone

All times and dates, internally and in messages, use the UTC timezone.

When the worker receives a message, for example with a countdown set, it converts that UTC time to local time. If you wish to use a different timezone than the system timezone then you must configure that using the timezone setting:

app.conf.timezone = 'Europe/London'

Optimization

The default configuration isn’t optimized for throughput. By default, it tries to walk the middle way between many short tasks and fewer long tasks, a compromise between throughput and fair scheduling.

If you have strict fair scheduling requirements, or want to optimize for throughput then you should read the Optimizing Guide.

If you’re using RabbitMQ then you can install the librabbitmq module, an AMQP client implemented in C:

$ pip install librabbitmq

What to do now?

Now that you have read this document you should continue to the User Guide.

There’s also an API reference if you’re so inclined.

Resources

Getting Help

Mailing list

IRC

Bug tracker

Wiki

Contributing

License

Getting Help

Mailing list

For discussions about the usage, development, and future of Celery, please join the celery-users mailing list.

IRC

Come chat with us on IRC. The #celery channel is located at the Freenode network.

Bug tracker

If you have any suggestions, bug reports, or annoyances please report them to our issue tracker at https://github.com/celery/celery/issues/

Wiki

https://github.com/celery/celery/wiki

Contributing

Development of celery happens at GitHub: https://github.com/celery/celery

You’re highly encouraged to participate in the development of celery. If you don’t like GitHub (for some reason) you’re welcome to send regular patches.

Be sure to also read the Contributing to Celery section in the documentation.

License

This software is licensed under the New BSD License. See the LICENSE file in the top distribution directory for the full license text.

User Guide

Release

4.4

Date

Jul 31, 2020

Application

Main Name

Configuration

Laziness

Breaking the chain

Abstract Tasks

The Celery library must be instantiated before use; this instance is called an application (or app for short).

The application is thread-safe so that multiple Celery applications with different configurations, components, and tasks can co-exist in the same process space.

Let’s create one now:

>>> from celery import Celery
>>> app = Celery()
>>> app
<Celery __main__:0x100469fd0>

The last line shows the textual representation of the application, including the name of the app class (Celery), the name of the current main module (__main__), and the memory address of the object (0x100469fd0).

Main Name

Only one of these is important, and that’s the main module name. Let’s look at why that is.

When you send a task message in Celery, that message won’t contain any source code, but only the name of the task you want to execute. This works similarly to how host names work on the internet: every worker maintains a mapping of task names to their actual functions, called the task registry.
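The name-to-function lookup described above can be pictured with a plain dictionary. The following is a minimal sketch in standard-library Python, not Celery's own implementation; `registry`, `register`, and `execute_message` are hypothetical names chosen for illustration.

```python
# Toy model of a task registry: a message carries only a task name and
# arguments, and the worker looks the function up by name.
# All names here are hypothetical; this is not Celery's internal code.

registry = {}


def register(func):
    """Store func under a 'module.function' style name."""
    name = '{0}.{1}'.format(func.__module__, func.__name__)
    registry[name] = func
    func.task_name = name
    return func


@register
def add(x, y):
    return x + y


def execute_message(message):
    """Run the function named in the message with the supplied arguments."""
    func = registry[message['task']]
    return func(*message['args'])


# The "message" contains no source code, only a name and arguments.
message = {'task': add.task_name, 'args': (2, 3)}
result = execute_message(message)
```

A worker that doesn't have the name in its registry can't run the task, which is why the sender and the worker have to agree on task names.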

Whenever you define a task, that task will also be added to the local registry:

>>> @app.task
... def add(x, y):
...     return x + y

>>> add
<@task: __main__.add>

>>> add.name
__main__.add

>>> app.tasks['__main__.add']
<@task: __main__.add>

There you see __main__ again: whenever Celery isn’t able to detect what module the function belongs to, it uses the main module name to generate the beginning of the task name.

This is only a problem in a limited set of use cases:

If the module that the task is defined in is run as a program.

If the application is created in the Python shell (REPL).

For example here, where the tasks module is also used to start a worker with app.worker_main():

tasks.py:

from celery import Celery
app = Celery()

@app.task
def add(x, y): return x + y

if __name__ == '__main__':
    app.worker_main()

When this module is executed the tasks will be named starting with “__main__”, but when the module is imported by another process, say to call a task, the tasks will be named starting with “tasks” (the real name of the module):

>>> from tasks import add
>>> add.name
tasks.add

You can specify another name for the main module:

>>> app = Celery('tasks')
>>> app.main
'tasks'

>>> @app.task
... def add(x, y):
...     return x + y

>>> add.name
tasks.add
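The naming behaviour shown in the examples above can be summarised in a few lines. This is a simplified model written for illustration, assuming only what the text states; `make_task_name` is a hypothetical helper, not a Celery function.

```python
# Simplified model of the naming rule described above: a task is named
# after its module, unless that module is '__main__' and the app was given
# an explicit main name. `make_task_name` is a hypothetical helper.


def make_task_name(app_main, module_name, func_name):
    if module_name == '__main__' and app_main:
        module_name = app_main
    return '{0}.{1}'.format(module_name, func_name)


# Module run as a program, app created without a name: Celery()
name_as_script = make_task_name(None, '__main__', 'add')

# Same module imported by a client process
name_as_import = make_task_name(None, 'tasks', 'add')

# Module run as a program, app created with a name: Celery('tasks')
name_with_main = make_task_name('tasks', '__main__', 'add')
```

With an explicit main name, the worker started from the script and the client importing the module arrive at the same task name.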

See also

Names

Configuration

There are several options you can set that’ll change how Celery works. These options can be set directly on the app instance, or you can use a dedicated configuration module.

The configuration is available as app.conf:

>>> app.conf.timezone
'Europe/London'

where you can also set configuration values directly:

>>> app.conf.enable_utc = True

or update several keys at once by using the update method:

>>> app.conf.update(
...     enable_utc=True,
...     timezone='Europe/London',
... )

The configuration object consists of multiple dictionaries that are consulted in order:

Changes made at run-time.

The configuration module (if any)

The default configuration (celery.app.defaults).

You can even add new default sources by using the app.add_defaults() method.
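The "consulted in order" lookup can be illustrated with `collections.ChainMap` from the standard library. This is only an analogy for the layering described above, not a claim about how `app.conf` is implemented, and the values are made up for the example.

```python
from collections import ChainMap

# Three layers, consulted in order: run-time changes first, then the
# configuration module, then the built-in defaults. The values below are
# made up for illustration.
runtime_changes = {}
config_module = {'timezone': 'Europe/London'}
defaults = {'timezone': 'UTC', 'enable_utc': True}

conf = ChainMap(runtime_changes, config_module, defaults)

timezone_before = conf['timezone']      # found in the configuration module
enable_utc_before = conf['enable_utc']  # falls through to the defaults

# Writes to a ChainMap go to the first mapping, so a run-time change
# shadows the lower layers without modifying them.
conf['enable_utc'] = False
enable_utc_after = conf['enable_utc']
```

In this picture, adding a new default source would correspond to appending another dictionary at the end of the chain.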

See also

Go to the Configuration reference for a complete listing of all the available settings, and their default values.

config_from_object

The app.config_from_object() method loads configuration from a configuration object.

This can be a configuration module, or any object with configuration attributes.

Note that any configuration that was previously set will be reset when config_from_object() is called. If you want to set additional configuration you should do so after.

Example 1: Using the name of a module

The app.config_from_object() method can take the fully qualified name of a Python module, or even the name of a Python attribute, for example: “celeryconfig”, “myproj.config.celery”, or “myproj.config:CeleryConfig”:

from celery import Celery

app = Celery()
app.config_from_object('celeryconfig')

The celeryconfig module may then look like this:

celeryconfig.py:

enable_utc = True
timezone = 'Europe/London'

and the app will be able to use it as long as import celeryconfig is possible.

Example 2: Passing an actual module object

You can also pass an already imported module object, but this isn’t always recommended.

Tip

Using the name of a module is recommended as this means the module does not need to be serialized when the prefork pool is used. If you’re experiencing configuration problems or pickle errors then please try using the name of a module instead.

import celeryconfig

from celery import Celery

app = Celery()

app.config_from_object(celeryconfig)

Example 3: Using a configuration class/object

from celery import Celery

app = Celery()

class Config:
    enable_utc = True
    timezone = 'Europe/London'

app.config_from_object(Config)
# or using the fully qualified name of the object:
#   app.config_from_object('module:Config')
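To make the three forms of argument more concrete, here is a rough sketch of how a name such as “celeryconfig” or “module:Config” could be turned into an object and then into settings. It uses only `importlib`; `resolve_config_source` and `read_settings` are hypothetical helpers written for this illustration and are not part of Celery.

```python
import importlib


def resolve_config_source(name):
    """Return the module or attribute named by 'pkg.mod' or 'pkg.mod:Attr'.

    Hypothetical helper; not part of Celery.
    """
    module_name, _, attr_name = name.partition(':')
    module = importlib.import_module(module_name)
    return getattr(module, attr_name) if attr_name else module


def read_settings(source):
    """Collect the public attributes of a module or class into a dict."""
    return {key: getattr(source, key)
            for key in dir(source) if not key.startswith('_')}


class Config:
    enable_utc = True
    timezone = 'Europe/London'


settings = read_settings(Config)

# A standard-library module and class stand in for 'celeryconfig' and
# 'module:Config', which would have to exist on the import path.
module_source = resolve_config_source('collections')
class_source = resolve_config_source('collections:OrderedDict')
```

Passing a name instead of an object postpones the import until the configuration is needed, which fits the tip above about avoiding pickling the module.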

config_from_envvar

The app.config_from_envvar() method takes the configuration module name from an environment variable.

For example, to load configuration from a module specified in the environment variable named CELERY_CONFIG_MODULE:

import os
from celery import Celery

#: Set default configuration module name
os.environ.setdefault('CELERY_CONFIG_MODULE', 'celeryconfig')

app = Celery()
app.config_from_envvar('CELERY_CONFIG_MODULE')

You can then specify the configuration module to use via the environment:

$ CELERY_CONFIG_MODULE="celeryconfig.prod" celery worker -l info
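The interplay between `os.environ.setdefault` and the shell variable can be sketched without Celery. The helper `module_from_envvar`, the variable name `EXAMPLE_CONFIG_MODULE`, and the module name `example_celeryconfig` are all invented for this illustration.

```python
import importlib
import os
import sys
import types


def module_from_envvar(variable_name):
    """Import the module whose name is stored in an environment variable.

    Hypothetical helper; not part of Celery.
    """
    module_name = os.environ.get(variable_name)
    if not module_name:
        raise RuntimeError(
            'Environment variable {0!r} is not set'.format(variable_name))
    return importlib.import_module(module_name)


# Stand-in for a real celeryconfig.py: register a module object under a
# made-up name so that the example runs without extra files.
fake_config = types.ModuleType('example_celeryconfig')
fake_config.timezone = 'Europe/London'
sys.modules['example_celeryconfig'] = fake_config

# setdefault only applies when the variable is not already set, so a value
# exported in the shell takes precedence over this fallback.
os.environ.setdefault('EXAMPLE_CONFIG_MODULE', 'example_celeryconfig')

config = module_from_envvar('EXAMPLE_CONFIG_MODULE')
```

Because the fallback is set with `setdefault`, the same script can run with a development module by default and a production module when the variable is exported before starting the worker.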

Censored configuration

If you ever want to print out the configuration, as debugging information or similar, you may also want to filter out sensitive information like passwords and API keys.

Celery comes with several utilities useful for presenting the configuration, one is humanize():

app.conf.humanize(with_defaults=False, censored=True)

This method returns the configuration as a tabulated string. This will only contain changes to the configuration by default, but you can include the built-in default keys and values by enabling the with_defaults argument.

If you instead want to work with the configuration as a dictionary, you can use the table() method:

app.conf.table(with_defaults=False, censored=True)

Please note that Celery won’t be able to remove all sensitive information, as it merely uses a regular expression to search for commonly named keys. If you add custom settings containing sensitive information you should name the keys using a name that Celery identifies as secret.

A configuration setting will be censored if the name contains any of these sub-strings:

API, TOKEN, KEY, SECRET, PASS, SIGNATURE, DATABASE
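The effect of name-based censoring can be seen in a short sketch. It reuses the sub-strings listed above; the case-insensitive match, the mask string, and the function name `censor` are assumptions made for this example rather than a description of Celery's code.

```python
import re

# Sub-strings listed above. Matching case-insensitively and the mask
# string are assumptions made for this sketch.
SENSITIVE = re.compile(
    'API|TOKEN|KEY|SECRET|PASS|SIGNATURE|DATABASE', re.IGNORECASE)
MASK = '********'


def censor(settings):
    """Return a copy of settings with sensitive-looking values masked."""
    return {key: MASK if SENSITIVE.search(key) else value
            for key, value in settings.items()}


settings = {
    'timezone': 'Europe/London',
    'broker_password': 'hunter2',
    'my_api_token': 'abc123',
    'login_credential': 'not caught by the pattern',
}
censored = censor(settings)
```

The last key shows the limitation mentioned above: a secret stored under a name that contains none of the sub-strings passes through unmasked, so custom settings should be named accordingly.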

Laziness

The application instance is lazy, meaning it won’t be evaluated until it’s actually needed.

Creating a Celery instance will only do the following:

Create a logical clock instance, used for events.

Create the task registry.

Set itself as the current app (but not if the set_as_current argument was disabled).

Call the app.on_init() callback (does nothing by default).

The app.task() decorator doesn’t create the task at the point where the task is defined; instead, it defers the creation of the task until either the task is used or the application has been finalized.

This example shows how the task isn’t created until you use the task, or access an attribute (in this case repr()):

>>> @app.task
... def add(x, y):
...     return x + y

>>> type(add)
<class 'celery.local.PromiseProxy'>

>>> add.__evaluated__()
False

>>> add        # <-- causes repr(add) to happen
<@task: __main__.add>

>>> add.__evaluated__()
True
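The deferred creation seen in this session can be imitated with a small proxy class. This is a hypothetical simplification to show the idea of evaluating on first use; it is not how celery.local.PromiseProxy is implemented, and `LazyProxy`, `is_evaluated`, and `make_task` are invented names.

```python
class LazyProxy(object):
    """Toy stand-in for a lazily evaluated task object.

    The factory runs on first use; this is a hypothetical simplification
    and not how celery.local.PromiseProxy is implemented.
    """

    def __init__(self, factory):
        self._factory = factory
        self._target = None
        self._done = False

    def is_evaluated(self):
        return self._done

    def _resolve(self):
        if not self._done:
            self._target = self._factory()
            self._done = True
        return self._target

    def __getattr__(self, name):
        # Only called for attributes not found on the proxy itself.
        return getattr(self._resolve(), name)

    def __repr__(self):
        return repr(self._resolve())


class Task(object):
    def __init__(self, name):
        self.name = name

    def __repr__(self):
        return '<@task: {0}>'.format(self.name)


created = []


def make_task():
    created.append('add')
    return Task('__main__.add')


add = LazyProxy(make_task)
before = add.is_evaluated()
text = repr(add)            # first use: the factory runs here
after = add.is_evaluated()
```

The factory runs once; later attribute access such as `add.name` reuses the object that was created on first use.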

Finalization of the app happens either explicitly by calling app.finalize() – or implicitly by accessing the app.tasks attribute.

Finalizing the object will:

Copy tasks that must be shared between apps

Tasks are shared by default, but if the shared argument to the task decorator is disabled, then the task will be private to the app it’s bound to.

Evaluate all pending task decorators.

Make sure all tasks are bound to the current app.

Tasks are bound to an app so that they can read default values from the configuration.
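The explicit and implicit routes to finalization can be pictured with a miniature app object. The sketch covers only the "evaluate all pending task decorators" step; `ToyApp` and its attributes are hypothetical and much simpler than the real application class.

```python
class ToyApp(object):
    """Hypothetical miniature of an app that defers task creation."""

    def __init__(self):
        self._pending = []      # decorated functions not yet registered
        self._tasks = {}        # the task registry
        self.finalized = False

    def _register(self, func):
        self._tasks['toy.' + func.__name__] = func

    def task(self, func):
        # Before finalization, only remember the function; afterwards,
        # register it right away.
        if self.finalized:
            self._register(func)
        else:
            self._pending.append(func)
        return func

    def finalize(self):
        if not self.finalized:
            self.finalized = True
            while self._pending:
                self._register(self._pending.pop(0))

    @property
    def tasks(self):
        # Accessing the registry finalizes the app implicitly.
        self.finalize()
        return self._tasks


app = ToyApp()


@app.task
def add(x, y):
    return x + y


pending_before = len(app._pending)
finalized_before = app.finalized
names = sorted(app.tasks)        # triggers finalization
finalized_after = app.finalized
```

Reading `app.tasks` is enough to finalize the toy app, mirroring the implicit route described above; calling `app.finalize()` directly is the explicit one.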

The “default app”

Celery didn’t always have applications. It used to have only a module-based API, and for backwards compatibility the old API is still there until the release of Celery 5.0.

Celery always creates a special app - the “default app”, and this is used if no custom application has been instantiated.

The celery.task module is there to accommodate the old API, and shouldn’t be used if you use a custom app. You should always use the methods on the app instance, not the module based API.

For example, the old Task base class enables many compatibility features where some may be incompatible with newer features, such as task methods:

from celery.task import Task # << OLD Task base class.

from celery import Task # << NEW base class.

The new base class is recommended even if you use the old module-based API.

Breaking the chain

While it’s possible to depend on the current app being set, the best practice is to always pass the app instance around to anything that needs it.

I call this the “app chain”, since it creates a chain of instances depending on the app being passed.

The following example is considered bad practice:

from celery import current_app

class Scheduler(object):

    def run(self):
        app = current_app

Instead it should take the app as an argument:

class Scheduler(object):